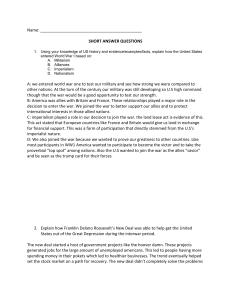

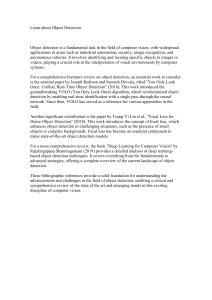

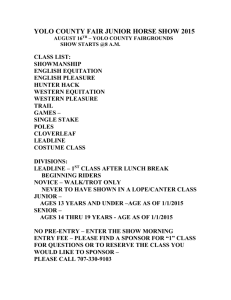

sensors

Article

Fast Underwater Optical Beacon Finding and High Accuracy Visual Ranging Method Based on Deep Learning

Bo Zhang 1, Ping Zhong 1,*, Fu Yang 1, Tianhua Zhou 2 and Lingfei Shen 2

1 College of Science, Donghua University, Shanghai 201620, China
2 Key Laboratory of Space Laser Communication and Detection Technology, Shanghai Institute of Optics and Fine Mechanics, Chinese Academy of Sciences, Shanghai 201800, China
* Correspondence: pzhong937@dhu.edu.cn; Tel.: +86-137-6133-6705

Abstract: Visual recognition and localization of underwater optical beacons is an important step in autonomous underwater vehicle (AUV) docking. The main issues that restrict underwater monocular visual ranging are the attenuation of light in water, the mirror image of the light source formed at the water surface, and the small size of the optical beacon. In this study, a fast monocular camera localization method for small 4-light beacons is proposed. A YOLO V5 (You Only Look Once) model with coordinate attention (CA) mechanisms is constructed. Compared with the original model and the model with convolutional block attention mechanisms (CBAM), our model improves the prediction accuracy to 96.1% and the recall to 95.1%. A sub-pixel light source centroid localization method combining super-resolution generative adversarial network (SRGAN) image enhancement and Zernike moments is proposed. The detection range of small optical beacons is increased from 7 m to 10 m. In the laboratory self-made pool and anechoic pool experiments, the average relative distance error of our method is 1.04%, and the average detection speed is 0.088 s (11.36 FPS). With its fast recognition, accurate ranging, and wide detection range, this study offers a solution for the long-distance, fast, and accurate positioning of small underwater optical beacons.

Keywords: autonomous underwater vehicles; target detection; monocular vision; deep learning

Citation: Zhang, B.; Zhong, P.; Yang, F.; Zhou, T.; Shen, L. Fast Underwater Optical Beacon Finding and High Accuracy Visual Ranging Method Based on Deep Learning. Sensors 2022, 22, 7940. https://doi.org/10.3390/s22207940

Academic Editor: Enrico Meli

Received: 22 September 2022
Accepted: 17 October 2022
Published: 18 October 2022

Publisher's Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Copyright: © 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

1. Introduction

Remotely Operated Vehicles (ROVs) and Autonomous Underwater Vehicles (AUVs) are the two main forms of Unmanned Underwater Vehicles (UUVs), which are crucial tools for exploring the deep sea [1–4]. Because of the restriction on cable length, ROVs can only operate within a certain range and depend on the control platform and operator, which limits their flexibility and prevents them from meeting the concealment requirements of military operations. AUVs overcome these shortcomings: without the limitations of cables and operating platforms, they offer better flexibility and concealment, and AUV technology is gradually becoming the focus of national marine research [5,6]. However, AUVs are limited by the electromagnetic shielding of the water column, and existing land-based communication technologies are difficult to adapt to communication needs across the entire water column [7]. In order to exchange information and charge its batteries, an AUV must return frequently to dock with the supply platform.
Typically, the current AUV return solution uses an inertial navigation system (INS) for positioning over long distances, an ultra-short baseline positioning system at a distance of about one kilometer from the docking platform, and a multi-sensor fusion navigation method to approach the docking interface [8–10]. When the AUVs are more than 10 m away from the docking interface, the aforementioned technique struggles to meet the demands of precise positioning and docking [11]. Installing identification markers, such as light sources, on the AUV and the docking interface is the current standard procedure for increasing docking accuracy. Additionally, machine vision technology is used in the underwater environment to gather the necessary direction and distance information by sensing the characteristics of the identification markers [12–14].

Using traditional machine vision and image processing methods, Lijia Zhong et al. proposed a binocular vision localization method for AUV docking, using an adaptive weighted OTSU threshold segmentation method to accurately extract foreground targets. The method achieves an average position error of 5 cm and an average relative error of 2% within a range of 3.6 m [15]. This technique uses binocular vision to increase detection accuracy. However, doing so increases the size of the equipment, which raises costs, reduces the method's potential application areas, and increases the pressure load in deep water. In recent years, deep-learning-based underwater positioning methods for AUVs have also been widely developed. Shuang Liu et al., based on the idea of MobileNet, built a Docking Neural Network (DoNN) with a convolutional neural network, realized the target extraction of the interface through a monocular camera, and combined it with the RPnP algorithm for an 8-LED docking interface with a size of 2408 mm. The detection range of the docking interface is increased to 6.5 m, and under strong noise, the average detection error of the docking distance is only 9.432 mm and the average detection error of the angle is 2.353 degrees [16]. Ranzhen Ren et al. combined YOLO v3 with the P4P algorithm to locate an optical beacon with a length of 28 cm at long distances of 3 m to 15 m and used ArUco markers for positioning within a range of 3 m, realizing visual docking that combines far and near ranges [17].

Underwater ranging algorithms combined with deep learning have been shown to offer better real-time performance and higher detection accuracy [17–19]. However, the following problems need to be solved:

1. The water pressure increases as the AUV's navigation depth and volume increase. Therefore, it is necessary to reduce the size of the AUV and improve the ranging accuracy of the monocular camera. This places a high demand on long-distance identification of small optical beacons, which existing target detection algorithms struggle to meet;
2. With the increase in AUV working distance and the decrease in the size of the optical beacons, the light sources of the optical beacon occupy only a few pixels, which makes it very difficult to locate the centroid pixel coordinates of the light source;
3. The traditional Perspective-n-Point (PnP) algorithm for optical beacon attitude calculation has low accuracy when estimating the pose of long-distance targets.

In order to solve the above problems, the main contributions and core innovations of this paper are as follows:

• In order to solve the problem of long-distance target recognition of small optical beacons, YOLO V5 is used as the backbone network [20], Coordinate Attention (CA) and the Convolutional Block Attention Module (CBAM) are added for comparison [21,22], and training is performed on a self-made underwater optical beacon data set.
It is shown that the YOLO V5 model with CA achieves good detection accuracy for small optical beacons even when the network depth is relatively shallow, and solves the difficulty of extracting small optical beacons at 10 m underwater;
• In order to solve the problem of difficulty in obtaining pixel coordinates due to the small number of pixels occupied by the feature points of small optical beacons, a Super-Resolution Generative Adversarial Network (SRGAN) is introduced into the detection process [23]. Then, the sub-pixel coordinates of the light source centroid are obtained through adaptive threshold segmentation (OTSU) and a sub-pixel centroid extraction algorithm based on Zernike moments [24,25]. It is shown that combining super-resolution with sub-pixel localization works well for locating the pixel coordinates of the target light source when the image is reconstructed with 4× upscaling;
• In order to solve the problem of inaccurate calculation of the pose of small optical beacons, a simple and robust perspective-n-point algorithm (SRPnP) is used as the pose solution method, and it is compared with the non-iterative O(n) solution of the PnP problem (OPnP) and with LHM, one of the best iterative methods, which is globally convergent in the ordinary case [26–28].

Our method adds an attention mechanism to the classical neural network model YOLO V5, migrates a detection algorithm developed for use on land to the water environment, and improves the precision and recall of the model. Super-resolution technology is innovatively used as an image enhancement method for small optical beacons and is combined with the sub-pixel centroid positioning method to improve the range and accuracy of light source centroid positioning. Experimental verification shows that the distance calculation error of the proposed method for four-light-source small optical beacons with a size of 88 mm × 88 mm is 1.04%, and the average detection speed is 0.088 s (11.36 FPS). Our method is superior to existing methods in detection accuracy, detection speed, and detection range and provides a feasible and effective method for monocular visual ranging of underwater optical beacons.

2. Experimental Equipment and Testing Devices

For the experiments, underwater optical beacon images at distances between 1 and 10 m were gathered.
There are two different categories of underwater optical beacons (Figure 1): cross beacons made of four light-emitting diodes (LEDs) and cross optical communication probes made of four laser diodes (LDs). The light sources of the optical beacons emit at 520 nm and 450 nm because these two wavelengths of light have the least propagation attenuation in seawater [29]. The distance between two adjacent light sources is 88 mm, and the diagonal light source spacing is 125 mm.

Figure 1. Optical beacons: (a) a cross-light beacon composed of three 520 nm LEDs and one 450 nm LED; (b) an optical communication probe composed of four 520 nm laser diodes.

A Sony 322 camera, mounted in a waterproof compartment along with an optical communication probe, was used to conduct underwater experiments at ranges of up to 10 m in an anechoic pool, as shown in Figure 2. A Canon D60 was used in a temporary lab pool to capture high-resolution optical beacon images at a range of 3 m, because a super-resolution dataset was needed.

Figure 2. Underwater image acquisition device combined with optical communication probe.

In the underwater ranging experiment, the detection probe and the probe to be measured are fixed on two high-precision lifting and slewing devices on two precision test platforms. The lifting rod can move in three dimensions while its end rotates through 360 degrees (Figure 3). The accuracy of the three-dimensional movement of the translation stage is 1 mm, and the rotation error is 0.1 degree.
In the experiment, the two rotating lifting rods lower the devices to 3 m below the water surface and keep the depth constant. The devices only change their rotation angle and relative position within the same plane.

Figure 3. Device installation diagram: (a) the detection device is fixed to the lift rod and lowered to 3 m underwater; (b) the device to be tested is fixed to a lift rod on the other side and lowered to 3 m underwater.

Figure 4 is a top view of the experimental conditions for image acquisition in this paper. The experiment was carried out in an anechoic tank with a width of 15 m and a length of 30 m. The device equipped with the camera is kept stationary, while the device to be tested is fixed on the mobile platform and moves along the z-axis; only fine-tuning in the x-axis direction is applied so that the optical beacon features appear in the CCD field of view. Initially, the two platforms were separated by 10 m, and images were collected every 1 m.

Figure 4. The condition of the experiment (top view).

3. Underwater Optical Beacon Target Detection and Light Source Centroid Location Method

Figure 5 depicts our method's overall workflow. The algorithm's input is the underwater optical beacon image captured by the calibrated CCD. The target is detected using YOLO V5_CA, and the target image is then fed into SRGAN for 4× super-resolution enhancement. After the sub-pixel centroid coordinates of the feature light sources are obtained using adaptive OTSU threshold segmentation and Zernike sub-pixel edge detection, scale recovery is used to obtain the image coordinates of the feature points within the original image size. The SRPnP algorithm is then used to determine the target's pose and distance.
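The scale-recovery step in this workflow can be illustrated with a minimal Python sketch. It assumes details that the text does not state: that the detector supplies the top-left corner of the cropped target region in original-image pixels, that the super-resolution stage enlarges the crop by exactly 4× in both directions, and that sub-pixel coordinates are measured from the top-left corner of the enlarged crop. The function name `recover_scale` and its parameters are hypothetical.

```python
def recover_scale(points_sr, crop_origin, scale=4.0):
    """Map sub-pixel points from the super-resolved crop to the original image.

    points_sr   -- list of (u, v) sub-pixel coordinates in the enlarged crop
    crop_origin -- (x0, y0) top-left corner of the crop in the original image
    scale       -- upscaling factor of the super-resolution stage (assumed 4x)
    """
    x0, y0 = crop_origin
    recovered = []
    for u, v in points_sr:
        # Undo the upscaling, then shift by the crop offset.
        recovered.append((x0 + u / scale, y0 + v / scale))
    return recovered


# Hypothetical values: one light-source centroid located in the 4x crop.
centroids_sr = [(40.0, 20.0)]
centroids_original = recover_scale(centroids_sr, crop_origin=(100, 50))
```

With these example values, the centroid at (40.0, 20.0) in the enlarged crop maps back to (110.0, 55.0) in the original image. Coordinates recovered in this way are what a PnP solver would consume together with the known beacon geometry.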
Figure 5. Flow chart of visual positioning algorithm.

3.1. Underwater Target Detection Method Based on YOLO V5

It is very challenging to locate and extract underwater targets because of the complexity of the underwater environment, including the light deflection caused by water flow, the reflections of the light formed by the water surface and the waterproof chamber, and other environmental disturbances. YOLO V5 (You Only Look Once), a fast and accurate target recognition neural network, is used in the target detection stage.

YOLO V5 follows the main structure and the regression classification method of YOLO V4 [30]. However, it also includes the most recent techniques, including the CIoU loss, adaptive anchor box calculation, and adaptive image scaling, to help the network converge more quickly during training and detect targets with greater accuracy. Its extraction of overlapping targets is also better than that of YOLO V4. Most importantly, it reduces the size of the model to 1/4 of the original model, which meets the real-time detection requirements of underwater equipment in terms of detection speed. The loss function used by YOLO V5 for training is

$$
\begin{aligned}
Loss ={}& CIoU_{loss} \\
&+ \sum_{i=0}^{S^2}\sum_{j=0}^{B_n} I_{ij}^{obj}\left[C_i\log(C_i) + (1 - C_i)\log(1 - C_i)\right] \\
&+ \sum_{i=0}^{S^2}\sum_{j=0}^{B_n} I_{ij}^{noobj}\left[C_i\log(C_i) + (1 - C_i)\log(1 - C_i)\right] \\
&+ \sum_{i=0}^{S^2}\sum_{j=0}^{B_n} I_{ij}^{obj}\sum_{c \in classes}\left[p_i^j(c)\log\left(p_i^j(c)\right) + \left(1 - p_i^j(c)\right)\log\left(1 - p_i^j(c)\right)\right]
\end{aligned}
\tag{1}
$$

In Formula (1), the input image is divided into $S \times S$ grid cells, $B_n$ is the number of anchor boxes, and $CIoU_{loss}$ is the loss of the bounding box. The second and third terms are the confidence losses for bounding boxes that contain an object (indicator $I_{ij}^{obj}$) and for bounding boxes without an object (indicator $I_{ij}^{noobj}$), respectively. The fourth term is the cross-entropy classification loss. Only when the jth anchor box of the ith grid cell is responsible for predicting a real target is the bounding box generated by this anchor box involved in the calculation of the classification loss function.
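As an illustration of the bounding-box term CIoUloss in Formula (1), the sketch below computes the complete-IoU loss for two axis-aligned boxes: the IoU, minus the squared center distance normalized by the squared diagonal of the smallest enclosing box, minus an aspect-ratio penalty v²/((1 − IoU) + v), with the loss being one minus the result. The corner format (x1, y1, x2, y2), the function name `ciou_loss`, and the guard for identical boxes are assumptions made for this example; they are not taken from the YOLO V5 source code, and boxes are assumed to have positive width and height.

```python
import math


def ciou_loss(box_a, box_b):
    """CIoU loss for two boxes given as (x1, y1, x2, y2) with x2 > x1, y2 > y1."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1

    # Intersection over union.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = wa * ha + wb * hb - inter
    iou = inter / union

    # Squared distance between the two box centers.
    center_dist2 = ((ax1 + ax2) / 2 - (bx1 + bx2) / 2) ** 2 \
                 + ((ay1 + ay2) / 2 - (by1 + by2) / 2) ** 2

    # Squared diagonal of the smallest box enclosing both boxes.
    enc_w = max(ax2, bx2) - min(ax1, bx1)
    enc_h = max(ay2, by2) - min(ay1, by1)
    corner_dist2 = enc_w ** 2 + enc_h ** 2

    # Aspect-ratio consistency term v and its penalty v^2 / ((1 - IoU) + v).
    v = 4.0 / math.pi ** 2 * (math.atan(wa / ha) - math.atan(wb / hb)) ** 2
    denom = (1.0 - iou) + v
    aspect_penalty = v * v / denom if denom > 0.0 else 0.0  # identical boxes

    ciou = iou - center_dist2 / corner_dist2 - aspect_penalty
    return 1.0 - ciou
```

For two identical boxes every penalty vanishes and the loss is zero. For two non-overlapping boxes the IoU is zero, but the center-distance term still provides a gradient signal, which is the usual motivation for preferring CIoU over plain IoU.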
Assuming that A is the prediction box and B is the real box, let C be the minimum convex closed box containing A and B. Then the intersection over union (IoU) of the real box and the prediction box and the loss function CIoUloss are calculated as follows:

$$
IoU = \frac{|A \cap B|}{|A \cup B|}, \tag{2}
$$

$$
CIoU = IoU - \frac{Distance\_Center^2}{Distance\_Corner^2} - \frac{v^2}{(1 - IoU) + v}, \tag{3}
$$

where

$$
v = \frac{4}{\pi^2}\left(\arctan\frac{w}{h} - \arctan\frac{\hat{w}}{\hat{h}}\right)^2, \qquad CIoU_{loss} = 1 - CIoU.
$$

In Formula (3), Distance_Center is the Euclidean distance between the center points of box A and box B, Distance_Corner is the diagonal length of box C, and v is a parameter that measures the consistency of the aspect ratio between the predicted box and the real box.

3.2. YOLO V5 with Attention Modules

Fast object detection and classification are advantages of the YOLO V5n model, but this speed comes from a reduction in the number and width of network layers, which has the drawback of lowering detection accuracy. The application of attention mechanisms in machine vision enables the network model to ignore irrelevant information and focus on important information. Attention mechanisms can be divided into spatial-domain, channel-domain, mixed-domain mechanisms, etc. Therefore, this paper adds the CBAM attention mechanism and the coordinate attention mechanism to the YOLO V5n model, trains it on the self-made underwater light beacon dataset, and compares the validation set loss, recall rate, and mean average precision (mAP). It is shown that the CA attention mechanism yields a good improvement over the lightweight YOLO V5 model and realizes long-distance accurate identification and detection of small optical beacons in the water environment.

As seen in Figure 6a, the Convolutional Block Attention Module (CBAM) is a module that combines spatial and channel attention mechanisms.
The input feature image first passes through a residual module and then undergoes global maximum pooling (GMP) and global average pooling (GAP) to obtain two one-dimensional feature vectors, which are then subjected to convolution, ReLU activation, and 1 × 1 convolution; after normalization using batch normalization (BN) and Sigmoid, the result is used to weight the input features, yielding the channel attention feature map. The channel feature map then undergoes GAP and GMP over all channels at each pixel position to obtain two feature maps, and after a 7 × 7 convolution of the two feature maps, normalization is performed to obtain the attention feature that combines channel and space.
Figure 6. Diagram of attentional mechanism: (a) Convolutional block attention mechanism; (b) Coordinate attention mechanism.

The channel feature map contains the local area information in the original image after several convolutions. The global feature information of the original image cannot be obtained because only the local information is taken into account when the maximum and average values of the multi-channels at each position are used as weighting operations. This issue is effectively resolved by the coordinate attention mechanism, as seen in Figure 6b: the feature map passing through the residual module is aggregated with pooling kernels (H, 1) and (1, W) along the horizontal and vertical coordinates, respectively. Each channel is encoded to obtain the features of two spatial orientations. This method allows the network to obtain the feature information in one spatial direction and save the position information of the other spatial direction, which helps the network to locate the target of interest more accurately.

A schematic representation of the YOLO V5 structure with attention modules is shown in Figure 7. Two structures were created to fit the CBAM and CA characteristics. Four C3 convolution modules with different depths in the backbone network are replaced by CBAM with the same input and output to address the issue that multiple convolutions cause CBAM to lose local information. This allows CBAM to obtain weights for channel and spatial feature maps at various depths. Because the CA modules are lightweight, they are added at the positions of the four feature map fusions, so that the model can better obtain the weights of different feature maps.

Figure 7. YOLO v5 structure diagram with attention modules.
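The directional pooling at the core of the coordinate attention mechanism described above can be sketched in plain Python. This is a deliberately reduced illustration, not the module used in the paper: the learned stages shown in Figure 6b (concatenation, convolution, batch normalization, channel reduction, and the two final convolutions) are all replaced by the identity, which is an assumption made only to keep the example self-contained. The function name `coordinate_attention_sketch` is hypothetical.

```python
import math


def _sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def coordinate_attention_sketch(feature):
    """Re-weight a feature map with row-wise and column-wise attention.

    feature -- nested list of shape C x H x W (channels, rows, columns).
    Learned convolution and normalization layers are omitted (identity),
    so only the pooling and re-weighting structure is shown.
    """
    output = []
    for channel in feature:
        height, width = len(channel), len(channel[0])
        # Average over the width: one descriptor per row (shape H x 1).
        row_desc = [sum(row) / width for row in channel]
        # Average over the height: one descriptor per column (shape 1 x W).
        col_desc = [sum(channel[r][c] for r in range(height)) / height
                    for c in range(width)]
        # Sigmoid turns each descriptor into an attention weight in (0, 1).
        row_att = [_sigmoid(d) for d in row_desc]
        col_att = [_sigmoid(d) for d in col_desc]
        # Each pixel is scaled by the weight of its row and of its column.
        output.append([[channel[r][c] * row_att[r] * col_att[c]
                        for c in range(width)]
                       for r in range(height)])
    return output
```

Because each pixel is scaled by one weight that depends on its row and one that depends on its column, the position of a strong response is retained along both axes, which is the property the text credits for more accurate target localization.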
In Figure 8, the target is the object marked by the red boxes, while the mirror image of the target at the water's surface, as well as the laser point for optical communication, are marked by the blue boxes. Because the objects marked by the blue boxes during image acquisition are noise and will interfere with the extraction of the target, the labels are divided into target and noise, so that the neural network learns through training to distinguish between noise and target features. A total of 7606 images of LED and LD optical beacons with various attitudes were collected in the underwater environment at distances ranging from 1 m to 10 m, of which 5895 were training set data and 1711 were test set data.
Three models m, of which 5895 training set and 1711 were test set Three models of m, of m, which 5895 were werewere training set data, data, and and 17111711 were testtest set data. data. Three models of of YOLO V5n, YOLO V5_CBAM, YOLO V5_CA are trained separately on3090 RTX with 3090 YOLO V5n, YOLO V5_CBAM, and YOLO V5_CA are separately on YOLO V5n, YOLO V5_CBAM, andand YOLO V5_CA aretrained trained separately onRTX RTX 3090 with a 128-batch size500 and 500 epochs. To prevent overfitting of the model, anstop early stop awith size epochs. To overfitting of an mecha 128-batch 128-batch sizeand and 500 epochs. Toprevent prevent overfitting of the themodel, model, anearly early stop mechmechanism was introduced during the training where YOLO V5n stopped at 496, and anism was introduced during the training where YOLO V5n stopped at 496, and YOLO anism was introduced during the training where YOLO V5n stopped at 496, and YOLO YOLO V5_CA stopped at 492. V5_CA stopped at 492. V5_CA stopped at 492. 11m m 22m m 33m m 44m m 55m m 66m m 77m m 88m m 99m m Figure8. 8. Picturesof ofLED LEDand and LD optical beacons collected within 1–10 m. Figure Figure 8.Pictures Pictures of LED andLD LDoptical opticalbeacons beaconscollected collectedwithin within1–10 1–10m. m. An essential essential parameter to to gauge gauge the discrepancy between the the predicted predicted value and An An essential parameter parameter to gauge the the discrepancy discrepancy between between the predicted value value and and the true value is the loss of the validation set. Within 500 training rounds, all three of the the the true true value value isis the the loss loss of of the the validation validation set. set. Within Within 500 500 training training rounds, rounds, all all three three of of the the models in Figure 8 had reached convergence. In Figure 9a, the class loss of the model with models convergence. 
class modelsin inFigure Figure88had hadreached reached convergence.In InFigure Figure9a, 9a,the the classloss lossof ofthe themodel modelwith with 4 −4 , and the model with CBAM −−44 theCA CAmodule moduleisis 4.96 ×1010−−4− 4 , the initial model is 5.51 × 10 4.96 × 5.51 × 10 the , the initial model is , and the model with CBAM the CA module is 4.96 × 10 , the initial model is 5.51× 10 , and the model with CBAM−isis 3, is 6.13 ×−−4410−4 . In Figure 9b, the object loss of the model with the CA module is 8.65 × −3 10 6.13 loss isis 8.65 6.13××10 10 ..In 8.65××10 10−3,,the InFigure Figure9b, 9b,the theobject object lossof ofthe themodel modelwith withthe theCA CAmodule module the − 3 − 3 the initial model is 8.69 ×−−310 , and the model with CBAM is 8.87 × 10 −−33 . According to the initial model is 8.69 and the model with CBAM .. According to 8.69××10 10 3,, the 8.87××10 10with initial model andCA themodule modelhas with CBAM is is 8.87 According to the the above data, theismodel with improved compared the initial model above data, the model with the CA module has improved compared with the initial model above data, the model with the CA module has improved compared with the initial model in both target detection and classification, but the model with CBAM has degenerated. in inboth bothtarget targetdetection detectionand and classification, classification,but butthe the model modelwith withCBAM CBAM has hasdegenerated. degenerated. 
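The text does not state which criterion the early stop mechanism uses. As an illustration only, the sketch below shows one common patience-based rule that monitors the validation loss; the function name `early_stop_epoch` and the parameters `patience` and `min_delta` are assumptions made for this example, not settings reported by the authors.

```python
from typing import Optional, Sequence


def early_stop_epoch(val_losses: Sequence[float], patience: int = 3,
                     min_delta: float = 0.0) -> Optional[int]:
    """Return the 1-based epoch at which training would stop, or None.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best = float("inf")
    stale = 0  # number of consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None
```

With a rule of this kind, a run scheduled for 500 epochs can end a few epochs early once the monitored quantity stops improving, which is consistent with the stopping epochs reported above.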
Figure 9. Validation set loss: (a) validation set classification loss; (b) validation set object loss. (Curves: YOLO v5n, +CBAM, and +CA; horizontal axis: epoch, 0–500.)

The mean average precision (mAP), a crucial measure of the classification accuracy of the model, is obtained by computing the average prediction accuracy for each type of target and then averaging over the entire dataset. Recall is a crucial indicator of whether the target objects have been found. As shown in Figure 10a, the maximum mAP of the network with the CA module is 96.1%, that of the network with CBAM is 93.9%, and that of the original YOLO V5n model is 94.6%. The CA module therefore improves the classification accuracy by 1.5 percentage points, while CBAM reduces it by 0.7 percentage points. CBAM causes network degradation because, in the training images, the long-distance optical signal object and the mirror target are highly consistent and the target scale is small, so global features cannot effectively separate the noise from the target. As shown in Figure 10b, the CA module also improves the recall of the target by 0.8 percentage points, to 95.1%.
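The definitions of average precision and mAP above can be made concrete with a short sketch. It uses the area under the precision envelope, which is one common convention; the interpolation scheme actually used to produce Figure 10 is not stated in the text, and the function names here are hypothetical.

```python
from typing import Dict, List, Tuple

Detections = List[Tuple[float, bool]]  # (confidence, is_true_positive)


def average_precision(detections: Detections, num_gt: int) -> float:
    """Area under the precision-recall curve for one class."""
    if num_gt == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Integrate precision over recall.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class: Dict[str, Tuple[Detections, int]]) -> float:
    """Average the per-class AP values; per_class maps name -> (detections, num_gt)."""
    aps = [average_precision(dets, n) for dets, n in per_class.values()]
    return sum(aps) / len(aps)
```

For the two label types used here, target and noise, `mean_average_precision` would be called with one entry per label, each holding that label's ranked detections and its number of ground-truth boxes.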
In the detection of distant targets, the network with CA performs well in both recognition and classification. Therefore, in the subsequent experiments that verify the detection effect of the model, the YOLO V5_CA and YOLO V5n models are used for comparison.

Figure 10. Comparison of network training results: (a) mean average precision; (b) recall. (Curves: YOLO v5n, +CBAM, and +CA; horizontal axis: epoch, 0–500.)

Figure 11 is a comparison chart of the detection results of YOLO V5n and YOLO V5_CA.
It can be seen that when the characteristics of the target object are not obvious, the original model misses detections (Figure 11a). When targets and noise with very similar characteristics appear at the same time (Figure 11b), the original model misidentifies the noise as a target. When detecting close-range LED targets (Figure 11c,d), the original model fails to detect them. The model with the CA module classifies the target and noise well, and its detection accuracy is higher than that of the original model.

Figure 11. Target detection results: (a–d) are YOLO V5n detection results; (e–h) are YOLO V5_CA detection results.

We used Grad-CAM as a visualization tool for our network [31]. In Figure 12, the redder a region is, the more the network attends to it. It is clear that YOLO V5 with the CA module outperforms the original model in beacon extraction and localization for both noise and light. As a result, YOLO V5 with the CA module is used as the recognizer in the underwater target recognition and extraction stage.

Figure 12. Heat maps: (a–d) are heat maps of YOLO V5n; (e–h) are heat maps of YOLO V5_CA.

3.3. SRGAN and Zernike Moments-Based Sub-Pixel Optical Center Positioning Method

After the target has been correctly identified and extracted, the light source feature points occupy only a few to a dozen pixels, because the scale of the target at about 10 m is extremely small. Conventional image processing techniques, such as filtering and morphological opening and closing operations, will overwhelm the target light source, making accurate localization difficult. Therefore, we introduce super-resolution generative adversarial networks (SRGAN) into the detection process and perform 4× upscaling on the identified beacon image. This technique effectively enriches the feature information of small underwater targets and also supports the accuracy of the subsequent sub-pixel centroid positioning based on Zernike moments.

The core of SRGAN consists of two networks: a super-resolution generator and a discriminator, where the discriminator uses the VGG19. First, a Gaussian filter is applied to a real high-resolution image $\hat{I}^{HR}$ of size $C \times rW \times rH$, which is then downsampled by the scaling factor $r$ to obtain a low-resolution image $I^{LR}$ of size $C \times W \times H$. This low-resolution image is used as input to the generator, which is trained to produce a high-resolution image $I^{HR}$. The original high-resolution image $\hat{I}^{HR}$ and the generated image $I^{HR}$ are both input to the discriminator to obtain the perceptual loss function $l^{SR}$ of the generated image and the real image, which includes the content loss $l^{SR}_{VGG/i,j}$ and the adversarial loss $l^{SR}_{Gen}$ [23]. The relationship between the losses is

$$ l^{SR} = l^{SR}_{VGG/i,j} + 10^{-3}\, l^{SR}_{Gen}, \tag{4} $$

where

$$ l^{SR}_{VGG/i,j} = \frac{1}{W_{i,j} H_{i,j}} \sum_{x=1}^{W_{i,j}} \sum_{y=1}^{H_{i,j}} \left( \phi_{i,j}\big(\hat{I}^{HR}\big)_{x,y} - \phi_{i,j}\big(G_{\theta_G}(I^{LR})\big)_{x,y} \right)^{2}, $$

$$ l^{SR}_{Gen} = \sum_{n=1}^{N} -\log D_{\theta_D}\big(G_{\theta_G}(I^{LR})\big). $$

In Formula (4), $G_{\theta_G}(I^{LR})$ is the reconstructed high-resolution image, $D_{\theta_D}(\cdot)$ is the probability that an image is a real high-resolution image, $\phi_{i,j}$ is the VGG feature map with dimensions $W_{i,j} \times H_{i,j}$, and $i$ and $j$ denote the $i$th max-pooling layer and the $j$th convolution layer in the VGG network, respectively.

We used a Canon D60 high-definition camera to collect 7171 high-definition images of underwater optical beacons and trained the network for 400 epochs with a scaling factor of 4. To verify the advantages of SRGAN, we also generated results with SRResNet for comparison. Figure 13 shows the training results of SRGAN. The generator loss (Figure 13a) drops to $5.07 \times 10^{-3}$ within 400 epochs, indicating that the model converges well. Figure 13b shows the peak signal-to-noise ratio (PSNR), which measures the error between corresponding pixels of the generated image and the original image; the PSNR of the generated image reaches up to 32.42 dB. The structural similarity index measurement (SSIM) measures the similarity of brightness, contrast, and structure between the generated image and the original high-resolution image; the maximum SSIM of the generated image reaches 91.98% (Figure 13c). These data demonstrate that the method produces low levels of image distortion and that the generated images have good structural integrity and detail.
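As a small worked illustration of two quantities used above, the sketch below combines a content loss and an adversarial loss with the $10^{-3}$ weight of Formula (4) and computes the PSNR from its standard definition, $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$. The function names and the 8-bit peak value of 255 are assumptions made for this example; the code is not the training pipeline used in the paper.

```python
import math
from typing import Sequence

Image = Sequence[Sequence[float]]  # grayscale image as rows of pixel values


def perceptual_loss(content_loss: float, adversarial_loss: float,
                    adversarial_weight: float = 1e-3) -> float:
    """Formula (4): content loss plus weighted adversarial loss."""
    return content_loss + adversarial_weight * adversarial_loss


def psnr(reference: Image, generated: Image, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    count = 0
    squared_error = 0.0
    for ref_row, gen_row in zip(reference, generated):
        for r, g in zip(ref_row, gen_row):
            squared_error += (r - g) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because the PSNR is logarithmic in the mean squared error, halving the pixel-wise error raises it by about 6 dB, which helps when reading the curve in Figure 13b.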
Figure 13. SRGAN training results: (a) generator loss; (b) peak signal-to-noise ratio; (c) structural similarity index measurement. (Horizontal axis: epoch, 0–400.)

Figure 14 displays the low-resolution target images, the target images produced by SRGAN, and the target images produced by SRResNet in order to illustrate the benefits of SRGAN. The images generated by SRResNet show blurring at the edge of the target light source (Figure 14c), noise points near the target feature points (Figure 14f), and grid noise in the background (Figure 14i). All of these artifacts lead to errors in light source centroid localization, whereas the images generated by SRGAN have good structural integrity and less noise (Figure 14b,e,h).

Figure 14. Comparison of the original images and the super-resolution reconstruction images: (a,d,g) are the original target images; (b,e,h) are super-resolution images of SRGAN; (c,f,i) are super-resolution images of SRResNet (the yellow box represents the SRGAN image detail, and the red box represents the SRResNet image detail).

Accurately locating the center of each light source in the image is a key step. The process of the centroid extraction stage is shown in Figure 15. First, the OTSU method is used for threshold segmentation, and then the image is eroded and dilated to smooth the light source edges and obtain regular light source edges.
The sub-pixel edge refinement method based on the Zernike moment is then used to obtain the sub-pixel edges of the light sources. Finally, the centroid coordinates of each light source are extracted by the centroid formula.

Figure 15. Sub-pixel optical center positioning flow chart. (Steps: start; SR target image input; SR gray image; calculate the threshold by the OTSU method; image erosion and dilation; calculate the sub-pixel edge and locate the centers; end.)

After YOLO V5_CA extraction and super-resolution enhancement, the difference between the background of the feature image and the target light source is obvious, so the OTSU method is selected as the threshold segmentation algorithm. The OTSU method can effectively separate the target light source from the background. It defines the segmentation threshold as the value that maximizes the inter-class variance. The scheme is established as follows:
The scheme is established as follows: 2 2 σ2 = g2 − ge )22, σ 2ω= 1 ·( ω1g1( − g1 −geg) e )++ωω2 ·( 2 ( g 2 − g e ) , (5) (5) where and ω2 are that where the the between-class between-class variance varianceisisdenoted denotedasasσ2σ, 2ω,1 ω areprobabilities the probabilities ω2 the 1 and one pixel belongs to the target area or the background area, respectively. g and g are the 2 1 that one pixel belongs to the target area or the background area, respectively. g1 and average gray values of the target and the background pixels, respectively, while ge is the g 2 are the average gray values of the target and the background pixels, respectively, average gray value of all pixels in the image. Assuming that the image is segmented into while g e is the average gray value of all pixels in the image. Assuming that the image is the target area and the background area when the gray segmentation threshold is k, the segmentedcumulative into the target areag and the background area when the gray segmentation first-order moment k of k is brought into Formula (5): threshold is k , the first-order cumulative moment g k of k is brought into Formula (5): ( ge · ω − g ) 2 2 σ2 =2 ( g e 1ω1 − kg k ). (6) σ ω = 1 ·(1 − ω1 ) . (6) ω1 (1 − ω1 ) By traversing the 0–255 gray values in Formula (6), the corresponding σ2 is the required σ 2difference By traversing 0–255 gray values in Formula the corresponding is the rethreshold when thethe variance between classes is the (6), largest. There is a great quired threshold when the variance between classes is the largest. There is a great differbetween the light source characteristics and background characteristics of an underwater ence between the light source characteristics and background characteristics of an underoptical beacon, so the OTSU method is stable. water beacon, so the OTSU method is stable. 
To precisely determine the pixel coordinates of each light center, it is necessary to obtain the edges of each light source from the binary image produced by threshold segmentation. Therefore, a sub-pixel edge detection method based on the Zernike moment is used. This method is not affected by image rotation and has good noise tolerance. The Zernike moment of order $n$ with repetition $m$ of the image $f(x, y)$ is defined as follows:

$$ Z_{nm} = \frac{n+1}{\pi} \iint_{x^{2} + y^{2} \le 1} f(x, y)\, V^{*}_{nm}(\rho, \theta)\, dx\, dy, \tag{7} $$

where $V^{*}_{nm}(\rho, \theta)$ is the complex conjugate of the orthogonal Zernike polynomial $V_{nm}(\rho, \theta)$ of order $n$ with repetition $m$ on the unit circle in the polar coordinate system. Assuming the ideal edge is rotated by $\theta$, the rotational invariance of Zernike moments gives $Z'_{00} = Z_{00}$, $Z'_{11} = Z_{11} e^{i\theta}$, and $Z'_{20} = Z_{20}$, and the sub-pixel edge of the image can be represented by Formula (8):

$$ \begin{bmatrix} x_s \\ y_s \end{bmatrix} = \begin{bmatrix} x \\ y \end{bmatrix} + \frac{N d}{2} \begin{bmatrix} \cos\varphi \\ \sin\varphi \end{bmatrix}. \tag{8} $$

In Formula (8), $(x, y)$ is the pixel at the center of the template, $(x_s, y_s)$ is the corresponding sub-pixel edge point, $\varphi$ is the angle between the edge normal and the $x$-axis, $d$ is the distance from the center of the unit circle to the ideal edge in the polar coordinate system, and $N$ is the size of the Zernike moment template; a larger template improves the accuracy but increases the calculation time.

Figure 16 compares the detection results on the low-resolution target images with those on the super-resolution enhanced images using the method described in this paper. Evidently, applying the OTSU and Zernike edge detection algorithms directly cannot find all of the light source centers when the target light source structure is incomplete. When the light source is far away, its center cannot be detected because each light source occupies too few pixels. The super-resolution enhancement and Zernike moment sub-pixel detection method extracts the sub-pixel center coordinates of each light source with a precision of 0.001 pixels, which supports the subsequent accurate attitude calculation.
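Formula (8) is a simple shift of the template center along the edge normal, which the sketch below applies directly. The text does not spell out the centroid formula, so the unweighted mean of the edge points used here is an assumption, as are the function names `subpixel_edge_point` and `centroid`.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]


def subpixel_edge_point(x: float, y: float, d: float, phi: float,
                        template_size: int) -> Point:
    """Formula (8): shift the template center (x, y) onto the sub-pixel edge.

    d is the edge distance in the unit circle, phi the edge-normal angle in
    radians, and template_size the side length N of the Zernike template.
    """
    scale = template_size * d / 2.0
    return x + scale * math.cos(phi), y + scale * math.sin(phi)


def centroid(points: Iterable[Point]) -> Point:
    """Unweighted mean of a set of (x, y) edge points (assumed formula)."""
    pts = list(points)
    if not pts:
        raise ValueError("no edge points given")
    n = len(pts)
    return sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n
```

For example, with a 7 × 7 template, $d = 0.2$, and $\varphi = 0$, the edge point lies 0.7 pixels to the right of the template center. Averaging sub-pixel edge points spread evenly around a roughly circular light spot then gives a center estimate with sub-pixel resolution.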
o o x x ( 7.437,4.382 ) ( 4.938,6.527 ) ( 9.519,6.788 ) ( 7.047,8.967 ) ( 3.719,3.007 ) ( 5.517,4.629 ) ( 3.718,6.366 ) ( 3.500,3.000) y y (a) (b) Figure16. 16. Comparison Comparison between between the thetraditional traditionalalgorithm algorithmand andthe thesubpixel subpixelcentroid centroidlocalization localization Figure method based on SRGAN and Zernike moments (the top-row images are targets, and the bottommethod based on SRGAN and Zernike moments (the top-row images are targets, and the bottom-row row images are results): (a) Results of OTSU threshold segmentation + Zernike moment sub-pixel images are results): (a) Results of OTSU threshold segmentation + Zernike moment sub-pixel center center search; (b) Results of our method. search; (b) Results of our method. Experimentson onAlgorithm AlgorithmAccuracy Accuracyand andPerformance Performance 4.4.Experiments Underthe thecondition condition that feature points inworld the world coordinate of the Under that thethe feature points in the coordinate systemsystem of the object object and its corresponding pixel coordinates in the image coordinate system are known, and its corresponding pixel coordinates in the image coordinate system are known, the the problem of solving the relative position between the object andcamera the camera is called problem of solving the relative position between the object and the is called the the perspective-n-points (PnP) problem. Accurately solving thisgenerally problemrequires generally reperspective-n-points (PnP) problem. Accurately solving this problem more quires more thancorresponding four known corresponding points. has done the following than four known points. This section hasThis donesection the following experiments: 1.experiments: Compare the traditional PnP algorithms, OPnP, LHM decomposition, and SRPnP in 1. 2. 2. 3. 3. 
Compare traditional PnPsmall algorithms, decomposition, and SRPnP in solving thethe coplanar 4-point optical OPnP, beaconLHM translation distance error; solving the coplanar 4-point optical algorithm beacon translation Compare the accuracy of thesmall traditional with thedistance methoderror; described in Compare the accuracy of the traditional algorithm with the method described in SecSection 3; tion 3; Compare the running speed of the algorithm before and after adding the superCompare the running speed of the algorithm before and after adding the super-resresolution enhancement. olution enhancement. In order to compare the average relative error and range accuracy of the OPnP, LHM, and SRPnP algorithms, 9 groups of 450 sample data were sampled 50 times every 1 m in the Sensors 2022, 22, x FOR PEER REVIEW Sensors 2022, 22, 7940 15 of 21 15 of 21 In order to compare the average relative error and range accuracy of the OPnP, LHM, and SRPnP algorithms, 9 groups of 450 sample data were sampled 50 times every 1 m in the range of 10–2 m. The average relative errors of the three algorithms are shown in Figrange 10–2 m. Thedetection average relative of the three algorithms shown in Figureare 17. ure 17.of The average distanceerrors and experimental data of theare three algorithms The average detection distance and experimental data of the three algorithms are shown shown in Table 1. in Table 1. 7200 6400 Experiment LHM OPnP SRPnP 7150 experiment LHM OPnP SRPnP 6300 7100 6200 Distance(mm) Distance(mm) 7050 6100 7000 6950 6000 6900 5900 6850 5800 6800 6750 0 10 20 30 Points Samples 40 5700 50 0 10 20 30 40 Points Samples 50 (b) (a) 5250 experiment LHM OPnP SRPnP 5200 Distance(mm) 5100 5050 SRPnP OPnP LHM 2.5 Average Relative Error(%) 5150 3 2 1.5 5000 4950 1 4900 0.5 4850 4800 0 10 20 30 Points Samples 40 0 50 7m 6m 5m 4m Samples 3m 2m (d) (c) Figure PnP algorithm distance detection results: (a) Detection results of optical beaFigure17. 
Figure 17. Traditional PnP algorithm distance detection results: (a) Detection results of optical beacons within 7 m; (b) Detection results of optical beacons within 6 m; (c) Detection results of optical beacons within 5 m; (d) The average relative error of translation.

Table 1. Experimental data and algorithm detection results.

| Sample Groups | Average Experiment Results (mm) | Average LHM Results (mm) | Average OPnP Results (mm) | Average SRPnP Results (mm) |
|---|---|---|---|---|
| 1 | 10,344.00 | None | None | None |
| 2 | 8892.00 | None | None | None |
| 3 | 7815.00 | None | None | None |
| 4 | 6968.00 | 7167.80 | 7143.45 | 7143.00 |
| 5 | 6122.00 | 6256.45 | 6234.07 | 6234.07 |
| 6 | 5015.00 | 5095.59 | 5072.82 | 5075.22 |
| 7 | 4082.00 | 4126.44 | 4126.27 | 4124.44 |
| 8 | 3012.00 | 3050.97 | 3050.45 | 3049.41 |
| 9 | 1987.00 | 2014.62 | 2014.34 | 2014.14 |

The experimental data in Table 1 were measured using a high-precision translation platform. The 50 samples in the PnP solution data of each group were drawn at random from videos shot at the different distances. The data show that when the small optical beacon is far from the camera (10–7 m), the traditional PnP algorithms cannot produce a solution because the coordinates of the four feature points in the pixel coordinate system cannot be obtained. In the middle- and long-distance range (5–7 m), the accuracy of the LHM iterative algorithm is low compared with the other two, with an average relative error of about 2.53%. Overall, the solution accuracies of the SRPnP and OPnP algorithms differ little, with average relative errors of 1.51% and 1.53%, respectively.

To assess the accuracy and detection range of our method, it is compared with SRPnP. The results are shown in Figure 18.
Figure 18. Ranging results based on super-resolution image enhancement and a subpixel centroid localization method: (a) Detection results of optical beacons within 10 m; (b) Detection results of optical beacons within 9 m; (c) Detection results of optical beacons within 8 m; (d) The average relative error of translation.

The data in Figure 18 show that the failure to recognize and locate the feature points at long distance (10–7 m) has been resolved, and the feature point extraction range for the distant small optical beacon has been greatly extended. The average relative error of the SRPnP algorithm in solving a 10–7 m target is 1.25%, and the average relative error at short distances (within 7 m) is 0.83%. From the above data, our method reduces the calculation error by 33.6% and improves the calculation accuracy.

To further assess the efficiency of the algorithm, our algorithm and the traditional algorithm are timed under the same hardware and software conditions. The video capture rate of the camera used in the experiment is 20 FPS, and the algorithms are tested on a personal computer equipped with an i7-8750 CPU, 32 GB of memory, and Windows 10. We used Visual Studio 2017 and Qt 5.9.8 for the pose algorithm implementation and neural network migration, without GPU acceleration. In Figure 19, the computing time of our method is 0.063 s (15.87 FPS) at long distance, and the computing time of the SRPnP algorithm is 0.060 s (16.67 FPS). At short distance, the computing time of our method is 0.101 s (10.17 FPS), and the computing time of the SRPnP algorithm is 0.093 s (10.83 FPS). The average detection speed of our algorithm over the whole range is 0.088 s (11.36 FPS). The time consumption of the two methods differs little, because the target image is small and the combination of super-resolution and sub-pixel algorithms therefore has only a small impact.
Figure 19. Comparison of detection speeds of each frame image: (a) The operating speed of the algorithm within 5 m; (b) The operating speed of the algorithm at 10–5 m.

Figure 20 shows the dynamic positioning results of our algorithm, in which the solid lines are the solution results in the X, Y, and Z axis directions, respectively, and the dotted lines are the readings of the moving platform. The results show that our method has high detection accuracy and the advantage of real-time performance.

Figure 20. Dynamic positioning experiment results.
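The relative distance error and frame rate quoted in this section can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only and is not the authors' evaluation code: it assumes the relative error is |estimate − measurement| / measurement, uses the group means from Table 1 (groups 4–9, where all three solvers returned a result) rather than the individual samples, and the function names are hypothetical.

```python
from statistics import mean

# Group means in mm, transcribed from Table 1 (groups 4-9 only;
# groups 1-3 have no traditional PnP solution).
MEASURED = [6968.00, 6122.00, 5015.00, 4082.00, 3012.00, 1987.00]
SOLVED = {
    "LHM": [7167.80, 6256.45, 5095.59, 4126.44, 3050.97, 2014.62],
    "OPnP": [7143.45, 6234.07, 5072.82, 4126.27, 3050.45, 2014.34],
    "SRPnP": [7143.00, 6234.07, 5075.22, 4124.44, 3049.41, 2014.14],
}


def relative_error_percent(estimate_mm, truth_mm):
    """Relative distance error of one estimate, in percent (assumed definition)."""
    return abs(estimate_mm - truth_mm) / truth_mm * 100.0


def mean_relative_error_percent(estimates_mm, truths_mm):
    """Average of the per-group relative errors, in percent."""
    return mean(
        relative_error_percent(e, t) for e, t in zip(estimates_mm, truths_mm)
    )


def fps_from_frame_time(seconds_per_frame):
    """Frame rate implied by a single per-frame processing time."""
    return 1.0 / seconds_per_frame


if __name__ == "__main__":
    for name, values in SOLVED.items():
        print(f"{name}: {mean_relative_error_percent(values, MEASURED):.2f}%")
    print(f"0.088 s per frame -> {fps_from_frame_time(0.088):.2f} FPS")
```

Computed this way, the SRPnP and OPnP errors come out close to, but not exactly at, the 1.51% and 1.53% reported above. That is expected, since an error computed from group means need not equal an average taken over individual samples. Likewise, the reciprocal of a mean frame time generally differs from the mean of per-frame rates, so a reported FPS need not equal 1/t exactly.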
In this paper, LED optical beacon arrays of different colors and shapes are designed to verify the accuracy of the algorithm, as shown in Figure 21. Therefore, if a ranging experiment is designed for a system with multiple AUVs, a color recognition function can be added after target detection to perform ranging on the different types of installed optical beacons. The method described in this paper gives good solution results, and it also discriminates well against the false images (illusions) produced by the water surface and the lens glass.

Figure 21. Underwater optical beacon attitude calculation effect diagram: (a) Optical beacon of the green cross; (b) Optical beacon of the blue cross; (c) Optical beacon of the green trapezoid; (d) Optical beacon of the blue trapezoid.

5. Discussion

Table 2 shows the performance comparison between existing underwater optical beacon detection algorithms and the algorithm described in this paper.
It can be seen that the algorithm in this paper has high accuracy and a long detection range for optical beacons much smaller than the conventional size, and that its time consumption does not increase significantly. This shows that this paper provides an efficient and accurate optical beacon finding and positioning method for the end-docking of small AUVs.

Table 2. Performance comparison between existing algorithms and our algorithm.

| Method | Optical Beacon Size (mm) | Detection Range (m) | Detection Speed (s) | Average Relative Error |
|---|---|---|---|---|
| R. L.'s [15] | 100 | 3.6 | 0.015 | 2.00% |
| S. L.'s [16] | 2014 | 6.5 | 0.120 | 0.14% |
| R. R.'s [17] | 280 | 8.0 | 0.059 | 5.00% |
| Z. Y.'s [32] | 600 | 4.5 | 0.050 | 4.44% |
| Ours | 88 | 10 | 0.088 | 1.04% |

6. Conclusions

In this paper, a fast underwater monocular camera positioning technique for compact 4-light beacons is presented. It combines deep learning and conventional image processing techniques. The second part introduces the experimental equipment and system in detail. A YOLO v5 target detection model with a coordinated attention mechanism is constructed and compared with the original model and the model with CBAM. The model has a classification accuracy of 96.1% for small optical beacons, which is 1.5% higher than the original network structure, and the recall is also increased by 0.8%. A sub-pixel centroid localization method combining SRGAN super-resolution image enhancement and Zernike moments is proposed, which improves the feature localization accuracy of small target light sources to 0.001 pixels.
Finally, experimental verification shows that our method extends the detection range of small optical beacons to 10 m, keeps the average relative error of distance detection at 1.04%, and has a detection speed of 0.088 s (11.36 FPS). Our method provides a feasible monocular vision ranging scheme for small underwater optical beacons, with the advantages of fast calculation and high precision. The combination of super-resolution enhancement and sub-pixel edge refinement is not limited to underwater optical beacon finding in AUV docking; it can also be extended to other object detection fields, for example, satellite image remote sensing and small target detection tasks in medical images.

However, our method also has certain limitations. For example, the optical beacons and laser probes used in the experiment are only fixed on the high-precision rotary device. Constrained by the fixing method of the equipment and the motion mode of the rotary device, the experiment cannot simulate the problems faced in the dynamic docking of AUVs in real situations. In this paper, optical beacons of various shapes and colors are designed to address the problem of visual positioning in a multi-AUV working system. However, limited by the manufacturing cost of the equipment, the multi-AUV docking experiment has not been carried out. Therefore, mounting the optical beacon and the laser probe on a full-size AUV for sea trials, verifying the performance of the algorithm under dynamic conditions, and designing the visual recognition of the multi-AUV system are the next research directions.

Author Contributions: Conceptualization, B.Z.; Data curation, T.Z. and L.S.; Investigation, T.Z.; Methodology, B.Z.; Project administration, P.Z.; Resources, T.Z. and L.S.; Software, B.Z.; Supervision, P.Z. and F.Y.; Writing – original draft, B.Z.; Writing – review & editing, P.Z. and F.Y. All authors have read and agreed to the published version of the manuscript.
Funding: This research was funded by National Natural Science Foundation of China, grant number 51975116 and Natural Science Foundation of Shanghai, grant number 21ZR1402900.

Data Availability Statement: The data presented in this study are available on request from the corresponding author.

Conflicts of Interest: The authors declare no conflict of interest.

References

1. Hsu, H.Y.; Toda, Y.; Yamashita, K.; Watanabe, K.; Sasano, M.; Okamoto, A.; Inaba, S.; Minami, M. Stereo-vision-based AUV navigation system for resetting the inertial navigation system error. Artif. Life Robot. 2022, 27, 165–178.
2. Guo, Y.; Bian, C.; Zhang, Y.; Gao, J. An EPnP Based Extended Kalman Filtering Approach for Docking Pose Estimation of AUVs. In Proceedings of the International Conference on Autonomous Unmanned Systems (ICAUS 2021), Changsha, China, 24–26 September 2021; Springer Science and Business Media Deutschland GmbH: Changsha, China, 2022; pp. 2658–2667.
3. Dong, H.; Wu, Z.; Wang, J.; Chen, D.; Tan, M.; Yu, J. Implementation of Autonomous Docking and Charging for a Supporting Robotic Fish. IEEE Trans. Ind. Electron. 2022, 1–9.
4. Bosch, J.; Gracias, N.; Ridao, P.; Istenic, K.; Ribas, D. Close-Range Tracking of Underwater Vehicles Using Light Beacons. Sensors 2016, 16, 429.
5. Wynn, R.B.; Huvenne, V.A.I.; Le Bas, T.P.; Murton, B.J.; Connelly, D.P.; Bett, B.J.; Ruhl, H.A.; Morris, K.J.; Peakall, J.; Parsons, D.R.; et al. Autonomous Underwater Vehicles (AUVs): Their past, present and future contributions to the advancement of marine geoscience. Mar. Geol. 2014, 352, 451–468.
6. Jacobi, M. Autonomous inspection of underwater structures. Robot. Auton. Syst. 2015, 67, 80–86.
7. Loebis, D.; Sutton, R.; Chudley, J.; Naeem, W. Adaptive tuning of a Kalman filter via fuzzy logic for an intelligent AUV navigation system. Control Eng. Pract. 2004, 12, 1531–1539.
8. Sans-Muntadas, A.; Brekke, E.F.; Hegrenaes, O.; Pettersen, K.Y. Navigation and Probability Assessment for Successful AUV Docking Using USBL. In Proceedings of the 10th IFAC Conference on Manoeuvring and Control of Marine Craft, Copenhagen, Denmark, 24–26 August 2015; pp. 204–209.
9. Kinsey, J.C.; Whitcomb, L.L. Preliminary field experience with the DVLNAV integrated navigation system for oceanographic submersibles. Control Eng. Pract. 2004, 12, 1541–1549.
10. Marani, G.; Choi, S.K.; Yuh, J. Underwater autonomous manipulation for intervention missions AUVs. Ocean Eng. 2009, 36, 15–23.
11. Nicosevici, T.; Garcia, R.; Carreras, M.; Villanueva, M. A review of sensor fusion techniques for underwater vehicle navigation. In Proceedings of the Oceans '04 MTS/IEEE Techno-Ocean '04 Conference, Kobe, Japan, 9–12 November 2004; pp. 1600–1605.
12. Kondo, H.; Ura, T. Navigation of an AUV for investigation of underwater structures. Control Eng. Pract. 2004, 12, 1551–1559.
13. Bonin-Font, F.; Massot-Campos, M.; Lluis Negre-Carrasco, P.; Oliver-Codina, G.; Beltran, J.P. Inertial Sensor Self-Calibration in a Visually-Aided Navigation Approach for a Micro-AUV. Sensors 2015, 15, 1825–1860.
14. Li, Y.; Jiang, Y.; Cao, J.; Wang, B.; Li, Y. AUV docking experiments based on vision positioning using two cameras. Ocean Eng. 2015, 110, 163–173.
15. Zhong, L.; Li, D.; Lin, M.; Lin, R.; Yang, C. A Fast Binocular Localisation Method for AUV Docking. Sensors 2019, 19, 1735.
16. Liu, S.; Ozay, M.; Okatani, T.; Xu, H.; Sun, K.; Lin, Y. Detection and Pose Estimation for Short-Range Vision-Based Underwater Docking. IEEE Access 2019, 7, 2720–2749.
17. Ren, R.; Zhang, L.; Liu, L.; Yuan, Y. Two AUVs Guidance Method for Self-Reconfiguration Mission Based on Monocular Vision. IEEE Sens. J. 2021, 21, 10082–10090.
18. Venkatesh Alla, D.N.; Bala Naga Jyothi, V.; Venkataraman, H.; Ramadass, G.A. Vision-based Deep Learning algorithm for Underwater Object Detection and Tracking. In Proceedings of the OCEANS 2022-Chennai, Chennai, India, 21–24 February 2022; Institute of Electrical and Electronics Engineers Inc.: Chennai, India, 2022.
19. Sun, K.; Han, Z. Autonomous underwater vehicle docking system for energy and data transmission in cabled ocean observatory networks. Front. Energy Res. 2022, 10, 1232.
20. Jocher, G. YOLOv5 Release v6.0. Available online: https://github.com/ultralytics/yolov5/tree/v6.0 (accessed on 12 October 2021).
21. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.
22. Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Electr Network, 19–25 June 2021; pp. 13708–13717.
23. Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 4681–4690.
24. Khotanzad, A.; Hong, Y.H. Invariant image recognition by Zernike moments. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 489–497.
25. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.
26. Lu, C.-P.; Hager, G.D.; Mjolsness, E. Fast and globally convergent pose estimation from video images. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 610–622.
27. Zheng, Y.; Kuang, Y.; Sugimoto, S.; Astrom, K.; Okutomi, M. Revisiting the pnp problem: A fast, general and optimal solution. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2344–2351.
28. Wang, P.; Xu, G.; Cheng, Y.; Yu, Q. A simple, robust and fast method for the perspective-n-point problem. Pattern Recognit. Lett. 2018, 108, 31–37.
29. Baiden, G.; Bissiri, Y.; Masoti, A. Paving the way for a future underwater omni-directional wireless optical communication systems. Ocean Eng. 2009, 36, 633–640.
30. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.
31. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.
32. Yan, Z.; Gong, P.; Zhang, W.; Li, Z.; Teng, Y. Autonomous Underwater Vehicle Vision Guided Docking Experiments Based on L-Shaped Light Array. IEEE Access 2019, 7, 72567–72576.