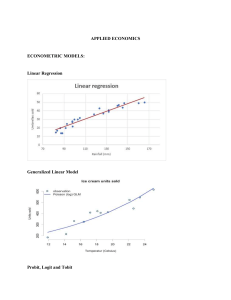

Chapter 15: Qualitative Response Regression Models, Part 2

Before the recess
• Models considered in Chapters 1 to 13: the dependent variable was quantitative, whereas the explanatory variables were quantitative, qualitative or a mixture
• In this chapter the dependent variable (the response variable) is qualitative
• LPM: estimated by OLS, but with problems

Problems of LPM
• The LPM poses several problems, so OLS cannot simply be extended to regression models with a binary dependent variable:
  – Non-normality of the disturbances $u_i$
  – Heteroscedastic variances of the disturbances
  – Nonfulfillment of $0 \le E(Y_i \mid X_i) \le 1$
  – Questionable value of $R^2$ as a measure of goodness of fit
• Most of these problems are surmountable
• But the fundamental problem remains: the LPM assumes that the marginal effect of X is constant throughout, e.g. in the home ownership example the probability of owning a house increases by the same constant amount of 0.1 for every unit increase in X

Alternatives to LPM
• What do we need?
  – A probability model where, as X increases, the probability of the event occurring increases but never steps outside the 0-1 interval
  – A nonlinear relationship between P and X: “one which approaches zero at slower and slower rates as X gets small and approaches one at slower and slower rates as X gets very large.”
• Such an S-shaped curve resembles the cumulative distribution function (CDF) of a random variable
• For each random variable, there is a unique CDF
• CDFs commonly chosen to represent the 0-1 response models are:
  – Logistic (logit model)
  – Normal (probit/normit model)

Logit model
• Home ownership example, LPM: $P_i = \beta_1 + \beta_2 X_i$
• Now consider a different representation (the logistic distribution function):
  $P_i = \dfrac{1}{1 + e^{-(\beta_1 + \beta_2 X_i)}} = \dfrac{1}{1 + e^{-Z_i}} = \dfrac{e^{Z_i}}{1 + e^{Z_i}}$, where $Z_i = \beta_1 + \beta_2 X_i$
• $Z_i$ ranges from $-\infty$ to $+\infty$, $P_i$ ranges between 0 and 1, and $P_i$ is nonlinearly related to $Z_i$ (and hence to $X_i$), so both requirements are satisfied
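As a rough illustration of the contrast between the LPM and the logistic representation, the sketch below evaluates both over a range of X. The coefficients `BETA1 = -1.0` and `BETA2 = 0.1` are hypothetical values chosen only for illustration; they are not estimates from the home ownership example.

```python
import math

def lpm_probability(x, beta1, beta2):
    """Linear probability model: P = beta1 + beta2 * X (can leave [0, 1])."""
    return beta1 + beta2 * x

def logistic_probability(x, beta1, beta2):
    """Logistic CDF: P = 1 / (1 + exp(-Z)) with Z = beta1 + beta2 * X."""
    z = beta1 + beta2 * x
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical coefficients, assumed purely for illustration.
BETA1, BETA2 = -1.0, 0.1

# Print X, the LPM "probability" and the logistic probability side by side.
for x in (-20, 0, 10, 20, 40):
    print(x,
          round(lpm_probability(x, BETA1, BETA2), 3),
          round(logistic_probability(x, BETA1, BETA2), 3))
```

With these assumed values the LPM line drops below 0 and rises above 1 at the extremes, while the logistic probabilities stay strictly inside the 0-1 interval and flatten out in an S-shape.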
• But this creates a new estimation problem: $P_i$ is nonlinear not only in X but also in the parameters, so OLS cannot be used directly. The equation can, however, be linearized

Linearizing the model (1)
• Probability of owning a house: $P_i = \dfrac{e^{Z_i}}{1 + e^{Z_i}}$
• Probability of not owning a house: $1 - P_i = \dfrac{1}{1 + e^{Z_i}}$
• Odds ratio in favour of owning a house (the ratio of the probability that a family will own a house to the probability that it will not, e.g. if P = 0.8, the odds are 4 to 1 in favour of the family owning a house):
  $\dfrac{P_i}{1 - P_i} = \dfrac{1 + e^{Z_i}}{1 + e^{-Z_i}} = e^{Z_i}$

Linearizing the model (2)
• Take the natural log of the odds ratio:
  $L_i = \ln\left(\dfrac{P_i}{1 - P_i}\right) = Z_i = \beta_1 + \beta_2 X_i$
  – L is called the logit, hence the name logit model
  – The logit is the log of the odds ratio
  – It is not only linear in X but also linear in the parameters

Features of the logit model
• As P goes from 0 to 1, the logit L goes from $-\infty$ to $+\infty$; although the probabilities lie between 0 and 1, the logits are not so bounded
• Although L is linear in X, the probabilities themselves are not (in contrast with the LPM, where the probability increases linearly with X)
• Many regressors can be added to the model
• If L is positive, then when the value of the regressor(s) increases, the odds that the regressand equals 1 (some event of interest happens) increase. If L is negative, the odds that the regressand equals 1 decrease as the value of X increases
• The slope measures the change in L for a unit change in X, i.e. how the log-odds in favour of owning a house change as income changes by one unit. The intercept is the value of the log-odds in favour of owning a house if income is zero

Features of the logit model (continued)
• Given a certain level of income (e.g. X*), if we want the probability of owning a house rather than the odds in favour of owning a house, it can be estimated directly from $P_i = \dfrac{e^{Z_i}}{1 + e^{Z_i}}$ once the estimates of $\beta_1$ and $\beta_2$ are available
• The LPM assumes that $P_i$ is linearly related to $X_i$; the logit model assumes that the log of the odds ratio is linearly related to $X_i$

Estimation of logit model
• $L_i = \ln\left(\dfrac{P_i}{1 - P_i}\right) = \beta_1 + \beta_2 X_i + u_i$  (1)
• To estimate equation (1), we need values for X and L
• This depends on the type of data we have for analysis:
  – Data at the individual / micro level
  – Grouped / replicated data (we will not be covering this type of data)

Data at individual level
• OLS estimation of equation (1) is infeasible
• For example, in the housing example:
  – $P_i = 1$ if a family owns a house and $P_i = 0$ if it does not
  – Substituting into the logit $L_i = \ln\left(\dfrac{P_i}{1 - P_i}\right)$ we obtain:
  – $L_i = \ln\left(\dfrac{1}{0}\right)$ if a family owns a house
  – $L_i = \ln\left(\dfrac{0}{1}\right)$ if a family does not own a house
  – These expressions are meaningless, so OLS cannot be used
  – We have to use the maximum-likelihood (ML) method to estimate the parameters

Grouped / replicated data
• Leave out: second half of p. 556 to p. 561
• Start again at Section 15.8

Logit model for ungrouped / individual data: example
• Student’s final grade in an intermediate microeconomics course (dependent variable Grade: Y = 1 if the final grade was an A, Y = 0 if the final grade was a B or a C)
• Independent variables:
  – GPA: grade point average
  – TUCE: score on an exam given at the beginning of term to test entering knowledge of macroeconomics
  – PSI: personalized system of instruction (1 if the new teaching method is used, 0 otherwise)

Example (continued)
• Logit model:
  $L_i = \ln\left(\dfrac{P_i}{1 - P_i}\right) = \beta_1 + \beta_2 GPA_i + \beta_3 TUCE_i + \beta_4 PSI_i + u_i$
• OLS / WLS cannot be used; a nonlinear estimating procedure (ML) is required
• Estimated in EViews

Example (continued)
• Keep in mind:
• ML is a large-sample method, so the estimated standard errors are asymptotic
• Therefore, instead of using t statistics to evaluate statistical significance, we use the Z statistic (standard normal distribution). Inferences are based on the normal table

Example (continued)
• $R^2$ is not meaningful in binary regressand models. We can use a pseudo $R^2$ (in EViews, the McFadden $R^2$) or the count $R^2$:
  – $\text{Count } R^2 = \dfrac{\text{number of correct predictions}}{\text{total number of observations}}$
  – Since the regressand in the logit model takes a value of 1 or 0, if the predicted probability is greater than 0.5 we classify it as 1, and if it is less than 0.5 we classify it as 0
  – Then count the number of correct predictions and compute the count $R^2$ as specified in the equation
  – Keep in mind that goodness of fit is of secondary importance in these models; the expected signs of the coefficients are more important
• The counterpart of the F-test used in linear regression models is the likelihood ratio (LR) statistic. Under the null hypothesis it follows the $\chi^2$ distribution with df equal to the number of explanatory variables

Example (continued)
• Estimated logit: Grade = -13.0213 + 2.8261 GPA + 0.0951 TUCE + 2.3786 PSI
• If GPA increases by one unit, the estimated logit increases on average by 2.83 units, ceteris paribus (a positive relationship, as for the other explanatory variables)
• A more meaningful interpretation uses the odds ratio:
  – Take the antilog of the slope coefficient: $e^{2.8261} = 16.88$
  – For a one-unit increase in GPA, the odds of getting an A are multiplied by more than 16 (almost 17), ceteris paribus
• TUCE is not statistically significant, but GPA and PSI are
• PSI: $e^{2.3786} = 10.79$ (the odds of getting an A for students who are exposed to the new method of teaching are more than 10 times the odds for students who are not exposed to it, ceteris paribus)
• The model is significant overall: the p-value of the LR statistic is less than 0.05

Example (continued)
• Estimated logit: Grade = -13.0213 + 2.8261 GPA + 0.0951 TUCE + 2.3786 PSI
• If we are interested in the actual probability of student number 10 getting an A grade:
  – Grade(10) = -13.0213 + 2.8261(3.92) + 0.0951(29) + 2.3786(0) = 0.816595 (logit value; the rounded coefficients shown give roughly 0.815, so the reported value presumably reflects unrounded estimates)
  – $P_{10} = \dfrac{1}{1 + e^{-0.816595}} = 0.693513$ (probability)
  – The student’s actual final grade was an A, and our logit model assigns a probability of 1 to a student who gets an A. The estimated probability of 0.69 is not exactly 1 but relatively close to it
• To obtain the count $R^2$:
  – Obtain the actual, fitted, residual table
  – Identify the incorrect predictions

Example (continued)
• Count $R^2$ = 26/32 = 0.8125
• McFadden $R^2$ = 0.374
• The two values are not directly comparable, but they give an indication of the orders of magnitude (and goodness of fit is not that important in these models)

Probit model
• We know that we have to make use of a cumulative distribution function (CDF) to explain the behaviour of a binary dependent variable
• The logistic CDF is not the only CDF that can be used
• The normal CDF has been found useful in some applications; the resulting model is known as the probit model / normit model
• In principle we can substitute the normal CDF in place of the logistic CDF in:
  $P_i = \dfrac{1}{1 + e^{-(\beta_1 + \beta_2 X_i)}}$

Probit model (continued)
• Alternatively, the probit model can be presented based on utility theory, or the rational choice perspective on behaviour, developed by McFadden
• If we choose the normal distribution as the appropriate probability distribution, we can use the probit model
• The model is mathematically difficult as it involves integrals
• For practical purposes, logit and probit models give similar results
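To make the last two points concrete, the following sketch evaluates the standard normal CDF (whose integral is handled here through the error function) next to the logistic CDF. The rescaling factor `SCALE = pi / sqrt(3)` is an assumption made for this illustration: it matches the variances of the two distributions so that their index values are on a comparable footing. The grid of Z values is likewise arbitrary.

```python
import math

def logistic_cdf(z):
    """Logistic CDF: 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def normal_cdf(z):
    """Standard normal CDF, computed via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Assumption: the standard logistic has variance pi^2 / 3, so multiplying the
# index by pi / sqrt(3) puts it on the same (unit-variance) scale as the normal.
SCALE = math.pi / math.sqrt(3.0)

# Largest gap between the two probabilities over an arbitrary grid of Z values.
grid = [i / 10.0 for i in range(-30, 31)]
max_gap = max(abs(normal_cdf(z) - logistic_cdf(SCALE * z)) for z in grid)
print(round(max_gap, 4))
```

Under this assumed rescaling the two curves stay within a few percentage points of each other over the grid, which is the sense in which the two models tend to give similar fitted probabilities.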
• The choice depends on ease of computation (computer packages)

Probit estimation with grouped data
• Leave out from the bottom of p. 567 to p. 570
• Start again with the probit model for ungrouped / individual data

Probit model for ungrouped / individual data
• Repeat the example on the final grade in the intermediate microeconomics course

Probit model example
• Results can be compared to the logit model estimated previously
• GPA and PSI are individually statistically significant and the model is significant overall
• We cannot compare the logit and probit regression coefficients directly

Marginal effects of models
• In the linear regression model, the slope measures the change in the average value of Y for a unit change in X, ceteris paribus
• In the LPM, the slope measures the change in the probability of the event occurring as a result of a unit change in X, ceteris paribus
• In the logit model, the slope measures the change in the log of the odds associated with a unit change in that variable, ceteris paribus. The rate of change in the probability of the event happening is given by $\beta_j P_i (1 - P_i)$. All variables included in the analysis are involved in evaluating $P_i$
• In the probit model, the rate of change in the probability is more complicated and is given by $\beta_j f(Z_i)$, where $f(Z_i)$ is the density function of the standard normal variable and $Z_i$ is the regression model used in the analysis

Logit and probit models
• Which model is preferable, logit or probit?
• The models are quite similar. The logistic distribution has slightly fatter tails, so the conditional probability approaches 0 or 1 at a slower rate in the logit than in the probit model
• There is no compelling reason to choose one over the other; many researchers choose the logit model because of its comparative mathematical simplicity
• Remember that the coefficients of the two models are not directly comparable (they have to be adjusted before comparison because the two distributions have different variances)

Rest of the chapter
• Leave out
• Try to work through the practical before the tutorial sessions this week.

Next week
• No lecture or tutorials
• Semester Test 2
  – Tuesday 19 September, 18:00 in Eles 201 and 202
  – Chapters 14 (theory only) and 15 (theory and practical)
  – 50 marks, 90 minutes

Logit vs. Probit: Fatter tails
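A small numerical sketch of what "fatter tails" means: it compares the upper-tail probability P(Z > z) of the logistic and the standard normal distributions. As an assumption for this comparison, the logistic index is multiplied by `SCALE = pi / sqrt(3)` so that both distributions have unit variance; the z values are arbitrary.

```python
import math

def logistic_upper_tail(z):
    """P(Z > z) for the standard logistic distribution."""
    return 1.0 / (1.0 + math.exp(z))

def normal_upper_tail(z):
    """P(Z > z) for the standard normal, via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

# Assumption: rescale the logistic index so both distributions have variance 1
# (the standard logistic has variance pi^2 / 3).
SCALE = math.pi / math.sqrt(3.0)

# For each z: logistic tail, normal tail, and their ratio.
ratios = []
for z in (2.0, 3.0, 4.0):
    logit_tail = logistic_upper_tail(SCALE * z)
    probit_tail = normal_upper_tail(z)
    ratios.append(logit_tail / probit_tail)
    print(z, logit_tail, probit_tail, logit_tail / probit_tail)
```

Under these assumptions the logistic tail probability exceeds the normal one at each of the chosen points, and the ratio grows as z moves further out, i.e. the logistic probability approaches 0 or 1 more slowly.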