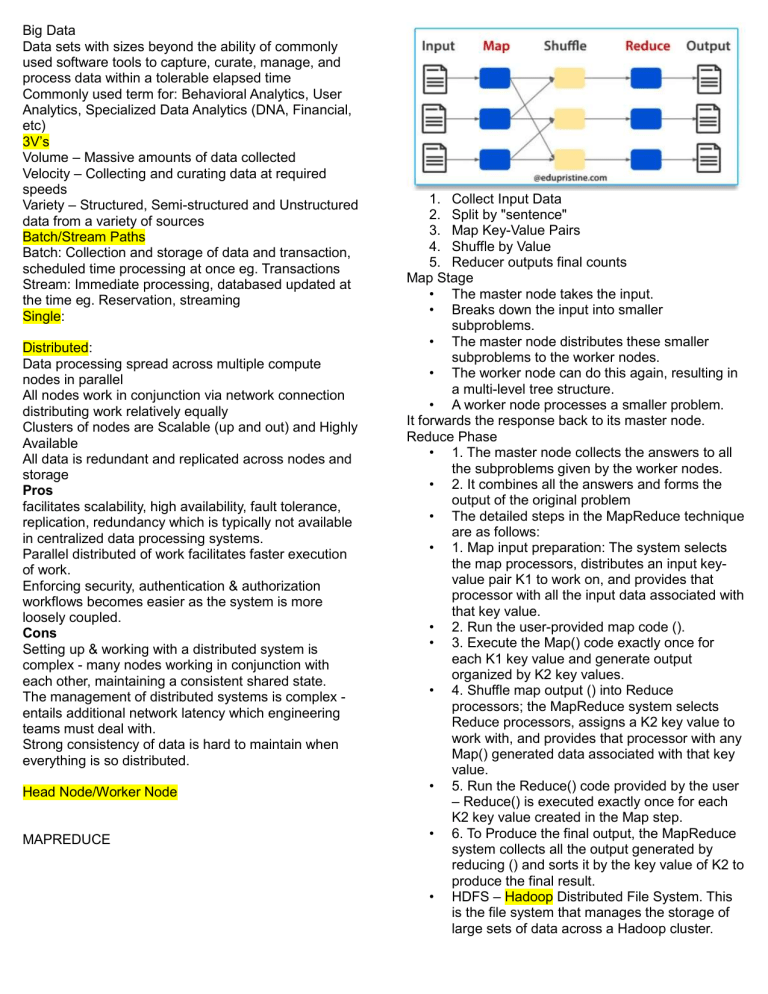

Big Data
Data sets whose size is beyond the ability of commonly used software tools to capture, curate, manage, and process within a tolerable elapsed time.
Commonly used term for: behavioral analytics, user analytics, specialized data analytics (DNA, financial, etc.)

3V’s
• Volume – Massive amounts of data collected
• Velocity – Collecting and curating data at the required speeds
• Variety – Structured, semi-structured, and unstructured data from a variety of sources

Batch/Stream Paths
• Batch: Data and transactions are collected and stored, then processed all at once at a scheduled time, e.g. transactions
• Stream: Data is processed immediately and the database is updated at that time, e.g. reservations, streaming

Single:

Distributed:
• Data processing is spread across multiple compute nodes in parallel
• All nodes work in conjunction over a network connection, distributing work relatively equally
• Clusters of nodes are scalable (up and out) and highly available
• All data is redundant and replicated across nodes and storage

Pros
• Facilitates scalability, high availability, fault tolerance, replication, and redundancy, which are typically not available in centralized data processing systems.
• Parallel distribution of work facilitates faster execution.
• Enforcing security, authentication, and authorization workflows becomes easier because the system is more loosely coupled.

Cons
• Setting up and working with a distributed system is complex: many nodes work in conjunction with each other while maintaining a consistent shared state.
• Managing a distributed system is complex and entails additional network latency, which engineering teams must deal with.
• Strong consistency of data is hard to maintain when everything is so distributed.

Head Node/Worker Node

MAPREDUCE
1. Collect input data
2. Split by "sentence"
3. Map key-value pairs
4. Shuffle by key
5. Reducer outputs final counts

Map Stage
• The master node takes the input.
• It breaks the input down into smaller subproblems.
• The master node distributes these smaller subproblems to the worker nodes.
• A worker node can do this again, resulting in a multi-level tree structure.
• A worker node processes its smaller problem and forwards the response back to its master node.

Reduce Phase
1. The master node collects the answers to all the subproblems from the worker nodes.
2. It combines all the answers to form the output of the original problem.

The detailed steps in the MapReduce technique are as follows:
1. Map input preparation: The system selects the Map processors, assigns each an input key value K1 to work on, and provides that processor with all the input data associated with that key value.
2. Run the user-provided Map() code.
3. Map() is executed exactly once for each K1 key value and generates output organized by K2 key values.
4. Shuffle the Map() output to the Reduce processors: the MapReduce system selects the Reduce processors, assigns each a K2 key value to work with, and provides that processor with all the Map()-generated data associated with that key value.
5. Run the user-provided Reduce() code: Reduce() is executed exactly once for each K2 key value produced by the Map step.
6. Produce the final output: the MapReduce system collects all the output generated by Reduce() and sorts it by K2 key value to produce the final result.

• HDFS – Hadoop Distributed File System. The file system that manages the storage of large data sets across a Hadoop cluster. HDFS can handle both structured and unstructured data. The storage hardware can range from consumer-grade HDDs to enterprise drives.
• MapReduce. The processing component of the Hadoop ecosystem. It assigns the data fragments from HDFS to separate map tasks in the cluster, processes the chunks in parallel, and combines the pieces into the desired result.
• YARN. Yet Another Resource Negotiator. Responsible for managing computing resources and job scheduling.
• Hadoop Common. The set of common libraries and utilities that the other modules depend on. Another name for this module is Hadoop Core, as it provides support for all other Hadoop components.

Data Sharding

Optimization

Spark
• Apache Spark is an open-source tool that can run in standalone mode or on cloud platforms.
• It is designed for fast performance and uses RAM for caching and processing data.
• The Spark engine was created to improve on the efficiency of MapReduce while keeping its benefits.
• Even though Spark does not have its own file system, it can access data on many different storage solutions. The data structure that Spark uses is called the Resilient Distributed Dataset (RDD).
• Apache Spark Core. The basis of the whole project. Spark Core is responsible for necessary functions such as scheduling, task dispatching, input and output operations, and fault recovery. Other functionality is built on top of it.
• Spark Streaming. This component enables the processing of live data streams. Data can originate from many different sources, including Kafka, Kinesis, and Flume.
• Spark SQL. Spark uses this component to gather information about structured data and how the data is processed.
• Machine Learning Library (MLlib). This library consists of many machine learning algorithms. MLlib’s goals are scalability and making machine learning more accessible.
• GraphX. A set of APIs for graph analytics tasks.

Streaming Data

Lambda Architecture

Kappa Architecture

Privacy and Security
Data privacy is the ability of individuals and organizations to protect and control personal information that can be collected, used, shared, or sold by the organizations harvesting that information.
Data security means protecting digital data, such as data in a database, from destructive forces and from the unwanted actions of unauthorized users.
• Data at Rest – Data stored in files, databases, the cloud, removable media, or other storage, even physically!
• Data in Transit – Data being transmitted through networks, across the internet, or over cellular connections.
• Data Encryption – A security method in which information is encoded and can only be accessed or decrypted by a user with the correct encryption key. Encrypted data, also known as ciphertext, appears scrambled or unreadable to a person or entity accessing it without permission.
• Data Masking – A method of creating a structurally similar but inauthentic version of an organization's data that can be used for purposes such as software testing and user training. The purpose is to protect the actual data while having a functional substitute for occasions when the real data is not required.
• Single Sign-On (SSO) – An authentication scheme that allows a user to log in with a single ID to any of several related, yet independent, software systems. True single sign-on allows the user to log in once and access services without re-entering authentication factors.

Semi-structured

XML
Extensible Markup Language (XML) is a markup language and file format for storing, transmitting, and reconstructing arbitrary data. It defines a set of rules for encoding documents in a format that is both human-readable and machine-readable.
Popularized by SOAP, a messaging protocol specification for exchanging structured information in the implementation of web services in computer networks. SOAP uses the XML Information Set for its message format and relies on application layer protocols for message negotiation and transmission, most often Hypertext Transfer Protocol (HTTP), although some legacy systems communicate over Simple Mail Transfer Protocol (SMTP).
Includes other markup languages, EDI, etc.
Underpins email and MS Word.

JSON
JSON is a language-independent data format. It was derived from JavaScript, but many modern programming languages include code to generate and parse JSON-format data. JSON filenames use the extension .json.
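As a small illustration of generating and parsing JSON-format data from a language other than JavaScript, the following sketch uses Python's standard `json` module. The `record` contents are hypothetical and chosen only for the example.

```python
import json

# Hypothetical record; the field names are illustrative only.
record = {"name": "Ada", "languages": ["Python", "Go"], "active": True}

# Generate JSON text from a Python object (keys sorted for a stable result).
text = json.dumps(record, sort_keys=True)

# Parse the JSON text back into Python objects.
restored = json.loads(text)

print(text)
print(restored == record)
```

Note how Python's `True` becomes JSON's `true` in the text and is converted back on parsing, which is what makes the format usable across languages.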
JSON text is also valid JavaScript expression syntax (since ES2019, JSON is a syntactic subset of ECMAScript), although a JSON file makes no changes to a web page on its own.
Popularized by REST: Representational state transfer (REST) is a software architectural style that describes a uniform interface between physically separate components, often across the Internet in a client-server architecture.

NoSQL
• Key-value store: Key-value (KV) stores use the associative array (also called a map or dictionary) as their fundamental data model. In this model, data is represented as a collection of key-value pairs, such that each possible key appears at most once in the collection.
• Document store: Assumes that documents encapsulate and encode data (or information) in some standard format or encoding. Encodings in use include XML, YAML, and JSON, as well as binary forms like BSON. Documents are addressed in the database via a unique key, and an API or query language retrieves documents based on their contents.
• Graph: Designed for data whose relations are well represented as a graph consisting of elements connected by a finite number of relations. Examples include social relations, public transport links, road maps, and network topologies.
• Wide-column store: Also known as an extensible record store. It uses tables, rows, and columns, but unlike a relational database, the names and format of the columns can vary from row to row in the same table. A wide-column store can be interpreted as a two-dimensional key-value store.

Reading
Data-Driven Decision Making
• Data can help managers make better decisions by providing insights that might not be apparent through intuition.
• Steps: defining the problem, identifying relevant data sources, analyzing the data, and communicating the findings to stakeholders.
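The four NoSQL data models described above can be sketched with plain Python structures. This is only an in-memory illustration under simplifying assumptions, not how any particular database is implemented; all names (`kv_store`, `find_documents`, `followers_of`, `get_cell`) and the sample data are hypothetical.

```python
# Hypothetical in-memory stand-ins for the four NoSQL data models.
# Plain Python only; no real database client is involved.

# Key-value store: an associative array in which each key appears at most once.
kv_store = {}
kv_store["session:42"] = "alice"
kv_store["session:42"] = "bob"  # same key again: the value is overwritten

# Document store: JSON-like documents addressed by a unique key,
# plus a simple query on document contents.
documents = {
    "u1": {"name": "Alice", "city": "Lisbon", "tags": ["admin"]},
    "u2": {"name": "Bob", "city": "Oslo"},
}

def find_documents(store, field, value):
    """Return the keys of documents whose `field` equals `value`."""
    return [key for key, doc in store.items() if doc.get(field) == value]

# Graph: elements connected by relations, stored as an adjacency list.
follows = {
    "alice": {"bob", "carol"},
    "bob": {"carol"},
    "carol": set(),
}

def followers_of(graph, person):
    """Return, in sorted order, everyone with an edge pointing at `person`."""
    return sorted(src for src, targets in graph.items() if person in targets)

# Wide-column store: every row has its own set of columns, so a cell is
# addressed by (row key, column name), like a two-dimensional key-value store.
wide_rows = {
    "row1": {"name": "Alice", "email": "alice@example.com"},
    "row2": {"name": "Bob", "phone": "555-0100", "last_login": "2024-01-01"},
}

def get_cell(rows, row_key, column):
    """Return the cell value, or None if the row lacks that column."""
    return rows.get(row_key, {}).get(column)

print(kv_store)
print(find_documents(documents, "city", "Oslo"))
print(followers_of(follows, "carol"))
print(get_cell(wide_rows, "row1", "phone"), get_cell(wide_rows, "row2", "phone"))
```

The sketch highlights the differences in addressing: the key-value store knows nothing about its values, the document store can query inside them, the graph is navigated by relations, and the wide-column store tolerates rows with different columns.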