CONTENTS

Preface

Part I Circuits and Models

1 Introduction

1.1 Microelectronics

1.2 Semiconductor Technologies and Circuit Taxonomy

1.3 Microelectronic Design Styles

1.4 Design of Microelectronic Circuits

1.5 Computer-Aided Synthesis and Optimization

1.5.1 Circuit Models

1.5.2 Synthesis

1.5.3 Optimization

1.6 Organization of the Book

1.7 Related Problems

1.7.1 A Closer Look at Physical Design Problems

1.7.2 Computer-Aided Simulation

1.7.3 Computer-Aided Verification

1.7.4 Testing and Design for Testability

1.8 Synthesis and Optimization: A Historic Perspective

1.9 References

2 Background

2.1 Notation

2.2 Graphs

2.2.1 Undirected Graphs

2.2.2 Directed Graphs

2.2.3 Perfect Graphs

2.3 Combinatorial Optimization

2.3.1 Decision and Optimization Problems

2.3.2 Algorithms

2.3.3 Tractable and Intractable Problems

2.3.4 Fundamental Algorithms

2.4 Graph Optimization Problems and Algorithms

2.4.1 The Shortest and Longest Path Problems

2.4.2 Vertex Cover

2.4.3 Graph Coloring

2.4.4 Clique Covering and Clique Partitioning

2.5 Boolean Algebra and Applications

2.5.1 Boolean Functions

2.5.2 Representations of Boolean Functions

2.5.3 Satisfiability and Cover

2.6 Perspectives

2.7 References

2.8 Problems

3 Hardware Modeling

3.1 Introduction

3.2 Hardware Modeling Languages

3.2.1 Distinctive Features of Hardware Languages

3.2.2 Structural Hardware Languages

3.2.3 Behavioral Hardware Languages

3.2.4 HDLs Used for Synthesis

3.3 Abstract Models

3.3.1 Structures

3.3.2 Logic Networks

3.3.3 State Diagrams

3.3.4 Data-flow and Sequencing Graphs

3.4 Compilation and Behavioral Optimization

3.4.1 Compilation Techniques

3.4.2 Optimization Techniques

3.5 Perspectives

3.6 References

3.7 Problems

Part II Architectural-Level Synthesis and Optimization

4 Architectural Synthesis

4.1 Introduction

4.2 Circuit Specifications for Architectural Synthesis

4.2.1 Resources

4.2.2 Constraints

4.3 The Fundamental Architectural Synthesis Problems

4.3.1 The Temporal Domain: Scheduling

4.3.2 The Spatial Domain: Binding

4.3.3 Hierarchical Models

4.3.4 The Synchronization Problem

4.4 Area and Performance Estimation

4.4.1 Resource-Dominated Circuits

4.4.2 General Circuits

4.5 Strategies for Architectural Optimization

4.5.1 Area/Latency Optimization

4.5.2 Cycle-Time/Latency Optimization

4.5.3 Cycle-Time/Area Optimization

4.6 Data-Path Synthesis

4.7 Control-Unit Synthesis

4.7.1 Microcoded Control Synthesis for Non-Hierarchical Sequencing Graphs with Data-Independent Delay Operations

4.7.2 Microcoded Control Optimization Techniques*

4.7.3 Hard-Wired Control Synthesis for Non-Hierarchical Sequencing Graphs with Data-Independent Delay Operations

4.7.4 Control Synthesis for Hierarchical Sequencing Graphs with Data-Independent Delay Operations

4.7.5 Control Synthesis for Unbounded-Latency Sequencing Graphs*

4.8 Synthesis of Pipelined Circuits

4.9 Perspectives

4.10 References

4.11 Problems

5 Scheduling Algorithms

5.1 Introduction

5.2 A Model for the Scheduling Problems

5.3 Scheduling without Resource Constraints

5.3.1 Unconstrained Scheduling: The ASAP Scheduling Algorithm

5.3.2 Latency-Constrained Scheduling: The ALAP Scheduling Algorithm

5.3.3 Scheduling Under Timing Constraints

5.3.4 Relative Scheduling*

5.4 Scheduling with Resource Constraints

5.4.1 The Integer Linear Programming Model

5.4.2 Multiprocessor Scheduling and Hu's Algorithm

5.4.3 Heuristic Scheduling Algorithms: List Scheduling

5.4.4 Heuristic Scheduling Algorithms: Force-directed Scheduling*

5.4.5 Other Heuristic Scheduling Algorithms*

5.5 Scheduling Algorithms for Extended Sequencing Models*

5.5.1 Scheduling Graphs with Alternative Paths*

5.6 Scheduling Pipelined Circuits*

5.6.1 Scheduling with Pipelined Resources*

5.6.2 Functional Pipelining*

5.6.3 Loop Folding*

5.7 Perspectives

5.8 References

5.9 Problems

6 Resource Sharing and Binding

6.1 Introduction

6.2 Sharing and Binding for Resource-Dominated Circuits

6.2.1 Resource Sharing in Non-Hierarchical Sequencing Graphs

6.2.2 Resource Sharing in Hierarchical Sequencing Graphs

6.2.3 Register Sharing

6.2.4 Multi-Port Memory Binding

6.2.5 Bus Sharing and Binding

6.3 Sharing and Binding for General Circuits*

6.3.1 Unconstrained Minimum-Area Binding*

6.3.2 Performance-Constrained and Performance-Directed Binding*

6.3.3 Considerations for Other Binding Problems*

6.4 Concurrent Binding and Scheduling

6.5 Resource Sharing and Binding for Non-Scheduled Sequencing Graphs

6.5.1 Sharing and Binding for Non-Scheduled Models

6.5.2 Size of the Design Space*

6.6 The Module Selection Problem

6.7 Resource Sharing and Binding for Pipelined Circuits

6.8 Sharing and Structural Testability*

6.9 Perspectives

6.10 References

6.11 Problems

Part III Logic-Level Synthesis and Optimization

7 Two-Level Combinational Logic Optimization

7.1 Introduction

7.2 Logic Optimization Principles

7.2.1 Definitions

7.2.2 Exact Logic Minimization

7.2.3 Heuristic Logic Minimization

7.2.4 Testability Properties

7.3 Operations on Two-Level Logic Covers

7.3.1 The Positional-Cube Notation

7.3.2 Functions with Multiple-Valued Inputs

7.3.3 List-Oriented Manipulation

7.3.4 The Unate Recursive Paradigm

7.4 Algorithms for Logic Minimization

7.4.1 Expand

7.4.2 Reduce*

7.4.3 Irredundant*

7.4.4 Essentials*

7.4.5 The ESPRESSO Minimizer

7.5 Symbolic Minimization and Encoding Problems

7.5.1 Input Encoding

7.5.2 Output Encoding*

7.5.3 Output Polarity Assignment

7.6 Minimization of Boolean Relations*

7.7 Perspectives

7.8 References

7.9 Problems

8 Multiple-Level Combinational Logic Optimization

8.1 Introduction

8.2 Models and Transformations for Combinational Networks

8.2.1 Optimization of Logic Networks

8.2.2 Transformations for Logic Networks

8.2.3 The Algorithmic Approach to Multiple-Level Logic Optimization

8.3 The Algebraic Model

8.3.1 Substitution

8.3.2 Extraction and Algebraic Kernels

8.3.3 Decomposition

8.4 The Boolean Model

8.4.1 Don't Care Conditions and Their Computation

8.4.2 Boolean Simplification and Substitution

8.4.3 Other Optimization Algorithms Using Boolean Transformations*

8.5 Synthesis of Testable Networks

8.6 Algorithms for Delay Evaluation and Optimization

8.6.1 Delay Modeling

8.6.2 Detection of False Paths*

8.6.3 Algorithms and Transformations for Delay Optimization

8.7 Rule-Based Systems for Logic Optimization

8.8 Perspectives

8.9 References

8.10 Problems

9 Sequential Logic Optimization

9.1 Introduction

9.2 Sequential Circuit Optimization Using State-Based Models

9.2.1 State Minimization

9.2.2 State Encoding

9.2.3 Other Optimization Methods and Recent Developments*

9.3 Sequential Circuit Optimization Using Network Models

9.3.1 Retiming

9.3.2 Synchronous Circuit Optimization by Retiming and Logic Transformations

9.3.3 Don't Care Conditions in Synchronous Networks

9.4 Implicit Finite-State Machine Traversal Methods

9.4.1 State Extraction

9.4.2 Implicit State Minimization*

9.5 Testability Considerations for Synchronous Circuits

9.6 Perspectives

9.7 References

9.8 Problems

10 Cell-Library Binding

10.1 Introduction

10.2 Problem Formulation and Analysis

10.3 Algorithms for Library Binding

10.3.1 Covering Algorithms Based on Structural Matching

10.3.2 Covering Algorithms Based on Boolean Matching

10.3.3 Covering Algorithms and Polarity Assignment

10.3.4 Concurrent Logic Optimization and Library Binding*

10.3.5 Testability Properties of Bound Networks

10.4 Specific Problems and Algorithms for Library Binding

10.4.1 Look-Up Table FPGAs

10.4.2 Anti-Fuse-Based FPGAs

10.5 Rule-Based Library Binding

10.5.1 Comparisons of Algorithmic and Rule-Based Library

Binding

10.6 Perspectives

10.7 References

10.8 Problems

Part IV Conclusions

11 State of the Art and Future Trends

11.1 The State of the Art in Synthesis

11.2 Synthesis Systems

11.2.1 Production-Level Synthesis Systems

11.2.2 Research Synthesis Systems

11.2.3 Achievements and Unresolved Issues

11.3 The Growth of Synthesis in the Near and Distant Future

11.3.1 System-Level Synthesis

11.3.2 Hardware-Software Co-Design

11.4 Envoy

11.5 References

PREFACE

Computer-aided design (CAD) of digital circuits has been a topic of great importance

in the last two decades, and the interest in this field is expected to grow in the

coming years. Computer-aided techniques have provided the enabling methodology

to design efficiently and successfully large-scale high-performance circuits for a wide

spectrum of applications, ranging from information processing (e.g., computers) to

telecommunication, manufacturing control, transportation, etc.

This book addresses one of the most interesting topics in CAD for digital circuits:

design synthesis and optimization, i.e., the generation of detailed specifications of

digital circuits from architectural or logic models and the optimization of some figures

of merit, such as performance and area. This allows a designer to concentrate on the

circuit specification issues and to let the CAD programs elaborate and optimize the

corresponding representations until appropriate for implementation in some fabrication

technology.

Some choices have been made in selecting the material of this book. First, we

describe synthesis of digital synchronous circuits, as they represent the largest portion

of circuit designs. In addition, the state of the art in synthesis for digital synchronous

circuits is more advanced and stable than the corresponding one for asynchronous and

analog circuits. Second, we present synthesis techniques at the architectural and logic

level, and we do not report on physical design automation techniques. This choice is

motivated by the fact that physical microelectronic design forms a topic of its own and

has been addressed by other books. However, at present no textbook describes both

logic and architectural synthesis in detail. Third, we present an algorithmic approach

to synthesis based on a consistent mathematical framework.

In this textbook we combined CAD design issues that span the spectrum from

circuit modeling with hardware description languages to cell-library binding. These

problems are encountered in the design flow from an architectural or logic model of

a circuit to the specification of the interconnection of the basic building blocks in any

semicustom technology. More specifically, the book deals with the following issues:

Hardware modeling in hardware description languages, such as VHDL, Verilog, UDL/I, and Silage.

Compilation techniques for hardware models.

Architectural-level synthesis and optimization, including scheduling, resource sharing and binding, and data-path and control generation.

Logic-level synthesis and optimization techniques for combinational and synchronous sequential circuits.

Library binding algorithms to achieve implementations with specific cell libraries.

These topics have been the object of extensive research work, as documented by the

large number of journal articles and conference presentations available in the literature.

Nevertheless, this material has never been presented in a unified cohesive way. This

book is meant to bridge this gap. The aforementioned problems are described with their

interrelations, and a unifying underlying mathematical structure is used to show the

inherent difficulties of some problems as well as the merit of some solution methods.

To be more specific, the formulation of synthesis and optimization problems considered in this book is based upon graph theory and Boolean algebra. Most problems

can be reduced to fundamental ones, namely coloring, covering, and satisfiability. Even

though these problems are computationally intractable and often solved by heuristic

algorithms, the exact problem formulation helps in understanding the relations among

the problems encountered in architectural and logic synthesis and optimization. Moreover, recent improvements in algorithms, as well as in computer speed and memory

size, have allowed us to solve some problems exactly for instances of practical size.

Thus this book presents both exact and heuristic optimization methods.

This text is intended for a senior-level or a first-year graduate course in CAD of

digital circuits for electrical engineers and/or computer scientists. It is also intended to

be a reference book for CAD researchers and developers in the CAD industry. Moreover, it provides an important source of information to the microelectronic designers

who use CAD tools and who wish to understand the underlying techniques to be able

to achieve the best designs with the given tools.

A prerequisite for reading the book, or attending a course based on the book,

is some basic knowledge of graph theory, fundamental algorithms, and Boolean algebra. Chapter 2 summarizes the major results of these fields that are relevant to the

understanding of the material in the remaining parts of the book. This chapter can

serve as a refresher for those whose knowledge is rusty, but we encourage the totally

unfamiliar reader to go deeper into the background material by consulting specialized

textbooks.

This book can be used in courses in various ways. It contains more material

than could be covered in detail in a quarter-long (30-hour) or semester-long (45-hour)

course, leaving instructors with the possibility of selecting their own topics. Sections

denoted by an asterisk report on advanced topics and can be skipped in a first reading

or in undergraduate courses.

Suggested topics for a graduate-level course in CAD are in Chapters 3-10. The

instructor can choose to drop some detailed topics (e.g., sections with asterisks) in

quarter-long courses. The text can be used for an advanced course in logic synthesis

and optimization by focusing on Chapters 3 and 7-10. Similarly, the book can

be used for an advanced course in architectural-level synthesis by concentrating on

Chapters 3-6.

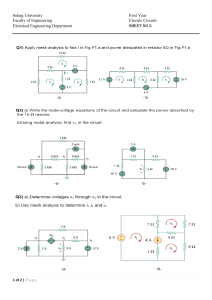

[Figure: synthesis and optimization tasks (architectural-level scheduling; function and relation minimization) related to the fundamental problems of coloring, covering, and satisfiability.]

For senior-level teaching, we suggest refreshing the students with some background material (Chapter 2), covering Chapters 3-4 and selected topics in scheduling

(e.g., Sections 5.2 and 5.3), binding (e.g., Section 6.2), two-level logic optimization

(e.g., Section 7.2), multiple-level optimization (e.g., Sections 8.2 and 8.3), sequential

synthesis (e.g., Sections 9.2 and 9.3.1), and library binding (e.g., Section 10.3.1). Most

chapters start with a general introduction that provides valuable information without

digging into the details. Chapter 4 summarizes the major issues in architectural-level

synthesis.

The author would like to thank all those who helped him in writing and correcting the manuscript: Jonathan Allen, Massachusetts Institute of Technology; Gaetano

Borriello, University of Washington; Donald Bouldin, University of Tennessee; Raul

Camposano, Synopsys; Maciej Ciesielski, University of Massachusetts at Amherst;

José Fortes, Purdue University; David Ku, Stanford University and Redwood Design

Automation; Michael Lightner, University of Colorado at Boulder; Sharad Malik,

Princeton University; Victor Nelson, Auburn University; Fabio Somenzi, University

of Colorado at Boulder; and Wayne Wolf, Princeton University. The author would

also like to acknowledge enlightening discussions with Robert Brayton of the University of California at Berkeley, with Randall Bryant and Robert Rutenbar of Carnegie

Mellon University, and with Alec Stanculescu of Fintronic. The graduate students

of the CAD synthesis group at Stanford University provided invaluable help in improving the manuscript: Luca Benini, Claudionor Coelho, David Filo, Rajesh Gupta,

Norris Ip, Polly Siegel, Thomas Truong, and Jerry Yang. Thanks also to those brave

students of EE318 at Stanford, of ECE 560 at Carnegie Mellon and of CSE590F at the

University of Washington who took courses in 1992 and in 1993 based on a prototype

of this book and gave intelligent comments. Last but not least, I would like to thank

my wife Marie-Madeleine for her encouragement and patience during the writing of

this book. This work was supported in part by the National Science Foundation under

a Presidential Young Investigator Award. Comments on this book can be sent to the

author by electronic mail: book@galileo.stanford.edu.

Giovanni De Micheli

SYNTHESIS AND OPTIMIZATION

OF DIGITAL CIRCUITS

PART I

CIRCUITS AND MODELS

CHAPTER 1

INTRODUCTION

Wofür arbeitet ihr? Ich halte dafür, dass das einzige Ziel der Wissenschaft darin

besteht, die Mühseligkeit der menschlichen Existenz zu erleichtern.

What are you working for? I maintain that the only purpose of science is to ease

the hardship of human existence.

B. Brecht, Leben des Galilei.

1.1 MICROELECTRONICS

Microelectronics has been the enabling technology for the development of hardware

and software systems in the recent decades. The continuously increasing level of

integration of electronic devices on a single substrate has led to the fabrication of

increasingly complex systems. The integrated circuit technology, based upon the use

of semiconductor materials, has progressed tremendously. While a handful of devices

were integrated on the first circuits in the 1960s, circuits with over one million devices

were successfully manufactured in the late 1980s. Such circuits, often called Very

Large Scale Integration (VLSI) or microelectronic circuits, testify to the acquired

ability of combining design skills with manufacturing precision (Figure 1.1).

Designing increasingly integrated and complex circuits requires a larger and

larger capital investment, due to the cost of refining the precision of the manufacturing

process so that finer and finer devices can be implemented. Similarly, the growth in

scale of the circuits requires larger efforts in achieving zero-defect designs. A particular

feature of microelectronics is the near impossibility of repairing integrated circuits, which

raises the importance of designing circuits correctly and limiting the manufacturing

defects.

FIGURE 1.1

Moore's law showing the growth of microelectronic circuit size, measured in terms of devices per chip (time axis from 1960 to 2000).

The economics of VLSI circuits relies on the principle that replicating a circuit

is straightforward and therefore the design and manufacturing costs can be recovered

over a large volume of sales. The trend toward higher integration is economically

positive because it leads to a reduction in the number of components per system, and

therefore to a reduced cost in packaging and interconnection, as well as to a higher

reliability of the overall system. More importantly, higher integration correlates to

faster circuits, because of the reduction of parasitic effects in the electronic devices

themselves and in the interconnections. As a result, the higher the integration level is,

the better and cheaper the final product results.

This economic analysis is valid under the simplifying assumption that the volume

of sales of a circuit (or of a system with embedded circuits) is large enough to

recapture the manufacturing and design costs. In reality, only a few circuit applications

can enjoy a high volume of sales and a long life. Examples have been some general

purpose microprocessors. Unfortunately, the improvement in circuit technology makes

the circuits obsolete in a short time.
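
The amortization argument above can be made concrete with a small calculation. The following Python sketch is an illustration only: the function names and all cost figures are hypothetical and do not come from the text. It assumes a simple model in which a one-time design and tooling cost is spread evenly over the units sold, while each unit also carries a fixed manufacturing cost.

```python
import math


def unit_cost(fixed_cost, variable_cost, volume):
    """Cost per circuit when a one-time fixed cost is spread over `volume` units.

    fixed_cost    -- one-time design and tooling cost (hypothetical figure)
    variable_cost -- manufacturing cost of each additional unit
    volume        -- number of units sold
    """
    if volume <= 0:
        raise ValueError("volume must be positive")
    return fixed_cost / volume + variable_cost


def break_even_volume(fixed_cost, variable_cost, price):
    """Smallest whole number of units whose revenue covers the total cost.

    Returns None when the price does not exceed the variable cost,
    because the fixed cost can then never be recovered.
    """
    margin = price - variable_cost
    if margin <= 0:
        return None
    return math.ceil(fixed_cost / margin)


if __name__ == "__main__":
    # Illustrative numbers only: a 1,000,000 design cost and 5 per unit.
    for volume in (1_000, 100_000, 10_000_000):
        print(volume, unit_cost(1_000_000, 5.0, volume))
    print(break_even_volume(1_000_000, 5.0, 25.0))
```

Under these assumed numbers the per-unit cost is dominated by the design cost at low volume and by the manufacturing cost at high volume, which is why low-volume circuits call for design styles with a smaller fixed cost.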

At present, many electronic systems require integrated dedicated components

that are specialized to perform a task or a limited set of tasks. These are called

Application Specific Integrated Circuits, or ASICs, and occupy a large portion of the

market share. Some circuits in this class may not be produced in large volume because

of the specificity of their application.

Thus other factors are important in microelectronic circuit economics. First, the

use of particular design styles according to the projected volume of sales. Second,

the reduction of design time, which has two implications: a reduction of design

cost, associated with the designers' salaries, and a reduction of the time-to-market.

This last factor has been shown to be key to the profitability of many applications.

Third, the quality of the circuit design and fabrication, measured in performance and

manufacturing yield.

Computer-Aided Design (CAD) techniques play a major role in supporting the

reduction of design time and the optimization of the circuit quality. Indeed, as the

level of integration increases, carrying out a full design without errors becomes an

increasingly difficult task to achieve for a team of human designers. The size of the

circuit, usually measured in terms of the number of active devices (e.g., transistors),

requires handling the design data with systematic techniques. In addition, optimizing

features of large-scale circuits is an even more complex problem, because the feasible

implementation choices grow rapidly in number with the circuit size.

Computer-aided design tools have been used since the inception of the integrated

circuits. CAD systems support different facets of the design of microelectronic circuits.

CAD techniques have reached a fairly good level of maturity in many areas, even

though there are still niches with open problems. Moreover, the continuous growth in

size of the circuits requires CAD tools with increased capability. Therefore continuous

research in this field has targeted design and optimization problems for large-scale

circuits, with particular emphasis on the design of scalable tools. The CAD industry

has grown to be a substantial sector of the global electronic industry. Overall, CAD

is as strategic for the electronic industry as the manufacturing technology is. Indeed,

CAD is the codification of the design-flow technology.

In the following sections, we consider first the different microelectronic circuit

technologies and design styles. We consider then the design flow and its abstraction

as a series of tasks. We explain the major issues in synthesis and optimization of

digital synchronous circuits, which are the subject of this book. Finally, we present the

organization of the book and comment on some related topics.

1.2 SEMICONDUCTOR TECHNOLOGIES AND CIRCUIT TAXONOMY

Microelectronic circuits exploit the properties of semiconductor materials. The circuits

are constructed by first patterning a substrate and locally modifying its properties

and then by shaping layers of interconnecting wires. The fabrication process is often

very complex. It can be classified in terms of the type of semiconductor material

(e.g., silicon, gallium-arsenide) and in terms of the electronic device types being

constructed. The most common circuit technology families for silicon substrates are

Complementary Metal Oxide Semiconductor (CMOS), Bipolar and a combination of

the two called BiCMOS. Even within a family, such as CMOS, different specific

technologies have been developed. Examples for CMOS are: single-well (P or N), twin-well and silicon on sapphire (SOS). Specific technologies target particular markets:

for example, SOS is less sensitive to radiation and therefore suited to space applications.

Electronic circuits are generally classified as analog or digital. In the former

class, the information is related to the value of a continuous electrical parameter, such

as a voltage or a current. A power amplifier for an audio stereo system employs analog

circuits. In digital circuits, the information is quantized. Most digital circuits are binary, and therefore the quantum of information is either a TRUE or a FALSE value. The

information is related to ranges of voltages at circuit internal nodes. Digital circuits

have proven to be superior to analog circuits in many classes of applications, primarily

electronic computing. Recently, digital applications have pervaded also the signal processing and telecommunication fields. Examples are compact disc players and digital

telephony. Today the number of digital circuits overwhelms that of analog circuits.
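
The statement that binary information is related to ranges of voltages can be sketched in a few lines of Python. The two threshold values and all names below are assumptions chosen for illustration; they are not taken from the text and do not describe any particular technology. Voltages between the two thresholds are treated as undefined, which is one common way to model the gap between the two ranges.

```python
# Hypothetical thresholds, in volts, chosen only for illustration.
V_LOW_MAX = 0.8   # at or below this voltage the node is read as FALSE
V_HIGH_MIN = 2.0  # at or above this voltage the node is read as TRUE


def to_logic(voltage):
    """Map a node voltage to a binary value.

    Returns False for the low range, True for the high range,
    and None when the voltage falls between the two ranges.
    """
    if voltage <= V_LOW_MAX:
        return False
    if voltage >= V_HIGH_MIN:
        return True
    return None


if __name__ == "__main__":
    for v in (0.2, 1.5, 3.0):
        print(v, to_logic(v))
```

An analog circuit, by contrast, would use the voltage value itself as the information, with no quantization step.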

Digital circuits can be classified in terms of their mode of operation. Synchronous circuits are characterized by having information storage and processing synchronized to one (or more) global signal, called clock. Asynchronous circuits do not

require a global clock signal. Different styles have been proposed for asynchronous

design methods. When considering large-scale integration circuits, synchronous operation is advantageous because it is conceptually simpler; there is a broader common

knowledge of design and verification methods and therefore the likelihood of final

correct operation is higher. Furthermore, testing techniques have been developed for

synchronous digital circuits. Conversely, the advantage of using asynchronous circuits

stems from the fact that global clock distribution becomes more difficult as circuits

grow in size, therefore invalidating the premise that designing synchronous circuits

is simpler. Despite the growing interest in asynchronous circuits, most commercial

digital designs use synchronous operation.

It is obvious that the choice of a semiconductor technology and the circuit

class affect the type of CAD tools that are required. The choice of a semiconductor

technology affects mainly the physical design of a circuit, i.e., the determination

of the geometric patterns. It affects functional design only marginally. Conversely,

the circuit type (i.e., analog, synchronous digital or asynchronous digital) affects the

overall design of the circuit. In this book we consider CAD techniques for synchronous

digital circuits, because they represent the vast majority of circuits and because these

CAD design techniques have reached a high level of maturity.

1.3 MICROELECTRONIC DESIGN STYLES

The economic viability of microelectronic designs relies on some conflicting factors,

such as projected volume, expected pricing and circuit performance required to be

competitive. For example, instruction-set microprocessors require competitive performance and price; therefore high volumes are required to be profitable. A circuit for a

space-based application may require high performance and reliability. The projected

volume may be very low, but the cost may be of no concern because it is still negligible

with respect to the cost of a space mission. A circuit for consumer applications would

require primarily a low cost to be marketable.

Different design styles, often called methodologies, have been used for microelectronic circuits. They are usually classified as custom and semicustom design styles.

In the former case, the functional and physical design are handcrafted, requiring an

extensive effort of a design team to optimize each detailed feature of the circuit. In

this case, the design effort and cost are high, often compensated by the achievement

of high-quality circuits. It is obvious that the cost has either to be amortized over a

large volume production (as in the case of processor design) or borne in full (as in the

case of special applications). Custom design was popular in the early years of microelectronics. Today, the design complexity has confined custom design techniques to

specific portions of a limited number of projects, such as execution and floating point

units of some processors.

Semicustom design is based on the concept of restricting the circuit primitives

to a limited number, and therefore reducing the possibility of fine-tuning all parts

of a circuit design. The restriction allows the designer to leverage well-designed and

well-characterized primitives and to focus on their interconnection. The reduction of

the possible number of implementation choices makes it easier to develop CAD tools

for design and optimization, as well as reducing the design time. The loss in quality

is often very small, because fine-tuning a custom design may be extremely difficult

when this is large, and automated optimization techniques for semicustom styles can

explore a much wider class of implementation choices than a designer team can afford. Today semicustom designs outnumber custom designs. Recently,

some high-performance microprocessors have been designed fully or partially using

semicustom styles.

Semicustom designs can be partitioned in two major classes: cell-based design

and array-based design. These classes further subdivide into subclasses, as shown in

Figure 1.2. Cell-based design leverages the use of library cells, that can be designed

once and stored, or the use of cell generators that synthesize macro-cell layouts from

their functional specifications. In cell-based design the manufacturing process is not

simplified at all with respect to custom design. Instead, the design process is simplified,

because of the use of ready-made building blocks.

Cell-based design styles include standard-cell design (Figure 1.3). In this case,

the fundamental cells are stored in a library. Cells are designed once, but updates are

required as the progress in semiconductor technology allows for smaller geometries.

Since every cell needs to be parametrized in terms of area and delay over ranges

of temperatures and operating voltages, the library maintenance is far from a trivial

task. The user of a standard-cell library must first conform his or her design to the

available library primitives, a step called library binding or technology mapping. Then,

cells are placed and wired. All these tasks have been automated. An extension is

the hierarchical standard-cell style, where larger cells can be derived by combining

smaller ones.

Macro-cell based design consists of combining building blocks that can be synthesized by computer programs, called cell or module generators. These programs vary

widely in capabilities and have evolved tremendously over the last two decades. The

first generators targeted the automatic synthesis of memory arrays and programmable-logic arrays (PLAs). Recently highly sophisticated generators have been able to synthesize the layout of various circuits with a device density and performance equal or

superior to that achievable by human designers. The user of macro-cell generators has

just to provide the functional description. Macro-cells are then placed and wired. Even

though these steps have been automated, they are inherently more difficult and may

be less efficient as compared to standard-cell placement and wiring, due to the irregularity

in size of the macro-cells. A major advantage of cell-based design (standard

and macro-cells) is the compatibility with custom design. Indeed custom components

can be added to a semicustom layout, and vice versa. The combination of custom

design and cell-based design has been used often for microprocessor design, and is also

referred to as structured custom design (Figure 1.4).

FIGURE 1.2

Semicustom design styles: a taxonomy.

SEMICUSTOM

  CELL-BASED

    MACRO CELLS (generators: memory, PLA, sparse logic, gate matrix)

  ARRAY-BASED

    PREDIFFUSED (gate arrays, sea of gates, compacted arrays)

    PREWIRED (anti-fuse based, memory-based)

Array-based design exploits the use of a matrix of uncommitted components,

often called sites. Such components are then personalized and interconnected. Array-based circuits can be further classified as prediffused and prewired, also called mask

programmable and field programmable gate arrays, respectively (MPGAs and FPGAs). In the first case batches of wafers, with arrays of sites, are manufactured. The

chip fabrication process entails programming the sites by contacting them to wires,

i.e., by manufacturing the routing layers. As a result, only the metal and contact layers

are used to program the chip, hence the name "mask programmable." Fewer manufacturing steps correlate to lower fabrication time and cost. In addition, the cost of

the prediffused batches of wafers can be amortized over several chip designs.

There are several styles of prediffused wafers, that often go under the names

of gate arrays, compacted arrays and sea-of-gates (Figure 1.5). The circuit designer that uses these styles performs tasks similar to those used for standard cells. He or she must first conform the design to the available sites, described by a set of library

primitives, then apply placement and wiring, that are subject to constraints typical of

the array image, e.g., slot constraints for the position of the sites. Also in this case,

all these tasks have been automated.

Prewired gate arrays have been introduced recently and are often called "field

programmable gate arrays" because these can be programmed in the "field," i.e.,

outside the semiconductor foundry. They consist of arrays of programmable modules,

each having the capability of implementing a generic logic function (Figure 1.6).

Programming is achieved in two ways. Wires, already present in the form of segments, can be connected by programming antifuses;¹ generic functions can be specialized by connecting module inputs to voltage rails or together. Alternatively, memory elements inside the array can be programmed to store information that relates to the module configuration and interconnection.

¹A fuse is a short-circuit device that becomes an open circuit when traversed by an appropriate current pulse. Conversely, an antifuse is an open-circuit device that becomes a short circuit when traversed by an appropriate current pulse.

FIGURE 1.4

Microphotograph of the Alpha AXP chip by Digital Equipment Corporation, using several macro-cells

designed with proprietary CAD tools. (Courtesy of Digital Equipment Corporation.)

In a prewired circuit, manufacturing is completely carried out independently of

the application, hence amortized over a large volume. The design and customization

can be done in the field, with negligible time and cost. A drawback of this design style

is the low capacity of these arrays and the inferior performance as compared to other

design styles. However, due to the relative immaturity of the underlying technology, it

is conceivable that capacity and speed of prewired circuits will improve substantially

in the future. At present, prewired circuits provide an excellent means for prototyping.

We would now like to compare the different design styles, in terms of density,

performance, flexibility, design and manufacturing times and cost (for both low and

high volumes). Manufacturing time of array-based circuits is the time spent to customize them. The comparison is reported in the following table, which can serve as a guideline in choosing a style for a given application and market:

                     Custom      Cell-based   Prediffused   Prewired
Density              Very High   High         High          Medium-Low
Performance          Very High   High         High          Medium-Low
Flexibility          Very High   High         Medium        Low
Design time          Very Long   Short        Short         Very Short
Manufacturing time   Medium      Medium       Short         Very Short
Cost - low volume    Very High   High         High          Low
Cost - high volume   Low         Low          Low           High

FIGURE 1.5
A mask programmable gate array from the IBM Enterprise System/9000 air-cooled processor technology. (Courtesy and copyright of IBM, reprinted with permission.)

Even though custom is the most obvious style for general purpose circuits and semicustom is the one for application-specific circuits, processor implementations have been done in semicustom and ASIC in custom, as in the case of some space applications. It is important to stress that ASIC is not a synonym for semicustom style, as often erroneously considered.

FIGURE 1.6
A field programmable gate array: ACT™ 2 by Actel Corporation. (Courtesy of ACTEL Corporation.)

1.4 DESIGN OF MICROELECTRONIC CIRCUITS

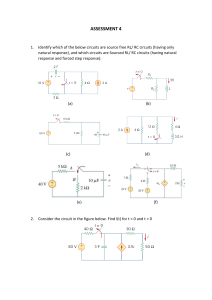

There are four stages in the creation of an integrated circuit: design, fabrication, testing and packaging [7, 10] (Figure 1.7). We shall consider here the design stage in more detail, and we divide it into three major tasks: conceptualization and modeling, synthesis and optimization, and validation. The first task consists of casting an idea into a model, which captures the function that the circuit will perform. Synthesis consists of refining the model, from an abstract one to a detailed one, that has all the features required for fabrication. An objective of design is to maximize some figures of merit of the circuit that relate to its quality. Validation consists of verifying the consistency of the models used during design, as well as some properties of the original model.

FIGURE 1.7
The four phases in creating a microelectronic chip. [Figure omitted. Recoverable panel titles: DESIGN, FABRICATION, TESTING.]

Circuit models are used to represent ideas. Modeling plays a major role in

microelectronic design, because it represents the vehicle used to convey information.

Modeling must be rigorous as well as general, transparent to the designer and machine-readable. Today, most modeling is done using Hardware Description Languages, or

HDLs. There are different flavors of HDLs, which are described in detail in Chapter

3. Graphic models are also used, such as flow diagrams, schematic diagrams and

geometric layouts. The very large-scale nature of the problem forces the modeling

style, both textual and graphical, to support hierarchy and abstractions. These allow

a designer to concentrate on a portion of the model at any given time.

Circuit synthesis is the second creative process. The first is performed in the

designer's mind when conceptualizing a circuit and sketching a first model. The overall goal of circuit synthesis is to generate a detailed model of a circuit, such as a

geometric layout, that can be used for fabricating the chip. This objective is achieved

by means of a stepwise refinement process, during which the original abstract model

provided by the designer is iteratively detailed. As synthesis proceeds in refining the

model, more information is needed regarding the technology and the desired design

implementation style. Indeed a functional model of a circuit may be fairly independent

from the implementation details, while a geometric layout model must incorporate all

technology-dependent specifics, such as, for example, wire widths.

Circuit optimization is often combined with synthesis. It entails the selection of

some particular choices in a given model, with the goal of raising one (or more) figures

of merit of the design. The role of optimization is to enhance the overall quality of the

circuit. We explain now in more detail what quality means. First of all, we mean circuit

performance. Performance relates to the time required to process some information,

as well as to the amount of information that can be processed in a given time period.

Circuit performance is essential to competitive products in many application domains.

Second, circuit quality relates to the overall area. An objective of circuit design is to

minimize the area, for many reasons. Smaller circuits relate to more circuits per wafer, and therefore to lower manufacturing cost. The manufacturing yield² decreases with an increase in chip area. Large chips are more expensive to package too. Third, the circuit quality relates to the testability, i.e., to the ease of testing the chip after manufacturing. For some applications, the fault coverage is an important quality measure. It denotes the percentage of faults of a given type that can be detected by a set of test vectors. It

is obvious that testable chips are desirable, because earlier detection of malfunctions

in electronic systems relates to lower overall cost.
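The link between chip area and yield can be illustrated with a minimal sketch. It assumes a Poisson defect model, Y = exp(-D * A), which is a common simplification and is not given in this chapter; the function name, the defect density and the die areas are hypothetical values chosen only for illustration.

```python
import math


def poisson_yield(defect_density: float, area: float) -> float:
    """Fraction of working chips under an ASSUMED Poisson defect model.

    defect_density: average number of spot defects per unit area
    area:           chip area, in the same unit of area
    """
    return math.exp(-defect_density * area)


if __name__ == "__main__":
    density = 0.5  # hypothetical: defects per square centimeter
    for area in (0.5, 1.0, 2.0):  # hypothetical die areas in square centimeters
        print(f"area = {area:.1f}  yield = {poisson_yield(density, area):.3f}")
```

With a constant defect density, doubling the area squares the yield fraction in this model, which is one way to see why area minimization lowers manufacturing cost.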

Circuit validation consists of acquiring a reasonable certainty level that a circuit

will function correctly, under the assumption that no manufacturing fault is present.

Circuit validation is motivated by the desire to remove all possible design errors, before

proceeding to the expensive chip manufacturing. It can be performed by simulation and

by verification methods. Circuit simulation consists of analyzing the circuit variables

over an interval of time for one specific set (or more sets) of input patterns. Simulation

can be applied at different levels, corresponding to the model under consideration.

Simulated output patterns must then conform to the expected ones. Even though

simulation is the most commonly used way of validating circuits, it is often ineffective

for large circuits except for detecting major design flaws. Indeed, the number of

relevant input pattern sequences to analyze grows with the circuit size and designers

must be content to monitor a small subset. Verification methods, often called formal verification methods, consist of comparing two circuit models and detecting their consistency. Another facet of circuit verification is checking some properties of a

circuit model, such as, for example, whether there are deadlock conditions.
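The cost of validating by simulation can be made concrete with a small sketch that compares two models of a combinational function on every input pattern. The two models (a majority function and a gate-style equivalent) and all names are hypothetical. Exhaustive comparison is used here only because the circuit is tiny: the loop visits 2^n patterns for n inputs, which is why designers of large circuits must settle for a subset, and why formal methods avoid explicit enumeration.

```python
from itertools import product


def spec(a, b, c):
    """Reference model (hypothetical): majority of three bits."""
    return (a + b + c) >= 2


def impl(a, b, c):
    """Gate-style model (hypothetical): OR of the pairwise ANDs."""
    return bool((a and b) or (a and c) or (b and c))


def equivalent(f, g, n_inputs):
    """Simulate both models on all 2**n_inputs patterns.

    Returns (True, None) if the outputs always agree, otherwise
    (False, pattern) where pattern is the first mismatch found.
    """
    for pattern in product((0, 1), repeat=n_inputs):
        if bool(f(*pattern)) != bool(g(*pattern)):
            return False, pattern
    return True, None


if __name__ == "__main__":
    print(equivalent(spec, impl, 3))
```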

²The yield is the percentage of manufactured chips that operate correctly. In general, when fabrication faults relate to spots, the defect density per unit area is constant and typical of a manufacturing plant and process. Therefore the probability that one spot makes the circuit function incorrectly increases with the chip area.

1.5 COMPUTER-AIDED SYNTHESIS AND OPTIMIZATION

Computer-aided tools provide an effective means for designing microelectronic circuits that are economically viable products. Synthesis techniques speed up the design cycle and reduce the human effort. Optimization techniques enhance the design quality. At present, synthesis and optimization techniques are used for most digital circuit designs. Nevertheless their power is not yet exploited in full. It is one of the purposes of the book to foster the use of synthesis and optimization techniques, because they can be instrumental in the progress of electronic design.

We report in this book on circuit synthesis and optimization for digital synchronous circuits. We consider now briefly the circuit models and views and then comment on the major classification of the synthesis tasks.

1.5.1 Circuit Models

A model of a circuit is an abstraction, i.e., a representation that shows relevant features

without associated details. Synthesis is the generation of a circuit model, starting

from a less detailed one. Models can be classified in terms of levels of abstraction

and views. We consider here three main abstractions, namely: architectural, logic and

geometrical. The levels can be visualized as follows. At the architectural level a circuit

performs a set of operations, such as data computation or transfer. At the logic level,

a digital circuit evaluates a set of logic functions. At the geometrical level, a circuit

is a set of geometrical entities. Examples of representations for architectural models

are HDL models or flow diagrams; for logic models are state transition diagrams or

schematics; for geometric models are floor plans or layouts.

Example 1.5.1. A simple example of the different modeling levels is shown in Figure

1.8. At the architectural level, a processor is described by an HDL model. A schematic

captures the logic-level specification. A two-dimensional geometric picture represents the

mask layout.

Design consists of refining the abstract specification of the architectural model into

the detailed geometrical-level model, that has enough information for the manufacturing

of the circuit.

We consider now the views of a model. They are classified as: behavioral,

structural and physical. Behavioral views describe the function of the circuit regardless

of its implementation. Structural views describe a model as an interconnection of

components. Physical views relate to the physical objects (e.g., transistors) of a design.

Models at different levels can be seen under different views. For example, at

the architectural level, a behavioral view of a circuit is a set of operations and their

dependencies. A structural view is an interconnection of the major building blocks. As

another example, consider the logic-level model of a synchronous circuit. A behavioral

view of the circuit may be given by a state transition diagram, while its structural view

is an interconnection of logic gates. Levels of abstractions and views are synthetically

represented by Figure 1.9, where views are shown as the segments of the letter Y. This

axial arrangement of the views is often referred to as Gajski and Kuhn's Y-chart [3].

Example 1.5.2. Consider again the circuit of Example 1.5.1. Figure 1.10 highlights

behavioral and structural views at both the architectural and the logic levels.

FIGURE 1.8
Three abstraction levels of a circuit representation. [Figure omitted. Panels: ARCHITECTURAL LEVEL (PC = PC + 1; FETCH (PC); DECODE (INST);), LOGIC LEVEL, GEOMETRICAL LEVEL.]

FIGURE 1.9
Circuit views and levels of abstractions.

FIGURE 1.10
Levels of abstractions and corresponding views. [Figure omitted. Columns: BEHAVIORAL VIEW, STRUCTURAL VIEW; rows: ARCHITECTURAL LEVEL, LOGIC LEVEL.]

1.5.2 Synthesis

The model classification relates to a taxonomy of the synthesis tasks. Synthesis can

be seen as a set of transformations between two axial views. In particular we can

distinguish the synthesis subtasks at the different modeling levels as follows:

Architectural-level synthesis consists of generating a structural view of an architectural-level model. This corresponds to determining an assignment of the circuit functions to operators, called resources, as well as their interconnection and the timing of their execution. It has also been called high-level synthesis or structural synthesis, because it determines the macroscopic (i.e., block-level) structure of the circuit. To avoid ambiguity, and for the sake of uniformity, we shall call it architectural synthesis.

Logic-level synthesis is the task of generating a structural view of a logic-level model. Logic synthesis is the manipulation of logic specifications to create logic models as an interconnection of logic primitives. Thus logic synthesis determines the microscopic (i.e., gate-level) structure of a circuit. The task of transforming a logic model into an interconnection of instances of library cells, i.e., the back end of logic synthesis, is often referred to as library binding or technology mapping.

Geometrical-level synthesis consists of creating a physical view at the geometric level. It entails the specification of all geometric patterns defining the physical layout of the chip, as well as their position. It is often called physical design, and we shall call it so in the sequel.

FIGURE 1.11
Levels of abstractions, views and synthesis tasks.

The synthesis tasks are synthetically depicted in Figure 1.11. We now describe

these tasks in more detail. We consider them in the order that corresponds to their use

in a top-down synthesis system. This sequence is the converse of that corresponding

to their historical development and level of maturity.

ARCHITECTURAL SYNTHESIS. A behavioral architectural-level model can be abstracted as a set of operations and dependencies. Architectural synthesis entails identifying the hardware resources that can implement the operations, scheduling the execution time of the operations and binding them to the resources. In other words, synthesis defines a structural model of a data path, as an interconnection of resources, and a logic-level model of a control unit, that issues the control signals to the data path according to the schedule.

The macroscopic figures of merit of the implementation, such as circuit area and

performance, depend heavily on this step. Indeed, architectural synthesis determines

the degree of parallelism of the operations. Optimization at this level is very important,

as mentioned in Section 1.5.3. Architectural synthesis is described in detail in Part II.

Example 1.5.3. We consider here first an example of a behavioral view of an architectural model. The example has been adapted from one proposed by Paulin and Knight [6]. It models a circuit designed to solve numerically (by means of the forward Euler method) the following differential equation: y'' + 3xy' + 3y = 0 in the interval [0, a] with step-size dx and initial values x(0) = x; y(0) = y; y'(0) = u.

FIGURE 1.12
Example of structural view at the architectural level. [Figure omitted. Blocks: multiplier (*), ALU, STEERING & MEMORY, CONTROL UNIT.]

The circuit can be represented by the following HDL model:

diffeq {
    read (x, y, u, dx, a);
    repeat {
        xl = x + dx;
        ul = u - (3 * x * u * dx) - (3 * y * dx);
        yl = y + u * dx;
        c = xl < a;
        x = xl; u = ul; y = yl;
    }
    until (c);
    write (y);
}
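For readers who want to check the arithmetic of the model in software, the loop body can be transcribed into Python as a minimal sketch. The function name and the loop test are assumptions: the sketch keeps iterating while x is still inside the interval [0, a], which is the usual reading of a forward Euler integration, and it is not a statement about the semantics of the HDL.

```python
def diffeq(x, y, u, dx, a):
    """Forward Euler integration of y'' + 3xy' + 3y = 0, where u stands for y'.

    ASSUMPTION: the loop continues while x < a, i.e. until the end of the
    integration interval is reached.
    """
    while x < a:
        x1 = x + dx
        u1 = u - (3 * x * u * dx) - (3 * y * dx)  # u' = -3xu - 3y
        y1 = y + u * dx                           # y' = u
        x, u, y = x1, u1, y1
    return y


if __name__ == "__main__":
    # Hypothetical initial values: x(0) = 0, y(0) = 1, y'(0) = 0.
    print(diffeq(0.0, 1.0, 0.0, 1e-3, 1.0))
```

With the initial values y(0) = 1 and y'(0) = 0 the equation has the closed-form solution y = exp(-3x^2/2), so the printed value should be close to exp(-1.5) for a small step size. Each iteration uses six multiplications and five additions, subtractions or comparisons, which is what the data path must execute.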

Let us now consider the architectural synthesis task. For the sake of simplicity,

let us assume that the data path of the circuit contains two resources: a multiplier and an ALU, that can perform addition/subtraction and comparison. The circuit contains also registers, steering logic and a control unit.

A structural view of the circuit, at the architectural level, shows the macroscopic structure of the implementation. This view can be described by a block diagram, as in Figure 1.12, or equivalently by means of a structural HDL.

LOGIC SYNTHESIS. A logic-level model of a circuit can be provided by a state

transition diagram of a finite-state machine, by a circuit schematic or equivalently by an HDL model. It may be specified by a designer or synthesized from an architectural-level model.

The logic synthesis tasks may be different according to the nature of the circuit

(e.g., sequential or combinational) and to the starting representation (e.g., state diagram

or schematic). The possible configurations of a circuit are many. Optimization plays

a major role, in connection with synthesis, in determining the microscopic figures

of merit of the implementation, as mentioned in Section 1.5.3. The final outcome of

logic synthesis is a fully structural representation, such as a gate-level netlist. Logic

synthesis is described in detail in Part III.

Example 1.5.4. Consider the control unit of the previous circuit. Its task is to sequence the operations in the data path, by providing appropriate signals to the resources. This is achieved by steering the data to resources and registers in the block called "Steering & Memory," in Figure 1.12. A behavioral view of the control unit at the logic level is given by a transition diagram, sketched in Figure 1.13 (a), where the signals to the "Steering & Memory" block are not shown for the sake of simplicity. The control unit uses one state for reading the data (reset state s1), one state for writing the data (s9) and seven states for executing the loop. Signal r is a reset signal.

A structural view of the control unit at the logic level is shown by the hierarchical schematic of Figure 1.13 (b), which shows the logic gates that implement the transitions among the states {s1, s2, s9} and that enable the reading and writing of the data. The subcircuit that controls the iteration is represented by the box labeled "loop control."

FIGURE 1.13
(a) Example of behavioral view at the logic level. (b) Example of structural view at the logic level.
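A behavioral view of such a control unit can be sketched as a next-state function. The state count (one read state, seven loop states, one write state) follows the example, but the transition details are assumptions, since the transition diagram itself is not reproduced here: the sketch supposes that the loop states are visited in order, that the last loop state branches on the condition c, and that the write state returns to the read state.

```python
def next_state(state: int, r: bool, c: bool) -> int:
    """Next state of a hypothetical nine-state control unit.

    state: 1 = read (reset state), 2..8 = loop body, 9 = write
    r:     reset signal, forces the read state
    c:     loop exit condition, examined in the last loop state (ASSUMED)
    """
    if r:
        return 1
    if state == 8:
        return 9 if c else 2  # leave the loop or start another iteration
    if state == 9:
        return 1              # ASSUMED: go back and wait for new data
    return state + 1


if __name__ == "__main__":
    state, trace = 1, [1]
    # One loop iteration followed by the exit: c is true on the eighth step.
    for step in range(1, 10):
        state = next_state(state, r=False, c=(step == 8))
        trace.append(state)
    print(trace)
```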

PHYSICAL DESIGN. Physical design consists of generating the layout of the chip.

The layers of the layout are in correspondence with the masks used for chip fabrication.

Therefore, the geometrical layout is the final target of microelectronic circuit design.

Physical design depends much on the design style. On one end of the spectrum, for

custom design, physical design is handcrafted by using layout editors. This means

that the designer renounces the use of automated synthesis tools in the search for

optimizing the circuit geometries by fine hand-tuning. On the opposite end of the

spectrum, in the case of prewired circuits, physical design is performed in a virtual

fashion, because chips are fully manufactured in advance. Instead, chip personalization

is done by a fuse map or by a memory map.

The major tasks in physical design are placement and wiring, called also routing.

Cell generation is essential in the particular case of macro-cell design, where cells are

synthesized and not extracted from a library. These problems and their solutions are

not described in this book. A brief survey is reported in Section 1.7.1.

1.5.3 Optimization

Circuit optimization is often performed in conjunction with synthesis. Optimization

is motivated not only by the desire of maximizing the circuit quality, but also by the

fact that synthesis without optimization would yield circuits that are not competitive at all, and therefore its value would be marginal. In this book, we consider the optimization of two quality measures, namely, area and performance. We shall comment also on the

relation to testability in Sections 6.8, 7.2.4 and 8.5.

Circuit area is an extensive quantity. It is measured by the sum of the areas of

the circuit components and therefore it can be computed from a structural view of a

circuit, once the areas of the components are known. The area computation can be

performed hierarchically. Usually, the fundamental components of digital circuits are

logic gates and registers, whose area is known a priori. The wiring area often plays an

important role and should not be neglected. It can be derived from a complete physical

view, or estimated from a structural view using predictive or statistical models.

Circuit performance is an intensive quantity. It is not additive, and therefore its

computation requires analyzing the structure and often the behavior of a circuit. To be

more specific, we need to consider now the meaning of performance in more detail,

according to the different classes of digital circuits.

The performance of a combinational logic circuit is measured by the input/output

propagation delay. Often a simplifying assumption is made. All inputs are available

at the same time and the performance relates to the delay through the critical path of

the circuit.

The performance of a synchronous sequential circuit can be measured by its

cycle-time, i.e., the period of the fastest clock that can be applied to the circuit. Note

that the delay through the combinational component of a sequential circuit is a lower

bound on the cycle-time.

When considering an architectural-level model of a circuit as a sequence of operations, with a synchronous sequential implementation, one measure of performance

is circuit latency, i.e., the time required to execute the operations [4]. Latency can be measured in terms of clock-cycles. Thus the product of the cycle-time and latency

determines the overall execution time. Often, cycle-time and latency are optimized

independently, for the sake of simplifying the optimization problem as well as of

satisfying other design constraints, such as interfacing to other circuits.

Synchronous circuits can implement a sequence of operations in a pipeline fashion, where the circuit performs concurrently operations on different data sets. An

additional measure of performance is then the rate at which data are produced and

consumed, called the throughput of the circuit. Note that in a non-pipelined circuit, the

throughput is less than (or equal to) the inverse of the product of the cycle-time and

the latency. Pipelining allows a circuit to increase its throughput beyond this limit.

Maximum-rate pipelining occurs when the throughput is the inverse of the cycle-time,

i.e., when data are produced and consumed at each clock cycle.

According to these definitions and models, performance optimization consists

in minimizing the delay (for combinational circuits), the cycle-time and the latency

(for synchronous circuits) and maximizing the throughput (for pipelined circuits).
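The relations among cycle-time, latency and throughput stated above can be written out as a small sketch. The function names and the numerical values are hypothetical; time is expressed in an arbitrary unit (nanoseconds in the comments) and latency in clock cycles.

```python
def execution_time(cycle_time, latency_cycles):
    """Overall execution time: product of cycle-time and latency."""
    return cycle_time * latency_cycles


def nonpipelined_throughput_bound(cycle_time, latency_cycles):
    """Upper bound on the throughput of a non-pipelined circuit."""
    return 1.0 / (cycle_time * latency_cycles)


def maximum_rate_throughput(cycle_time):
    """Throughput of maximum-rate pipelining: one data set per clock cycle."""
    return 1.0 / cycle_time


if __name__ == "__main__":
    cycle_time, latency = 10, 7  # hypothetical: 10 ns clock, 7 cycles
    print(execution_time(cycle_time, latency))                 # 70 (ns)
    print(nonpipelined_throughput_bound(cycle_time, latency))  # data sets per ns
    print(maximum_rate_throughput(cycle_time))                 # data sets per ns
```

The ratio between the last two values equals the latency in cycles, which is the largest throughput gain that pipelining can provide at a fixed cycle-time.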

FIGURE 1.14
Alternative example of structural view at the architectural level. [Figure omitted. Blocks: two multipliers (*), two ALUs, STEERING & MEMORY, CONTROL UNIT.]

Example 1.5.5. Consider the circuit of Example 1.5.3. For the sake of simplicity, we

assume that all resources require one cycle to execute. The structure shown in Figure

1.12 has a minimal resource usage, but it requires seven cycles to execute one iteration

of the loop. An alternative structure is shown in Figure 1.14, requiring a larger area (two

multipliers and two ALUs) but just four cycles. (A detailed explanation of the reason

is given in Chapters 4 and 5.) The two structures correspond to achieving two different

optimization goals.
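The cycle counts quoted in this example can be reproduced with a minimal scheduling sketch. Two things are assumptions and not taken from the text: the data-flow graph below, which is one plausible decomposition of the loop body into six multiplications and five ALU operations, and the use of list scheduling with longest-path priorities, which is a common heuristic and not necessarily the method behind the figures. All names are hypothetical.

```python
# ASSUMED data-flow graph of one loop iteration:
# operation name -> (resource kind, list of predecessor operations)
OPS = {
    "m1": ("mul", []),            # 3 * x
    "m2": ("mul", []),            # u * dx
    "m3": ("mul", ["m1", "m2"]),  # (3 * x) * (u * dx)
    "m4": ("mul", []),            # 3 * y
    "m5": ("mul", ["m4"]),        # (3 * y) * dx
    "m6": ("mul", []),            # u * dx, used for yl
    "a1": ("alu", ["m3"]),        # u - m3
    "a2": ("alu", ["a1", "m5"]),  # a1 - m5, gives ul
    "a3": ("alu", ["m6"]),        # y + m6, gives yl
    "a4": ("alu", []),            # x + dx, gives xl
    "a5": ("alu", ["a4"]),        # xl < a, gives c
}


def priorities(ops):
    """Number of operations on the longest path from each operation to a sink."""
    successors = {name: [] for name in ops}
    for name, (_, preds) in ops.items():
        for p in preds:
            successors[p].append(name)
    memo = {}

    def depth(name):
        if name not in memo:
            memo[name] = 1 + max((depth(s) for s in successors[name]), default=0)
        return memo[name]

    return {name: depth(name) for name in ops}


def list_schedule(ops, resources):
    """List scheduling with unit-delay operations.

    resources: dict resource kind -> number of available units (all positive).
    Returns (latency in cycles, dict operation -> start cycle).
    """
    prio = priorities(ops)
    start = {}
    cycle = 0
    while len(start) < len(ops):
        cycle += 1
        for kind, count in resources.items():
            # Ready: unscheduled, right kind, all predecessors done earlier.
            ready = [n for n, (k, preds) in ops.items()
                     if k == kind and n not in start
                     and all(p in start and start[p] < cycle for p in preds)]
            ready.sort(key=lambda n: -prio[n])  # most critical first
            for n in ready[:count]:
                start[n] = cycle
    return cycle, start


if __name__ == "__main__":
    for muls, alus in [(1, 1), (2, 2)]:
        latency, _ = list_schedule(OPS, {"mul": muls, "alu": alus})
        print(f"{muls} multiplier(s), {alus} ALU(s): {latency} cycles")
```

Under these assumptions the sketch yields seven cycles for one multiplier and one ALU, and four cycles for two multipliers and two ALUs, matching the figures in the example.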

We consider here design optimization as the combined minimization of area

and maximization of performance. Optimization may be subject to constraints, such as upper bounds on the area, as well as lower bounds on performance. We can abstract

the optimization problem as follows. The different feasible structural implementations

of a circuit define its design space. The design space is a finite set of design points.

Associated with each design point, there are values of the area and performance

evaluation functions. There are as many evaluation functions as the design objectives

of interest, such as area, latency, cycle-time and throughput. We call design evaluation

space the multidimensional space spanned by these objectives.

Example 1.5.6. Let us construct a simplified design space for the previous example, by

just considering architectural-level modeling. For the sake of the example, we assume

again that the resources execute in one cycle and the delay in the steering and control

logic is negligible. Thus we can drop cycle-time issues from consideration.

The design space corresponds to the structures with a1 multipliers and a2 ALUs, where a1, a2 are positive integers. Examples for a1 = 1; a2 = 1 and for a1 = 2; a2 = 2 were shown in Figures 1.12 and 1.14, respectively.

For the sake of the example, let us assume that the multiplier takes five units of area, the ALU one unit, and the control unit, steering logic and memory an additional unit. Then, in the design evaluation space, the first implementation has an area equal to seven and latency proportional to seven. The second implementation has an area equal to 13 and latency proportional to four.

When considering the portion of the design space corresponding to the following pairs for (a1, a2): {(1, 1); (1, 2); (2, 1); (2, 2)}, the design evaluation space is shown in Figure 1.15. The numbers in parentheses relate to the corresponding points in the design space.

FIGURE 1.15
Example of design evaluation space.

Circuit optimization corresponds to searching for the best design, i.e., a circuit

configuration that optimizes all objectives. Since our optimization problem involves

multiple criteria, special attention must be given to defining points of optimality. For

the sake of simplicity and clarity, and without loss of generality, we assume now that

the optimization problem corresponds to a minimization one. Note that maximizing

the throughput corresponds to minimizing its complement.

A point of the design space is called a Pareto point if there is no other point (in

the design space) with at least an inferior objective, all others being inferior or equal

[1]. A Pareto point corresponds to a global optimum in a monodimensional design evaluation space. It is a generalization of this concept to the multidimensional case. Note that there may be many Pareto points, corresponding to the design implementations

not dominated by others and hence worth consideration.

The image of the Pareto points in the design evaluation space is the set of the

optimal trade-off points. Their interpolation yields a trade-off curve or surface.

Example 1.5.7. Consider the design space and the design evaluation space of Example 1.5.6. The point (1, 2) of the design space is not a Pareto point, because it is dominated by point (1, 1) that has equal latency and smaller area. In other words, adding an ALU to an implementation with one multiplier would make its area larger without reducing the latency: hence this circuit structure can be dropped from consideration. The remaining points {(1, 1); (2, 1); (2, 2)} are Pareto points. It is possible to show that no other pair

of positive integers is a Pareto point. Indeed the latency cannot be reduced further by

additional resources.
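The dominance test behind this example can be sketched in a few lines. The areas follow the cost model of Example 1.5.6 (five units per multiplier, one per ALU, one for the rest). The latencies of (1, 1), (1, 2) and (2, 2) follow the examples, while the latency of five cycles for (2, 1) is an assumed value, since the text does not state it. Function and variable names are hypothetical.

```python
def area(multipliers, alus):
    """Area model of Example 1.5.6."""
    return 5 * multipliers + alus + 1


def dominates(p, q):
    """True if p is no worse than q in every objective and better in at least one.

    All objectives are assumed to be minimized.
    """
    return (all(a <= b for a, b in zip(p, q))
            and any(a < b for a, b in zip(p, q)))


def pareto_points(evaluation):
    """evaluation: dict design point -> tuple of objective values."""
    return [d for d, v in evaluation.items()
            if not any(dominates(w, v)
                       for e, w in evaluation.items() if e != d)]


# (multipliers, ALUs) -> latency in cycles; the value for (2, 1) is ASSUMED.
LATENCY = {(1, 1): 7, (1, 2): 7, (2, 1): 5, (2, 2): 4}
EVALUATION = {d: (area(*d), lat) for d, lat in LATENCY.items()}

if __name__ == "__main__":
    print(EVALUATION)
    print(pareto_points(EVALUATION))
```

The quadratic comparison is adequate for a handful of points; larger design spaces would call for a sort-based sweep.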

We now consider examples of design (evaluation) spaces for different circuit

classes.

FIGURE 1.16
Design evaluation space: area/delay trade-off points for a 64-bit adder. (Area and delay are measured in terms of equivalent gates.) [Plot omitted.]

COMBINATIONAL LOGIC CIRCUITS. In this case, the design evaluation space objectives are (area, delay). Figure 1.16 shows an example of the trade-off points in this

space, for multiple-level logic circuit implementations. These points can be interpolated to form an area/delay trade-off curve.

Example 1.5.8. A first example of area/delay trade-off is given by the implementation of integer adders. Ripple-carry adders have smaller area and larger delay than carry look-ahead adders. Figure 1.16 shows the variation in area and in delay of gate-level implementations of a 64-bit integer adder ranging from a two-level carry look-ahead to a ripple-carry style.

As a second example, consider the implementation of a circuit whose logic behavior is the product of four Boolean variables (e.g., x = pqrs). Assume that the implementation is constrained to using two-input and/or three-input AND gates, whose area and delay are proportional to the number of inputs. Assume also that the inputs arrive simultaneously. Elements of the design space are shown in Figure 1.17, namely, four different logic structures.

The corresponding design evaluation space is shown in Figure 1.18. Note that structures labeled c and d in Figure 1.17 are not Pareto points.
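The cost model of the second example (area and delay of an AND gate equal to its number of inputs, inputs arriving at time zero) is easy to evaluate mechanically. The sketch below does so for four candidate structures. These structures are assumptions chosen for illustration and need not coincide with the ones labeled a to d in Figure 1.17; the nested-tuple encoding and the names are hypothetical as well.

```python
def area(node):
    """Total area: each gate contributes its number of inputs."""
    if isinstance(node, str):  # a primary input has no area
        return 0
    return len(node) + sum(area(child) for child in node)


def delay(node):
    """Critical path delay: latest input arrival plus the gate delay."""
    if isinstance(node, str):  # primary inputs arrive at time zero
        return 0
    return len(node) + max(delay(child) for child in node)


# A gate is a tuple of its inputs; an input is a variable name or another gate.
STRUCTURES = {
    "balanced tree of 2-input gates": (("p", "q"), ("r", "s")),
    "3-input gate, then 2-input gate": (("p", "q", "r"), "s"),
    "2-input gate, then 3-input gate": (("p", "q"), "r", "s"),
    "chain of 2-input gates": ((("p", "q"), "r"), "s"),
}

if __name__ == "__main__":
    for name, structure in STRUCTURES.items():
        print(f"{name}: area = {area(structure)}, delay = {delay(structure)}")
```

In this model the balanced tree (area 6, delay 4) and the mixed structures (area 5, delay 5) trade area against delay, while the chain (area 6, delay 6) is dominated by both.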

SEQUENTIAL LOGIC CIRCUITS. When we consider logic-level models for sequential synchronous circuits, the design evaluation space objectives are: (area, cycle-time),

where the latter is bounded from below by the critical path delay in the combinational

logic component. Therefore, the design evaluation space is similar in concept to that

for combinational circuits, shown in Figures 1.16 and 1.18.

When considering nonpipelined architectural-level models, the design evaluation

space objectives are: (area, latency, cycle-time), where the product of the last two

yields the overall execution delay. An example of the design evaluation space is

shown in Figure 1.19. Slices of the design evaluation space for some specific values

of one objective are also of interest. An example is the (area, latency) trade-off, as

shown in Figure 1.15.

Last, let us consider the architectural-level model of synchronous pipelined circuits. Now, performance is measured also by the circuit throughput. Hence, the design evaluation space involves the quadruple (area, latency, cycle-time, throughput). Architectural trade-offs for pipelined circuit implementations will be described in Section 4.8, where examples will also be given.

FIGURE 1.17
Example of design space: four implementations of a logic function.

FIGURE 1.18
Example of design evaluation space: (a) and (b) are area/delay trade-off points. [Plot omitted. Axes: Area versus Delay.]

GENERAL APPROACHES TO OPTIMIZATION. Circuit optimization involves multiple objective functions. In the previous examples, the optimization goals ranged from

two to four objectives. The optimization problem is difficult to solve, due to the discontinuous nature of the objective functions and to the discrete nature of the design

space, i.e., of the set of feasible circuit implementations.

FIGURE 1.19

Design evaluation space: area/latency/cycle-time trade-off points.

In general, Pareto points are solutions to constrained optimization problems.

Consider, for example, combinational logic optimization. Then the following two

problems are of interest:

• Minimize the circuit area under delay constraints.

• Minimize the circuit delay under area constraints.

Unfortunately, due to the difficulty of the optimization problems, only approximations to the Pareto points can be computed.
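
The relation between Pareto points and the two constrained problems can be sketched in Python as follows. The sketch assumes that the set of feasible implementations is small enough to be listed explicitly as (area, delay) pairs, which is not the case for realistic circuits. The design points and the function names are hypothetical.

```python
def min_area_under_delay(designs, max_delay):
    """Smallest-area design meeting the delay bound, or None if none exists."""
    feasible = [d for d in designs if d[1] <= max_delay]
    # Ties on area are broken in favor of the smaller delay.
    return min(feasible, key=lambda d: (d[0], d[1])) if feasible else None


def min_delay_under_area(designs, max_area):
    """Smallest-delay design meeting the area bound, or None if none exists."""
    feasible = [d for d in designs if d[0] <= max_area]
    # Ties on delay are broken in favor of the smaller area.
    return min(feasible, key=lambda d: (d[1], d[0])) if feasible else None


# Hypothetical (area, delay) pairs standing in for feasible implementations.
designs = [(10, 3), (8, 4), (8, 6), (6, 7), (7, 7), (5, 9)]

# Solving the area-minimization problem for every delay bound that occurs
# in the design set traces the area/delay trade-off curve.
delay_bounds = {d[1] for d in designs}
trade_off = sorted({min_area_under_delay(designs, t) for t in delay_bounds})
print("Trade-off points (area, delay):", trade_off)
print("Fastest design with area <= 8:", min_delay_under_area(designs, 8))
```

With the tie-breaking rule used here, each solution of the constrained problem is a Pareto point of the enumerated set, and each Pareto point is obtained for some value of the bound. For real circuits the minimization itself is the hard step, and the exhaustive enumeration is replaced by approximate methods.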

Consider next architectural-level models of synchronous circuits. Pareto points

are solutions to the following problems, for different values of the cycle-time:

• Minimize the circuit area under latency constraints.

• Minimize the circuit latency under area constraints.

These two problems are often referred to as scheduling problems. Unfortunately

again, the scheduling problems are hard to solve exactly in most cases and only

approximations can be obtained. When considering pipelined circuits, Pareto points

can be computed (or approximated) by considering different values of cycle-time and

throughput, and by solving the corresponding scheduling problems.
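
To give a flavor of how an approximate solution to latency minimization under an area constraint may be obtained, the Python sketch below uses a greedy list-scheduling heuristic. This heuristic is brought in here only for illustration and is not claimed to be the method intended at this point of the text. The sketch assumes unit-delay operations, a single type of functional unit, and an area modeled simply as the number of units available per cycle. The operation names and the dependency graph are hypothetical.

```python
def list_schedule(predecessors, max_units):
    """Greedy heuristic: assign unit-delay operations to cycles (from 1 on),
    using at most max_units operations per cycle and respecting dependencies."""
    if max_units < 1:
        raise ValueError("at least one functional unit is required")
    start = {}
    cycle = 0
    while len(start) < len(predecessors):
        cycle += 1
        # Ready operations: not yet scheduled, all predecessors already
        # scheduled (necessarily in earlier cycles).
        ready = [op for op in predecessors
                 if op not in start
                 and all(p in start for p in predecessors[op])]
        if not ready:
            raise ValueError("no operation is ready (cyclic or undefined dependency)")
        for op in ready[:max_units]:
            start[op] = cycle
    return start


def latency(start):
    """Number of cycles used by the schedule."""
    return max(start.values())


# Hypothetical dependency graph: each operation maps to its predecessors.
predecessors = {
    "m1": [], "m2": [], "m3": [], "m4": [],
    "a1": ["m1", "m2"],
    "a2": ["m3", "m4"],
    "a3": ["a1", "a2"],
}

# One scheduling problem per area bound yields candidate trade-off points.
latencies = {units: latency(list_schedule(predecessors, units))
             for units in range(1, 5)}
print("units -> latency:", latencies)
```

In this small example, three units give the same latency as two, so the three-unit solution is dominated and would not be a Pareto point. In general the greedy choice is not guaranteed to be optimal.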

FIGURE 1.20

Circuit models, synthesis and optimization: a simplified view. [Abstract models shown: operations and dependencies (data-flow and sequencing graphs); FSMs and logic functions (state diagrams and logic networks); interconnected logic blocks (logic networks). Tasks shown: compilation, architectural synthesis and optimization, logic synthesis and optimization, translation.]

1.6 ORGANIZATION OF THE BOOK

This book presents techniques for synthesis and optimization of combinational and

sequential synchronous digital circuits, starting from models at the architectural and

logic levels. Figure 1.20 represents schematically the synthesis and optimization tasks

and their relations to the models. We assume that circuit specification is done by

means of HDL models. Synthesis and optimization algorithms are described as based

on abstract models, that are powerful enough to capture the essential information

of the HDL models, and that decouple synthesis from language-specific features.

Examples of abstract models that can be derived from HDL models by compilation

are: sequencing and data-flow graphs (representing operations and dependencies),

state transition diagrams (describing finite-state machine behavior) and logic networks

(representing interconnected logic blocks, corresponding to gates or logic functions).

This book is divided into four parts. Part I describes circuits and their models.

Chapter 2 reviews background material on optimization problems and algorithms, as

well as the foundation of Boolean algebra. Abstract circuit models used in this book

are based on graph and switching theory. Chapter 3 describes the essential features

of some HDLs used for synthesis, as well as issues related to circuit modeling with

HDLs. Abstract models and their optimal derivation from language models are also

presented in Chapter 3.

Part II is dedicated to architectural-level synthesis and optimization. Chapter 4

gives an overview of architectural-level synthesis, including data-path and control-unit

generation. Operation scheduling and resource binding are specific tasks of architectural synthesis, that are described in Chapters 5 and 6, respectively. Architectural

models that are constrained in their schedule and resource usage may still benefit

from control-synthesis techniques (Chapter 4), that construct logic-level models of

sequential control circuits.

Logic-level synthesis and optimization are described in Part III. Combinational

logic functions can be implemented as two-level or multiple-level circuits. An example

of a two-level circuit implementation is a PLA. The optimization of two-level logic

forms (Chapter 7) has received much attention, also because it is applicable to the

simplification of more general circuits. Multiple-level logic optimization (Chapter 8)

is a rich field of heuristic techniques. The degrees of freedom in implementing a logic

function as a multiple-level circuit make its exact optimization difficult, as well as its

heuristic optimization multiform. Sequential logic models can also be implemented

by two-level or multiple-level circuits. Therefore, some synthesis and optimization

techniques for sequential circuits are extensions of those for combinational circuits.

Specific techniques for synchronous design (Chapter 9) encompass methods for optimizing finite-state machine models (e.g., state minimization and encoding), as well

as optimizing synchronous networks (e.g., retiming). Library binding, or technology

mapping (Chapter 10), is applicable to both combinational and sequential circuits and

is important to best exploit the primitive cells of a given library.

In Part IV, Chapter 11 summarizes the present state of the art in this field,

and reports on existing synthesis and optimization packages as well as on future

developments.

We consider now again the synthesis and optimization tasks and their relation to

the organization of this book. The flow diagram shown in Figure 1.21 puts the chapters

in the context of different design flows. HDL models are shown at the top and the

underlying abstract models at the bottom of the figure. Note that the order in which

the synthesis algorithms are applied to a circuit does not match the numbering of

the chapters, for didactic reasons. We describe general issues of architectural synthesis

before scheduling and binding also because some design flows may skip these steps.

We present combinational logic optimization before sequential design techniques for

the sake of a smooth introduction to logic design problems.

1.7 RELATED PROBLEMS

The complete design of a microelectronic circuit involves tasks other than architectural/logic synthesis and optimization. A description of all computer-aided methods

and programs for microelectronic design goes beyond the scope of this book. We

would like to mention briefly in Section 1.7.1 some physical design and optimization

problems. Indeed, physical design is the back end of circuit synthesis, that provides the

link between structural descriptions and the physical view, describing the geometric

patterns required for manufacturing. We comment on those topics that are tightly related to architectural/logic synthesis, such as design validation by means of simulation

and verification, and design for testability.

FIGURE 1.21

Chapters, models and design flows. [HDL models at the architectural and logic levels are shown at the top; the tasks shown are architectural synthesis and optimization, scheduling, binding, sequential optimization, combinational optimization and library binding; the abstract models at the bottom include data-flow and sequencing graphs and logic networks.]

1.7.1 A Closer Look at Physical Design Problems

Physical design provides the link between design and fabrication. The design information is transferred to the fabrication masks from the geometrical layout, that is