ECOM30002 Econometrics 2 Assignment 2

Group 32

Student name:

Hao Jiang 1068007

Motong Zhou 1067834

Yanxi Long 1067839

Zhangjunchen Huang 1078174

Tutor’s name: Paul Nguyen

Section 1

1.1

It is a valid IV.

Democracy1900i is relevant: it induces changes in the explanatory variable (AvExprRisk i ). The less democratic 𝑐𝑜𝑢𝑛𝑡𝑟𝑦𝑖 is, the more likely its government is to take away private property for some purpose, so its expropriation risk is higher. The two variables are therefore correlated: a country’s democracy score affects its ‘expropriation’ risk score.

𝐶𝑜𝑣(𝐴𝑣𝐸𝑥𝑝𝑟𝑅𝑖𝑠𝑘𝑖 , 𝐷𝑒𝑚𝑜𝑐𝑟𝑎𝑐𝑦1900𝑖 ) ≠ 0

Furthermore, Democracy1900i is exogenous: it has no independent effect on the dependent variable (logGDPpci ). We know from macroeconomics that a country’s GDP level is directly determined by its consumption, investment, government spending and net exports, so the democracy score does not affect a country’s GDP directly.

𝐸(𝑉𝑖 |𝐷𝑒𝑚𝑜𝑐𝑟𝑎𝑐𝑦1900𝑖 ) = 0

1.2

The claim is wrong: Table 1 shows that the two 2SLS estimation results are not precisely the same.

The first stage uses a valid IV to predict the explanatory variable, and the second stage regresses the dependent variable on the fitted values from stage 1. So, for both estimations to produce precisely the same estimate of the causal parameter, they would need the same fitted values from stage 1. However, even though logSettMort i and Democracy1900i are both valid IVs, their fitted values are not the same, which leads to different estimates of the causal parameter.
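The point can be illustrated with a small simulation. This is only a sketch on made-up data, not the data behind Table 1: the variable names (`z1`, `z2`, `x`, `y`, `u`), the helper `iv_estimate` and all parameter values are assumptions chosen for illustration. It relies on the fact that, with a single instrument, the 2SLS slope equals cov(Z, Y) / cov(Z, X).

```r
# Illustrative simulation: two valid instruments, one endogenous regressor.
# All names and parameter values here are hypothetical.
set.seed(1)
n  <- 5000
z1 <- rnorm(n)                       # first instrument
z2 <- rnorm(n)                       # second instrument
u  <- rnorm(n)                       # structural error
x  <- z1 + z2 + 0.5 * u + rnorm(n)   # endogenous regressor (depends on u)
y  <- 1 * x + u                      # true causal effect of x on y is 1

# With one instrument, the 2SLS slope reduces to cov(z, y) / cov(z, x).
iv_estimate <- function(z, x, y) cov(z, y) / cov(z, x)

b_z1  <- iv_estimate(z1, x, y)       # 2SLS using z1 only
b_z2  <- iv_estimate(z2, x, y)       # 2SLS using z2 only
b_ols <- cov(x, y) / var(x)          # OLS slope, biased because cov(x, u) != 0

print(c(iv_z1 = b_z1, iv_z2 = b_z2, ols = b_ols))
```

Both IV estimates should land near the true value of 1, but they are computed from different sample covariances, so they do not coincide exactly in any finite sample.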

1.3

The first stage is true.

The first stage of 2SLS uses 𝑍𝑖 to predict 𝑋𝑖 . Because the IV (𝑍𝑖 ) is uncorrelated with 𝑈𝑖 (𝑐𝑜𝑣(𝑍𝑖 , 𝑈𝑖 ) = 0) and the fitted value of 𝑋𝑖 is a linear function of 𝑍𝑖 (𝑐𝑜𝑣(𝑋𝑖 , 𝑍𝑖 ) ≠ 0 ensures that it actually varies with 𝑍𝑖 ), the fitted value of 𝑋𝑖 is uncorrelated with 𝑈𝑖 too. So, the fitted value of 𝑋𝑖 represents the part of 𝑋𝑖 that does not covary with 𝑈𝑖 .

Furthermore, 𝑋𝑖 itself is correlated with 𝑈𝑖 , as 𝑐𝑜𝑣(𝑋𝑖 , 𝑈𝑖 ) ≠ 0. So, the predicted value of 𝑋𝑖 does not equal the true value of 𝑋𝑖 , and their difference is the part of 𝑋𝑖 that covaries with 𝑈𝑖 .
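The decomposition can be written out explicitly. This is a sketch in population terms: the first-stage coefficients \pi_0, \pi_1 and the first-stage error \eta_i are notation assumed here for illustration and are not taken from the assignment, and \hat{X}_i denotes the population linear projection of X_i on Z_i.

```latex
\begin{aligned}
X_i &= \underbrace{\pi_0 + \pi_1 Z_i}_{\hat{X}_i} + \eta_i,
      \qquad \pi_1 \neq 0 \ \text{because}\ \operatorname{cov}(X_i, Z_i) \neq 0, \\
\operatorname{cov}(\hat{X}_i, U_i) &= \pi_1 \operatorname{cov}(Z_i, U_i) = 0, \\
\operatorname{cov}(X_i, U_i) &= \operatorname{cov}(\hat{X}_i, U_i) + \operatorname{cov}(\eta_i, U_i)
      = \operatorname{cov}(\eta_i, U_i) \neq 0 .
\end{aligned}
```

All of the covariance between X_i and U_i therefore sits in \eta_i = X_i - \hat{X}_i, the part of X_i that the instrument does not explain.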

Q2

1)

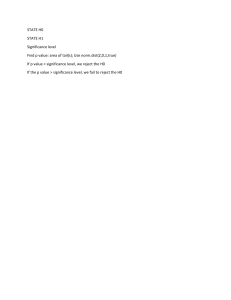

Simulation results for Section 2

| | Case 1 | Case 2 | Case 3 | Case 4 |
|---|---|---|---|---|
| Mean (delta1) | 0.009 | -0.000 | 0.500 | 0.495 |
| SD (delta1) | 0.146 | 0.170 | 0.146 | 0.171 |
| Reject H0 (A) | 0.100 | 0.083 | 0.916 | 0.816 |
| Reject H0 (B) | 0.049 | 0.039 | 0.844 | 0.852 |

2)

Since 𝐸(𝑈𝑖 |𝑋1,𝑖 , 𝑋2,𝑖 ) = 0, 𝐸(𝑌𝑖 |𝑋1,𝑖 , 𝑋2,𝑖 ) = 𝐸(𝛽0 + 𝛽1 𝑋1,𝑖 + 𝛽2 𝑋2,𝑖 + 𝑈𝑖 |𝑋1,𝑖 , 𝑋2,𝑖 ) = 𝛽0 + 𝛽1 𝑋1,𝑖 + 𝛽2 𝑋2,𝑖 + 𝐸(𝑈𝑖 |𝑋1,𝑖 , 𝑋2,𝑖 ) = 𝛽0 + 𝛽1 𝑋1,𝑖 + 𝛽2 𝑋2,𝑖 = 𝛿0 + 𝛿1 𝑋1,𝑖 + 𝛿2 𝑋2,𝑖 . Therefore, equation (4) follows from equation (3), which also implies that 𝛽0 = 𝛿0 , 𝛽1 = 𝛿1 , 𝛽2 = 𝛿2 .

3)

This claim is not correct. X1 and X2 both appear in equation (4), in the SRF as well as the PRF, so the PRF includes all relevant variables and no omitted variable can distort the causal effect. Therefore, whether or not 𝜌 = 0, the equation does not suffer from omitted variable bias.

4)

It is unbiased for the true beta 1 in all four cases, because there are no omitted variables and U is i.i.d. and independent of X1 and X2. As a result, X1 is exogenous whatever the value of 𝛿 is.

5)

a. In cases 1 and 2, the means of delta1 are 0.009 and -0.000 respectively, both close to the true value of 0. This is consistent with the OLS estimator delta1 being unbiased for the population parameter beta1. The standard deviation describes the spread of the estimates across replications rather than their centre, so it does not by itself indicate bias.

For a more accurate result, the simulation can be repeated with different sample sizes to check that the same conclusion holds.
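One rough way to judge whether a simulated mean is "close to 0" is to compare it with its Monte Carlo standard error. The calculation below is a sketch using the reported SDs and the reps = 1000 set in the Appendix; the label MCSE is introduced here for illustration and is not part of the assignment.

```latex
\begin{aligned}
\text{MCSE} &= \frac{\text{SD}(\hat{\delta}_1)}{\sqrt{\text{reps}}}, \\
\text{Case 1: } \frac{0.146}{\sqrt{1000}} &\approx 0.0046, \qquad
\text{Case 2: } \frac{0.170}{\sqrt{1000}} \approx 0.0054 .
\end{aligned}
```

The case 1 mean of 0.009 is about two Monte Carlo standard errors from 0 and the case 2 mean is essentially 0, so deviations of this size are what simulation noise alone could plausibly produce.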

b. The size of a test is the probability of rejecting H0 when H0 is true.

The power of a test is the probability of rejecting H0 when H0 is false.

In cases 1 and 2, H0 is true (see part a), so Reject A and Reject B are frequencies of rejecting H0 when H0 is true: they measure the size of the test.

In cases 3 and 4, however, the mean of delta1 is about 0.5, which means H0 is false. Reject A and Reject B are then frequencies of rejecting H0 when H0 is false: they measure the power of the test.

c. For cases 1 and 2:

The Reject A results are around 0.1 (0.100 and 0.083), greater than the significance level of 0.05. The actual probability of a type 1 error is therefore higher than the nominal level, so test A is not well-sized.

On the other hand, the Reject B results are around the significance level of 0.05 (0.049 and 0.039). The actual probability of a type 1 error is about the same as the nominal level, so test B is well-sized.

For cases 3 and 4:

Both Reject A and Reject B show high power. Reject A is larger than Reject B in case 3 (0.916 vs 0.844), but smaller than Reject B in case 4 (0.816 vs 0.852).

6)

The reason for the difference between A and B in cases 1 and 2 is that A conducts 2 tests (d1=0, d2=0), each at the 5% significance level. Treating the two tests as independent, the probability that neither makes a mistake is 0.95*0.95=0.9025. Therefore, the probability that A commits a type 1 error is 1-0.9025=0.0975, which is approximately 10%. This matches the simulation result.

However, B conducts only 1 test, which means its size is 5%.
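The arithmetic behind the 10% figure can be laid out as follows. This is a sketch that assumes the two t-tests are independent under H0, which is only an approximation here; \alpha denotes the per-test significance level.

```latex
\begin{aligned}
P(\text{at least one rejection} \mid H_0)
  &= 1 - (1 - \alpha)^2 \\
  &= 1 - 0.95^2 = 1 - 0.9025 = 0.0975 \;\le\; 2\alpha = 0.10 .
\end{aligned}
```

The simulated Reject H0 (A) frequency of 0.100 in case 1 is in line with this value. In case 2 it is 0.083, which still lies below the 2\alpha bound; there X1 and X2 are correlated, so the independence assumption is less accurate.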

In cases 3 and 4 the rejection frequencies measure power, and the size of a test affects its power: in case 3, A’s larger size helps it reject more often than B (0.916 vs 0.844). In case 4, however, A rejects less often than B (0.816 vs 0.852). The power of A is lower in case 4 than in case 3 because rho=0 in case 3 and rho=0.5 in case 4. When rho=0.5, x1 and x2 are correlated and the OLS estimator suffers from imperfect multicollinearity, which causes a larger standard error of the estimator and hence a smaller t-statistic, making it harder to reject H0. Thus, the rejection frequency is lower, which means lower power.
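The size of the multicollinearity effect can be checked against the simulated standard deviations. The expression below is the standard large-sample approximation for a regression with two regressors and homoskedastic errors, used here as a sketch; \sigma^2 and \sigma_{X_1}^2 denote the error and regressor variances (both equal to 1 in the Appendix code).

```latex
\begin{aligned}
\operatorname{Var}(\hat{\delta}_1) &\approx \frac{\sigma^2}{n\,\sigma_{X_1}^2\,(1 - \rho^2)}, \\
\frac{\text{SD}(\hat{\delta}_1 \mid \rho = 0.5)}{\text{SD}(\hat{\delta}_1 \mid \rho = 0)}
  &\approx \frac{1}{\sqrt{1 - 0.5^2}} \approx 1.155 .
\end{aligned}
```

The simulated ratios are 0.170 / 0.146 ≈ 1.16 (cases 2 and 1) and 0.171 / 0.146 ≈ 1.17 (cases 4 and 3), close to the predicted inflation.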

7)

This claim is incorrect.

First, the p-value of the t-test in R is calculated in the way this researcher described.

Second, because A conducts 2 tests, and the probability that neither makes a mistake is 0.95*0.95=0.9025, the probability of a type 1 error is about 10%, so A’s rejection frequency in cases 1 and 2 is around 0.1.

Third, in cases 3 and 4 the rejection frequency measures the power of the test, which will not be close to the size (0.05).

Appendix:

2.1

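library(MASS)    # mvrnorm
library(AER)     # attaches lmtest (coeftest) and car (linearHypothesis)
library(xtable)

set.seed(42)
reps <- 1000                    # Monte Carlo replications per case
n <- 50                         # sample size in each replication
beta0 <- 0
beta2 <- 0
rhov <- c(0, 0.5, 0, 0.5)       # correlation between X1 and X2 in cases 1-4
beta1v <- c(0, 0, 0.5, 0.5)     # true beta1 in cases 1-4
M <- matrix(nrow = reps, ncol = 1)        # delta1 estimates for the current case
simutable <- matrix(nrow = 4, ncol = 4)   # rows: statistics, columns: cases

for (i in 1:4) {
  rho <- rhov[i]
  beta1 <- beta1v[i]
  rejt <- 0   # rejections when either individual t-test rejects (reported as rejectA)
  rejf <- 0   # rejections by the joint F-test (reported as rejectB)
  for (j in 1:reps) {
    X <- mvrnorm(n, mu = c(0, 0), Sigma = cbind(rbind(1, rho), rbind(rho, 1)))
    X1 <- X[, 1]
    X2 <- X[, 2]
    U <- rnorm(n)
    Y <- beta0 + beta1 * X1 + beta2 * X2 + U
    eq1 <- lm(Y ~ X1 + X2)
    ct <- coeftest(eq1)
    M[j, 1] <- ct[2, 1]   # delta1 estimate from replication j
    pvalue <- linearHypothesis(eq1, c("X1=0", "X2=0"), test = "F")[2, 6]
    if (ct[2, 4] < 0.05 || ct[3, 4] < 0.05) rejt <- rejt + 1
    if (pvalue < 0.05) rejf <- rejf + 1
  }
  simutable[1, i] <- colMeans(M)
  simutable[2, i] <- apply(M, 2, sd)
  simutable[3, i] <- rejt / reps
  simutable[4, i] <- rejf / reps
}

colnames(simutable) <- c("case1", "case2", "case3", "case4")
rownames(simutable) <- c("mean", "sd", "rejectA", "rejectB")
print(simutable)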