- BUY BRAIN - Seth Stephens-Davidowitz - Everybody Lies - INGL

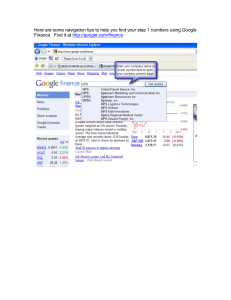

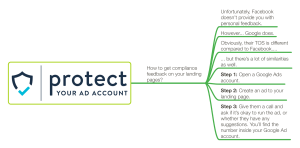
