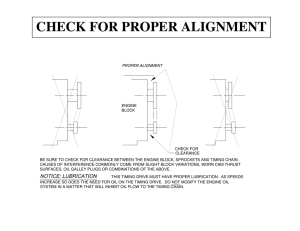

Article

Thrust Prediction of Aircraft Engine Enabled by Fusing Domain Knowledge and Neural Network Model

Zhifu Lin 1, Hong Xiao 1,*, Xiaobo Zhang 1,2 and Zhanxue Wang 1

1 School of Power and Energy, Northwestern Polytechnical University, Xi’an 710072, China

2 Collaborative Innovation Center for Advanced Aero-Engine, Beijing 100191, China

Correspondence (*): xhong@nwpu.edu.cn

Abstract: Accurate prediction of aircraft engine thrust is crucial for engine health management (EHM), which seeks to improve the safety and reliability of aircraft propulsion. For EHM, thrust prediction is implemented using an on-board adaptive model. However, the conventional methods for building such a model are often tedious or overly data-dependent. Domain knowledge can be leveraged to improve the accuracy of thrust prediction. Hence, this study presents a strategy for building an on-board adaptive model that predicts aircraft engine thrust in real time. The strategy combines engine domain knowledge and neural network architecture to construct a prediction model. Using a domain decomposition approach, the whole-model architecture is divided into separate modules that map one-to-one onto the engine components. Engine domain knowledge guides both feature selection and the design of the neural network architecture. Furthermore, this study explains the relationships between aircraft engine features and how the model can predict engine thrust under flight conditions. To demonstrate the effectiveness and robustness of the architecture, four different testing datasets were used for validation. The results show that the thrust prediction model created by the presented architecture has maximum relative deviations below 4.0% and average relative deviations below 2.0% on all testing datasets. Compared with the models created by conventional neural network architectures on the four testing datasets, the model created by the presented architecture proves more suitable for aircraft propulsion.

Keywords: thrust prediction; on-board adaptive model; artificial neural network; tailoring architecture; domain decomposition

Citation: Lin, Z.; Xiao, H.; Zhang, X.; Wang, Z. Thrust Prediction of Aircraft Engine Enabled by Fusing Domain Knowledge and Neural Network Model. Aerospace 2023, 10, 493. https://doi.org/10.3390/aerospace10060493

Academic Editors: Erinc Erdem and Ernesto Benini

Received: 17 January 2023; Revised: 15 March 2023; Accepted: 19 May 2023; Published: 23 May 2023

Copyright: © 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

1. Introduction

As complex systems with high reliability requirements, aircraft engines are equipped with engine health management (EHM) to increase their reliability [1]. With advancements in avionics, EHM research is shifting from off-board to on-board systems [2]. Compared with off-board EHM, on-board EHM enables real-time, continuous monitoring of engine performance [3]. One important task in on-board EHM is building an on-board adaptive model to predict engine performance, specifically thrust [4]. In general, an on-board adaptive model consists of an on-board real-time model and a modifier [5]. The on-board real-time model is typically a linearized model derived from an aerothermodynamic component-level model of the engine [6]. Currently, the Kalman filter [7] is a commonly used and effective method for correcting the results predicted by the on-board real-time model, and many improvements to the Kalman filter have been proposed [8–10] to increase the accuracy of parameter prediction.
Further, NASA presented the eSTORM model for parameter estimation based on the Kalman filter method [11]. A novel linear parameter-varying approach has also been proposed for thrust estimation [12], and developing on-board adaptive models remains an active research topic. Notably, the accuracy of the on-board real-time model is crucial for the on-board adaptive model. However, modeling complex objects such as aircraft engines requires simplifying assumptions, and the resulting model can suffer from errors introduced by the modeling method and the model-solving process, which decreases its accuracy [13,14]. For aircraft engines, the strong coupling among engine systems and the degradation of engine performance are difficult to represent with a high-precision physical model.

On the other hand, data-driven methods have been developed for on-board adaptive models that predict engine parameters [15,16]. Data-driven methods discard physical equations and extract information directly from data. Given sufficient representative data, they use mathematical tools to find a model, or a combination of models, that approximates the exact physical model. Four typical data-driven methods, namely Random Forest (RF), Generalized Regression Neural Network (GRNN), Support Vector Regression (SVR), and Radial Basis Neural Network (RBNs), have been used to develop a temperature baseline model for aircraft engine performance health management [17]. Zhao et al. studied support vector machines (SVMs) extensively and applied them to aircraft engine parameter estimation [18]. Zhao also investigated other methods for thrust prediction, such as radial basis networks [19]. An extreme learning machine has also been used for aircraft engine parameter estimation [20]. However, in a data-driven method, the quality and quantity of the data greatly affect the final result.
Relying solely on data without physical constraints may cause a data-driven method to produce unreasonable results [21]. Because the accuracy of a data-driven model is limited by its training dataset, hybrid methods that incorporate physical information have been developed [22,23]. Reference [24] describes three ways of adding physical information to neural networks (a kind of data-driven method): observational biases, inductive biases, and learning biases. Observational biases add data that reflect underlying physical principles to the neural network. For example, data augmentation techniques [25] such as rotating, zooming, flipping, and shifting an image can create additional data that reflect physical invariance. Inductive biases tailor a neural network architecture so that it satisfies a set of physical functions; for example, a node in a neural network can represent a physical symbol, and the connections between nodes can implement the operators in a physical function [26,27]. Learning biases are introduced by intervening in the training phase of a data-driven model so that training converges towards a solution that adheres to the underlying physical constraints. One way to introduce learning biases is to add physical equations or principles as penalty terms to the loss function of a conventional neural network [28,29]. The hybrid methods mentioned above are all formulated in terms of differential or algebraic equations. However, because the physical equations governing aircraft engine systems are complex, these methods may be challenging to implement in an on-board model in the short term. To avoid dealing with complex mathematical-physical equations, this study explores combining aircraft engine knowledge with neural network models. Specifically, an on-board adaptive model for predicting engine thrust is built by blending engine domain knowledge and neural network models.
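To make the idea of a learning bias concrete, the following minimal Python sketch adds a weighted physics-residual penalty to an ordinary mean-squared-error loss. It illustrates the general technique only; the present study does not use a penalized loss. The non-negativity constraint, the `weight` value, and all function names are hypothetical stand-ins for a real physical equation.

```python
from typing import Callable, Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error: the ordinary data-fitting term."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def physics_penalized_loss(
    pred: Sequence[float],
    target: Sequence[float],
    residual: Callable[[float], float],
    weight: float = 0.1,
) -> float:
    """Data loss plus a weighted penalty on a physics residual (a learning bias).

    `residual(p)` returns 0.0 when prediction `p` satisfies the constraint and a
    nonzero value that grows with the violation otherwise.
    """
    data_term = mse(pred, target)
    physics_term = sum(residual(p) ** 2 for p in pred) / len(pred)
    return data_term + weight * physics_term


# Hypothetical constraint for illustration only: predictions must be non-negative.
def non_negative_residual(p: float) -> float:
    return min(p, 0.0)


print(physics_penalized_loss([1.0, -0.5], [1.0, 0.0], non_negative_residual, weight=2.0))  # 0.375
```

Minimizing this loss trades data fit against constraint violation, with `weight` controlling how strongly the (assumed) physics is enforced.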
Section 2 presents in detail the architecture of the hybrid model, which fuses networks for predicting engine parameters, and briefly introduces traditional neural network models. In addition, the relationships between aircraft engine features are described to explain why a model trained with data measurable on the ground can be used under flight conditions. Next, a model for predicting engine thrust based on this architecture is discussed. In Section 3, the models are verified using simulation data and compared with conventional neural network models. Finally, Section 4 concludes the study.

2. On-Board Adaptive Model

2.1. Architecture by Fusing Physical Structure and Neural Network

Inspired by the coupling relationships between engine components (as illustrated in Figure 1a), a domain decomposition approach is used to deconstruct the neural network structure. In the digital space, a large neural network is divided into multiple independent neural networks, each corresponding to an engine component in the physical space. The coupling relationships guide the interconnection of the independent neural networks in the digital space. The architecture integrates neural networks and aircraft engine domain knowledge through the following points:

1. Based on domain decomposition, a neural network is divided into multiple subnetworks, the number of which corresponds to the number of engine components.
2. A subnetwork represents an engine component, and the input features of the subnetwork are related to the corresponding engine component.
3. The subnetworks are interconnected according to the interconnections between the engine components, and data flow through the subnetworks in the order in which air flows through the engine components. For example, air flows sequentially through an inlet, a fan, and then a compressor; correspondingly, data flow sequentially through an inlet subnetwork, a fan subnetwork, and then a compressor subnetwork.
4. The physical constraint on the networks is that components on the same shaft rotate at the same speed. For example, the same rotation speed is used as input to both the fan subnetwork and the low-pressure turbine subnetwork.

[Figure 1: (a) engine sketch with inlet, fan, bypass, compressor, combustor, HP turbine, LP turbine, air system, and control system, showing the direction of airflow, airflow in the air system, and control order; (b) component/system networks (inlet, fan, compressor, combustor, HP turbine, LP turbine, system networks) fed by measured parameters and by other networks, linked through a coupling layer and a mapping layer to the target parameters.]

Figure 1. Aircraft engine structure and neural network architecture. (a) A structural sketch of an aircraft propulsion system. (b) A sketch of the architecture enabled by fusing engine structure and neural network.

Specifically, the architecture consists of three layers. The first layer is the component/system learning layer, the second is the coupling layer, and the last is the mapping layer, as shown in Figure 1b. Each layer has its own function. The component/system learning layer processes the input features based on the coupling relationships of the engine components. The coupling layer integrates features according to the operation order of the engine components. The mapping layer performs a regression that connects the abstract features with the target parameters.

The component/system learning layer comprises multiple independent neural networks, each of which represents an engine component or system and is referred to as a component/system learning network. A sequence of component learning networks forms the main body of this layer, arranged in the order in which the components are located along the engine main flow. A component network reads features from two sources: the sensor parameters that measure the corresponding component, and the feature from a component network on the same shaft. For example, the inlet network reads the total pressure at the inlet entry, and the high-pressure turbine network reads its own component feature together with the feature from the compressor network. The combustor network receives the fuel flow rate as input, and the turbine network reads the temperature and pressure measured at the component section. Thus, the physical constraint that components working on the same shaft have an equal rotation speed is mapped to the digital space. A system learning network, such as an oil system network or a control system network, likewise reads the corresponding parameters to learn the system characteristics, and its position is determined by the role of the corresponding system in the engine. Therefore, the number of component/system learning networks equals the number of engine components/systems.
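As a minimal sketch (not the authors' code) of how the component/system learning layer might route measured features, the Python snippet below assigns raw sensor features to each component network in airflow order, using the feature-to-network assignment of Table 1 in Section 3.1. The dictionary names, the helper functions, and the dummy normalized sample are hypothetical, and the network-to-network links described above (for example, the compressor feature passed to the high-pressure turbine network) are not modeled.

```python
from typing import Dict, List

# Component networks in airflow order (inlet -> fan -> compressor ->
# combustor -> HP turbine -> LP turbine), following Section 2.1.
AIRFLOW_ORDER: List[str] = [
    "inlet", "fan", "compressor", "combustor", "hp_turbine", "lp_turbine",
]

# Raw measured features read by each component network (after Table 1).
# Nl feeds both the fan and LP turbine networks (low-pressure shaft);
# Nh feeds both the compressor and HP turbine networks (high-pressure shaft).
COMPONENT_FEATURES: Dict[str, List[str]] = {
    "inlet": ["T0", "P0"],
    "fan": ["T25", "P25", "Nl"],
    "compressor": ["P3", "Nh"],
    "combustor": ["Wf"],
    "hp_turbine": ["Nh"],
    "lp_turbine": ["T6", "Nl"],
}


def route_features(sample: Dict[str, float]) -> List[List[float]]:
    """Split one flat sample into per-component input vectors, in airflow order.

    Each component network sees only its own features, so no network has to
    process unrelated inputs.
    """
    return [[sample[name] for name in COMPONENT_FEATURES[comp]] for comp in AIRFLOW_ORDER]


def shaft_sharing(feature: str) -> List[str]:
    """Return the component networks that receive a given feature."""
    return [comp for comp in AIRFLOW_ORDER if feature in COMPONENT_FEATURES[comp]]


# Dummy normalized sample (illustrative values only).
sample = {"T0": 0.1, "P0": 0.2, "T25": 0.3, "P25": 0.4, "P3": 0.5,
          "T6": 0.6, "Nl": 0.7, "Nh": 0.8, "Wf": 0.9}
print(route_features(sample))
print(shaft_sharing("Nl"))  # ['fan', 'lp_turbine']
```

Sharing the same rotor-speed value between the two networks on each shaft is how the equal-speed constraint enters the digital space in this sketch.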
After processing the measured variables, the component/system learning layer outputs abstract features as input to the coupling layer. The role of the coupling layer is to learn the relations between components/systems and to extract a coupling feature from the discrete features produced by the component/system networks. Data transmission in the coupling layer follows the same order in which air flows through the engine components. Finally, the mapping layer associates the feature from the coupling layer with the aircraft engine performance parameter.

From the above description, it can be seen that the presented architecture serves as a design framework for a prediction model and is not limited to a particular engine type. Compared with conventional neural networks, incorporating the coupling relationships of engine components into the network simplifies feature selection: the input features are filtered and clustered according to the requirements of the component/system networks. Moreover, the presented architecture does not require all input features to be fed in at once. Instead, each component/system learning network reads only the relevant sensor parameters, which reduces the number of network nodes needed and avoids directly processing unrelated input features.

2.2. Component Network

The component networks play a crucial role in training the presented architecture. One of the simplest choices for a component network is a mature artificial neural network (ANN) [30], such as a fully connected neural network (FNN), a recurrent neural network (RNN), or a convolutional neural network (CNN). A neural network model consists of stacked layers of nodes that store information. Each node receives input data and assigns an independent weight to each of its outputs. Figure 2 illustrates the standard modes of node connection.
In a fully connected neural network, the connections between nodes are unidirectional and exist only from one layer to the next. As such, a fully connected neural network focuses on extracting features from individual data samples but ignores the relationships between data in space or time. To address this issue, recurrent neural networks were introduced. An RNN is an ANN with a self-recurrent structure composed of nodes that participate in recursive computations; such a node is called a cell. The cell transfers information from the previous computation to the next one, thereby improving performance on sequence data. However, long-term dependence issues, such as information morphing and vanishing, may occur when a vanilla RNN processes long sequences. The long short-term memory (LSTM) neural network was introduced to handle long-term dependence [31].

[Figure 2: panels ANN, RNN, and CNN, showing nodes, cells, and kernels, with the direction of information transfer and the direction of kernel movement.]

Figure 2. The standard modes of node connection in artificial neural networks.
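The forward-only connection pattern of a fully connected network can be sketched in a few lines of Python. This is an illustrative sketch, not the configuration used in the study: the tanh activation, the hand-picked weights, and the function names are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def dense_forward(
    x: Sequence[float],
    weights: Sequence[Sequence[float]],
    bias: Sequence[float],
) -> List[float]:
    """One fully connected layer: y_j = tanh(sum_i W[j][i] * x[i] + b[j]).

    Every input node is connected to every output node, and information only
    moves forward to the next layer (no recurrence).
    """
    return [
        math.tanh(sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j)
        for row, b_j in zip(weights, bias)
    ]


def fnn_forward(
    x: Sequence[float],
    layers: Sequence[Tuple[Sequence[Sequence[float]], Sequence[float]]],
) -> List[float]:
    """Stack of dense layers; `layers` is a list of (weights, bias) pairs."""
    out = list(x)
    for weights, bias in layers:
        out = dense_forward(out, weights, bias)
    return out


# Two inputs -> two hidden nodes -> one output; weights chosen by hand for illustration.
print(fnn_forward([1.0, 2.0], [([[1.0, 0.0], [0.0, 0.5]], [0.0, 0.0]), ([[0.5, 0.5]], [0.0])]))
```

Because each call only looks at the current input vector, nothing links one sample to the next; that is the gap the recurrent cell fills.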
An LSTM network is a kind of RNN with gate functions. An LSTM cell contains three gates: the forget gate, the input gate, and the output gate. The input gate controls how much input information is transmitted. The forget gate selects, according to the input data, the part of the information from the previous sequence that is to be discarded. The output gate selects the appropriate information to pass on to the following sequence. The gate formulas are as follows:

$$f_t = \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \tag{1}$$

$$i_t = \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \tag{2}$$

$$\tilde{C}_t = \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) \tag{3}$$

$$C_t = f_t \otimes C_{t-1} + i_t \otimes \tilde{C}_t \tag{4}$$

$$O_t = \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \tag{5}$$

$$h_t = O_t \otimes \tanh\left(C_t\right) \tag{6}$$

where b represents the bias; W represents the learnable weights of the nodes; x represents the input features; h represents the hidden state of the LSTM cell, which is the cell output; $C_t$ is the cell state and $\tilde{C}_t$ is the candidate (pending) cell state; $[h_{t-1}, x_t]$ denotes the concatenation of the previous hidden state and the current input; subscript t denotes the position (time step) of the cell in the sequence; subscripts f, i, c, and o denote the parameters of the forget gate, input gate, candidate state, and output gate, respectively; ⊗ denotes the element-wise (Hadamard) product; and σ is the sigmoid function, whose output lies between 0 and 1. The information flow of an LSTM cell is shown in Figure 3. Gradient descent algorithms are the methods commonly used to train artificial neural networks; more details about training algorithms are available in references [32–34] and are not repeated here.

In some cases, it may be useful to analyze the input features in the reverse order of the input sequence. In such cases, a bidirectional LSTM (Bi-LSTM) can be employed. A bidirectional LSTM network is a composite structure of two LSTM networks with the same cell structure: one processes the input data in the original order of the input sequence, and the other processes the same input data in reverse order. The outputs of the two LSTM networks are combined into one as the final network output.
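A minimal, standard-library Python sketch of Equations (1)–(6) and of the bidirectional wrapper follows. It is illustrative only: the weights are random rather than trained, the class and function names are hypothetical, and combining the two directions by per-step concatenation is an assumed merge rule (the text says only that the outputs are "combined into one").

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, v: Sequence[float], b: Sequence[float]) -> Vector:
    """Return W v + b, with W stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bj for row, bj in zip(W, b)]


class LSTMCell:
    """One LSTM cell following Equations (1)-(6); weights are random, not trained."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        n_cat = n_hidden + n_in  # length of the concatenation [h_{t-1}, x_t]

        def init() -> Matrix:
            return [[rng.uniform(-0.1, 0.1) for _ in range(n_cat)] for _ in range(n_hidden)]

        self.n_hidden = n_hidden
        self.W_f, self.W_i, self.W_c, self.W_o = init(), init(), init(), init()
        self.b_f = [0.0] * n_hidden
        self.b_i = [0.0] * n_hidden
        self.b_c = [0.0] * n_hidden
        self.b_o = [0.0] * n_hidden

    def step(self, x_t: Sequence[float], h_prev: Vector, c_prev: Vector) -> Tuple[Vector, Vector]:
        z = list(h_prev) + list(x_t)                                     # [h_{t-1}, x_t]
        f_t = [sigmoid(a) for a in affine(self.W_f, z, self.b_f)]        # Eq. (1) forget gate
        i_t = [sigmoid(a) for a in affine(self.W_i, z, self.b_i)]        # Eq. (2) input gate
        c_tilde = [math.tanh(a) for a in affine(self.W_c, z, self.b_c)]  # Eq. (3) candidate state
        c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_tilde)]  # Eq. (4)
        o_t = [sigmoid(a) for a in affine(self.W_o, z, self.b_o)]        # Eq. (5) output gate
        h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]               # Eq. (6)
        return h_t, c_t

    def run(self, xs: Sequence[Sequence[float]]) -> List[Vector]:
        """Process a sequence from first to last element; return every hidden state."""
        h, c = [0.0] * self.n_hidden, [0.0] * self.n_hidden
        outputs = []
        for x_t in xs:
            h, c = self.step(x_t, h, c)
            outputs.append(h)
        return outputs


def bi_lstm(xs: Sequence[Sequence[float]], fwd: LSTMCell, bwd: LSTMCell) -> List[Vector]:
    """Bidirectional LSTM: one cell reads the sequence forwards, the other backwards.

    Outputs are combined per step by concatenation (an assumed merge rule).
    """
    forward_out = fwd.run(xs)
    backward_out = bwd.run(list(xs)[::-1])[::-1]  # re-align with the original order
    return [hf + hb for hf, hb in zip(forward_out, backward_out)]


# Six per-component feature vectors (one per component, in airflow order), 3 values each.
seq = [[0.1 * k, 0.2, -0.1] for k in range(6)]
out = bi_lstm(seq, LSTMCell(3, 4, seed=1), LSTMCell(3, 4, seed=2))
print(len(out), len(out[0]))  # 6 8
```

The backward cell lets information from downstream components reach upstream positions in the sequence, which is the property that motivates the Bi-LSTM choice for the coupling layer later in this section.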
[Figure 3: LSTM cell diagram showing the cell state, hidden state, current input, forget gate, input gate, output gate, pending state, and the concatenate, multiplication, and addition operations.]

Figure 3. A sketch of an LSTM cell. The arrows in the figure indicate the direction of data transmission.

2.3. Model for Predicting Thrust

Based on the presented architecture, a model for predicting thrust was built. First, the parameters used for model training are discussed. Model training is supervised learning, in which the input data and the corresponding output data are provided to the model during the training phase. For an on-board model, training is typically completed in a ground system, whereas the model is used on the airborne system. The selection of input features is therefore limited, because both the ground system and the airborne system must be capable of measuring the selected features. Thrust is associated with the aircraft engine status, the control law, and the engine operating environment; consequently, parameters related to these conditions are selected as input features. The relationship between the component performance parameters and the aircraft engine performance parameters reflects the physical matching between the components and the entire engine. When these parameters are used as the input and target features to train the model, the model represents this physical matching. Thus, thrust data collected from engine testing, including ground and high-altitude tests, can serve as training data for a thrust prediction model. In effect, this expands the number of measurable parameters on the airborne system: thrust, which is measured in testing but not in flight, can be predicted in flight from features that are measurable in both. The relationship between the parameters is shown in Figure 4.

[Figure 4: input features for the model are parameters measured both in flight and in testing (e.g., P3, T6), environmental parameters (e.g., P0, T0), and control parameters (e.g., Nl, Nh); the target feature is a performance parameter measured in testing but not in flight (thrust). Legend: P0, total pressure at inlet exit; T0, total temperature at inlet exit; P3, total pressure at compressor exit; T6, exhaust gas temperature; Nl, low-pressure rotor speed; Nh, high-pressure rotor speed.]

Figure 4. The relationship between the parameters in the prediction model.

Next, the specific neural network used in each layer of the architecture is discussed. In the prediction model, the independent component/system networks and the mapping layer use fully connected neural networks, while the coupling layer uses a bidirectional LSTM. Since the engine components are sequenced in space, an LSTM is preferred for the coupling layer. However, an LSTM reads and analyzes the input features only in the forward order of the sequence, whereas in an aircraft engine a rear component affects a front component when an adverse pressure gradient occurs. Therefore, a bidirectional LSTM is selected for the coupling layer. Based on the above description, Algorithm 1 outlines the steps involved in developing a thrust prediction model with the presented architecture.

Algorithm 1. The hybrid architecture-based thrust prediction model

1. Determine the number of component networks according to the aircraft engine type.
2. Connect the component networks to build the component learning layer.
3. Arrange the component networks in the order in which air flows through the components in the engine.
4. Point the output of the component networks to the coupling layer and the output of the coupling layer to the mapping layer; the output of the mapping layer is the target parameter.
5. Determine the neural network type for each layer: component learning layer (component networks), FNN; coupling layer, Bi-LSTM; mapping layer, FNN.
6. Classify the measurable parameters by component correlation; use the measurable parameters as inputs to the component networks and thrust as the target.
7. Preprocess the data with the Min–Max normalization method.
8. Set the batch size and the number of nodes of the network; choose MSE as the loss function and RMSE for optimization.
9. Train the model: for i = 1 to iter, tune the weights of the model to minimize the loss function; end.
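Steps 1–5 of Algorithm 1 can be illustrated with an untrained, standard-library Python forward pass. This is a hedged sketch under several assumptions rather than the authors' implementation: each component network is a single tanh dense layer, the coupling layer is a bidirectional vanilla tanh RNN standing in for the Bi-LSTM (to keep the example short), the final hidden states of both directions are concatenated, and the feature size, hidden size, and all names are hypothetical. Training (steps 7–9) and the dropout layer are omitted.

```python
import math
import random
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]
rng = random.Random(0)  # fixed seed so the untrained sketch is reproducible


def init_matrix(rows: int, cols: int) -> List[Vector]:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def dense(W: List[Vector], b: Vector, x: Sequence[float],
          act: Callable[[float], float] = math.tanh) -> Vector:
    """Fully connected layer act(W x + b)."""
    return [act(sum(w * v for w, v in zip(row, x)) + bj) for row, bj in zip(W, b)]


# Steps 1-3: one component network per component, listed in airflow order;
# each reads only its own measured features (after Table 1).
COMPONENTS: Dict[str, List[str]] = {
    "inlet": ["T0", "P0"],
    "fan": ["T25", "P25", "Nl"],
    "compressor": ["P3", "Nh"],
    "combustor": ["Wf"],
    "hp_turbine": ["Nh"],
    "lp_turbine": ["T6", "Nl"],
}
FEAT = 4  # hypothetical size of each component network's output feature
HID = 3   # hypothetical hidden size of each coupling direction

# Step 5: component learning layer = one small FNN (a single dense layer here) per component.
component_nets: Dict[str, Tuple[List[Vector], Vector]] = {
    name: (init_matrix(FEAT, len(feats)), [0.0] * FEAT) for name, feats in COMPONENTS.items()
}

# Coupling layer: a bidirectional *vanilla tanh RNN* stands in for the Bi-LSTM.
W_x_fwd, W_h_fwd = init_matrix(HID, FEAT), init_matrix(HID, HID)
W_x_bwd, W_h_bwd = init_matrix(HID, FEAT), init_matrix(HID, HID)

# Mapping layer: linear FNN from the coupling feature to (normalized) thrust.
W_map, b_map = init_matrix(1, 2 * HID), [0.0]


def rnn_last_state(seq: Sequence[Vector], W_x: List[Vector], W_h: List[Vector]) -> Vector:
    """Run a tanh RNN over the sequence and return its final hidden state."""
    h = [0.0] * HID
    for x in seq:
        h = [math.tanh(sum(a * v for a, v in zip(rx, x)) + sum(c * u for c, u in zip(rh, h)))
             for rx, rh in zip(W_x, W_h)]
    return h


def predict(sample: Dict[str, float]) -> float:
    # Step 4: component outputs -> coupling layer -> mapping layer -> target.
    seq = [dense(*component_nets[name], [sample[f] for f in feats])
           for name, feats in COMPONENTS.items()]  # dicts keep insertion (airflow) order
    coupling = rnn_last_state(seq, W_x_fwd, W_h_fwd) + rnn_last_state(seq[::-1], W_x_bwd, W_h_bwd)
    return dense(W_map, b_map, coupling, act=lambda z: z)[0]


sample = {"T0": 0.1, "P0": 0.2, "T25": 0.3, "P25": 0.4, "P3": 0.5,
          "T6": 0.6, "Nl": 0.7, "Nh": 0.8, "Wf": 0.9}  # dummy normalized inputs
print(predict(sample))  # untrained, so the value itself is meaningless
```

The point of the sketch is the wiring: per-component inputs, a sequence ordered by airflow, a two-direction coupling pass, and a single regression output. Swapping the vanilla RNN for an LSTM cell would follow Equations (1)–(6).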
while Testing 2 simulates aircraft engine states with varying degrees of performance degThe dataset used to verify the given method was collected from the performance radation. simulation of a two-spool mixing exhaust turbofan. The engine performance simulation The training dataset characterizes the entire operation of an engine from start to stop. utilized component-level modeling where the thermodynamic cycle in each component To include as many engine states as possible, the training dataset has 10,001 samples. Testis simulated using a given component characteristic map. Figure 5 shows a sketch of ing 1 has 40,044 samples and characterizes engine operation under four different control the component-level model of the engine, which includes an inlet, a fan, a high-pressure laws, compared to the training dataset. Testing 2 has 10,001 samples and is used to verify compressor (HPC), a combustor, a high-pressure turbine (HPT), a low-pressure turbine model aaccuracy under engine degradation state. (LPT), mixing chamber, a bypass, and a nozzle. Figure 5. A sketch of the component-level model of the two-spool mixing exhaust turbofan. Figure 5. A sketch of the component-level model of the two-spool mixing exhaust turbofan. The engine performance simulation generated five datasets; one is the training dataset The are features fordatasets. model training are clustered based onmaps Section 2.3,toas presented in and four testing The component characteristic used generate these Table 1. Since the selected features have difference magnitudes, they are normalized using datasets are different. High-pressure components work in harsh environments and have a Min–Max normalization. To evaluate the effectiveness of the presented architecture, multiple thrust predicting models are developed based on the conventional neural network architecture (Figure 6a), simplified block neural network architecture (Figure 6b), and hybrid neural network architecture (Figure 6c). 
The features for model training are clustered based on Section 2.3, as presented in Table 1. Since the selected features have different magnitudes, they are normalized using Min–Max normalization. To evaluate the effectiveness of the presented architecture, multiple thrust predicting models are developed based on the conventional neural network architecture (Figure 6a), the simple block neural network architecture (Figure 6b), and the hybrid neural network architecture (Figure 6c). The number of nodes in each predicting model is close to the training sample size.
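Min–Max normalization rescales each feature to [0, 1] using its minimum and maximum, which puts temperatures in kelvin, pressures in kPa, and rotor speeds on a comparable scale. The sketch below assumes, as is common practice but not stated in the text, that the minimum and maximum are taken from the training dataset and reused for the testing datasets; the class name `MinMax` and the toy feature values are hypothetical.

```python
import numpy as np


class MinMax:
    """Per-feature Min-Max scaling to [0, 1], fitted on training data only."""

    def fit(self, x_train: np.ndarray) -> "MinMax":
        self.min_ = x_train.min(axis=0)
        span = x_train.max(axis=0) - self.min_
        # Guard against constant features (zero range) to avoid division by zero.
        self.span_ = np.where(span == 0, 1.0, span)
        return self

    def transform(self, x: np.ndarray) -> np.ndarray:
        return (x - self.min_) / self.span_

    def inverse_transform(self, x_scaled: np.ndarray) -> np.ndarray:
        return x_scaled * self.span_ + self.min_


# Toy training rows: [total temperature (K), total pressure (kPa), relative rotor speed]
x_train = np.array([[288.15, 101.3, 0.60],
                    [300.00, 150.0, 0.95],
                    [295.00, 120.0, 0.80]])
scaler = MinMax().fit(x_train)
x_train_scaled = scaler.transform(x_train)
# A test row outside the training range maps outside [0, 1] (here the third feature).
x_test_scaled = scaler.transform(np.array([[290.0, 110.0, 0.97]]))
```

Because the degraded testing datasets are produced with different maps, their scaled values can fall slightly outside [0, 1], as the last line illustrates.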
Considering the number of training samples, a dropout layer with a drop rate of 0.3 is added before the mapping layer in every predicting model to prevent overfitting. Table 2 presents the configuration of the predicting models. The models AN-1, AN-2, AN-3, and LS-1 are developed based on the conventional neural network architecture; the models Str-1, Str-2, Str-3, and Str-4 on the simple block architecture; and the models Str-5, Str-6, and Str-7 on the hybrid neural network architecture.

Table 1. The input features for the predicting model.
Type of Parameter                      Parameter/Unit                Cross-Section of Component/Acronym   Component Network
Control/controlled parameter           Total temperature/K           Outlet of inlet/T0                   Inlet network
                                                                     Outlet of fan/T25                    Fan network
                                                                     Exhaust gas temperature/T6           LP turbine network
                                       Total pressure/kPa            Outlet of inlet/P0                   Inlet network
                                                                     Outlet of fan/P25                    Fan network
                                                                     Outlet of compressor/P3              Compressor network
                                       Low-pressure rotor speed/Nl                                        Fan network, LP turbine network
                                       High-pressure rotor speed/Nh                                       Compressor network, HP turbine network
                                       Fuel flow rate/Wf                                                  Combustor network
Aircraft engine performance parameter  Thrust/Fn                                                          Target parameter

Figure 6. The architectures for the thrust predicting model. (a) Conventional network architecture.
(b) Simple block architecture. (c) Hybrid neural network architecture.

Table 2. The configuration of the thrust predicting model.
Model Name  Architecture  First Layer     Second Layer  Other Layers         Total Number of Nodes
AN-1        Conventional  FC (100)        FC (100)      \                    11,201
AN-2        Conventional  FC (100)        FC (60)       FC (60)              10,781
AN-3        Conventional  FC (100)        FC (50)       FC (50)-FC (40)      10,681
Str-1       Simple block  BiL (4)         BiL (32)      \                    11,713
Str-2       Simple block  FC (50)-FC (4)  BiL (32)      \                    11,513
Str-3       Simple block  FC (100)        BiL (30)      Lambda               10,101
Str-4       Simple block  BiL (2)         BiL (32)      \                    9821
Str-5       Hybrid        FC (4)          BiL (32)      \                    10,149
Str-6       Hybrid        FC (8)          BiL (32)      \                    10,313
Str-7       Hybrid        FC (4)          BiL (48)      \                    13,857
LS-1        Conventional  BiL (12)        BiL (24)      Sequence Length (9)  11,569

For a model developed with the conventional architecture, all input features are combined into one vector and fed into the model. In contrast, for models developed using the other two architectures, the input features are grouped into multiple vectors. The input and output layers of each model are shown in Figure 7. In Table 2, FC represents a fully connected neural network layer, and BiL represents a bidirectional long short-term memory (Bi-LSTM) network layer. The value in parentheses indicates the hyperparameter of the network layer. For example, the AN-3 model stacks four fully connected layers whose output nodes are 100, 50, 50, and 40, respectively. For models developed using the simple block architecture or the hybrid neural network architecture, the first-layer configuration in Table 2 applies to each substructure in the first layer of the model, i.e., to each network block or component network.
For instance, in the Str-5 model, every component network (the inlet network, the fan network, the compressor network, etc.) is a fully connected neural network layer, listed as FC (4) in Table 2.

Figure 7. The input layers and output layers for each model. (a) AN-1, AN-2, AN-3. (b) Str-5, Str-6, Str-7. (c) LS-1. (d) Str-1, Str-2, Str-3, Str-4. The arrows in the figure indicate the direction of data transmission.
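The data flow of the hybrid architecture (component networks, then a Bi-LSTM coupling layer, then a fully connected mapping layer) can be illustrated with an untrained NumPy forward pass sized like Str-5 in Table 2 (FC (4) component networks, BiL (32)). The feature grouping follows Table 1 and reproduces the 2, 3, 2, 1, 1, 2 input widths of Figure 7b. Everything else is an assumption made for illustration: the tanh activation of the component networks, feeding the six component outputs to the Bi-LSTM as a length-6 sequence, using the final hidden state of each direction, the random initialization, and the names `HybridThrustModel` and `COMPONENT_INPUTS`. It is not the authors' implementation and performs no training.

```python
import numpy as np

# Input grouping read from Table 1 (widths 2, 3, 2, 1, 1, 2 as in Figure 7b).
COMPONENT_INPUTS = {
    "inlet": ["T0", "P0"],
    "fan": ["T25", "P25", "Nl"],
    "compressor": ["P3", "Nh"],
    "combustor": ["Wf"],
    "hp_turbine": ["Nh"],
    "lp_turbine": ["T6", "Nl"],
}


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class HybridThrustModel:
    """Untrained forward pass: component FNNs -> Bi-LSTM coupling -> FNN mapping."""

    def __init__(self, comp_nodes=4, lstm_units=32, seed=0):
        rng = np.random.default_rng(seed)
        self.u = lstm_units
        # One dense layer per component network, FC(comp_nodes): (weights, bias).
        self.comp = {
            name: (rng.normal(0, 0.5, (comp_nodes, len(feats))), np.zeros(comp_nodes))
            for name, feats in COMPONENT_INPUTS.items()
        }

        # Separate LSTM weights (input, recurrent, bias) for each direction.
        def lstm_params():
            return (rng.normal(0, 0.1, (4 * lstm_units, comp_nodes)),
                    rng.normal(0, 0.1, (4 * lstm_units, lstm_units)),
                    np.zeros(4 * lstm_units))

        self.fwd, self.bwd = lstm_params(), lstm_params()
        # Mapping layer: a single linear output node (scaled thrust).
        self.w_out = rng.normal(0, 0.1, 2 * lstm_units)
        self.b_out = 0.0

    def _lstm(self, seq, params):
        """Run one LSTM direction over the sequence; return the final hidden state."""
        W, U, b = params
        h = np.zeros(self.u)
        c = np.zeros(self.u)
        for x in seq:
            i, f, g, o = np.split(W @ x + U @ h + b, 4)  # input, forget, cell, output
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
            h = sigmoid(o) * np.tanh(c)
        return h

    def predict(self, sample: dict) -> float:
        # Each component network sees only its own features (Table 1).
        seq = [np.tanh(W @ np.array([sample[k] for k in COMPONENT_INPUTS[name]]) + b)
               for name, (W, b) in self.comp.items()]
        # Bi-LSTM coupling: forward pass plus a pass over the reversed sequence.
        h = np.concatenate([self._lstm(seq, self.fwd), self._lstm(seq[::-1], self.bwd)])
        # Dropout (rate 0.3) would act on h during training; it is inactive at inference.
        return float(self.w_out @ h + self.b_out)


# Usage with made-up, already normalized feature values.
sample = {"T0": 0.5, "P0": 0.4, "T25": 0.6, "P25": 0.5, "Nl": 0.7,
          "P3": 0.6, "Nh": 0.8, "Wf": 0.55, "T6": 0.65}
model = HybridThrustModel()
thrust_scaled = model.predict(sample)  # arbitrary value: the weights are untrained
```

Note that Nl and Nh each feed two component networks, so the six input groups hold 11 entries drawn from only 9 distinct measured features.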
3.2. Performance Metric
A scoring indicator, the root mean squared error (RMSE), was used to measure the loss during training and is denoted $e_{\mathrm{rms}}(i)$. The RMSE is defined as follows:

$$e_{\mathrm{rms}}(i) = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(Y_i - \hat{Y}_i\right)^2} \tag{7}$$

The maximum relative deviation (MRD) and the average relative deviation (ARD) were chosen as the indices for evaluating the performance of the presented model:

$$RD_i = \frac{V_p - V_t}{V_t}, \quad i = 1, 2, \ldots, N \tag{8}$$

$$MRD = \max\left(RD_i\right), \quad i = 1, 2, \ldots, N \tag{9}$$

$$ARD = \frac{1}{N}\sum_{i=1}^{N} RD_i \tag{10}$$

where $N$ represents the number of instances in the testing dataset, and $V_p$ and $V_t$ denote the predicted value and the test data, respectively; the values are divided by the maximum value of the dataset. $RD_i$ is the relative deviation of the $i$th instance of the testing dataset, MRD denotes the maximum relative deviation over the testing dataset, and ARD denotes the average relative deviation over the testing dataset.

3.3. Results and Discussion
Each model is trained five times on the training dataset to reduce the effect of randomness in the training process. The maximum, minimum, mean, and standard deviation of ARD and MRD over the five runs are computed and rounded to four decimal places. The results of the models on the four testing datasets are presented in Tables 3 and 4, and the trends of the model predictions on the four testing datasets are depicted in Figure 8. Figure 9 displays a histogram of the average MRD of the predicting models on the four testing datasets. Notably, the performance of the LS-1 model on Testing 1 differs greatly from that of the other models, so its result is not shown in Figure 9a. For ease of discussion, the models generated with the conventional architecture are collectively referred to as the conventional models, while the other models are collectively referred to as the structural models.
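Equations (8)–(10) and the five-run summary statistics can be computed as in the following sketch. The function names are hypothetical; the relative deviation is kept signed, exactly as Eq. (8) is printed (wrap `rd` in `np.abs` if deviations of both signs are meant to count), and the use of the sample standard deviation (`ddof=1`) is our assumption, since the text does not say which estimator was used.

```python
import numpy as np


def relative_deviation(v_pred: np.ndarray, v_true: np.ndarray) -> np.ndarray:
    """Eq. (8): RD_i = (V_p - V_t) / V_t for each of the N test instances."""
    return (v_pred - v_true) / v_true


def mrd_ard(v_pred, v_true) -> tuple[float, float]:
    """Eqs. (9) and (10): maximum and average relative deviation, in percent."""
    rd = relative_deviation(np.asarray(v_pred, float), np.asarray(v_true, float))
    return 100 * rd.max(), 100 * rd.mean()


def summarize_runs(values) -> dict:
    """Max, min, mean, and standard deviation over repeated trainings (five in the study)."""
    v = np.asarray(values, float)
    return {"max": v.max(), "min": v.min(), "mean": v.mean(), "std": v.std(ddof=1)}


# Toy example: three test instances with RD = +2%, -2%, +1%.
mrd, ard = mrd_ard([102.0, 196.0, 404.0], [100.0, 200.0, 400.0])
```

The Std. columns of Tables 3 and 4 appear to be on a fractional scale (for example, 0.0048 next to ARD values between 0.3610% and 1.3633%), so a standard deviation computed from percentages would have to be divided by 100 before comparison.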
Table 3. Results of the thrust predicting models on the testing datasets (Testing 1 and Testing 2-1).
         ARD                                    MRD
Model    Max %    Min %    Std.     Mean %      Max %    Min %    Std.     Mean %
Testing Dataset 1
AN-1     1.3633   0.3610   0.0048   0.7324      2.3970   1.0077   0.0065   1.5453
AN-2     1.332    0.2609   0.0045   0.8602      3.3842   0.7831   0.0101   2.1747
AN-3     2.3639   0.8563   0.0068   1.534       6.0953   2.0993   0.0166   3.3400
Str-1    2.0158   0.2241   0.0070   1.3764      3.8817   0.5153   0.0149   2.7367
Str-2    2.9108   0.4683   0.0098   1.2643      4.0064   1.2529   0.0117   2.4521
Str-3    1.2415   0.3763   0.0035   0.6599      2.0010   1.0146   0.0037   1.4305
Str-4    2.5134   0.2215   0.0101   1.4086      4.0629   0.7235   0.0133   2.6834
Str-5    1.6190   0.2531   0.0053   0.8957      2.8639   0.7364   0.0092   2.0084
Str-6    2.6263   0.5867   0.0081   1.9309      5.0158   1.2956   0.0139   3.3564
Str-7    2.0936   0.0780   0.0054   1.3602      3.4931   1.7716   0.0067   2.6448
LS-1     1.2987   0.3633   0.0042   0.9355      31.642   29.221   0.0117   30.407
Testing Dataset 2-1
AN-1     3.7842   2.6882   0.0045   3.3160      7.7096   6.0131   0.0071   6.9651
AN-2     2.9477   1.0322   0.0078   2.3805      6.9304   2.7426   0.0164   5.3353
AN-3     4.2153   2.1237   0.0095   3.1021      10.203   5.1283   0.1871   7.1988
Str-1    5.4999   1.2474   0.0168   2.6325      7.8605   2.2850   0.0223   4.9320
Str-2    4.5492   0.2893   0.0172   2.5289      7.2269   1.3958   0.0250   4.6247
Str-3    3.1393   1.2299   0.0076   2.0684      6.9090   2.7207   0.0157   4.9956
Str-4    2.5373   0.6163   0.0090   1.5616      6.0721   1.6456   0.0183   3.9637
Str-5    2.2245   0.8123   0.0055   1.3605      5.4286   1.8546   0.0137   3.3643
Str-6    3.6926   0.6533   0.0154   2.0156      8.0805   1.8475   0.0305   4.7318
Str-7    3.6972   1.4409   0.0101   2.3213      4.8455   3.2047   0.0071   4.1794
LS-1     4.0474   1.6943   0.00916  2.8629      8.0474   4.1085   0.0145   6.1554

Table 4. Results of the thrust predicting models on the testing datasets (Testing 2-2 and Testing 2-3).
         ARD                                    MRD
Model    Max %    Min %    Std.     Mean %      Max %    Min %    Std.     Mean %
Testing Dataset 2-2
AN-1     3.8091   2.7000   0.0044   3.3428      7.6944   6.0316   0.0070   6.9876
AN-2     3.0014   1.0153   0.0081   2.4119      6.9846   2.8165   0.0162   5.5582
AN-3     4.2774   2.1498   0.0096   3.1463      10.229   5.1809   0.0186   7.2430
Str-1    5.5318   1.2685   0.0168   2.6604      7.8891   2.3098   0.0224   4.9705
Str-2    4.6903   0.3445   0.0171   2.6489      7.2254   1.2766   0.0252   4.7164
Str-3    3.1902   1.3591   0.0075   2.1474      6.9461   2.8543   0.0154   5.0725
Str-4    2.8107   0.5281   0.0130   1.6001      6.3564   1.5174   0.0196   4.0076
Str-5    2.4076   0.8850   0.0063   1.4650      5.6173   1.9695   0.0143   3.4874
Str-6    3.7327   0.6196   0.0154   2.0597      8.1307   1.9709   0.0301   4.8170
Str-7    3.7408   1.3976   0.0103   2.3859      4.9623   3.1957   0.0075   4.2571
LS-1     4.0642   1.7461   0.0090   2.8997      8.0599   4.1571   0.0143   6.1853
Testing Dataset 2-3
AN-1     3.9344   2.8795   0.0042   3.5255      7.8176   6.1732   0.0069   7.1332
AN-2     3.1761   1.0413   0.0087   2.5571      7.1321   2.9314   0.0163   5.7190
AN-3     4.4586   2.3525   0.0095   3.3026      10.287   5.2857   0.0183   7.3590
Str-1    5.7983   1.4903   0.0172   2.8747      8.1181   2.4377   0.0229   5.1395
Str-2    4.8208   0.0449   0.0171   2.7945      7.2504   1.4033   0.0246   4.8515
Str-3    3.4819   1.5350   0.0079   2.3974      7.1504   2.9540   0.0158   5.2498
Str-4    3.1511   0.3398   0.0118   1.7532      6.5569   1.4514   0.0194   4.2091
Str-5    2.5754   0.8158   0.0072   1.5926      5.7334   2.0804   0.0146   3.5879
Str-6    3.8069   0.6172   0.0155   2.1314      8.1673   2.0353   0.0301   4.8812
Str-7    3.8111   1.3497   0.0106   2.4564      5.0550   3.1940   0.0078   4.3224
LS-1     4.3430   1.9969   0.0091   3.1685      8.2561   4.3434   0.0144   6.3692

Figure 8. The trend of model thrust prediction on testing datasets. (a) Testing 1. (b) Testing 2-1. (c) Testing 2-2. (d) Testing 2-3.

Figure 9. Histogram of the MRD of the thrust predicting models on testing datasets. (a) Testing 1. (b) Testing 2-1. (c) Testing 2-2. (d) Testing 2-3.

Table 3 shows that all models have small ARDs on Testing 1 and that their maximum and minimum MRD values are mostly below 5%. Not all structural models perform better than the conventional models on Testing 1. Because the component characteristic maps used to generate Testing 1 are identical to those used for the training dataset, good performance on Testing 1 may simply reflect how closely a model fits the training data. Once the accuracy requirement is met, it is therefore more important to evaluate the models on testing datasets that differ from the training data. Additionally, the results of the conventional models indicate that increasing the depth or complexity of the model layers does not necessarily improve model performance.

Engine degradation is a common phenomenon over an engine's service life and leads to a decrease in thrust. When a model predicts thrust in real time, the engine state represented by the input data typically differs from the engine state represented by the training data. To evaluate model performance in such situations, the models are further tested on datasets representing the engine in degraded states. Thus, model performance on Testing 2 reflects robustness.
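The robustness comparison can be checked directly against the mean MRD values reported in Tables 3 and 4. The short script below (variable and function names are ours) compares, for each testing dataset, the best conventional model with the worst structural model.

```python
# Mean MRD (%) per model, copied from Tables 3 and 4.
DATASETS = ["Testing 1", "Testing 2-1", "Testing 2-2", "Testing 2-3"]
MEAN_MRD = {
    "AN-1": (1.5453, 6.9651, 6.9876, 7.1332),
    "AN-2": (2.1747, 5.3353, 5.5582, 5.7190),
    "AN-3": (3.3400, 7.1988, 7.2430, 7.3590),
    "LS-1": (30.407, 6.1554, 6.1853, 6.3692),
    "Str-1": (2.7367, 4.9320, 4.9705, 5.1395),
    "Str-2": (2.4521, 4.6247, 4.7164, 4.8515),
    "Str-3": (1.4305, 4.9956, 5.0725, 5.2498),
    "Str-4": (2.6834, 3.9637, 4.0076, 4.2091),
    "Str-5": (2.0084, 3.3643, 3.4874, 3.5879),
    "Str-6": (3.3564, 4.7318, 4.8170, 4.8812),
    "Str-7": (2.6448, 4.1794, 4.2571, 4.3224),
}
CONVENTIONAL = ["AN-1", "AN-2", "AN-3", "LS-1"]
STRUCTURAL = [m for m in MEAN_MRD if m not in CONVENTIONAL]


def best_conventional(col: int) -> float:
    return min(MEAN_MRD[m][col] for m in CONVENTIONAL)


def worst_structural(col: int) -> float:
    return max(MEAN_MRD[m][col] for m in STRUCTURAL)


for col, name in enumerate(DATASETS):
    print(f"{name}: best conventional {best_conventional(col):.4f}%, "
          f"worst structural {worst_structural(col):.4f}%")
```

On Testing 1 the comparison goes the other way, consistent with the observation that not all structural models beat the conventional ones on undegraded data; on all three Testing 2 subsets, even the worst structural model has a lower mean MRD than the best conventional model.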
The results presented in Tables 3 and 4 show that the structural models exhibit better robustness than the conventional models. This can be attributed to the structural models integrating domain knowledge, which reduces their reliance on data. Consequently, the performance degradation of the structural models is smaller than that of the conventional models when the testing data differ significantly from the training data. Figures 8 and 9 indicate that the models perform similarly on each subset of Testing 2. On Testing 2, the mean ARD and MRD of the structural models are mostly below 5%. Comparing the Str-1, Str-2, Str-3, Str-4, and Str-5 models shows that, although the network structure used in the first layer affects model accuracy, it does not play a decisive role: for model accuracy, the architectural design of a model matters more than the type of network used within it. Overall, the Str-5 model, a structural model with the hybrid architecture, is superior to the other models in terms of robustness and stability. The Str-6 model changes the model size by adjusting the node number of the component networks, while the Str-7 model changes it by adjusting the node number of the second-layer network. Between Str-5 and Str-6, the model size differs by only 1.6%, yet the mean MRD on Testing 2 increases by more than 1 percentage point. In contrast, the Str-7 model, whose size is 36.53% larger than that of Str-5, shows an MRD increase of less than 1 percentage point. The results of the Str-6 and Str-7 models therefore suggest that enlarging the model by increasing the node number of the first layer makes the model less stable.
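The size and accuracy comparison among Str-5, Str-6, and Str-7 reduces to simple arithmetic on Table 2 and the Testing 2 columns of Tables 3 and 4; the following sketch (names are ours) reproduces it.

```python
# Total number of nodes (Table 2) and mean MRD (%) on Testing 2-1, 2-2, 2-3 (Tables 3 and 4).
NODES = {"Str-5": 10149, "Str-6": 10313, "Str-7": 13857}
MRD_T2 = {
    "Str-5": (3.3643, 3.4874, 3.5879),
    "Str-6": (4.7318, 4.8170, 4.8812),
    "Str-7": (4.1794, 4.2571, 4.3224),
}


def size_change_pct(model: str, ref: str = "Str-5") -> float:
    """Relative change in the total number of nodes, in percent."""
    return 100.0 * (NODES[model] / NODES[ref] - 1.0)


def mrd_increase_pp(model: str, ref: str = "Str-5") -> list[float]:
    """Increase in mean MRD, in percentage points, on each Testing 2 subset."""
    return [a - b for a, b in zip(MRD_T2[model], MRD_T2[ref])]


for m in ("Str-6", "Str-7"):
    print(m, f"size +{size_change_pct(m):.1f}%",
          [f"{d:+.2f}" for d in mrd_increase_pp(m)])
```

It prints a size increase of about 1.6% for Str-6 with mean-MRD increases of roughly 1.3 to 1.4 percentage points, and about 36.5% for Str-7 with increases between 0.7 and 0.9 percentage points.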
Additionally, the models perform worst on Testing 2-3 compared with the other testing datasets, suggesting that Testing 2-3 differs most from the training dataset. Figure 10 shows the thrust value difference between Testing 2-3 and the training dataset. Based on Figure 10 and the results of models Str-5 to Str-7 on Testing 2-3, it can be concluded that a 5% variation between the training and testing datasets is acceptable. Although its MRD is greatly reduced compared with Testing 1, the LS-1 model still performs poorly on Testing 2, with an MRD above 5%. The reduction could be attributed to the use of distinct component characteristic maps in the engine performance simulation, which results in lower thrust values for Testing 2 than for Testing 1: where the thrust predicted by the LS-1 model is lower than the test data, the lower thrust values of Testing 2 lie closer to the predictions, so the model performs better on Testing 2 than on Testing 1.

Figure 10. The thrust value difference between Testing 2-3 and the training dataset.

4. Conclusions
On-board engine health management (EHM) is developed to ensure aircraft engine reliability. One key component of on-board EHM is an on-board adaptive model responsible for monitoring and predicting engine performance. In this study, we present an on-board adaptive model for predicting engine thrust.
The model is built on a hybrid architecture that combines domain knowledge of the engine with neural network structure. By fusing aeroengine domain knowledge with the neural network structure, the hyperparameters of the neural networks are restricted, the interconnections between neural networks are tailored, and the data processing workload is reduced.

To evaluate the effectiveness and robustness of our hybrid architecture, we tested predicting models with different architectures on four simulation datasets. As expected, the hybrid architecture reduces the dependence of the neural network on training data. This matters because engine performance deteriorates over time, so the engine state in operation increasingly differs from the engine state used for model training. For use in an airborne system, the robustness of the on-board model is crucial; thus, the thrust predicting model built with the hybrid architecture is more suitable for EHM.
Author Contributions: Software, formal analysis, and writing–original draft preparation, Z.L.; writing–review and editing and supervision, H.X.; data curation and writing–review and editing, X.Z.; resources and writing–review and editing, Z.W. All authors have read and agreed to the published version of the manuscript.

Funding: This research was funded by the National Natural Science Foundation of China, grant number 52076180; the National Science and Technology Major Project, grant number J2019-I-0021-0020; the Science Center for Gas Turbine Project, grant number P2022-B-I-005-001; and the Fundamental Research Funds for the Central Universities.

Data Availability Statement: The data that support the findings of this study are available from the corresponding author, Hong Xiao, upon reasonable request.

Conflicts of Interest: The authors declare no conflict of interest.

References
1. Litt, J.S.; Simon, D.L.; Garg, S.; Guo, T.H.; Mercer, C.; Millar, R.; Behbahani, A.; Bajwa, A.; Jensen, D.T. A Survey of Intelligent Control and Health Management Technologies for Aircraft Propulsion Systems. J. Aerosp. Comput. Inf. Commun. 2004, 1, 543–563.
2. Kobayashi, T.; Simon, D.L. Integration of On-Line and Off-Line Diagnostic Algorithms for Aircraft Engine Health Management. J. Eng. Gas Turbines Power 2007, 129, 986–993.
3. Simon, D.L. An Integrated Architecture for On-board Aircraft Engine Performance Trend Monitoring and Gas Path Fault Diagnostics. In Proceedings of the 57th JANNAF Joint Propulsion Meeting, Colorado Springs, CO, USA, 3–7 May 2010.
4. Armstrong, J.B.; Simon, D.L. Implementation of an Integrated On-Board Aircraft Engine Diagnostic Architecture. In Proceedings of the 47th AIAA/ASME/SAE/ASEE Joint Propulsion Conference & Exhibit, San Diego, CA, USA, 31 July–3 August 2011. NASA/TM-2012-217279, AIAA-2011-5859, 2012.
5. Brunell, B.J.; Viassolo, D.E.; Prasanth, R. Model adaptation and nonlinear model predictive control of an aircraft engine. In Proceedings of the ASME Turbo Expo 2004: Power for Land, Sea and Air, Vienna, Austria, 14–17 June 2004; Volume 41677, pp. 673–682.
6. Jaw, L.C.; Mattingly, J.D. Aircraft Engine Controls: Design, System Analysis, and Health Monitoring; Schetz, J.A., Ed.; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2009; pp. 37–61.
7. Alag, G.; Gilyard, G. A proposed Kalman filter algorithm for estimation of unmeasured output variables for an F100 turbofan engine. In Proceedings of the 26th Joint Propulsion Conference, Orlando, FL, USA, 16–18 July 1990.
8. Csank, J.T.; Connolly, J.W. Enhanced Engine Performance During Emergency Operation Using a Model-Based Engine Control Architecture. In Proceedings of the AIAA/SAE/ASEE Joint Propulsion Conference, Orlando, FL, USA, 27–29 July 2015.
9. Simon, D.L.; Litt, J.S. Application of a constant gain extended Kalman filter for in-flight estimation of aircraft engine performance parameters. In Proceedings of the ASME Turbo Expo 2005: Power for Land, Sea, and Air, Reno, NV, USA, 6–9 June 2005.
10. Litt, J.S. An optimal orthogonal decomposition method for Kalman filter-based turbofan engine thrust estimation. J. Eng. Gas Turbines Power 2007, 130, 745–756.
11. Csank, J.; Ryan, M.; Litt, J.S.; Guo, T. Control Design for a Generic Commercial Aircraft Engine. In Proceedings of the 46th AIAA/SAE/ASEE Joint Propulsion Conference, Nashville, TN, USA, 25–28 July 2010.
12. Zhu, Y.Y.; Huang, J.Q.; Pan, M.X.; Zhou, W.X. Direct thrust control for multivariable turbofan engine based on affine linear parameter varying approach. Chin. J. Aeronaut. 2022, 35, 125–136.
13. Simon, D.L.; Borguet, S.; Léonard, O.; Zhang, X. Aircraft engine gas path diagnostic methods: Public benchmarking results. J. Eng. Gas Turbines Power 2013, 136, 041201–041210.
14. Chati, Y.S.; Balakrishnan, H. Aircraft engine performance study using flight data recorder archives. In Proceedings of the 2013 Aviation Technology, Integration, and Operations Conference, Los Angeles, CA, USA, 12–14 August 2013.
15. Kobayashi, T.; Simon, D.L. Hybrid neural network genetic-algorithm technique for aircraft engine performance diagnostics. J. Propuls. Power 2005, 21, 751–758.
16. Kim, S.; Kim, K.; Son, C. Transient system simulation for an aircraft engine using a data-driven model. Energy 2020, 196, 117046.
17. Wang, Z.; Zhao, Y. Data-Driven Exhaust Gas Temperature Baseline Predictions for Aeroengine Based on Machine Learning Algorithms. Aerospace 2023, 10, 17.
18. Zhao, Y.P.; Sun, J. Fast Online Approximation for Hard Support Vector Regression and Its Application to Analytical Redundancy for Aeroengines. Chin. J. Aeronaut. 2010, 23, 145–152.
19. Zhao, Y.P.; Li, Z.Q.; Hu, Q.K. A size-transferring radial basis function network for aero-engine thrust estimation. Eng. Appl. Artif. Intell. 2020, 87, 103253.
20. Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.
21. Dong, Y.; Liao, F.; Pang, T.; Su, H.; Zhu, J.; Hu, X.; Li, J. Boosting Adversarial Attacks with Momentum. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.
22. Tartakovsky, A.M.; Marrero, C.O.; Perdikaris, P. Physics-Informed Deep Neural Networks for Learning Parameters and Constitutive Relationships in Subsurface Flow Problems. Water Resour. Res. 2020, 56, e2019WR026731.
23. Yang, L.; Zhang, D.; Karniadakis, G.E. Physics-informed generative adversarial networks for stochastic differential equations. SIAM J. Sci. Comput. 2020, 42, 292–317.
24. Karniadakis, G.E.; Kevrekidis, I.G.; Lu, L.; Perdikaris, P.; Wang, S.; Yang, L. Physics-informed machine learning. Nat. Rev. Phys. 2021, 3, 422–440.
25. Van Dyk, D.A.; Meng, X.L. The art of data augmentation. J. Comput. Graph. Stat. 2001, 10, 1–50.
26. Bronstein, M.M.; Bruna, J.; LeCun, Y.; Szlam, A.; Vandergheynst, P. Geometric deep learning: Going beyond Euclidean data. IEEE Signal Process. Mag. 2017, 34, 18–42.
27. Cohen, T.; Weiler, M.; Kicanaoglu, B.; Welling, M. Gauge equivariant convolutional networks and the icosahedral CNN. Proc. Mach. Learn. Res. 2019, 97, 1321–1330.
28. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural network: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.
29. Robinson, H.; Pawar, S.; Rasheed, A.; San, O. Physics guided neural networks for modelling of non-linear dynamics. Neural Netw. 2022, 154, 333–345.
30. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; Adaptive Computation and Machine Learning Series; The MIT Press: Cambridge, MA, USA, 2016.
31. Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to Forget: Continual prediction with LSTM. Neural Comput. 2000, 12, 2451–2471.
32. Jacobs, R.A. Increased Rates of Convergence Through Learning Rate Adaptation. Neural Netw. 1988, 1, 295–307.
33. Duchi, J.C.; Hazan, E.; Singer, Y. Adaptive Subgradient Methods for Online Learning and Stochastic Optimization. J. Mach. Learn. Res. 2011, 12, 2121–2159.
34. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 2014 International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.