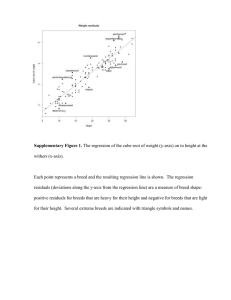

Chapter 12 (Part 2): Bivariate Regression
McGraw-Hill/Irwin Copyright © 2009 by The McGraw-Hill Companies, Inc.

• Analysis of Variance: Overall Fit
• Confidence and Prediction Intervals for Y
• Violations of Assumptions
• Unusual Observations
• Other Regression Problems

Analysis of Variance: Overall Fit

Decomposition of Variance
• To visualize the variation in the dependent variable around its mean, split each deviation into a part explained by the regression and an unexplained residual:
$$y_i - \bar{y} = (\hat{y}_i - \bar{y}) + (y_i - \hat{y}_i)$$
• The same decomposition holds for the sums of squares:
$$\sum_{i=1}^{n}(y_i - \bar{y})^2 = \sum_{i=1}^{n}(\hat{y}_i - \bar{y})^2 + \sum_{i=1}^{n}(y_i - \hat{y}_i)^2$$
• The decomposition of variance is written as SST = SSR + SSE, where SST is the total variation around the mean, SSR is the variation explained by the regression, and SSE is the unexplained or error variation.

F Statistic for Overall Fit
• For a bivariate regression, the F statistic is
$$F_{\text{calc}} = \frac{MSR}{MSE} = \frac{SSR/1}{SSE/(n-2)}$$
• For a given sample size, a larger F statistic indicates a better fit.
• Reject H0 if $F_{\text{calc}} > F_{1,n-2}$ from Appendix F for a given significance level α, or if the p-value < α.

Confidence and Prediction Intervals for Y

How to Construct an Interval Estimate for Y
• The regression line is an estimate of the conditional mean of Y.
• An interval estimate shows a range of likely values around the point estimate.
• Confidence interval for the conditional mean of Y:
$$\hat{y}_i \pm t_{n-2}\, s_e \sqrt{\frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_{j=1}^{n}(x_j - \bar{x})^2}}$$
• Prediction interval for individual values of Y:
$$\hat{y}_i \pm t_{n-2}\, s_e \sqrt{1 + \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_{j=1}^{n}(x_j - \bar{x})^2}}$$
• Here $s_e = \sqrt{SSE/(n-2)}$ is the standard error of the estimate and $t_{n-2}$ is the critical value of Student's t with n − 2 degrees of freedom.
• Prediction intervals are wider than confidence intervals because individual Y values vary more than the mean of Y; this is the extra 1 under the square root.

MegaStat's Confidence and Prediction Intervals (Figure 12.23)

Confidence and Prediction Intervals Illustrated (Figure 12.24)

Quick Rules for Confidence and Prediction Intervals
• Quick confidence interval for mean Y: $\hat{y}_i \pm t_{n-2}\, s_e/\sqrt{n}$
• Quick prediction interval for individual Y: $\hat{y}_i \pm t_{n-2}\, s_e$
• These approximations drop the $(x_i - \bar{x})^2$ term (and, for the prediction interval, the 1/n term), so they work best for large n and $x_i$ near $\bar{x}$.

Violations of Assumptions

Three Important Assumptions
1. The errors are normally distributed.
2. The errors have constant variance (i.e., they are homoscedastic).
3. The errors are independent (i.e., they are nonautocorrelated).
• The error $\varepsilon_i$ is unobservable. The residuals $e_i$ from the fitted regression give clues about violations of these assumptions.

Non-normal Errors
• Non-normality of errors is a mild violation, since the regression parameter estimates $b_0$ and $b_1$ and their variances remain unbiased and consistent.
• Confidence intervals for the parameters may be untrustworthy, because the normality assumption is used to justify using Student's t distribution.
• A large sample size would compensate.
• Outliers could pose serious problems.

Histogram of Residuals (Figure 12.25)
• Check for non-normality by creating histograms of the residuals or standardized residuals (each residual divided by its standard error).
• Standardized residuals should lie between −3 and +3 unless there are outliers.

Normal Probability Plot
• The normal probability plot tests the hypotheses
H0: Errors are normally distributed
H1: Errors are not normally distributed
• If H0 is true, the residual probability plot should be linear.

What to Do About Non-Normality?
1. Trim outliers only if they clearly are mistakes.
2. Increase the sample size if possible.
3. Try a logarithmic transformation of both X and Y.

Heteroscedastic Errors (Nonconstant Variance)
• The ideal condition is that the error magnitude is constant (i.e., the errors are homoscedastic).
• Heteroscedastic errors increase or decrease with X.
• In the most common form of heteroscedasticity, the variances of the estimators are likely to be understated.
• This results in overstated t statistics and artificially narrow confidence intervals.
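The variance decomposition, F statistic, and interval formulas from the first two sections can be checked numerically. The sketch below is a minimal pure-Python version on a small illustrative data set; the function names (`fit_line`, `anova_f`, `interval_estimates`) are hypothetical, and the critical value $t_{n-2}$ is passed in by the caller rather than looked up, since the standard library has no t distribution.

```python
import math


def fit_line(x, y):
    """Least-squares estimates (b0, b1) for y = b0 + b1*x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1


def anova_f(x, y):
    """Return (SST, SSR, SSE, F) for a bivariate regression; F has df (1, n-2)."""
    n = len(y)
    b0, b1 = fit_line(x, y)
    ybar = sum(y) / n
    yhat = [b0 + b1 * xi for xi in x]
    sst = sum((yi - ybar) ** 2 for yi in y)               # total variation
    ssr = sum((yh - ybar) ** 2 for yh in yhat)            # explained by regression
    sse = sum((yi - yh) ** 2 for yi, yh in zip(y, yhat))  # unexplained (error)
    f = (ssr / 1) / (sse / (n - 2))                       # MSR / MSE
    return sst, ssr, sse, f


def interval_estimates(x, y, x0, t_crit):
    """Point estimate at x0, a confidence interval for the mean of Y,
    and a prediction interval for an individual Y."""
    n = len(x)
    b0, b1 = fit_line(x, y)
    xbar = sum(x) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(sse / (n - 2))                 # standard error of the estimate
    y0 = b0 + b1 * x0
    core = 1 / n + (x0 - xbar) ** 2 / sxx
    ci_half = t_crit * se * math.sqrt(core)
    pi_half = t_crit * se * math.sqrt(1 + core)   # the extra 1 widens the PI
    return y0, (y0 - ci_half, y0 + ci_half), (y0 - pi_half, y0 + pi_half)


x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
sst, ssr, sse, f = anova_f(x, y)
print(f"SST={sst:.2f} SSR={ssr:.2f} SSE={sse:.2f} F={f:.2f}")
# SST=6.00 SSR=3.60 SSE=2.40 F=4.50
# 3.182 is the two-tailed 95% critical value of t with 3 degrees of freedom
y0, ci, pi = interval_estimates(x, y, 3, 3.182)
```

With these data SST = SSR + SSE holds exactly, and at $x_0 = \bar{x}$ the prediction interval is visibly wider than the confidence interval.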
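The normal probability plot described under Violations of Assumptions pairs the sorted residuals with normal scores; if H0 holds, the points fall near a straight line. The sketch below assumes Blom's plotting positions $(i - 0.375)/(n + 0.25)$ (software packages differ in the exact formula) and summarizes linearity with the correlation between normal scores and sorted residuals instead of drawing the plot. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def normal_scores(n):
    """Expected standard normal quantiles from Blom's plotting positions."""
    nd = NormalDist()
    return [nd.inv_cdf((i - 0.375) / (n + 0.25)) for i in range(1, n + 1)]


def probability_plot_points(residuals):
    """(normal score, sorted residual) pairs for a normal probability plot."""
    r = sorted(residuals)
    return list(zip(normal_scores(len(r)), r))


def plot_correlation(residuals):
    """Correlation of the plotted points; values near 1 mean a nearly linear plot."""
    pts = probability_plot_points(residuals)
    zs = [p[0] for p in pts]
    rs = [p[1] for p in pts]
    zbar, rbar = sum(zs) / len(zs), sum(rs) / len(rs)
    szr = sum((z - zbar) * (r - rbar) for z, r in zip(zs, rs))
    szz = sum((z - zbar) ** 2 for z in zs)
    srr = sum((r - rbar) ** 2 for r in rs)
    return szr / math.sqrt(szz * srr)


residuals = [-0.8, 0.6, 1.0, -0.6, -0.2]  # residuals from a fitted line
print(round(plot_correlation(residuals), 3))
```

A strongly skewed set of residuals (one large value among many zeros) gives a noticeably lower correlation than a symmetric set, which is the curvature you would see in the plot.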
Tests for Heteroscedasticity
• Plot the residuals against X. Ideally, there is no pattern in the residuals moving from left to right.
• The "fan-out" pattern of increasing residual variance is the most common pattern indicating heteroscedasticity.

What to Do About Heteroscedasticity?
• Transform both X and Y, for example, by taking logs.
• Heteroscedasticity does not bias the coefficient estimates, although it makes them less precise and, in its common fan-out form, leads to understated standard errors.

Autocorrelated Errors
• Autocorrelation is a pattern of nonindependent errors.
• In a time-series regression, each residual $e_t$ should be independent of its predecessors $e_{t-1}, e_{t-2}, \ldots, e_{t-n}$.
• In first-order autocorrelation, $e_t$ is correlated with $e_{t-1}$.
• The estimated variances of the OLS estimators are biased, resulting in confidence intervals that are too narrow and overstating the model's fit.

Runs Test for Autocorrelation
• In the runs test, count the number of sign reversals in the residuals (i.e., how often the residuals cross the zero centerline).
• If the pattern is random, the number of sign changes should be about n/2.
• Fewer than n/2 sign changes would suggest positive autocorrelation.
• More than n/2 would suggest negative autocorrelation.
• Positive autocorrelation is indicated by runs of residuals with the same sign. Negative autocorrelation is indicated by residuals that alternate in sign.

Durbin-Watson Test
• Tests for autocorrelation under the hypotheses
H0: Errors are nonautocorrelated
H1: Errors are autocorrelated
• The Durbin-Watson test statistic is
$$DW = \frac{\sum_{t=2}^{n}(e_t - e_{t-1})^2}{\sum_{t=1}^{n} e_t^2}$$
• The DW statistic ranges from 0 to 4.
- DW < 2 suggests positive autocorrelation.
- DW = 2 suggests no autocorrelation (ideal).
- DW > 2 suggests negative autocorrelation.

What to Do About Autocorrelation?
• Transform both variables using the method of first differences, in which both variables are redefined as changes:
$$\Delta x_t = x_t - x_{t-1}, \qquad \Delta y_t = y_t - y_{t-1}$$
• Autocorrelation does not bias the coefficient estimates, although it makes them less precise and, with positive autocorrelation, the usual standard errors are typically understated.

Unusual Observations

Standardized Residuals: Excel (Figure 12.32)
• Use Excel's Tools > Data Analysis > Regression.

Standardized Residuals: MINITAB (Figure 12.33)
• MINITAB gives the same general output as Excel.

Standardized Residuals: MegaStat (Figure 12.34)
• MegaStat gives the same general output as Excel.

Leverage and Influence
• A high leverage statistic indicates that the observation is far from the mean of X.
• These observations are influential because they are at the "end of the lever."
• The leverage for observation i is denoted $h_i$:
$$h_i = \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_{j=1}^{n}(x_j - \bar{x})^2}$$
• A leverage that exceeds 3/n is unusual (Figure 12.35).

Studentized Deleted Residuals
• Studentized deleted residuals are another way to identify unusual observations.
• A studentized deleted residual whose absolute value is 2 or more may be considered unusual.
• A studentized deleted residual whose absolute value is 3 or more is an outlier.

Other Regression Problems

Outliers
• Outliers may be caused by
- an error in recording data
- impossible data
- an observation that has been influenced by an unspecified "lurking" variable that should have been controlled but wasn't.
• To fix the problem,
- delete the observation(s) if they are recording errors
- delete impossible data
- formulate a multiple regression model that includes the lurking variable.

Model Misspecification
• If a relevant predictor has been omitted, then the model is misspecified.
• Use multiple regression instead of bivariate regression.

Ill-Conditioned Data
• Well-conditioned data values are of the same general order of magnitude.
• Ill-conditioned data have unusually large or small data values and can cause loss of regression accuracy or awkward estimates.
• Avoid mixing magnitudes by rescaling your data (for example, expressing values in thousands) before running the regression.

Spurious Correlation
• In a spurious correlation, two variables appear related because of the way they are defined.
• This problem is called the size effect or the problem of totals.

Model Form and Variable Transforms
• Sometimes a nonlinear model is a better fit than a linear model.
• Excel offers many model forms.
• Variables may be transformed (e.g., with logarithmic or exponential functions) to provide a better fit.
• Log transformations can reduce heteroscedasticity.
• Nonlinear models may be difficult to interpret.
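As one instance of a variable transform, a power model $Y = aX^b$ becomes linear after taking logs of both variables: $\ln Y = \ln a + b \ln X$. The sketch below fits that model by ordinary least squares on the logged data; it assumes every X and Y value is positive, and `fit_power_model` is a hypothetical name.

```python
import math


def fit_power_model(x, y):
    """Fit Y = a * X**b by least squares on ln(Y) = ln(a) + b*ln(X).
    Every x and y must be positive."""
    lx = [math.log(v) for v in x]
    ly = [math.log(v) for v in y]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    b = (sum((u - mx) * (v - my) for u, v in zip(lx, ly))
         / sum((u - mx) ** 2 for u in lx))
    a = math.exp(my - b * mx)   # back-transform the intercept
    return a, b


x = [1, 2, 4, 8, 16]
y = [3 * v ** 2 for v in x]     # exact power law, so the fit recovers a=3, b=2
a, b = fit_power_model(x, y)
print(round(a, 6), round(b, 6))  # 3.0 2.0
```

Note that this minimizes squared errors on the log scale, not on the original scale, which is part of why log-transformed models are interpreted in terms of percentage changes.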
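The runs test, Durbin-Watson statistic, and first differences from the Violations of Assumptions section reduce to a few lines of arithmetic on time-ordered residuals or data. The sketch below assumes the residuals are already in time order; the function names are hypothetical, zero residuals are skipped when counting sign changes (a design choice), and DW critical values must still come from a table.

```python
def sign_changes(residuals):
    """Count how often consecutive residuals cross zero (zeros are skipped)."""
    signs = [r > 0 for r in residuals if r != 0]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


def durbin_watson(residuals):
    """DW = sum_{t=2}^{n} (e_t - e_{t-1})^2 / sum_{t=1}^{n} e_t^2, between 0 and 4."""
    num = sum((e1 - e0) ** 2 for e0, e1 in zip(residuals, residuals[1:]))
    den = sum(e ** 2 for e in residuals)
    return num / den


def first_differences(values):
    """Redefine a series as changes: d_t = v_t - v_{t-1} (one observation is lost)."""
    return [v1 - v0 for v0, v1 in zip(values, values[1:])]


positive = [1, 2, 1, 1, -1, -2, -1, -1]     # long runs of the same sign
alternating = [1, -1, 1, -1, 1, -1, 1, -1]  # sign flips every period
print(sign_changes(positive), round(durbin_watson(positive), 3))        # 1 0.571
print(sign_changes(alternating), round(durbin_watson(alternating), 3))  # 7 3.5
```

With n = 8, n/2 = 4: the first series has far fewer sign changes and DW well below 2 (positive autocorrelation), while the second has more sign changes and DW above 2 (negative autocorrelation). Applying `first_differences` to both X and Y gives the transformed series for the remedy described above.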
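The leverage, standardized residual, and studentized deleted residual checks from Unusual Observations can be computed directly from the bivariate formulas. The sketch below is illustrative, not the output format of Excel, MINITAB, or MegaStat. It assumes that the standardized residual divides $e_i$ by $s_e\sqrt{1-h_i}$, that the deleted standard error uses n − 3 degrees of freedom (two estimated coefficients), and the conventional cutoffs of 2 (unusual) and 3 (outlier) for the studentized deleted residual; the leverage cutoff factor defaults to the 3/n rule stated above. `diagnostics` is a hypothetical name and the data are made up.

```python
import math


def diagnostics(x, y, lev_cutoff_factor=3.0):
    """Leverage, standardized residuals, and studentized deleted residuals
    for the least-squares line y = b0 + b1*x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b1 = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    b0 = ybar - b1 * xbar
    e = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    sse = sum(ei ** 2 for ei in e)
    se = math.sqrt(sse / (n - 2))                  # standard error of the estimate
    rows = []
    for xi, ei in zip(x, e):
        h = 1 / n + (xi - xbar) ** 2 / sxx         # leverage h_i
        r = ei / (se * math.sqrt(1 - h))           # standardized residual
        # SSE with observation i deleted, divided by its n - 3 degrees of freedom
        s_del = math.sqrt((sse - ei ** 2 / (1 - h)) / (n - 3))
        t = ei / (s_del * math.sqrt(1 - h))        # studentized deleted residual
        rows.append({"h": h, "std_resid": r, "stud_del": t,
                     "high_leverage": h > lev_cutoff_factor / n,
                     "unusual": abs(t) >= 2, "outlier": abs(t) >= 3})
    return rows


x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 20]
y = [3, 5, 6, 9, 10, 13, 14, 17, 18, 41]
rows = diagnostics(x, y)
for xi, row in zip(x, rows):
    print(xi, round(row["h"], 3), round(row["stud_del"], 2), row["high_leverage"])
```

Here x = 20 sits far from $\bar{x} = 6.5$, so only that observation exceeds the 3/n = 0.3 leverage cutoff. A useful sanity check is that the leverages always sum to 2 in a bivariate regression.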