See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/346967657

ANALOG SEEKrets - DC to daylight

Book · March 2011

1 author:

Leslie Green

Thruvision Ltd

All content following this page was uploaded by Leslie Green on 12 December 2020.

ANALOG SEEKrets

DC to daylight

… a master class in electronics design

Leslie Green ACGI CEng MIEE

Copyright © Leslie Green, 2007.

All rights reserved.

The author, Leslie Green, asserts his moral rights.

No part of this document may be translated, transmitted, transcribed, reproduced or copied by any means (except as allowed under copyright law), without prior written agreement from the copyright holder.

Contact: logbook@lineone.net

www.logbook.freeserve.co.uk/seekrets

PUBLISHER

©

Future Science Research Press,

30 High Street, Didcot, Oxfordshire,

OX11 8EG, United Kingdom.

Printed and bound in the United Kingdom by CPI Antony Rowe, Eastbourne.

First Printing: March 2007

Second Printing: October 2007 (with minor corrections)

PDF version: March 2011

Cataloguing-in-publication data

Green, Leslie

1957 - …

Analog Seekrets, DC to daylight.

ISBN 978-0-9555064-0-6

621.3815

TK7867

SAFETY NOTICE

No part of this text shall be used as a reason for breaking established safety procedures. In the unlikely event of a conflict between this text and established safety procedures, consult the relevant responsible authority for guidance.

LEGAL NOTICE

Whilst every effort has been made to minimise errors in this text, the author and publisher specifically disclaim any liability to anyone for any errors or omissions which remain. The reader is required to exercise good judgement when applying ideas or information from this book.

DEVICE DATA

Where specific manufacturers’ data has been quoted, this is purely for the purpose of specific illustration. The reader should not infer that items mentioned are being endorsed or condemned, or any shade of meaning in between.

ACKNOWLEDGEMENTS

This book would not have been possible without the assistance of over 100 personally owned text books, boxes full of published papers, and years of both assistance and debate with colleagues.

All SPICE simulations were done using SIMetrix v2.5.

Mathematical simulations and plots were done using Mathcad 13 (Mathsoft) and MS Excel 2003.

Word processing was done using MS Word 2003 on an AMD Athlon 64 X2 4200+ processor with 2 GB of RAM. PDF output was produced using PDF995 by Software995.

On the front cover is an F-band (90 GHz − 140 GHz) E-H tuner from Custom Microwave Inc. On the rear cover is a 2D field plot of the cross-section of a round pipe in a square conduit done using 2DField (see the author’s website). The unusual RC circuit on the rear cover gives a maximum voltage gain of just over 15%. Ordinary RC circuits give less than unity voltage gain.

CONTENTS

PREFACE

CH1: introduction

CH2: an advanced class
2.1 The Printed Word
2.2 Design Concepts
2.3 Skills
2.4 The Qualitative Statement
2.5 The Role of the Expert
2.6 The Professional
2.7 Reliability
2.8 Engineering Theory

CH3: tolerancing
3.1 Selection Tolerance & Preferred Values
3.2 Drift
3.3 Engineering Compromises
3.4 Combining Tolerances
3.5 Monte Carlo Tolerancing
3.6 Experimental Design Verification
3.7 The Meaning of Zero

CH4: the resistor .................................................................................................................................. 31

4.1 Resistor Types ......................................................................................................................... 31

4.2 Film Resistors........................................................................................................................... 32

4.3 Pulsed Power ........................................................................................................................... 34

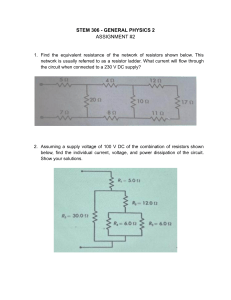

4.4 Simple Design Exercises ......................................................................................................... 37

4.5 Resistor Matching .................................................................................................................... 38

4.6 Precision Resistance ............................................................................................................... 41

4.7 Johnson Noise.......................................................................................................................... 44

4.8 Excess Noise............................................................................................................................ 45

4.9 Preferred Resistor Ratios ........................................................................................................ 47

4.10 4-Terminal Resistors.............................................................................................................. 49

CH5: the potentiometer
5.1 Types
5.2 Temperature and Time Stability
5.3 Analysis
5.4 Stability of p

CH6: the capacitor
6.1 Basics
6.2 Dielectric Constant
6.3 Capacitor Types
6.4 Trimmers
6.5 Size matters
6.6 Class X
6.7 Resistive Imperfections
6.8 Dielectric Absorption
6.9 Noise
6.10 Switched Capacitors
6.11 Design Maintenance

CH7: the inductor
7.1 Comparison
7.2 Losses
7.3 Self-Inductance
7.4 Resonance & Q
7.5 Air-Cored Inductors
7.6 Mutual Inductance
7.7 Peaking
7.8 Simple Filters
7.9 Magnetic Fields
7.10 Transformers and Inductive Voltage Dividers

CH8: the diode
8.1 Historical Overview
8.2 The Silicon Diode
8.3 The Germanium Rectifier
8.4 The Light Emitting Diode (LED)
8.5 The Rectifier diode
8.6 The Schottky Diode
8.7 The Zener (Voltage Regulator) Diode
8.8 The Varicap (Varactor) diode
8.9 The PIN diode
8.10 The Step Recovery Diode (SRD)
8.11 The Tunnel Diode
8.12 The Gunn Diode
8.13 The Synchronous Rectifier
8.14 Shot Noise

CH9: the transistor
9.1 Historical Overview
9.2 Saturated Switching
9.3 Amplification
9.4 RF Switches
9.5 Data Sheets
9.6 Differential Pair Amplifiers
9.7 The Hybrid-π Model
9.8 The JFET
9.9 The MOSFET
9.10 The IGBT (Insulated Gate Bipolar Transistor)

CH10: the opamp
10.1 The Rules
10.2 Gain
10.3 Clamping
10.4 Instability
10.5 Basic Amplifiers
10.6 Compound Amplifiers
10.7 Differential Amplifiers
10.8 Current Feedback
10.9 Imperfections
10.10 The Schmitt Trigger

CH11: the ADC and DAC
11.1 Introduction to Converters
11.2 Non-Linear DACs
11.3 DAC Glitches
11.4 Dithering An ADC
11.5 Characterising ADCs
11.6 PCB Layout Rules
11.7 The Sample & Hold

CH12: the relay
12.1 Benefits of Relays
12.2 Contact Types
12.3 Reed-Relays
12.4 Relay Coils
12.5 Drop-Out
12.6 Thermal problems
12.7 Small-Signal Switching
12.8 Contact Bounce
12.9 Coil Drive Circuits
12.10 AC/DC Coupling

CH13: rating and de-rating
13.1 Introduction to Derating
13.2 Safety Ratings
13.3 More Derating
13.4 Derating Table

CH14: circuit principles
14.1 Introduction
14.2 The Bootstrap
14.3 Guarding & Cross-Talk
14.4 Shielding
14.5 The Ground Loop
14.6 The Switched-Mode Power Supply
14.7 The Oscillator
14.8 Transistor-Level Design

CH15: measurement equipment
15.1 The Moving Coil Meter
15.2 The Digital Meter
15.3 The Oscilloscope
15.4 Probes & Probing
15.5 The Spectrum Analyser
15.6 International Standards
15.7 The Prime Company Standard

CH16: measurement techniques
16.1 Measurement Uncertainties
16.2 Measuring DC Voltage
16.3 Measuring Resistance
16.4 Measuring AC Voltage
16.5 Measuring Frequency
16.6 Measuring in the Time Domain
16.7 Measuring Interference (noise)

CH17: design principles
17.1 Packaged Solutions
17.2 Custom Analog Chip design
17.3 Mature Technology
17.4 Gain and Bandwidth
17.5 Surviving Component Failure
17.6 The Hands-On approach
17.7 Second Sourcing
17.8 Noise Reduction
17.9 Pulse Response
17.10 Transmission Lines
17.11 Filters
17.12 Defensive Design

CH18: temperature control
18.1 Temperature Scales
18.2 Precise Temperature Measurements
18.3 Temperature Sensors
18.4 Heat Transportation
18.5 Thermal Models
18.6 Open-Loop control systems
18.7 ON/OFF temperature control systems
18.8 Proportional control
18.9 Radiated heat transfer

CH19: lab/workshop practice
19.1 Dangerous Things
19.2 Soldering
19.3 Prototypes
19.4 Experimental Development
19.5 Screws and Mechanics

CH20: electronics & the law
20.1 Regulations & Safety
20.2 Fuses
20.2 EMC
20.3 Radiated Emissions
20.4 Conducted Emissions
20.5 Harmonic Currents
20.6 ESD
20.7 European Directives

ENCYCLOPAEDIC GLOSSARY

USEFUL DATA
First Order Approximations for Small Values
The Rules of Logarithms
Trig Identities
Summations
Power Series Expansions
Functions of a Complex Variable
Bessel Functions
Hyperbolic Functions
The Gaussian Distribution
The Electromagnetic Spectrum
Electrical Physics Notes
Advanced Integrals and Derivatives
Hypergeometric Functions

APPENDIX ........................................................................................................................................... 522

How much jitter is created by AM sidebands? ............................................................................. 522

How much jitter is created by FM sidebands? ............................................................................. 523

What is the basis of a phasor diagram? ....................................................................................... 524

How do you draw a phasor diagram showing different frequencies?.......................................... 525

Why is single-sideband noise half AM and half PM?................................................................... 526

Why can you convert roughly from dB to % by multiplying by 10?.............................................. 526

How is the bandwidth of a “boxcar” average digital filter calculated? ......................................... 527

Why are tolerances added? .......................................................................................................... 528

How are risetime and bandwidth related? .................................................................................... 530

What is noise bandwidth? ............................................................................................................. 530

What is the settling time of a resonant circuit?............................................................................. 531

How can multiple similar voltages be summed without much error? .......................................... 531

Why can’t the error signal be nulled completely? ........................................................................ 533

How does a Hamon transfer standard achieve excellent ratio accuracy? .................................. 534

How does impedance matching reduce noise? ........................................................................... 536

How do you measure values from a log-scaled graph?............................................................... 537

Why is a non-linear device a mixer?............................................................................................. 538

Does a smaller signal always produce less harmonic distortion? ............................................... 540

How much does aperture jitter affect signal-to-noise ratio? ........................................................ 541

How is Bit Error Ratio related to Signal-to-Noise Ratio? ............................................................. 542

How are THD, SNR, SINAD and ENOB related? ........................................................................ 543

What is Q and why is it useful?..................................................................................................... 546

What is a decibel (dB)? ................................................................................................................. 548

What is Q transformation? ............................................................................................................ 549

Why is Q called the magnification factor? .................................................................................... 549

How can you convert resistive loads to VSWR and vice-versa?................................................. 550

What is the Uncertainty in Harmonic Distortion Measurements? ................................................ 551

What is RF matching?................................................................................................................... 552

What is the difference between a conjugate match and a Z0 match? ......................................... 554

What is the AC resistance of a cylindrical conductor?................................................................. 555

What are S-parameters?............................................................................................................... 556

Why does multiplying two VSWRs together give the resultant VSWR? ..................................... 558

How are variance and standard deviation related to RMS values? ............................................ 559

How does an attenuator reduce Mismatch Uncertainty? ............................................................. 560

What is the difference between Insertion Loss and Attenuation Loss? ...................................... 561

Does a short length of coax cable always attenuate the signal? ................................................ 561

How do you establish an absolute antenna calibration? ............................................................. 562

How is the coupling coefficient calculated for a tapped inductor?............................................... 564

Why is charging a capacitor said to be only 50% efficient?......................................................... 564

What coax impedance is best for transferring maximum RF power?.......................................... 565

What coax impedance gives minimum attenuation? ................................................................... 566

How long do you need to measure to get an accurate mean reading? ...................................... 567

How long do you need to measure to get an accurate RMS reading? ....................................... 568

KEY ANSWERS .................................................................................................................................. 569

INDEX .................................................................................................................................................. 574

KEY:

scope         oscilloscope
spec          specification
pot           potentiometer
ptp           peak-to-peak (written as p-p in some texts)
+ve           positive
−ve           negative
&c            et cetera (and so forth)
trig          trigonometry / trigonometric
≈             approximately equal to
≡             identically equal to, typically used for definitions
//            in parallel with, for example 10K//5K = 3K333
j             the imaginary operator, j ≡ i = √(−1)
C             a capacitor
R             a resistor
L             an inductor
r.m.s         RMS
4π f C        4 × π × f × C (adjacent terms are multiplied)
2 ⋅ sin(φ)    2 × sin(φ) (a half-high dot means multiply)
*EX           key exercises (answers to all exercises on the WWW)
@EX           key exercises with answers at the back of the book
alias         bold italics means an entry exists in the glossary
aberration    italics used for emphasis, especially for technical terms
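The `//` entry of the Key can be checked with a few lines of Python. This is only a sketch: the function names `parse_value` and `parallel` are hypothetical, and the parser assumes the multiplier letter stands in place of the decimal point (so that 3K333 reads as 3.333 thousand), handling only K and M.

```python
import re

def parse_value(text: str) -> float:
    """Convert a value such as '10K' or '3K333' to a plain number.

    The multiplier letter is assumed to replace the decimal point,
    so '3K333' is read as 3.333 thousand. Only K and M are handled.
    """
    multipliers = {"K": 1e3, "M": 1e6}
    match = re.fullmatch(r"(\d+)([KM])(\d*)", text)
    if match is None:
        raise ValueError(f"unrecognised value: {text!r}")
    whole, letter, fraction = match.groups()
    return float(f"{whole}.{fraction or '0'}") * multipliers[letter]

def parallel(*values: float) -> float:
    """Combined resistance of resistors in parallel (the // of the Key)."""
    return 1.0 / sum(1.0 / v for v in values)

r = parallel(parse_value("10K"), parse_value("5K"))
print(f"10K//5K = {r:.0f} ohms")
```

The same reciprocal-sum rule applies to inductors in parallel, but not to capacitors, so the sketch should only be used for resistances.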

PREFACE

This is a book on antenna theory for those who missed out on the RF design course. It is

a book on microwave theory for those who mainly use microwaves to cook TV dinners.

It is a book on electromagnetic theory for those who can’t confront electromagnetics

textbooks. In short, it is a book for senior under-graduates or junior design engineers

who want to broaden their horizons on their way to becoming ‘expert’ in the field of

electronics design. This book is a wide-ranging exploration of many aspects of both

circuit and system design.

Many texts are written so that if you open the book at a specific subject area, you can’t

understand what the formulas mean. Perhaps the author wrote at the beginning of the

book that all logarithms are base ten. Thus, you pull a formula out of the book and get

the wrong answer simply because you missed the earlier ‘global’ definition.

Another possible source of errors is being unsure of the rules governing the formula.

Take this example: $F = \log t + 1$. What is the author trying to say? Is it $F = 1 + \log_{10}(t)$, is it $F = \log_{e}(1 + t)$, or something else? In this book, I have tried to remove all

ambiguity from equations. All functions use brackets for the arguments, even when the

brackets are not strictly necessary, thereby avoiding any possible confusion. See also the

Key, earlier.
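To see how much the ambiguity matters, the different readings can be evaluated for one value of t. The sketch below is illustrative only: the value t = 9 and the third reading, log10(1 + t), are assumptions added here and do not come from the text.

```python
import math

t = 9.0  # arbitrary test value, chosen for illustration

# Three plausible readings of the ambiguous "F = log t + 1"
readings = {
    "1 + log10(t)": 1.0 + math.log10(t),
    "ln(1 + t)": math.log(1.0 + t),
    "log10(1 + t)": math.log10(1.0 + t),
}

for label, value in readings.items():
    print(f"{label:>14s} = {value:.4f}")
```

The three readings give three different numbers for the same t, which is exactly why every function in this book carries explicit brackets and an explicit base.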

I have adopted this non-ambiguity rule throughout and this will inevitably mean that

some of the text is “non-standard”. For an electronics engineer, 10K is a 10,000 Ω

resistor. The fact that a physicist would read this as 10 Kelvin is irrelevant. I believe that

the removal of the degree symbol in front of Kelvin temperatures [1] is a temporary

aberration, going against hundreds of years of tradition for temperature scales. Thus, I

will always use ‘degrees Kelvin’ and the degree symbol for temperature, and hope that

in the next few decades the degree symbol will reappear!

Inverse trig functions have been written using the arc- form, thus $\arccos(x)$ is used in preference to $\cos^{-1}(x)$. In any case, the form $\cos^{-1}(x)$ is incompatible with the form $\cos^{2}(x)$; to be logically consistent, $\cos^{-1}(x)$ should mean $1/\cos(x)$.

Full answers to the exercises are posted on the website.

Use the password book$owner

http://www.logbook.freeserve.co.uk/seekrets

Rather than leaving the next page blank, I managed to slip in a bit of interesting, but arguably nonessential material, which had been cut earlier…

[1] J. Terrien, 'News from the International Bureau of Weights and Measures', in Metrologia, 4, no. 1 (1968), pp. 41-45.

CONFORMAL MAPPING: Multiplication by j, the square root of minus one, can be considered as

a rotation of 90° in the complex plane. In general, a function of a complex variable can be used to

transform a given curve into another curve of a different shape. Take a complex variable,

w ≡ u + j ⋅ v , (u and v both real). Perform a mathematical operation f(w) on w, resulting in a new

complex variable z. Then f (w) ≡ z ≡ x + j ⋅ y , (x and y both real). w is transformed (mapped) onto z.

A set of points chosen for w forms a curve. The function f(w) acting on this curve creates another

curve. For clarity, draw consecutive values of w on one graph (w-plane), and the resulting values of z

on another graph (z-plane).
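The mapping idea can be tried numerically with Python's built-in complex numbers. In this sketch the transformation f(w) = w², the sample point and the sampled line u = 1 are all arbitrary choices made for illustration.

```python
import cmath

def f(w: complex) -> complex:
    """Example transformation; w squared is an arbitrary choice."""
    return w * w

# Multiplying by j rotates a point by 90 degrees without changing its length.
p = 3 + 4j
rotated = 1j * p
angle_change = cmath.phase(rotated) - cmath.phase(p)   # in radians

# A straight line u = 1 in the w-plane, sampled at a few values of v ...
line_w = [complex(1.0, v / 2) for v in range(-4, 5)]
# ... becomes a curve in the z-plane (a parabola for this particular f).
curve_z = [f(w) for w in line_w]

for w, z in zip(line_w, curve_z):
    print(f"w = {w.real:+.1f}{w.imag:+.1f}j  ->  z = {z.real:+.2f}{z.imag:+.2f}j")
```

Plotting `line_w` on one graph and `curve_z` on another gives the w-plane and z-plane pictures described above.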

For ‘ordinary’ functions such as polynomials, sin, cos, tan, arcsine, arccos, sinh, exp, log, &c, and combinations thereof, the real (x) and imaginary (y) parts of the transformed variable are related. Such ordinary functions are known as ‘regular’ or ‘analytic’; mathematicians call them monogenic, and say they fulfil the Cauchy-Riemann equations:

$$\frac{\partial u}{\partial x} = \frac{\partial v}{\partial y} \quad \text{and} \quad \frac{\partial u}{\partial y} = -\frac{\partial v}{\partial x}$$

The term ‘conformal mapping’ is used because the angle of intersection of two curves in the w-plane

remains the same when the two curves are transformed into the z-plane. Consider two ‘curves’ in the

w-plane, the lines u=a and v=b (a and b real constants). The angle of intersection is 90°. The

resulting transformed curves also intersect at 90° in the z-plane. The angle of intersection is not

preserved at critical points, which occur when f ′(w) = 0 . These critical points must therefore only

occur at boundaries to the mapped region.
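The angle-preserving property is easy to test numerically. The sketch below uses forward differences to estimate the directions of the two transformed lines where they cross; the function name `crossing_angle_deg`, the step size and the two test functions (exp and w²) are assumptions chosen for illustration. For w² the critical point is at w = 0, where the crossing angle is doubled rather than preserved.

```python
import cmath
import math

def crossing_angle_deg(f, w0: complex, h: float = 1e-6) -> float:
    """Angle in the z-plane between the images of the lines u = a and v = b
    that cross at w0 = a + j*b, estimated by forward differences."""
    along_v_const = f(w0 + h) - f(w0)        # small step along the line v = b
    along_u_const = f(w0 + 1j * h) - f(w0)   # small step along the line u = a
    return math.degrees(cmath.phase(along_u_const / along_v_const))

def square(w: complex) -> complex:
    return w * w

print(crossing_angle_deg(cmath.exp, 0.5 + 0.3j))  # close to 90 degrees
print(crossing_angle_deg(square, 1.0 + 2.0j))     # close to 90 degrees
print(crossing_angle_deg(square, 0.0 + 0.0j))     # critical point: not 90 degrees
```

Away from the critical point the estimate differs from 90° only by the finite-difference error, which shrinks as h is reduced.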

Further differentiation of the Cauchy-Riemann equations gives the second order partial differential equations

$$\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2} = 0 \quad \text{and} \quad \frac{\partial^2 v}{\partial x^2} + \frac{\partial^2 v}{\partial y^2} = 0 .$$

These equations are recognisable as Laplace’s equation in 2-dimensions using rectangular co-ordinates, which are immediately applicable to the electric field pattern in a planar resistive film and the electric field pattern in a cross-section of a long uniform 3D structure such as a pipe in a conduit (see rear cover field plot).
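The differentiation step can be written out in full. The working below is a sketch that assumes u and v have continuous second derivatives, so that the order of the mixed partial derivatives can be exchanged.

```latex
% Differentiate the first Cauchy-Riemann equation with respect to x,
% and the second with respect to y:
\frac{\partial^2 u}{\partial x^2} = \frac{\partial^2 v}{\partial x\,\partial y},
\qquad
\frac{\partial^2 u}{\partial y^2} = -\frac{\partial^2 v}{\partial y\,\partial x}
% Adding the two, the mixed derivatives cancel:
\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2} = 0

% Differentiate the first equation with respect to y,
% and the second with respect to x:
\frac{\partial^2 v}{\partial y^2} = \frac{\partial^2 u}{\partial y\,\partial x},
\qquad
\frac{\partial^2 v}{\partial x^2} = -\frac{\partial^2 u}{\partial x\,\partial y}
% Adding these two, the mixed derivatives cancel again:
\frac{\partial^2 v}{\partial x^2} + \frac{\partial^2 v}{\partial y^2} = 0
```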

Consider lines of constant u in the w-plane as being equipotentials; lines of constant v in the w-plane are then lines of flow (flux). Intelligent choice of the transformation function turns a line of constant u (w-plane) into a usefully shaped equipotential boundary in the z-plane. [2] Equipotential plots can then be obtained for otherwise intractable problems. Important field problems that have been solved by conformal mapping include coplanar waveguide, microstrip and square coaxial lines. There are published collections of transformations of common functions, although complicated boundaries generally require multiple transformations. One can try various functions acting on straight lines in the w-plane and catalogue the results for future use, much like doing integration by tabulating derivatives. Another possibility is to take the known complicated boundary problem in the z-plane and transform it back to a simple solution in the w-plane (Schwarz-Christoffel transformations). [3]

Conformal mapping allows the calculation of planar resistances having a variety of electrode configurations. [4] Considering the field pattern as a resistance, the resistance of the pattern is unchanged by the mapping operation. The resistance of the complex pattern is equal to that of the simple rectangular pattern.

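$$R = \frac{\text{length}}{\text{width}} \times \Omega/\square \quad \text{(planar resistance)}$$

$$C = \varepsilon_0 \varepsilon_r \times \frac{\text{width}}{\text{length}} \ \text{F/m} \quad \text{(microstrip capacitance)}$$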
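The planar resistance formula depends only on the ratio of length to width, that is, on the number of squares in the current path. The short sketch below illustrates this; the function name and the sheet resistance of 100 ohms per square are assumed values, not taken from the text.

```python
def planar_resistance(length: float, width: float, ohms_per_square: float) -> float:
    """Resistance of a uniform rectangular film, current flowing along its length."""
    return (length / width) * ohms_per_square

sheet = 100.0  # assumed sheet resistance in ohms per square

print(planar_resistance(2.0, 2.0, sheet))    # one small square
print(planar_resistance(20.0, 20.0, sheet))  # one large square, same resistance
print(planar_resistance(10.0, 2.0, sheet))   # five squares in series
```

Because length and width only appear as a ratio, any consistent unit can be used for both. A complicated electrode pattern that maps onto a rectangle of the same aspect ratio has the same resistance.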

Far from being an obscure and obsolete mathematical method, superseded by numerical

techniques, conformal mapping is still important because it produces equations of complex field

patterns rather than just numerical results for any specific pattern.

[2] S. Ramo, J.R. Whinnery, and T. Van Duzer, 'Method of Conformal Transformation', in Fields and Waves in Communication Electronics, 3rd edn (Wiley (1965) 1994), pp. 331-349.

[3] L.V. Bewley, Two-Dimensional Fields in Electrical Engineering (Macmillan, 1948; repr., Dover, 1963).

[4] P.M. Hall, 'Resistance Calculations for Thin Film Patterns', in Thin Solid Films, 1 (1967/68), pp. 277-295.

CH1: introduction

A SEEKret is a key piece of knowledge which is not widely known, but which may be

‘hidden’ in old textbooks or obscure papers. It is something that you should seek-out,

discover or create in order to improve your skills. Whilst others may already know this

SEEKret, their knowing does not help you to fix your problems. It is necessary for you to

find out these trade SEEKrets and later to create some of your own. For this reason many

key papers are referenced in this book.

Recent papers can be important in their own right, or because they have references to

whole chains of earlier papers. There are therefore two ways to seek out more

information about a particular area: you can either pick a modern paper and work back

through some of its references, or you can pick an early paper and use the Science

Citation Index to see what subsequent papers refer back to this original.

This book is written for self-study. You are expected to be able to sit down and study the

book from beginning to end without needing assistance from a tutor. Thus, all problems

have solutions (on the WWW) and everything is written so that it should be accessible to

even the slowest advanced student.

This is advanced material, which assumes that you have fully understood earlier

courses. If not, then go back to those earlier texts and find out what you didn’t get.

As you read through (your own copy of) this text, have a highlighter pen readily to

hand. You will find parts that are of particular interest and you should mark them for

future reference. It is essential to be able to rapidly find these key points of interest at

some later time. Thus, you should also go to the index and either highlight the

appropriate entry, or write an additional index entry which is meaningful to you.

Many of the exercises are marked with a *. These are essential to the flow of the text,

and the answers are equally part of the text. It is important for you to follow these

exercises and answers as part of the learning process; write down answers to the

exercises, even when the answers appear obvious. The act of writing down even the

simplest of answers makes the information ‘stick’ in your mind and prevents you from

‘cheating’. Exercises prefixed by @ have answers at the back of the book.

I don’t expect you to do well with the exercises. I actually want you to struggle with

them! I want you to realise that you don’t yet know this subject. You are encouraged to

seek out information from other textbooks before looking up the answer. If you just look

up the answers then you will have missed 90% of the benefit of the exercises.

Unlike standard texts, the exercises here may deliberately give too much information,

too little information, or may pose unanswerable questions; just like real life in fact. The

idea is that this is a ‘finishing school’ for designers. In many senses, it is a bridge

between your academic studies and the real world. In any question where insufficient

information has been given, you will be expected to state what has been left out, but also

to give the most complete answer possible, taking the omission into account. Don’t just

give up on the question as “not supplying enough information”.

Words written in bold italics are explained in the Encyclopaedic Glossary. Many

important concepts are explained there, but not otherwise covered in the text. The first

time a technical word or phrase is used within a section it may be emphasised by the use

of italics. Such words or phrases can then be further explored on the WWW using a search

engine such as Google.

Be aware that many words have two or more distinct definitions. Take a look at

stability in the glossary. When you read that a particular amplifier is “stable”, the

statement is ambiguous. It might mean that the amplifier doesn’t oscillate; or it might

mean that the amplifier’s gain does not shift with time; or it might mean that the DC

offset of the amplifier doesn’t shift with temperature.

If the text doesn’t seem to make sense, check with a colleague/teacher if possible. If

it still makes no sense then maybe there is an error in the book. Check the website:

http://www.logbook.freeserve.co.uk/seekrets. If the error is not listed there, then

please send it in by email. In trying to prove the book wrong, you will understand the

topic better. The book itself will also be improved by detailed scrutiny and criticism.

I need to introduce some idea of costing into this book. With different currencies, and

unknown annual usage rates, this is an impossible job. My solution is to quote prices in

$US at the current rate. You will have to scale the values according to inflation and

usage, but the relative ratios will hold to some degree.

The statement “resistors are cheaper than capacitors” is neither totally true nor

wholly false. A ±1% 250 mW surface mount resistor costs about $0.01, whereas a

0.002% laboratory standard resistor can cost $1400. A surface mount capacitor currently

costs about $0.04 whereas a large power factor correction capacitor costs thousands of

dollars. Look in distributors’ catalogues and find out how much the various parts cost.

In older text books the term “&c” is used in place of the more recent form “etc.”, both

being abbreviations for the Latin et cetera, meaning and so forth. The older form uses

two characters rather than four and therefore deserves a place in this book. Terms such

as r.m.s. are very long winded with all the period marks. It has become accepted in

modern works to use the upper case without periods for such abbreviations. Thus terms

such as RMS, using small capitals, will be used throughout this book.

For the sake of brevity, I have used the industry standard short-forms:

scope    oscilloscope
spec     specification
pot      potentiometer
ptp      peak-to-peak (written as p-p in some texts)
+ve      positive
−ve      negative
trig     trigonometry / trigonometric

I may occasionally use abbreviated notation such as ≤ ±3 µA. Clearly I should have said

the magnitude is ≤ 3 µA. This ‘sloppy’ notation is also used extensively in industry.

CH2: an advanced class

2.1 The Printed Word

Service manuals for older equipment give a detailed description of the equipment and

how it works. This does not happen nowadays. Detailed information about designs is

deliberately kept secret for commercial reasons. This subject is called intellectual

property. It is regarded as a valuable commodity that can be sold in its own right.

Universities, national metrology institutes, and commercial application departments

have the opposite form of commercial pressure, however. Universities need to maintain

prestige and research grants by publication in professional journals. Doctoral candidates

may need to ‘get published’ as part of their PhD requirements. National metrology

institutes publish research work to gain prestige and public funding. Corporate

applications departments produce “app notes” to demonstrate their technical expertise

and to get you to buy their products. And individual authors reveal trade SEEKrets to get

you to buy their books. Publication in the electronics press is seldom done for

philanthropic reasons.

It takes time, and therefore money, to develop good ways of doing or making things;

these good ways are documented by drawings and procedures. It is essential that you

read such documentation so you don’t “re-invent the wheel”. Your new component

drawings will be company confidential and require a clause preventing the manufacturer

from changing his process after prototypes have been verified. The drawing cannot

specify the component down to the last detail; even something as simple as a change in

the type of conformal coating used could ruin the part for your application.

2.2 Design Concepts

Rival equipment gives important information to a designer. It is an essential part of a

designer’s job to keep up to date with what competitors are up to, but only by legal

means.

It is quite usual to buy an example of your competitor’s product and strip it down.

When theirs is the same as yours, this validates your design. When theirs is better, you

naturally use anything that isn’t patented or copyrighted.

There will almost always be a previous design for anything you can think of. If you work

out your new design from first principles, it will probably be a very poor solution at best.

The thing you have to do is to examine what is already present and see what you can

bring to the product that will enhance it in some way. You have to ask these sorts of

questions:

Can I make it less expensive?

Can I make it smaller?

Can I make it lighter?

Can I make it more attractive to look at?

Can I make it last longer?

Can I make it more versatile?

Can I make it more efficient?

Can I make it more effective?

Can I make it perform the work of several other devices as well?

Can I make it more beneficial to the end-user?

Can I make it more accurate?

Can I make it more reliable?

Can I make it work over a wider range of temperature/humidity/pressure?

Can I make it cheaper to service and/or calibrate?

Can I make it as good as the rival one, but use only local labour and materials?

Can I make it easier and cheaper to re-cycle at the end of its life?

Can I reduce the environmental impact on end-of-life disposal?

These are general design considerations that apply to all disciplines and are not given in

order of importance. A new design need only satisfy one of these points. The new design

has to justify the design cost. If not, then why do the work?

From a manager’s point of view, this subject is considered as return on investment. If

the company spends $20,000 of design effort, it might expect to get $200,000 of extra

sales as a result. Engineering department budgets are often between 5% and 20% of

gross product sales.

2.3 Skills

This table should not be taken too literally:

                   poor spec       average spec    excellent spec
Cheap parts        poor            Good            GRAND MASTER
Expensive parts    unemployable    average         Master

Anybody can take expensive parts and make a pile of rubbish out of them. It takes skill

to make something worthwhile. If you can take inexpensive parts and make a spectacular

product you deserve the title Grand Master, provided that you can do it consistently, or

for lots of money.

FIGURE 2.3A: Skills Chart, an analog designer’s necessary skills.

[Chart: the roles shown are Analog Designer, DC & LF Designer, RF Designer, Microwave Designer and Digital Designer. Skills named in the chart: Power Electronics, Physicist, Research Physicist, Materials Expert, Mechanical design, Metrologist, Software Analyst, SPICE analysis, SPICE / other CAD, Transmission Lines, Fault locator, PCB designer, Lab technician, Safety regulations, EMC regulations, Microwave safety.]

This chart suggests skills you should acquire. Your ability should at least touch upon every skill mentioned. However, I do not insist that you agree with me on this point. It is an opinion, not a fact. All too often people, including engineers, elevate opinions to the status of facts. Learn the facts, and decide which opinions to agree with.

I once had a boss who insisted that any wire-link modifications to production PCBs

should be matched to the PCB colour to blend in. The quality department, on the other

hand, wanted contrasting wire colours in order to make inspection easier. Both sides felt

passionately that the other side was obviously wrong!

An opinion, theory or result stated/published by an eminent authority is not

automatically correct. This is something that is not mentioned in earlier school courses.

In fact, you get the greatest dispute amongst eminent authorities about the most

advanced topics.

Having said that opinions are not facts, engineering is not the exact science you

might like. Often you have to take a decision based on incomplete facts. Now, technical

opinion, in the form of engineering judgement, is vital. In this case, the opinion of a

seasoned professional is preferable to that of a newcomer. Furthermore, this opinion, if

expressed coherently and eloquently, can carry more weight than the same opinion

mumbled and jumbled in presentation. Thus, communication skills are needed to allow

your ideas to be brought to fruition. Do not underestimate the importance of both verbal

and written communication skills, especially in large companies or for novel products.

You may need to convince technically illiterate customers, managers or venture

capitalists that your idea is better than somebody else’s idea. If you succeed then wealth

will follow, provided your idea was good!

A related idea is that of intuition. Sometimes you can’t directly measure a particular

obscure fault or noise condition. In this case you may have to invent a “theory” that

might explain the problem, and then test by experimentation to see if it fits. Good

intuition will save a lot of time and expense. Could it be that the fluorescent lights are

causing this noise effect? Turn them off and see!

Be prepared for textbooks to contain errors, both typographical and genuine author

misunderstandings. And do not make the mistake of believing a theory is correct just

because everybody knows it to be true. That a theory has been accepted for a hundred

years, and re-published by many authors, does not automatically make it correct. Indeed,

the greatest scientific discoveries often smash some widely held belief.

The great James Clerk Maxwell asserted in his Treatise that magnetic fields did not

act directly on electric currents. A young student didn’t believe this statement and tested

it; the result was the Hall Effect.

Experts make mistakes. Even an expert must be willing to be corrected in order to be right more often in the future. Don’t hang on to a theory which doesn’t work, just to show how right you were.

The difference between a professional design and a “cook book” solution or an

“application note” solution is that the professional design works. It may not look elegant.

Many professional designs require corrections, even after they have been in production

for some little while. A diode here, a capacitor there. These are the vital pieces that stop

the circuit from blowing up at power-off. These are the parts that fix some weird

response under one set of specific conditions. These are the parts that give a factor of

two improvement for little extra cost.

I am not encouraging you to need these ‘afterthought’ components in your designs.

The point is that the difference between a workable, producible, reliable design and an

unworkable ‘text book solution’ can be a matter of a few key components that were not

mentioned in your textbooks. Don’t feel ashamed to add these parts, even if the circuit

then looks ‘untidy’; add them because they are needed or desirable.

The best engineer is the one who can consistently solve problems more rapidly or

cost effectively than others. Now this may mean that (s)he is just much smarter than

everyone else is. Alternatively (s)he may just have a better library than others. If you

have enough good textbooks at your disposal, you should be able to fix problems faster

than you would otherwise do. After all, electrical and electronic engineering are mature

subjects now. Most problems have been thought about before.

By seeing how somebody else solved a similar problem, you should be able to get

going on a solution faster than if you have to think the whole thing through from first

principles. The vital thing you have to discover is that the techniques of design are not

tied to the components one designs with. The basics of oscillator design, for example,

date back to 1920, and are the same whether you use thermionic valves, transistors, or

superconducting organic amplifiers.

2.4 The Qualitative Statement

Engineers should try to avoid making qualitative statements.

Qualitative:     concerned with the nature, kind or character of something.
Quantitative:    concerned with something that is measurable.

If I say ‘the resistance increases with temperature’ then that is a qualitative statement.

You can’t use that statement to do anything because I have not told you how much it

increases. If it increases by a factor of 2× every 1°C then that is quite different to

changing 0.0001%/°C.
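Putting numbers on the two cases shows how far apart they are. In this sketch the starting resistance of 1 kΩ and the 10°C rise are assumed values, the function names are hypothetical, and the 0.0001%/°C case is treated as a simple linear coefficient.

```python
def resistance_doubling(r0: float, delta_t: float) -> float:
    """Resistance that doubles for every 1 degree C rise."""
    return r0 * 2.0 ** delta_t

def resistance_linear(r0: float, delta_t: float, percent_per_degree: float) -> float:
    """Resistance with a constant temperature coefficient in % per degree C."""
    return r0 * (1.0 + percent_per_degree / 100.0 * delta_t)

r0 = 1000.0      # assumed resistance at the reference temperature, ohms
delta_t = 10.0   # assumed temperature rise, degrees C

print(resistance_doubling(r0, delta_t))        # roughly a megohm
print(resistance_linear(r0, delta_t, 0.0001))  # barely moved from 1000 ohms
```

Both cases satisfy the statement ‘the resistance increases with temperature’, yet one changes by a factor of about a thousand and the other by about ten parts per million.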

Qualitative statements come in all shapes and sizes, and sometimes they are difficult

to spot. Even the term RF [Radio Frequency] is almost meaningless! Look at the table of

“radio frequency bands” in the useful data section at the back of the book. Radio

Frequency starts below 60 kHz, “long waves”. Almost any frequency you care to think

of is being transmitted by somebody and can be picked up by unscreened circuitry. The

term RF therefore covers such a vast range of frequencies that it has very little meaning

on its own; 60 kHz radiated signals behave quite differently to 6 GHz signals, despite

them both being electromagnetic radiation. Light is also electromagnetic radiation, but it

is easy to see that it behaves quite differently to radio frequency signals.

The original usage of radio frequency (RF) was for those signals which are received

at an antenna {aerial} or input port, being derived from electromagnetic propagation.

After the signal was down-converted to lower frequencies it was called an intermediate

frequency (IF). The final demodulated signal was the audio frequency (nowadays baseband) signal. This idea has been extended for modern usage so that any signal higher

than audio frequency could be considered as a radio frequency, although there is no

reason why a signal in the audio frequency range cannot be used as a radio frequency

carrier.

Because radio frequency radiation pervades the modern environment over a range extending beyond 60 kHz to 6 GHz, it is clear that all ‘sensitive’ amplifiers and control systems need to be screened.

All circuitry must be considered sensitive to radiated

fields unless proven otherwise by adequate testing.

Since RF covers too broad a range of frequencies to be useful for most discussions, I

have tried to eliminate it from the text as much as is sensible. I like using terms such as

VHF+, meaning at VHF frequencies and above, the reason being that it is appropriately

vague. To say that a specific technique does not work above 10 MHz is unreasonable.

There will, in practice, be a band of frequencies where the technique becomes

progressively less workable. Thus a term like VHF (30 MHz to 300 MHz) with its ×10

band of frequencies gives a more realistic representation of where a particular

phenomenon occurs. The compromise is to occasionally give an actual frequency, on the

understanding that this value is necessarily somewhat uncertain.

These example qualitative statements do not communicate well to their audience:

Calibrate your test equipment regularly.

Zero the meter scale frequently when taking readings.

Make the test connections as short as possible.

Do not draw excessive current from the standard cells.

Ensure that the scope has adequate bandwidth to measure the pulse risetime.

Use an accurate 10 Ω resistor.

Make the PCB as small as possible.

The reader will be left with questions such as: how often, how accurate, how short, how

small? Answer the questions by providing the missing data. The following examples

illustrate the solution to the qualitative statement problem, but do not consider these

examples as universally true requirements:

☺ Calibrate your test equipment at least once a week.

☺ Zero the meter scale every 5 minutes when taking readings.

☺ Make the test connections as short as possible, but certainly less than 30 cm.

☺ Do not draw more than 1 µA from the standard cells, and even then do it for not more than a few minutes every week.

☺ Ensure that the scope risetime is at least 3× shorter than the measured pulse risetime.

☺ Use a 10 Ω resistor with better than ±0.02% absolute accuracy.

☺ Make the PCB as small as possible, but certainly not larger than 10 cm on each side.
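To show what a figure such as "3× shorter" buys, the sketch below estimates the measurement error using the common root-sum-square rule for combining risetimes. That rule is an assumption brought in for illustration (it is only approximately true, and works best for roughly Gaussian responses); the function names are hypothetical.

```python
import math


def displayed_risetime(signal_tr, scope_tr):
    """Root-sum-square estimate of the risetime shown on the scope (same units in and out)."""
    return math.hypot(signal_tr, scope_tr)


def risetime_error_percent(ratio):
    """Percentage by which the reading is too long when the scope risetime
    is `ratio` times shorter than the true signal risetime."""
    return (math.sqrt(1.0 + 1.0 / ratio**2) - 1.0) * 100.0


for ratio in (1, 3, 5, 10):
    print(f"scope {ratio}x faster: reading about {risetime_error_percent(ratio):.1f}% too long")
```

Under this assumption a scope 3× faster than the pulse reads roughly 5% long, which turns "adequate bandwidth" into a number the reader can accept or reject.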

2.5 The Role of the Expert

Design engineers dislike the idea of experts. After all, every good designer thinks (s)he

could have done it at least 10% faster, 10% cheaper, 10% smaller, or better in some

other way. Nevertheless there are experts, defined as those people with considerable


knowledge in a particular specialised field. An expert does not therefore have to be the

very best at that particular subject. This expert could be one of the best.

Electronics is too large a field for one to ever truly be ‘an electronics expert’. This

would be a title endowed by a newspaper. An expert would be a specialist. The term

‘expert’ is so disliked that the titles guru and maven are sometimes used to avoid it.

Finding an expert can be difficult. You will find that anybody selling their own

contract or consultancy services will be “an expert” in their promotional material.

Remember also that real experts may not wish to reveal their SEEKrets, particularly to a

potential rival. This knowledge will have taken them years to acquire. You may

therefore need to develop a rapport with such an expert before they impart some of their

wisdom to you; this comes under the heading of people skills.

The expert should be able to predict what might happen if you do such and such

operation, and if unsure should say so. The expert should be able to say how that task

has been accomplished in the past and possibly suggest improvements. The expert

should be able to spot obvious mistakes that make a proposal a non-starter.

What an expert should not do is stifle change and development. It is all too easy to

discourage those with new ideas. The key is to let new ideas flourish whilst not wasting

money investigating things which are known to have not worked.

Bad ‘Expert’: (circa 1936). An aeroplane can’t reach the speed of sound because the

drag will become infinite (Prandtl−Glauert rule). The plane will

disintegrate as it approaches the sound barrier.

True Expert: (circa 1936). We know that drag increases very rapidly as we approach

Mach 1. We can’t test the validity of the Prandtl−Glauert rule until we

push it closer to the limit. Let’s do it!

Given that the drag can rapidly increase by a factor of 10 as you approach the speed of

sound, you should wonder if you could have been as brave as the true experts who broke

the sound barrier.1
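For readers unfamiliar with the rule, the Prandtl−Glauert correction is usually written as a multiplier of 1/√(1 − M²) applied to a low-speed pressure coefficient, where M is the Mach number. The sketch below assumes that form (it is not taken from this text, and it says nothing about total drag); the function name is hypothetical. It shows why the prediction blows up as M approaches 1.

```python
import math


def prandtl_glauert_factor(mach):
    """Multiplier 1/sqrt(1 - M^2) applied to the low-speed pressure coefficient.

    Only meaningful for subsonic flow, 0 <= M < 1; the formula diverges at M = 1.
    """
    if not 0.0 <= mach < 1.0:
        raise ValueError("the rule is only defined for 0 <= M < 1")
    return 1.0 / math.sqrt(1.0 - mach**2)


for mach in (0.3, 0.7, 0.9, 0.99):
    print(f"M = {mach}: correction factor {prandtl_glauert_factor(mach):.2f}")
```

The divergence at M = 1 is a property of the linearised formula, not of the aeroplane, which is the distinction the two 'experts' above are drawing.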

Every now and then an engineer should be allowed to try out an idea that seems

foolish and unworkable to others. The reason is that some of these ideas may work out

after all! The only proviso on this rule is that the person doing the work has to believe in

it. Anyone can try a known ‘null’ experiment and have it fail. However, a committed

person will struggle with the problem and possibly find a new angle of attack. In any

case, young engineers who are not allowed to try different things can become frustrated,

and consequently less effective.

Another form of expert is the old professor of engineering or science. It may be that

this old professor is past his/her best in terms of new and original ideas. However, such

an expert would know what was not known. (S)he could then guide budding PhD

candidates to research these unknown areas. There is no point in getting research done

into something that is already fully understood!

When struggling with a problem it is important to realise when additional help would

resolve the issue more cost effectively. Don’t struggle for days / weeks when someone

else can point you in the right direction in a few minutes.

1 J.D. Anderson, Fundamentals of Aerodynamics, 2nd edn (McGraw-Hill, 1991).


PRE-SUPERVISOR CHECKLIST

Is the power switched on and getting to the system (fuse intact, as evidenced

by lights coming on for example)?

Are all the power rails in spec?

Is the noisy signal due to a faulty cable?

Is the system running without covers and screens so that it can be interfered

with by nearby signal generators, wireless keyboards, soldering iron switching

transients, mobile phones, wireless LANs &c.?

Is the signal on your scope reading incorrectly because the scope scaling is not

set up for the 10:1 probe you are using, or is set for a 10:1 probe and you are

using a straight cable?

Is the ground/earth lead in the scope probe broken?

Is the scope probe trimmed correctly for this scope and this scope channel?

Is the probe ‘grabber’ making contact with the actual probe tip? (If you just

see the fast edges of pulses this suggests a capacitive coupling due to a

defective probe grabber connection.)

Are you actually viewing the equipment and channel into which the

probe/cable is connected?

Is your test equipment running in the correct mode? (An FFT of a pure

sinusoid shows spurious harmonic distortion if peak detection is selected on

the scope. Spectrum analysers give low readings when the auto-coupling

between resolution bandwidth, video bandwidth and sweep speed is disabled.)

Has any equipment been added or moved recently? [In one case an ultra-pure

signal generator moved on top of a computer monitor produced unpleasant

intermodulation products that caused a harmonic distortion test to fail.]
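The probe-scaling item in the checklist is worth a quick numerical illustration, since the error is always a clean factor of ten. This is a minimal sketch with hypothetical names, assuming an ideal probe and ignoring loading effects.

```python
def displayed_voltage(true_volts, probe_attenuation, scope_scale_setting):
    """Voltage shown on screen: the probe divides the signal and the
    scope's probe setting multiplies the result back up."""
    return true_volts / probe_attenuation * scope_scale_setting


true_volts = 5.0
# Correct set-up: 10:1 probe, scope set for a 10:1 probe.
print(displayed_voltage(true_volts, 10, 10))   # 5.0
# 10:1 probe, but scope left on the 1:1 setting: reads ten times low.
print(displayed_voltage(true_volts, 10, 1))    # 0.5
# Straight cable, but scope set for a 10:1 probe: reads ten times high.
print(displayed_voltage(true_volts, 1, 10))    # 50.0
```

A reading that is wrong by exactly 10× in either direction is therefore a strong hint to check the probe setting before suspecting the circuit.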

Often, in explaining the problem to somebody else, you suddenly realise your own

mistake before they can even open their mouth! Failing that, another person may quickly

see your mistake and get the work progressing again quickly. That doesn’t make this

other person cleverer than you; they are just looking at the problem from a fresh

perspective.

The difficulty comes when you are really stuck. You have been through the pre-supervisor checklist, called in the supervisor and other colleagues, and no-one has helped.

If you call in a consultant from outside you will have to pay real money. Before doing

this there is the pre-consultant checklist.


PRE-CONSULTANT CHECKLIST

Have you used the pre-supervisor checklist and discussed the problem

“internally” with as many relevant personnel as possible?

Have you established which part of the system is causing the problem?

(Remove parts completely where possible.) Use a linear bench power supply

to replace a suspect switched-mode supply. Reposition sub-assemblies on

long leads to test the effect.

Have you faced up to difficult / expensive / unpleasant tests which could settle

the problem? It may be that the only way to be sure of the source of a problem

is to damage the equipment by drilling holes or cutting slots. There is no point

calling in a consultant to tell you what you already knew, but were unwilling

to face up to.

Have you prepared a package of circuit diagrams, component layouts, specs,

drawings and so forth, so that the consultant gets up to speed quickly?

A consultant’s time is expensive, so you must use it efficiently. Also, in

preparing the package you may spot the error yourself because you are having

to get everything ready for somebody new.

Have you tried to ‘step back’ from the problem and see how it would appear

to a consultant? Have you thought what a consultant might say in this

situation? For noise the obvious answers are board re-layout, shielding, more

layers on the PCB and so forth. (If the advice is a re-layout, it is difficult to tell

the difference between a good consultant and a bad consultant.) You need to

ask if it is possible to model the improvements before scrapping the existing

design / layout. You should get the consultant to verify the detail of the new

layout, since you got the layout wrong last time.

Does the consultant have proven experience in this particular area?

Have you set a budget in time / money for the consultancy: a day, a week, a

month &c? Consultancy can easily drag on past an optimum point as there is

always more to do.

Let’s suppose your company designs and manufactures phase displacement

anomalisers.† Your company is the only one in the country that does so. You will not

find a consultant with direct experience of the subject, but you may find one with

expertise in the particular area you are having trouble with. The consultant will want to

ask questions, and it is essential that there is always somebody on hand who is willing,

able, and competent to immediately answer these questions. These questions may seem

silly, but they are key to solving the problem. The problem has to be reduced to a

simplicity. The car won’t start: is it a fuel problem, an electrical problem, or a

mechanical problem?

† Invented product type.

Any problem can seem overwhelming when you are immersed in it too deeply and

you are under time pressure to solve it. The consultant is not under such pressure. If the

power supply is a pile of rubbish, (s)he can say so without guilt because (s)he didn’t

design it. (S)he can therefore be more objective as (s)he has no vested interest in

protecting his/her design. You, on the other hand, may not want to ‘blame’ your

cherished power supply as being the guilty party!

This is the point where you need to try to be objective about your own design. Come

back in the next day without a tie on, or park in a different place, or get out of bed the

wrong side, or something, so that you can assume a different viewpoint. You may need

to look at the problem from a different angle in order to solve it.

In solving difficult problems in the past, I have always found it better to have at least

two people working on the problem. It is more efficient, as there is less stress on the

individual. I don’t mean that there is one person being helped by another. There are two

people assigned to the problem, both of whom are equally responsible for getting a