See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/311896109

Script Identification in Natural Scene Images: A Dataset and Texture-Feature

Based Performance Evaluation

Chapter · December 2017

DOI: 10.1007/978-981-10-2107-7_28

CITATIONS

READS

10

586

4 authors, including:

Manisha Verma

Balasubramanian Raman

Osaka University

Indian Institute of Technology Roorkee

34 PUBLICATIONS 847 CITATIONS

326 PUBLICATIONS 6,011 CITATIONS

SEE PROFILE

Some of the authors of this publication are also working on these related projects:

Machine Learning Applications View project

Encrypted domain processing of big multimedia data over cloud View project

All content following this page was uploaded by Manisha Verma on 26 November 2017.

The user has requested enhancement of the downloaded file.

SEE PROFILE

Script Identification in Natural Scene

Images: A Dataset and Texture-Feature

Based Performance Evaluation

Manisha Verma, Nitakshi Sood, Partha Pratim Roy

and Balasubramanian Raman

Abstract Recognizing text with occlusion and perspective distortion in natural

scenes is a challenging problem. In this work, we present a dataset of multi-lingual

scripts and performance evaluation of script identification in this dataset using

texture features. A ‘Station Signboard’ database that contains railway sign-boards

written in 5 different Indic scripts is presented in this work. The images contain

challenges like occlusion, perspective distortion, illumination effect, etc. We have

collected a total of 500 images and corresponding ground-truths are made in semiautomatic way. Next, a script identification technique is proposed for multi-lingual

scene text recognition. Considering the inherent problems in scene images, local texture features are used for feature extraction and SVM classifier, is employed for script

identification. From the preliminary experiment, the performance of script identification is found to be 84 % using LBP feature with SVM classifier.

Keywords Texture feature

classifier ⋅ k-NN classifier

⋅ Local binary pattern ⋅ Script identification ⋅ SVM

M. Verma (✉)

Mathematics Department, IIT Roorkee, Roorkee, India

e-mail: manisha.verma.in@ieee.org

N. Sood

University Institute of Engineering and Technology,

Panjab University, Chandigarh, India

e-mail: nitakshi.sood@gmail.com

P.P. Roy ⋅ B. Raman

Computer Science and Engineering Department, IIT Roorkee, Roorkee, India

e-mail: proy.fcs@iitr.ac.in

B. Raman

e-mail: balarfma@iitr.ac.in

© Springer Science+Business Media Singapore 2017

B. Raman et al. (eds.), Proceedings of International Conference on Computer Vision

and Image Processing, Advances in Intelligent Systems and Computing 460,

DOI 10.1007/978-981-10-2107-7_28

309

310

M. Verma et al.

1 Introduction

The documents in multiple script environment, comprise mainly text information

in more than one script. Script recognition can be done at different levels as page/

paragraph level, text line level, word level or character level [8]. It is necessary

to recognize different script regions of the document for automatic processing of

such documents through Optical Character Recognition (OCR). Many techniques

has been proposed for script detection in past [2]. Singhal et al. proposed a hand

written script classification based on Gabor filters [11]. A single document may hold

different kind of scripts. Pal et al. proposed a method for line identification in multilingual Indic script in one document [6]. Sun et al. proposed a method to locate the

candidate text regions using the low level image features. Encouraging experimental results have been obtained on the nature scene images with the text of various

languages [12]. A writer identification method, independent of text and script, has

been proposed for handwritten documents using correlation and homogeneity properties of Gray Level Co-occurrence Matrices (GLCM) [1]. Several methods have

been proposed on the identification technique that detects scripts, from document

images using vectorization which can be implemented to the noisy and degraded

documents [9]. Video script identification also uses the concept of text detection by

studying the behavior of text lines considering the cursiveness and smoothness of

the given script [7].

In the proposed work, a model is designed to identify words of Odia, Telugu,

Urdu, Hindi and English scripts from a railway station board that depicts the name

of place in different scripts. For this task, first a database of five scripts has been made

using railway station board images. The presented method is trained to learn the distinct features of each script and then use k nearest neighbor or SVM for classification.

Given a scene image of railways station, the yellow station board showing the name

of the station in different scripts can be extracted. The railway station boards have the

name of station written in different languages which includes English, Hindi, and any

other regional language of that place. The image is first stored digitally in grayscale

format and then it is further processed to have a script recognition accurately. It is a

problem of recognizing script in natural scene images and as a sub problem it refers

to recognizing words that appear on railway station boards. If these kind of scripts

can be recognized, they can be utilized for a large number of applications.

Previously researchers have worked to identify text in natural scene images, but

their scopes are limited to horizontal texts in the image documents. However, railway

station boards can be seen in any orientation, and with perspective distortion. The

extraction of region of interest, i.e. text data from the whole image is done in a semiautomatic way. Given a segmented word image, the aim is to recognize the script

from it. Most of the script detection work have been done on binary images. In the

conversion of grayscale to binary, the image can lose text information and hence

detection process may affect. To overcome this issue, the proposed method is using

grayscale images to extract features for images.

Script Identification in Natural Scene Images: A Dataset and Texture-Feature . . .

311

1.1 Main Contribution

Main contributions are as follows.

∙ An approach has been presented to identify perspective scene texts of random orientations. This problem appears in many real world issues, but has been neglected

by most of the preceding works.

∙ For performance testing, we present a dataset with different scripts, which comprises texts from railway station scene images with a variety of viewpoints.

∙ To tackle the problem of script identification, texture features using local patterns

have been used in this work.

Therefore, the main issue of handling perspective texts has been neglected by previous works. In this paper, recognition of perspective scripts of random orientations

has been addressed in different natural scenes (such as railway station scene images).

Rest of the paper is structured as follows. Section 2 presents the data collection and scripts used in our dataset of scene images. Section 3 describes the texture

features extracted from scene text images. The classification process is described

in Sect. 4. Results obtained through several experiments are presented in Sect. 5.

Finally, we conclude in Sect. 6 by highlighting some of the possible future extensions of the present work.

2 Data Collection

Availability of standard database is one of the most important issues for any pattern

recognition research work. Till date no standard database is available for all official

Indic scripts. Total 500 images are collected from different sources. Out of 500 script

images, 100 for each script are taken.

Initially there were scenic images present in the database, which was further segmented into the yellow board pictures by selecting the four corner points of the

desired yellow board. Further, these images were converted into grayscale and then

into binary image using some threshold value. The binary image was then segmented

into words of each script. For those images, which could not give the required perfect segments, vertical segmentation followed by horizontal segmentation have been

carried out otherwise the manual segmentation for those script images has been done

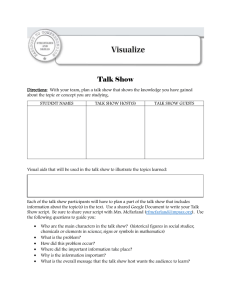

(Fig. 1).

Challenges: Script recognition is challenging for several reasons. The first and

most obvious reason is that there are many script categories. The second reason is the

viewpoint variation where many boards can look different from different angles. The

third reason is illumination in which lighting makes the same objects look like different objects. The fourth reason is background clutter in which the classifier cannot

distinguish the board from its background. Other challenges include scale, deformation, occlusion, and intra-class variation. Some of the images from database have

been shown in Fig. 2.

312

M. Verma et al.

Fig. 1 Data collection from station board scenic images

Fig. 2 Original database images

2.1 Scripts

Having said all that, when we look at country India, precisely, incredibly diverse

India, where language changes from one region to another just as easily as notes of a

classical music piece. In India, moving few kilometers north, south, east or for that

matter west, there’s a significant variation in language, both the dialect and the script

change, not to mention the peculiar accents that occasionally adorn the language.

Each region of this country is totally different from the rest, and this difference is for

sure inclusive of the language too.

Narrowing the horizon and talking of Hindi, Punjabi, Bengali, Urdu and English, these languages have their own history, each equally unique and very ancient

of course. Hindi, being in the Devanagari Script, Punjabi in the Gurumukhi Script,

Bengali in the Bangla Script, Urdu in the Persian Script with a typical Nasta’liq Style,

and English in the Roman Script, are very different in many ways despite a different

script. But scripts mark an important component of studying variations in languages.

The scripts decide to a much extent the development of a language. In the following

section a brief outline about the English, Hindi, Odia, Urdu and Telugu languages is

provided.

1. Roman Script: It is used to write English language which is an international language. This script is a descendant of the ancient Proto-Indo-European language

family. About 328 million people in India use this language as a communication

medium.

2. Devanagari Script: Hindi is the one of the most popular languages in India which

uses this script. This language is under Indo-European language family. In India,

about 182 million people mainly residing in northern part use this language as

their communication medium.

Script Identification in Natural Scene Images: A Dataset and Texture-Feature . . .

313

3. Odia: Odia is language of Indian state Odisha and spoken by people of this state.

Moreover, it is spoken in other Indian states, e.g., Jharkhand, West Bengal and

Gujarat. It is an Indo-Aryan language used by about 33 million people.

4. Urdu Script: Urdu script is written utilizing Urdu alphabets in right-to-left order

with 38 letters and no distinct letter cases, the Urdu alphabet is usually written in

the calligraphic Nasta’liq script.

5. Telugu Script: Telugu script is utilized to write Telugu language and it is from the

Brahmic family of scripts. Telugu is the language of Andhra Pradesh and Telangana states and spoken by people of these states alongwith few other neighboring

states.

3 Feature Extraction

Features represent appropriate and unique attributes of an image. It is mainly important when image data is too large to process directly. Images in database are of different size and orientation, and hence feature extraction is crucial task of system to

make a unique process for all images. Converting the input image into the set of features is called feature extraction [8]. In pattern recognition, many features have been

proposed for image representation. There are mainly high level and low level feature

which correspond to user and image perspective respectively. In low level features,

color, shape, texture, etc. are most common features. Texture is an significant feature

in images that can be noticed easily. In the proposed work, we have extracted texture

features of image using local patterns. Local patterns work with the local intensity

of each pixel in image, and transform the whole image into a pattern map.

Feature extraction is performed directly on images. After the pre-processing of the

input script images, next phase is to carry out the extraction and selection of different

features. It is a very crucial phase for the recognition system. Computation of good

features is really a challenging task. The term “good” signifies the features which

are good enough to capture the maximum variability among inter-classes and the

minimum variability within the intra-classes and still computationally easy. In this

work, LBP (local binary pattern), CS-LBP (center symmetric local binary pattern)

and DLEP (directional local extrema pattern) features of both training and testing

data for each script were extracted and studied. All three local patterns are extracted

from grayscale version of original image. Brief description of each of the local pattern is given below:

3.1 Local Binary Pattern (LBP)

Ojala et al. proposed local binary pattern [5] in which, each pixel of the image is

considered as a center pixel for calculation of pattern value. A neighborhood around

314

M. Verma et al.

each center pixel is considered and local binary pattern value is computed. Formulation of LBP for a given center pixel Ic and neighboring pixel In is as follows:

LBPP,R (x1 , x2 ) =

P−1

∑

2n × T1 (In − Ic )

(1)

n=0

{

T1 (a) =

H(L) ∣LBP =

1 a≥0

0 else

m

n

∑

∑

x1 =1 x2 =1

L ∈ [0, (2 − 1)]

T2 (LBP(x1 , x2 ), L);

(2)

P

{

T2 (a1 , b1 ) =

1

0

a1 = b1

else

(3)

LBPP,R (x1 , x2 ) computes the local binary pattern of pixel Ic , where number of

neighboring pixels and the radius of circle taken for computation are denoted as P

and R and (x1 , x2 ) are coordinates of pixel Ic . H(L) computes the histogram of local

binary pattern map where m × n is the image size (Eq. 2).

3.2 Center Symmetric Local Binary Pattern (CSLBP)

Center-symmetric local binary patterns is modified form of LBP that calculated the

pattern based on difference of pixels in four different directions. Mathematically,

CSLBP can be represented as follows:

(P∕2)−1

CSLBPP,R =

∑

2n × T1 (In − In+(P∕2) )

(4)

n=0

H(L) ∣CSLBP =

m

n

∑

∑

x1 =1 x2 =1

T2 (CSLBP(x1 , x2 ), L);

(5)

L ∈ [0, 5]

where In and In+(P∕2) correspond to the intensity of center-symmetric pixel pairs on

a circle of radius R with number of neighboring pixels P. The radius is set to 1 and

the number of neighborhood pixels are taken as 8. More information about CSLBP

can be found in [3].

3.3 Directional Local Extrema Pattern (DLEP)

The Directional Local Extrema Patterns (DLEP) are used to compute the relationship

of each image pixel with its neighboring pixels in specific directions [4]. DLEP has

been proposed for edge information in 0◦ , 45◦ , 90◦ and 135◦ directions.

Script Identification in Natural Scene Images: A Dataset and Texture-Feature . . .

315

I ′ (i ) = In − Ic ∀

(6)

n = 1, 2, … , 8

′

D𝜃 (Ic ) = T3 (Ij′ , Ij+4

) ∀𝜃 = 0◦ , 45◦ , 90◦ , 135◦

′

T3 (Ij′ , Ij+4

)=

{

∀

1

0

j = (1 + 𝜃∕45)

Ij′

′

× Ij+4

≥0

else

(7)

{

}

|

DLEPpat (Ic ))| = D𝜃 (Ic ); D𝜃 (I1 ); D𝜃 (I2 ); … D𝜃 (I8 )

|𝜃

DLEP(Ic )|𝜃 =

8

∑

2n × DLEPpat (Ic )|𝜃 (n)

(8)

n=0

H(L) ∣DLEP(𝜃) =

m

n

∑

∑

x1 =1 x2 =1

T2 (DLEP(x1 , x2 ) ∣𝜃 , L);

L ∈ [0, 511]

where DLEP(Ic )|𝜃 is the DLEP map of a given image and H(L) ∣DLEP(𝜃) is histogram

of the extracted DLEP map.

For all three features (LBP, CSLBP and DLEP) final histogram of pattern map

work as a feature vector of image. Later, the feature vector for all scripts of training

and testing data was made for experimental purpose.

4 Classifiers

After feature extraction, classifier is used to differentiate scripts into different classes.

In the proposed work, script classification has been done using two well-known classifiers, i.e., k-NN and SVM classifier.

4.1 k-NN Classifier

Image classification is based on image matching, and it is calculated by feature

matching. After feature extraction, similarity matching has been observed for testing

image. Different distance measuring techniques are Canberra distance, Manhattan

distance, Euclidean distance, chi-square distance, etc. In the proposed work, the best

results were found from the Euclidean distance measure.

(

D(tr, ts) =

) 12

L

∑

|

2|

|(Ftr (n) − Fts (n)) |

|

|

n=1

(9)

316

M. Verma et al.

Distance measures of a testing image from each training image are computed and

sorted. Based on sorted distances k nearest distance measures are selected as good

matches.

4.2 SVM Classifier

A Support Vector Machine (SVM) is a discriminative classifier formally defined

by a separating hyperplane. A classification task usually involves separating data

into training and testing sets. The goal of SVM is to produce a model (based on the

training data) which predicts the target values of the test data given only the test data

attributes. Though new kernels are being proposed by researchers, beginners may

find in SVM books the following four basic kernel [13].

Linear

K(zi , zj ) = zTi zj

(10)

Polynomial

K(zi , zj ) = (𝛾zTi zj + r)d , 𝛾 > 0

(11)

Radial basis function (RBS)

K(zi , zj ) = exp(−𝛾‖zi −

Sigmoid

zj ‖2 ), 𝛾 > 0

K(zi , zj ) = tanh(𝛾zTi zj + r)

(12)

(13)

Here 𝛾, r and d are kernel parameters.

5 Experimental Results and Discussion

5.1 Dataset Details

We evaluate our algorithm on the ‘Station boards’ data set. The results using texturebased features with k-NN and SVM classifier are studied in this section.

5.2 Algorithm Implementation Details

Initially the images were present in the form of station boards, out of which each

word of different script was extracted. Later, different features were extracted out of

these images such as CS-LBP, LBP and DLEP. These feature vectors of have been

used to train and test the images together with support vector machine (SVM) or k

nearest neighbour (k-NN) to classify the script type.

Script Identification in Natural Scene Images: A Dataset and Texture-Feature . . .

317

5.3 Comparative Study

We compare the results of SVM and k-NN for identification of 5 scripts. In the experiments, cross validation with 9:1 ratio has been adopted. Testing image set of 10

images and 90 training images have been taken for each script. During experiment,

different set of testing images has been chosen and average result has been obtained

from all testing sets. In each experiment, 50 images are used as test images and 450

images are total training images. We use multi-class SVM classifier with different

kernels to get better results. In SVM classifier, Gaussian kernel with Radial Basis

Function has given better performance than other kernels. The main reason for poor

accuracy of k-NN is the less number of samples for training as it requires large training data base samples to improve the accuracy.

In k-NN, we found the distance between the feature vectors of training and testing data using various distance measures, whereas more computations are required

in SVM such as kernel processing and matching feature vector with different parameter settings. The k-NN and SVM represent different approaches to learning. Each

approach implies different model for the underlying data. SVM assumes there exist

a hyper-plane separating the data points (quite a restrictive assumption), while k-NN

attempts to approximate the underlying distribution of the data in a non-parametric

fashion (crude approximation of parsen-window estimator).

The SVM classifier gave 84 % for LBP and 80.5 % for DLEP features whereas

k-NN gave 64.5 % accuracy for LBP feature. Results for both k-NN and SVM are

given in Table 1. The reason for poorer results for the k-NN as compared to SVM is

that the extracted features lack in regularity between text patterns and these are not

good enough to handle broken segments [10].

Some images are shown in Fig. 3 for which the proposed system has identified

correctly. First and fourth images are very noisy and hard to understand. Second

image is not horizontal and tilted with a small angle. Our proposed method worked

well for these kind of images. Few more images have been shown in Fig. 4 for which

Table 1 Comparative results

of different algorithms

Fig. 3 Correctly identified

images

Method

k-NN (%)

SVM (%)

LBP

CSLBP

DLEP

64.5

54.5

62.5

84

57

80.5

318

M. Verma et al.

Fig. 4 Wrong identified

images

Table 2 Confusion matrix

with LBP feature and SVM

classification

Predicted/Actual

English Hindi Odia

Telugu

Urdu

English

Hindi

Odia

Telugu

Urdu

90

5

10

0

0

0

2.5

17.5

82.5

2.5

2.5

2.5

0

0

97.5

7.5

77.5

0

7.5

0

0

12.5

72.5

10

0

the system could not identify the accurate script. Most of the images in this category

are very small in size. Hence, the proposed method does not work well for very small

size images. Confusion matrix for all scripts used in this database is shown in Table 2.

It shows that texture feature based method worked very well for English and Urdu

scripts and average for other scripts.

6 Conclusion

In this work, we presented a dataset of multi-lingual scripts and performance evaluation of script identification in this dataset using texture features. A ‘Station Signboard’ database that contains railway sign-boards written in 5 different Indic scripts

is used for texture-based feature evaluation. The images contain challenges like

occlusion, perspective distortion, illumination effect, etc. Texture feature analysis has

been done using well-known local pattern features that provide fine texture details.

We implemented two different frameworks for image classification. With a proper

learning process, we could observe that SVM classification outperformed k-NN classification. In future, we plan to include more scripts in our dataset. We hope that this

work will be helpful for the research towards script identification in scene images.

References

1. Chanda, S., Franke, K., Pal, U.: Text independent writer identification for oriya script. In:

Document Analysis Systems (DAS), 2012 10th IAPR International Workshop on. pp. 369–

373. IEEE (2012)

Script Identification in Natural Scene Images: A Dataset and Texture-Feature . . .

319

2. Ghosh, D., Dube, T., Shivaprasad, A.P.: Script recognition–a review. Pattern Analysis and

Machine Intelligence, IEEE Transactions on 32(12), 2142–2161 (2010)

3. Heikkilä, M., Pietikäinen, M., Schmid, C.: Description of interest regions with local binary

patterns. Pattern recognition 42(3), 425–436 (2009)

4. Murala, S., Maheshwari, R., Balasubramanian, R.: Directional local extrema patterns: a new

descriptor for content based image retrieval. International Journal of Multimedia Information

Retrieval 1(3), 191–203 (2012)

5. Ojala, T., Pietikäinen, M., Mäenpää, T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. Pattern Analysis and Machine Intelligence, IEEE

Transactions on 24(7), 971–987 (2002)

6. Pal, U., Sinha, S., Chaudhuri, B.: Multi-script line identification from indian documents. In:

Proceedings of Seventh International Conference on Document Analysis and Recognition. pp.

880–884. IEEE (2003)

7. Phan, T.Q., Shivakumara, P., Ding, Z., Lu, S., Tan, C.L.: Video script identification based on

text lines. In: International Conference on Document Analysis and Recognition (ICDAR). pp.

1240–1244. IEEE (2011)

8. Shi, B., Yao, C., Zhang, C., Guo, X., Huang, F., Bai, X.: Automatic script identification in the

wild. In: Proceedings of ICDAR. No. 531–535 (2015)

9. Shijian, L., Tan, C.L.: Script and language identification in noisy and degraded document

images. Pattern Analysis and Machine Intelligence, IEEE Transactions on 30(1), 14–24 (2008)

10. Shivakumara, P., Yuan, Z., Zhao, D., Lu, T., Tan, C.L.: New gradient-spatial-structural features

for video script identification. Computer Vision and Image Understanding 130, 35–53 (2015)

11. Singhal, V., Navin, N., Ghosh, D.: Script-based classification of hand-written text documents in a multilingual environment. In: Proceedings of 13th International Workshop on

Research Issues in Data Engineering: Multi-lingual Information Management (RIDE-MLIM).

pp. 47–54. IEEE (2003)

12. Sun, Q.Y., Lu, Y.: Text location in scene images using visual attention model. International

Journal of Pattern Recognition and Artificial Intelligence 26(04), 1–22 (2012)

13. Ullrich, C.: Support vector classification. In: Forecasting and Hedging in the Foreign Exchange

Markets, pp. 65–82. Springer (2009)

View publication stats