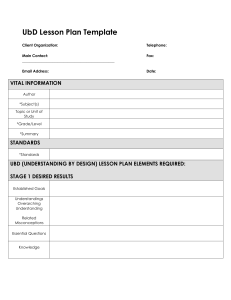

GP Wiggins, J McTighe - Understanding by Design, 2nd Edition (2005)
