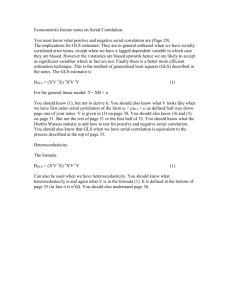

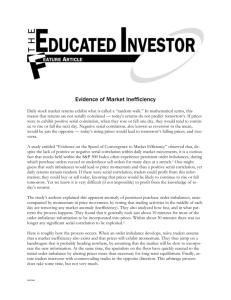

Machine learning trading using S&P 500 stocks

This thesis is submitted in accordance with the requirements of Xi'an Jiaotong-Liverpool University for the Master's degree in Financial Mathematics

By Matthew Brice Delikatny

Department of Financial Mathematics
Xi'an Jiaotong-Liverpool University
December 2020

Acknowledgments

I would like to thank Ahmet Goncu, who guided me through my thesis and encouraged me to pick a topic that I was extremely passionate about. I am extremely grateful for all the time Ahmet gave to support me through my thesis, and for the knowledge he shared, which will benefit me as a financial professional. I have to thank my parents, who have been incredibly loving and unbelievably supportive of me and all my dreams. Words cannot express the love they have given me and the sacrifices they have made to help me achieve my dream of becoming a quant. I would like to thank my loving wife for supporting me throughout my studies and encouraging me to keep going even during difficult times. Finally, I would like to thank the other professors in the department, who were incredibly supportive and helpful in my academic journey, as well as XJTLU for accepting me as a master's student in Financial Mathematics.

Abstract

This thesis has two main parts: testing the weak-form efficient market hypothesis for stocks in the S&P 500, and testing machine learning algorithms on S&P 500 stocks to assess the predictability of their returns. The weak-form efficiency analysis applies several tests over the full period from January 3rd, 2010, to June 3rd, 2020: the runs test, the Kolmogorov–Smirnov goodness-of-fit test, the Ljung-Box Q (LBQ) test for autocorrelation, and the variance ratio test.
Over the entire period, these tests can give contradictory results: the sample spans a long time, and brief periods of gross market inefficiency can lead a test to declare the whole market inefficient. A newer concept of market efficiency, the Adaptive Markets Hypothesis (AMH) proposed by Andrew Lo in 2004, was also applied to the stock data. The AMH asserts that market efficiency varies cyclically over time. Comparing the S&P 500 stocks using first-order autocorrelations shows that their market efficiency does fluctuate cyclically over time. When machine learning algorithms are applied to S&P 500 stocks, some are more effective than others at predicting the market's movements; the Support Vector Machine (SVM) and the Gradient Boosting Classifier (GB) were the most profitable. The machine learning algorithms were not more profitable than the buy and hold strategy; however, they had lower drawdowns and higher Sharpe ratios.

Contents

1.0 Introduction
2.0 Literature review
2.1 Market Efficiency
2.1.1 Efficient Market Hypothesis (EMH)
2.1.2 Adaptive Market Hypothesis
2.2 Machine Learning Algorithms
2.3 Backtesting Machine Learning Algorithms
3.0 Methodology
3.1 Efficient Market and Adaptive Market Hypothesis
3.1.1 Vratio
3.1.5 First Order Autocorrelation
3.2 Machine Learning Methodology
3.2.1 Logistic Regression
3.2.2 Support Vector Machine
3.2.3 Decision Trees
3.2.4 Random Forest
3.3 Features
3.3.1 Technical indicators
3.3.2 Economic features
4.0 Data
5.0 Results
5.1 Efficient Market Hypothesis
5.2 Adaptive Market Hypothesis
5.3 Machine Learning
5.4 Backtest
6.0 Conclusion
7.0 References
8.0 Appendix
8.1 Market Efficiency Tables
8.2 Machine learning results

List of Figures

Figure 1 - Serial Correlation Percent of the Standard & Poor's (S&P) Composite Index
Figure 2 - Piecewise analysis of Machine Learning Algorithms (Ryll & Seidens, 2020)
Figure 3 - Extra Trees Classifiers
Figure 4 - Feature Importance of Technical Indicators
Figure 5 - MACD example
Figure 6 - A summary of ML papers using macroeconomic predictors
Figure 7 - Important factors for U.S. major indices & sectors
Figure 8 - Box Plot of Mean Daily Log Returns of All Stocks
Figure 9 - Heat Map of Economic Indicators
Figure 10 - Serial Correlations and Adjusted Close Price of S&P 500 over Time
Figure 11 - BAC Vratio p-Values over Time
Figure 12 - Runs Test for BAC over Time
Figure 14 - Portfolio Value over Time

List of Tables

Table 1 - Adaptive Market Hypothesis Studies
Table 2 - Backtesting Literature Review
Table 3 - Machine Learning Algorithms Used
Table 4 - Technical Indicators Used by Academics
Table 5 - T-Test of Machine Learning Algorithms against a Random Walk
Table 6 - Backtest Results of Machine Learning Algorithms
Table 7 - Vratio Test over 10 Years
Table 8 - LBQ Test
Table 9 - KS Test
Table 10 - TS-ADF Test
Table 11 - Vratio 60-Day Rolling Window of Stocks
Table 12 - Ljung-Box Q-Test for All Stocks
Table 13 - Runs Test for All Stocks
Table 14 - Machine Learning Algorithms Using Technical Analysis
Table 15 - Machine Learning Algorithms Using Technical Analysis and Economic Indicators

1.0 Introduction

In the financial world, everyone is chasing the next big strategy that will generate consistent profits, and the current trend is to apply machine learning algorithms to the stock market. This thesis first examines the market efficiency of the 90 selected stocks.
Assessing a stock's efficiency is essential to determine whether its price moves randomly. If a stock follows a random walk, a machine learning algorithm will not be able to accurately predict its next movement. The market efficiency tests used here (the runs test, the Kolmogorov–Smirnov goodness-of-fit test, the Ljung-Box Q (LBQ) test for autocorrelation, and the variance ratio test) show that the stocks were not efficient at all times, which leaves room for profits. Further analysis using the Adaptive Markets Hypothesis (AMH) shows that market efficiency varies over time. The markets were very inefficient during periods of uncertainty; the recent COVID-19 crisis is an excellent example of market inefficiency and of a period in which machine learning algorithms could be profitable. The machine learning algorithms used cover a wide range of classification, regression, and clustering methods. Each algorithm predicts, one period ahead, whether the stock will move up or down: if the prediction is correct, the strategy makes money, and if it is wrong, the strategy loses money. The algorithms' average accuracy was around 50 percent, and their average precision, recall, and F-score were 48, 48, and 41 percent, respectively. Adding economic data to the technical analysis data did not significantly improve accuracy, precision, recall, or F-score, possibly because this publicly available data is already priced into the stocks. It is common practice to backtest a strategy before using it to trade on the stock market. In backtests of all the machine learning algorithms, most were not more profitable than a buy and hold strategy; however, the algorithms had lower drawdowns and higher Sharpe ratios than buy and hold.
2.0 Literature review

2.1 Market Efficiency

An efficient market is one in which prices fully reflect all available information. Fama defines three levels of efficiency: weak form, semi-strong form, and strong form (Fama, 1995). In a weak-form efficient market, past price movements and trading volumes are already reflected in the stock's price and cannot be used to predict its future direction. In a semi-strong form efficient market, all publicly available information is incorporated into the prices of all stocks in the market, and price changes to new equilibrium levels reflect that information. Finally, in a strong-form efficient market, all information, whether public or private, is accounted for in the stock's price.

2.1.1 Efficient Market Hypothesis (EMH)

The concept of market efficiency is a cornerstone of finance. The primary empirical approach to market efficiency is to measure the statistical dependence between successive price changes. If no statistical dependence is found, the price changes are random. This lack of dependence is evidence in favor of the weak form of the EMH, which implies that no profitable trading strategy can be derived from past prices. If statistical dependence is uncovered, prices do not change randomly and a trading strategy can be built around that fact; in that case, the weak form of the EMH does not hold. Empirical evidence on weak-form efficiency is mixed. Cooper (1982) studied world stock markets using monthly, weekly, and daily data for 36 financial markets, testing the validity of the random walk hypothesis with correlation analysis, runs tests, and spectral analysis. For the U.S. and U.K. stock markets, the evidence supported the random walk hypothesis. Seiler and Rom (1997) examined the behavior of the U.S.
market's daily stock returns from February 1885 to July 1962, partitioned annually. Applying Box-Jenkins analysis to each of the 77 years, they found that changes in historical stock prices were completely random. In contrast, Fama (1965), using 30 U.S. companies of the Dow Jones, found evidence of dependence in price changes: the first-order autocorrelation of daily returns was positive for 23 of the 30 stocks and significant for 11 of them. Lanne and Saikkonen (2004) analyzed monthly excess U.S. stock returns from January 1946 to December 2002. Their results indicated the presence of conditional skewness in stock returns, which suggests informational inefficiency, meaning that stock prices could be predicted with a fair degree of reliability.

2.1.2 Adaptive Market Hypothesis

The EMH asserts that market prices incorporate all publicly available information, and various researchers in behavioral economics and finance have challenged this hypothesis. They argue that markets are not rational but are instead driven by fear and greed. In 2004, Andrew Lo proposed a new framework that reconciles market efficiency with these behavioral alternatives by applying the principles of evolution to economic interactions. Lo called this idea the "Adaptive Markets Hypothesis" (AMH). Lo and other researchers have stated that the AMH implies complex market dynamics, such as cycles, herding, bubbles, crashes, trends, and other phenomena in financial markets. Using first-order autocorrelations, their papers emphasize that market efficiency varies over time; they showed that the market was more efficient in 1950 than in the early 1990s. As an example, Lo computed the rolling first-order autocorrelation of monthly returns of the Standard & Poor's (S&P) Composite Index from January 1871 to April 2003.
Figure 1 - Serial Correlation Percent of the Standard & Poor's (S&P) Composite Index

The possibility that market efficiency evolves over time is also raised by Self and Mathur (2006): "The true underlying market structure of asset prices is still unknown. However, we do know that, for some time, it behaves according to the classical definition of an efficient market; then, for a period, it behaves in such a way that researchers can systematically find anomalies to the behavior expected of an efficient market." Neely et al. (2009) examined the intertemporal stability of the excess returns generated by several technical trading rules in the foreign exchange market. Their findings were consistent with the AMH, as they showed that both institutional and behavioral factors influence investment strategies and opportunities in the foreign exchange market. Other researchers, such as Brock et al. (1992) and Sullivan et al. (1997), have found evidence that particular classes of technical trading rules made it possible to earn abnormal profits in the stock market. Kim et al. (2011) found that the effect of changing market conditions on DJIA return predictability is consistent with the adaptive market hypothesis, and concluded that return predictability is associated with market volatility and economic fundamentals. Other studies point out the importance of behavioral finance in explaining irrational investor behavior, such as overreaction and overconfidence in particular assets (Barber and Odean, 2001). Apart from the main finding of time-varying weak-form efficiency in stock markets, the rolling window approach has also allowed previous studies to gain additional insights. The AMH also has several concrete implications for the practice of investment management.
Table 1 - Adaptive Market Hypothesis Studies

| Study | Data and Methodology | Results |
|---|---|---|
| Lo (2004) | Monthly returns of the S&P Composite Index analyzed over a five-year rolling window (1871-2003) | The degree of market efficiency varies through time cyclically. Markets adapt to evolutionary selection pressures. |
| Self and Mathur (2006) | Daily returns of G7 national indices (1992-2003) analyzed using the MTAR model and E-G stationarity tests | Revealed the existence of asymmetric stationary periods and anomalous market behavior; the degree of market efficiency varies over time. The market adapts to evolutionary selection pressures. |
| Neely et al. (2009) | Stability of excess returns (1973-2005) to technical trading rules in the foreign exchange market, using ARIMA models | The degree of market efficiency varies through time in a cyclical fashion. Markets adapt to evolutionary selection pressures. |
| Todea et al. (2009) | Daily returns of 6 indices (All Ordinaries Index, Hang Seng Index, BSE National Index, Kuala Lumpur Composite Index, Straits Times Index, and Nikkei 225 Index; 1997-2008) analyzed using a moving average strategy | The degree of market efficiency varies through time cyclically. Markets adapt to evolutionary selection pressures. |
| Kim et al. (2011) | Daily returns of the DJIA (1900-2009) analyzed using automatic variance ratio, automatic portmanteau, and generalized spectral tests | The degree of market efficiency varies through time cyclically. Markets adapt to evolutionary selection pressures. |

2.2 Machine Learning Algorithms

Previous studies have succeeded in building machine learning models that generate trading signals. One method of signal generation applies piecewise linear representation to discover turning points; the buy and sell signals generated from these turning points are then used as the target variable for machine learning models (Luo et al., 2017).
Such research models focus on the transition probabilities from uptrend to downtrend and from downtrend to uptrend to generate profitable trading strategies. Chavarnakul and Enke (2008) find that neural networks can provide more appropriate trading signals based on two technical indicators: the volume adjusted moving average and the ease of movement. Meanwhile, Chang et al. (2009) train artificial neural networks on buy and sell signals generated from experts' experience rather than from the stock prices of each input. A recent study generates trading signals from news-based data and trains the model with a random forest (Feuerriegel and Prendinger, 2016). Building a consistently profitable autonomous trading system has been attempted many times with single machine learning models, such as SVM (Chen and Hao, 2018) and neural networks (Chenoweth et al., 2017). A multi-layer perceptron (MLP) is regarded as capable of approximating arbitrary functions, since the artificial neural network is a highly elaborate analytical technique for modeling complex non-linear functions (Principe et al., 2000). The application of neural networks to stock market trading has been studied for years in many research papers. For instance, Dunis and Williams (2002) concluded that neural network regression models could forecast EUR/USD returns. According to empirical evidence from the Madrid Stock Market, the technical trading rule is always superior to the buy-and-hold strategy during 'bear' and 'stable' market episodes (Fernández-Rodríguez et al., 2000). Chen et al. (2003) reveal that investment strategies based on probabilistic neural networks obtain higher returns than other investment strategies on the Taiwan Stock Index. A newer family of machine learning algorithms is ensemble learning models.
These models are becoming popular across many fields, including credit risk forecasting and price prediction in the oil, stock futures, gold, and electricity markets. Earlier literature indicates that a simple combination of neural networks outperforms individual networks when trading S&P 500 futures contracts (Trippi and DeSieno, 1992). Paleologo et al. (2010) compared a bagged Decision Tree with SVM on credit scoring problems. Chen et al. (2007) build a sophisticated ensemble model of the stock index on locally weighted polynomial regression. Studies have shown multiple classifiers to be superior to a single classifier in prediction accuracy for stock returns (Tsai et al., 2011). The 3-stage nonlinear ensemble model proposed by Xiao et al. (2014) consists of training three neural-network-based models, optimization by improved particle swarm optimization, and SVM prediction. The 3-layer expert trading system of Booth et al. (2014) comprises random forest prediction, ensembles, and signal filtering together with risk management. To predict stock prices, Ballings et al. (2015) generate imbalanced data based on thresholds of annual stock price returns; their results were insensitive to the selected threshold. Ballings et al. (2015) also indicate that exponential smoothing is fundamental for predicting stock price movements with a random forest.

2.3 Backtesting Machine Learning Algorithms

Backtesting is a common practice in algorithmic trading. In a backtest, a developed algorithm is tested against unseen historical data or a simulated random walk. The algorithm uses the data to decide whether to buy or sell a stock or index, and at the end of the backtest the user can see whether implementing the algorithm could have made money.
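The backtest described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the backtest used later in this thesis: `backtest` is a hypothetical helper, the strategy is long/flat (1 = hold the stock over the day, 0 = stay in cash), transaction costs and the risk-free rate are ignored, and the Sharpe ratio is annualized with an assumed 252 trading days. It reports the same quantities this thesis compares (final value, maximum drawdown, Sharpe ratio) for both the signal strategy and buy and hold.

```python
import numpy as np


def backtest(returns, signals, periods_per_year=252):
    """Compare a long/flat signal strategy with buy and hold (hypothetical helper).

    returns[t] : simple return of the asset over day t
    signals[t] : 1 if the position is held over day t, else 0; it must be
                 decided before day t starts to avoid look-ahead bias.
    Transaction costs and the risk-free rate are ignored (assumptions).
    """
    returns = np.asarray(returns, dtype=float)
    signals = np.asarray(signals, dtype=float)
    strategy = signals * returns  # earn the asset return only while invested

    def summarize(r):
        equity = np.concatenate(([1.0], np.cumprod(1.0 + r)))  # portfolio value, starting at 1
        peak = np.maximum.accumulate(equity)                   # running maximum of the equity curve
        max_drawdown = float(np.max(1.0 - equity / peak))      # largest peak-to-trough loss
        std = r.std(ddof=1)
        sharpe = float(np.sqrt(periods_per_year) * r.mean() / std) if std > 0 else float("nan")
        return {"final_value": float(equity[-1]), "max_drawdown": max_drawdown, "sharpe": sharpe}

    return {"strategy": summarize(strategy), "buy_and_hold": summarize(returns)}


# Usage with simulated data; the signals are placeholders, not a trained model.
rng = np.random.default_rng(42)
daily_returns = rng.normal(0.0005, 0.01, 1000)
signals = (rng.random(1000) > 0.5).astype(int)
print(backtest(daily_returns, signals))
```

Staying in cash on "down" predictions is what can lower the drawdown relative to buy and hold, even when total return is lower.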
The table below lists researchers who have backtested machine learning algorithms on the NYSE and the S&P 500.

Table 2 - Backtesting Literature Review

| Author | Market | Period | Machine learning algorithm used | Transaction costs | Result |
|---|---|---|---|---|---|
| Andrada-Félix and Fernández-Rodríguez (2008) | New York Stock Exchange Composite Index | 1993-2002 | Boosting | 0.2 percent per stock | Profitable returns |
| Chavarnakul and Enke (2008) | S&P 500 Index | 1998-2003 | Neural Network | No transaction costs | Profitable returns |
| Chenoweth et al. (2017) | S&P 500 Index | 1982-1993 | Neural Network | Break-even cost at 0.35% | Profitable returns |
| Leigh et al. (2002) | New York Stock Exchange Composite Index | 1981-1996 | Neural Network | None | Profitable returns |

Gauch models have been tested on the NYSE index and were found to be profitable even with conditional skewness in the market (Leigh et al., 2002). In 2008, Andrada-Félix and Fernández-Rodríguez used evolutionary algorithms to try to predict the NYSE and found that these algorithms can be more profitable than a buy and hold strategy, with a lower standard deviation. Neural networks applied to the S&P 500 using well-known technical indicators have been found to beat a buy and hold strategy (Chavarnakul and Enke, 2008). An extensive literature review of 150 papers found that machine learning algorithms often outperform traditional stochastic calculus methods in market forecasting, and that recurrent neural networks outperform both feed-forward neural networks and support vector machines on average. These findings imply the existence of exploitable temporal dependencies in financial time series across multiple asset classes and geographies (Ryll & Seidens, 2020). In Figure 2, piecewise ranking analysis shows the percentage of time one algorithm has beaten another.
The first column corresponds to an artificial neural network (ANN), and its last row shows that the ANN beats the buy and hold (BH) strategy 91 percent of the time. A value of 50 percent means that the two strategies have similar performance. Some comparisons show no data, which the authors attribute to the lack of studies comparing those two algorithms (Ryll & Seidens, 2020).

Figure 2 - Piecewise analysis of Machine Learning Algorithms (Ryll & Seidens, 2020)

3.0 Methodology

3.1 Efficient Market and Adaptive Market Hypothesis

3.1.1 Vratio

The variance ratio test is available in MATLAB as the built-in function vratiotest. It is based on the ratio of variance estimates of one-period returns $r_t = y_t - y_{t-1}$ and of period-$q$ returns $r_t + \dots + r_{t-q+1}$, computed over overlapping horizons. The overlapping horizons increase the efficiency of the estimator and add power to the test. The test can be run under either of two null hypotheses, a random walk with IID innovations or a random walk with heteroscedastic innovations. Under either null, uncorrelated innovations $e_t$ imply that the period-$q$ variance is asymptotically equal to $q$ times the period-one variance. The variance of the ratio, however, depends on the degree of heteroscedasticity and, therefore, on the null ("Variance ratio test for a random walk - MATLAB vratiotest", 2020). Rejection of the null due to dependence of the innovations does not imply that the $e_t$ are correlated: dependence allows nonlinear functions of the $e_t$ to be correlated even when the $e_t$ themselves are not. For example, it can hold that $\operatorname{Cov}[e_t, e_{t-k}] = 0$ for all $k \neq 0$, while $\operatorname{Cov}[e_t^2, e_{t-k}^2] \neq 0$ for some $k \neq 0$ ("Variance ratio test for a random walk - MATLAB vratiotest", 2020). Cecchetti and Lam show that sequential testing using multiple values of $q$ results in small-sample size distortions beyond those that result from the asymptotic approximation of critical values ("Variance ratio test for a random walk - MATLAB vratiotest", 2020).

3.1.5 First Order Autocorrelation

The first-order autocorrelation is a common metric used to test the AMH.
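The two efficiency statistics in Section 3.1 can be sketched in Python. This is an illustrative sketch, not MATLAB's implementation: `variance_ratio` computes a simplified Lo-MacKinlay ratio $VR(q) = \operatorname{Var}(r_t + \dots + r_{t-q+1}) / (q \operatorname{Var}(r_t))$, which is close to 1 under a random walk, with the asymptotic z-statistic under the IID null only (no small-sample bias corrections and no heteroscedasticity-robust variant, so its numbers will not match vratiotest exactly). `rolling_first_order_autocorr` is a hypothetical helper that computes, with pandas, the Pearson correlation between $x_t$ and $x_{t-1}$ inside each 60-day window. Applying it to returns rather than prices, and the simulated data, are assumptions for illustration.

```python
import numpy as np
import pandas as pd


def variance_ratio(prices, q):
    """Simplified Lo-MacKinlay variance ratio VR(q) and z-statistic (IID null).

    Uses overlapping q-period log returns; asymptotic variance of VR(q) is
    2(2q-1)(q-1) / (3qn). No small-sample corrections are applied.
    """
    y = np.log(np.asarray(prices, dtype=float))
    r = np.diff(y)                        # one-period returns r_t = y_t - y_{t-1}
    n = r.size
    mu = r.mean()
    var_1 = np.mean((r - mu) ** 2)        # one-period variance
    r_q = y[q:] - y[:-q]                  # overlapping q-period returns
    var_q = np.mean((r_q - q * mu) ** 2)  # q-period variance
    vr = var_q / (q * var_1)
    z = (vr - 1.0) / np.sqrt(2.0 * (2 * q - 1) * (q - 1) / (3.0 * q * n))
    return vr, z


def rolling_first_order_autocorr(series, window=60):
    """Pearson correlation between x_t and x_{t-1} inside each rolling window."""
    s = pd.Series(series, dtype=float)
    return s.rolling(window).corr(s.shift(1))


# Illustration on simulated data (not the thesis data set).
rng = np.random.default_rng(0)
iid_returns = rng.normal(0.0, 0.01, 5000)
prices = 100.0 * np.exp(np.cumsum(iid_returns))
print(variance_ratio(prices, q=2))         # VR(2) close to 1 for a random walk

ar_returns = np.empty(5000)
ar_returns[0] = iid_returns[0]
for t in range(1, 5000):
    ar_returns[t] = 0.5 * ar_returns[t - 1] + iid_returns[t]  # positively autocorrelated returns
ar_prices = 100.0 * np.exp(np.cumsum(ar_returns))
print(variance_ratio(ar_prices, q=2))      # VR(2) well above 1

rho = rolling_first_order_autocorr(ar_returns, window=60)
print(rho.dropna().mean())                 # near 0.5, biased slightly downward in short windows
```

Plotting `rho` over time is how a cyclical pattern in efficiency, as the AMH predicts, would show up.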
When performing multiple linear regression on a sample of size $n$, there are $n$ error terms, called residuals, defined by $e_i = y_i - \hat{y}_i$. One of the assumptions of linear regression is that the residuals are not autocorrelated, i.e., $\operatorname{cov}(e_i, e_j) = 0$ for all $i \neq j$ ("Sample autocorrelation - MATLAB autocorr", 2020). The autocorrelation (also called serial correlation) between observations is $\operatorname{cov}(e_i, e_j)$; if $\operatorname{cov}(e_i, e_j) \neq 0$ for some $i \neq j$, the data are said to be autocorrelated ("Sample autocorrelation - MATLAB autocorr", 2020). In this thesis, correlations were checked between today's price and yesterday's price using a rolling window of 60 days.

3.2 Machine Learning Methodology

All machine learning algorithms in this thesis were created using the Python package scikit-learn. Scikit-learn is a free machine learning library for Python. It features various classification, regression, and clustering algorithms, including support vector machines, random forests, gradient boosting, k-means, and DBSCAN, and is designed to interoperate with the Python numerical and scientific libraries NumPy and SciPy ("Scikit-learn", 2020). The machine learning algorithms used in this thesis are listed in the table below.

Table 3 - Machine Learning Algorithms Used

| Machine Learning Algorithm | Abbreviation |
|---|---|
| Logistic Regression | LR |
| Logistic Regression (Ridge Regression) | LR-L2 |
| Linear Discriminant Analysis | LDA |
| K-Nearest Neighbors Classifier | KNN |
| Gradient Boosting Classifier | GB |
| AdaBoost Classifier | ABC |
| Random Forest Classifier | RF |
| Support Vector Machine | SVM |
| Support Vector Machine (Perceptron) | SVMP |
| Support Vector Machine (Hinge) | SVMH |
| Multilayer Perceptron | MLP |

3.2.1 Logistic Regression

Logistic Regression is the first and simplest machine learning model used, and it is a handy classifier for classification problems.
The advantage of Logistic Regression is that it gives a binary class probability through a simple probabilistic formula. Logistic Regression does not require strict assumptions such as normally distributed input variables, nor does it require a linear relationship between the input and output variables. The assumptions of Logistic Regression are: 1) the dependent variable must be binary, with discrete, non-overlapping, and identifiable groups; 2) the costs of false positives and false negatives are considered when selecting the optimal cut-off probability (Ohlson, 1980).

3.2.2 Support Vector Machine

The Support Vector Machine (SVM) is the second classifier used. It produces a binary classifier, an optimal separating hyperplane, through a highly non-linear mapping of the input vectors into a high-dimensional feature space (Pai et al., 2011). The idea of SVM is to transform data that is not linearly separable in its original space into a higher-dimensional space in which a simple hyperplane can separate it. SVM, proposed by Vapnik in 1998, generates a classification hyperplane that separates two classes of data with the maximum margin. In SVM, a linear model in the feature space estimates a decision function that corresponds to non-linear class boundaries in the input space, based on the support vectors. Training finds the optimal hyperplane, the one that maximizes the distance between the hyperplane and the closest training points; these closest training points are called support vectors (Kim and Sohn, 2010). SVM has several advantages: 1) there are only two free parameters, the upper bound and the kernel parameter (Shin et al., 2005); 2) the SVM solution is unique, optimal, and global, since training an SVM amounts to solving a linearly constrained quadratic problem (Shin et al., 2005);
3) SVM is based on the structural risk minimization principle; therefore, this type of classifier minimizes an upper bound of the actual risk, whereas other classifiers minimize the empirical risk (Shin et al., 2005).

3.2.3 Decision Trees

A Decision Tree recursively divides the data into subsets until the target attribute is homogeneous within each subset. The Decision Tree classification technique consists of two phases: tree building and tree pruning. Tree building is a top-down process that recursively partitions the data until all items in each partition share the same class label. Tree pruning is a bottom-up process that removes branches to reduce over-fitting, which improves prediction and classification accuracy (Imandoust and Bolandraftar, 2014).

A Decision Tree imitates the way humans reason, which makes it straightforward to understand and interpret. Unlike black-box algorithms such as SVM and MLP, decision trees expose the logic behind each prediction.

3.2.4 Random Forest

The overall objective of a random forest is to combine the predictions of multiple binary decision trees to produce more accurate predictions than any individual model. One advantage of the random forest algorithm is its relative robustness to noisy data. According to Patel et al. (2015), combining sub-sampling with randomized decision trees often produces sounder predictions than a single decision tree. Moreover, the random forest includes methods for balancing errors in data sets with unbalanced class populations: it minimizes the overall prediction error by keeping error rates low on the larger classes while permitting higher error rates on the smaller ones (Breiman, 1999).
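To make the set of models in Table 3 concrete, the sketch below builds one scikit-learn estimator per abbreviation and scores each one on a chronological train/test split. The mapping is an assumption, since the thesis does not list its estimator settings: LR-L2 is read here as `RidgeClassifier`, SVMP and SVMH are read as linear classifiers trained by `SGDClassifier` with the perceptron and hinge losses, all hyperparameters are illustrative, and the data is synthetic rather than the thesis's features.

```python
import warnings

from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import (AdaBoostClassifier, GradientBoostingClassifier,
                              RandomForestClassifier)
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import LogisticRegression, RidgeClassifier, SGDClassifier
from sklearn.metrics import accuracy_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC


def build_models(seed=0):
    """Hypothetical mapping of the Table 3 abbreviations to scikit-learn estimators."""
    return {
        "LR": LogisticRegression(max_iter=1000),
        # Assumption: "Logistic Regression (Ridge Regression)" read as RidgeClassifier.
        "LR-L2": RidgeClassifier(),
        "LDA": LinearDiscriminantAnalysis(),
        "KNN": KNeighborsClassifier(n_neighbors=5),
        "GB": GradientBoostingClassifier(random_state=seed),
        "ABC": AdaBoostClassifier(random_state=seed),
        "RF": RandomForestClassifier(n_estimators=100, random_state=seed),
        # C (upper bound) and gamma (kernel parameter): the two free SVM parameters.
        "SVM": SVC(C=1.0, kernel="rbf", gamma="scale"),
        # Assumption: SVMP / SVMH read as SGD-trained linear classifiers
        # using the perceptron and hinge losses respectively.
        "SVMP": SGDClassifier(loss="perceptron", random_state=seed),
        "SVMH": SGDClassifier(loss="hinge", random_state=seed),
        "MLP": MLPClassifier(hidden_layer_sizes=(32,), max_iter=500, random_state=seed),
    }


def evaluate(X, y, train_frac=0.75, seed=0):
    """Fit every model on the earlier part of the sample and score it on the later part."""
    split = int(len(y) * train_frac)  # chronological split, no shuffling
    X_train, X_test, y_train, y_test = X[:split], X[split:], y[:split], y[split:]
    scores = {}
    for name, model in build_models(seed).items():
        with warnings.catch_warnings():
            warnings.simplefilter("ignore", category=ConvergenceWarning)
            model.fit(X_train, y_train)
        scores[name] = accuracy_score(y_test, model.predict(X_test))
    return scores


if __name__ == "__main__":
    # Synthetic stand-in for the feature matrix and up/down labels.
    X, y = make_classification(n_samples=400, n_features=10, random_state=0)
    for name, acc in evaluate(X, y).items():
        print(f"{name:6s} accuracy = {acc:.3f}")
```

A chronological split is used instead of a shuffled one so that no model is trained on observations that come after its test period, which matters for time-ordered stock data.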
3.3 Features

3.3.1 Technical indicators

Features for each observation are generated from the stock price information (open price, close price, low price, high price, and volume), which means that technical indicators are used as features. Some scholars argue that technical indicators can be used to build profitable trading strategies (Metghalchi et al., 2008).

Table 4 - Technical Indicators Used by Academics

| Technical Indicator | Description | Calculation |
|---|---|---|
| RSI | Relative Strength Index | $100 - \dfrac{100}{1 + SMA(p_{up}, n) / SMA(p_{down}, n)}$ |
| STCK | Fast Stochastic Oscillator | $\%K = \dfrac{p_c - \min(p_l)}{\max(p_h) - \min(p_l)} \times 100$ |
| STCD | Slow Stochastic Oscillator | $\%D = SMA(\%K, N)$ |
| WILLS | Williams %R | $\dfrac{\max(p_h) - p_c}{\max(p_h) - \min(p_l)}$ |
| ROCP | Rate of Change Percentage | $\dfrac{p_{c,t} - p_{c,t-n}}{p_{c,t-n}}$ |
| MACD | Moving Average Convergence Divergence | $EMA_t(p_c, s) - EMA_t(p_c, f)$ |
| OBV | On Balance Volume | $OBV_t = OBV_{t-1} + V_t$ if the close rises, $OBV_{t-1} - V_t$ if it falls ($V_t$ is the volume) |

Some papers report mixed results for technical trading strategies over different trading periods (Schulmeister, 2009). Other scholars argue that technical trading rules are unprofitable once data-snooping bias or transaction costs are taken into account (Marshall et al., 2008). In this thesis, a Python package known as TA, which accepts OHLC data as well as adjusted closes and volume data, was used to generate all the technical analysis data. The package takes this data and outputs 70 well-known technical indicators. To determine which features were the most important in predicting the stock's movement, a feature analysis was performed, and the best approach found was a machine learning algorithm called the Extra Trees Classifier (ETC), which is similar to a Random Forest classifier. The Extra Trees Classifier introduces more variation into the ensemble by changing how the trees are built: the features and split points are selected at random.
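The Extra Trees ranking step can be sketched with scikit-learn's `ExtraTreesClassifier`, whose `feature_importances_` attribute gives impurity-based importances that sum to 1. This is a minimal illustration on synthetic data in which only the hypothetical column `f_signal` drives the label. The thesis's 70 TA features, its number of trees, and its other settings are not reproduced here.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import ExtraTreesClassifier


def rank_features(X, y, n_estimators=300, seed=0):
    """Return Extra Trees impurity-based importances, largest first (they sum to 1)."""
    model = ExtraTreesClassifier(n_estimators=n_estimators, random_state=seed)
    model.fit(X, y)
    return pd.Series(model.feature_importances_, index=X.columns).sort_values(ascending=False)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic features: only f_signal is related to the label.
    X = pd.DataFrame(rng.normal(size=(1000, 4)),
                     columns=["f_signal", "f_noise1", "f_noise2", "f_noise3"])
    y = (X["f_signal"] + 0.1 * rng.normal(size=1000) > 0).astype(int)
    print(rank_features(X, y))
```

In practice, the top-ranked indicators, or those above an importance threshold, would then be kept as model inputs.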
Because split thresholds are chosen at random for each candidate feature, the Extra Trees Classifier is less computationally expensive than a Random Forest.

Figure 3 - Extra Tree Classifiers

Decision Trees show high variance, Random Forests show medium variance, and Extra Trees show low variance. Figure 4 was created by putting all 70 features through the ETC. It shows that the daily returns, together with the standard technical indicators listed in Table 4, are the most important features for predicting the stock's movement.

Figure 4 - Feature Importance

Technical Indicators

Sullivan et al. (1999) divide trading rules into five groups: filter rules, moving averages, support and resistance rules, channel breakouts, and on-balance volume averages. The technical indicators commonly used in academia and industry, namely the relative strength index, stochastic lines, Williams %R, moving average convergence divergence, rate of change percentage, and on-balance volume, are selected from these five categories.

The relative strength index (RSI) is a momentum indicator computed from the ratio of average gains to average losses over a period. It is usually used to identify an overbought or oversold state in the trading of an asset. RSI ranges from 0 to 100. If the RSI is below 30, the asset is considered oversold; when the RSI is above 70, it is considered overbought. Surges and drops in an asset's price can distort the RSI and create false buy or sell signals, so the RSI is best used together with other tools to determine trading signals (Wilder, 1978).

The stochastic lines (STCK, STCD) are composed of two smoothed moving-average lines (%K and %D) that are used to judge buy and sell points. The fast stochastic %K measures, as a percentage, where the last close price lies between the lowest low and the highest high of the last n periods.
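The indicator definitions in Table 4 can be sketched directly in pandas. This is an illustrative implementation, not the TA package used in the thesis. The window lengths are assumptions: 14 for RSI and the stochastic lines, 3 for %D, 10 for ROCP, and the common 12/26/9 MACD spans. RSI uses simple moving averages as in Table 4 rather than Wilder's smoothing. Williams %R is left unscaled as in Table 4, although many platforms multiply it by -100.

```python
import numpy as np
import pandas as pd


def rsi(close, n=14):
    """RSI from simple moving averages of gains and losses (Table 4 form)."""
    delta = close.diff()
    avg_gain = delta.clip(lower=0).rolling(n).mean()
    avg_loss = (-delta).clip(lower=0).rolling(n).mean()
    return 100 - 100 / (1 + avg_gain / avg_loss)


def stochastic(high, low, close, n=14, d=3):
    """Fast %K and its d-period simple moving average %D."""
    lowest, highest = low.rolling(n).min(), high.rolling(n).max()
    k = (close - lowest) / (highest - lowest) * 100
    return k, k.rolling(d).mean()


def williams_r(high, low, close, n=14):
    """(Highest High - Close) / (Highest High - Lowest Low), unscaled as in Table 4."""
    highest, lowest = high.rolling(n).max(), low.rolling(n).min()
    return (highest - close) / (highest - lowest)


def rocp(close, n=10):
    """Percentage change of the close relative to its value n periods ago."""
    return close / close.shift(n) - 1


def macd(close, fast=12, slow=26, signal=9):
    """MACD line, signal line, and crossover flags (+1 crosses above, -1 crosses below)."""
    line = (close.ewm(span=fast, adjust=False).mean()
            - close.ewm(span=slow, adjust=False).mean())
    sig = line.ewm(span=signal, adjust=False).mean()
    cross = (line > sig).astype(int).diff().fillna(0)
    return line, sig, cross


def obv(close, volume):
    """Add volume on up-closes and subtract it on down-closes, starting from 0."""
    direction = np.sign(close.diff()).fillna(0)
    return (direction * volume).cumsum()
```

Each function takes and returns aligned pandas Series, so the outputs can be joined column by column into a feature matrix.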
The theory behind the stochastic lines is that the price tends to close near its high in an upward-trending market and near its low in a downward-trending market. Trading signals occur when the STCK crosses the STCD (Basak et al., 2019).

Williams %R (WILLS) indicates whether a stock is overbought or oversold, and it can be used to discover the peak or trough of the stock price. If the difference between the lowest price and the close price is large (or small), the stock is overvalued (or undervalued), and traders should wait for a short (or long) trading signal to sell (or buy) the stock (Chang et al., 2009). WILLS is therefore used to help identify turning points by examining whether a stock is overvalued or undervalued.

Moving average convergence divergence (MACD) tracks the fluctuation of a stock's price using the difference between exponential moving averages of the last n observations. When trading, traders look for a signal-line crossover, which occurs when the MACD line and its signal line (a moving average of the MACD) cross each other. A cross of the MACD above the signal line is read as a signal to buy, and a cross below it as a signal to sell.

Figure 5 - MACD example

The rate of change percentage (ROCP) of a closing-price series $p_c$ measures the percentage change in price over n periods. ROCP can be used to confirm price moves or detect divergences (Larson, 2012).

On balance volume (OBV) uses positive and negative volume flow to predict stock price changes. It adds the day's volume when the close has increased and subtracts it when the close has decreased (Granville, 1976).

3.3.2 Economic features

Along with technical indicators, economic indicators can also help machine learning algorithms predict the stock market's movement. A fair amount of work has been done in this field.
The figure below summarizes studies that applied macroeconomic factors to indexes such as the S&P 500, the Taiwan Stock Index, and the NIKKEI 225.

Figure 6 - A summary of ML papers using macroeconomic predictors

In these studies, the macroeconomic indicators are strongly correlated with the target variable, which could be the stock movement, excess stock returns, or volatility. The accuracies of the machine learning algorithms range from 66 to 79 percent. Bin Weng and his colleagues analyzed major U.S. stock indices and financial sectors using monthly closing prices. They performed a feature analysis in which each feature was ranked between 0 and 1, and then chose the variables with a feature importance greater than 0.6 (Weng et al., 2018).

Figure 7 - Important factors for U.S. major indices & sectors

4.0 Data

All analysis is done on S&P 500 stocks. The stocks were selected by their average daily traded volume from January 3rd, 2010, to June 3rd, 2020. Instead of analyzing all 505 stocks in the S&P 500, 90 stocks were selected: the 30 with the highest traded volume, 30 around the average, and the 30 with the lowest traded volume. A box plot of the mean daily log returns of all 90 stocks can be seen below.

Figure 8 - Box Plot of Mean Daily Log Returns of All Stocks

The histogram below gives further insight into the log returns: the distribution is roughly normal but has very heavy tails. This is not unusual for stock data. Stock returns are well known to be heavy-tailed, and some authors have modelled them with Cauchy or other stable distributions. Taking log returns is an attempt to normalize the data; most of the data does follow a normal distribution, but heavy tails remain.

All pricing data was acquired using a Python package called YFinance. YFinance allows the user to specify the desired timeframe and stock symbol.
From there, the package uses Yahoo Finance to collect the Open, High, Low, Close, Adjusted Close, and Volume. Macroeconomic data was collected from Federal Reserve Economic Data (FRED) and Quandl. The FRED data included M1, M2, M3, the Effective Federal Funds Rate (EFFR), the ten-year Treasury Constant Maturity Rate (10YT), 3-Month Treasury Bills (3MTB), the Interest Rate Discount Rate for the United States (IRDR), the unemployment rate (unemp), the Consumer Price Index (CPI), the Manufacturing Composite Index (MCI), the Non-Manufacturing Composite Index (NMI), and investor sentiment data on how many investors are bullish, bearish, or neutral on the market. The FRED records this data on a monthly basis. The correlations between the variables are plotted as a heatmap below, which shows that many of the variables are correlated with one another.

Figure 9 - Heat Map of Economic Indicators

Further analysis can be done with a box plot. First, all the economic indicators need to be normalized because their orders of magnitude differ greatly. Once this has been done, a box plot can be applied to the economic indicators, as shown in the figure below.

The normalized values vary widely across the full range from 0 to 1. The MCI, Bull, Bear, Neu, CS, and NMI have smaller ranges because of how those values are calculated: they tend to move around an average value, which makes their movements less noticeable.

Quandl is a data management company that provides economic as well as alternative data. It sells to hedge funds, discretionary funds, fintech companies, corporations, sell-side firms, and academics. The data used from Quandl was the University of Michigan Consumer Survey Index of Consumer Sentiment, the AAII Investor Sentiment Data, the Manufacturing Composite Index, and the Non-Manufacturing Index.
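The data-preparation steps in this section can be sketched as three small pandas helpers. One picks 30 high-, 30 average-, and 30 low-volume tickers, one converts adjusted closes to log returns, and one min-max normalizes the economic indicators to the range 0 to 1 before plotting. The function names are hypothetical, "around the average" is assumed to mean closest to the mean volume, and the YFinance download itself is omitted because it needs network access.

```python
import numpy as np
import pandas as pd


def select_by_volume(avg_volume, k=30):
    """Split tickers into k high-, k average-, and k low-volume names.

    avg_volume: pd.Series of mean daily traded volume indexed by ticker.
    Assumption: "average" means closest to the cross-sectional mean volume.
    """
    ranked = avg_volume.sort_values(ascending=False)
    high = list(ranked.index[:k])
    low = list(ranked.index[-k:])
    middle_pool = avg_volume.drop(high + low)
    average = list((middle_pool - avg_volume.mean()).abs().nsmallest(k).index)
    return {"high": high, "average": average, "low": low}


def log_returns(adj_close):
    """Daily log returns ln(P_t / P_{t-1}) from a DataFrame of adjusted closes."""
    return np.log(adj_close).diff().dropna(how="all")


def min_max_normalize(df):
    """Rescale each column to [0, 1] so indicators of different magnitude are comparable."""
    return (df - df.min()) / (df.max() - df.min())


if __name__ == "__main__":
    # Hypothetical indicator values, for illustration only.
    macro = pd.DataFrame({"CPI": [250.0, 255.0, 260.0], "UNEMP": [3.5, 14.7, 8.0]})
    print(min_max_normalize(macro))
    print(macro.corr())  # correlation matrix of the kind shown in a heatmap
```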
5.0 Results

5.1 Efficient Market Hypothesis

A commonly used test of the Efficient Market Hypothesis (EMH) is the variance ratio (Vratio) test. The MATLAB implementation takes the data and outputs h, pValue, stat, cValue, and ratio ("Variance ratio test for random walk - MATLAB vratiotest", 2020). The essential value is h, which simply compares the calculated p-value with the default significance level alpha = 0.05. If the p-value is greater than 0.05, the random walk hypothesis cannot be rejected and the data behaves like a random walk; if it is less than 0.05, the data is not a random walk. Table 7 in the Appendix shows that most stocks are not random. This lack of randomness means that most of the stocks are not market efficient, which is not surprising, although papers reporting efficient markets can also be found in the literature. Over ten years, it is reasonable for this test to report that the market is inefficient: if there is any herding, investor overconfidence, or loss aversion during the ten years, the dataset will no longer be random. Researchers have argued that this is the weakness of testing the EMH over long periods: the test does not accurately detect when the market was efficient and when it was not.

The Ljung–Box Q (LBQ) test tests the overall randomness of a series based on a number of lags. In MATLAB, the default number of lags is 20, which for the data used here means that autocorrelations up to 20 trading days of adjusted close prices are tested. Table 8 in the Appendix shows that the data has significant ARCH effects as well as serial correlation. These results are expected given the Vratio test: most of the stocks do not move randomly, which suggests they may be partly predictable. Serial correlation is also expected because if a stock is doing well today, it is more likely to do well tomorrow.
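The Ljung–Box statistic behind the LBQ results can be sketched in NumPy as $Q = n(n+2)\sum_{k=1}^{h} \hat\rho_k^2/(n-k)$, compared with a chi-square distribution with h degrees of freedom. This is a minimal version that assumes no degrees-of-freedom adjustment for a fitted model and uses the 20-lag default mentioned above. Applying the same function to squared demeaned returns is one common way to look for ARCH effects.

```python
import numpy as np
from scipy.stats import chi2


def ljung_box(x, lags=20, alpha=0.05):
    """Ljung-Box Q test for autocorrelation up to `lags`.

    Returns (h, p_value, Q), where h = 1 rejects the null of no autocorrelation.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    xc = x - x.mean()
    denom = np.sum(xc ** 2)
    q = 0.0
    for k in range(1, lags + 1):
        rho_k = np.sum(xc[k:] * xc[:-k]) / denom  # sample autocorrelation at lag k
        q += rho_k ** 2 / (n - k)
    q *= n * (n + 2)
    p_value = chi2.sf(q, df=lags)
    return int(p_value < alpha), p_value, q
```

For a series of daily log returns `r`, `ljung_box(r)` tests serial correlation, and `ljung_box((r - r.mean()) ** 2)` gives a rough check for volatility clustering.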
Similarly, serial correlation implies that a stock that is not doing well today is more likely not to do well tomorrow. In the literature, this phenomenon is described as stocks having memory.

The one-sample Kolmogorov–Smirnov test (KS test) and the Anderson–Darling test (AD test) can be used to check the data for normality. Both tests compare the empirical distribution of the data with a normal distribution and output a p-value and an h value. The h value is 0 or 1, indicating that the null hypothesis of a normal distribution is not rejected or is rejected, respectively; it is determined by comparing the p-value with the default alpha of 0.05. Table 9 and Table 10 in the Appendix show that none of the stocks follow a normal distribution. This is consistent with the literature, where stock returns are known to be heavy-tailed. Industry uses the log returns of stocks to make returns closer to normal, so that statistical methods for risk analysis can be applied. To put into perspective how far from a normal distribution the stock market can move: during the 1987 stock market crash, the S&P 500 fell by more than 20 percent in a single day. Professor Henry T.C. Hu of the University of Texas calculated that this was a "25 standard deviation event," meaning that "if the stock market never took a holiday from the day the earth was formed, such a decline was still unlikely" (Burch, 2020).

The Augmented Dickey–Fuller test (ADF test) tests whether a dataset is stationary. A non-stationary dataset is difficult to model and needs to be made stationary first. When the ADF test is applied to stock prices, Table 9 in the Appendix shows that the prices are not stationary, so the data would need to be made stationary before making predictions with models such as ARIMA. When log returns are taken for each stock, Table 10 in the Appendix shows that the log returns are stationary.
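The normality and stationarity checks can be sketched using SciPy's `kstest` and `anderson` for the KS and AD tests and a plain least-squares Dickey–Fuller regression. These are simplifications. Returns are standardized with their sample mean and standard deviation before the KS test, so its p-value is only approximate. The Dickey–Fuller regression includes a constant but no lagged differences, so it is the basic rather than the augmented form. The value -2.86 is the usual asymptotic 5 percent critical value for that case.

```python
import numpy as np
from scipy import stats

DF_CRIT_5PCT = -2.86  # asymptotic 5% Dickey-Fuller critical value (constant, no trend)


def normality_tests(returns):
    """KS (alpha = 0.05) and Anderson-Darling (5% level) normality checks.

    h = 1 means the null hypothesis of normality is rejected.
    """
    x = np.asarray(returns, dtype=float)
    z = (x - x.mean()) / x.std(ddof=1)  # standardize with sample moments
    ks = stats.kstest(z, "norm")
    ad = stats.anderson(z, dist="norm")
    # critical_values line up with significance_level (percent): 15, 10, 5, 2.5, 1
    ad_crit = ad.critical_values[list(ad.significance_level).index(5.0)]
    return {"ks_h": int(ks.pvalue < 0.05), "ks_p": ks.pvalue,
            "ad_h": int(ad.statistic > ad_crit), "ad_stat": ad.statistic}


def dickey_fuller_t(y):
    """t-statistic of gamma in  dy_t = a + gamma * y_{t-1} + e_t  (no lagged differences)."""
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)
    X = np.column_stack([np.ones(len(dy)), y[:-1]])
    beta, *_ = np.linalg.lstsq(X, dy, rcond=None)
    resid = dy - X @ beta
    s2 = resid @ resid / (len(dy) - X.shape[1])  # residual variance
    cov = s2 * np.linalg.inv(X.T @ X)
    return beta[1] / np.sqrt(cov[1, 1])
```

A statistic below `DF_CRIT_5PCT` rejects the unit root at roughly the 5 percent level, which is what one would expect for log returns but not for price levels.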
Taken together, these tests indicate that the stock market is inefficient and could be modeled with ARIMA models. The danger of looking at very long periods, however, is that it gives a trader false confidence that the market is predictable at all times. Examining shorter periods in different parts of the data gives different results for how efficient the market is: most of the time the market is efficient, but at times it is not. The Adaptive Market Hypothesis helps reconcile these two contradictory ideas.

5.2 Adaptive Market Hypothesis

The Adaptive Market Hypothesis (AMH) has been used to explain inefficiencies in the market such as cycles, herding, bubbles, crashes, trends, and other phenomena in financial markets. Andrew Lo's first paper on the AMH examined the serial correlation of the S&P 500 using a 60-month rolling window. The figure below recreates his graph, extended to more recent dates and using a 60-day rolling window. It shows that the market cycles: it is more inefficient when the autocorrelations are far from 0 and more efficient when they are close to 0.

Figure 10 - Serial Correlations and Adjusted Close Price of S&P500 Over Time

A paper by Amini in 2010 described how positive autocorrelation means that the next day's price tends to change in the same direction as the previous day's price, so that random disturbances tend to spill over from one period to the next. Negative autocorrelation means that today's price tends to move in the opposite direction of yesterday's price, so that successive disturbances tend to alternate in sign over time, a contrarian effect. If there is no autocorrelation, successive price changes are unrelated, which suggests an efficient market.

Another method that researchers have used is the variance ratio (Vratio) test. This test was applied to all 90 stocks over a rolling window; Figure 11 shows a representative example.
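A minimal sketch of the Lo and MacKinlay (1988) variance ratio test, and of rolling p-values of the kind plotted in Figure 11, is shown below. It uses the homoskedastic form of the statistic with a period of q = 2 on log prices. MATLAB's vratiotest also offers a heteroskedasticity-robust form, so its p-values can differ. The outputs (h, p-value, statistic, critical value, ratio) are chosen to mirror the MATLAB outputs named in Section 5.1, but this is not MATLAB's implementation, and the function names are hypothetical.

```python
from statistics import NormalDist

import numpy as np


def vratio_test(log_prices, q=2, alpha=0.05):
    """Lo-MacKinlay variance ratio test (homoskedastic form).

    Returns (h, p_value, stat, c_value, ratio); h = 1 rejects the random walk.
    """
    x = np.asarray(log_prices, dtype=float)
    n = len(x) - 1                           # number of one-period returns
    r = np.diff(x)
    mu = r.mean()
    var_1 = np.sum((r - mu) ** 2) / (n - 1)  # one-period variance
    r_q = x[q:] - x[:-q]                     # overlapping q-period returns
    m = q * (n - q + 1) * (1 - q / n)
    var_q = np.sum((r_q - q * mu) ** 2) / m  # per-period variance from q-period returns
    ratio = var_q / var_1
    stat = (ratio - 1) / np.sqrt(2 * (2 * q - 1) * (q - 1) / (3 * q * n))
    nd = NormalDist()
    p_value = 2 * (1 - nd.cdf(abs(stat)))
    c_value = nd.inv_cdf(1 - alpha / 2)
    return int(abs(stat) > c_value), p_value, stat, c_value, ratio


def rolling_vratio_pvalues(log_prices, window=60, q=2):
    """p-value of the test on each trailing window of `window` log prices."""
    x = np.asarray(log_prices, dtype=float)
    return np.array([vratio_test(x[i - window:i], q)[1] for i in range(window, len(x) + 1)])
```

A ratio below 1 points to mean reversion and a ratio above 1 to trending. The share of windows with a p-value above 0.05 gives a rough "percent of time efficient" figure.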
The example shown is BAC with a 60-day rolling window. When the p-value drops to 0.05 or below, the returns in that window are not random and may be predictable. However, the vast majority of the time the stock price moves with a degree of randomness, which makes the returns harder to predict.

Figure 11 - BAC Vratio pValues over time

The stocks' efficiency varies over time; a p-value of 1 is interpreted here as the stock being perfectly efficient at that time. The figure above shows that the market is rarely perfectly efficient, which supports the Grossman–Stiglitz paradox. The paradox argues that perfectly informationally efficient markets are impossible: if prices perfectly reflected all available information, there would be no profit in gathering information and therefore little reason to trade, so markets would eventually collapse (Lo, 2007). The stocks appear to be unpredictable around 94 percent of the time; the efficiency percentages of the other stocks are given in Table 11 in the Appendix.

The runs test tests the randomness of a dataset. The figure below shows the runs test results for BAC over time. Most of the time, the p-values are larger than 0.05, which means that the stock's returns are random.

Figure 12 - Runs test for BAC over time

The other stocks' returns also appear to be random most of the time: Table 13 in the Appendix shows that, on average, stock returns move randomly 96 percent of the time. The inefficient periods violate the EMH, suggesting that markets are not weak-form efficient, since they fluctuate between efficiency and inefficiency. It can be argued, however, that the high efficiencies show that the EMH still holds most of the time. Such high efficiencies are seen in yearly, monthly, and sometimes daily frequency data.
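The runs test can be sketched as the Wald–Wolfowitz test on values above and below the median, with a normal approximation for the number of runs. This illustrative version assumes that values equal to the median are discarded. Too few runs indicate clustering (trending returns), and too many indicate alternation (mean reversion).

```python
from statistics import NormalDist

import numpy as np


def runs_test(x, alpha=0.05):
    """Wald-Wolfowitz runs test for randomness about the median.

    Returns (h, p_value, runs, z); h = 1 rejects randomness.
    """
    x = np.asarray(x, dtype=float)
    med = np.median(x)
    above = x[x != med] > med  # drop values equal to the median
    n1, n2 = int(above.sum()), int((~above).sum())
    n = n1 + n2
    runs = 1 + int(np.count_nonzero(above[1:] != above[:-1]))
    mean = 2 * n1 * n2 / n + 1
    var = 2 * n1 * n2 * (2 * n1 * n2 - n) / (n ** 2 * (n - 1))
    z = (runs - mean) / np.sqrt(var)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return int(p_value < alpha), p_value, runs, z
```

Applied to the returns in each rolling window, the share of windows with `h == 0` gives a "percent of time random" figure of the kind summarized in the Appendix.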
However, in some daily and in intraday frequency data, research has shown that the market is less efficient, allowing traders to profit from these inefficiencies. A drawback of the Adaptive Market Hypothesis is that few recognized tests measure it accurately. According to the literature, there are two main ways of measuring the AMH: the correlations between periods and the Vratio test. The tests show that returns from one time frame to the next are autocorrelated, but that over time the returns appear relatively random most of the time. The autocorrelations and the lack of perfect randomness allow investors to create alpha-generating trading strategies (Smith, 2017). A significant difference between the statistical tests covered above and machine learning is that machine learning algorithms can model non-linear relationships. This opens up more possibilities for finding trading strategies that could be more profitable than buy-and-hold (B&H) strategies.

5.3 Machine Learning

Since the market is not efficient at all times, it may be possible to make money using machine learning algorithms. In this thesis, various classification and regression machine learning algorithms were applied to publicly available data; the algorithms used are those listed in Table 3. The algorithms were first evaluated for accuracy, precision, recall, and F-score using the technical analysis data only. The corresponding table in the Appendix shows that the accuracies of the machine learning algorithms are around 50 percent, while the average precision, recall, and F-score were 48, 48, and 41 percent, respectively. Adding the economic indicators to the technical analysis data produced no significant change in accuracy, precision, recall, or F-score, as shown in the corresponding table in the Appendix.
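The four scores can be computed with scikit-learn's metric functions, as sketched below. The sketch also includes one simple way to compare an accuracy with the 50 percent hit rate of a random guess, a binomial z-statistic. That formula is an assumption, since the exact form of the thesis's t-test is not given. Note that `precision_score`, `recall_score`, and `f1_score` score only the "up" class (label 1) here, whereas averages reported per algorithm may be macro-averaged across classes.

```python
import math

from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score


def score_predictions(y_true, y_pred):
    """Accuracy plus precision, recall, and F-score for the "up" class (label 1)."""
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "precision": precision_score(y_true, y_pred),
        "recall": recall_score(y_true, y_pred),
        "f1": f1_score(y_true, y_pred),
    }


def accuracy_z_vs_coin_flip(accuracy, n_predictions, p0=0.5):
    """Binomial z-statistic of a hit rate against p0; |z| > 1.96 is significant at 5%."""
    return (accuracy - p0) / math.sqrt(p0 * (1 - p0) / n_predictions)


if __name__ == "__main__":
    print(score_predictions([1, 0, 1, 1, 0, 1], [1, 0, 0, 1, 1, 1]))
    print(accuracy_z_vs_coin_flip(0.55, 1000))
```

For the toy labels above, three of the four predicted "up" days are correct and three of the four actual "up" days are caught. Precision, recall, and F-score are therefore all 0.75, and accuracy is 4/6.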
The lack of improvement from adding the economic indicators is expected, because economic data is usually collected monthly and its values are already reflected in prices unless an unforeseen market event occurs. The table below shows the results of a two-sided t-test comparing the accuracy of each machine learning algorithm with that of a random walk. A value greater than 1.96 in absolute value would indicate a statistically significant difference from a random walk at the 5 percent level. No value exceeds this threshold, so none of the machine learning algorithms predicts the movements of the market better than a random walk.

Table 5 - T-Test of Machine Learning Algorithms to Random Walk

| Algorithm | t-statistic |
|---|---|
| LR | 0.475167 |
| LR_L2 | 0.474114 |
| LDA | 0.241046 |
| KNN | -0.03247 |
| GB | 0.036973 |
| ABC | -0.00101 |
| RF | 0.038182 |
| XGB | 0.127863 |
| SVM | 0.36744 |
| SVMP | 0.135689 |
| SVMH | 0.14557 |
| MLP | 0.098083 |

This shows that the publicly available data used does not help to predict the market. The accuracy may have been low partly because the algorithms only predict one period ahead rather than several days ahead. It is also possible that alternative data would help increase the accuracy of machine learning algorithms. Some machine learning algorithms in the literature report higher accuracy. This is often due to the lagging of specific indicators that researchers may mistake for leading indicators. Such higher accuracy can become statistically significant and would lead an academic or trader to the next step of backtesting.

5.4 Backtest

Once the most accurate machine learning algorithms have been chosen, they are usually backtested against historical data to see how they would perform in real life. As with machine learning techniques, the backtest uses a training set and a test set. In this thesis, all the machine learning algorithms are compared to see which ones perform better. The backtest runs from December 2017 to June 3rd, 2020. Various types of metrics are used in the backtest.
The metrics used in this paper are cumulative return, Sharpe ratio, maximum drawdown, and Sortino ratio. The figure below shows BAC with the different machine learning algorithms applied to the stock. The cumulative value is very volatile over time, and in this instance KNN is the most profitable.

Figure 13 - Portfolio Value Over Time

The highly volatile returns are not surprising given the previous section, where the accuracies were not statistically better than a random walk.

Table 6 - Backtest Results of Machine Learning Algorithms

| Algorithm | Average of Cumulative Value | Buy and Hold Average Cumulative Value | Average of Algorithm Sharpe | Average of B&H Sharpe | Average of Algo Drawdown | Average of B&H Drawdown | Average of Sortino Ratio |
|---|---|---|---|---|---|---|---|
| ABC | $9,466.69 | $12,610.22 | 1.06 | 0.59 | $3,024.74 | $5,147.05 | -0.15 |
| GB | $10,555.56 | $12,610.22 | 1.12 | 0.59 | $3,252.91 | $5,147.05 | -0.04 |
| KNN | $10,370.09 | $12,610.22 | 1.08 | 0.59 | $3,250.24 | $5,147.05 | -0.09 |
| LDA | $10,288.16 | $12,610.22 | 1.11 | 0.59 | $3,289.85 | $5,147.05 | -0.03 |
| LR | $9,846.25 | $12,610.22 | 1.08 | 0.59 | $3,150.28 | $5,147.05 | -0.1 |
| LR_L2 | $9,637.24 | $12,610.22 | 1.07 | 0.59 | $3,201.79 | $5,147.05 | -0.12 |
| MLP | $9,805.74 | $12,610.22 | 1.04 | 0.59 | $3,275.83 | $5,147.05 | -0.02 |
| RF | $10,335.71 | $12,610.22 | 1.11 | 0.59 | $3,088.38 | $5,147.05 | -0.03 |
| SVM | $10,563.31 | $12,610.22 | 1.09 | 0.59 | $3,489.61 | $5,147.05 | -0.07 |
| SVMH | $10,214.58 | $12,610.22 | 1.11 | 0.59 | $3,113.84 | $5,147.05 | 0.03 |
| SVMP | $9,434.89 | $12,610.22 | 1.07 | 0.59 | $2,996.40 | $5,147.05 | 0 |

The table above shows that the maximum drawdown of every algorithm was lower than that of a B&H strategy and that the Sharpe ratios are above 1. The Sortino ratios, however, were poor. Lower maximum drawdowns than a B&H strategy have also been reported in the literature. However, the backtest does not include transaction costs, even though the algorithms close their positions at the end of each day.
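The backtest metrics can be sketched from a daily portfolio value series as below. The conventions are assumptions rather than the thesis's exact definitions. Returns are simple daily returns, and the ratios are annualized with 252 trading days and a zero risk-free rate by default. The Sortino ratio divides by the root mean square of negative excess returns. The maximum drawdown is reported both in currency units, as in the table above, and as a fraction of the running peak. Transaction costs are not modelled.

```python
import numpy as np


def backtest_metrics(equity, periods_per_year=252, risk_free=0.0):
    """Summary statistics for a daily equity curve (portfolio value over time)."""
    equity = np.asarray(equity, dtype=float)
    rets = np.diff(equity) / equity[:-1]          # simple daily returns
    excess = rets - risk_free / periods_per_year
    ann = np.sqrt(periods_per_year)
    sharpe = ann * excess.mean() / excess.std(ddof=1)
    downside = np.sqrt(np.mean(np.minimum(excess, 0.0) ** 2))  # downside deviation
    sortino = ann * excess.mean() / downside if downside > 0 else np.inf
    running_peak = np.maximum.accumulate(equity)
    drawdown = running_peak - equity              # currency drop from the running peak
    return {
        "cumulative_return": equity[-1] / equity[0] - 1,
        "final_value": equity[-1],
        "sharpe": sharpe,
        "sortino": sortino,
        "max_drawdown_value": drawdown.max(),
        "max_drawdown_pct": (drawdown / running_peak).max(),
    }
```

Because the Sharpe and Sortino ratios share the same numerator, they normally have the same sign under these definitions. Comparing the two therefore mainly shows how much of the volatility comes from down days.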
In a real-world setting, transaction costs would likely erase most, if not all, of the algorithms' profits.

6.0 Conclusion

The EMH does not hold over long periods. If the selected period is long enough for the market to experience behavioral biases such as herding, confirmation bias, and overconfidence, EMH hypothesis tests will conclude that the market is inefficient. Using the AMH, it was seen that stocks go through periods of efficiency and inefficiency that recur cyclically, similar to other observations in the literature review. When machine learning algorithms were applied to the stock market, GB and SVM were the most successful, on average returning profitable portfolios. However, when tested against a random walk with a t-test, none of the algorithms was statistically better than a random walk. On average, the algorithms had better Sharpe ratios and lower drawdowns, suggesting that, though not more profitable, they were better at managing risk. However, because the algorithms were not statistically better than a random walk, they may have managed risk better only during this backtesting period and might not do so in other backtests. Also, because the algorithms only predict one period into the future and close their positions every day, there is a high likelihood that none of them would be profitable in the real world. The Sortino ratios were low on average, suggesting that the downside deviation of returns was high.

7.0 References

Barber, B. M., Odean, T., 2001. Boys will be boys: Gender, overconfidence, and common stock investment. Quarterly Journal of Economics 116 (1), 261–292.

Ballings, M., Van den Poel, D., Hespeels, N., Gryp, R., 2015. Evaluating multiple classifiers for stock price direction prediction. Expert Systems with Applications 42 (20), 7046–7056.

Basak, S., Kar, S., Saha, S., Khaidem, L., Dey, S. R., 2019.
Predicting the direction of stock market prices using tree-based classifiers. The North American Journal of Economics and Finance 47, 552–567.

Booth, A., Gerding, E., Mcgroarty, F., 2014. Automated trading with performance weighted random forests and seasonality. Expert Systems with Applications 41 (8), 3651–3661.

Breiman, L., 1999. Using adaptive bagging to debias regressions. Technical Report 547, Statistics Department, University of California, Berkeley.

Brock, W., Lakonishok, J., LeBaron, B., 1992. Simple technical trading rules and the stochastic properties of stock returns. Journal of Finance 47, 1731–1764.

Burch, S., 2020. Black Monday, 1987. Retrieved October 6th 2020, from http://j469.ascjclass.org/2014/11/12/black-monday-1987/

Chang, P.-C., Liu, C.-H., Lin, J.-L., Fan, C.-Y., Ng, C. S., 2009. A neural network with a case-based dynamic window for stock trading prediction. Expert Systems with Applications 36 (3), 6889–6898.

Chavarnakul, T., Enke, D., 2008. Intelligent technical analysis based equivolume charting for stock trading using neural networks. Expert Systems with Applications 34 (2), 1004–1017.

Chenoweth, T., Obradović, Z., Lee, S. S., 2017. Embedding technical analysis into neural network-based trading systems. Artificial Intelligence Applications on Wall Street, 523–541.

Chen, A.-S., Leung, M. T., 2004. Regression neural network for error correction in foreign exchange forecasting and trading. Computers & Operations Research 31 (7), 1049–1068.

Chen, A.-S., Leung, M. T., Daouk, H., 2003. Application of neural networks to an emerging financial market: forecasting and trading the Taiwan stock index. Computers & Operations Research 30 (6), 901–923.

Chen, T.-L., Chen, F.-Y., 2016. An intelligent pattern recognition model for supporting investment decisions in the stock market. Information Sciences 346, 261–274.

Chen, Y., Hao, Y., 2018. Integrating principle component analysis and weighted support vector machine for stock trading signals prediction. Neurocomputing 321, 381–402.
Chen, Y., Yang, B., Abraham, A., 2007. Flexible neural trees ensemble for stock index modeling. Neurocomputing 70 (4-6), 697–703.

Cooper, J. C. B., 1982. World stock markets: Some random walk tests. Applied Economics 14, 515–531.

Cox, D. R., 1958. The regression analysis of binary sequences. Journal of the Royal Statistical Society: Series B (Methodological) 20 (2), 215–232.

Fama, E. F., 1965. The behaviour of stock market prices. Journal of Business 38, 34–105.

Fama, E. F., French, K. R., 1995. Size and book-to-market factors in earnings and returns. The Journal of Finance 50 (1), 131–155.

Fernández-Blanco, P., Bodas-Sagi, D. J., Soltero, F. J., Hidalgo, J. I., 2008. Technical market indicators optimization using evolutionary algorithms. Proceedings of the 10th Annual Conference Companion on Genetic and Evolutionary Computation, 1851–1858.

Fernández-Rodríguez, F., González-Martel, C., Sosvilla-Rivero, S., 2000. On the profitability of technical trading rules based on artificial neural networks: Evidence from the Madrid stock market. Economics Letters 69 (1), 89–94.

Feuerriegel, S., Prendinger, H., 2016. News-based trading strategies. Decision Support Systems 90, 65–74.

Imandoust, S. B., Bolandraftar, M., 2014. Forecasting the direction of stock market index movement using three data mining techniques: the case of Tehran stock exchange. International Journal of Engineering Research and Applications 4 (6), 106–117.

Kim, J. H., Shamsuddin, A., Lim, K.-P., 2011. Stock return predictability and the adaptive markets hypothesis: Evidence from century-long U.S. data. Journal of Empirical Finance 18 (5), 868–879.

Lanne, M., Saikkonen, P., 2004. A skewed GARCH-in-mean model: An application to US stock returns. Unpublished paper, University of Jyväskylä, Finland.

Lo, A. W., 2007. Efficient market hypothesis. In: Durlauf, S. N., Blume, L. E. (Eds.), The New Palgrave: A Dictionary of Economics, 2nd ed. Palgrave Macmillan.
Lo, A. W., MacKinlay, A. C., 1988. Stock market prices do not follow random walks: Evidence from a simple specification test. Review of Financial Studies 1 (1), 41–66.

Luo, L., You, S., Xu, Y., Peng, H., 2017. Improving the integration of piecewise linear representation and weighted support vector machine for stock trading signal prediction. Applied Soft Computing 56, 199–216.

MACD, 2020. Retrieved October 6th 2020, from https://en.wikipedia.org/wiki/MACD#Signal-line_crossover

Marshall, B. R., Cahan, R. M., 2005. Is the 52-week high momentum strategy profitable outside the US? Applied Financial Economics 15 (18), 1259–1267.

Metghalchi, M., Chang, Y.-H., Marcucci, J., 2008. Is the Swedish stock market efficient? Evidence from some simple trading rules. International Review of Financial Analysis 17 (3), 475–490.

Neely, C. J., Weller, P. A., Ulrich, J. M., 2009. The adaptive markets hypothesis: Evidence from the foreign exchange market. Journal of Financial and Quantitative Analysis 44 (2), 467–488.

Ng, A., 2000. CS229 lecture notes. CS229 Lecture Notes 1 (1), 1–3.

Ohlson, J. A., 1980. Financial ratios and the probabilistic prediction of bankruptcy. Journal of Accounting Research, 109–131.

Pai, P.-F., Hsu, M.-F., Wang, M.-C., 2011. A support vector machine-based model for detecting top management fraud. Knowledge-Based Systems 24 (2), 314–321.

Principe, J. C., Iuliano, N. R., Lefebvre, W. C., 2000. Neural and Adaptive Systems: Fundamentals Through Simulations. Vol. 672. Wiley, New York.

Ryll, L., Seidens, S., 2020. Evaluating the performance of machine learning algorithms in financial market forecasting: A comprehensive survey. Retrieved July 6th 2019, from https://arxiv.org/pdf/1906.07786.pdf

Sample autocorrelation - MATLAB autocorr, 2020. Retrieved 7 November 2020, from https://www.mathworks.com/help/econ/autocorr.html

Schulmeister, S., 2009. The profitability of technical stock trading: Has it moved from daily to intraday data?
Review of Financial Economics 18 (4), 190–201. Scikit-learn. (2020). Retrieved October 30th 2020, from https://en.wikipedia.org/wiki/Scikit-learn Seiler, Michael J and Walter Rom (1997): 'A Historical Analysis of Market Efficiency: Do Historical Returns Follow a Random Walk,' Journal of Financial and Strategic Decisions, 10 Self, J. K., & Mathur, I. (2006). Asymmetric Stationarity in National Stock Market Indices: An MTAR Analysis. Journal of Business, 79(6), 3153–3174. Shin, K.-S., Lee, T. S., Kim, H.-j., 2005. An application of support vector machines in bankruptcy prediction model. Expert systems with applications 28 (1), 127–135. Sullivan, R., Timmermann, A., & White, H. Data-Snooping, Technical Trading Rule Performance, and the Bootstrap. Journal of Finance. 54, (1997): 1647-1691. 34 | P a g e Trippi, R. R., DeSieno, D., 1992. Trading equity index futures with a neural network. Journal of Portfolio Management 19, 27–27. Tsai, C.-F., Lin, Y.-C., Yen, D. C., Chen, Y.-M., 2011. Predicting stock returns by classifier ensembles. Applied Soft Computing 11 (2), 2452–2459. Vapnik, V., 1998. Statistical learning theory. John wiley&sons. Inc., New York. Variance ratio test for random walk - MATLAB vratiotest. (2020). Retrieved October 22nd 2020, from https://www.mathworks.com/help/econ/vratiotest.html Weng, B., Martinez, W., Tsai, Y., Li, C., Lu, L., Barth, J. R., & Megahed, F. M. (2018). Macroeconomic indicators alone can predict the monthly closing price of major U.S. indices: Insights from artificial intelligence, time-series analysis, and hybrid models. Applied Soft Computing, 71, 685-697. DOI:10.1016/j.asoc.2018.07.024 Wilder jr, J. (1978). New concepts in technical trading systems. Greensboro/N. C.: Trend Research.GA Xiao, Y., Xiao, J., Lu, F., Wang, S., 2014. Ensemble ANNs-PSO-GA approach for day-ahead stock e-exchange prices forecasting. International Journal of Computational Intelligence Systems 7 (2), 272–290. 
8.0 Appendix

8.1 Market Efficiency Tables

Table 7 - Variance ratio (Vratio) test over 10 years

| Ticker | h | pValue | stat | cValue | ratio | h analysis |
|---|---|---|---|---|---|---|
| AAPL | 1 | 0.037332 | -2.0821 | 1.96 | 0.81541 | Not a random walk |
| ABMD | 0 | 0.17464 | 1.3575 | 1.96 | 1.0523 | Random walk |
| ADP | 1 | 0.012729 | -2.4912 | 1.96 | 0.77985 | Not a random walk |
| ADSK | 1 | 0.036898 | -2.0869 | 1.96 | 0.87603 | Not a random walk |
| AMAT | 1 | 0.013206 | -2.4782 | 1.96 | 0.82069 | Not a random walk |
| AMD | 0 | 0.05086 | -1.9527 | 1.96 | 0.87196 | Random walk |
| AMT | 0 | 0.10447 | -1.6236 | 1.96 | 0.80431 | Random walk |
| ANSS | 1 | 0.02372 | -2.2616 | 1.96 | 0.7844 | Not a random walk |
| ANTM | 1 | 0.035662 | -2.1008 | 1.96 | 0.86871 | Not a random walk |
| APTV | 0 | 0.77561 | -0.28505 | 1.96 | 0.98418 | Random walk |
| ARE | 0 | 0.10072 | -1.6414 | 1.96 | 0.83874 | Random walk |
| ATO | 0 | 0.18151 | -1.3361 | 1.96 | 0.88114 | Random walk |
| AVGO | 1 | 0.02554 | -2.2331 | 1.96 | 0.87784 | Not a random walk |
| AZO | 1 | 0.047147 | -1.985 | 1.96 | 0.88308 | Not a random walk |
| BAC | 0 | 0.16637 | -1.384 | 1.96 | 0.91375 | Random walk |
| BLL | 0 | 0.54783 | -0.60102 | 1.96 | 0.9616 | Random walk |
| BWA | 0 | 0.2401 | 1.1747 | 1.96 | 1.0271 | Random walk |
| C | 0 | 0.31549 | -1.0038 | 1.96 | 0.94561 | Random walk |
| CBRE | 0 | 0.85091 | 0.18796 | 1.96 | 1.01 | Random walk |
| CERN | 0 | 0.20173 | -1.2766 | 1.96 | 0.96872 | Random walk |
| CMCSA | 1 | 0.004867 | -2.8157 | 1.96 | 0.84156 | Not a random walk |
| CMS | 0 | 0.3708 | -0.89497 | 1.96 | 0.90546 | Random walk |
| COO | 1 | 0.0073237 | -2.6818 | 1.96 | 0.84076 | Not a random walk |
| COST | 1 | 0.043134 | -2.0224 | 1.96 | 0.84531 | Not a random walk |
| CSCO | 1 | 0.020089 | -2.3247 | 1.96 | 0.87111 | Not a random walk |
| CTXS | 0 | 0.11424 | -1.5794 | 1.96 | 0.93922 | Random walk |
| DISH | 0 | 0.86393 | 0.17137 | 1.96 | 1.005 | Random walk |
| DRE | 0 | 0.1175 | -1.5654 | 1.96 | 0.81585 | Random walk |
| EBAY | 0 | 0.5184 | -0.64582 | 1.96 | 0.9797 | Random walk |
| ESS | 0 | 0.1033 | -1.629 | 1.96 | 0.89992 | Random walk |
| EXPE | 0 | 0.51345 | 0.65348 | 1.96 | 1.0181 | Random walk |
| F | 0 | 0.22852 | 1.2042 | 1.96 | 1.0295 | Random walk |
| FB | 0 | 0.10388 | -1.6263 | 1.96 | 0.92501 | Random walk |
| FCX | 0 | 0.145 | 1.4574 | 1.96 | 1.0377 | Random walk |
| FRT | 0 | 0.070567 | -1.8083 | 1.96 | 0.91018 | Random walk |
| GE | 0 | 0.37771 | 0.88213 | 1.96 | 1.0257 | Random walk |
| GM | 0 | 0.68702 | 0.4029 | 1.96 | 1.0128 | Random walk |
| GWW | 0 | 0.16242 | -1.397 | 1.96 | 0.93914 | Random walk |
| HII | 0 | 0.80137 | 0.25157 | 1.96 | 1.0111 | Random walk |
| HOG | 0 | 0.57677 | -0.55811 | 1.96 | 0.98032 | Random walk |
| HOLX | 0 | 0.69531 | -0.39167 | 1.96 | 0.98717 | Random walk |
| HPE | 0 | 0.74417 | -0.32633 | 1.96 | 0.98593 | Random walk |
| HPQ | 0 | 0.11695 | -1.5677 | 1.96 | 0.95214 | Random walk |
| IEX | 0 | 0.081724 | -1.7408 | 1.96 | 0.87414 | Random walk |
| INTC | 1 | 0.010162 | -2.5703 | 1.96 | 0.74173 | Not a random walk |
| IPGP | 0 | 0.77367 | -0.28757 | 1.96 | 0.98868 | Random walk |
| IT | 0 | 0.6938 | -0.39371 | 1.96 | 0.98611 | Random walk |
| JKHY | 0 | 0.43071 | -0.78797 | 1.96 | 0.94799 | Random walk |
| JPM | 0 | 0.070608 | -1.808 | 1.96 | 0.83601 | Random walk |
| KO | 0 | 0.41168 | -0.82094 | 1.96 | 0.95347 | Random walk |
| LNC | 0 | 0.85778 | -0.1792 | 1.96 | 0.99213 | Random walk |
| LRCX | 1 | 0.01812 | -2.3632 | 1.96 | 0.82914 | Not a random walk |
| MAA | 0 | 0.18723 | -1.3188 | 1.96 | 0.88585 | Random walk |
| MCHP | 0 | 0.17262 | -1.3638 | 1.96 | 0.92217 | Random walk |
| MKTX | 0 | 0.45701 | -0.74378 | 1.96 | 0.94252 | Random walk |
| MMC | 1 | 0.027366 | -2.2063 | 1.96 | 0.8461 | Not a random walk |
| MS | 0 | 0.085189 | -1.7213 | 1.96 | 0.90007 | Random walk |
| MSFT | 1 | 0.0083615 | -2.6371 | 1.96 | 0.66597 | Not a random walk |
| MSI | 0 | 0.14833 | -1.4455 | 1.96 | 0.87955 | Random walk |
| MTD | 0 | 0.27186 | -1.0988 | 1.96 | 0.93291 | Random walk |
| MU | 0 | 0.26099 | -1.1241 | 1.96 | 0.95324 | Random walk |
| MXIM | 1 | 0.027482 | -2.2046 | 1.96 | 0.86677 | Not a random walk |
| NFLX | 0 | 0.15715 | -1.4147 | 1.96 | 0.93757 | Random walk |
| NLSN | 0 | 0.1971 | -1.2899 | 1.96 | 0.97053 | Random walk |
| NVR | 0 | 0.84636 | 0.19376 | 1.96 | 1.013 | Random walk |
| ORCL | 1 | 0.031558 | -2.15 | 1.96 | 0.84456 | Not a random walk |
| PAYX | 1 | 0.032845 | -2.134 | 1.96 | 0.82462 | Not a random walk |
| PFE | 0 | 0.19554 | -1.2944 | 1.96 | 0.93719 | Random walk |
| RE | 1 | 0.024886 | -2.2432 | 1.96 | 0.85601 | Not a random walk |
| RF | 0 | 0.52636 | -0.63357 | 1.96 | 0.97154 | Random walk |
| ROP | 1 | 0.0077731 | -2.6618 | 1.96 | 0.77467 | Not a random walk |
| SIVB | 0 | 0.48079 | -0.70503 | 1.96 | 0.96989 | Random walk |
| SNA | 0 | 0.31557 | -1.0036 | 1.96 | 0.96083 | Random walk |
| STE | 0 | 0.062547 | -1.8624 | 1.96 | 0.87397 | Random walk |
| T | 0 | 0.18405 | -1.3284 | 1.96 | 0.92132 | Random walk |
| TDG | 0 | 0.92587 | 0.093048 | 1.96 | 1.0087 | Random walk |
| TFX | 0 | 0.14803 | -1.4465 | 1.96 | 0.89451 | Random walk |
| TRV | 1 | 0.026462 | -2.2194 | 1.96 | 0.81587 | Not a random walk |
| TT | 0 | 0.83529 | 0.20793 | 1.96 | 1.0049 | Random walk |
| TWTR | 0 | 0.76075 | -0.30449 | 1.96 | 0.98568 | Random walk |
| TXT | 0 | 0.79968 | -0.25376 | 1.96 | 0.99081 | Random walk |
| VZ | 1 | 0.049842 | -1.9613 | 1.96 | 0.9143 | Not a random walk |
| WAT | 1 | 0.0090252 | -2.6111 | 1.96 | 0.88669 | Not a random walk |
| WFC | 0 | 0.18661 | -1.3207 | 1.96 | 0.93905 | Random walk |
| WLTW | 1 | 0.0038686 | -2.8887 | 1.96 | 0.85808 | Not a random walk |
| WM | 1 | 0.02177 | -2.2944 | 1.96 | 0.79273 | Not a random walk |
| WST | 0 | 0.31158 | -1.0119 | 1.96 | 0.93456 | Random walk |
| WYNN | 0 | 0.56085 | 0.58158 | 1.96 | 1.0174 | Random walk |
| XOM | 0 | 0.33176 | -0.97058 | 1.96 | 0.97223 | Random walk |
| ZBRA | 1 | 0.030756 | -2.1602 | 1.96 | 0.87261 | Not a random walk |

Table 8 - Ljung-Box Q (LBQ) test

For every ticker the h analysis is "Significant ARCH effects" and the p analysis is "serial correlation".

| Ticker | h | pValue | stat | cValue |
|---|---|---|---|---|
| AAPL | 1 | 0 | 49705 | 31.41 |
| ABMD | 1 | 0 | 51491 | 31.41 |
| ADP | 1 | 0 | 51379 | 31.41 |
| ADSK | 1 | 0 | 50102 | 31.41 |
| AMAT | 1 | 0 | 50757 | 31.41 |
| AMD | 1 | 0 | 48718 | 31.41 |
| AMT | 1 | 0 | 50363 | 31.41 |
| ANSS | 1 | 0 | 49927 | 31.41 |
| ANTM | 1 | 0 | 51024 | 31.41 |
| APTV | 1 | 0 | 40539 | 31.41 |
| ARE | 1 | 0 | 50888 | 31.41 |
| ATO | 1 | 0 | 51515 | 31.41 |
| AVGO | 1 | 0 | 51208 | 31.41 |
| AZO | 1 | 0 | 50456 | 31.41 |
| BAC | 1 | 0 | 51217 | 31.41 |
| BLL | 1 | 0 | 50678 | 31.41 |
| BWA | 1 | 0 | 48564 | 31.41 |
| C | 1 | 0 | 49545 | 31.41 |
| CBRE | 1 | 0 | 50275 | 31.41 |
| CERN | 1 | 0 | 50340 | 31.41 |
| CMCSA | 1 | 0 | 51263 | 31.41 |
| CMS | 1 | 0 | 51284 | 31.41 |
| COO | 1 | 0 | 51000 | 31.41 |
| COST | 1 | 0 | 50840 | 31.41 |
| CSCO | 1 | 0 | 51221 | 31.41 |
| CTXS | 1 | 0 | 48873 | 31.41 |
| DISH | 1 | 0 | 50726 | 31.41 |
| DRE | 1 | 0 | 50957 | 31.41 |
| EBAY | 1 | 0 | 49999 | 31.41 |
| ESS | 1 | 0 | 50932 | 31.41 |
| EXPE | 1 | 0 | 50691 | 31.41 |
| F | 1 | 0 | 45909 | 31.41 |
| FB | 1 | 0 | 38823 | 31.41 |
| FCX | 1 | 0 | 50368 | 31.41 |
| FRT | 1 | 0 | 50393 | 31.41 |
| GE | 1 | 0 | 50688 | 31.41 |
| GM | 1 | 0 | 44605 | 31.41 |
| GWW | 1 | 0 | 49635 | 31.41 |
| HII | 1 | 0 | 45406 | 31.41 |
| HOG | 1 | 0 | 48578 | 31.41 |
| HOLX | 1 | 0 | 49990 | 31.41 |
| HPE | 1 | 0 | 20195 | 31.41 |
| HPQ | 1 | 0 | 50644 | 31.41 |
| IEX | 1 | 0 | 51207 | 31.41 |
| INTC | 1 | 0 | 50083 | 31.41 |
| IPGP | 1 | 0 | 50496 | 31.41 |
| IT | 1 | 0 | 50998 | 31.41 |
| JKHY | 1 | 0 | 50608 | 31.41 |
| JPM | 1 | 0 | 51435 | 31.41 |
| KO | 1 | 0 | 50615 | 31.41 |
| LNC | 1 | 0 | 50537 | 31.41 |
| LRCX | 1 | 0 | 50307 | 31.41 |
| MAA | 1 | 0 | 50623 | 31.41 |
| MCHP | 1 | 0 | 50461 | 31.41 |
| MKTX | 1 | 0 | 49428 | 31.41 |
| MMC | 1 | 0 | 50957 | 31.41 |
| MS | 1 | 0 | 50726 | 31.41 |
| MSFT | 1 | 0 | 50202 | 31.41 |
| MSI | 1 | 0 | 51262 | 31.41 |
| MTD | 1 | 0 | 51047 | 31.41 |
| MU | 1 | 0 | 50575 | 31.41 |
| MXIM | 1 | 0 | 50781 | 31.41 |
| NFLX | 1 | 0 | 50562 | 31.41 |
| NLSN | 1 | 0 | 44575 | 31.41 |
| NVR | 1 | 0 | 51172 | 31.41 |
| ORCL | 1 | 0 | 50110 | 31.41 |
| PAYX | 1 | 0 | 51382 | 31.41 |
| PFE | 1 | 0 | 51116 | 31.41 |
| RE | 1 | 0 | 51419 | 31.41 |
| RF | 1 | 0 | 50896 | 31.41 |
| ROP | 1 | 0 | 50829 | 31.41 |
| SIVB | 1 | 0 | 50869 | 31.41 |
| SNA | 1 | 0 | 51278 | 31.41 |
| STE | 1 | 0 | 50550 | 31.41 |
| T | 1 | 0 | 50485 | 31.41 |
| TDG | 1 | 0 | 50799 | 31.41 |
| TFX | 1 | 0 | 50920 | 31.41 |
| TRV | 1 | 0 | 51412 | 31.41 |
| TT | 1 | 0 | 37373 | 31.41 |
| TWTR | 1 | 0 | 29618 | 31.41 |
| TXT | 1 | 0 | 50399 | 31.41 |
| VZ | 1 | 0 | 50719 | 31.41 |
| WAT | 1 | 0 | 51070 | 31.41 |
| WFC | 1 | 0 | 50543 | 31.41 |
| WLTW | 1 | 0 | 50045 | 31.41 |
| WM | 1 | 0 | 51494 | 31.41 |
| WST | 1 | 0 | 49326 | 31.41 |
| WYNN | 1 | 0 | 48748 | 31.41 |
| XOM | 1 | 0 | 47277 | 31.41 |
| ZBRA | 1 | 0 | 50038 | 31.41 |

Table 9 - Kolmogorov-Smirnov (KS) test

The test returned identical values for all 90 tickers: AAPL, ABMD, ADP, ADSK, AMAT, AMD, AMT, ANSS, ANTM, APTV, ARE, ATO, AVGO, AZO, BAC, BLL, BWA, C, CBRE, CERN, CMCSA, CMS, COO, COST, CSCO, CTXS, DISH, DRE, EBAY, ESS, EXPE, F, FB, FCX, FRT, GE, GM, GWW, HII, HOG, HOLX, HPE, HPQ, IEX, INTC, IPGP, IT, JKHY, JPM, KO, LNC, LRCX, MAA, MCHP, MKTX, MMC, MS, MSFT, MSI, MTD, MU, MXIM, NFLX, NLSN, NVR, ORCL, PAYX, PFE, RE, RF, ROP, SIVB, SNA, STE, T, TDG, TFX, TRV, TT, TWTR, TXT, VZ, WAT, WFC, WLTW, WM, WST, WYNN, XOM, ZBRA.

| Ticker | h | p | k | c | h analysis |
|---|---|---|---|---|---|
| Each of the 90 tickers | 1 | 2.20E-36 | 0.12546 | 0.026463 | Not a normal distribution |

Table 10 - TS-ADF (augmented Dickey-Fuller) test

| Ticker | h | pvalue | stat | cValue | h analysis |
|---|---|---|---|---|---|
| AAPL | 0 | 0.93569 | -1.0449 | -3.4141 | not a random process |
| ABMD | 0 | 0.63069 | -1.923 | -3.4141 | not a random process |
| ADP | 0 | 0.062208 | -3.3286 | -3.4141 | not a random process |
| ADSK | 0 | 0.69756 | -1.7879 | -3.4141 | not a random process |
| AMAT | 0 | 0.164 | -2.8972 | -3.4141 | not a random process |
| AMD | 0 | 0.98563 | 0.44793 | -3.4141 | not a random process |
| AMT | 0 | 0.84093 | -1.4637 | -3.4141 | not a random process |
| ANSS | 0 | 0.92101 | -1.1362 | -3.4141 | not a random process |
| ANTM | 0 | 0.08315 | -3.2084 | -3.4141 | not a random process |
| APTV | 0 | 0.11753 | -3.0577 | -3.4142 | not a random process |
| ARE | 0 | 0.08866 | -3.181 | -3.4141 | not a random process |
| ATO | 1 | 0.005933 | -4.1367 | -3.4141 | random process |
| AVGO | 0 | 0.05863 | -3.3524 | -3.4141 | not a random process |
| AZO | 0 | 0.21407 | -2.7646 | -3.4141 | not a random process |
| BAC | 0 | 0.26186 | -2.668 | -3.4141 | not a random process |
| BLL | 0 | 0.48834 | -2.2105 | -3.4141 | not a random process |
| BWA | 0 | 0.49519 | -2.1967 | -3.4141 | not a random process |
| C | 0 | 0.19213 | -2.8151 | -3.4141 | not a random process |
| CBRE | 0 | 0.058049 | -3.3563 | -3.4141 | not a random process |
| CERN | 0 | 0.21422 | -2.7643 | -3.4141 | not a random process |
| CMCSA | 1 | 0.002695 | -4.4268 | -3.4141 | random process |
| CMS | 1 | 0.004247 | -4.2577 | -3.4141 | random process |
| COO | 1 | 0.009272 | -3.9977 | -3.4141 | random process |
| COST | 0 | 0.5317 | -2.1229 | -3.4141 | not a random process |
| CSCO | 0 | 0.1609 | -2.9068 | -3.4141 | not a random process |
| CTXS | 0 | 0.71625 | -1.7501 | -3.4141 | not a random process |
| DISH | 0 | 0.90325 | -1.2283 | -3.4141 | not a random process |
| DRE | 1 | 0.002712 | -4.4251 | -3.4141 | random process |
| EBAY | 1 | 0.019057 | -3.7615 | -3.4141 | random process |
| ESS | 1 | 0.009219 | -3.9999 | -3.4141 | random process |
| EXPE | 0 | 0.56326 | -2.0592 | -3.4141 | not a random process |
| F | 0 | 0.42423 | -2.34 | -3.4141 | not a random process |
| FB | 1 | 0.007337 | -4.079 | -3.4143 | random process |
| FCX | 0 | 0.24965 | -2.6927 | -3.4141 | not a random process |
| FRT | 0 | 0.88243 | -1.3171 | -3.4141 | not a random process |
| GE | 0 | 0.97355 | -0.677 | -3.4141 | not a random process |
| GM | 0 | 0.14182 | -2.9691 | -3.4142 | not a random process |
| GWW | 0 | 0.14436 | -2.9602 | -3.4141 | not a random process |
| HII | 0 | 0.3728 | -2.4439 | -3.4142 | not a random process |
| HOG | 0 | 0.69457 | -1.7939 | -3.4141 | not a random process |
| HOLX | 1 | 0.015639 | -3.8267 | -3.4141 | random process |
| HPE | 0 | 0.86627 | -1.3784 | -3.4145 | not a random process |
| HPQ | 0 | 0.29151 | -2.6081 | -3.4141 | not a random process |
| IEX | 0 | 0.14261 | -2.9663 | -3.4141 | not a random process |
| INTC | 1 | 0.03383 | -3.5606 | -3.4141 | random process |
| IPGP | 0 | 0.4098 | -2.3692 | -3.4141 | not a random process |
| IT | 1 | 0.033532 | -3.5639 | -3.4141 | random process |
| JKHY | 0 | 0.41402 | -2.3607 | -3.4141 | not a random process |
| JPM | 0 | 0.11596 | -3.064 | -3.4141 | not a random process |
| KO | 1 | 0.002462 | -4.4523 | -3.4141 | random process |
| LNC | 0 | 0.80275 | -1.5734 | -3.4141 | not a random process |
| LRCX | 0 | 0.32579 | -2.5389 | -3.4141 | not a random process |
| MAA | 1 | 0.018999 | -3.7626 | -3.4141 | random process |
| MCHP | 1 | 0.03882 | -3.5102 | -3.4141 | random process |
| MKTX | 0 | 0.999 | 0.57896 | -3.4141 | not a random process |
| MMC | 1 | 0.017228 | -3.7964 | -3.4141 | random process |
| MS | 0 | 0.12162 | -3.0412 | -3.4141 | not a random process |
| MSFT | 0 | 0.96591 | 0.77826 | -3.4141 | not a random process |
| MSI | 0 | 0.37309 | -2.4433 | -3.4141 | not a random process |
| MTD | 0 | 0.16781 | -2.8854 | -3.4141 | not a random process |
| MU | 0 | 0.2371 | -2.718 | -3.4141 | not a random process |
| MXIM | 1 | 0.005888 | -4.1386 | -3.4141 | random process |
| NFLX | 0 | 0.65907 | -1.8656 | -3.4141 | not a random process |
| NLSN | 0 | 0.90997 | -1.1953 | -3.4142 | not a random process |
| NVR | 0 | 0.11453 | -3.0698 | -3.4141 | not a random process |
| ORCL | 1 | 0.001 | -5.2271 | -3.4141 | random process |
| PAYX | 1 | 0.016336 | -3.8134 | -3.4141 | random process |
| PFE | 1 | 0.015908 | -3.8215 | -3.4141 | random process |
| RE | 0 | 0.068352 | -3.2901 | -3.4141 | not a random process |
| RF | 0 | 0.48044 | -2.2265 | -3.4141 | not a random process |
| ROP | 0 | 0.23071 | -2.731 | -3.4141 | not a random process |
| SIVB | 0 | 0.29422 | -2.6027 | -3.4141 | not a random process |
| SNA | 0 | 0.59368 | -1.9977 | -3.4141 | not a random process |
| STE | 0 | 0.70687 | -1.7691 | -3.4141 | not a random process |
| T | 0 | 0.093939 | -3.1559 | -3.4141 | not a random process |
| TDG | 0 | 0.26121 | -2.6694 | -3.4141 | not a random process |
| TFX | 0 | 0.24716 | -2.6977 | -3.4141 | not a random process |
| TRV | 0 | 0.14997 | -2.9407 | -3.4141 | not a random process |
| TT | 0 | 0.65668 | -1.8704 | -3.4142 | not a random process |
| TWTR | 0 | 0.47381 | -2.2398 | -3.4143 | not a random process |
| TXT | 0 | 0.84875 | -1.4397 | -3.4141 | not a random process |
| VZ | 1 | 0.007584 | -4.068 | -3.4141 | random process |
| WAT | 1 | 0.016038 | -3.8191 | -3.4141 | random process |
| WFC | 0 | 0.9792 | -0.5853 | -3.4141 | not a random process |
| WLTW | 1 | 0.029384 | -3.6111 | -3.4141 | random process |
| WM | 0 | 0.33016 | -2.5301 | -3.4141 | not a random process |
| WST | 0 | 0.99892 | 0.38369 | -3.4141 | not a random process |
| WYNN | 0 | 0.54738 | -2.0913 | -3.4141 | not a random process |
| XOM | 0 | 0.78858 | -1.604 | -3.4141 | not a random process |
| ZBRA | 0 | 0.6908 | -1.8016 | -3.4141 | not a random process |

Table 11 - Variance ratio test on a 60-day rolling window

| Ticker | Efficiency of the stock |
|---|---|
| BAC | 96% |
| AAPL | 96% |
| GE | 94% |
| F | 95% |
| MSFT | 95% |
| AMD | 94% |
| INTC | 91% |
| CSCO | 95% |
| FB | 95% |
| PFE | 95% |
| MU | 97% |
| C | 93% |
| T | 95% |
| HPQ | 94% |
| CMCSA | 96% |
| WFC | 93% |
| FCX | 95% |
| JPM | 98% |
| TWTR | 98% |
| NFLX | 94% |
| EBAY | 93% |
| ORCL | 92% |
| RF | 93% |
| VZ | 94% |
| XOM | 96% |
| KO | 92% |
| MS | 93% |
| HPE | 97% |
| AMAT | 97% |
| GM | 95% |
| CBRE | 94% |
| LNC | 95% |
| AVGO | 98% |
| HOLX | 92% |
| MXIM | 95% |
| ADSK | 96% |
| DRE | 98% |
| DISH | 96% |
| TXT | 93% |
| CTXS | 94% |
| NLSN | 93% |
| WYNN | 97% |
| CMS | 91% |
| EXPE | 93% |
| BLL | 93% |
| COST | 96% |
| LRCX | 93% |
| MCHP | 91% |
| AMT | 95% |
| ADP | 95% |
| WM | 96% |
| PAYX | 96% |
| ANTM | 94% |
| APTV | 94% |
| TRV | 91% |
| MMC | 94% |
| HOG | 96% |
| BWA | 95% |
| CERN | 91% |
| MSI | 94% |
| WAT | 95% |
| GWW | 94% |
| ARE | 94% |
| ATO | 97% |
| MAA | 91% |
| IT | 94% |
| WLTW | 96% |
| ROP | 95% |
| ABMD | 93% |
| SIVB | 94% |
| STE | 91% |
| ANSS | 95% |
| FRT | 93% |
| TDG | 95% |
| COO | 95% |
| SNA | 94% |
| IPGP | 93% |
| JKHY | 90% |
| IEX | 68% |
| ZBRA | 96% |
| TT | 93% |
| ESS | 93% |
| AZO | 94% |
| HII | 94% |
| RE | 96% |
| WST | 90% |
| TFX | 95% |
| MKTX | 94% |
| MTD | 96% |
| NVR | 95% |
| Average of all the stocks | 94% |

Table 12 - Ljung-Box Q-test for all stocks

| Ticker | Percent of time not autocorrelated |
|---|---|
| BAC | 93% |
| AAPL | 97% |
| GE | 90% |
| F | 91% |
| MSFT | 88% |
| AMD | 92% |
| INTC | 85% |
| CSCO | 96% |
| FB | 89% |
| PFE | 91% |
| MU | 89% |
| C | 91% |
| T | 91% |
| HPQ | 91% |
| CMCSA | 89% |
| WFC | 88% |
| FCX | 90% |
| JPM | 89% |
| TWTR | 96% |
| NFLX | 93% |
| EBAY | 89% |
| ORCL | 87% |
| RF | 92% |
| VZ | 90% |
| XOM | 95% |
| KO | 94% |
| MS | 90% |
| HPE | 89% |
| AMAT | 96% |
| GM | 93% |
| CBRE | 92% |
| LNC | 94% |
| AVGO | 92% |
| HOLX | 90% |
| MXIM | 89% |
| ADSK | 89% |
| DRE | 93% |
| DISH | 93% |
| TXT | 94% |
| CTXS | 91% |
| NLSN | 90% |
| WYNN | 97% |
| CMS | 95% |
| EXPE | 88% |
| BLL | 92% |
| COST | 94% |
| LRCX | 93% |
| MCHP | 89% |
| AMT | 92% |
| ADP | 92% |
| WM | 95% |
| PAYX | 88% |
| ANTM | 91% |
| APTV | 92% |
| TRV | 90% |
| MMC | 91% |
| HOG | 92% |
| BWA | 93% |
| CERN | 92% |
| MSI | 91% |
| WAT | 88% |
| GWW | 91% |
| ARE | 91% |
| ATO | 96% |
| MAA | 89% |
| IT | 91% |
| WLTW | 93% |
| ROP | 90% |
| ABMD | 90% |
| SIVB | 89% |
| STE | 88% |
| ANSS | 92% |
| FRT | 93% |
| TDG | 90% |
| COO | 90% |
| SNA | 93% |
| IPGP | 97% |
| JKHY | 89% |
| IEX | 41% |
| ZBRA | 88% |
| TT | 91% |
| ESS | 91% |
| AZO | 93% |
| HII | 90% |
| RE | 93% |
| WST | 91% |
| TFX | 90% |
| MKTX | 91% |
| MTD | 95% |
| NVR | 91% |
| Average of all the stocks | 91% |

Table 13 - Runs test for all stocks

| Ticker | Percent of time data is random |
|---|---|
| BAC | 97% |
| AAPL | 97% |
| GE | 94% |
| F | 97% |
| MSFT | 95% |
| AMD | 95% |
| INTC | 97% |
| CSCO | 93% |
| FB | 94% |
| PFE | 96% |
| MU | 96% |
| C | 94% |
| T | 94% |
| HPQ | 94% |
| CMCSA | 96% |
| WFC | 94% |
| FCX | 99% |
| JPM | 97% |
| TWTR | 99% |
| NFLX | 97% |
| EBAY | 97% |
| ORCL | 95% |
| RF | 98% |
| VZ | 99% |
| XOM | 97% |
| KO | 93% |
| MS | 94% |
| HPE | 94% |
| AMAT | 98% |
| GM | 96% |
| CBRE | 97% |
| LNC | 98% |
| AVGO | 99% |
| HOLX | 96% |
| MXIM | 95% |
| ADSK | 95% |
| DRE | 99% |
| DISH | 99% |
| TXT | 95% |
| CTXS | 94% |
| NLSN | 96% |
| WYNN | 98% |
| CMS | 96% |
| EXPE | 96% |
| BLL | 97% |
| COST | 97% |
| LRCX | 96% |
| MCHP | 96% |
| AMT | 96% |
| ADP | 97% |
| WM | 93% |
| PAYX | 94% |
| ANTM | 97% |
| APTV | 99% |
| TRV | 92% |
| MMC | 92% |
| HOG | 94% |
| BWA | 92% |
| CERN | 97% |
| MSI | 94% |
| WAT | 98% |
| GWW | 94% |
| ARE | 97% |
| ATO | 99% |
| MAA | 98% |
| IT | 92% |
| WLTW | 96% |
| ROP | 93% |
| ABMD | 96% |
| SIVB | 97% |
| STE | 95% |
| ANSS | 96% |
| FRT | 97% |
| TDG | 94% |
| COO | 98% |
| SNA | 95% |
| IPGP | 95% |
| JKHY | 94% |
| IEX | 82% |
| ZBRA | 95% |
| TT | 94% |
| ESS | 92% |
| AZO | 92% |
| HII | 95% |
| RE | 96% |
| WST | 97% |
| TFX | 98% |
| MKTX | 97% |
| MTD | 97% |
| NVR | 93% |
| Average of all the stocks | 96% |

8.2 Machine learning results

Table 14 - Machine learning algorithms using technical analysis

Accuracy

| Model | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| LR | 82 | 51% | 3% | 47% | 50% | 51% | 52% | 73% |
| LR_L2 | 82 | 51% | 3% | 47% | 50% | 51% | 52% | 73% |
| LDA | 82 | 51% | 3% | 48% | 50% | 50% | 51% | 71% |
| KNN | 82 | 50% | 3% | 48% | 49% | 50% | 50% | 71% |
| GB | 82 | 50% | 2% | 46% | 49% | 50% | 51% | 69% |
| ABC | 82 | 50% | 3% | 47% | 49% | 50% | 51% | 72% |
| RF | 82 | 50% | 3% | 47% | 49% | 50% | 51% | 73% |
| XGB | 82 | 50% | 3% | 48% | 49% | 50% | 51% | 71% |
| SVM | 82 | 51% | 3% | 46% | 50% | 51% | 52% | 78% |
| SVMP | 82 | 50% | 3% | 48% | 49% | 50% | 51% | 75% |
| SVMH | 82 | 50% | 2% | 46% | 49% | 50% | 51% | 57% |
| mlp | 82 | 50% | 3% | 47% | 49% | 50% | 51% | 75% |

Precision

| Model | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| LR | 82 | 49% | 9% | 17% | 48% | 52% | 54% | 64% |
| LR_L2 | 82 | 49% | 9% | 17% | 48% | 52% | 54% | 64% |
| LDA | 82 | 53% | 5% | 19% | 52% | 54% | 56% | 62% |
| KNN | 82 | 54% | 6% | 20% | 51% | 54% | 54% | 54% |
| GB | 82 | 55% | 5% | 24% | 52% | 55% | 57% | 66% |
| ABC | 82 | 54% | 6% | 24% | 51% | 54% | 57% | 68% |
| RF | 82 | 55% | 5% | 25% | 53% | 56% | 58% | 65% |
| XGB | 82 | 53% | 4% | 26% | 52% | 54% | 55% | 61% |
| SVM | 82 | 45% | 11% | 9% | 37% | 48% | 53% | 60% |
| SVMP | 82 | 30% | 8% | 10% | 26% | 31% | 35% | 47% |
| SVMH | 82 | 32% | 8% | 11% | 26% | 33% | 37% | 49% |
| mlp | 82 | 39% | 8% | 16% | 35% | 39% | 46% | 55% |

Recall

| Model | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| LR | 82 | 62% | 22% | 1% | 42% | 67% | 80% | 99% |
| LR_L2 | 82 | 62% | 22% | 1% | 43% | 67% | 80% | 99% |
| LDA | 82 | 49% | 10% | 22% | 43% | 49% | 55% | 69% |
| KNN | 82 | 38% | 11% | 14% | 30% | 38% | 46% | 64% |
| GB | 82 | 37% | 8% | 21% | 31% | 37% | 44% | 62% |
| ABC | 82 | 37% | 9% | 18% | 30% | 36% | 43% | 60% |
| RF | 82 | 36% | 8% | 18% | 30% | 35% | 43% | 58% |
| XGB | 82 | 41% | 6% | 30% | 37% | 41% | 46% | 57% |
| SVM | 82 | 62% | 23% | 4% | 45% | 63% | 81% | 100% |
| SVMP | 82 | 53% | 15% | 10% | 41% | 55% | 61% | 90% |
| SVMH | 82 | 52% | 15% | 20% | 40% | 49% | 60% | 90% |
| mlp | 82 | 51% | 13% | 20% | 43% | 51% | 58% | 85% |

F-Score

| Model | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| LR | 82 | 49% | 15% | 2% | 38% | 53% | 59% | 68% |
| LR_L2 | 82 | 49% | 15% | 2% | 39% | 53% | 59% | 68% |
| LDA | 82 | 46% | 7% | 18% | 42% | 45% | 50% | 58% |
| KNN | 82 | 37% | 8% | 19% | 31% | 37% | 44% | 54% |
| GB | 82 | 39% | 6% | 25% | 35% | 39% | 42% | 55% |
| ABC | 82 | 37% | 6% | 24% | 33% | 37% | 42% | 54% |
| RF | 82 | 39% | 6% | 27% | 35% | 38% | 42% | 52% |
| XGB | 82 | 43% | 4% | 30% | 41% | 43% | 47% | 52% |
| SVM | 82 | 47% | 15% | 5% | 38% | 48% | 58% | 71% |
| SVMP | 82 | 38% | 10% | 14% | 31% | 37% | 45% | 56% |
| SVMH | 82 | 36% | 9% | 15% | 28% | 35% | 43% | 53% |
| mlp | 82 | 39% | 9% | 13% | 34% | 41% | 45% | 61% |

Economics and technical analysis

Table 15 - Machine learning algorithms using technical analysis and economic indicators

Accuracy

| Model | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| LR | 82 | 51% | 3% | 47% | 50% | 51% | 52% | 73% |
| LR_L2 | 82 | 51% | 3% | 47% | 50% | 51% | 52% | 73% |
| LDA | 82 | 51% | 3% | 48% | 50% | 50% | 51% | 71% |
| KNN | 82 | 50% | 3% | 48% | 49% | 50% | 50% | 71% |
| GB | 82 | 50% | 3% | 47% | 49% | 50% | 51% | 70% |
| ABC | 82 | 50% | 3% | 47% | 49% | 50% | 51% | 72% |
| RF | 82 | 50% | 3% | 48% | 49% | 50% | 51% | 72% |
| XGB | 82 | 50% | 3% | 48% | 49% | 50% | 51% | 71% |
| SVM | 82 | 51% | 3% | 46% | 50% | 51% | 52% | 78% |
| SVMP | 82 | 50% | 1% | 47% | 49% | 50% | 51% | 53% |
| SVMH | 82 | 50% | 2% | 47% | 49% | 50% | 51% | 67% |
| mlp | 82 | 50% | 2% | 48% | 49% | 50% | 51% | 63% |

Precision

| Model | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| LR | 82 | 49% | 9% | 17% | 48% | 52% | 54% | 62% |
| LR_L2 | 82 | 49% | 9% | 17% | 48% | 52% | 54% | 62% |
| LDA | 82 | 53% | 5% | 19% | 52% | 54% | 56% | 62% |
| KNN | 82 | 54% | 6% | 20% | 51% | 54% | 58% | 68% |
| GB | 82 | 55% | 5% | 24% | 53% | 55% | 57% | 64% |
| ABC | 82 | 54% | 6% | 24% | 51% | 54% | 57% | 68% |
| RF | 82 | 55% | 5% | 24% | 53% | 55% | 57% | 63% |
| XGB | 82 | 53% | 4% | 26% | 52% | 54% | 55% | 61% |
| SVM | 82 | 45% | 11% | 9% | 37% | 48% | 53% | 60% |
| SVMP | 82 | 31% | 9% | 10% | 26% | 31% | 36% | 56% |
| SVMH | 82 | 31% | 8% | 15% | 25% | 31% | 37% | 53% |
| mlp | 82 | 40% | 8% | 18% | 36% | 41% | 47% | 54% |

Recall

| Model | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| LR | 82 | 63% | 22% | 1% | 41% | 68% | 80% | 99% |
| LR_L2 | 82 | 63% | 22% | 1% | 43% | 68% | 80% | 99% |
| LDA | 82 | 49% | 10% | 22% | 43% | 49% | 55% | 69% |
| KNN | 82 | 38% | 11% | 14% | 30% | 38% | 46% | 64% |
| GB | 82 | 37% | 9% | 20% | 32% | 37% | 43% | 61% |
| ABC | 82 | 36% | 9% | 18% | 30% | 36% | 42% | 60% |
| RF | 82 | 36% | 9% | 22% | 30% | 35% | 41% | 58% |
| XGB | 82 | 41% | 6% | 30% | 37% | 41% | 46% | 57% |
| SVM | 82 | 62% | 23% | 4% | 45% | 63% | 81% | 100% |
| SVMP | 82 | 49% | 16% | 20% | 38% | 50% | 62% | 87% |
| SVMH | 82 | 51% | 17% | 12% | 40% | 50% | 60% | 90% |
| mlp | 82 | 53% | 13% | 19% | 44% | 53% | 62% | 84% |

F-Score

| Model | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| LR | 82 | 49% | 15% | 2% | 38% | 54% | 59% | 68% |
| LR_L2 | 82 | 49% | 15% | 2% | 38% | 54% | 59% | 68% |
| LDA | 82 | 46% | 7% | 18% | 42% | 45% | 49% | 58% |
| KNN | 82 | 37% | 8% | 19% | 31% | 37% | 44% | 54% |
| GB | 82 | 39% | 6% | 24% | 35% | 39% | 43% | 54% |
| ABC | 82 | 37% | 6% | 25% | 33% | 37% | 42% | 54% |
| RF | 82 | 39% | 6% | 24% | 34% | 38% | 43% | 53% |
| XGB | 82 | 43% | 4% | 30% | 41% | 43% | 47% | 52% |
| SVM | 82 | 47% | 15% | 5% | 38% | 48% | 58% | 71% |
| SVMP | 82 | 36% | 10% | 12% | 28% | 35% | 42% | 63% |
| SVMH | 82 | 35% | 9% | 14% | 28% | 36% | 41% | 56% |
| mlp | 82 | 40% | 11% | 16% | 32% | 42% | 49% | 59% |
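Tables 14 and 15 summarize each model's per-stock scores across the 82 stocks with the columns count, mean, std, min, 25%, 50%, 75% and max, which is the layout produced by pandas' `DataFrame.describe()`. The sketch below shows one way such a summary could be computed with the Python standard library only; it is an illustration, not the code used in this thesis. It assumes binary direction labels (1 = price up, 0 = price down, with 1 as the positive class), treats an undefined precision, recall or F-score as 0, and uses made-up toy series for three hypothetical stocks (`STOCK_A`, `STOCK_B`, `STOCK_C`). The helper names `confusion_counts`, `classification_metrics` and `describe` are likewise hypothetical.

```python
import statistics


def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN, FN for one stock; 1 = 'price up' is assumed to be the positive class."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1
            else:
                fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F-score for one stock; undefined ratios become 0.0."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = (2 * precision * recall / (precision + recall)
               if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f_score": f_score}


def describe(values):
    """count/mean/std/min/25%/50%/75%/max summary (needs at least two values).

    Uses the sample standard deviation and linearly interpolated quartiles,
    which are the defaults of pandas' describe().
    """
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {"count": len(values), "mean": statistics.mean(values),
            "std": statistics.stdev(values), "min": min(values),
            "25%": q1, "50%": q2, "75%": q3, "max": max(values)}


# Made-up (actual, predicted) direction series for three hypothetical stocks; not thesis data.
toy = {
    "STOCK_A": ([1, 0, 1, 1, 0, 1], [1, 0, 0, 1, 1, 1]),
    "STOCK_B": ([0, 0, 1, 1, 0, 1], [1, 0, 1, 0, 0, 1]),
    "STOCK_C": ([1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 1, 0]),
}
per_stock = [classification_metrics(t, p) for t, p in toy.values()]
summary = {metric: describe([row[metric] for row in per_stock])
           for metric in ("accuracy", "precision", "recall", "f_score")}

for metric, stats in summary.items():
    print(metric, {k: round(v, 3) for k, v in stats.items()})
```

If pandas is available, `pandas.DataFrame(per_stock).describe()` should give the same count/mean/std/quartile layout; the standard-library version is used here only to keep the sketch free of dependencies.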