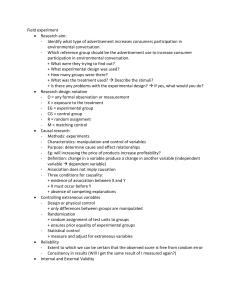

Understanding Research in Second Language Learning (James Dean Brown)
Presentation by Zahra Farajnezhad, Islamic Azad University, Najafabad Branch · March 2022 · DOI: 10.13140/RG.2.2.24711.27048
Source: https://www.researchgate.net/publication/359161855 (uploaded by Zahra Farajnezhad on 11 March 2022)

Chapter 1: What Is Research? Types of Research

Research is defined as a systematic inquiry to describe, explain, predict, and control an observed phenomenon. Research involves inductive and deductive methods. Inductive methods are used to analyze an observed event; deductive methods are used to verify it. Inductive approaches are associated with qualitative research, and deductive methods are more commonly associated with quantitative research.

Characteristics of Research

- A systematic approach must be followed for accurate data. Rules and procedures are an integral part of the process.
- Research is based on logical reasoning and involves both inductive and deductive methods.
- The data or knowledge is derived in real time from actual observations in natural settings.
- All collected data are analyzed in depth so that no anomalies are associated with them.
- Research creates a path for generating new questions. Existing data help create more opportunities for research.
- Research is analytical in nature. It makes use of all the available data so that there is no ambiguity in inference.
- Accuracy is one of the most important aspects of research.
The information that is obtained should be accurate and true to its nature.

Primary Research

A primary source provides direct or firsthand evidence about an event, object, person, or work of art. Primary sources include historical and legal documents, eyewitness accounts, results of experiments, statistical data, pieces of creative writing, audio and video recordings, speeches, and art objects. Primary data are firsthand data gathered by the researchers themselves, typically through surveys, observations, experiments, questionnaires, personal interviews, etc.

Secondary Research

Unlike primary research, secondary research is developed with information from secondary sources, which are generally based on scientific literature and other documents compiled by another researcher. Secondary data are data collected earlier by someone else, typically found in government publications, websites, books, journal articles, internal records, etc.

| | Primary research | Secondary research |
| --- | --- | --- |
| Data was collected | By you (or a company you hire) | By someone else |
| Examples | Surveys, focus groups, interviews, observations, experiments | N/A: the act of looking for existing data is secondary research |
| Qualitative/quantitative | Can be either | Can be either |
| Key benefits | Specific to your needs, and you control the quality | Usually cheap and quick |
| Disadvantages | Usually costs more and takes a lot of time | Data can be too old or not specific enough for your needs |

Primary research is subdivided into:

1. Case studies
2. Statistical studies

Statistical studies fall into two further subcategories: survey studies and experimental studies.

Survey research is a quantitative and qualitative method with two important characteristics. First, the variables of interest are measured using self-reports. Survey researchers ask their participants (who are often called respondents in survey research) to report directly on their own thoughts, feelings, and behaviour.
Second, considerable attention is paid to the issue of sampling. Survey researchers have a strong preference for large random samples because they provide the most accurate estimates of what is true in the population. Surveys can be long or short. They can be conducted in person, by telephone, through the mail, or over the Internet. Survey research is defined as the process of conducting research using surveys that researchers send to survey respondents. The data collected from surveys are then statistically analyzed to draw meaningful research conclusions.

An experimental study is a study in which a treatment, procedure, or program is intentionally introduced and a result or outcome is observed. True experiments have four elements: manipulation, control, random assignment, and random selection. The most important of these elements are manipulation and control. Manipulation means that the researcher purposefully changes something in the environment. Control is used to prevent outside factors from influencing the study outcome. Experiments involve highly controlled and systematic procedures in an effort to minimize error and bias, which also increases our confidence that the manipulation “caused” the outcome.

You can conduct experimental research in the following situations:

- Time is a vital factor in establishing a relationship between cause and effect.
- The behavior between cause and effect is invariable.
- You wish to understand the importance of the cause and effect.

Characteristics of Statistical Studies

[1] Systematic research: it has a clear structure with definite procedural rules that must be followed. These rules can help you read, interpret, and critique statistical studies.

[2] Logical research: the rules and procedures underlying these studies form a straightforward, logical pattern, a step-by-step progression of building blocks, each of which is necessary for the logic to succeed.
If the procedures are violated, one or more building blocks may be missing and the logic will break down.

[3] Tangible research: it is based on the collection and manipulation of data from the real world. The set of data may take the form of test scores, students' ranks on course grades, the number of language learners who have certain characteristics, and so on. The data must be quantifiable: each datum must be a number that represents some well-defined quantity, rank, or category. It is the manipulation or processing of these data that links the study to the real world.

[4] Replicable research: the researcher's proper presentation and explanation of the system, logic, data collection, and data manipulation in a study should make it possible for the reader to replicate the study (do it again under the same conditions).

[5] Reductive research: statistical research can reduce the confusion of facts that language and language teaching frequently present, sometimes on a daily basis. You may discover new patterns in the facts, or, through these investigations and the eventual agreement among many researchers, general patterns and relationships may emerge that clarify the field as a whole. It is these qualities that make statistical research reductive.

The Value of Statistical Research

Surveys and experimental studies can provide important information on individuals and groups that is not available in other types of research. In summary, statistical studies are:

1. Systematically structured, with definite procedural rules
2. Based on a step-by-step logical pattern
3. Based on tangible, quantified information, called data
4. Replicable, in that it should be possible to do them again
5. Reductive, in that they can help form patterns in the seeming confusion of facts that surround us

____________________________________________________________________________

Chapter 2: Variables

Definition: a variable is an element, feature, or factor that is liable to vary or change. A variable is any characteristic, number, or quantity that can be measured or counted.
A variable may also be called a data item. Age, sex, business income and expenses, country of birth, capital expenditure, class grades, eye colour and vehicle type are examples of variables.

Operationalization of Variables

Operationalization means turning abstract concepts into measurable observations. Although some concepts, like height or age, are easily measured, others, like spirituality or anxiety, are not. Through operationalization, you can systematically collect data on processes and phenomena that aren't directly observable. In quantitative research, it's important to precisely define the variables that you want to study. Without specific operational definitions, researchers may measure irrelevant concepts or apply methods inconsistently. Operationalization reduces subjectivity and increases the reliability of your study.

Operationalization example: the concept of social anxiety can't be directly measured, but it can be operationalized in many different ways. For example:

- self-rating scores on a social anxiety scale
- number of recent behavioral incidents of avoidance of crowded places
- intensity of physical anxiety symptoms in social situations

Independent vs. Dependent Variables

In research, variables are any characteristics that can take on different values, such as height, age, temperature, or test scores. Researchers often manipulate or measure independent and dependent variables in studies to test cause-and-effect relationships.

The independent variable is the cause. Its value is independent of other variables in your study. An independent variable is the variable you manipulate or vary in an experimental study to explore its effects. It's called “independent” because it's not influenced by any other variables in the study.

The dependent variable is the effect. Its value depends on changes in the independent variable. A dependent variable is the variable that changes as a result of the independent variable manipulation.
It's the outcome you're interested in measuring, and it “depends” on your independent variable.

Example: independent and dependent variables. You design a study to test whether changes in room temperature have an effect on math test scores. Your independent variable is the temperature of the room. You vary the room temperature by making it cooler for half the participants and warmer for the other half. Your dependent variable is math test scores. You measure the math skills of all participants using a standardized test and check whether they differ based on room temperature.

Mediator vs. Moderator Variables

A mediating variable (or mediator) explains the process through which two variables are related, while a moderating variable (or moderator) affects the strength and direction of that relationship. Including mediators and moderators in your research helps you go beyond studying a simple relationship between two variables for a fuller picture of the real world. These variables are important to consider when studying complex correlational or causal relationships between variables.

Mediating variables

A mediator is a way in which an independent variable impacts a dependent variable. It's part of the causal pathway of an effect, and it tells you how or why an effect takes place. If something is a mediator:

- It's caused by the independent variable.
- It influences the dependent variable.
- When it's taken into account, the statistical correlation between the independent and dependent variables is higher than when it isn't considered.

Mediation analysis is a way of statistically testing whether a variable is a mediator using linear regression analyses or ANOVAs.

Moderating variables

A moderator influences the level, direction, or presence of a relationship between variables. It shows you for whom, when, or under what circumstances a relationship will hold.
Moderators usually help you judge the external validity of your study by identifying the limits of when the relationship between variables holds. For example, while social media use can predict levels of loneliness, this relationship may be stronger for adolescents than for older adults. Age is a moderator here. Moderators can be:

- Categorical variables such as ethnicity, race, religion, favorite colors, health status, or stimulus type
- Quantitative variables such as age, weight, height, income, or visual stimulus size

Extraneous Variables

In an experiment, you manipulate an independent variable to study its effects on a dependent variable. An extraneous variable is any variable that you're not investigating that can potentially affect the outcomes of your research study. If left uncontrolled, extraneous variables can lead to inaccurate conclusions about the relationship between independent and dependent variables. Extraneous variables can threaten the internal validity of your study by providing alternative explanations for your results.

Confounding variables

A confounding variable is a type of extraneous variable that is associated with both the independent and dependent variables. A confounding variable influences the dependent variable, and also correlates with or causally affects the independent variable. To ensure the internal validity of your research, you must account for confounding variables. If you fail to do so, your results may not reflect the actual relationship between the variables that you are interested in. A variable must meet two conditions to be a confounder:

- It must be correlated with the independent variable. This may be a causal relationship, but it does not have to be.
- It must be causally related to the dependent variable.

Control Variables

A control variable is anything that is held constant or limited in a research study.
It's a variable that is not of interest to the study's aims, but is controlled because it could influence the outcomes. Variables may be controlled directly by holding them constant throughout a study (e.g., by controlling the room temperature in an experiment), or they may be controlled indirectly through methods like randomization or statistical control (e.g., to account for participant characteristics like age in statistical tests).

Control variables enhance the internal validity of a study by limiting the influence of confounding and other extraneous variables. This helps you establish a correlational or causal relationship between your variables of interest. Aside from the independent and dependent variables, all variables that can impact the results should be controlled. If you don't control relevant variables, you may not be able to demonstrate that they didn't influence your results. Uncontrolled variables are alternative explanations for your results. In an experiment, a researcher is interested in understanding the effect of an independent variable on a dependent variable. Control variables help you ensure that your results are solely caused by your experimental manipulation.

_______________________________________________________________________

Chapter 3: Scales

What Is a Scale?

A scale is a device or an object used to measure or quantify an event or another object.

Levels of Measurement

Data can be classified as belonging to one of four scales of measurement:

- Nominal scale
- Ordinal scale
- Interval scale
- Ratio scale

Nominal Scale

A nominal scale is the 1st level of measurement scale, in which the numbers serve as “tags” or “labels” to classify or identify the objects. A nominal scale usually deals with non-numeric variables or with numbers that do not have any value.

Characteristics of Nominal Scale

- A nominal scale variable is classified into two or more categories.
In this measurement mechanism, the answer should fall into one of the classes.
- It is qualitative. The numbers are used here to identify the objects.
- The numbers don't define the object characteristics. The only permissible operation on numbers in the nominal scale is “counting.”

Example: a nominal scale measurement.

What is your gender?
M - Male
F - Female

Here, the letters are used as tags, and the answer to this question should be either M or F.

Ordinal Scale

The ordinal scale reports the ordering and ranking of data without establishing the degree of variation between them. Ordinal represents the “order.” Ordinal data are known as qualitative data or categorical data. They can be grouped, named, and also ranked.

Characteristics of the Ordinal Scale

- The ordinal scale shows the relative ranking of the variables.
- It identifies and describes the magnitude of a variable.
- Along with the information provided by the nominal scale, ordinal scales give the rankings of those variables.
- The interval properties are not known.
- Surveyors can quickly analyse the degree of agreement concerning the identified order of variables.

Examples:

- Ranking of school students: 1st, 2nd, 3rd, etc.
- Ratings in restaurants
- Evaluating the frequency of occurrences: very often, often, not often, not at all
- Assessing the degree of agreement: totally agree, agree, neutral, disagree, totally disagree

Interval Scale

The interval scale is the 3rd level of measurement scale. It is defined as a quantitative measurement scale in which the difference between two values is meaningful. In other words, the variables are measured in exact rather than relative terms, and the zero point is arbitrary.
Characteristics of Interval Scale:

- The interval scale is quantitative, as it can quantify the difference between values.
- It allows calculating the mean and median of the variables.
- To find the difference between two values, you can subtract one from the other.
- The interval scale is the preferred scale in statistics, as it helps to assign numerical values to arbitrary assessments such as feelings, calendar types, etc.

Examples:

- Likert scale
- Net Promoter Score (NPS)
- Bipolar matrix table

Ratio Scale

The ratio scale is the 4th level of measurement scale, and it is quantitative. It allows researchers to compare differences or intervals. Its distinguishing feature is that it has a true origin, or zero point.

Characteristics of Ratio Scale:

- The ratio scale has an absolute zero.
- It doesn't have negative numbers, because of its zero-point feature.
- It affords unique opportunities for statistical analysis. The values can be meaningfully added, subtracted, multiplied, and divided. Mean, median, and mode can be calculated using the ratio scale.
- The ratio scale has unique and useful properties. One such feature is that it allows unit conversions such as kilogram to calorie, gram to calorie, etc.

Example: a ratio scale question.

What is your weight in kg?
- Less than 55 kg
- 55 to 75 kg
- 76 to 85 kg
- 86 to 95 kg
- More than 95 kg

_________________________________________________________________________________

Chapter 4: Controlling Extraneous Variables

Validity refers to how accurately a method measures what it is intended to measure. If research has high validity, it produces results that correspond to real properties, characteristics, and variations in the physical or social world. High reliability is one indicator that a measurement is valid.
The validity of a study will be approached from four perspectives:

- Environmental issues
- Grouping issues
- People issues
- Measurement issues

Environmental issues

1_ Naturally occurring variables: some variables can affect the results. Primary among the environmental variables that may be encountered in studies of language teaching are noise, temperature, adequacy of light, time of day, and seating arrangements. All such potential environmental variables should be considered by the researcher as well as by the reader.

2_ Artificiality: another environmental issue that may alter the intentions of a study is the artificiality of the arrangements within the study. This issue is analogous to the question of whether experimental mice will perform the same way in a laboratory, where the conditions are artificial, as they would in the real world.

Grouping issues

1_ Self-selection
2_ Mortality
3_ Maturation

Self-selection

Self-selection generally refers to the practice of letting the subjects decide which group to join. There are certain dangers inherent in this practice. Volunteering is just one way that self-selection may occur. Another, not-so-obvious form of self-selection may happen when two existing classes are compared. In either case, the groups may turn out to be unequal.

Mortality

Mortality in the sample refers to a problem that arises when a longitudinal design is employed and participants who start the research process are unable to complete it. Essentially, the persons begin the research process but then fail to complete the entire set of research procedures and measurements. The mortality, or dropout, rate of participants poses a threat to any empirical evaluation because the reasons for the lack of completion may relate to some underlying process that undermines any claim.
Maturation

The maturation effect occurs when any biological or psychological process within an individual that unfolds with the passage of time has an impact on research findings. When a study focuses on people, maturation is likely to threaten the internal validity of findings. Internal validity is concerned with correctly concluding that an independent variable, and not some extraneous variable, is responsible for a change in the dependent variable. Over time, people change, and these maturation processes can affect findings. Most participants can, over time, improve their performance regardless of treatment. This can apply to many types of studies in the physical or social sciences, psychology, management, education, and many other fields of study.

People issues

1_ Hawthorne effect
2_ Halo effect
3_ Subject expectancy
4_ Researcher expectancy

Hawthorne effect

The Hawthorne effect refers to the increase in performance of individuals who are noticed, watched, and paid attention to by researchers or supervisors. More generally, it is the tendency of some individuals to alter their behavior in response to their awareness of being observed. This phenomenon implies that when people become aware that they are subjects in an experiment, the attention they receive from the experimenters may cause them to change their conduct.

Halo effect

The halo effect is a well-documented social-psychology phenomenon that causes people to be biased in their judgments by transferring their feelings about one attribute of something to other, unrelated attributes. For example, a tall or good-looking person will be perceived as being intelligent and trustworthy, even though there is no logical reason to believe that height or looks correlate with smarts and honesty. The halo effect works in both positive and negative directions: If you like one aspect of something, you'll have a positive predisposition toward everything about it.
If you dislike one aspect of something, you'll have a negative predisposition toward everything about it.

Subject expectancy

In scientific research, the subject-expectancy effect is a form of reactivity that occurs when a research subject expects a given result and therefore unconsciously affects the outcome or reports the expected result. Because this effect can significantly bias the results of experiments (especially on human subjects), double-blind methodology is used to eliminate the effect.

Researcher expectancy

Researcher expectancy refers to how the perceived expectations of an observer can influence the people being observed. This term is usually used in the context of research, to describe how the presence of a researcher can influence the behavior of participants in their study. This may lead researchers to draw inaccurate conclusions. Specifically, since the observer expectancy effect is characterized by participants being influenced by the researcher's expectations, it may lead the research team to conclude that their hypothesis was correct. False positives in research can have serious implications. The observer expectancy effect arises from demand characteristics, which are subtle cues given by the researcher to the participant about the nature of the study, and from confirmation bias, which occurs when the researcher collects and interprets data in a way that confirms their hypothesis and ignores information that contradicts it.

Measurement issues

1_ Practice effect
2_ Reactivity effect
3_ Instability of measures and instruments

Practice effect

Practice effects, defined as improvements in cognitive test performance due to repeated evaluation with the same test materials, have traditionally been viewed as sources of error variance rather than diagnostically useful information. Recently, however, it has been suggested that this psychometric phenomenon might prove useful in predicting cognitive outcome.
More generally, a practice effect is any change or improvement that results from practice or repetition of task items or activities. The practice effect is of particular concern in experimentation involving within-subjects designs, as participants' performance on the variable of interest may improve simply from repeating the activity rather than from any study manipulation imposed by the researcher.

Reactivity effect

Reactivity, also known as the observer effect, takes place when the act of doing the research changes the behavior of participants, thereby making the findings of the research subject to error. Reactivity occurs when the subject of the study (e.g., a survey respondent) is affected either by the instruments of the study or by the individuals conducting the study in a way that changes whatever is being measured. In survey research, the term reactivity applies when the individual's response is influenced by some part of the survey instrument (e.g., an item on a questionnaire); the interviewer; the survey organization or sponsor conducting the study, or both; or the environment where the survey is taking place. For example, the respondent may respond positively or negatively based on the interviewer's reactions to the answer. A smile, nod, frown, or laugh may alter how the subject chooses to respond to subsequent questions.

Instability of measures and instruments

Instability of measures refers to the degree to which the results on the measures are consistent. Instability of the results of a study refers to the degree to which the results would be likely to recur if the study were replicated. It is not possible to control both types of instability; however, statistical procedures provide the researcher with tools to determine the degree to which measures are stable or consistent.

Double-Blind Study

In experimental research, subjects are randomly assigned to either a treatment or a control group. A double-blind study withholds each subject's group assignment from both the participant and the researcher performing the experiment.
If participants know which group they are assigned to, there is a risk that they might change their behavior in a way that would influence the results. If researchers know which group a participant is assigned to, they might act in a way that reveals the assignment or directly influences the results. Double blinding guards against these risks, helping to ensure that any difference between the groups can be attributed to the treatment.

When the researchers administering the experimental treatment are aware of each participant's group assignment, they may inadvertently treat those in the control group differently from those in the treatment group. This could reveal to participants their group assignment, or even directly influence the outcome itself. In double-blind experiments, the group assignment is hidden from both the participant and the person administering the experiment.

Controlling extraneous variables

| Four perspectives | Potential problem | Steps toward control |
| --- | --- | --- |
| A. Environment | 1. Natural variables | Prearrangement of conditions |
| | 2. Artificiality | Approximation of “natural” conditions |
| B. Grouping | 1. Self-selection | Random, matched-pair, or stratified assignment |
| | 2. Mortality | Short duration, track down missing subjects |
| | 3. Maturation | Short duration or built-in moderator or control variables |
| C. People | 1. Hawthorne effect | Double-blind technique |
| | 2. Halo effect | Built-in general attitude as moderator or control variable |
| | 3. Subject expectancy | Minimize obviousness of aims, provide distraction from aims |
| | 4. Researcher expectancy | Double-blind technique |
| D. Measurement | 1. Practice effect | Counterbalancing |
| | 2. Reactivity | Careful study of measures |
| | 3. Instability of measures and results | Statistical estimates of stability and probability |

Counterbalancing

Counterbalancing is a procedure that allows a researcher to control the effects of nuisance variables in designs where the same participants are repeatedly subjected to conditions, treatments, or stimuli (e.g., within-subjects or repeated-measures designs). Counterbalancing refers to the systematic variation of the order of conditions in a study, which enhances the study's internal validity. In the context of experimental designs, the most common nuisance factors (confounds) to be counterbalanced are procedural variables (i.e., temporal or spatial position) that can create order and sequence effects. In quasi-experimental designs, blocking variables (e.g., age, gender) can also be counterbalanced to control their effects on the dependent variable of interest, thus compensating for the lack of random assignment and the potential confounds due to systematic selection bias.

_____________________________________________________________________________________

Chapter 6: Mean, Mode, Median

What Is Frequency in Research?

A frequency is the number of times a data value occurs. For example, if four people have an IQ between 118 and 125, then an IQ of 118 to 125 has a frequency of 4. Frequency is often represented by the letter f.

Frequency distribution

The frequency (f) of a particular value is the number of times the value occurs in the data. The distribution of a variable is the pattern of frequencies, meaning the set of all possible values and the frequencies associated with these values. Frequency distributions are portrayed as frequency tables or charts. Frequency distributions can show either the actual number of observations falling in each range or the percentage of observations. In the latter instance, the distribution is called a relative frequency distribution. Frequency distribution tables can be used for both categorical and numeric variables.
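The definitions of frequency and relative frequency above can be illustrated with a minimal Python sketch. The function name `frequency_table` and the quiz scores are hypothetical, invented only for this illustration; they are not taken from the source.

```python
from collections import Counter


def frequency_table(values):
    """Map each distinct value to (frequency f, relative frequency)."""
    counts = Counter(values)   # f for every distinct value
    n = len(values)            # total number of observations
    return {value: (f, f / n) for value, f in sorted(counts.items())}


# Hypothetical quiz scores, invented purely for illustration.
scores = [3, 5, 5, 2, 3, 5, 4, 3, 5, 2]
table = frequency_table(scores)

for value, (f, relative) in table.items():
    print(f"score {value}: f = {f}, relative frequency = {relative:.0%}")
```

The frequencies always sum to the number of observations, and the relative frequencies sum to 1, which is a quick way to check a hand-built frequency table.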
Continuous variables should only be used with class intervals, which will be explained shortly. A frequency distribution can be visualized using:

- a pie chart (nominal variable),
- a bar chart (nominal or ordinal variable),
- a line chart (ordinal or discrete variable), or
- a histogram (continuous variable).

Measures of central tendency

The best way to summarize a data set with a single value is to find the most representative value, the one that indicates where the centre of the distribution is. This is called the central tendency. The three most commonly used measures of central tendency are:

- the arithmetic mean, which is the sum of all values divided by the number of values;
- the median, which is the middle value when all values are arranged in increasing order;
- the mode, which is the most typical value, the one that appears most often in the data set.

Calculating the mean

The mean can be calculated only for numeric variables, whether they are discrete or continuous. It is obtained by simply dividing the sum of all values in a data set by the number of values. The calculation can be done from raw data or from data aggregated in a frequency table.

Example: Mount Rival hosts a soccer tournament each year. This season, in 10 games, the lead scorer for the home team scored 7, 5, 0, 7, 8, 5, 5, 4, 1 and 5 goals. What is the mean score of this player? The sum of all values is 47 and there are 10 values. Therefore, the mean is 47 ÷ 10 = 4.7 goals per game.

Calculating the median

The median is the value in the middle of a data set, meaning that 50% of data points have a value smaller than or equal to the median and 50% of data points have a value higher than or equal to the median. For a small data set, you first count the number of data points (n) and arrange the data points in increasing order. If the number of data points is odd, you add 1 to the number of points and divide the result by 2 to get the rank of the data point whose value is the median.
The rank is the position of the data point after the data set has been arranged in increasing order: the smallest value is rank 1, the second-smallest value is rank 2, etc. The advantage of using the median instead of the mean is that the median is more robust, which means that an extreme value added to one end of the distribution has less impact on the median than on the mean. Therefore, it is important to check whether the data set includes extreme values before choosing a measure of central tendency.

Example: Imagine that a top running athlete in a typical 200-metre training session runs the following times: 26.1 seconds, 25.6 seconds, 25.7 seconds, 25.2 seconds, 25.0 seconds, 27.8 seconds and 24.1 seconds. How would you calculate his median time? There are n = 7 data points, which is an odd number. Arranged in increasing order, the times are 24.1, 25.0, 25.2, 25.6, 25.7, 26.1 and 27.8 seconds. The median is the value of the data point of rank (n + 1) ÷ 2 = (7 + 1) ÷ 2 = 4. The median time is 25.6 seconds.

Calculating the mode
The mode is the value that appears most often in a data set, and it can be used as a measure of central tendency, like the median and the mean. But sometimes there is no mode, or there is more than one mode. There is no mode when all observed values appear the same number of times in a data set. There is more than one mode when the highest frequency is observed for more than one value in a data set. In both of these cases, the mode can’t be used to locate the centre of the distribution.

The mode can be used to summarize categorical variables, while the mean and median can be calculated only for numeric variables. This is the main advantage of the mode as a measure of central tendency. It’s also useful for discrete variables and for continuous variables when they are expressed as intervals. The mode is not used as much for continuous variables because, with this type of variable, it is likely that no value will appear more than once.
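The three measures described above can be sketched in Python using the standard statistics module and the two data sets from the worked examples. This is an illustrative sketch only: the helper name modes and its convention of returning an empty list when there is no mode are assumptions made here, not something the original text prescribes.

```python
from statistics import mean, median, multimode

# Data from the two worked examples in this chapter.
goals = [7, 5, 0, 7, 8, 5, 5, 4, 1, 5]
times = [26.1, 25.6, 25.7, 25.2, 25.0, 27.8, 24.1]

# Mean: sum of all values divided by the number of values.
mean_goals = mean(goals)

# Median by rank, for an odd number of data points:
# sort, then take the value of rank (n + 1) / 2 (ranks start at 1).
ordered = sorted(times)
rank = (len(ordered) + 1) // 2
median_time = ordered[rank - 1]


def modes(values):
    """Return the most frequent value(s); [] if all values are equally frequent."""
    found = multimode(values)
    distinct = set(values)
    # Every distinct value tied for the top frequency means there is no mode.
    if len(distinct) > 1 and len(found) == len(distinct):
        return []
    return found


print(mean_goals, median_time, modes(goals), modes(times))
```

The library function median gives the same result as the rank rule for these times, and it also handles an even number of data points by averaging the two middle values.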
For example, if you ask 20 people their personal income in the previous year, it’s possible that many will have amounts of income that are very close, but that you will never get exactly the same value for two people. In such a case, it is useful to group the values in mutually exclusive intervals and to visualize the results with a histogram to identify the modal-class interval.

Measures of dispersion
Measures of central tendency aim to identify the most representative value of a data set, that is, the centre of a distribution. To better describe the data, it is also good to have a measure of the spread of the data around the centre of the distribution. This measure is called a measure of dispersion. The most commonly used measures of dispersion are the range, which is the difference between the highest value and the smallest value; the interquartile range, which is the range of the 50% of data that is central to the distribution; the variance, which is the mean squared distance between each point and the centre of the distribution; and the standard deviation, which is the square root of the variance.

Key notes
A frequency distribution in statistics is a representation that displays the number of observations within a given interval. The representation can be graphical or tabular so that it is easier to understand. Frequency distributions are particularly useful for describing normally distributed data, where observations cluster around the mean in proportions determined by the standard deviation. In finance, traders use frequency distributions to take note of price action and identify trends.

Chapter 8 Reliability, Types and Validity

The 4 Types of Reliability
Reliability tells you how consistently a method measures something. When you apply the same method to the same sample under the same conditions, you should get the same results.
If not, the method of measurement may be unreliable. There are four main types of reliability. Each can be estimated by comparing different sets of results produced by the same method.

Test-retest reliability
Test-retest reliability measures the consistency of results when you repeat the same test on the same sample at a different point in time. You use it when you are measuring something that you expect to stay constant in your sample. Many factors can influence your results at different points in time: for example, respondents might experience different moods, or external conditions might affect their ability to respond accurately. Test-retest reliability can be used to assess how well a method resists these factors over time. The smaller the difference between the two sets of results, the higher the test-retest reliability.

How to measure it
To measure test-retest reliability, you conduct the same test on the same group of people at two different points in time. Then you calculate the correlation between the two sets of results.

Interrater reliability
Interrater reliability measures the degree of agreement between different people observing or assessing the same thing. You use it when data is collected by researchers assigning ratings, scores or categories to one or more variables. People are subjective, so different observers’ perceptions of situations and phenomena naturally differ. Reliable research aims to minimize subjectivity as much as possible so that a different researcher could replicate the same results. When designing the scale and criteria for data collection, it’s important to make sure that different people will rate the same variable consistently with minimal bias. This is especially important when there are multiple researchers involved in data collection or analysis.

How to measure it
To measure interrater reliability, different researchers conduct the same measurement or observation on the same sample.
Then you calculate the correlation between their different sets of results. If all the researchers give similar ratings, the test has high interrater reliability.

Internal consistency
Internal consistency assesses the correlation between multiple items in a test that are intended to measure the same construct. You can calculate internal consistency without repeating the test or involving other researchers, so it’s a good way of assessing reliability when you only have one data set. When you devise a set of questions or ratings that will be combined into an overall score, you have to make sure that all of the items really do reflect the same thing. If responses to different items contradict one another, the test might be unreliable.

How to measure it
Two common methods are used to measure internal consistency.
Average inter-item correlation: For a set of measures designed to assess the same construct, you calculate the correlation between the results of all possible pairs of items and then calculate the average.
Split-half reliability: You randomly split a set of measures into two sets. After testing the entire set on the respondents, you calculate the correlation between the two sets of responses.

Which type of reliability applies to my research?
It’s important to consider reliability when planning your research design, collecting and analyzing your data, and writing up your research. The type of reliability you should calculate depends on the type of research and your methodology.

The 4 Types of Validity
Validity tells you how accurately a method measures something. If a method measures what it claims to measure, and the results closely correspond to real-world values, then it can be considered valid. There are four main types of validity:
Construct validity: Does the test measure the concept that it’s intended to measure?
Content validity: Is the test fully representative of what it aims to measure?
Face validity: Does the content of the test appear to be suitable to its aims?
Criterion validity: Do the results accurately measure the concrete outcome they are designed to measure?

Note that this section deals with types of test validity, which determine the accuracy of the actual components of a measure. If you are doing experimental research, you also need to consider internal and external validity, which deal with the experimental design and the generalizability of results.

Construct validity
Construct validity evaluates whether a measurement tool really represents the thing we are interested in measuring. It’s central to establishing the overall validity of a method.

What is a construct?
A construct refers to a concept or characteristic that can’t be directly observed, but can be measured by observing other indicators that are associated with it. Constructs can be characteristics of individuals, such as intelligence, obesity, job satisfaction, or depression; they can also be broader concepts applied to organizations or social groups, such as gender equality, corporate social responsibility, or freedom of speech.

Example: There is no objective, observable entity called “depression” that we can measure directly. But based on existing psychological research and theory, we can measure depression based on a collection of symptoms and indicators, such as low self-confidence and low energy levels.

Construct validity is about ensuring that the method of measurement matches the construct you want to measure. If you develop a questionnaire to diagnose depression, you need to know: does the questionnaire really measure the construct of depression? Or is it actually measuring the respondent’s mood, self-esteem, or some other construct? To achieve construct validity, you have to ensure that your indicators and measurements are carefully developed based on relevant existing knowledge.
The questionnaire must include only relevant questions that measure known indicators of depression.

Content validity
Content validity assesses whether a test is representative of all aspects of the construct. To produce valid results, the content of a test, survey or measurement method must cover all relevant parts of the subject it aims to measure. If some aspects are missing from the measurement (or if irrelevant aspects are included), the validity is threatened.

Example: A mathematics teacher develops an end-of-semester algebra test for her class. The test should cover every form of algebra that was taught in the class. If some types of algebra are left out, then the results may not be an accurate indication of students’ understanding of the subject. Similarly, if she includes questions that are not related to algebra, the results are no longer a valid measure of algebra knowledge.

Face validity
Face validity considers how suitable the content of a test seems to be on the surface. It’s similar to content validity, but face validity is a more informal and subjective assessment.

Example: You create a survey to measure the regularity of people’s dietary habits. You review the survey items, which ask questions about every meal of the day and snacks eaten in between for every day of the week. On its surface, the survey seems like a good representation of what you want to test, so you consider it to have high face validity.

As face validity is a subjective measure, it’s often considered the weakest form of validity. However, it can be useful in the initial stages of developing a method.

Criterion validity
Criterion validity evaluates how well a test can predict a concrete outcome, or how well the results of your test approximate the results of another test. To evaluate criterion validity, you calculate the correlation between the results of your measurement and the results of the criterion measurement.
If there is a high correlation, this gives a good indication that your test is measuring what it intends to measure.

Example: A university professor creates a new test to measure applicants’ English writing ability. To assess how well the test really does measure students’ writing ability, she finds an existing test that is considered a valid measurement of English writing ability, and compares the results when the same group of students takes both tests. If the outcomes are very similar, the new test has high criterion validity.
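The correlation step in the criterion validity example can be sketched as follows. The text does not say which correlation coefficient to use; this sketch assumes the Pearson coefficient. The function name pearson_r and both score lists are hypothetical values invented for illustration, not data from the original material. The same calculation could be applied to the two sets of results in a test-retest comparison.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of products of deviations from the means (numerator of r).
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Square roots of the sums of squared deviations (denominator of r).
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / (spread_x * spread_y)


# Hypothetical scores for the same five students on both tests.
new_test = [62, 70, 75, 81, 90]        # the professor's new writing test
criterion_test = [60, 72, 74, 85, 88]  # the established writing test

r = pearson_r(new_test, criterion_test)
print(f"r = {r:.2f}")
```

A value of r close to 1 would indicate that students rank similarly on both tests, which is the pattern described above as high criterion validity. How high r must be to count as acceptable is a judgment the text leaves open.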