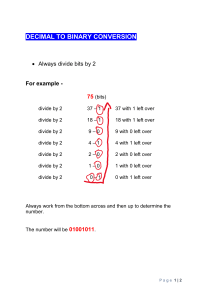

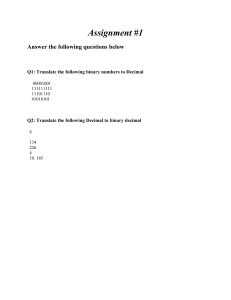

TEST 1 NOTES

The test consists of 23 multiple-choice questions to be written in 45 minutes. The weighting is about 30% on Representing Numbers, 20% on Representing Text, and 20% on Excel; the other topics are weighted about 5% to 13% each. The test starts at the beginning of your lecture time. Bring your York ID (or a government-issued ID if you do not have a York ID yet), HB pencils, an eraser, and a pen. You will write your answers on a Scantron sheet (a.k.a. bubble sheet); see the example of a filled-in Scantron sheet above. You must write the test in your own section (lecture room); otherwise, your test will not be graded. No calculators may be used. The powers of 2 from 2⁻⁵ to 2⁸ will be provided. See the sample cover page above.

History of Computing

Part 1: Ancient History (up to 1930)
- Abacus: the first calculator, developed in Babylonia over 5000 years ago; a "finger-powered pocket" calculator
- Algorithm: a finite set of unambiguous instructions for solving a problem
  - The word derives from the name of the 9th-century mathematician Muhammad ibn Musa al-Khwarizmi, Latinized as "Algorithmi"
  - Earliest examples: 300 BC
- Early calculating machines
  - Either manual or mechanical
  - e.g., the slide rule
  - e.g., mechanical calculators (since the 1600s)
- Jacquard Loom (1801)
  - Used punch cards to define complex patterns woven into textiles
  - Punch cards were later used to store computer program code
- George Boole (1815-1864)
  - Boolean algebra
  - Laid the logical foundations of digital computing circuitry

Part 2: Birth of the Electronic Computer (1930-1951)
- Claude Shannon (1916-2001)
  - 1937: introduced the application of Boolean logic to the design of digital computing machines
  - 1948: published "A Mathematical Theory of Communication", which established the principles for encoding information so that it can be transmitted reliably by electronic means
  - Many consider him the father of the modern information age
- Von Neumann Architecture
  - "Stored program"
  - Binary internal coding
  - CPU-memory-I/O organization: a central processing unit, a memory, mass storage, and input/output (I/O)
  - "Fetch-decode-execute" instruction cycle
  - Earlier computers (e.g., ENIAC) were hard-wired to do one task; a stored-program computer can run different programs
  - The basis for modern computers
- Alan Turing (1912-1954)
  - Led the World War II research group that broke the (encrypted) code of the German Enigma machine
  - Proposed a simple, abstract universal machine model for defining computability: the Turing Machine
  - Devised the "Turing Test" for Artificial Intelligence
- The Enigma Machine
  - Invented in 1918, it was the most sophisticated code system of its day; breaking it was a priority for the Allies, as the Germans believed it was unbreakable
  - The effort to break it contributed to the electronic computing machines of the 1940s that helped decrypt German coded messages
- ENIAC (Electronic Numerical Integrator and Computer): the first general-purpose electronic digital computer
  - Commissioned by the United States Army for computing ballistic firing tables
  - Noted for its massive scale and redundant design
  - Used vacuum tubes to control the flow of electronic signals
    - Vacuum tubes are large, generate a lot of heat, and are prone to failure
  - Decimal internal coding
  - Operational in 1946
  - Programmed manually using boards, switches, and "function tables"
    - Not a "Von Neumann Architecture" machine

Part 3: Age of the Mainframes (1951-1970)
- Since the 1950s, computers have become smaller over time
  - (Figure: four generations of vacuum-tube computer circuits, showing the reduction in size during the 1950s)
- Grace Murray Hopper (1906-1992)
  - Created the first compiler because she was tired of writing machine code by hand; this greatly improved programming speed and efficiency
- The transistor (often called the most important invention of the 20th century)
  - Transistors replaced vacuum tubes, which were bulky
  - The integrated circuit allowed many transistors to be placed on a small surface
    - Lowered cost and decreased space compared to using individual transistors
    - This enabled computers and other electronic devices to become smaller and cheaper to build and maintain
  - Today, a single package of approximately 25 square centimeters can hold more than 10 billion transistors
- A hearing aid was the first device built with transistors, in 1953: the Zenith Royal-T "Tubeless" hearing aid
- (Figure: transistor radio)
- IBM System/360 (1960s)
  - Introduced in 1964
  - A family of computers with compatible architecture, covering a wide price range
  - Established the standard for mainframes for a decade and beyond
- Going to the moon
  - The Apollo space program depended on computers to calculate trajectories and control guidance
  - Trajectories were calculated using IBM mainframes
  - The onboard guidance computer had less processing power than modern appliances, but had autopilot capabilities
  - Margaret Hamilton led the team that wrote the software for the guidance computer; she is considered a pioneer in software engineering
- Gordon Bell: the "minicomputer"
  - Digital Equipment Corporation (DEC)
    - Developed the first minicomputers (1960-1983)
    - Brought computing to small businesses
    - Created major competition for IBM and Univac, which built only mainframes
  - DEC PDP series
    - Offered mainframe performance at a fraction of the cost
    - The PDP-8 was introduced at $20,000, versus $1M for a mainframe
- Specialized supercomputers
  - First developed in the late 1970s
  - High-performance systems used for scientific applications (weather forecasting, code breaking)
  - Advanced special-purpose designs
  - Supercomputers today: the IBM Summit supercomputer, used for hydrodynamics, quantum chemistry, molecular dynamics, climate modeling, and financial modeling

Part 4: Age of the Personal Computer (after 1970)
- Intel 4004 microprocessor (1972)
  - The first commercially available microprocessor; first used in a programmable calculator
  - Made the personal computer possible
  - Contained 2300 transistors and ran at 100 kHz
- Desktop and portable computers (1975 and later)
  - Use microprocessors
  - All-in-one designs, performance/price tradeoffs
  - Aimed at a mass audience
  - Personal computers and workstations
- Altair 8800 (1975) by MITS: the first kit microcomputer
- The beginning of Microsoft
  - In 1975, Bill Gates and Paul Allen approached Ed Roberts of MITS, the company that developed the Altair, and promised to deliver a BASIC interpreter for it. They did so, and from that sale, Microsoft was born.
- Radio Shack TRS-80 (1978)
  - The first plug-and-play personal computer available at retail
  - Programmed in BASIC
  - Very successful and very affordable
  - Limited commercial software
  - Created a "cottage industry"
- Osborne I (1981)
  - The first "portable" personal computer
  - Came with lots of bundled software
  - Weighed about 11 kg (24.5 lb) and sold for $1795
- IBM PC (1982)
  - IBM's first personal computer
  - A significant shift for IBM
  - Open architecture
  - Established a new standard and legitimized the personal computer
  - Operating system (OS) supplied by Microsoft
- Xerox Palo Alto Research Center (PARC)
  - Contributions to computing include:
    - Ethernet networking technology
    - Laser printers/copiers
    - The Object-Oriented Programming (OOP) paradigm
    - Workstations: the Alto and the Star were the first to use a window-based Graphical User Interface (GUI)
- Xerox Star (1981)
  - The first commercial computer to use a window-based GUI
- Apple Macintosh (1984)
  - The second personal computer with a GUI
  - Adapted from the work done at Xerox
  - Designed to be a computer appliance for "real people"
  - Introduced at the 1984 Super Bowl
- Advanced Research Projects Agency Network (ARPANET)
  - A wide-area computer network, established in 1969
  - Allowed universities to share data; the first four nodes were:
    - University of California, Los Angeles
    - University of California, Santa Barbara
    - Stanford Research Institute
    - University of Utah, CS Dept.
  - Communication protocols developed for ARPANET in the early 1980s served as the basis for the Internet
- Today's price/performance
  - Over 3 billion operations per second costs less than $300
  - Memory is measured in gigabytes, not megabytes or kilobytes
  - Secondary storage is measured in terabytes, soon to be petabytes
  - Communication speeds are measured in megabits or gigabits per second, not kilobits

Analog vs. Digital Information

Information, data, and signal
- Information: some knowledge you want to record or transmit
  - e.g., a person's weight, the current time, a picture of a cat
- Data: a representation of the above
  - e.g., a person's weight: 63 kg, 139 lb, 9 st 13 ("9 stone 13"), one average goat, or seven watermelons
  - e.g., a time: 13:34:16, 1:34:16 PM, 18:34:16 UTC, 183416Z
- Signal: some means to record or transmit the above
  - e.g., voltage, current, a handwritten note, markings on wood (not a major focus of this course)

Types of information
- Inherently continuous (infinitely many values in any range)
  - Mass
  - Temperature
  - Most other physical properties (e.g., body temperature, blood pressure)
- Inherently discrete (a finite number of values in any range)
  - Days in a week
  - Current study term
  - Names of cities
  - Number of steps walked
  - Number of students on campus today
  - Text or any other typed or written symbols

Analog vs. Digital
- The two main ways to represent information:
  - Analog data: a continuous representation, analogous to the actual information it represents
  - Digital data: a discrete representation, using a finite number of digits (or any other set of symbols) to record the data
- Can we represent continuous information using digital data?
  - A spirit (or mercury) thermometer exemplifies analog data, as it continually rises and falls in direct proportion to the temperature
  - Digital displays show (represent) information only in a discrete fashion
- What is the current temperature?
  - In principle, an analog thermometer provides infinite precision, directly corresponding to the actual temperature
  - Our reading is limited only by our ability to measure its value
- What time is it?
  - 21 hours, 4 minutes, but what about the seconds? That information has been lost in the digitization of time
- Computers are finite
  - Information often has an infinite range of values, e.g., how many real numbers are in the interval [0, 1]?
  - However, computers are finite and deterministic (i.e., not random)
    - They can only operate on a fixed amount of data at a time
    - The amount and type of data must be known ahead of time
- How can we represent information from an infinite range?
  - Represent enough of the range to meet our computational needs
- Conversion of analog to digital data takes 2 steps:
  - Sampling (discretization): converts continuous variation to discrete snapshots
    - e.g., digitization of video: 24-30 still frames per second
    - Dividing a still picture into pixels (e.g., HDTV: 1920 × 1080)
    - Digitization of audio
  - Quantization (truncation): converts an infinite range of values to a finite one
    - e.g., 1/3 = 0.3333333 (some finite number of digits)
    - π = 3.14159
    - √2 = 1.41421
- Discretization of continuous variation by sampling
  - (Figure: signal sampling representation. The continuous signal is represented by the green line, while the discrete samples are indicated by the blue vertical lines.)
- But... can the information be lost?
  - YES, some information is allowed to be lost
  - BUT we decide what can be lost at the very beginning
    - There are many mechanisms to determine proper parameters for digitizing analog data (continuous information) with as much precision as necessary, e.g., the Nyquist-Shannon sampling theorem (yes, that C. Shannon) and quantization error models
  - Any further losses are completely avoidable after digitization is performed

Bits and Bytes
- The basis for representing digital data is the binary digit (bit), with the unit symbol b
- A bit holds one of two values: 0 or 1
- Bits are often combined in groups of eight to represent data; a group of 8 bits is called a byte, with the unit symbol B
- Bits and bytes can be combined with metric prefixes for larger magnitudes, e.g., Mb for megabit and MB for megabyte

| Prefix | Symbol | Multiplier (decimal) | Multiplier (binary) |
|---|---|---|---|
| kilo | k, K | 10³ = 1000 | 2¹⁰ = 1024 (also kibi, Ki) |
| mega | M | 10⁶ = 1 000 000 | 2²⁰ = 1 048 576 (also mebi, Mi) |
| giga | G | 10⁹ | 2³⁰ (also gibi, Gi) |
| tera | T | 10¹² | 2⁴⁰ (also tebi, Ti) |

Binary vs. Decimal Multipliers
- Decimal multipliers are used for:
  - Communication (gigabit Ethernet: 1 billion bits per second)
  - Data transfer (PC3-12800 RAM transfers 12,800,000,000 bytes per second)
  - Clock rates (a 2-GHz CPU receives 2,000,000,000 ticks per second)
  - Storage, by manufacturers (10 TB: 10 trillion bytes)
  - Storage, by some operating systems (macOS, Linux)
  - DVDs
- Binary multipliers are used for:
  - Memory capacity (8 GB: 8,589,934,592 bytes)
  - Storage, by some operating systems (Windows, occasionally Linux: a 4,000,000,000,000-byte drive is shown as 3.63 TB)
  - CDs

So, why digital and why binary?
- Computers cannot work well with analog data, so we:
  - Discretize the data (i.e., break it into discrete samples)
  - Quantize the values (i.e., approximate the quantities)
- Problem: the large range of values in an analog representation
  - The low end is limited by noise (electronic, electromagnetic...)
    - Low-noise devices are more costly
    - Sometimes you hit fundamental limits (e.g., the charge of one electron)
  - The high end is limited by maximum available voltages, strength of electrical insulation, power consumption, etc.
    - High voltages increase size and (especially) power consumption
- Why do we use binary (and not decimal, etc.)?
  - Representing only one of two states benefits cost and reliability
- Benefits of digital in signal transmission (and storage)
  - Analog signals continuously fluctuate up and down in voltage
  - Digital signals have only a high (1) or low (0) state if binary, or otherwise a small number of easily distinguishable states
  - When transmitted, all electronic signals (both analog and digital) degrade as they move down a line
    - The voltage of the signal fluctuates due to "noise" produced by environmental effects
    - Similar effects occur when the signals are recorded
  - Digital: if the distortion is small enough, the signal can be completely regenerated to its original shape
  - Analog: degradation of analog signals is permanent; there is no way to tell whether a distortion was part of the original signal
- Benefits of digital for storage and compression
  - Both digital and analog data can be recorded
    - e.g., magnetic audio tapes, vinyl records, pencil drawings, VHS tapes, CDs and Blu-ray discs, USB sticks, SSDs...
    - Digital copies are completely identical to the original
    - Error-detection and error-correction codes exist for digital data
  - Most of the data we encounter has some redundancy
    - e.g., uniform areas in pictures, silence in sound, values that change in a very predictable way
    - Removing this redundancy is much easier with mathematical algorithms that work with discrete values

Digital Representation: Summary
- Digital data is easier to process
- Digital data is easier to transmit reliably
  - Digital signals can be completely regenerated (if the distortions are not too severe)
- Easier storage and compression
- Soon, we will look at how numbers, text, images, audio, etc. are stored as digital signals

Representing Numbers

DECIMAL (base 10)
- Numbers
  - Natural numbers: zero and any number obtained by repeatedly adding one to it
    - e.g., 100, 0, 45645, 32
  - Negative whole numbers: values less than 0, indicated with a "-" sign before the leftmost digit
    - e.g., -24, -1, -45645, -32
    - Side note: the hyphen (-), the minus sign (−), the en dash (–), and the em dash (the longest of the four) are four different typographical symbols; use them correctly in your writing
  - Integers: values that are either a natural number or a negative whole number
    - e.g., 249, 0, -46545, -32
  - Rational numbers: values that are integers, or the quotient of two integers (with a nonzero denominator)
    - e.g., -249, -1, 0, 3/7, -2/5
    - NOTE: π, e, √2, √5... are irrational numbers
- Positional numbers
  - 48c = forty-eight cents
  - $428 = four hundred and twenty-eight dollars
  - 1820 lb = one thousand, eight hundred and twenty pounds
  - 2108 kg = two thousand, one hundred and eight kilograms
- Positional notation
  - Allows us to count past 10 while using just 10 digits
  - Each column has an exponent (its position, counted from 0 at the right); the exponent gives the column's order of magnitude

| Exponent | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|
| Column magnitude | 10000 | 1000 | 100 | 10 | 1 |
| Digit | 2 | 7 | 9 | 1 | 6 |

$$(27916)_{10} = 2 \times 10^4 + 7 \times 10^3 + 9 \times 10^2 + 1 \times 10^1 + 6 \times 10^0 = 20000 + 7000 + 900 + 10 + 6 = 27916$$

- The decimal system is based on the number of digits (fingers) we (usually) have
  - The magnitude of each column is the base raised to its exponent
  - The magnitude of the number is determined by multiplying the magnitude of each column by the digit in that column and summing the products

Binary (base 2)
- Non-decimal number systems
  - Numbers can be in any base: 2, 3, 4... 8, 9, 10... 16...
  - Decimal is base 10 and has 10 possible digits: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9
  - Binary is base 2 and has 2 digits: 0, 1
  - Octal is base 8 and has 8 digits: 0, 1, 2, 3, 4, 5, 6, 7
  - Hexadecimal is base 16 and has 16 digits: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F
- NOTE: for a value to exist in a given base, it can only contain the digits for that base, which range from 0 up to (but not including) the base
  - e.g., what bases can these values be in?
    - 122: any base 3 or higher (e.g., octal, decimal, hexadecimal), but not binary
    - 198: base 10 or higher (decimal, hexadecimal), but not octal
    - 178: base 9 or higher (decimal, hexadecimal), but not octal
    - G1A4: base 17 or higher; not hexadecimal, since G is not a hexadecimal digit

Positional notation
- An n-digit unsigned integer in base b, where dᵢ is the digit in the i-th position (counting from 0 at the right), has the value:

$$(d_{n-1} d_{n-2} \ldots d_i \ldots d_1 d_0)_b = d_{n-1} \times b^{n-1} + d_{n-2} \times b^{n-2} + \cdots + d_1 \times b^{1} + d_0 \times b^{0}$$

- Examples:

$$(642)_{10} = 6 \times 10^2 + 4 \times 10^1 + 2 \times 10^0 = 642$$

$$(5073)_8 = 5 \times 8^3 + 0 \times 8^2 + 7 \times 8^1 + 3 \times 8^0 = 2619$$

$$(1011)_2 = 1 \times 2^3 + 0 \times 2^2 + 1 \times 2^1 + 1 \times 2^0 = 11$$

- Counting in binary: 0 (= 0), 1 (= 1), 10 (= 2), 11 (= 3), 100 (= 4), 101 (= 5), 110 (= 6), 111 (= 7), 1000 (= 8), 1001 (= 9), 1010 (= 10)
- Binary numbers and computers
  - Computers have storage units called binary digits, or bits; for instance, low voltage = 0 and high voltage = 1
  - All bits are either 1 or 0
  - Byte = 8 bits (e.g., 4 bytes = 32 bits)
  - In a single byte, bit positions 7, 6, 5, 4, 3, 2, 1, 0 have place values 2⁷, 2⁶, 2⁵, 2⁴, 2³, 2², 2¹, 2⁰ = 128, 64, 32, 16, 8, 4, 2, 1
  - There is also a representation for zero, giving 256 (2⁸) values in total
  - 1 bit: 2 values (0, 1); 2 bits: 4 values (00, 01, 10, 11); ...
  - Every additional bit doubles the range of values
  - 255 (= 128 + 64 + 32 + 16 + 8 + 4 + 2 + 1) is the largest value (in decimal) that can be expressed using 8 bits

Arithmetic in Binary
- Remember that there are only 2 digits in binary, 0 and 1
  - 0 + 0 = 0
  - 0 + 1 = 1
  - 1 + 1 = 0 with a carry of 1 (i.e., 10 in binary)

Octal notation
- Base 8: uses 8 digits, 0, 1, 2, 3, 4, 5, 6, 7
- Each position represents a power of 8

$$(1523)_8 = (1 \times 8^3) + (5 \times 8^2) + (2 \times 8^1) + (3 \times 8^0) = 512 + 320 + 16 + 3 = 851$$

- Positional notation in general: base B uses B digit symbols (0 to B-1)

Hexadecimal notation
- Base 16: uses 16 symbols, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F
- Each position represents a power of 16
- B65F in base 16 is also written 0xB65F:

$$(B65F)_{16} = (11 \times 16^3) + (6 \times 16^2) + (5 \times 16^1) + (15 \times 16^0) = 45056 + 1536 + 80 + 15 = 46687$$

Representing Text
- Character set: a list of characters and the codes used to represent each one
- ASCII: American Standard Code for Information Interchange
  - Originally 7 bits for each character, giving 128 unique characters
    - 33 of them are control characters (e.g., used by old printers)
    - The rest are decimal digits, English letters, and punctuation signs
  - Later, extended ASCII evolved so that all 8 bits were used
    - Represented lines, symbols, and letters with accents
    - Several incompatible versions
  - The first 32 codes (0-31) and the last one (127) are control characters (or hidden characters); they control how text appears, but do not appear as text
  - The decimal digits 0-9 occupy codes 48 to 57
    - e.g., the code for 6 is 48 + 6 = 54
  - Uppercase letters A-Z occupy codes 65 to 90
    - e.g., the code for J (the 10th letter) is 65 + (10 - 1) = 74
  - Lowercase letters a-z start at code 97
    - e.g., the code for j (the 10th letter) is 97 + (10 - 1) = 106
  - Corresponding uppercase and lowercase letters are separated by 32 (106 - 74 = 32)
- Later: a bigger character set table, Unicode (codes 0-127 are the same as ASCII)
- Even extended ASCII is limited
  - Symbols: a lot of common symbols are missing, e.g., ©, ™, €
  - Languages: the extended portion can sometimes be changed, but there is still no way to use multiple languages at the same time (e.g., French and Greek)
- Unicode is a superset of ASCII
  - The first 128 characters of the Unicode character set correspond exactly to ASCII
  - Uses 16 or more bits per character and can represent more than 1 million characters

Lossless Compression: When Some Things Are Redundant
- Data compression
  - It is important to store and transmit data efficiently, which leads computer scientists to find ways to compress it
  - Data compression is a reduction in the amount of space needed to store a piece of data
  - Compression ratio: the size of the compressed data divided by the size of the original data
- A data compression technique can be:
  - Lossless: the data can be retrieved without any loss of the original information
  - Lossy: some information may be lost in the process of compaction
- As examples, we look at the following 3 techniques:
- Keyword encoding
  - Frequently used words are replaced with a single character
  - e.g., as = ^, the = ~, and = +, that = $, must = &
  - Note that the characters used to encode cannot be part of the original text
  - e.g., the original paragraph has 349 characters (including spaces and punctuation) and the encoded paragraph has 314 characters
    - 349 - 314 = 35 characters saved
    - Compression ratio = 314/349 ≈ 0.90 = 90%
    - A compression ratio of 90% is not very good
- Run-length encoding (RLE)
  - A single character may be repeated over and over again in a long sequence. This type of repetition does not generally occur in English text, but often occurs in large data streams or in images representing paper documents
  - A sequence of repeated characters is replaced by:
    - a flag character,
    - followed by the repeated character,
    - followed by a single digit that indicates how many times the character is repeated
  - e.g., AAAAAAA would be encoded as *A7
  - e.g., an original text of 51 characters encodes to a string of 35 characters: 35/51 ≈ 0.686, a compression ratio of about 69%
- Huffman encoding
  - Why should the blank, which is used very frequently, take up the same number of bits as the character "X", which is seldom used in text?
  - Huffman code uses variable-length bit strings to represent each character
    - A few characters may be represented by 5 bits, another few by 6 bits, yet another few by 7 bits, and so forth
  - Challenge of the variable-length encoding idea: we need to know where one symbol ends and the next begins
    - e.g., if "e" = 01 and "q" = 0101, is "010101" "eee", "eq", or "qe"?
  - Solution: the prefix property, meaning no code is a prefix of another code
    - This enables unambiguous decoding
  - If we use only a few bits to represent characters that appear often and reserve longer bit strings for characters that do not appear often, the overall size of the document will be smaller
  - The idea comes from Morse code
    - Morse code uses a variable-length code of dots and dashes (like 0 and 1), with fewer dots and dashes for more frequent letters (a, e, i)
    - NOTE: Morse code does not have the prefix property! (e.g., the codes for A and J)
  - Example of a Huffman alphabet
    - Usually different for different input data
    - Generated during the compression step

| Huffman code | Character |
|---|---|
| 00 | A |
| 01 | E |
| 100 | L |
| 110 | O |
| 111 | R |
| 1010 | B |
| 1011 | D |

- DOORBELL = 1011 110 110 111 1010 01 100 100 = 1011110110111101001100100
- This is why you need the prefix property: since no code is a prefix of another code, the bit string can be decoded in only one way

Huffman's algorithm
- Input: symbols and their frequency counts
- Output: a binary code for each symbol
- Property: optimum compression rate with the prefix property
- Step 1: sort the symbols in ascending order of frequency
- Step 2: replace the 2 minimum-frequency symbols with one "combined symbol" (whose frequency is the sum of theirs) and place it in a 2nd sorted queue
- Step 3: repeat step 2 until only 1 symbol remains
- A binary tree results
  - Label left branches 0 and right branches 1
  - The code for each symbol is the binary sequence along the corresponding root-to-leaf path
  - This gives 1) the prefix property and 2) an optimum compression ratio
- Example: for 5 symbols with frequencies 4, 6, 11, 13, and 17:
  - Original = (4 + 6 + 11 + 13 + 17) × 8 = 408 bits (assuming extended ASCII)
  - Compressed = 112 bits
  - Compression ratio = 112 / 408 ≈ 27%

Lossless Compression: Summary
- Keyword encoding alone is the least effective of the three; Huffman coding is the most effective
- Applications:
  - Run-length encoding (RLE) is used when compressing data with lots of repetition
    - e.g., white space in faxes, any data that changes very slowly
    - Sometimes combined with others (e.g., Huffman)
  - Huffman encoding is used in a lot of algorithms as a building block, e.g., JPG, MP3, ZIP
- Huffman encoding is optimal for per-character compression
  - We can do better if we consider more symbols at a time during analysis (e.g., in English: ing, the, ed, wh, gh; keyword encoding?)
- Can we compress much better?
  - Yes, if (small) losses are acceptable: JPG, MPEG...
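The run-length encoding scheme from the compression section (flag character, then the repeated character, then a single-digit count) can be sketched in Python. This is a minimal sketch under stated assumptions, not the course's reference implementation: the flag is `*` (as in the `*A7` example) and must not appear in the input; runs shorter than four characters are copied unchanged, since a three-character code would not make them shorter; and runs longer than nine are split, because the count is a single digit. The names `rle_encode` and `rle_decode` are hypothetical.

```python
FLAG = "*"    # assumed flag character (as in the *A7 example); must not occur in the text
MIN_RUN = 4   # assumption: runs of 1-3 characters are copied as-is, since "*A3" saves nothing
MAX_RUN = 9   # the count is a single decimal digit, so longer runs are split


def rle_encode(text: str) -> str:
    """Replace each run of MIN_RUN..MAX_RUN equal characters with FLAG + char + count."""
    if FLAG in text:
        raise ValueError("the flag character cannot appear in the original text")
    out = []
    i = 0
    while i < len(text):
        # Find the end of the run that starts at i, capped at MAX_RUN characters.
        j = i
        while j < len(text) and text[j] == text[i] and j - i < MAX_RUN:
            j += 1
        run = j - i
        if run >= MIN_RUN:
            out.append(f"{FLAG}{text[i]}{run}")
        else:
            out.append(text[i] * run)
        i = j
    return "".join(out)


def rle_decode(encoded: str) -> str:
    """Expand every FLAG + char + digit triple back into the original run."""
    out = []
    i = 0
    while i < len(encoded):
        if encoded[i] == FLAG:
            char, count = encoded[i + 1], int(encoded[i + 2])
            out.append(char * count)
            i += 3
        else:
            out.append(encoded[i])
            i += 1
    return "".join(out)


print(rle_encode("AAAAAAA"))              # *A7
print(rle_decode(rle_encode("ABBBBBC")))  # ABBBBBC
```

Under these assumptions, a run of 12 identical characters becomes one nine-character triple followed by three literal characters, and decoding still restores the original exactly, which is what makes RLE lossless.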
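Huffman's algorithm as outlined in the compression section can be sketched in Python. One deliberate swap: instead of the two sorted queues described in the notes, this sketch uses Python's standard `heapq` priority queue, which likewise always removes the two lowest-frequency entries. Ties may be broken differently than in a hand-built tree, so individual codes can differ, but the total encoded length is the same optimum. The names `huffman_codes` and `decode`, and the symbol names `a` to `e`, are hypothetical; the decoding table is the Huffman alphabet from the notes.

```python
import heapq
from itertools import count


def huffman_codes(freqs: dict[str, int]) -> dict[str, str]:
    """Build a prefix-free code in which frequent symbols get shorter bit strings."""
    tiebreak = count()  # keeps heap entries comparable when frequencies are equal
    # Each heap entry is (frequency, tiebreak, tree); a tree is a symbol or a (left, right) pair.
    heap = [(f, next(tiebreak), sym) for sym, f in freqs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:  # a single symbol still needs a 1-bit code
        return {heap[0][2]: "0"}
    while len(heap) > 1:
        # Replace the two minimum-frequency entries with one combined entry.
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (f1 + f2, next(tiebreak), (left, right)))

    codes: dict[str, str] = {}

    def walk(tree, prefix: str) -> None:
        # Left branches add 0, right branches add 1; a leaf's path is its code.
        if isinstance(tree, tuple):
            walk(tree[0], prefix + "0")
            walk(tree[1], prefix + "1")
        else:
            codes[tree] = prefix

    walk(heap[0][2], "")
    return codes


def decode(bits: str, codes: dict[str, str]) -> str:
    """Read bits left to right; the prefix property makes each match unambiguous."""
    lookup = {code: sym for sym, code in codes.items()}
    out, buffer = [], ""
    for bit in bits:
        buffer += bit
        if buffer in lookup:
            out.append(lookup[buffer])
            buffer = ""
    return "".join(out)


freqs = {"a": 4, "b": 6, "c": 11, "d": 13, "e": 17}  # hypothetical symbol names
codes = huffman_codes(freqs)
compressed_bits = sum(freqs[s] * len(codes[s]) for s in freqs)
original_bits = sum(freqs.values()) * 8  # 8 bits per character (extended ASCII)
print(compressed_bits, original_bits)  # 112 408

# The Huffman alphabet from the notes, used to decode the DOORBELL example.
table = {"A": "00", "E": "01", "L": "100", "O": "110", "R": "111", "B": "1010", "D": "1011"}
print(decode("1011110110111101001100100", table))  # DOORBELL
```

For the frequencies 4, 6, 11, 13, and 17 used in the notes, the sketch reproduces the worked totals of 112 compressed bits against 408 original bits, a compression ratio of about 27%.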
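Returning to Representing Numbers: the positional-notation rule (multiply each digit by the base raised to its position, then sum the products) can be checked with a short Python sketch. The helpers `to_decimal` and `from_decimal` are hypothetical names written for this example. `from_decimal` uses repeated division by the base, a standard conversion method that these notes do not describe. Python's built-in `int(text, base)` does the same job as `to_decimal` and appears here only as a cross-check.

```python
DIGITS = "0123456789ABCDEF"  # digit symbols for bases 2 to 16


def to_decimal(text: str, base: int) -> int:
    """Positional notation: sum of digit * base**position, position 0 at the right."""
    total = 0
    for position, symbol in enumerate(reversed(text.upper())):
        digit = DIGITS.find(symbol)
        # A base-b value may only use the digits 0 .. b-1.
        if digit < 0 or digit >= base:
            raise ValueError(f"{symbol!r} is not a valid base-{base} digit")
        total += digit * base ** position
    return total


def from_decimal(value: int, base: int) -> str:
    """Repeated division: the remainders are the digits, from right to left."""
    if value == 0:
        return "0"
    digits = []
    while value > 0:
        value, remainder = divmod(value, base)
        digits.append(DIGITS[remainder])
    return "".join(reversed(digits))


# Worked examples from the notes, cross-checked against Python's built-in int(text, base).
for text, base in [("642", 10), ("5073", 8), ("1011", 2), ("1523", 8), ("B65F", 16)]:
    assert to_decimal(text, base) == int(text, base)
    print(f"({text})_{base} = {to_decimal(text, base)}")

print(from_decimal(255, 2))  # 11111111
```

The digit check in `to_decimal` is the rule from the notes in code form: "198" is rejected in base 8 because 9 and 8 are not octal digits, and "G1A4" is rejected in base 16 because G is not a hexadecimal digit.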
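The ASCII code arithmetic from Representing Text can be checked with Python's built-in `ord` and `chr`. In that section, digits start at code 48, uppercase letters at 65, lowercase letters at 97, and matching cases differ by 32. `ord` and `chr` work with Unicode code points, but because the first 128 Unicode characters are exactly ASCII, the values agree. The helper `letter_code` is a hypothetical name used only for this sketch.

```python
def letter_code(position: int, uppercase: bool = True) -> int:
    """ASCII code of the n-th letter of the alphabet (position 1 is A or a)."""
    start = 65 if uppercase else 97  # codes of "A" and "a"
    return start + (position - 1)


print(ord("6"), 48 + 6)                                    # 54 54: digits start at code 48
print(letter_code(10), chr(letter_code(10)))               # 74 J
print(letter_code(10, uppercase=False))                    # 106
print(letter_code(10, uppercase=False) - letter_code(10))  # 32: the case offset
print(chr(ord("j") - 32))                                  # J
```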
Intro to Microsoft Word

Intro
- Microsoft Word (a member of the Microsoft Office suite) is a very widely used word processor
- Word files have the extension .doc (older versions) or .docx (more recent versions)
- Word processors are computer applications used to create, format, store, and print typed documents, e.g., theses
- Other popular word processors include Google Docs, LibreOffice, Pages, Zoho Writer, Notebooks, Corel WordPerfect...

Common features
- "Word document" is another name for a Microsoft Word file
- Word documents typically contain the following objects, and more:
  - Text, with attributes including font, size, face, color, etc.
  - Pictures and drawings
  - Tables
  - Embedded PDFs and media objects such as audio and video
  - Math equations

Word document window
- Document area = workspace
- Tools are contained in the toolbar = ribbon
  - Represented by icons that describe their functions
  - Tools also reveal tips when you hover over them
- Each ribbon is divided into named groups; each group performs related functions
- Caption
  - Do not change a caption number manually: Word "remembers" only the numbers it assigns automatically, which is what lets it generate a list of numbered illustrations later
  - The caption feature also lets you change the position of the caption (usually above tables and below figures), define new labels (other than Figure, Table, and Equation) for illustrations, and set their numbering style