NETWORK APPLICATIONS:

THE BIG NOTE – A HARTMAN PRODUCTION

Really enjoying the big note? Buy me a coffee ☕

UNIT 1 – ELEMENTS OF MODERN NETWORKING:

THE KEY ELEMENTS OF A MODERN NETWORKING ECOSYSTEM:

The entire ecosystem exists to provide services to end users. The

term end user refers to the person who is working within an

enterprise or in a public setting or at home.

The user platform can be fixed (e.g. PC or workstation), portable

(e.g. laptop), or mobile (e.g. tablet or smartphone).

Users connect to network-based services and content through a

wide variety of network access facilities. These include digital

subscriber line (DSL) and cable modems, Wi-Fi and Worldwide

Interoperability for Microwave Access (WiMAX) wireless modems,

and cellular modems.

Such network access facilities enable the user to connect directly to

the Internet or to a variety of network providers, including Wi-Fi

networks and cellular networks.

Users also use network facilities to access applications and content. Application providers provide

applications, or apps, that run on the user’s platform. The application provider downloads software to

the user’s platform; however, the application service provider acts as a server or host of application

software that is executed on the provider’s platforms. A content provider is an organization or individual

that creates information, including educational or entertainment content, distributed via the Internet or

enterprise networks.

The networking ecosystem can be deployed via two different architectures:

1. Data center networking which consists of a very large number of interconnected servers.

Typically, as much as 80% of the data traffic is within the data center network, and only 20

percent relies on external networks to reach users.

2. IoT or Fog networking consists of millions of devices and the vast bulk of the data traffic to and

from these devices is machine to machine rather than user to machine.

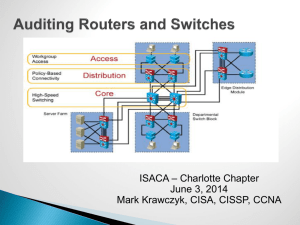

Consider two examples of networking ecosystems. Begin

with an architecture that could represent an enterprise

network of national or global extent, or a portion of the

Internet with some of its associated networks. Then present

another example to illustrate how enterprises design their

network facilities in a three-tier hierarchy: access,

distribution, and core. This example is illustrated to the

right.

Notice in the figure that the IP backbone (or core network)

typically consists of core routers, edge routers, and

aggregation routers.

Core routers are high performance routers that are

interconnected with high-volume optical links. The optical

links often use wavelength division multiplexing (WDM),

such that each link has multiple logical channels occupying

different portions of the optical bandwidth.

Edge routers are the routers that provide connectivity to external networks and users. Aggregation

routers are used within an enterprise network to connect several routers and switches to external

resources, such as an IP backbone or a high-speed WAN.

Enterprises often design their network facilities in a three-tier hierarchy of access, distribution, and core.

An access network is a local-area network (LAN) or campuswide network that consists of LAN switches (typically

Ethernet switches) and in larger LANs also consists of IP

routers that provide connectivity among the switches.

The distribution network connects access networks with

each other and with the core network. An edge router in

the distribution network connects to an edge router in an

access network to provide connectivity. This connection

between edge routers is referred to as peering.

The core network (also referred to as the backbone

network) connects geographically dispersed distribution

networks as well as provides access to other networks that

are not part of the enterprise network. The core network

uses very high-performance routers, high-capacity

transmission lines, and multiple interconnected routers for

increased redundancy and capacity.

AN OVERVIEW OF ETHERNET:

Ethernet, Wi-Fi, and 4G/5G cellular networks are the key network transmission technologies that have

evolved to support very high data rates.

ETHERNET APPLICATIONS:

Ethernet is the commercial name for a wired local-area network technology. Ethernet involves the use

of a shared physical medium, a medium access control protocol, and the transmission of data in packets.

It supports data rates up to 100Gbps and distances from a few meters to tens of kilometers. Ethernet

has become essential for supporting personal computers, workstations, servers, and massive data

storage devices in organizations large and small.

Ethernet in the Home:

Ethernet has long been used to create a local network of computers with access to the Internet via a

broadband modem/router. With the increasing availability of high-speed but low-cost Wi-Fi technology,

Ethernet has declined. Nevertheless, almost all home networking setups include some use of Ethernet.

Two recent extensions of Ethernet technology have enhanced and extended the use of Ethernet in the

home: Powerline carrier (PLC) and Power over Ethernet (PoE). The PLC extension uses the power wire as

a communication channel to transmit Ethernet packets on top of the power signal. The PoE extension

uses existing Ethernet cables to distribute power to devices on the network.

Ethernet in the Office:

Ethernet has been the dominant network technology for wired local-area networks (LANs) in the office

environment. There was some competitors such as IBM’s Token Ring LAN and the Fiber Distributed Data

Interface (FDDI), but the simplicity and wide availability of Ethernet hardware made Ethernet the

preferrable choice.

Today, the wired Ethernet technology exists side by side with the wireless Wi-Fi technology. Ethernet

retains its popularity because it can support many devices at high speeds, it isn’t subject to interference,

and it provides a security advantage as it’s resistant to eavesdropping. Therefore, a combination of

Ethernet and Wi-Fi is the most common architecture.

Ethernet in the Enterprise:

A great advantage of Ethernet is that it is possible

to scale the network both in terms of distance and

data rate with the same Ethernet protocol and

associated quality. An enterprise can easily extend

an Ethernet using a mixture of cable types and

Ethernet hardware. It can extend among several

buildings with links ranging from 10 Mbps to 100

Gbps. This is because all the hardware and

communications software conform to the same

standard within different vendor equipment.

Ethernet in the Data Center:

Ethernet is widely used in the data center where very high data rates are needed to handle massive

volumes of data among networked servers and storage units.

Two great features of the new Ethernet approach are co-located servers and storage units as well as the

backplane Ethernet. Co-located servers and storage units have high-speed Ethernet fiber links and

switches providing the needed networking infrastructure. The backplane Ethernet runs over copper

jumper cables that can provide up to 100Gbps over very short distances. The backplane Ethernet is ideal

for Blade servers where multiple server modules are housed in a single chassis.

ETHERNET STANDARDS:

10 = 10mbps

10Base2

Base = baseband signaling

10Base5

5 = 500m, 2 = 185m

10BaseT

T = twisted pair 100m

100BaseT

100 mbps

Gigabit

1000 mbps

10 GBase-?

10 gbps

100 GBase-?

100 gbps

Carrier sense multiple access

CSMA/CD

with collision detection facility

Addressing

MAC address

Framing

802.3

Thick & Thin Ethernets:

10Base5 (thick Ethernet) uses a bus topology with a thick

coaxial cable as the transmission medium.

10Base2 (thin Ethernet or Cheapernet) uses a bus topology

with a thin coaxial cable as the transmission medium.

Twisted-pair and Fiber Optic Ethernets:

10BaseT (twisted-pair Ethernet) uses a physical star topology

(the logical topology is still a bus) with stations connected by

two pairs of twisted-pair cables to the hub.

10BaseF (fiber link Ethernet) uses a star topology (the logical

topology is still a bus) with stations connected by a pair of

fiber-optic cables to the hub.

Fast Ethernet Implementation:

The two-wire implementation is called 100Base-X. It

uses either twisted-pair cables (100Base-TX) or fiberoptic cables (100Base-FX).

The four-wire implementation is designed only for

twisted-pair cables (100Base-T4).

Ethernet CSMA/CD:

Carrier sense, multiple access with collision detection (CSMA/CD) devices use the following process to

send data:

1. Every station has an equal right to the medium (multiple access)

2. Every station with a frame to send will first listen (sense) the medium. If there is no data on the

medium, the station can start sending (carrier sense).

3. It may happen that two stations both sense the

medium, find it idle, and start sending. In this case a

collision occurs. The protocol forces the station to

continue to listen to the line after sending has begun.

When collisions occur, each sending station sends a

jam signal to destroy the data on the line and after

each waits a different random amount of time (called

the backoff), it will try again. The random times

prevent the simultaneous resending of data.

Ethernet Frame Structure:

Packets sent in an Ethernet LAN are called a frame. The

Ethernet frame contains seven fields as illustrated to the right.

The preamble contains seven bytes (56 bits) of alternating 0s and 1s that alert the receiving system to

the coming fame and enable it to synchronize its input timing. The preamble is actually added at the

physical layer and is not formally part of the frame.

The start frame delimiter (SFD) is one byte (10101011) which signals the beginning of the frame. The

SFD gives the station a last chance for synchronization. The last two bits are 11 to signal that the next

field is the destination address.

The destination address (DA) is six bytes and contains the physical address of the next station. The

source address (SA) is also six bytes and contains the physical address of the sender.

The length/type has one of two meanings. If the value of the field is less than 1518 then it’s a length

field and defines the length of the data field that follows. If the value of the field is greater than 1536 it

defines the upper layer protocol that uses the services of the Internet.

The data field carries data encapsulated from the upper layer protocols. It is a minimum of 46 bytes and

has a maximum of 1500 bytes.

The CRC (CRC-32) is the last field in the 802.3 frame and contains the error detection information which

is checked at the receiver. If an error is detected the frame is dropped.

Cyclic Redundancy Check (CRC):

The cyclic redundancy check will view data bits, D, as a binary

number. It will choose a random number, R, of length r-bits

(called CRC) and choose a generator, G, of length r+1 bits such

that <D,R> is exactly divisible by G (modulo 2). The receiver

divides <D,R> by G as the receiver knows G. If there’s a non-zero remainder, an error has been detected.

CRC Example:

ETHERNET PERFORMANCE:

AN OVERVIEW OF WI-FI:

WI-FI APPLICATIONS:

Wi-Fi is standardized by IEEE 802.11. It has become the dominant technology for wireless LANs. The first

important use was in the home to replace Ethernet cabling or connecting desktop and laptop computers

with each other and the Internet.

Wi-Fi provides a cost-effective way to the Internet and is essential to implementing the Internet of

Things (IoT).

Enterprise Wi-Fi:

The economic benefit of Wi-Fi is most clearly seen in the enterprise. Approximately half of all enterprise

network traffic is via Wi-Fi rather than the traditional Ethernet.

Two trends have driven the transition to a Wi-Fi centered enterprise:

1. Demand has increased with more and more employees preferring to use laptops, tablets, and

smartphones to connect to the enterprise network.

2. The arrival of Gigabit Ethernet allows the enterprise network to support high-speed connections

to mobile devices simultaneously.

WI-FI STANDARDS:

Interoperability is essential to the success of Wi-Fi. Wi-Fi enabled devices must be able to communicate

with Wi-Fi access points regardless of the manufacturer of the device or access point.

Interoperability is guaranteed by the following 3 things:

1. IEEE 802.11 wireless LAN committee develops the protocol and signaling standards

2. The Wi-Fi alliance creates test suites to clarify interoperability for commercial products that

conform to various IEEE 802.11 standards

3. The term Wi-Fi (wireless fidelity) is used for products certified by the alliance

WI-FI PERFORMANCE:

THE DIFFERENCES BETWEEN THE FIVE GENERATIONS OF CELLULAR NETWORKS:

FIRST GENERATION (1G):

First generation cellular networks were the original cellular networks. The provided

analog traffic channels and were designed to be an extension of the public switched

telephone networks.

The most widely deployed system was the advanced mobile phone service (AMPS)

developed by AT&T. Voice transmission was purely analog and control signals were sent

over a 10kbps analog channel.

SECOND GENERATION (2G):

Second generation cellular networks were developed to provide higher-quality signals,

higher data rates for support of digital services, and greater capacity. The key differences

between 1G and 2G networks were 2G had digital traffic channels, encryption, error

detection and correction, as well as channel access.

THIRD GENERATION (3G):

The objective of third generation cellular networks was to provide fairly high-speed

wireless communication to support multimedia, data, and video in addition to voice.

Third generation cellular networks had the following design features:

-

Bandwidth

Data rate

Multirate

FOURTH GENERATION (4G):

Fourth generation cellular networks had ultra-broadband Internet access for a variety of

mobile devices, including laptops, smartphones, and tablets.

4G networks support mobile web access and high-bandwidth applications such as high

definition mobile TV, mobile video conferencing, and gaming services. 4G networks are

designed to maximize bandwidth and throughput while also maximizing spectral

efficiency.

4G networks have the following characteristics:

-

Based on an all-IP packet switched network

Support peak data rates

Dynamically share and use network resources to support more simultaneous users per cell

Support smooth handovers across heterogeneous networks

Support high QoS for next-generation multimedia applications

FIFTH GENERATION (5G):

Fifth generation cellular networks are still some years away. By 2020 the huge

amounts of data traffic generated by tablets and smartphones will be

augmented by an equally huge amount of traffic from the Internet of Things

(which includes shoes, watches, appliances, cars, thermostats, door locks, and

much more).

The focus of 5G will be on:

-

Building more intelligence into the network

Meeting service quality demands by dynamic use of priorities

Adaptive network reconfiguration

Other network management techniques

AN OVERVIEW OF CLOUD COMPUTING CONCEPTS:

Cloud computing first became available in the early 2000s. It was particularly targeted at large

enterprises but has spread to small and medium-size businesses (and recently to consumers).

Apple’s iCloud was launched in 2012 and had 20 million users within a week of the launch. Evernote

launched in 2008 and approached 100 million users in less than six years. In 2014 Google announced

that Google Drive had almost a quarter of a billion active users.

CLOUD COMPUTING CONCEPTS:

The National Institute of Standards and Technology (NIST) defines the essential characteristics of cloud

computing as:

-

-

-

-

Broad network access in terms of the

capability to access the network through

heterogeneous platforms (e.g. mobile phones,

laptops, PDAs, etc…)

Rapid elasticity in terms of the ability of users

to expand and reduce resources according to

their specific service requirement

Measured service which allows resource

usage to be monitored, controlled, and

reported

On-demand self service

Resource pooling

BENEFITS OF CLOUD COMPUTING:

Cloud computing provides economies of scale, professional network management, and professional

security management.

Another big advantage of using cloud computing to store your data and share it with others is that the

cloud provider takes care of security. Unfortunately, the customer isn’t always protected as there have

been a number of security failures among cloud providers.

CLOUD NETWORKING:

Cloud networking refers to the networks and network management functionality that must be in place

to enable cloud computing. Many cloud computing solutions rely on the Internet, but that is only a piece

of the networking infrastructure.

One example is the provisioning high-performance/high-reliability networking between the provider and

subscriber. In this case, some, or all of the traffic between an enterprise and the cloud bypasses the

Internet and uses dedicated private network facilities owned or leased by the cloud service provider.

More generally, cloud networking refers to the collection of network capabilities required to access a

cloud. This includes making use of specialized services over the Internet, linking enterprise data centers

to a cloud, and using firewalls and other network security devices at critical points to enforce access

security policies.

CLOUD STORAGE:

Cloud storage can be thought of as a subset of cloud computing. It consists of a database storage and

database applications hosted remotely on cloud servers. Cloud storage enables small businesses and

individual users to take advantage of data storage that scales with their needs and to take advantage of

a variety of database applications without having to buy, maintain, and manage the storage assets.

THE INTERNET OF THINGS:

The internet of things is a term that refers to the expanding interconnection of smart devices, ranging

from appliances to tiny sensors. A dominant theme is the embedding of short-range mobile transceivers

into a wide array of gadgets and everyday items, enabling new forms of communication between people

and things and between things themselves.

The internet of things is primarily driven by deeply embedded devices. These devices are lowbandwidth, low-repetition data capture, and low-bandwidth data-usage appliances that communicate

with each other and provide data via user interfaces.

EVOLUTION OF THE INTERNET OF THINGS:

With reference to the end systems supported, the Internet has gone through roughly four generations

of deployment culminating in the internet of things:

1. Information Technology (IT)

PCs, servers, routers, firewalls, and so on

Bought as IT devices by enterprise IT people, primarily using wired connectivity

2. Operation Technology (OT)

Machines/appliances with embedded IT built by non-IT companies such as medical

machinery, SCADA, process control, and kiosks

Bought as appliances by enterprise OT people and primary using wired connectivity

3. Personal Technology

Smartphones, tablets, and eBook readers

Bought as IT devices by consumers exclusively using wireless connectivity and often in

multiple forms of wireless connectivity

4. Sensor/Actuator Technology

Single-purpose devices

Bought by consumers, IT, and OT people exclusively using wireless connectivity,

generally of a single form, as part of larger systems

LAYERS OF THE INTERNET OF THINGS:

Sensors and Actuators:

Sensors and actuators are the “things” in the internet of things. Sensors observe their environment and

report back quantitative measurements. Actuators operate on their environment.

Connectivity:

A device may connect via either a wireless or wired link into a network to send collected data to the

appropriate data center (sensor) or receive operational commands from a controller site (actuator).

Capacity:

The network supporting the devices must be able to handle a potentially huge flow of data.

Storage:

There needs to be a large storage facility to store and maintain backups of all the collected data.

Data Analytics:

For large collections of devices, “big data” is generated which requires data analytics capabilities to

process the data flow.

NETWORK CONVERGENCE:

Network convergence refers to the merger of previously distinct telephony and information

technologies and markets. This convergence can be thought of in terms of a three-layer model of

enterprise communications:

1. Application Convergence

These are seen by the end users of a business.

Convergence integrates communications

applications with business applications.

2. Enterprise Services

At this level, the manager deals with the

information network in terms of the services that

must be available to ensure that users can take

full advantage of the applications that they use.

3. Infrastructure

The network and communications infrastructure consists of the communication links,

LANs, WANs, and Internet connections available to the enterprise. A key aspect of

convergence at this level is the ability to carry voice, image, and video over networks

that were originally designed to carry data traffic.

UNIFIED COMMUNICATIONS (UC):

Unified communications focus on the integration of real-time communication services to optimize

business processes. IP is the cornerstone on which UC systems are built.

Key elements of UC include:

-

UC systems typically provide a unified user interface and consistent user experience across

multiple devices and media

UC merges real-time communications services with non-real-time services and business process

applications

Typical Components of a UC Architecture:

UNIT 2 – PEER-TO-PEER NETWORKS:

INTRODUCTION:

DEFINITION OF P2P SYSTEMS:

There’s no universally accepted definition of P2P systems, however, there are many definitions and

there’s some common characteristics shared by most P2P systems. These common characteristics are:

-

A ‘peer’ is a computer that can act as both server and/or client.

A P2P system should consist of at least 2 or more peers.

Peers should be able to exchange resources directly among themselves. Such resources include

files, storages, information, central processing unit power and knowledge.

Dedicated servers may or may not be present in a P2P system depending on the nature of the

applications.

P2P systems without dedicated servers are sometimes described as ‘pure’ P2P systems.

Peers can join and/or leave the system freely.

ADVANTAGES OF P2P SYSTEMS:

Some benefits of P2P systems are that the workload is spread to all peers. It’s possible to have millions

of computers in a P2P network, which can deliver huge resources and power. Another benefit is that

P2P systems maximize system utilization. Many office computers are not used at all from 5p to 9am, so

P2P computers can use these resources which maximizes the utilization.

P2P systems also have the benefit of not having a single point of failure. For example, the Internet and

the Web don’t have a central point of failure. P2P network will still function when some of its peers are

not working properly. Thus, it’s more fault tolerant than other systems. This also gives P2P systems great

scalability as every peer is alike, it’s possible to add more peers to the system scaling to larger networks.

DISADVANTAGES OF P2P SYSTEMS:

Some disadvantages of P2P computing is that the peer will be more susceptible to hacker’s attacks. It’s

also difficult to enforce standards in P2P systems. P2P networks can’t guarantee that a particular

resource will be available all the time. For example, the owner may shut down their computer or delete

a file. It’s also difficult to predict the overall performance of a system. Another disadvantage is that it

can be difficult to prevent illegal uploading and downloading of copyrighted materials in a P2P system.

And lastly, popular P2P systems can generate enormous amounts of network traffic and as a result,

some universities didn’t allow their students to access some P2P applications inside the campus.

P2P TOPOLOGIES:

CENTRALIZED:

Centralized systems are the most familiar form of topology. They are typically seen

as the client/server patter used by databases, web servers, and other simple

distributed systems. All function and information is centralized into one server with

many clients connecting directly to the server to send and receive information.

Many peer-to-peer applications also have a centralized component. For example, the original Napster’s

search architecture was centralized, although the file sharing was not.

RING:

A single centralized server cannot handle high client’s load. A common solution is to

use a cluster of machines arranged in a ring to act as distributed servers.

Communication among the nodes coordinates state-sharing to provide identical

function with fail-over and load-balancing capabilities.

Ring systems are generally built on the assumption that the machines are all nearby on the network and

owned by a single organization.

HIERARCHICAL:

Hierarchical systems have a long history on the Internet. The best-known hierarchical

system on the Internet is the Domain Name Service where authority flows from the

root name servers to the server for the registered name.

The Network Time Protocol (NTP) is a protocol for synchronizing the clocks of computer systems over

networks. There are root time servers that have authoritative clocks, and other computers synchronize

to root time servers in a self-organizing tree.

DECENTRALIZED:

Decentralized topology systems are the opposite of centralized topology systems. In

decentralized topology systems, all peers communicate symmetrically and have equal

roles. Decentralized systems are not new and in fact, the Internets routing architecture

is largely decentralized with the Border Gateway Protocol between various

autonomous systems. Gnutella is probably the “purest” decentralized system used in

practice today.

HYBRID:

Real-world systems often combine several topologies into one system making a hybrid topology. Nodes

typically play multiple roles in such a system. For example, a node might have a centralized interaction

with one part of the system, while being part of a ring with other nodes.

Centralized and Ring:

A combination of centralized and ring topologies is a very common hybrid. Most web

server applications often have a ring of servers for load balancing and fail-over. The

system as a whole is a hybrid, as a centralized system for clients where the server is

itself a ring.

Centralized and Decentralized:

Combining centralized and decentralized topologies typically results in an architecture of

centralized systems embedded in decentralized systems. Most peers have a centralized

relationship to a super node forwarding all file queries to this server. Instead of super

nodes being standalone servers, they band themselves together in a decentralized

network propagating queries.

EVALUATING TOPOLOGIES:

Things to consider when deciding which topology to use are listed below:

-

Manageability (How hard is it to keep working in terms of updating, repairing, and logging?)

Information coherence (If a bit of data is found in the system, is that data correct?)

Extensibility (How easy is the system to grow?)

Fault tolerance (How well can it handle failures?)

Resistance to legal or political intervention (How hard is it to shut down?)

Security (How hard is the system to be attacked?)

Scalability (How big can the system grow?)

Centralized:

Hierarchical:

Centralized + Ring:

Ring:

Decentralized:

Centralized + Decentralized:

P2P APPLICATIONS:

P2P COMPUTING APPLICATIONS:

Some common applications of P2P computing are listed below:

-

-

-

File sharing

o Improves data availability

o Replications to compensate for failures

o E.g. Napster, Gnutella, Freenet, KaZaA, etc…

Process sharing

o For large-scale computations

o Data analysis, data mining, scientific computing, etc…

Collaborative environments

o For remote real-time human collaboration

o Instant messaging, shared whiteboards, teleconferencing, etc…

o E.g. Skype, Messenger, etc…

Some technical challenges of P2P applications are peer identification, routing protocols, network

topologies, peer discovery, communication/coordination protocols, quality of service, and security.

NAPSTER MODEL:

Created in 1999 by Shawn Fanning, Napster was a P2P application network which gives its members the

ability to connect directly to other members’ computers and search their hard drives for digital music

files to share and trade.

Members download a software package from Napster and install it on their computers. The Napster

central computer maintains directories of music files from members who are currently connected to the

network. These directories are automatically updated when a member logs on or off the network.

Whenever a member submits a request to search for a file, the central

computer provides information to the requesting member. The

requesting member can then establish a connection directly with another

member’s computer containing that particular file. The download of the

target file takes place directly between the members’ computers,

bypassing the central computer.

Over 36 million people joined the Napster community. It rapidly accelerated the development and

implementation of other P2P models. It’s main limitation was that it could only share music files, and in

July 2001 the Recording Industry Association of America (RIAA) ordered to shutdown Napster due to the

free copying of copyrighted material.

OTHER P2P SYSTEMS:

Napster was ordered to shutdown because it maintained a central directory for its members. New filesharing P2P systems bypass the legal problems as they don’t hold a central directory. They don’t even

need a central server or any company to run the system. Thus, it’s impossible to kill the network. These

new P2P systems include Gnutella, KaZaA, LimeWire, Direct Connect, etc…

THE NETWORK STRUCTURE OF GNUTELLA:

The idea of Gnutella is similar to the “search strategies” employed by humans. If a

user wants to get a particular file, they ask one of their friends. If they don’t have the

file, they will ask their friends. This request will be conveyed from one person to

another until it reaches someone who has the file. This piece of information will then

be routed to the user according to the original path.

Computers in the network have different connection speeds. A high-speed computer will connect many

computers, while the low-speed computer will only connect to a few computers. Over the course of

time, the network will have a high-speed computer in the core.

P2P AND THE INTERNET:

P2P OVERLAYS AND NETWORK SERVICES:

Peers in P2P applications communicate with other peers using messages transmitted over the Internet

or other types of networks. The protocol of various P2P applications have some common features:

-

Protocols are constructed at the application layer.

Peers have a unique identifier, which is the peer ID or peer address.

P2P protocols support some type of message-routing capability where a message intended for

one peer can be transmitted via intermediate peers to reach the destination peer.

To distinguish the operation of the P2P protocol at the application layer from the behavior of the

underlying physical network, the collection of peer connections in a P2P network is called a P2P overlay.

The image to the right shows the correspondence between peers

connecting in an overlay network with the corresponding nodes in

the underlying physical network. Peers form an overlay network

(top) use network connections in the native network (bottom). The

overlay organization is a logical view that might not directly mirror

the physical network.

OVERLAY NETWORK TYPES:

Depending on how the nodes in a P2P overlay are linked, the overlay network can be classified as either

an unstructured or structured overlay network.

Unstructured networks have the nodes linked randomly. A search in unstructured P2P is not very

efficient, and a query may not be resolved. Gnutella and Freenet are examples of unstructured P2P

networks.

Structured networks use a predefined set of rules to link nodes so that a query can be effectively and

efficiently resolved. The most common technique used for this purpose is the Distributed Hash Table

(DHT). One popular P2P file sharing protocol that uses DHT is BitTorrent.

DISTRIBUTED HASH TABLE (DHT):

DHTs distribute data items (objects) among a set of nodes according to some predefined rules. Each

peer in a DHT-based network becomes responsible for a range of data items. Both the data item and the

responsible peer are mapped to a point in a large address space of size 2𝑚 (most of the DHT

implementations use 𝑚 = 160).

The address space is designed using modular arithmetic,

which means that the points in the address space are

distributed on a circle with 2𝑚 points (0 to 2𝑚 − 1) using

clockwise direction as shown in the image to the right.

Hashing Peer/Object Identifier:

The first step in creating the DHT system is to place all peers on the address space ring. This is normally

done using a hash function that hashes the peer identifier (normally its IP address) to an m-bit integer

called a node ID.

Node ID = hash(Peer IP address)

DHT uses some of the cryptographic hash functions such as Secure Hash Algorithm (SHA-1) that are

collision resistant. The name of the object (e.g. a file) to be shared is also hashed to an m-bit integer in

the same address space called a key.

Key = hash(Object name)

In the DHT, an object is normally related to the pair (key, value) in which the key is the hash of the

object name, and the value is the object itself (or a reference to it).

Storing the Object:

There are two strategies for storing the object. The first option is the direct method where the object is

stored in the node whose ID is the closest to the key in the ring. The term closest is defined differently in

each protocol. The second option is the indirect method where the peer that owns the object keeps the

object, but a reference to the object is created and stored in the node whose ID is closest to the key

point. This means, the physical object and the reference to the object are stored in two different

locations (peers). Most DHT systems use the indirect method due to efficiency. In either case, a search

mechanism is needed to find the object if the name of the object is given.

EXAMPLE:

The normal value of 𝑚 is 160, but for the purpose of

demonstration, consider a scenario where 𝑚 = 5. The

node N5 with IP address 110.34.56.23 has a file names

“SE3314b-Assignment” that it wants to share with its

peers. The file is stored in N5, the key of the file is k14,

but the reference to the file is stored in node N17.

UNSTRUCTURED OVERLAY TOPOLOGY:

An unstructured P2P network is formed when the overlay links are established arbitrarily. Unstructured

overlays (e.g. Gnutella) organize nodes into a random graph and use floods or random walks to discover

data stored by overlay nodes.

Each node visited during a flood or random walk evaluates the query locally on the data items that it

stores. Unstructured overlays don’t impose any constraints on the node graph or on data placement

(e.g. each node can choose any other node to be its neighbor in the overlay). Unstructured overlays

cannot find rare data items efficiently, and it doesn’t guarantee that an object can be found if it exists in

the overlay.

FLOODING AND EXPANDING RING:

When each peer keeps a list of its neighbors and when this neighbor’s relations are

transitive, the result is connectivity graphs as shown to the right.

In this particular graph, peers have degree from 2-5. Increasing the degree reduces

the diameter of the overlay, but requires more storage at each peer. Peers can

echange messages with other peers in its neighbor list and messages can be a query

that contains the search criteria (such as a filename or keywords).

Flooding:

Because it’s unkown which peers in the overlay have the information, a flooding

algorithm is used. The peer tries to send a query to all its neighbors. If the neighbor

peers don’t have the information, they can in turn, forward the request to their

neighbors and so on. To prevent messages from circulating endlessly, message

identifiers are used and a TTL value is attached to a message to limit its lifetime.

Each peer has a list of neighbors. It initializes its list of

neighbors when it joins the overlay (e.g. by getting a copy

of the neighbor list of the first peer it connects to). When

the query is satisfied at some peer, a response message is

sent to the requesting peer. If the object is not found

quickly, the flooding mechanism continues to propagate

the query message along other paths until the TTL value

expires or the query is satisfied.

FloodForward(Query q, Source p)

// have we seen this query before?

if(q.id ∈ oldIdsQ) return // yes, drop it

oldIdsQ = oldIdsQ ∪ q.id // remember this query

// expiration time reached?

q.TTL = q.TTL – 1

if q.TTL ≤ 0 then return // yes, drop it

// no, forward it to remaining neighbors

foreach(s ∈ Neighbors) if(s ≠ p) FloodForward(q,s)

Expanding Ring:

The flooding mechanism creates substantial redundant messaging, which is inefficient for the network.

The search may start with a small TTL value. If this succeeds the search stops. Otherwise, the TTL value is

increased by a small amount and the query is reissued. This variation of flooding is called iterative

deepening or expanding ring.

Random Walk:

To avoid the message overhead of flooding, unstructured overlays can

use some type of random walk. In random walk, a single query

message is sent to a randomly selected neighbor. The message has a

TTL value that is decremented at each hop.

If the desired object is found, the search terminates.

Otherwise, the query fails by a timeout or an explicit

failure message. The same process may be repeated

to another randomly chose path.

To improve the response time, several random walk

queries can be issued in parallel.

RandomWalk(source, query, TTL)

if (TTL > 0) {

TTL = TTL – 1

// select next hop at random, don’t send back to source

while((next_hop = neighbors[random()]) == source){}

RandomWalk(next_hop, query, TTL)

}

STRUCTURED OVERLAY TOPOLOGY:

MOTIVATIONS AND CATEGORIES:

The earliest peer-to-peer systems used unstructured overlays that were easy to implement but had

inefficient routing and an inability to locate rare objects. These problems turned the attention to

designing overlays with routing mechanisms that are deterministic and can provide guarantees on the

ability to locate any object stored in the overlay.

The large majority of these designs used overlays with a specific routing geometry and are called

structured overlays.

STRUCTURE OVERLAYS & DIRECTED SEARCHES:

The idea of structured overlays is to assign particular nodes to hold particular content (or pointers to it

like an information booth). When a node wants that content, it goes to the node that is supposed to

have or know about it.

The challenges with this idea are making it distributed and adaptive. The responsibilities should ideally

be distributed among existing nodes in the overlay and the nodes should be able to easily join and leave

the P2P overlay. The knowledge responsibility should be distributed to joining nodes and redistributed

from leaving nodes.

Structured overlays support key-based routing such that object identifiers are mapped to the peer

identifiers address space and an object request (lookup message) is routed to the nearest peer in the

peer address space. P2P systems using key-based routing are called distributed object location and

routing (DOLR) systems. A specific type of DOLR is a distributed hash table (DHT).

Pastry:

Pastry is designed by Antony Rowstron and Peter Druschel in 2001, and it uses DHT. Nodes and data

items are identified by 𝑚-bit IDs that create an identifier space of size 2𝑚 points distributed in a circle in

the clockwise direction. The common value for 𝑚 is 128. The protocol uses the SHA-1 hashing algorithm

with 𝑚 = 128.

𝑚

In Pastry, an identifier is seen as an 𝑛-digit string in base 2𝑏 in which 𝑏 is normally 4 and 𝑛 = 𝑏 . For

instance, an identifier is a 32-digit number in base 16 (hexadecimal). In Pastry, a key is stored in the

node whose identifier is numerically closest to the key.

Each node in Pastry can resolve a query using

two entities: a routing table and a leaf set. A

routing table might look like the table to the

right.

For node 𝑁, 𝑇𝑎𝑏𝑙𝑒[𝑖, 𝑗] gives the ID of a node (if it exists) that shares the 𝑖 leftmost digits with the ID for

𝑁 and its (𝑖 + 1)th digit has a value of 𝑗. The first row (row 0) shows the list of live nodes whose

identifiers have no common prefix with 𝑁. The last row (row 31) shows the list of all live nodes that

share the leftmost 31 digits wth node 𝑁 (only the last digit is different).

For example, assume the node 𝑁 ID is

(574𝐴234𝐵12𝐸374𝐴2001𝐵23451𝐸𝐸𝐸4𝐵𝐶𝐷)16. The

value of the 𝑇𝑎𝑏𝑙𝑒[2, 𝐷] can be the identifier of a node

such as (57𝐷 … ). Note that the leftmost 2 digits are 57

which are common with the first two digits of 𝑁, but the next digit is D, which is the value corresponding

to the Dth column. If there are more nodes with the prefix 57D, the closest one, according to the

proximity metric, is chosen and its identifier is inserted in this cell.

The proximity metric is a measurement of closeness determined by the application that uses the

network. It can be based on the number of hops between the two nodes, the round-trip time between

the two nodes, or other metrics.

A leaf set is another entity used in routing and is a set of

2𝑏 identifiers (the size of a row in the routing table). The

left half of the set is a list of IDs that are numerically

smaller than the current node ID. The right half is a list of

IDs that are numerically larger than the current node ID.

The leaf set gives the identifier of 2𝑏−1 live nodes located

before the current node in the ring and the list of 2𝑏−1

nodes located after the current node in the ring.

Pastry enables the lookup

operation, where given a

key, it will find the node that

stores the information about

the key or the key itself.

Lookup (key) {

if (key is in the range of N's leaf set)

forward the message to the closest node in the leaf set

else

route (key, Table)

}

route (key, Table) {

p = length of shared prefix between key and N

v = value of the digit at position p of the key // Position starts from 0

if (Table [p, v] exists)

forward the message to the node in Table [p, v]

else

forward the message to a node sharing a prefix as long as

the current node, but numerically closer to the key.

}

Two examples of how the pastry lookup works can be seen

below.

The process of joining the ring in Pastry is as follows:

1. The new node, X, should know at least one node 𝑁0 , which should be close to X, and send a join

message to it (assume that 𝑁0 has no common prefix with X)

2. Node 𝑁0 sends the contents of its row 0 to node X. Since the two nodes have no common prefix,

node X uses the appropriate parts of this information to built its row 0.

3. Node 𝑁0 calls a lookup operation with X’s ID as a key, which will forward the join message to

node 𝑁1 whose identifier is closest to X.

4. Node 𝑁1 sends the contents of its row 1 to node X since the two nodes have one common

prefix.

5. The process continues until the routing table of node X is complete.

6. The last node in the process, which has the

longest common prefix with X, also sends its

leaf set to node X, which becomes the leaf

set of X.

Consider the example shown to the right. A new

node X with node ID n2212 uses the information in

four nodes as shown to create its initial routing

table and leaf set for joining the ring. Assume that

node 0302 is nearby to node 2212 based on the

proximity metric.

Each Pastry node periodically tests the liveness of the nodes in its leaf set and routing table by

exchanging probe messages. If a local node finds that a node in its leaf set is not responding to the

probe message, it assumes that the node has failed or departed. The local node then contacts the live

node in its leaf set with the largest identifier and repairs its leaf set with the information in the leaf set

of that node.

If a local node finds that a node in its routing table, 𝑇𝑎𝑏𝑙𝑒[𝑖, 𝑗], is not responsive to the probe message,

it sends a message to a live node in the same row and requests the identifier in 𝑇𝑎𝑏𝑙𝑒[𝑖, 𝑗] of that node.

This identifier replaces the failed or departed node.

Kademlia:

Kademlia is a DHT peer-to-peer network that is designed by Petar Maymounkov and David Mazires in

2002. Kademlia routes messages based on the distance between nodes. The distance between the two

identifiers (nodes or keys) is measured as the bitwise exclusive-or (XOR) between them. For instance, if x

and y are two identifiers, the distance between them is defined as:

𝑑𝑖𝑠𝑡𝑎𝑛𝑐𝑒(𝑥, 𝑦) = 𝑥 ⊕ 𝑦

This distance function has the properties shown in

the table to the right.

Nodes and data items are 𝑚-bit identifiers that

create an identifier space of 2𝑚 points distributed

on the leaves of a binary tree. The protocol uses the

SHA-1 hashing algorithm with 𝑚 = 160. For

example, if 𝑚 = 4, there are 16 IDs distributed on

the leaves of a binary tree as shown to the right.

In the binary tree shown to the side, 𝑘3 is stored in 𝑁3 because 3 ⊕ 3 = 0. 𝑘7 is stored in 𝑁6 not in 𝑁8

because 6 ⊕ 7 = 1 but 7 ⊕ 8 = 15. 𝑘12 is stored in 𝑁15 not in 𝑁11 because 11 ⊕ 12 = 7, but 12 ⊕

15 = 3.

Kademlia keeps only one routing table for each node

(there’s no leaf set). Each node 𝑁 divides the bianry tree

into 𝑚 subtrees. A subtree 𝑖 includes nodes that share 𝑖

leftmost bits (common prefix 𝑃) with the the node 𝑁,

and doesn’t include the node 𝑁 itself. For example, the

node 𝑁5 (0101) divides the previous tree as shown to

the right.

The routing table is made of 𝑚 rows but only one column

as can be seen to the right. The idea is the same as that

used by Pastry, but the length of the common prefix is

based on the number of bits instead of the number of

digits in base 2𝑏 .

For example, the routing table for the previous example can be found as shown below. To make the

example simple, it was assumed that each row only uses one identifier.

In the above example, it’s assumed node 𝑁0 (0000)2 receoves a lookup message to find the node

responsible for 𝑘12(1100)2.

The length of the common prefix between node 𝑁0 and 𝑘12 is 0. 𝑁0 sends the message to the node in

row 0 of its routing table, node 𝑁8. In 𝑁8, the length of the common prefix is 1. It checks row 1 and

sends the query to 𝑁15 which is responsible for 𝑘12. The routing process is then terminated and the

route is determined as 𝑁0 → 𝑁8 → 𝑁15.

For more efficieny, Kademlia requires that each row in the routing table keeps at least up to 𝐾 = 20

nodes from the corresponding subtree. For this reason, each row in the routing table is referred to as a

k-bucket. Having more than one node in each row allows the node to use an alternative node when a

node leaves the network or fails. Kademlia keeps those nodes in a bucket that has been connected in

the network for a long time.

Just as in Pastry, a node that needs to join the network needs to know at least one other node. The

joining node sends its identifier to the node as though it’s a key to be found. The response it receives

allows the new node to create its k-buckets. When a node leaves the network or fails, other nodes

update their k-buckets using the lookup process.

Chord:

Chord was published by Stoica in 2001. Chord uses 𝑚-bit numbers to identify the data items denoted as

𝑘 (for key) and to identify the peers denoted as 𝑁 (for node). The identifier space of 2𝑚 points

distributed in a circle in the clockwise direction. All arithmetic in the identifier space is done modulo 2𝑚 .

Chord recommends the cryptographic hash function SHA-1 for the identifier space generation. SHA-1

produces output of fixed length equal to 160 bits.

The closest peer 𝑁 ≥ 𝑘 is called the successor of 𝑘 and hosts the value (𝑘, 𝑣) where 𝑘 is the key (hash of

the data name) and 𝑣 is the value (information about the peer that has the actual object).

Any node should be able to resolve a query/lookup that asks

for the node identifier responsible for a given key. If a node

has no information about this key it forwards the query to

another node that may know. To do forwarding, each node

needs to know about 𝑚 successor nodes and one

predecessor node. This information is saved in a routing

table called a Finger table.

For example, consider a ring with few nodes and 𝑚 = 5 to make

the example simpler. Only the successor column from the finger

table is shown.

I Chord, the lookup operation is used to find where an object is

located among the available peers in the ring. To find the object, a

peer needs to know the node that is responsible for that object

(the peer that stores reference to that object). A peer that is the

successor of a set of keys in the ring is the responsible peer for

those keys, so finding the responsible node is actually finding the

successor of a key.

To find the successor of a key, the lookup operation is used as

follows:

1. Find the predecessors of the key (using find_predecessor

function)

2. From the predecessor node, find the next node in the ring

which is the value of finger[1]

3. If the key is located far from the current node, the node

needs the help of other nodes to find the predecessor

(using find_closest_predecessor function)

Lookup (key) {

if (the current node N is responsible for the key)

return (N’s ID)

else

return find_successor (key)

}

find_successor (id) {

x= find_ predecessor (id)

return x.finger[1]

}

find_predecessor (id) {

x=N // N is the current node

while (id Ï (x, x.finger[1]) {

x = x.find_closest_predecessor (id) // Let x find it

}

return x

}

find_closest_predecessor (id) {

for (i = m downto 1) {

if (finger [i] Î (N, id)) //N is the current node

return (finger [i])

}

return N //The node itself is closest predecessor

}

Leaving and joining of a node or a group of nodes may destabilize

the ring. Chord defines an operation called stabilize to address this issue resulting in each node in the

ring periodically using stabilize to validate the information about their successor and to let the successor

validate their information about their predecessor.

In other words:

1. Node 𝑁 uses the value of finger[1], S, to ask node 𝑆 to

return its predecessor 𝑃

2. If the return value 𝑃 from this query is between 𝑁 and 𝑆,

this means that there’s a node with the ID equal to 𝑃 that

lies between 𝑁 and 𝑆

3. Node 𝑁 makes 𝑃 its successor and notifies 𝑃 to make node

𝑁 its predecessor

Stabilize ( ) {

P= finger[1].Pre // Ask the successor to return its predecessor

if(P Î (N, finger[1]))

finger[1] =P

// P is the possible successor of N

finger[1].notify (N) // Notify P to change its predecessor

}

Notify (x) {

if (Pre = null or x Î (Pre, N))

Pre = x

}

Destabilization may change the finger table of up to 𝑚

nodes. Chord defines a fix_finger function to updates its

finger tables. Each node in the ring must periodically call

this function. To avoid traffic on the system, each node

must only update its fingers in each call. This finger is

chosen randomly.

When a new node 𝑁 joins the ring, it uses the join

operation. Join functions need to know an ID of another

node (say 𝑥) to find the successor of the new node and set

its predecessor to null. It immediately will then call the

stabilize function to validate its successor. The new node

then asks the successor to call the Move_Keys function that

transfers the keys that the new node is responsible for.

Fix_Finger () {

Generate (i Î (1, m]) // Randomly generate i such that 1< i ≤ m

finger[i] =find_successor (N + 2 i– 1) // Find value of finger[i]

}

Join (x) {

Initialize (x)

finger[1].Move_Keys (N)

}

Initialize (x) {

Pre = null

if (x = null) finger[1] = N

else finger[1] = x. Find_Successor (N)

}

Move_Keys (x) {

for (each key k)

if (x Î [k, N)) move (k to node x) // N is the current node

}

Note that, after this operation the finger table of the new joined node is empty and the finger table of

up to 𝑚 predecessors is out of date. The stabilize and fix_finger operations that run periodically after

this event will gradually stabilize the system.

When a peer leaves the ring or the peer fails, the status of the ring will be disrupted unless the ring

stabilizes itself. Each node exchanges ping and pong messages with neighbors to find out if they are

alive. When a node doesn’t receive a pong message in response to its ping message, the node knows

that the neighbor is dead. The node that detects the problem can immediately launch these stabilize

and fix finger operations. Note that the data managed by the node that left or failed is no longer

available. Therefore, Chord requires that data and references be duplicated on other nodes.

UNIT 3 – SOFTWARE DEFINED NETWORKING:

THE LIMITATIONS OF THE TRADITIONAL NETWORK ARCHITECTURES:

EVOLVING NETWORK REQUIREMENTS:

A number of trends are driving network providers and users to reevaluate traditional approaches to

network architecture. These trends can be grouped under the following categories:

-

Demand (due to the increase in cloud computing, big data, mobile traffic, IoT, etc…)

Supply (due to the increase in capacity of the network transmission technologies such as 5G)

Traffic patterns

Traditional network architectures are inadequate. The traditional internetworking approach is based on

the TCP/IP protocol architecture. There are three significant characteristics of this approach:

1. Two-level end system addressing

2. Routing based on destination

3. Distributed autonomous control

The Open Networking Foundation (ONF) cites four general limitations of traditional network

architectures:

1.

2.

3.

4.

Static and complex architecture

Inconsistent policies

Inability to scale

Vendor dependence

THE KEY REQUIREMENTS FOR AN SDN ARCHITECTURE:

PRINCIPAL REQUIREMENTS FOR A MODERN NETWORK:

Networks must adjust and respond dynamically, based on application needs, business policy, and

Adaptability

network conditions.

Policy changes must be automatically propagated so that manual work and errors can be reduced.

Automation

Maintainability Introduction of new features and capabilities (software upgrades and patches) must be seamless with

Model

Management

Mobility

Integrated

Security

On-demand

Scaling

minimal disruption of operations.

Network management software must allow management of the network at a model level, rather than

implementing conceptual changes by reconfiguring individual network elements.

Control functionality must accommodate mobility, including mobile users and virtual servers.

Network applications must integrate seamless security as a core service instead of as an add-on

solution.

Implementations must have the ability to scale up or scale down the network and its services to

support on-demand requests.

SOFTWARE DEFINED NETWORKING (SDN):

To provide adaptability and scalability, two key technologies that are rapidly being deployed by a variety

of network services and application providers are SDN and NFV. Network functions virtualization (NFV) is

outside of the scope of this course and will be covered in SE4455.

SDN is replacing the traditional networking model as it provides an enhanced level of flexibility to meet

the needs of newer networking and IT trends such as cloud, mobility, social networking, and video.

In SDN, there are two elements involved in forwarding packets

through routers.

1. A control function which decides the route for the flow to

take and the relative priority of traffic.

2. A data function which forwards data based on controlfunction policy.

To the right is a comparison of traditional networking and

the SDN approach. Note that in traditional networking

each switch has both a data and control plane within it.

This is because the routing is handled in each router in

contrast to it being centralized in one SDN controller.

The Data Plane:

The data plane consists of physical switches and virtual switches, which are responsible for forwarding

packets. The internal implementation of buffers, priority parameters, and other data structures should

be uniform and open to the SDN controllers. This can be defined in terms of an open application

programming interface (API) between the control plane and the data plane (southbound API). The most

prominent example of such an open API is OpenFlow.

The Control Plane:

SDN controllers can be implemented directly on a server or on a

virtual server. OpenFlow or some other open API is used to

control the switches in the data plane. In addition, controllers

use information about capacity and demand obtained from the

networking equipment through which the traffic flows. SDN

controllers also expose northbound APIs which allow developers

and network managers to deploy a wide range of off-the-shelf

and custom-built network applications. A number of vendors

offer a Representational State Transfer (REST)-based API to

provide a programmable interface to their SDN controller.

The Application Plane:

At the application plane there are a variety of applications that interact with SDN controllers. SDN

applications are programs that may use an abstract view of the network for their decision-making goals.

These applications convey their network requirements and desired network behavior to the SDN

controller via northbound API. Examples of applications are energy-efficient networking, security

monitoring, access control, and network management.

Characteristics of SDN:

The control plane is separated from the data plane so the data plane devices become simple packet

forwarding devices.

The control plane is implemented in a centralized controller or set of coordinated centralized

controllers. The SDN controller has a centralized view of the network or networks under its control. The

controller is portable software that can run on servers and is capable of programming the forwarding

devices based on a centralized view of the network.

The network is programmable by applications running on top of the SDN controllers. The SDN

controllers present an abstract view of network resources to the applications.

STANDARDS-DEVELOPING ORGANIZATIONS:

Unlike some technology areas such as Wi-Fi,

there’s no single standards body responsible

for developing open standards for SDN and

NFV. Rather, there’s a large and evolving

collection of standards-developing

organizations (SDOs), industrial consortia, and

open development initiatives involved in

creating standards and guidelines for SDN and

NFV.

The table to the right lists the main SDOs and

other organizations involved in the effort and

the main outcomes so far produced.

OPENDAYLIGHT AND OPENSTACK:

OpenDaylight:

OpenDaylight is an open-source software activity under the auspices of the Linux foundation. Its

member companies provide resources to develop an SDN controller for a wide range of applications. It’s

more in the nature of an open development initiative and a consortium. It also supports network

programmability via southbound protocols, programmable network services, collections of northbound

APIs, and applications.

OpenStack:

OpenStack is an open-source software project that aims to produce an open-source cloud operating

system. It provides multitenant Infrastructure as a Service (IaaS) and aims to meet the needs of public

and private clouds regardless of size, by being simple to implement and massively scalable. SDN

technology is expected to contribute to its networking part, and to make the cloud operating system

more efficient, flexible, and reliable.

THE FUNCTIONS OF THE SDN DATA PLANE:

THE SDN DATA PLANE:

The SDN data plane is referred to as the resource layer or as

the infrastructure layer, where network forwarding devices

perform the transport and processing of data according to

decisions made by the SDN control plane.

The important characteristics of the network devices in an SDN

network is that these devices perform a simple forwarding

function without embedded software to make autonomous

decisions. The data plane network devices are also called data

plane network elements or switches.

A SIMPLE FORWARDING FUNCTION:

The principal functions of the network device are the following:

-

-

Control support function

o Interacts with the SDN control layer to support

programmability via resource-control interfaces.

o The switch communicates with the controller

and the controller manages the switch via the

OpenFlow switch protocol.

Data forwarding function

o Accepts incoming data flows from other network devices and forwards them along the

data forwarding paths that have been computed and established by the SDN controller

according to the rules defined by the SDN applications.

The network device can alter the packet header before forwarding or discard the packet. As shown,

arriving packets may be placed in an input queue awaiting processing by the network device, and

forwarded packets are generally placed in an output queue awaiting transmission.

THE OPENFLOW LOGICAL ARCHITECTURE AND NETWORK PROTOCOL:

THE OPENFLOW:

There must be a common logical architecture in all network

devices to be managed by an SDN controller. The SDN controller

should see a uniform logical switch functionality. A standard

secure protocol is needed between the SDN controller and the

network device.

OpenFlow is both a protocol between SDN controllers

and network devices and a specification of the logical

structure of the network switch functionality.

OpenFlow is defined in the OpenFlow switch specification published by the Open Networking

Foundation (ONF). An SDN controller communicates with OpenFlow-compatible switches using the

OpenFlow protocol running over Transport Layer Security (TLS).

Each switch connects to other OpenFlow switches and possibly to end-user devices that are the sources

and destinations of packet flows. On the switch side, the interface is known as an OpenFlow channel.

These connections are via OpenFlow ports. An OpenFlow port also connects the switch to the SDN

controller.

Switch Ports:

OpenFlow defines three types of ports:

1. Physical port: corresponds to a hardware interface of the switch (e.g. an Ethernet switch)

2. Logical port: doesn’t correspond directly to a hardware interface of the switch and may be

defined in the switch using non-OpenFlow methods (e.g. link aggregation groups, tunnels,

loopback interfaces) and may map to various physical ports

3. Reserved port: specifies generic forwarding actions like sending to/receiving from the controller,

flooding, or forwarding using non-OpenFlow methods such as “normal” switch processing

Tables:

OpenFlow defines three types of tables:

1. Flow table: matches incoming packets to a particular flow and specifies what functions are to be

performed on the packets (often multiple flow tables are combined to operate in a pipeline

fashion)

2. Group table: when a flow is directed to a group table, it may trigger a variety of actions that

affect one or more flows

3. Meter table: consists of meter entries that can trigger a variety of performance-related actions

on a flow

Using the OpenFlow switch protocol, the controller can add, update, and delete flow entries in tables. It

can do this both reactively (in response to packets) and proactively.

Flow Tables:

Each packet that enters an OpenFlow switch passes

through one or more flow tables. Each flow table consists

of a number of rows (called entries) consisting of seven

components. The seven components are as follows:

1. Match fields: used to select packets that match the values in the fields

2. Priority: relative priority of table entries (a 16-bit field with 0 corresponding to the lowest

priority)

3. Counters: updated for matching packets (OpenFlow specification defines a variety of counters)

4. Instructions: instructions to be performed if a match occurs

5. Timeouts: maximum amount of idle time before a flow is expired by the switch

6. Cookie: 64-bit data value chosen by the controller (may be used by the controller to filter flow

statistics, flow modification, and flow deletion)

7. Flags: alter the way flow entries are managed

Match Field Categories:

1. Ingress port: the identifier of the port on this switch on which the packet arrived (may be a

physical port or a switch-defined virtual port and is required in ingress tables)

2. Egress port: the identifier of the egress port from action set (required in egress tables)

3. Ethernet source and destination addresses: each entry can be an exact address, a bit masked

value, or a wildcard value

4. Ethernet type field: indicates type of the Ethernet packet payload

5. IP: version 4 or 6

6. IPv4 or IPv6 source address and destination address: each entry can be an exact address, a bit

masked value, a subnet mask value, or a wildcard value

7. TCP source and destination ports: exact match or wildcard value

8. UDP source and destination ports: exact match or wildcard value

Counters:

Counter

Reference count (active entries)

Duration (seconds)

Received packets

Transmitted packets

Duration (seconds)

Transmit packets

Duration (seconds)

Duration (seconds)

Duration (seconds)

Usage

Per Flow Table

Per Flow Entry

Per Port

Per Port

Per Port

Per Queue

Per Queue

Per Group

Per Meter

Bit Length

32

32

64

64

32

64

32

32

32

Packet Flow Through the Processing Pipeline:

A switch includes one or more flow tables. If there’s more than one flow table, they are organized as a

pipeline where the tables are labeled with increasing numbers starting at zero. The use of multiple

tables in a pipeline (rather than a single flow table) provides the SDN controller with considerable

flexibility.

The OpenFlow specification defines two stages of

processing: Ingress processing and Egress processing.

Ingress processing always happens, beginning with Table 0, and

uses the identity of the input port. Table 0 may be the only table,

in which case the ingress processing is simplified to the

processing performed on that single table, and there’s no egress

processing.

Egress processing is the processing that happens after the

determination of the output port. It happens in the context of

the output port. This stage is optional, and if it occurs it may

involve one or more tables.

Ingress Processing:

At the final table in the pipeline, forwarding to another flow table isn’t an option. If and when a packet is

finally directed to an output port, the accumulated action set is executed and then the packet is queued

for output.

Egressing Processing:

If egress processing is associated with a particular output port, then after a packet is directed to an

output port in the ingress process, the packet is directed to the first flow table of the egress pipeline.

There’s no group table processing at the end of the egress pipeline.

Using Multiple Tables:

The use of multiple tables enables the breaking down of a single

flow into a number of parallel sub flows. The use of multiple

tables simplifies the processing in both the SDN controller and

the OpenFlow switch.

Actions such as next hop that apply to the aggregate flow can be defined once by the controller then

examined and performed once by the switch.

The addition of new subflows at any level involves less setup. Therefore, the use of pipelined, multiple

tables increases the efficiency of network operations, provides granular control, and enables the

network to respond to real-time changes at the application, user, and session levels.

The Group Tables:

During the pipeline processing, a flow table may direct a flow of packets to the group table rather than

another flow table. The group table and group actions enable OpenFlow to represent a set of ports as a

single entity for forwarding packets.

Different types of groups are provided to represent different forwarding abstractions, such as

multicasting and broadcasting.

Each group table consists of a number of rows called group entries, consisting of four components:

1. Group identifier: a 32-bit unsigned integer uniquely identifying the group (a group is defined as

an entry in the group table)

2. Group type: determines group semantics, explained in the next slide

3. Counters: updated when packets are processed by a group

4. Action buckets: an ordered list of action buckets, where each action bucket contains a set of

actions to execute

The action list is executed in sequence and generally ends with the Output action, which forwards the

packet to a specified port. The action list may also end with the Group action, which sends the packet to

another group.

A group is designated as “all”, “select”, “fast failover”, or “indirect”.

-

-

-

-

“all” executes all buckets in the group

o Each arriving packet is effectively cloned

o Each bucket will designed a different output port, so that the

incoming packet is then transmitted on multiple output ports

o This group is used for multicast

“select” executes one bucket in the group based on a switch-computed

selection algorithm (e.g. hash on some user-configured tuple or simple

round robin)

o The selection algorithm should implement equal load sharing or

load sharing based on bucket weights assigned by the SDN

controller

“fast failover” executes the first live bucket

o Port liveness is managed by code outside of the scope of

OpenFlow and may have to do with routing algorithms

o The buckets are evaluated in order, and first live bucket is

selected

o This group type enables the switch to change forwarding without

requiring a round trip to the controller

“indirect” allows multple packet flows (multiple flow table entries) to point to

a common group identifier

o This type provides for more efficient management by the controller in

certain situations

OpenFlow Protocol:

The OpenFlow protocol describes message exchanges that take place between an OpenFlow controller

and an OpenFlow switch. Typically, the protocol is implemented on top of TLS, providing a secure

OpenFlow channel.

The OpenFlow protocol enables the controller to perform add, update, and delete actions to the flow

entries in the flow tables. It supports three types of messages:

1. Controller-to-Switch

2. Asynchronous

3. Symmetric

OpenFlow Messages:

Message

Description

Controller-to-Switch

Features

Request the capabilities of a switch. Switch responds with a features reply that

specifies its capabilities.

Configuration

Set and query configuration parameters. Switch responds with parameter settings.

Modify-State

Add, delete, and modify flow/group entries and set switch port properties.

Read-State

Collect information from switch, such as current configuration, statistics, and

capabilities.

Packet-Out

Direct packet to a specified port on the switch.

Barrier

Barrier request/reply messages are used by the controller to ensure message

dependencies have been met or to receive notifications for completed operations.

Role-Request

Set or query role of the OpenFlow channel. Useful when switch connects to

multiple controllers.

Asynchronous- Set filter on asynchronous messages or query that filter. Useful when switch

Configuration

connects to multiple controllers.

Asynchronous

Packet-In

Transfer packet to controller.

Flow-Removed Inform the controller about the removal of a flow entry from a flow table.

Port-Status

Inform the controller of a change on a port.

Role-Status

Inform controller of a change of its role for this switch from master controller to

slave controller.

ControllerInform the controller when the status of an OpenFlow channel changes. This can

Status

assist failover processing if controllers lose the ability to communicate among

themselves.

Flow-Monitor

Inform the controller of a change in a flow table. Allows a controller to monitor in

real time the changes to any subsets of the flow table done by other controllers.

Symmetric

Hello

Exchanged between the switch and controller upon connection startup.

Echo

Echo request/reply messages can be sent from either the switch or the controller,

and must return an echo reply.

Error

Used by the switch or the controller to notify problems to the other side of the

connection.

Experimenter

For additional functionality.

THE FUNCTIONS OF THE SDN CONTROL PLANE: