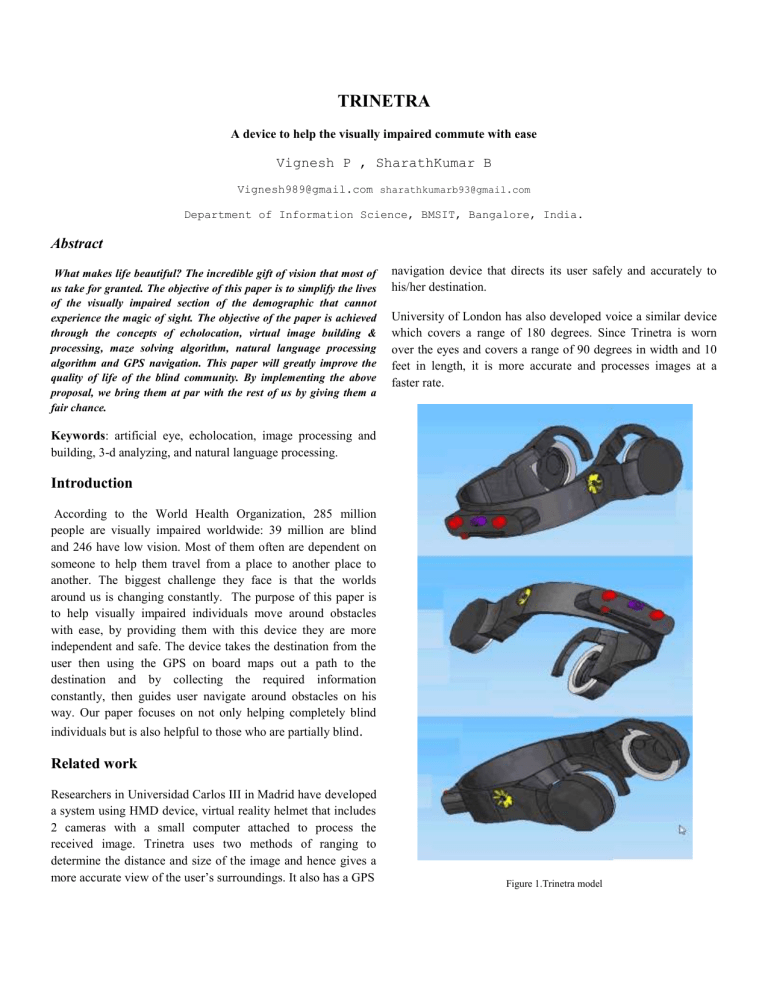

TRINETRA: A device to help the visually impaired commute with ease

Vignesh P, SharathKumar B
Vignesh989@gmail.com, sharathkumarb93@gmail.com
Department of Information Science, BMSIT, Bangalore, India.

Abstract
What makes life beautiful? The incredible gift of vision, which most of us take for granted. The objective of this paper is to simplify the lives of the visually impaired, who cannot experience the magic of sight. This objective is achieved through echolocation, virtual image building and processing, a maze-solving algorithm, a natural language processing algorithm and GPS navigation. The proposed device aims to greatly improve the quality of life of the blind community. By implementing this proposal, we hope to bring them on par with the rest of us by giving them a fair chance.

Keywords: artificial eye, echolocation, image processing and building, 3-D analysis, natural language processing.

Introduction
According to the World Health Organization, 285 million people are visually impaired worldwide: 39 million are blind and 246 million have low vision. Many of them depend on someone else to help them travel from one place to another. The biggest challenge they face is that the world around them is constantly changing. The purpose of this paper is to help visually impaired individuals move around obstacles with ease. The proposed device makes them more independent and safer.

The device takes the destination from the user and uses the on-board GPS to map out a path to it. By continuously collecting information about the surroundings, it then guides the user around obstacles along the way. It is intended not only for completely blind individuals but also for those who are partially blind.

Related work
Researchers at Universidad Carlos III in Madrid have developed a system based on a head-mounted display (HMD). This is a virtual reality helmet with two cameras and a small attached computer that processes the captured images. The University of London has also developed a similar device, which covers a range of 180 degrees.

Trinetra uses two methods of ranging to determine the distance and size of an obstacle, and hence gives a more accurate view of the user's surroundings. It also has a GPS navigation device that directs its user safely and accurately to his/her destination. Trinetra is worn over the eyes and covers a field 90 degrees wide and up to 10 feet deep. Because of this narrower coverage, it is more accurate and processes images at a faster rate.

Figure 1: Trinetra model
Figure 4: Conceptual block diagram

Problem statement
About ninety percent of the world's visually impaired live in developing countries. Therefore, as people who are gifted with sight, it is our responsibility to ensure that the disabled community leads a wholesome and effortless life.

Figure 2: Line of sight

Analysis
In a developing country like India, where many cannot afford surgery, this device is extremely helpful and cost-efficient. There are a total of 8 types of blindness. Since no cure exists for all of them, this device will act as a faithful companion that leads its user safely from one point to another.

A separate program determines the direction to take in order to avoid an obstacle. The entire echo-ranging and imaging process runs at 60 frames per second. This makes it accurate in predicting the path of moving objects as well as in locating stationary ones. The resulting information is then converted to audio by a translator that turns machine-level output into natural human language. This audio is conveyed to the user through a speaker. The language of this audio can be customized according to the user's preference.
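The direction-finding program mentioned above is the one Step 4 describes as a modified Random mouse maze solver. The paper does not say how the algorithm is modified or how the surroundings are represented. The following is therefore only a minimal sketch of the standard, unmodified Random mouse algorithm. It assumes a hypothetical occupancy grid in which `#` marks an obstacle and `.` marks free space. The mouse keeps moving without turning back and picks a random open cell at every junction. It reverses only at a dead end. The helper names `open_neighbours` and `random_mouse` are illustrative.

```python
from __future__ import annotations

import random

# Hypothetical map representation (not specified in the paper):
# a list of equal-length strings, '#' = obstacle, '.' = free space.
Grid = list[str]
Cell = tuple[int, int]  # (row, column)

MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def open_neighbours(grid: Grid, cell: Cell) -> list[Cell]:
    """Return the free cells 4-connected to `cell`."""
    r, c = cell
    result = []
    for dr, dc in MOVES:
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[nr]) and grid[nr][nc] != "#":
            result.append((nr, nc))
    return result


def random_mouse(grid: Grid, start: Cell, goal: Cell,
                 max_steps: int = 10_000, seed: int | None = None) -> list[Cell] | None:
    """Standard (unmodified) Random mouse: wander until `goal` is reached.

    The mouse never steps back into the cell it just left unless it is at a
    dead end; wherever several onward cells are open, it picks one at random.
    Returns the path walked, or None if the goal is not reached in time.
    """
    rng = random.Random(seed)
    path = [start]
    previous: Cell | None = None
    current = start
    for _ in range(max_steps):
        if current == goal:
            return path
        options = open_neighbours(grid, current)
        onward = [cell for cell in options if cell != previous]
        choices = onward or options  # turn back only at a dead end
        if not choices:
            return None  # the start cell is completely enclosed
        previous, current = current, rng.choice(choices)
        path.append(current)
    return path if current == goal else None


# Usage on a small made-up grid.
maze = [
    "....#",
    ".##.#",
    "...#.",
    "#....",
]
path = random_mouse(maze, start=(0, 0), goal=(3, 4), seed=1)
```

Random mouse needs no memory of the map, which keeps it simple. However, the path it walks can be very long. A planner such as A* would give shorter routes, at the cost of storing and searching the whole grid.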
The device uses ultrasonic sound waves, with frequencies above 20 kHz (20,000 Hz), which humans cannot hear. This also avoids undesirable audible noise and reduces interference.

The GPS navigation device is dedicated to one city or state, depending on the specific user's location and preference. Its maps can therefore be made very detailed and accurate while requiring less on-board storage.

Because infrared cameras are used as a secondary ranging device, the distance to an object can also be determined from its heat signature.

Solution Approach
We use a GPS navigation device to receive directions from the source to the destination, taking traffic constraints into account. A route map is preloaded into memory. The device then provides directions that guide its user towards the previously specified destination along the shortest available route. Obstacles encountered on the way are avoided by constantly alerting the user.

An oscillator generates a high-frequency sound that reflects back on collision with an obstacle. The device records the time taken for the sound to reflect back and the loss of intensity of the sound during this process. Using this information, the distance and density of the object can be calculated. The infrared bulb, along with the infrared camera, is then used to capture the heat signatures of the obstacle. This image is then pixelated, which gives the device an idea of the size of the obstruction. The software superimposes the virtual sound image and the IR image on each other.

STEP 1
We use a technique called flash-SONAR, also known as echolocation. An oscillator generates a high-frequency sound that reflects back on collision with an obstacle, as shown in Figure 5.
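The time-of-flight ranging described above follows a standard relation. The pulse travels to the obstacle and back, so the one-way distance is d = v·t/2, where v is the speed of sound (about 343 m/s in dry air at 20 °C).

The sketch below also shows how the pixelated IR image could supply the width that echolocation alone cannot. It assumes a pinhole-camera model whose horizontal field of view equals the 90° coverage stated for Trinetra. The function names, the 320-pixel image width and the readings are all hypothetical. The paper does not give a formula for estimating density from the intensity loss, so the sketch only expresses that loss in decibels.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in dry air at 20 °C


def echo_distance_m(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """One-way distance to the obstacle: the echo travels out and back, so d = v * t / 2."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed_m_s * round_trip_s / 2.0


def intensity_loss_db(emitted: float, received: float) -> float:
    """Loss of echo intensity in decibels: 10 * log10(emitted / received)."""
    if emitted <= 0 or received <= 0:
        raise ValueError("intensities must be positive")
    return 10.0 * math.log10(emitted / received)


def obstacle_width_m(distance_m: float, extent_px: int, image_width_px: int,
                     fov_deg: float = 90.0) -> float:
    """Approximate obstacle width from its extent in the pixelated IR image.

    Pinhole-camera assumption: at depth d the image covers 2 * d * tan(fov / 2)
    metres horizontally, spread evenly over image_width_px pixels.
    Assumption: the IR camera's field of view equals Trinetra's stated 90° coverage.
    """
    span_m = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return span_m * extent_px / image_width_px


# Made-up readings: a 10 ms echo, and an obstacle 64 pixels wide in a
# hypothetical 320-pixel-wide IR frame.
distance = echo_distance_m(0.010)             # ≈ 1.715 m
width = obstacle_width_m(distance, 64, 320)   # ≈ 0.686 m
loss = intensity_loss_db(1.0, 0.01)           # ≈ 20 dB
```

The pinhole formula is exact only for a flat obstacle facing the camera. For obstacles far off-axis, the sonar's slant range exceeds the camera depth, so the width is overestimated.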
Figure 5: Flash sonar image

Based on the reflected sound, we are able to construct a virtual image. This phase works much like a RADAR gun. Its drawback is that it cannot determine the width of the object ahead.

STEP 2
The infrared camera, together with the infrared bulbs, emits IR radiation and captures the heat signatures of the obstacle, as shown in Figure 6. Here we use a technique called V-SLAM (visual simultaneous localization and mapping). In this step, geometrical potentials and optical-flow potentials are used to build a graph-like structure. A graph-based segmentation algorithm then groups nodes with similar potentials into clusters. This structure is used to calculate multi-view geometric constraints. The resulting two-dimensional image gives an exact layout of the surroundings.

Figure 6: IR image

STEP 3
• The drawback faced in Step 1 is overcome here.
• The virtual image obtained in Step 1 and the image obtained in Step 2 are superimposed to give a fully detailed 2-D image. This determines both the distance and the size of the obstruction.

Figure 7: Superimposing of two images

STEP 4
A maze-solving algorithm called Random mouse is used, modified to manoeuvre through the obstacles.

STEP 5
We use a Natural Language Processing algorithm to translate human-understandable language into machine-level language and vice versa. The processed information is converted to audio form by this algorithm and conveyed to the user through a speaker. The language of this audio can be customized according to the user's preference (e.g., English, Hindi, Tamil, Kannada).

Budget
Table 1. The table below specifies the cost of building a prototype.

| Material | Cost (INR) |
|---|---|
| GPS navigation device | 4,000 |
| RADAR gun | 7,000 |
| Two infrared bulbs | 5,000 |
| Infrared camera | 2,500 |
| Image and sound building circuit | 3,000 |
| Processing circuit | 10,000 |
| Speaker and microphone | 500 |
| Software development | 2,50,000 |

Timeline
Figure 3: Timeline

Image processing and data processing
• We use the real-time operating system VxWorks (Wind River). It was chosen because it supports platforms such as x86, x86-64, MIPS, PowerPC, SH-4, ARM and SPARC Version 8 (V8), and can be programmed in C, C++ and Java.
• We also use a high-end GPU based on NVIDIA's Kepler architecture, together with two technologies from NVIDIA, the leading graphics card manufacturer:
  1. NVIDIA GPU Boost 2.0: intelligently monitors the GPU's workload. It offers more advanced controls, such as a GPU temperature target, overclocking and overvoltage, to keep the GPU working at peak performance.
  2. CUDA (Compute Unified Device Architecture): a parallel computing platform and programming model created by NVIDIA.

With these image-processing and data-processing units, we are able to achieve an image refresh rate of 60 frames per second.

Conclusion
Every device has its disadvantages. The disadvantages of Trinetra are:
1. Overheating of the device. This is a possible issue because a high-end GPU is used; we are working to eliminate it.
2. It is not useful for individuals who are both hearing-impaired and visually impaired.

Neither of these disadvantages is serious enough to make the device unusable or ineffective. As mentioned above, there are several types of incurable blindness, along with cases of partial blindness and night blindness. Trinetra not only makes the lives of these people easier but also makes them more independent.
By not having to depend on others to commute, the visually impaired will gain a sense of confidence which will help them face the world without feeling inferior.