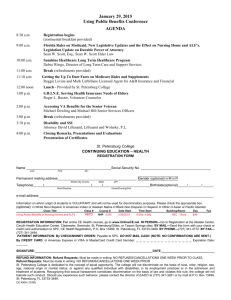

1/17/2017 The St. Petersburg Paradox (Stanford Encyclopedia of Philosophy)

Stanford Encyclopedia of Philosophy

The St. Petersburg Paradox

First published Wed Nov 4, 1998; substantive revision Mon Jun 17, 2013

The St. Petersburg game is played by flipping a fair coin until it comes up tails. The total number of flips, n, determines the prize, which equals $2^n. Thus if the coin comes up tails the first time, the prize is $2^1 = $2, and the game ends. If the coin comes up heads the first time, it is flipped again. If it comes up tails the second time, the prize is $2^2 = $4, and the game ends. If it comes up heads the second time, it is flipped again. And so on. There are infinitely many possible ‘consequences’ (runs of heads followed by one tail). The probability of a consequence of n flips, P(n), is 1 divided by 2^n, and the ‘expected payoff’ of each consequence is the prize times its probability. The following table lists these figures for the consequences where n = 1 … 10:

| n | P(n) | Prize | Expected payoff |
|---|---|---|---|
| 1 | 1/2 | $2 | $1 |
| 2 | 1/4 | $4 | $1 |
| 3 | 1/8 | $8 | $1 |
| 4 | 1/16 | $16 | $1 |
| 5 | 1/32 | $32 | $1 |
| 6 | 1/64 | $64 | $1 |
| 7 | 1/128 | $128 | $1 |
| 8 | 1/256 | $256 | $1 |
| 9 | 1/512 | $512 | $1 |
| 10 | 1/1024 | $1024 | $1 |

(Only the start of the full table, which is infinite.)

The ‘expected value’ of the game is the sum of the expected payoffs of all the consequences. Since the expected payoff of each possible consequence is $1, and there are infinitely many of them, this sum is an infinite number of dollars. A rational gambler (who values only money, and whose desire for any extra dollar does not depend on the size of her fortune) would enter a game iff the price of entry was less than the expected value. In the St. Petersburg game, any finite price of entry is smaller than the expected monetary value of the game. Thus the rational gambler would play no matter how large the finite entry price was. But it seems obvious that some prices are too high for a rational agent to pay to play.
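As a quick check on the table, here is a minimal Python sketch. It assumes the payoff rule just described: n counts every flip including the final tail, and the prize is $2^n. The helper name `expected_payoff_terms` is illustrative, not from the entry. Exact fractions show that every row contributes exactly $1, so the partial sum over the first N consequences is N and grows without bound.

```python
from fractions import Fraction


def expected_payoff_terms(max_flips: int) -> list[Fraction]:
    """Illustrative helper: P(n) * prize(n) for consequences n = 1 .. max_flips."""
    terms = []
    for n in range(1, max_flips + 1):
        probability = Fraction(1, 2 ** n)  # n - 1 heads followed by one tail
        prize = 2 ** n                     # dollars, per the rule assumed above
        terms.append(probability * prize)
    return terms


# Partial sums of the infinite expected-value series equal the cutoff itself.
for cutoff in (10, 100, 1000):
    print(cutoff, sum(expected_payoff_terms(cutoff)))
```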
Many commentators agree with Hacking's (1980: 563) estimation that “few of us would pay even $25 to enter such a game.” If this is correct, and if most of us are rational, then something has gone wrong with this way of thinking about the game. This problem was discovered by the Swiss eighteenth-century mathematician Nicolaus Bernoulli and was published by his cousin Daniel in the St. Petersburg Academy Proceedings (1738; English trans. 1954); thus it's called the St. Petersburg Paradox.

1. A Limit on Utility
2. Risk-Aversion
3. Finitely Many Consequences
4. The Average Win
5. Infinite Value?
6. Theory and Practicality

Bibliography
Works Cited
Other Discussions
Academic Tools
Other Internet Resources
Related Entries

https://plato.stanford.edu/entries/paradox-stpetersburg/

1. A Limit on Utility

Bernoulli's reaction to this problem was that one should distinguish the utility (desirability or satisfaction production) of a payoff from its dollar amount. We will come to his approach in a moment; first, consider another way (not his) of trying to solve this problem. Suppose that one reaches a saturation point for utility: at some point, a larger dose of the good in question would not be enjoyed even a little bit more. Consider someone who loves chocolate ice-cream, and for whom every tablespoon of it gives an equal pleasure, equivalent to one utile. But when he has eaten a pint of it (32 tablespoons), he suddenly can't enjoy even a tiny bit more. Thus giving him 16 tablespoons of chocolate ice-cream would provide him with 16 utiles, and 32 tablespoons with 32 utiles; but if he were given any larger quantity, he'd enjoy only 32 tablespoons of it and get 32 utiles. Now imagine a St. Petersburg game in which the prizes are as above, except in tablespoons of chocolate ice-cream.
Here is the start of the table of utiles it provides:

| n | P(n) | Prize | Utiles of prize | Expected utility |
|---|---|---|---|---|
| 1 | 1/2 | 2 tbs | 2 | 1 |
| 2 | 1/4 | 4 tbs | 4 | 1 |
| 3 | 1/8 | 8 tbs | 8 | 1 |
| 4 | 1/16 | 16 tbs | 16 | 1 |
| 5 | 1/32 | 32 tbs | 32 | 1 |
| 6 | 1/64 | 64 tbs | 32 | .5 |
| 7 | 1/128 | 128 tbs | 32 | .25 |
| 8 | 1/256 | 256 tbs | 32 | .125 |
| 9 | 1/512 | 512 tbs | 32 | .0625 |
| 10 | 1/1024 | 1024 tbs | 32 | .03125 |

The sum of the last column is now not infinite: it asymptotically approaches 6 (the first five rows contribute 1 each, and the remaining rows form the series .5 + .25 + .125 + … = 1). In the long run, a player of this game could expect an average payoff of six utiles. A rational ice-cream eater would pay anything up to the cost of 6 tablespoons of chocolate ice-cream.

I've chosen ice-cream for that example because that sort of saturation of utility might be considered less implausible than when other goods are involved. Compare the original case, where payment is in dollars. Substituting $X for X tablespoons gives the maximum utility for any dollar prize of $32 or more, and a fair entry cost of $6. But putting the saturation point there would mean that $10,000 was worth no more (provided no more utiles) than $32. Nobody feels that way. So should we raise the point at which an additional dollar has no additional value? Setting that point at (say) $17 million is better. It's such a large prize that you might feel that anything larger wouldn't be better. Setting the saturation point there, by the way, means that the maximum rational entry price for this sort of game would be a little above $25, which is Hacking's intuition about the reasonable maximum price to pay. So is that the point where the utility of money maxes out? Agreed, that's a lot of money, and it would take some imagination for some of us to figure out what to do with more; but it has seemed to most people thinking about this sort of thing that there isn't any point at which an additional dollar means literally nothing, conferring no utility at all. It seems that the-more-the-better desires are possible, even common. But that does not mean that the expected value of St. Petersburg must be infinite.
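The saturation-point figures can be checked with a short calculation. This is a sketch under the assumption used in the text: utility equals the prize up to a cap and stays at the cap thereafter. The helper `capped_expected_value` is a hypothetical name. The first k rounds, whose prizes do not exceed the cap, each contribute exactly 1 utile, and the remaining rounds form a geometric tail that sums to cap / 2^k.

```python
def capped_expected_value(cap: int) -> float:
    """Hypothetical helper: expected utility when utility = min(prize, cap), cap >= 2."""
    # k = number of rounds n whose prize 2**n does not exceed the cap,
    # i.e. floor(log2(cap)), computed exactly with integer arithmetic.
    k = cap.bit_length() - 1
    # Rounds 1..k each contribute (1 / 2**n) * 2**n = 1 utile.
    # Rounds k+1, k+2, ... each contribute cap / 2**n; that tail sums to cap / 2**k.
    return k + cap / 2 ** k


print(capped_expected_value(32))          # ice-cream case: 6.0
print(capped_expected_value(17_000_000))  # $17 million cap: a little above 25
```

With a cap of exactly 2^24 = $16,777,216 the value is exactly 25; the $17 million figure in the text lies just above that cap, which is why the result comes out slightly higher.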
There are ways to approach this such that every additional dollar confers additional utility, but the sum of the rows is not infinite. The first proposal of this sort was due to Bernoulli himself. The same paper in which he proposed this problem contains the first published exposition of the “Principle of Decreasing Marginal Utility,” which he developed to deal with St. Petersburg. This principle, later widely accepted in the theory of economic behavior, states that marginal utility (the utility obtained from consuming an extra increment of the good) decreases as the quantity consumed increases; in other words, each additional good consumed is less satisfying than the previous one. He went on to suggest that a realistic measure of the utility of money might be given by the logarithm of the amount. Here is the beginning of the table for this gamble if utiles = log($), with logarithms to base 10:

| n | P(n) | Prize | Utiles | Expected utility |
|---|---|---|---|---|
| 1 | 1/2 | $2 | 0.301 | 0.1505 |
| 2 | 1/4 | $4 | 0.602 | 0.1505 |
| 3 | 1/8 | $8 | 0.903 | 0.1129 |
| 4 | 1/16 | $16 | 1.204 | 0.0753 |
| 5 | 1/32 | $32 | 1.505 | 0.0470 |
| 6 | 1/64 | $64 | 1.806 | 0.0282 |
| 7 | 1/128 | $128 | 2.107 | 0.0165 |
| 8 | 1/256 | $256 | 2.408 | 0.0094 |
| 9 | 1/512 | $512 | 2.709 | 0.0053 |
| 10 | 1/1024 | $1024 | 3.010 | 0.0029 |

The sum of expected utilities is not infinite: it reaches a limit of about 0.602 utiles, which is log 4, the utility of $4.00. The rational gambler, then, would pay any sum less than $4.00 to play. But there is no maximum utility for any outcome: each of the possible outcomes on the list confers more utility than the one above it. Some have found this response to the paradox unsatisfactory, because Bernoulli's association of utility with the logarithm of monetary amount seems way off. On his scale, the utility gained by doubling any amount of money is the same; thus the difference in utility between $2 and $4 is the same as the difference between $512 and $1024.
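Bernoulli's logarithmic valuation can be checked the same way. This sketch assumes base-10 logarithms, which is what the table's 0.301 = log 2 implies. It also truncates the series at a finite number of terms. That is harmless here because the terms n·log 2 / 2^n shrink geometrically. The function name is illustrative.

```python
import math


def log_utility_value(max_flips: int = 200) -> tuple[float, float]:
    """Illustrative helper: expected log10-utility of St. Petersburg and its dollar equivalent."""
    expected_utility = sum(
        math.log10(2 ** n) / 2 ** n  # utiles of the prize $2**n times its probability
        for n in range(1, max_flips + 1)
    )
    certainty_equivalent = 10 ** expected_utility  # dollar amount with the same utility
    return expected_utility, certainty_equivalent


eu, dollars = log_utility_value()
print(round(eu, 3), round(dollars, 2))  # 0.602 4.0
```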
However, there are other ways of discounting utility as the total goes up which may seem more intuitively plausible (see for example Hardin 1982; Gustason 1994; Jeffrey 1983). The main point here is that if the sum of the utilities in the right-hand column approaches a limit, then the St. Petersburg problem is solved. The rational amount to pay is anything less than this limit. In his classical treatment of the problem, Menger (1967 [1934]) argues that the assumption that there is an upper limit to the utility of something is the only way that the paradox can be resolved. However, decreasing marginal utility, even when it makes the sum for this particular game approach a limit, may not solve the problem. Let us agree that money has a decreasing marginal utility, and accept (for the purposes of argument) that a reasonable calculation of the utility of any dollar amount takes the logarithm of the amount in dollars. The St. Petersburg game as proposed, then, presents no paradox, but it is easy to construct another St. Petersburg game which is paradoxical, merely by altering the dollar prizes. Suppose, for example, that instead of paying $2^n for a run of n, the prize were $10 to the power 2^n, that is, $10^(2^n). Here is the beginning of the table for this game:

| n | P(n) | Prize | Utiles of prize | Expected utility |
|---|---|---|---|---|
| 1 | 1/2 | $10^2 | 2 | 1 |
| 2 | 1/4 | $10^4 | 4 | 1 |
| 3 | 1/8 | $10^8 | 8 | 1 |
| 4 | 1/16 | $10^16 | 16 | 1 |
| 5 | 1/32 | $10^32 | 32 | 1 |
| 6 | 1/64 | $10^64 | 64 | 1 |
| 7 | 1/128 | $10^128 | 128 | 1 |
| 8 | 1/256 | $10^256 | 256 | 1 |
| 9 | 1/512 | $10^512 | 512 | 1 |
| 10 | 1/1024 | $10^1024 | 1024 | 1 |

The expected utility of this game (the sum of the infinite series of numbers in the last column) is infinite, not just very large. That means no amount of money is too large to pay for one game. The problem returns.

Bernoulli's log function is not the only way to relate dollar values to utility, but there is a general objection to all of them. Imagine a generalized paradoxical St.
Petersburg game (suggested by Paul Weirich (1984: 194), following Menger (1967 [1934])) which offers prizes in utiles instead, at the rate of 2^n utiles for a run of n (however that number of utiles is to be translated into dollars or other goods). This game would have infinite expected value, and the rational gambler should pay any amount, however large, to play.

But should we say that there's a limit to how many utiles anyone can absorb? It seems not. Even if the utility per unit of every particular good diminishes with quantity, this does not imply that the capacity to appreciate additional utiles somehow diminishes. When you're sick of ice-cream, you can still enjoy Mozart. The mega-rich always seem able to find something new that interests them. There is no law of the diminishing utility of utiles. For simplicity, we shall ignore the generalized version of the game and continue to discuss it in terms of the original dollar prizes, recognizing, however, that the diminishing marginal utility of dollars may make some revision of the prizes necessary to produce the paradoxical result.

2. Risk-Aversion

Consider the following argument. The St. Petersburg game offers the possibility of huge prizes. A run of forty would, for example, pay a whopping $1.1 trillion. Of course, this prize happens rarely: only once in about 1.1 trillion times. Half the time, the game pays only $2, and you're 75% likely to wind up with a payment of $4 or less. Your chance of getting more than $25 (the entry price which Hacking suggests is a reasonable maximum) is only one in 16. Very low payments are very probable, and very high ones very rare. It's a foolish risk to invest more than $25 to play. This sort of reasoning is appealing, and may very well account for intuitions that agree with Hacking's.
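The probabilities in this argument follow directly from P(n) = 1/2^n. Here is a minimal sketch; `prob_prize_at_most` is a hypothetical helper name, and exact fractions avoid rounding.

```python
from fractions import Fraction


def prob_prize_at_most(dollars: int) -> Fraction:
    """Hypothetical helper: probability that one game pays no more than `dollars`."""
    total = Fraction(0)
    n = 1
    while 2 ** n <= dollars:  # the prize 2**n occurs with probability 1/2**n
        total += Fraction(1, 2 ** n)
        n += 1
    return total


print(prob_prize_at_most(2))          # 1/2: the game pays only $2
print(prob_prize_at_most(4))          # 3/4: a payment of $4 or less
print(1 - prob_prize_at_most(25))     # 1/16: a payment of more than $25
print(2 ** 40, Fraction(1, 2 ** 40))  # prize for a run of forty, and its probability
```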
Many of us are risk-averse, and unwilling to gamble for a very small chance of a very large prize. There are a couple of ways of factoring in this risk-aversion. One way builds it into the utility function, so that the utility of (for example) a sure-thing $10 is higher than the utility of a 50% chance of $20. Another way counts them as the same, but adds in the negative utility of the anxiety of prior uncertainty. Weirich (1984), claiming that considerations of risk-aversion solve the St. Petersburg paradox, offers a complicated way (which we need not go into here) of including a risk-aversion factor in calculations of expected utility, with the result that there is a finite upper limit to the rational entrance fee for the game. But there are objections to this approach. For one thing, risk-aversion is not a generally applicable consideration in making rational decisions, because some people are not risk-averse. In fact, some people may enjoy risk. What should we make, for example, of those people who routinely play state lotteries, or who gamble at pure games of chance in casinos? (In these games, the entry fee is greater than the expected monetary value.) It's possible to dismiss such behavior as merely irrational, but sometimes these players explain that they enjoy the excitement of risk. Differences among people in their feelings about risk (and, for that matter, in how much decrease in the marginal utility of money they experience) might be accommodated by allowing utility functions individually tailored to particular personalities. But in any case, it's not at all clear that risk-aversion can explain why the St. Petersburg game would be widely intuited to have a fairly small maximum rational entry fee, while so many people are willing to risk very large sums of money for highly unlikely huge payoffs in other games.
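The first way of building in risk-aversion can be illustrated with a concave utility function. The square root used here is purely an assumption for illustration; the text does not commit to any particular function. With it, the sure $10 beats the 50% chance of $20, even though both have the same expected monetary value.

```python
import math


def expected_utility(lottery, utility):
    """lottery: list of (probability, dollar outcome) pairs."""
    return sum(p * utility(x) for p, x in lottery)


def sqrt_utility(dollars):
    # hypothetical concave (risk-averse) utility, chosen only for illustration
    return math.sqrt(dollars)


def linear_utility(dollars):
    # risk-neutral benchmark: utility equals money
    return dollars


sure_ten = [(1.0, 10)]
coin_flip_for_twenty = [(0.5, 20), (0.5, 0)]

print(expected_utility(sure_ten, linear_utility),
      expected_utility(coin_flip_for_twenty, linear_utility))           # 10.0 10.0
print(round(expected_utility(sure_ten, sqrt_utility), 3),
      round(expected_utility(coin_flip_for_twenty, sqrt_utility), 3))   # 3.162 2.236
```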
But even if we assume, for the purposes of argument, that risk-aversion is responsible for the intuition that the appropriate entrance fee for the St. Petersburg game is finite and small, this will not make the paradox go away, for we can again adjust the prizes to take account of this risk-aversion. It seems likely that someone who didn't like to gamble would play if the prize were increased enough. Evidence for this is that many more people enter lotteries when unusually big prizes are announced, while the risk stays more or less constant. But perhaps these considerations do not apply to all aspects of the St. Petersburg game; if it has infinite utility, then even a very high entry price is justified, and the risk of losing that amount is very high. The most compelling examples of the rational unacceptability of risk, no matter how high the prize, are the ones in which the entry price is high and the prize improbable. Imagine, for example, that you are risk-averse, and are offered a gamble in which the entry price is your life savings of (say) $100,000, and the chance of winning the prize is one in a million. It seems rational to refuse, no matter how huge the prize. Perhaps that is what explains the unwillingness to make a big investment in St. Petersburg. Note, however, that this may not be a sufficient response to the paradox. This sort of risk-aversion would also provide a psychological explanation of why (some) people are unwilling to gamble large sums when the finite expected utility is greater than the initial payment. So, for example, many people would be unwilling to risk $1000 for a one-in-a-hundred chance at winning $200,000 (expected value $2000).
If risk is not a disutility that can be compensated for by prize increase, then maybe their behavior runs counter to the expected-value theory of rational choice; and if they're rational, then maybe this shows the theory is wrong. The paradox raised by St. Petersburg, however, is not thereby fully dealt with. It is not merely a case (others of which are well known) in which apparently rational behavior disobeys the advice to maximize expected value. What's paradoxical about the St. Petersburg paradox is that its expected value is infinite.

3. Finitely Many Consequences

Gustason suggests that, in order to avoid the St. Petersburg problem, one must restrict legitimate games either to (a) those that have consequences with an upper bound on values (the possibility we've been looking at), or to (b) those in which each act has only finitely many consequences. Let us consider the imposition of restriction (b). Imagine a life insurance policy bought for someone at birth, which pays to that person's estate, at eventual death, $100 for each birthday the person has passed, without limit. What price should an insurance company charge for this policy? Standard empirically based mortality charts give the chances of living another year at various ages. Of course, they don't give the chances of surviving another year at age 140, because there's no empirical evidence available for this; but a reasonable extension of the mortality curve indefinitely beyond what's provided by available empirical evidence can be produced, and this curve asymptotically approaches zero. On this basis, ordinary mathematical techniques can give the expected value of the policy. But note that it promises to pay off without limit.
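To make the policy calculation concrete, here is a sketch of the expected payout even though there is no last possible birthday. The mortality curve is entirely hypothetical. It is a Gompertz-style law in which the yearly chance of dying is 1 − exp(−a·e^(b·age)), with made-up parameters a = 0.0001 and b = 0.09. It was chosen only because its survival curve approaches zero without ever reaching it in exact arithmetic. Discounting and the insurer's costs are ignored. Once the survival probability becomes zero in floating point, later terms add nothing, so the loop's age cutoff does not change the result.

```python
import math


def policy_expected_payout(per_birthday: float = 100.0,
                           a: float = 1e-4,
                           b: float = 0.09,
                           max_age: int = 1000) -> float:
    """Expected payout of `per_birthday` dollars for each birthday passed before death.

    The mortality law (a, b) is a hypothetical Gompertz-style curve, not real data.
    """
    alive = 1.0      # probability of reaching the current birthday
    expected = 0.0
    for age in range(max_age):
        # hypothetical probability of dying before the next birthday
        q = 1.0 - math.exp(-a * math.exp(b * age))
        # dying during this year pays for `age` birthdays passed
        expected += alive * q * per_birthday * age
        alive *= 1.0 - q
    return expected


print(round(policy_expected_payout(), 2))
```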
If we think that, for each age, there is a (large or small) probability of living another year, then there are an indefinitely large number of consequences to be considered when doing this calculation. Still, mathematics can calculate the limit of this infinite series, and (ignoring other factors) an insurance company will make a profit, in the long run, by charging more than this amount. There's no problem in calculating its expected value. This is not a “game,” of course, but it casts doubt on the necessity of restricting the consideration of probabilities and payoffs to cases with finitely many consequences.

It is often insisted that St. Petersburg, as described, is not suited to the considerations applied to ordinary games, so that thinking sensibly about it requires modifying the way it would work. These are the modifications we would expect if this game were really offered in casinos. One way to do this is to assume that the game would not run exactly as described. For example, the casino might terminate play after some number of consecutive heads, call it N, and pay off for a run of N, even though the run had not been ended by throwing tails. Thus there would be only a finite number of possible consequences. If N were set at 25, then the game would have an expected value of about $25 (exactly $26: $1 from each of the 25 possible runs ended by tails, plus $1 from the truncated run), and that would be roughly the maximum entry price which a rational agent would pay to play (as in Hacking's intuition). Do we, perhaps unconsciously, assume that casinos would truncate any run of 25 heads? Why 25? Many authors have pointed out that, practically speaking, there must be some point at which a run of heads would be truncated without a final tail. For one thing, the patience of the participants would have to end somewhere. If you think that this sets too narrow a limit N, consider the considerably higher limit set by the life-spans of the participants, or the survival of the human race, or the limit imposed by the future time when the sun explodes, vaporizing the earth.
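The truncated game's value is easy to tabulate. This is a sketch assuming the payment rule in the text. A run ended by tails on flip n (n ≤ N) pays $2^n with probability 1/2^n. If the first N flips are all heads, the casino stops and pays for a run of N. Each of the N tails-ended runs contributes $1 and the truncated run contributes one more. The value is therefore N + 1 dollars, finite for every N but growing without bound as N grows. The function name is illustrative.

```python
from fractions import Fraction


def truncated_game(max_heads: int) -> tuple[Fraction, Fraction]:
    """Illustrative helper: (total probability, expected prize) when play stops after N heads."""
    outcomes = []  # (probability, prize) pairs
    for n in range(1, max_heads + 1):
        outcomes.append((Fraction(1, 2 ** n), 2 ** n))  # tails on flip n
    # the first N flips all came up heads: stop and pay for a run of N
    outcomes.append((Fraction(1, 2 ** max_heads), 2 ** max_heads))
    total_probability = sum(p for p, _ in outcomes)
    expected_prize = sum(p * prize for p, prize in outcomes)
    return total_probability, expected_prize


for n_limit in (25, 100, 1000):
    print(n_limit, truncated_game(n_limit)[1])
```

The total probability is returned as a sanity check: the listed outcomes are exhaustive, so it is always exactly 1.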
Any of these limits produces a finite expected value for the game, but sets an N which is considerably higher than 25; what, then, explains Hacking's $25 intuition? A more realistic limit for N might be set by the finitude of the bankroll necessary to fund the game. Any casino that offers the game should (it seems) be prepared to truncate any run that, were it to continue, would cost them more than the total funds they have available for prizes. A run of 25 would require a prize of a mere $33,554,432, possibly within the reach of a larger casino. A run of 40 would require a prize of about 1.1 trillion dollars. So any casino offering St. Petersburg must truncate very long runs. If a state backing a casino were crazy enough to print up enough money to pay off a colossal prize, economic havoc would result, including massive inflation severely reducing the utility of the prize. Might these practical difficulties be circumvented by a casino's following the suggestion made by Michael Clark (2002) that an enormous win would be offered merely as credit to the winner? Would anyone believe that promise? It's of course true that any real game would impose some upper limit N, producing a finite number of possible consequences of the game; but this does not solve the St. Petersburg puzzle, because these finite games are not St. Petersburg. Our question was about the St. Petersburg game, not about its N-limited relative. Russell's Barber Paradox is resolved by showing that there is no such barber; might realistic considerations resolve the St. Petersburg paradox by showing there is no such game? Jeffrey (1983: 154) says that “anyone who offers to let the agent play the St.
Petersburg game is a liar, for he is pretending to have an indefinitely large bank.” He modifies this by imagining the indefinitely large bank of a government able to print up money at will, but points out that inflation would then make the expected utility finite. If someone without this government backing appears to be offering this game, then Jeffrey claims this offer must be “illusory”: he's not really offering St. Petersburg, but rather a Petersburgesque game in which there will be an upper bound on winnings. Now, it's true that anyone who makes this offer should realize that it includes some highly unlikely consequences he'd be unable to manage; but this is not lying. Someone who didn't realize that his finite bank couldn't cover possible consequences might sincerely offer the game, as might someone who realized that but took the risk of owing what he couldn't pay. The advice “Don't make bets you can't cover” (that is, never bet without the ability to pay for the worst outcome) has a point because sometimes people do make such bets. The St. Petersburg bet can't be covered, but it can seriously be offered. When someone offers a bet but doesn't pay off because he can't, we don't conclude that the offer was “illusory.” We persist in the belief that the offer was genuine. Maybe Jeffrey's point is really to explain why people (supposedly) wouldn't pay more than a modest amount ($25?) to play: because they wouldn't believe they are being offered a genuine St. Petersburg game. That, however, goes no way toward “Resolving the Paradox” (Jeffrey's title for this part of his book, p. 154). The paradox is that the real St. Petersburg game has infinite desirability and is a bet that can't be covered by the person offering it. The fact that we wouldn't trust anyone who appears to offer it doesn't “resolve” anything.

4. The Average Win

Expected value is in effect average payback in the long run.
Consider the graph of average wins in a series of the simple game in which you get $12 when a fair die comes up 6, and nothing otherwise, for an expected value of $12 × 1/6 = $2 per game. After one game is played, the average win is either $12 or $0. As games continue, the line representing the average so far will go up and down, but as more and more games are played, the line gets closer and closer to $2. If the cost of playing each game is $1, and one is playing very many games, one can count on coming out increasingly close to a net gain (payout minus cost) of $1 per game. A very odd casino offering this game is likely to lose on average very close to $1 per game, as many customers play one or more games each. This average over the long run is what we're supposed to consider when calculating the rationality of playing a single game; why the average result is relevant to the rationality of playing a single game is an interesting philosophical question about any sort of game, and need not concern us here. But even if every customer plays only one game, we can see that in the long run the casino will lose money, and the customers (as a group) will gain. The line graphing average wins for a series of these dice games can be expected to swing up and down with decreasing amplitude, narrowing in closer and closer to $2. But the line for a series of St. Petersburg games will behave quite differently. It will characteristically start off quite low: after all, in three quarters of the games, the payoff will be either $2 or $4. But after a while there will be a sudden spectacular jump upward, as an improbable but huge payoff occurs. Following this, the line will gradually sink, but then will jump upward for another huge payoff. These big jumps will occur increasingly rarely, but each one raises the general level of the line. A typical graph can be seen below (Hugh 2005).
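The contrast between the two kinds of graph can be reproduced roughly with a small simulation. This is a sketch, not a reconstruction of Hugh's (2005) figure. The seed, the number of games, and the checkpoints are arbitrary choices, and the St. Petersburg averages will differ between seeds. Only their lower bound of $2 is guaranteed. The function names are illustrative.

```python
import random
from typing import Callable


def dice_game(rng: random.Random) -> int:
    """$12 if a fair die shows 6, nothing otherwise (expected value $2)."""
    return 12 if rng.randint(1, 6) == 6 else 0


def st_petersburg_game(rng: random.Random) -> int:
    """Flip until tails; n counts every flip, including the final tail."""
    n = 1
    while rng.random() < 0.5:  # heads, so flip again
        n += 1
    return 2 ** n


def running_averages(play: Callable[[random.Random], int], games: int,
                     seed: int = 0,
                     checkpoints: tuple[int, ...] = (10, 100, 1_000, 10_000, 100_000)
                     ) -> dict[int, float]:
    """Average win so far at each checkpoint (seeded for repeatability)."""
    rng = random.Random(seed)
    total = 0
    averages = {}
    for i in range(1, games + 1):
        total += play(rng)
        if i in checkpoints:
            averages[i] = total / i
    return averages


for name, game in (("dice", dice_game), ("St. Petersburg", st_petersburg_game)):
    print(name, running_averages(game, 100_000))
```

The dice averages settle near $2. The St. Petersburg averages typically drift upward in jumps, as the text describes.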
Unlike the dice game's graph, the one for the St. Petersburg game approaches no limit; the longer a series of plays, the larger the average win (roughly speaking). The more games played in a casino, the larger the casino's average payout per game will get. The casino will lose in the long run, and the customers as a group will gain, no matter what price is charged per game. All this is just a recasting of what we've already talked about, from a slightly different angle. But this way of looking at things suggests some conclusions that follow, and some that don't. First, note that if you're going to play St. Petersburg with a substantial fee per game, you're likely to have to play a very, very long time before you come out with a positive net payoff. Ordinary practical considerations thus apply, as we've seen, but in theory you'd make a net gain if you continued long enough. In this respect, playing St. Petersburg is rather like the Martingale Strategy for games of chance. Here's a version of Martingale. In advance, set a target for net winnings (winnings minus losses). Bet an amount on the first game such that if you win, you'll meet your target. If you win, go home. If you lose, bet an amount on the next game such that if you win, you'll get an amount equal to your loss on the first game plus your target. If you win, go home. If you lose, bet an amount on the next game such that if you win, you'll get an amount equal to all your losses so far plus your target. Continue this till you win. One win will give you a net gain of your target. This Martingale strategy would always work, if pursued long enough. But of course there are practical difficulties. You may have to play a very long time before winning once. Worse, in a series of losses, the amount needed for the next bet rises. How much bankroll would you need to guarantee success with the Martingale?
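The growth of the required bankroll can be made explicit. This sketch assumes even-money bets, where a win pays back the stake plus an equal amount; the text does not specify the odds. Under that assumption the stakes double after each loss, and every eventual win nets exactly the target. Surviving k consecutive losses while still placing the next bet requires a bankroll of (2^(k+1) − 1) times the target. The helper names are hypothetical.

```python
def martingale_stakes(target: int, max_losses: int) -> list[int]:
    """Hypothetical helper: stakes for the first max_losses + 1 bets, assuming even-money payouts."""
    stakes = []
    lost_so_far = 0
    for _ in range(max_losses + 1):
        # an even-money win returns the stake as profit, so stake = losses so far + target
        stake = lost_so_far + target
        stakes.append(stake)
        lost_so_far += stake  # total lost if this bet also loses
    return stakes


def bankroll_needed(target: int, max_losses: int) -> int:
    """Money required to survive max_losses straight losses and still place the next bet."""
    return sum(martingale_stakes(target, max_losses))


print(martingale_stakes(10, 5))  # [10, 20, 40, 80, 160, 320]
print(bankroll_needed(10, 20))   # 20971510
```

Even that bankroll of over $20 million, chasing a $10 target, is wiped out by 21 straight losses. At fair even-money odds this happens with probability 2^-21. That is the sense in which no finite bankroll guarantees success.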
Whatever bankroll you take into the casino, there's a chance that it will not be enough for a successful Martingale. Similarly, whatever the cash reserves of the casino, there's a chance they will not be enough to pay off a big St. Petersburg winner. So we should be clear about both St. Petersburg and Martingale. With a finite but very large bankroll, a Martingale player might be likely to win, but there's a chance that he'll run out of money to invest before that. In practical terms, the Martingale player could be certain of winning only if he could be certain that his bankroll would survive any number of consecutive losses. And he can't be certain of this. Similarly, with a finite but very large reserve, a casino would be likely to make money offering a high-entry-payment St. Petersburg, but there's a chance, increasing with more plays, that it will be caught with insufficient funds.

5. Infinite Value?

The St. Petersburg game is sometimes dismissed because it has infinite expected value, which is, it's argued, not merely practically impossible but theoretically objectionable: beyond the reach even of thought-experiment. But is it? Imagine you were offered the following deal. For a price to be negotiated, you will be given permanent possession of a cash machine with the following unusual property: every time you punch in a dollar amount, that amount is extruded. This is not a withdrawal from your account; neither will you later be billed for it. You can do this as often as you care to. Now, how much would you offer to pay for this machine? Do you find it impossible to perform this thought-experiment, or to come up with an answer? Perhaps your answer is: any price at all.
Provided that you can defer payment of the initial price for a suitable time after receiving the machine, you can collect whatever you need to pay for it from the machine itself. Of course, there are practical considerations: how long would it take you to collect its enormous purchase price from the machine? Would you (or the machine) be worn out or dead before you are finished? Any bank would be crazy to offer to sell you an infinite cash machine (and unfortunately I seem to have lost the address of the crazy bank which has made this offer). But so what? The point is that there appears to be nothing unthinkable about this thought experiment. It imagines an action (buying the machine) with no upper limit on expected value. It seems unlikely that your intuitions tell you to offer (say) $25 at most for this machine. But the only difference between this machine and a single-play St. Petersburg game is that this machine guarantees an indefinitely large number of payouts, while the game offers a one-time lottery from among an indefinitely large number of possible payouts, each with a certain probability. The difference, in other words, is the probability factor: the same difference that exists between a game which gives you a guaranteed prize of $5 and one which gives you half a chance of $10 and half a chance of $0. The expected values of the St. Petersburg game and of the infinite cash machine are both indefinitely large. You should offer any price at all for either. We'd suspect, in real life, that the offer of either was illusory. But nevertheless it seems that the notion of infinite expected value is at least thinkable. Of course, that notion does create havoc. Suppose you assign infinite value to going to heaven. God tells you that you'd increase your chances of going to heaven, currently zero, by 1% for every good deed you do from now on, making heaven certain after the hundredth. The expected value of doing only one good deed is .01 × ∞.
But this equals the expected value of doing 100 good deeds: 1.00 × ∞. So you should help that old person across the street, and then lapse back into your old complete selfishness. But that conclusion is crazy. (Jeffrey (1984: 153) gives what's basically this example under the title “De contemptu mundi”.) And unbounded parameters can play havoc with considerations of rational choice. Here's a practical example of that from real life. Since home computers were introduced, they have steadily been getting better and cheaper. Should you buy a new one now? No, it's better to make do with the one you have now, and wait a few months till better and cheaper ones come out. But, on the assumption that this will always be the case, it's always a good idea to wait; so you'll never buy a new one, no matter how good and cheap they get. Where's the mistake in this reasoning? We can avoid this paradox by introducing some “realistic” considerations, not mentioned in the story given, as we can for St. Petersburg. But if we don't change the story or introduce additional information, crazy things follow from the computer story and from St. Petersburg. That's why they're both paradoxes.

6. Theory and Practicality

The St. Petersburg game is one of a large number of examples which have been brought against standard (unrestricted) Bayesian decision theory. Each example is supposed to be a counterexample to the theory because of one or both of these features:

1. the theory, in the application proposed by the example, yields a choice people really do not, or would not, make; thus it is descriptively inadequate.
2. the theory, in the application proposed by the example, yields a choice people really ought not to make, or which a fully, ideally rational person would not make; thus it is normatively inadequate.
If you see the standard theory as normative, you can ignore objections of the first type. People are not always rational, and some people are rarely rational, and an adequate descriptive theory must take into account the various irrational ways people really do make decisions. It's no surprise that the classical, rather a-prioristic theory fails to be descriptively adequate, and to criticize it on these grounds rather misses its normative point. The objections from standpoint (2) need to be taken more seriously, and we have been treating the responses to St. Petersburg as cases of this sort. Various sorts of “realistic” considerations have been adduced to show that the conclusion the theory draws in the St. Petersburg game about what a rational agent should do is incorrect. It's concluded that the unrestricted theory must be wrong, and that it must be restricted to prevent the paradoxical St. Petersburg result. When considering the plausibility of restricting expected value calculations in various ways that would take care of the paradox, Amos Nathan (1984: 133) remarks:

“it ought, however, to be remembered that important and less frivolous application of [unrestricted-value] games have nothing to do with gambling and lie in the physical world where practical limitations may assume quite a different dimension.”

Nathan doesn't mention any physical applications of analogous infinite value calculations. But it's nevertheless plausible to think that imposing restrictions on the theory to rule out the St. Petersburg bath water would throw out some babies as well. Any theoretical model is an idealization, leaving aside certain practicalities. “From the mathematical and logical point of view,” observes Resnik (1987: 108), “the St. Petersburg paradox is impeccable.” But this is the point of view to be taken when evaluating a theory per se (though not the only point of view ever to be taken).
By analogy, the aesthetic evaluation of a movie does not take into account the facts that the only local showing of the movie is far away, and that finding a babysitter at this point would be very difficult. If aesthetic theory tells you that the movie is wonderful, but other considerations show you that you shouldn't go, this isn't a defect in aesthetic theory. Similarly, the mathematical/logical theory for explaining ordinary casino games is not defective because it ignores practicalities encountered in making a real gambling decision. For example, in deciding whether to raise, see, fold, or cash in and go home in a particular poker game, you must consider the facts that it's 5 A.M. and you are cross-eyed from fatigue and drink; but these are matters with which the mathematical theory, which considers only the value of the pot and the probability of winning the hand, has no concern. Döring and Feger (2010: 93–94) see decision theory as a normative theory for evaluating actions by considering expected payoffs. Accordingly, they argue, “realism”, which introduces practical considerations such as real-life limitations on money supplies, or time, or whether anyone will really offer the game, is irrelevant. That sort of decision theory tells us that no amount is too great to pay as an ideally rationally acceptable entrance fee for St. Petersburg: a strange result, but one that does not force the conclusion that there's something wrong with the theory. We might just try to accept the strange result. As Clark (2002: 176) says, “This seems to be one of those paradoxes which we have to swallow.”

Bibliography

Works Cited

Bernoulli, Daniel, 1954 [1738], “Exposition of a New Theory on the Measurement of Risk”, Econometrica, 22: 23–36.
Clark, Michael, 2002, “The St. Petersburg Paradox”, in Paradoxes from A to Z, London: Routledge, pp. 174–177.
Döring, Sabine and Fretz Feger, 2010, “Risk Assessment as Virtue”, in Emotions and Risky Technologies (The International Library of Law and Technology 5), Sabine Röser (ed.), New York: Springer, pp. 91–105.
Gustason, William, 1994, Reasoning from Evidence, New York: Macmillan College Publishing Company.
Hacking, Ian, 1980, “Strange Expectations”, Philosophy of Science, 47: 562–567.
Hardin, Russell, 1982, Collective Action, Baltimore: The Johns Hopkins University Press.
Jeffrey, Richard C., 1983, The Logic of Decision, Second Edition, Chicago: University of Chicago Press.
Menger, Karl, 1967 [1934], “The Role of Uncertainty in Economics”, in Essays in Mathematical Economics in Honor of Oskar Morgenstern, Martin Shubik (ed.), Princeton: Princeton University Press.
Nathan, Amos, 1984, “False Expectations”, Philosophy of Science, 51: 128–136.
Resnik, Michael D., 1987, Choices: An Introduction to Decision Theory, Minneapolis: University of Minnesota Press.
Weirich, Paul, 1984, “The St. Petersburg Gamble and Risk”, Theory and Decision, 17: 193–202.

Other Discussions

Arntzenius, Frank, Adam Elga, and John Hawthorne, 2004, “Bayesianism, Infinite Decisions, and Binding”, Mind, 113: 251–283.
Ball, W. W. R. and H. S. M. Coxeter, 1987, Mathematical Recreations and Essays, 13th ed., New York: Dover.
Bernstein, Peter, 1996, Against the Gods: The Remarkable Story of Risk, New York: John Wiley & Sons.
Cowen, Tyler and Jack High, 1988, “Time, Bounded Utility, and the St Petersburg Paradox”, Theory and Decision: An International Journal for Methods and Models in the Social and Decision Sciences, 25: 219–223.
Gardner, Martin, 1959, The Scientific American Book of Mathematical Puzzles & Diversions, New York: Simon and Schuster.
Kamke, E., 1932, Einführung in die Wahrscheinlichkeitstheorie, Leipzig: S. Hirzel.
Keynes, J. M. K., 1988, “The Application of Probability to Conduct”, in The World of Mathematics, Vol. 2, K. Newman (ed.), Redmond, WA: Microsoft Press.
Kraitchik, M., 1942, “The Saint Petersburg Paradox”, in Mathematical Recreations, New York: W. W. Norton, pp. 138–139.
Todhunter, I., 1949 [1865], A History of the Mathematical Theory of Probability, New York: Chelsea.

Other Internet Resources

Hugh, Brent, 2005, “File:Petersburg-Paradox.png”, Wikimedia Commons, 18:12, 20 March 2006 version, original upload 30 September 2005. (Colors were altered by the SEP editors.) [http://commons.wikimedia.org/wiki/File:Petersburg-Paradox.png]
“Two Lessons from Fractals and Chaos”, a preprint of a paper in Complexity, 5(4), 2000, pp. 34–43, by Larry S. Liebovitch and Daniela Scheurle (Florida Atlantic University).

Related Entries

decision theory: causal | Pascal's wager

Copyright © 2013 by Robert Martin <martin@dal.ca>

The Stanford Encyclopedia of Philosophy is copyright © 2016 by The Metaphysics Research Lab, Center for the Study of Language and Information (CSLI), Stanford University. Library of Congress Catalog Data: ISSN 1095-5054