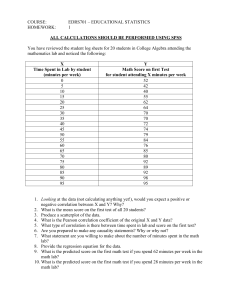

![Stats for Food Scientist [Revised 2021] [Autosaved]](http://s2.studylib.net/store/data/026089175_1-5f7c08abd6ff6309a745fe08ad67544b-768x994.png)

Course Title: Statistics for Food Scientist Course code: FST 301 By R. A. Atuna ratuna@uds.edu.gh 1 Course outline • Basic definitions and fundamental concepts – – – – Statistics Why stats in research validity and reliability Sampling and sampling techniques • Data, Measures and Collection Methods • Basic experimental design – Parametric Data analysis – Fitting statistical models to the data • • • • Test of association Mean comparison Single factor analyses Factorial analyses – Non Parametric Data Analysis 2 • Objectives The objectives of the FST 301 course include, but not limited to the following: – To train students to independently design experiments – To collect and enter data and analyse appropriately – To train students on handling parametric data – To train students on handling non-parametric data from sensory analysis • Learning outcomes – On successful completion of this course, students should be able to: – Design and conduct research – Collect data, clean, analyse, present and interpreted results • Mode of delivery – Lecture, tutorials, and seminars 3 • Research is the“the systematic investigation into existing or new knowledge, to establish or co firm facts, reaffirm the results of previous work, solve new or existing problems, support theorems, or develop new theories”. – Leads to the advancement of knowledge • Systematic investigation (Burns, 1997) or inquiry whereby data are collected, analyzed and interpreted in some way in an effort to "understand, describe, predict or control an educational or psychological phenomenon or to empower individuals in such contexts" (Mertens, 2005, p.2). 4 Statistics • It is the study of the collection, organization, analysis, and interpretation of data. • It also deals with the planning of data collection, in terms of the design of surveys and experiments. 5 Statistics • It is the study of the collection, organization, analysis, and interpretation of data. • It also deals with the planning of data collection, in terms of the design of surveys and experiments. Why Statistics in Research • Statistics assists researches to be understood, to describe phenomena and to help draw reliable conclusions about these phenomena. • In every research there will be some results, sometimes numerical or logical statement. • Statistics checks the accuracy, consistency and acceptability of the resul ts obtained in a research based on probability measures. 6 • It provides a platform for research as to; how to go about the research, the techniques to use in data collection, how to go about the data description, analysis and he inference to be made. • It plays the role of an arbiter in research it has power and influence to make judgements and decide on what to be done for the acceptability of research. • Statistics does not decide any research conclusion(s) but assists in reaching the conclusion(s). • It stand as a bedrock (principles) on which any research is based. 7 Factors to be considered in choosing a research method • Research questions – To answer my research question, should I or do I need to . . . • • • • • • Make causal inferences? Generalize from the cases studied (sample) to a broader group (population)? Study change over time? Interact with subjects/participants? Generate my own data sources and data? Use more than one design? • Ethics – Thinking about how the research impacts on those involved with the research process. – Ethical research should gain informed consent, ensure confidentiality, be legal and ensure that respondents and those related to them are not subjected to harm. – All this needs to be weighed up with the benefits of the research. 8 • Budget • Time – As a general rule, the more in-depth the method the more time consuming it is. Also, doing your own primary research tends to take longer than using secondary sources. 9 Validity and Reliability Validity Appropriateness of the methods to the research Question • Addresses whether your research explains or measures what you said you would be measuring or explaining • Validity of interpretations of the data it must be a product of analysis– Internal validity • Representativeness of sample – Content validity • Generalizability to a population – External validity 10 Reliability • Internal consistency – Cronbach alpha • How closely related a set of items are as a group • Test Retest • Whether similar results are obtained when the same participants respond to the same test a second time. • Consistency of measure. 11 Research experimental design • The plan a researcher will follow in conducting a study. • The art of planning and executing experiments. • The greatest strength of an experimental research design, is largely due to random assignment, is its internal validity. – the degree to which the results are attributable to the independent variable and not some other rival explanation. • The greatest weakness of experimental designs may be external validity. – The extent to which the results of a study can be generalized 12 Design of experiment begins with – Determining the objectives of an experiment – Selecting the process factors for the study. An experimental design is the laying out of a detailed experimental plan in advance of doing the experiment. Experiment – A series of test or a test in which purposeful changes one made to input variables of a process or system so we may observe and identify reasons for changes that may be observed in the output response. – A study undertaken in which the researcher has control over some conditions in which the study takes place and control over (some aspects of) the independent variables being studied. 13 Survey • A research design in which a sample of subjects is drawn from a population and studied (usually interviewed) to make inferences about a population. Census • A survey of an entire population in contrast to a survey of a sample drawn from a population. 14 Natural experiment • A situation happening naturally, that is, without the researcher’s manipulation that approximates an experiment. • Variables occur naturally in such a way that they have some of the characteristics of the control and experimental groups. – E.g a solar eclipse provides astronomers opportunities to observe the sun that they cannot provide for themselves by experimental manipulation. – the comparison has been made between the number of dental cavities in a city with naturally occurring fluoridated water and a similar city without fluoridation. 15 Quasi-experiment: • A type of research design for conducting studies in field or real life situations where the researcher may be able to manipulate some independent variables but cannot randomly assign subjects to control and experimental groups. • For example, you cannot cut off someone’s unemployment benefits to see how well he or she could get along without them or to see whether an alternative job-training program would be more effective for some unemployed persons. But you could try to find volunteers for the new program. 16 Descriptive research: • Research that describes phenomena as they exist. Descriptive research is usually contrasted with experimental research in which experiments are controlled and subjects are given different treatments. Control group • In experimental research, a group that, for the sake of comparison, does not receive the treatment the experimenter is interested in. 17 Experimental group • A group receiving some treatment in an experiment. • Data collected about people in the experimental group are compared with data about people in a control (who received no treatment) and/or another experimental group (who received a different treatment). Experimental unit • The smallest independently treated unit of a study. – E.g. – if 90 subjects were randomly assigned to 3 treatment groups, the study would have three experimental units, and not 90. 18 Treatment • In experiments, a treatment is what researchers do to subjects in the experimental group but not to those in the control group. A treatment is thus an independent variable. Factor • In analysis of variance, an independent variable, that is, a variable presumed to cause or influence another variable. 19 Random • Said of events that are unpredictable because their occurrence is unrelated to their characteristics. • The chief importance of randomness in research is that, by using it, researchers increase the probability that their conclusions will be valid. • Random assignment increases internal validity. • Random sampling increases external validity. 20 Random assignment • Putting subjects into experimental and control groups in such a way that each individual in each group is entirely assigned by chance. • In other words, each subject has an equal probability of being placed in each group. Using random assignment reduces the likelihood of bias. 21 Sampling Methods 22 23 Uses of probability sampling • There are multiple uses of probability sampling: – Reduce Sample Bias: Using the probability sampling method, the bias in the sample derived from a population is negligible to non-existent. The selection of the sample mainly depicts the understanding and the inference of the researcher. Probability sampling leads to higher quality data collection as the sample appropriately represents the population. – Diverse Population: When the population is vast and diverse, it is essential to have adequate representation so that the data is not skewed towards one demographic. For example, if Square would like to understand the people that could make their point-of-sale devices, a survey conducted from a sample of people across the US from different industries and socioeconomic backgrounds helps. – Create an Accurate Sample: Probability sampling helps the researchers plan and create an accurate sample. This helps to obtain well-defined data. 24 • Nonrandom Sampling Techniques – Convenience sampling (i.e., it simply involves using the people who are the most available or the most easily selected to be in your research study). – Quota sampling (i.e., setting quotas and then using convenience sampling to obt ain those quotas). – Purposive sampling (i.e., the researcher specifies the characteristics of the popul ation of interest and then locates individuals who match those characteristics). – Snowball sampling (i.e., each research participant is asked to identify other pote ntial research participants who have a certain characteristic). 25 Methods of data collection • Tests (personality, aptitude, achievement, and performance) • Questionnaires (i.e., self report instruments). • Interviews (i.e., situations where the researcher interviews the participants). • Focus groups (i.e., a small group discussion with a group moderator present to ke ep the discussion focused). • Observation (i.e., looking at what people actually do). • Existing or Secondary data (i.e., using data that are originally collected and then a rchived or any other kind of “data” that was simply left behind at an earlier time f or some other purpose). 26 Guidelines for Designing Experiments To use the statistical approach in designing and analysing an experiment, it is necessary for everyone involved in the experiment to have a clear idea in advance of exactly what is to be studied, how the data are to be collected, and at least a qualitative understanding of how these data are to be analysed. 27 Recognition of and statement of the problem • This may seem to be a rather obvious point, but in practice often neither it is simple to realize a problem requiring experimentation exist, nor is it simple to develop a clear and generally accepted statement of this problem. • It is necessary to develop ideas necessary to develop all ideas about the objectives of the experiment. • Usually, it is important to solicit input from all concerned parties: engineering, quality assurance, manufacturing, marketing, management, customer, and operating personnel (who usually have much insight and who are too often ignored). 28 Selection of the response variables • In selecting the response variable, the experimenter should be certain that this variable really provides useful information about the process under study. • Most often, the average or standard deviation (or both) of the measured characteristic will be the response variable. • It is usually critically important to identify issues relayed to defining the responses of interest and how they are to be measured before conducting the experiment. • Sometimes designed experiments are employed to study and improve the performance of measurement systems. 29 Choice of factors levels (treatments) and range • When considering the factors that may influence the performance of a process or system, the experimenter usually discovers that these factors can be classified as either potential design factors or nuisance factors. • The potential design factors are those factors that the experimenter may wish to vary in the experiment. • Often we find that there are a lot of potential design factors, and some further classification of them is helpful. – Designed factors: The design factors are the factors actually selected for study in the experiment. – held-constant factors: Held-constant factors are variables that may exert some effects on the response, but for purposes of the present experiment these factors are not of interest, so they will be held at a specific level. – allowed-to –vary factors. 30 Choice of experimental design • If the above pre-experimental planning activities are done correctly, this step is relatively easy. • Choice of design involves consideration of sample size (number of replicates), selection of a suitable run order for the experimental trials, and determinations of whether or not blocking or other randomization restrictions are involved. • In selecting a design, it is important to keep the experimental objectives in mind. 31 Performing the experiment • When running the experiment, it is vital to monitor the process carefully to ensure that everything is being done according to plan. • Error in experimental procedure at this stage will usually destroy experimental validity. • Up-front planning is crucial to success. It is easy to underestimate the logistical and planning aspects of running a designing experiment in a complex manufacturing or research and development environment. 32 Statistical analysis of the data • Statistical methods should be used to analyse the data so that results and conclusions are objective rather than judgemental in nature. • If the experiment has been designed correctly and performed according to the design, the statistical method required is not elaborate. • Often we find that simple graphical methods play an important role in data analysis and interpretation. Because many of the questions that the experimenter wants to answer can be cast into a hypothesis-testing framework, hypothesis testing and confidence interval estimation procedures are very useful in analysing data from designed experiment. • Remember that statistical methods cannot prove that a factor (factors) has a particular effect. They only provide guidelines as the reliability and validity of results. • When properly applied, statistical methods do not allow anything to be proved experimentally, but they do allow us to measure the likely error in a conclusion or to attach a level of confidence to a statement. • The primary advantage of statistical method is that they add objectivity to the decision-making process. Statistical techniques usually lead to sound conclusions. 33 Conclusion and recommendations • Once the data have been analysed, the experimenter must draw practical conclusions about the results and recommend a course of action. • Graphical methods are often useful in this stage, particularly in presenting the results to others. • Follow-up runs and confirmation testing should also be performed to validate the conclusions from the experiment. 34 Lecture 2 Data, Measures and Collection Methods 35 Variables and Measurements • Statistics is essentially concerned with the application of logic and objectivity in the understanding of events. • Necessarily, the events have to be identified in terms of relevant characteristics or occurrences that could be measured in numeric or non-numeric expressions. • The characteristic or occurrence is technically referred to as a variable because it may assume different values or forms within a given range of values or forms known as the domain of the variable. • The act of attaching values or forms to variables is known as measurement. • A variable may be qualitative or quantitative; and a quantitative variable may be continuous or discontinuous (i.e. discrete). 36 Quantitative Variable • A quantitative variable Y is numeric. It may assume values y that can be quantified. Age, weight, height, volume are all quantitative variables. Production, prices, costs, sales, sizes, etc., are also quantitative. • A quantitative variable Y may be coded qualitatively • E.g – In an agronomic trial, maize plot response may be coded A for yields exceeding 7500 kg/ha; B for yields in the bracket 6001 – 7500 kg/ha; and C for yields between 5001 and 6000 and D for yields 5000 and below. 37 Qualitative Variable • A qualitative variable X may have forms x that could only be described but could not be measured numerically. • It is a non-numeric entity. – Nutrient when identified only as nitrogen, phosphorus, potassium or zinc; thus, “Nutrient” is a qualitative variable or factor. Other examples are “variety” of maize; “sex” of a farmer; “country” of origin, etc. – Yield scores when recorded as high, moderate or low is qualitative. • A qualitative variable has states or forms, which can be described, categorised, classified, or qualified. • A qualitative X may be recorded as if a quantitative variable – E.g – in a farm survey, a male farmer may be coded as 0 and a female as 1. – In an entomological experiment, response may be classified as 0 if the plant survives a chemical treatment and 1 if otherwise. 38 Continuous and Discrete Variables • A quantitative variable may be continuous or discontinuous i.e. discrete. • The family size of farming household, X, in a crop survey may take between values 1, 2, 3, 4, 5, 6, 7, etc. • In the interval (0, 100) the number of values which X can take is countable (finite), it is 101; but the size of farms Y cultivated by the household may take an uncountable (infinite) number of values within even the shortest of intervals when measurement is not limited by degree of accuracy nor by the precision of the measuring equipment. Family size variable X is a typical discrete variable while farm size Y is a typical continuous one. 39 Scale of Measurement • Any form of measurement falls into four categories (or scales): – Nominal, – Ordinal, – Interval, and – Ratio The scale of measurement will ultimately dictate the statistical procedures (if any) that can be used in processing the data. 40 Nominal Scale of Measurement • The word nominal comes from the Latin nomen, meaning “name”. Hence, we can “measure” data to some degree by assigning names to them. For example, we can measure a group of children by dividing groups: boys & girls; overweight, normal weight & underweight. • Nominal measurement is quite simplistic, but it does divide data into discrete categories that can be compared with one another. • We have alluded to situations where a basically qualitative variable is measured (coded) in numeric terms. Socio-economic classes namely lower, middle and upper may be coded as 1, 2, 3, male and female gender as 1 and 2; rural, semi- urban and urban locations as 1, 2 and 3 respectively. • Only a few statistical procedures are appropriate for analysing nominal data. We can use the mode as an indicator of the most frequently occurring category within our data set; for instance, we might determine that there are more males than females in the second Year BMB class in UDS. 41 Ordinal Scale of Measurement • With an ordinal scale of measurement, can think in terms of the symbols “greater than” (>) and “less than” (<). We can compare various pieces of data in terms of one being greater or higher than another. In essence, this scale allows us to rank-order our data (hence its name ordinal). • We can roughly measure level of education on an ordinal scale by classifying people as being unschooled or having an elementary, high school, college, or graduate education. • The ordinal level is the second weakest level of measurement; it does not permit use of the four arithmetic operations. • An ordinal scale expands the range of statistical techniques we can apply to the data. • We can also determine the median or halfway point in a set of data. • We can use a percentile rank to identify the relative position of any item or individual in a group. • We can determine the extent of relationship between two characteristics by the means of Spearman’s rank order correlation. 42 Interval Scale of Measurement • An interval scale of measurement is characterised by two features: – It has equal units of measurement and – Its zero point has been established arbitrarily. • E.g – The Fahrenheit (F) and Celsius (C) scales. • The intervals between any two successive numbers of degrees reflect equal changes in temperature but the zero point is not equivalent to a total absence of heat. • Because an interval scale reflects equal distance among adjacent points any statistics that are calculated using addition or subtraction (for instance means, standard deviations and Pearson’s product moment correlation) can now be used. 43 Ratio Scale • Two measurement instruments may help you understand the difference between interval and ratio scales: a thermometer or a yard stick. • If we have a thermometer that measures temperature on either the Fahrenheit or Celsius scale, we CANNOT say that 80°F is as twice as warm as 40°F. Why? Because these scales do not originate from a point of absolute zero; a substance may have some degree of heat even though its measured temperature falls below zero. • With a yard stick, however, the beginning of a linear measurement is absolutely the beginning. If we measure a desk from the left edge to the right edge, that’s it. • A measurement of “zero” means there is no desk there at all, and a “minus” distance is not even possible. 44 • More generally, a ratio scale has two characteristics: – Equal measurement units (similar to an interval scale) and – An absolute zero point, such that zero on the scale reflects a total absence of the quantity being measured. – Only ratio scales allow us to make comparisons that involve multiplication or division. 45 We can summarise our description of the four scales this way: if you can say that • One object is different from another you have a nominal scale; • One object is bigger or better or more of anything than another, you have an ordinal scale; • One object is so many units (degrees or inches) more than another, you have an interval scale; • One object is so many times as big or bright or tall or heavy as another, you have a ratio scale. 46 Interval scales Non-interval scales Scale Characteristics of the Scale Statistical Possibilities of the Scale Table 1- A summaryMeasurement of measurement scales, their characteristics and their statistical implications 47 Nominal scale A scale that “measures” in terms of names or designations of discrete units or categories Enables one to determine the mode, the percentage values, or the chi-square Ordinal scale A scale that “measures” in terms of such values as “more” or “less,” “larger or smaller,” but without specifying the size of the intervals Enables one also to determine the median, percentile rank, and rank correlation Interval scale A scale that measures in terms of equal intervals or degrees of difference, but whose zero point, or point of beginning, is arbitrarily established Enables one also to determine the mean, standard deviation, and product moment correlation; allows one to conduct most inferential statistical analyses Ratio scale A scale that measures in terms of equal intervals and an absolute zero point of origin Enables one also to determine the geometric mean and the percentage variation; allows one to conduct virtually any inferential statistical analysis Lecture 3 Basic experiment design 48 49 Data analysis • When data are qualitative, analysis involves looking at your data graphically to see what the general trends are, and also fitting statistical models to it. Some methods for analysis include the following: – Frequency distribution (Histogram) Once you have collected some data a very useful thing to do is to plot a graph of how many times each score occurs. This is known as a frequency distribution, or histogram, which is a graph plotting of values of observations on the horizontal axis, with a bar show many times each value occurred in the data set. 50 Frequency distributions come in many different shapes and sizes. It is quite important, therefore, to have some general descriptions for common types of distributions. In an ideal world our data would be distributed symmetrically around the centre of all scores. This is known as a normal distribution 51 • There are two main ways in which a distribution can deviate from normal: – lack of symmetry-called skew and – Pointyness-called kurtosis A positively (left figure) and negatively (right figure) skewed distribution 52 Distributions with positive kurtosis (leptokurtic, left figure) and negative kurtosis (platykurtic, right figure) 53 The centre of a distribution • We can also calculate where the centre of a frequency distribution lies, known as the central tendency. There are three measures commonly used: the mean, the mode and the median. – The Mean • The mean is the measure of central tendency that you are most likely to have heard of because it is simply the average score and the media are full of average scores. To calculate the mean, we simply add up all the scores and then divide by the total number of scores we have. We can write is in equation form as: 𝑀𝑒𝑎𝑛 = 𝑛 𝑖=1 𝑋𝑖 ; where the numerator simply means, “add up all the scores”; Xi just means “ a particular score, and the n (denominator) the total number of scores. 𝑛 54 The Mode • The mode is simply the score that occurs most frequently in the data set. This is easy to spot in a frequency distribution because it will be the tallest bar. • To calculate the mode, simply arrange the data points in ascending (or descending) order; count how many times each score occurs, and the scores that occurs most is the mode. One problem with mode is that it can often take on several values. The Median • Another way to quantify the centre of a distribution is to look for the middle score when scores are ranked in order of magnitude. This is called the median. • To calculate the median, we first arrange these scores into ascending order. • Next, we find the position of the middle score by counting the number of scores we have collected (n), adding 1 to this value, and then dividing by 2. that is (n + 1)/2 . • Then we find the score that is positioned at the location we have just calculated. 55 The dispersion in a distribution • It can also be interesting to try to quantify the spread, or dispersion, of scores in the data. The easiest way to look at dispersion is to subtract the smallest score from the largest score. This is known as the range of scores. • One problem with the range is that because it uses only the highest and the lowest scores, it is affected dramatically by extreme scores. – E.g. using the following data, 108, 103, 252, 121, 93, 57, 40, 53, 22, 116 and 98. The range is 252-22 = 230. – By dropping the largest score, the range will be 99, which is less than half the size. 56 • One way around this problem is to calculate the range when we exclude values at the extremes of the distribution. • One convention is to cut off the top and bottom 25% of scores and calculate the range of the middle 50% of scores – known as the interquartile range. 57 A summary of measures of Central Tendency and Variability Measure How it’s determined (N = number of scores; X = individual score, and M = Mean) Data for which it is appropriate Measures of Central Tendency The most frequently occurring score is identified Median The scores are arranged in order from smallest to the largest, and the middle score when N is an odd number or the midpoint between to middle scores when N is an even number Mean All the scores are added together, and their sum is divided by the total number (N) of scores Range The difference between the highest and lowest scores in the distribution Measures of variability Interquartile range Standard deviation The difference between the percentiles 𝑆= 𝑋−𝑀 𝑁 25th and Data on interval and ratio scales Data that fall in a normal distribution Data o ordinal, interval, and ratio scales Data on ordinal, interval, and ratio scales Especially useful for highly skewed data Data on interval and ratio scales Most appropriate for normally distributed data 75th 2 𝑆2 = 𝑋−𝑀 𝑁 2 Data on ordinal, interval, and ratio scale Data that are highly skewed Variance 58 Mode Data on nominal, ordinal, interval, and ratio scales Multimodal distributions - two or more modes may be identified when a distinction has multiple peaks Data on interval and ratio scales Most appropriate for normally distributed data Especially useful in inferential statistical procedures (e.g., analysis of variance) EXPLORING THE DATA Exploratory Data Analysis (EDA) • After the data are entered into spreadsheet and imported to any statistical software (e.g., GENSTAT, SPSS, Minitab, SAS, etc) or entered directly into the statistical software, the first step to complete (before running any inferential statistics) is EDA, which involves computing various descriptive statistics and graphs. 59 • Exploratory Data Analysis is used to examine and get to know your data. EDA is important to do for several reasons: – To see if there are problems in the data such as outliers, non-normal distributions, problems with coding, missing values, and/or errors inputting the data. – To examine the extent to which the assumptions of the statistics that you plan to use are met. – To get basic information regarding the demographics of subjects to report in the method section or results section. – To examine relationships between variables to determine how to conduct the hypothesis-testing analyses. 60 • There are two general methods used for EDA: generating plots of the data and generating numbers from your data. Both are important and can be very helpful methods of investigating the data. • Descriptive Statistics (including the minimum, maximum, mean, standard deviation, and skewness), frequency distribution tables, boxplots, histograms, and stem and leaf plots are a few procedures used in EDA. 61 Box and Whisker Plots • In a box-and-whisker plot (or boxplot), there is a box that spans the inter quartile range of the values in the variable, with a line indicating the median. Whiskers are drawn extending beyond the ends of the box as far as the minimum and maximum values. • The whiskers are lines, and extend only to the inner "fences" which are shown as continuous lines. The upper line is defined as the upper quartile and the lower line is defined as the lower fence. • Thus a boxplot is the graphical representation of a 5-number summary of a dataset: minimum, Q1, median, Q3, maximum. 62 Box and Whisker Plots 63 Uses of boxplot • Comparing groups – Boxplots are a useful tool to compare groups of data. In Fig. 3.11 for instance, it looked as if the yield of the new variety is higher than the yield of the standard variety. There is however some overlap and remember the scale we are working with (minimum value is 1.7 tons/ha, maximum value is 2.5). A formal statistical test has to confirm the difference, but if this test shows completely different results to the graph, we know something is wrong. 64 • Outliers 65 Shape of distribution • A boxplot gives an idea about the shape of the distribution, although you can also get this information from other plots. 66 Stem & Leaf Diagram • This draws a stem and leaf plot from a numeric data. The plot comprises stems, indicating leading digits, and leaves indicating subsequent digits. • Stem-and-leaf plots are a method for showing the frequency with which certain classes of values occur. • You could make a frequency distribution table or a histogram for the values, or you can use a stemand-leaf plot and let the numbers themselves to show pretty much the same information. 67 • For instance, suppose you have the following list of values: 12, 13, 21, 27, 33, 34, 35, 37, 40, 40, 41. You could make a frequency distribution table showing how many tens, twenties, thirties, and forties you have: 68 Frequency Class Frequency 10 - 19 2 20 - 29 2 30 - 39 4 40 - 49 3 • You could make a histogram, which is a bargraph showing the number of occurrences, with the classes being numbers in the tens, twenties, thirties, and forties: 69 • The downside of frequency distribution tables and histograms is that, while the frequency of each class is easy to see, the original data points have been lost. You can tell, for instance, that there must have been three listed values that were in the forties, but there is no way to tell from the table or from the histogram what those values might have been. 70 • On the other hand, you could make a stemand-leaf plot for the same data: The "stem" is the left-hand column which contains the tens digits. The "leaves" are the lists in the right-hand column, showing all the ones digits for each of the tens, twenties, thirties, and forties. 71 How to check for errors • Look over the raw data (questionnaires, interviews, or observation forms) to see if there are inconsistencies, double coding, obvious errors, etc. Do this before entering the data into the computer. • Check some, or preferably all, of the raw data (e.g., questionnaires) against the data in your SPSS Data Editor file to be sure that errors were not made in the data entry. • Compare the minimum and maximum values for each variable in your Descriptive output with the allowable range of values in your codebook. • Examine the means and standard deviations to see if they look reasonable, given what you know about the variables. • Examine the N column to see if any variables have a lot of missing data, which can be a problem when you do statistics with two or more variables. Missing data could also indicate that there was a problem in data entry. • Look for outliers in the data. Check the Assumptions 72 Statistical Assumptions • Every statistical test has assumptions. • They are much like the directions for appropriate use of a product found in an owner's manual. • Assumptions explain when it is and isn't reasonable to perform a specific statistical test. • When t-test was developed, for example, the person who developed it needed to make certain assumptions about the distribution of scores, etc., in order to be able to calculate the statistic accurately. • If these assumptions are not met, the value that software calculates, which tells the researcher whether or not the results are statistically significant, will not be completely accurate and may even lead the researcher to draw the wrong conclusion about the results. 73 Parametric tests: • These include most of the familiar ones (e.g., analysis of variance, Pearson correlation, etc). They usually have more assumptions than nonparametric tests. Parametric tests were designed for data that have certain characteristics, including approximately normal distributions. • Some parametric statistics have been found to be "robust" to one or more of their assumptions. Robust means that the assumption can be violated without damaging the validity of the statistic. – E.g, one assumption of ANOVA is that the dependent variable is normally distributed for each group. • Statisticians who have studied these statistics have found that even when data are not completely normally distributed (e.g., they are somewhat skewed), they still can be used. 74 Nonparametric tests • These tests (e.g., chi-square, Kruskal-Wallis, Mann-Whitney U, Spearman rho) have fewer assumptions and often can be used when the assumptions of a parametric test are violated. For example, they do not require normal distributions of variables or homogeneity of variances. 75 Lecture 4 – 7 Fitting statistical models to the data 76 Hypotheses • There are two very important hypotheses that are used when analysing data. • These are scientific statements that can be split into testable hypotheses. • The hypothesis or prediction that comes from theory is usually saying that our effect will be present. This hypothesis is called the alternative hypothesis, and is denoted by H1. • The other type of hypothesis is known as the null hypothesis (H0). This hypothesis is the opposite of the alternative hypothesis and so would usually state that an effect is absent. – E.g.- Alternative hypothesis (H1): Days after planting of orangefleshed sweetpotato have an effect on β-carotene. – Null hypothesis (H0): Days after planting will not affect the βcarotene of orange-fleshed sweetpotato. 77 • The reason that we need the null hypothesis is because we cannot prove the experimental hypothesis (alternative hypothesis) using statistics, but we can reject the null hypothesis. • If our data give us confidence to reject the null hypothesis then this provides support for our experimental hypothesis. 78 Types of analyses • There are numerous types of analyses you can apply depending the data set you have. In this course, we will consider the following: • Test of associations – Regression & Correlation • Comparing two means • Single factor analysis of variance – One-way ANOVA- Completely Randomised Design (CRD) – Randomised Complete Block design • Factorial design 79 Regression and correlation analyses • In many problems, two or more variables are related, and it is of interest to model and explore this relation. Types of association • Association between response variables (outcome variables). Example, acidity of maize and pH of the dough • Association between response and treatment. When treatments are quantitative, such as different fermentation times, and the acidity of maize dough. It is possible to describe the association between the treatment and response. • Association between response and environment 80 • There are two tests of association Regression Correlation Correlation • It provides a measure of the degree of association between the variables or the goodness of fit of a prescribed relationship to the data at hand. – Correlation coefficient (r) • The quantity r, called the linear correlation coefficient, measures the strength and the direction of a linear relationship between two variables. • The value of r is such that -1 < r < +1. • The + and – signs are used for positive linear correlations and negative linear correlations, • • • • 81 respectively. Positive correlation: If x and y have a strong positive linear correlation, r is close to +1. An r value of exactly +1 indicates a perfect positive fit. Negative correlation: If x and y have a strong negative linear correlation, r is close to -1. An r value of exactly -1 indicates a perfect negative fit. No correlation: If there is no linear correlation or a weak linear correlation, r is close to 0. A value near zero means that there is a random, nonlinear relationship between the two variables A perfect correlation: of ± 1 occurs only when the data points all lie exactly on a straight line. If r = +1, the slope of this line is positive. If r = -1, the slope of this line is negative. The value of r ranges between ( -1) and ( +1) The value of r denotes the strength of the association as illustrated by the following diagram. strong -1 intermediate -0.75 -0.25 weak weak 0 indirect perfect correlation 82 intermediate 0.25 strong 0.75 1 Direct no relation perfect correlation 83 • A correlation greater than 0.8 is generally described as strong, whereas a correlation less than 0.5 is generally described as weak. Coefficient of Determination, r 2 or R2 • The coefficient of determination, r2, is useful because it gives the proportion of the variance (fluctuation) of one variable that is predictable from the other variable. • It is a measure that allows us to determine how certain one can be in making predictions from a certain model/graph. • The coefficient of determination represents the percent of the to the line of best fit. – E.g. – if r = 0.922, then r 2 = 0.850, which means that 85% of the total variation in y can be explained by the linear relationship between x and y (as described by the regression equation). 84 A sample of 6 children was selected, data about their age in years and weight in kilograms was recorded as shown in the following table. It is required to find the correlation between age and weight. serial No 85 Age (years) Weight (Kg) 1 2 3 7 6 8 12 8 12 4 5 5 6 10 11 6 9 13 Importance of Correlation • Correlation helps us in determining the degree of relationship between variables. It enables us to make our decision for the future course of actions. • Correlation analysis helps us in understanding the nature and degree of relationship which can be used for future planning and forecasting. • Forecasting without any prior correlation analysis may prove to be defective, less reliable and more uncertain. Limitation of Correlation • r is a measure of linear relationship only • Correlation does not always imply causality. 86 Regression analysis • It describes the effect of one or more variables (designated as independent variables) on a single variable (designated as the dependent variable) by expressing the latter as a function of the former. • For the analysis, it is important to clearly distinguish between the dependent and independent variable, a distinction that is always not obvious. • The purpose of this analysis is to examine how effectively one or more variables allow you to predict the value of another (dependent) variable. 87 Simple linear regression • A simple linear regression generates an equation in which a single independent variable yields a prediction for the dependent. • For simple linear regression, the following conditions must hold: – There is only one independent variable X affecting the dependent variable Y. – The relationship between Y and X is known, or can be assumed, to be linear. Multiple linear regression • A multiple linear regression yields an equation in which two or more independent variables are used to predict the dependent variables. – The relationship between two variables is linear if the change is constant throughout the whole range under consideration – It is a straight line and represented by the equation (y = mx + c) • To predict the yield Y, for a given X, the value of X, should be in the range of X min to X max. You cannot extrapolate the line outside this range. 88 • Assumptions of Multiple linear regression: – A linear relationship between the dependent and independent variables. – The independent variables are not highly correlated with each other. – The variance of the residuals is constant. – Independence of observation. – Multivariate normality 89 Classifications • Regression and correlation analyses can be classified into four types: Simple linear regression and correlation Multiple linear regression and correlation Simple non linear regression and correlation Multiple non linear regression and correlation Solve examples practically using GenStats 90 Comparing Two Means • Often we have two unknown means and are interested in comparing them to each other. • Ho: no differences between the populations. Paired t-test- for comparing paired or matched data. E.g. - “before and after” sometimes also called “longitudinal” studies also produce pair data. Each patient contributes two paired observation: thus before values and after values. Two sample t-test • Comparing two independent samples- this is also called “two sample” t- test. • Here treatment A or B is applied to different subjects. 91 One sample t-test • Comparing a single mean to a referenced mean- some experiments involve comparing only one population mean μ to a specified value, say, μo. The hypotheses are – Ho: μ = μo – H1: μ ≠ μo 92 Single factor analysis • Experiments in which a single factor varies whiles all others are kept constant are called single-factor experiments. • Treatments consists solely of the different levels of the single variable factor • All other factors are applied uniformly to all plots at a single prescribed level. – Example, testing the effect of different fermentation times (say, 1-day, 2-day and 3-day duration) on microbial population in pito is a single-factor experiment. – Only the fermentation duration differs from one experiment to another, and all others kept constant (type of millet, level of inoculums added, temperature the work is held, etc) 93 • There are two groups of experimental design that are applicable to a single-factor experiment • One group is the family of complete block designs, which is suited for experiments with a small number of treatments and is characterized by blocks, each of which contains at least one complete set of treatments. Example, is the Completely Randomised Design (CRD) • The other group is the family of incomplete block designs, suited for large number of treatments and is characterized by blocks, each of which contains only a fraction of the treatments to be tested. 94 Analysis of variance for CRD • Two sources of variations among the n observation • One is the treatment; the other is experimental error • A major advantage of the CRD is the simplicity in the computation of its analysis of variance, especially when the number of replications is not uniform for all the treatments. • The model for One-Way ANOVA: Yij = µ + Ai + eij Where: Yij: jth observation in the ith treatment group µ: a general mean (overall mean) Ai: parameter unique to the ith treatment eij: random residual error 95 Randomised Complete Blocks Design (RCBD) • Here, there is one disturbance factor, namely the blocks. • The experimental units are partitioned in blocks and each block is a complete single replicate of the treatments. • This is the connotation of the word ‘complete’; the randomisation of treatments to units within blocks is done independently of other blocks and treatment comparisons are made within blocks; hence variation between blocks is eliminated. • The Model for RCBD The model is specified as Yij = + Ai + Bj + eij Where: Yij: jth observation in the ith treatment group µ: a general mean (overall mean) Ai: parameter unique to the ith treatment Bj: effect of the jth block eij: random residual error 96 Factorial design • Biological organisms are simultaneously exposed to many growth factors during their lifetime. • Because an organism’s response to any single factor may vary with level of the other factors, single-factor experiments are often criticised for their narrowness. • A full factorial experiment is one where design consists of two or more factors, each with discrete possible values or “levels”, and where experimental units take on all possible combinations of these levels across all such factors. • Full factorial design may also be called a fully crossed design • Such an experiment allows the investigator to study the effect of each factor on the response variable, as well as the effects of interactions between factors on the response variable • For vast majority of factorial experiments, each factor has only two levels • This is usually called 2x2 factorial design (22) • It considers two levels (base) for each of two factors (the power or superscript), or number of levels number of factors, producing 2x2=4 factorial points • When a design is denoted a 23 factorial, this identifies the number of factors (3); and the levels for each factor is 2; and 8 (23 = 2 x 2 x 2) experimental conditions are in the design (23 = 8) 97 • The model is specified as Yijk = + Ai + Bj + Ai*Bj + eij Yijk: kth observation in the ith treatment group (A) and jth treatment group B µ: a general mean (overall mean) Ai: effect of the ith treatment group Bj: effect of the jth treatment group Ai*Bj: interaction between Ai and Bj eij: random residual error 98