
Stat2008 Cheat sheet - Summary Regression Modelling

Regression Modelling (Australian National University)


Downloaded by Joshua Palframan (joshuapalframan@gmail.com)


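$df = n - 1$ (degrees of freedom): this many values are free to vary if the mean stays the same.

Residual SE $= \sqrt{\dfrac{SSE}{n-(1+k)}}$

where `k = length(model$coefficients) - 1` is the number of predictors (subtracting 1 ignores the intercept).

Mallows' $C_p$ is based on the following idea. If the model is mis-specified or over-fitted, the variance of the error terms is inflated, so a poor model has a large $C_p$:

$C_p = p + \dfrac{(n-p)(s_p^2 - \hat\sigma^2)}{\hat\sigma^2}$

In this formula:
- $s_p^2$ is the MSE of the candidate model.
- $\hat\sigma^2$ is the error variance of the true model. In practice, just use the MSE from the full model.
- p is the number of regressors (parameters) in the model.

Prefer models with $C_p \approx p$. The full model always gives $C_p = p$, so look for a small model with $C_p$ as close to p as possible.

Use the absolute value of DFFITS to check for influential points. As a rough guide, flag points with $|DFFITS_i| > 2\sqrt{p/n}$ when the data set is large, or $|DFFITS_i| > 1$ when it is small. DFFITS carries similar information to Cook's distance, so you don't need both.

DFBETA assesses the change in each coefficient when observation i is removed from the model fit. In scaled form:

$DFBETAS_{k(i)} = \dfrac{b_k - b_{k(i)}}{\sqrt{MSE_{(i)}\, c_{kk}}}$

where $c_{kk}$ is the kth diagonal element of the $(X'X)^{-1}$ matrix, because $\mathrm{var}(\hat\beta) = \sigma^2 (X'X)^{-1}$, so $\mathrm{var}(\hat\beta_k) = \sigma^2 c_{kk}$.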
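Here is a minimal sketch of the residual SE and Mallows' $C_p$ formulas above, in plain Python. The function names are hypothetical. The numbers (n = 30, two predictors, SSE = 83917.6875) are illustrative only.

```python
import math


def residual_se(sse: float, n: int, k: int) -> float:
    """Residual standard error sqrt(SSE / (n - (1 + k))); k = number of predictors."""
    return math.sqrt(sse / (n - (1 + k)))


def mallows_cp(sse_p: float, n: int, p: int, sigma2_full: float) -> float:
    """Mallows' Cp = p + (n - p)(s_p^2 - sigma^2) / sigma^2.

    sse_p       -- SSE of the candidate model
    p           -- number of parameters in the candidate model (its error df is n - p)
    sigma2_full -- MSE of the full model, used as the estimate of sigma^2
    """
    s2_p = sse_p / (n - p)
    return p + (n - p) * (s2_p - sigma2_full) / sigma2_full


sse_full = 83917.6875
print(residual_se(sse_full, n=30, k=2))                             # 55.75
# Plugging the full model in always gives Cp = p.
print(mallows_cp(sse_full, n=30, p=3, sigma2_full=sse_full / 27))   # 3.0
```

An equivalent form is $C_p = SSE_p/\hat\sigma^2 - (n - 2p)$, which gives a quick way to check the arithmetic by hand.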

Stat2008 Cheat sheet.

Predictor – x-axis – independent

Response – y-axis – dependent

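Homoscedasticity: the error variance is the same across all values of the independent variables (constant variance). Heteroscedasticity: the error variance changes, e.g. it increases with the fitted values.

If the p-value is less than α, reject the null hypothesis.

$R^2 = r^2 = \dfrac{SSR}{SST}$ (coefficient of determination, or "Multiple R-squared" in R output), where r is the correlation between x and y. The identity $R^2 = r^2$ holds in simple linear regression.

$R^2$ measures how close the data are to the fitted regression line: explained variation over total variation.
- 0 means the model explains none of the variability of the response data around its mean.
- 1 (100%) means it explains all of it.

$R^2$ does not tell you whether the form of the model is right, and it does not identify bias, so you also need residual plots. Think Anscombe's Quartet.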

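Adjusted $R^2$ penalises $R^2$ for the number of predictors. It measures the proportion of variation explained by only those independent variables that really help in explaining the dependent variable.

$SST = \sum (y_i - \bar y)^2$ (total variability in Y)

$R^2_{adjusted} = 1 - \dfrac{(1-R^2)(n-1)}{n-k-1}$, where k = number of predictors

$SSE = \sum (y_i - \hat y_i)^2$ (unexplained variability)

$SSR = \sum (\hat y_i - \bar y)^2 = b_1^2 \sum (X_i - \bar X)^2$ (explained variability; the second form holds in simple linear regression)

$SST = SSE + SSR$. In MLR, $SST = SS_{v1} + SS_{v2} + \dots + SSE$, where $SS_{vj}$ is the sequential sum of squares for variable j.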
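This sketch uses hypothetical toy data and a hand-rolled least-squares fit, in plain Python with no libraries. It computes SST, SSE and SSR for a simple linear regression. The point is to confirm the decomposition SST = SSE + SSR and the $R^2$ and adjusted $R^2$ formulas above.

```python
def fit_line(x, y):
    """Least-squares intercept b0 and slope b1 for y ~ x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    ss_xx = sum((xi - xbar) ** 2 for xi in x)
    ss_xy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b1 = ss_xy / ss_xx
    return ybar - b1 * xbar, b1


def anova_sums(x, y):
    """Return SST, SSE and SSR for a simple linear regression."""
    b0, b1 = fit_line(x, y)
    ybar = sum(y) / len(y)
    fitted = [b0 + b1 * xi for xi in x]
    sst = sum((yi - ybar) ** 2 for yi in y)               # total variability
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))  # unexplained
    ssr = sum((fi - ybar) ** 2 for fi in fitted)           # explained
    return sst, sse, ssr


# Hypothetical toy data, for illustration only
x = [1, 2, 3, 4, 5, 6]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2]
n, k = len(y), 1                      # k = number of predictors

sst, sse, ssr = anova_sums(x, y)
r2 = ssr / sst
r2_adj = 1 - (1 - r2) * (n - 1) / (n - k - 1)
print(f"SST={sst:.4f}  SSE+SSR={sse + ssr:.4f}  R2={r2:.4f}  adjR2={r2_adj:.4f}")
```

Unless the fit is perfect, adjusted $R^2$ is always a little below $R^2$, because of the $(n-1)/(n-k-1)$ factor.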

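Cochran's Theorem: with normal errors, SSE and SSR are independent, and $SSE/\sigma^2 \sim \chi^2_{n-2}$. Under $H_0: \beta_1 = 0$, also $SSR/\sigma^2 \sim \chi^2_1$. This is why $F^* = MSR/MSE$ follows an F distribution under $H_0$.

$MSE = \dfrac{SSE}{df} = \dfrac{SSE}{n-2}$ (mean square error)

$MSR = \dfrac{SSR}{df} = \dfrac{SSR}{1} = SSR$ (regression mean square)

$E\{MSE\} = \sigma^2$ (expected mean square)

$E\{MSR\} = \sigma^2 + \beta_1^2 \sum (X_i - \bar X)^2$ (expected regression mean square)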

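$F^* = \dfrac{MSR}{MSE}$ (F statistic). A large $F^*$ supports $H_a$. Compare it with the critical F value at significance level α, with:
- numerator df = 1;
- denominator df = the error df ($n - 2$ in simple regression).

Only use the F test for hypotheses of the form $H_0: \beta_1 = 0$ vs $H_a: \beta_1 \neq 0$. Use a t test for one-sided alternatives (< or >).

$t^* = \dfrac{b_1}{SE(b_1)}$ (t test). A large $|t^*|$ supports $H_a$. The test is two-tailed for $H_0: \beta_1 = 0$, $H_a: \beta_1 \neq 0$; otherwise it is one-tailed.

Types of t test: for $H_0: \beta_1 = \beta_{10}$, $H_a: \beta_1 \neq \beta_{10}$, the statistic is $t^* = \dfrac{b_1 - \beta_{10}}{SE(b_1)}$.

Confidence intervals: $\hat\beta_1 \pm t_{n-2}(\alpha/2)\, SE(\hat\beta_1)$, where $t_{n-2}(\alpha/2)$ is the upper α/2 critical value of the $t_{n-2}$ distribution.

Prediction intervals: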
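Here is a small sketch, on hypothetical toy data, of the slope t test, the F test and the confidence interval above for simple linear regression. The Python standard library has no t quantile function, so the critical value $t_4(0.025) = 2.776$ is an assumed input, read from a t table for n − 2 = 4 df.

```python
import math


def slr_tests(x, y):
    """Return b0, b1, SE(b1), t* = b1/SE(b1) and F* = MSR/MSE for y ~ x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    ss_xx = sum((xi - xbar) ** 2 for xi in x)
    ss_xy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b1 = ss_xy / ss_xx
    b0 = ybar - b1 * xbar
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    mse = sse / (n - 2)              # error df = n - 2
    msr = b1 ** 2 * ss_xx            # SSR / 1
    se_b1 = math.sqrt(mse / ss_xx)
    return b0, b1, se_b1, b1 / se_b1, msr / mse


x = [1, 2, 3, 4, 5, 6]                # hypothetical toy data
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2]
b0, b1, se_b1, t_star, f_star = slr_tests(x, y)

t_crit = 2.776                        # assumed: t_4(0.025) from a t table
ci = (b1 - t_crit * se_b1, b1 + t_crit * se_b1)
print(f"t* = {t_star:.3f}, F* = {f_star:.3f}, t*^2 = {t_star ** 2:.3f}")
print("95% CI for beta1:", ci)
```

In simple linear regression $F^* = (t^*)^2$, which is why the F test and the two-sided t test give the same p-value.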

Difference between $R^2$ and adjusted $R^2$: adding independent variables never decreases $R^2$. Adjusted $R^2$ increases only if the new variable improves the fit by more than would be expected by chance. For a single added variable, this happens exactly when its $|t| > 1$.

Standard error of a sample mean: $SE = \dfrac{\sigma}{\sqrt n}$

Diagnostics

Partial regression plots, also called added variable plots, help isolate the effect on y of each predictor $x_i$. Use them to check whether there is an issue with non-linearity and whether transforming a predictor would help. To build one:
1. Regress y on all the predictors except $x_i$ (call these $x_j$) and calculate the residuals δ. These represent y with the other effects taken out.
2. Regress $x_i$ on all the other predictors $x_j$ and calculate the residuals γ.
3. Plot δ against γ, i.e. $y|x_j$ vs $x_i|x_j$.

Any non-linearity in the pattern suggests that the variable has a non-linear relationship with y.

Partial residual plots are better than added variable plots for detecting non-linearity. A partial residual plot shows the response with the predicted effects of all x other than $x_i$ taken out, plotted against $x_i$. Use termplot in R.
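Here is a small sketch of the added variable construction for a model with exactly two predictors, using hypothetical data. δ and γ are the residuals described above. The least-squares slope of δ on γ reproduces the coefficient of $x_i$ in the full model (the Frisch-Waugh-Lovell result), which is why the plot isolates that predictor's effect. The two-predictor coefficient formula in `full_model_coef` is written out by hand for this illustration.

```python
def fit_line(x, y):
    """Intercept and slope of the least-squares line y ~ x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    sxy = sum((a - xbar) * (b - ybar) for a, b in zip(x, y))
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1


def residuals(x, y):
    """Residuals from regressing y on x (with an intercept)."""
    b0, b1 = fit_line(x, y)
    return [b - (b0 + b1 * a) for a, b in zip(x, y)]


def full_model_coef(x1, x2, y):
    """Coefficient of x1 in y ~ x1 + x2, solved from centred cross-products."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    return (s1y * s22 - s2y * s12) / (s11 * s22 - s12 ** 2)


# Hypothetical data: x1 plays the role of x_i, x2 the role of x_j.
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [2, 1, 4, 3, 6, 5, 8, 9]
y = [3.1, 2.9, 6.8, 6.1, 10.2, 9.4, 13.9, 15.8]

delta = residuals(x2, y)    # y with the effect of x2 taken out
gamma = residuals(x2, x1)   # x1 with the effect of x2 taken out
_, avp_slope = fit_line(gamma, delta)   # the added variable plot is delta vs gamma
print(avp_slope, full_model_coef(x1, x2, y))
```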

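Transformations can be applied to the response variable (y-axis). You can use the Box-Cox transformation, which is only for positive y:

$g(y) = \begin{cases} \dfrac{y^\lambda - 1}{\lambda}, & \lambda \neq 0 \\ \log(y), & \lambda = 0 \end{cases}$

In practice:
- Just take $y^\lambda$ as the new response. For λ ≠ 0, $g(y)$ is a linear function of $y^\lambda$.
- Round λ to make it easy to interpret.
- Use boxcox() (MASS package) on the untransformed model. You don't need to know the maths: just find the λ that maximises the curve in the output plot ($\hat\lambda$).
- Sometimes the fit is still bad; then don't use the transformation.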
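Here is a sketch of how $\hat\lambda$ can be found, under these assumptions:
- There is a single predictor.
- Each λ on a grid is scored with the profile log-likelihood $-\frac{n}{2}\log(SSE_\lambda/n) + (\lambda - 1)\sum \log y_i$, up to a constant. This is the kind of curve boxcox() plots.

The data are hypothetical and roughly exponential in x, so a λ near 0 (a log transform) is expected.

```python
import math


def box_cox(y, lam):
    """Box-Cox transform g(y); only defined for positive y."""
    if y <= 0:
        raise ValueError("Box-Cox needs positive y")
    if lam == 0:
        return math.log(y)
    return (y ** lam - 1) / lam


def sse_line(x, z):
    """SSE of the least-squares line z ~ x."""
    n = len(x)
    xbar, zbar = sum(x) / n, sum(z) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    b1 = sum((a - xbar) * (b - zbar) for a, b in zip(x, z)) / sxx
    b0 = zbar - b1 * xbar
    return sum((b - (b0 + b1 * a)) ** 2 for a, b in zip(x, z))


def box_cox_loglik(x, y, lam):
    """Profile log-likelihood of lambda (up to a constant) for the model g(y) ~ x."""
    n = len(y)
    z = [box_cox(yi, lam) for yi in y]
    jacobian = (lam - 1) * sum(math.log(yi) for yi in y)
    return -n / 2 * math.log(sse_line(x, z) / n) + jacobian


# Hypothetical data that grow roughly exponentially with x
x = list(range(1, 21))
y = [math.exp(0.2 * xi + 0.05 * (-1) ** xi) for xi in x]

grid = [round(-2 + 0.1 * k, 1) for k in range(41)]   # lambda from -2 to 2
lam_hat = max(grid, key=lambda lam: box_cox_loglik(x, y, lam))
print("lambda hat:", lam_hat)
```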

Standardised residuals have (approximately) the same variance, equal to 1:

$r_i = \dfrac{\hat\varepsilon_i}{\hat\sigma\sqrt{1-h_{ii}}}$

where:
- $\hat\varepsilon_i$ is the ordinary residual;
- $\hat\sigma = \sqrt{MSE}$;
- $h_{ii}$ is the leverage of the ith observation.

They give a standard axis on the Q-Q plot. Most values should lie within ±2; anything outside this range could be an outlier or influential.
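Here is a minimal sketch for simple linear regression, where the leverage has the closed form $h_{ii} = 1/n + (x_i - \bar x)^2/SS_{xx}$. It computes the leverages and standardised residuals, then applies the 2p/n leverage rule and the ±2 residual rule. The data are hypothetical, with one x value placed far from the rest.

```python
import math


def leverage_and_standardised(x, y):
    """Leverages h_ii and standardised residuals r_i for simple linear regression."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    ss_xx = sum((xi - xbar) ** 2 for xi in x)
    b1 = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / ss_xx
    b0 = ybar - b1 * xbar
    resid = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    sigma_hat = math.sqrt(sum(e ** 2 for e in resid) / (n - 2))   # sqrt(MSE)
    h = [1 / n + (xi - xbar) ** 2 / ss_xx for xi in x]
    r = [e / (sigma_hat * math.sqrt(1 - hi)) for e, hi in zip(resid, h)]
    return h, r


# Hypothetical data; the last x value sits far from the rest.
x = [1, 2, 3, 4, 5, 6, 7, 15]
y = [1.2, 1.9, 3.2, 3.8, 5.1, 6.0, 7.2, 9.0]
h, r = leverage_and_standardised(x, y)
n, p = len(x), 2
print("high leverage (h > 2p/n):", [i for i, hi in enumerate(h) if hi > 2 * p / n])
print("|r| > 2:", [i for i, ri in enumerate(r) if abs(ri) > 2])
```

The leverages always sum to p (2 here), which is a handy sanity check.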

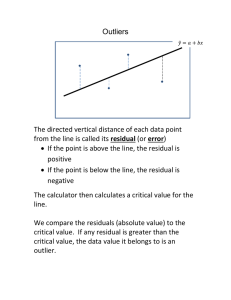

Outliers recap

Studentised residuals: predict $y_i$ from the model fitted with case i left out.

$t_i = r_i\left(\dfrac{(n-1)-p}{n-p-r_i^2}\right)^{1/2} \sim t_{df=(n-1)-p}$

where $r_i$ is the standardised residual.
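The formula above avoids refitting the model n times. This sketch, for simple linear regression with hypothetical data, checks it against the direct definition $t_i = \hat\varepsilon_i / \sqrt{MSE_{(i)}(1-h_{ii})}$, where $MSE_{(i)}$ comes from the fit with case i deleted.

```python
import math


def fit(x, y):
    """Return (b0, b1) for the least-squares line y ~ x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    b1 = sum((a - xbar) * (b - ybar) for a, b in zip(x, y)) / sxx
    return ybar - b1 * xbar, b1


def studentised_two_ways(x, y, p=2):
    """Externally studentised residuals: from the r_i formula and by deleting case i."""
    n = len(x)
    xbar = sum(x) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    b0, b1 = fit(x, y)
    e = [b - (b0 + b1 * a) for a, b in zip(x, y)]
    mse = sum(v ** 2 for v in e) / (n - p)
    h = [1 / n + (a - xbar) ** 2 / sxx for a in x]
    r = [ei / math.sqrt(mse * (1 - hi)) for ei, hi in zip(e, h)]
    formula = [ri * math.sqrt((n - 1 - p) / (n - p - ri ** 2)) for ri in r]

    deleted = []
    for i in range(n):
        xs, ys = x[:i] + x[i + 1:], y[:i] + y[i + 1:]
        c0, c1 = fit(xs, ys)
        mse_i = sum((b - (c0 + c1 * a)) ** 2 for a, b in zip(xs, ys)) / (n - 1 - p)
        deleted.append(e[i] / math.sqrt(mse_i * (1 - h[i])))
    return formula, deleted


x = [1, 2, 3, 4, 5, 6, 7, 8]                     # hypothetical data
y = [1.1, 2.3, 2.8, 4.2, 4.9, 6.3, 6.8, 12.0]    # the last point looks odd
formula, deleted = studentised_two_ways(x, y)
print([round(t, 3) for t in formula])
```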

Summary Table

$SS_{xy} = \sum (X_i - \bar X)(Y_i - \bar Y)$

$SS_{xx} = \sum (X_i - \bar X)^2$

$\hat\beta_1 = \dfrac{SS_{xy}}{SS_{xx}} = r\dfrac{S_y}{S_x}$ (slope coefficient). Here r is the correlation, and $S_x$, $S_y$ are the sample standard deviations of x and y (the square roots of their variances).

$\hat\beta_0 = \bar y - \hat\beta_1 \bar x$ (intercept coefficient), where $\bar x$ is the mean of the predictor and $\bar y$ the mean of the response.

$SE(\hat\beta_1) = \dfrac{\hat\sigma}{\sqrt{SS_{xx}}} = \dfrac{\hat\sigma}{\sqrt{(n-1)S_x^2}}$ (standard error). Here $\hat\sigma$ is the residual SE, n is the number of samples and $S_x^2$ is the sample variance of x.

$t_{obs}(\hat\beta_1) = \dfrac{\hat\beta_1 - 0}{SE(\hat\beta_1)}$ and $t_{obs}(\hat\beta_0) = \dfrac{\hat\beta_0 - 0}{SE(\hat\beta_0)}$, i.e. t value = Estimate / Std. Error. Look at the t value: from any two of the estimate, its standard error and the t value, you can calculate the third.

Model selection

Favour models with the smallest error, i.e. the lowest $RSE = \hat\sigma$, but there are other ways:
- You can use F tests to check whether $\hat\sigma$ drops significantly across nested models.
- $\hat\sigma$ depends on the scale of y, but $R^2$ can be used because it is standardised. But how big should it be? $R^2$ does not protect against overfitting (fitting too closely to the observed data, which makes predictions useless), and every additional x increases $R^2$.

Table of best predictors: best subsets. Plot the residual SE and adjusted $R^2$ against the number of predictors.

Other criterion: $PRESS_p$. The predicted (leave-one-out) residuals are

$\hat\varepsilon_{(i)} = Y_i - \hat Y_{(i)} = \dfrac{\hat\varepsilon_i}{1-h_{ii}}$

and they also help find influential points. PRESS is the sum of their squares, $PRESS_p = \sum_i \hat\varepsilon_{(i)}^2$. The smaller the PRESS statistic, the better: a good model has small residuals, hence a small SSE and small $\hat\varepsilon_{(i)}$.
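Here is a minimal sketch of $PRESS_p$ for simple linear regression on hypothetical data. The shortcut $\hat\varepsilon_i/(1-h_{ii})$ gives the same value as refitting the model n times, each time leaving one case out.

```python
def fit(x, y):
    """Return (b0, b1) for the least-squares line y ~ x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    b1 = sum((a - xbar) * (b - ybar) for a, b in zip(x, y)) / sxx
    return ybar - b1 * xbar, b1


def press_shortcut(x, y):
    """PRESS = sum of (e_i / (1 - h_ii))^2, using a single fit."""
    n = len(x)
    xbar = sum(x) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    b0, b1 = fit(x, y)
    total = 0.0
    for a, b in zip(x, y):
        e = b - (b0 + b1 * a)
        h = 1 / n + (a - xbar) ** 2 / sxx
        total += (e / (1 - h)) ** 2
    return total


def press_brute_force(x, y):
    """PRESS by refitting n times, each time predicting the left-out case."""
    total = 0.0
    for i in range(len(x)):
        c0, c1 = fit(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        total += (y[i] - (c0 + c1 * x[i])) ** 2
    return total


x = [1, 2, 3, 4, 5, 6, 7, 8]                  # hypothetical data
y = [1.1, 2.3, 2.8, 4.2, 4.9, 6.3, 6.8, 8.1]
print(press_shortcut(x, y), press_brute_force(x, y))
```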

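Multicollinearity: in exploratory data analysis a predictor seems to have a positive relationship with y, but the summary table shows a negative coefficient. This is multicollinearity. It relates to the degree of interrelation among the covariates' values: the predictors are correlated.
- In cor(data), large off-diagonal entries mean those predictors are highly correlated, which results in multicollinearity.
- To fix it, remove one of the highly correlated variables.
- The inflation of the coefficient variances is due to this correlation.

Variance inflation factor: $VIF_i = \dfrac{1}{1-R_i^2}$. To get $R_i^2$, regress $x_i$ on all the other predictors, i.e. $x_i$ in relation to all the others. A high VIF (the smallest possible value is 1) means you should investigate for multicollinearity! VIF looks at all the predictors at once, not just pairs of them (like correlation).

Plot the DFBETAS; large values are influential. A guide is $|DFBETAS| > 2/\sqrt n$ for large data sets, or 1 otherwise.

AIC (Akaike Information Criterion) is proportional to Mallows' $C_p$ for linear (least squares) models:

$AIC = -2\log L(\hat\theta) + 2p$

For least squares, this is equivalent (up to constants) to $\dfrac{1}{n\hat\sigma^2}\left(SSE + 2p\hat\sigma^2\right)$. The model with the lowest AIC among the candidates is best: pick it.

Once a variable is removed, you must check all the tests again.

Interaction terms COME BACK TO THIS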
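With exactly two predictors, regressing $x_1$ on the only other predictor is a simple regression, so $R_1^2$ is just their squared correlation. This sketch relies on that special case and uses hypothetical data. It shows the VIF staying near 1 for an unrelated second predictor and exploding for a near-copy.

```python
def r_squared_simple(x, y):
    """R^2 of the simple regression y ~ x (the squared correlation of x and y)."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    syy = sum((b - ybar) ** 2 for b in y)
    sxy = sum((a - xbar) * (b - ybar) for a, b in zip(x, y))
    return sxy ** 2 / (sxx * syy)


def vif_two_predictors(x1, x2):
    """VIF for x1 when x2 is the only other predictor: 1 / (1 - R_1^2)."""
    r2_1 = r_squared_simple(x2, x1)   # regress x1 on the other predictor
    return 1 / (1 - r2_1)


x1 = [1, 2, 3, 4, 5, 6, 7, 8]                          # hypothetical data
x2_unrelated = [3, 1, 4, 1, 5, 9, 2, 6]
x2_near_copy = [1.1, 2.0, 2.9, 4.2, 5.1, 5.8, 7.2, 7.9]
print(vif_two_predictors(x1, x2_unrelated))   # about 1.3
print(vif_two_predictors(x1, x2_near_copy))   # in the hundreds: multicollinearity
```

With more predictors, $R_i^2$ must come from a multiple regression of $x_i$ on all the others. That case is not covered by this two-predictor sketch.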

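BIC (Bayesian Information Criterion):

$BIC = -2\log L(\hat\theta) + p\log(n)$

For least squares, this is equivalent (up to constants) to $\dfrac{1}{n}\left(SSE + \log(n)\,p\hat\sigma^2\right)$. BIC tends to choose a smaller model than AIC because it penalises larger models more strictly: its penalty of $\log(n)$ per parameter exceeds AIC's 2 once $n \ge 8$. Lowest BIC is best.

How good a fit is our MLR? In multiple regression, add up the sequential SS of the predictors to get SSR, then divide by its degrees of freedom ($p - 1$) to get MSR. Then

$F_{obs} = \dfrac{MS_{regression}}{MSE}$, where MSE has $n - p$ degrees of freedom.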
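For least squares with normal errors, $-2\log L(\hat\theta)$ equals $n\log(SSE/n)$ plus a constant, so both criteria can be computed from SSE alone. To my knowledge, this matches the convention of R's `extractAIC()` for `lm` fits. R's `AIC()` differs by a constant, which does not change which model wins. The SSE values below are hypothetical.

```python
import math


def aic_ls(sse, n, p):
    """AIC for a least-squares fit, up to an additive constant: n log(SSE/n) + 2p."""
    return n * math.log(sse / n) + 2 * p


def bic_ls(sse, n, p):
    """BIC for a least-squares fit, up to an additive constant: n log(SSE/n) + log(n) p."""
    return n * math.log(sse / n) + math.log(n) * p


# Hypothetical candidate models fitted to the same n = 30 observations
n = 30
candidates = {"small (p=3)": (120.0, 3), "large (p=5)": (110.0, 5)}
for name, (sse, p) in candidates.items():
    print(name, "AIC", round(aic_ls(sse, n, p), 2), "BIC", round(bic_ls(sse, n, p), 2))
```

BIC minus AIC is $p(\log n - 2)$, so BIC's extra penalty grows with both model size and sample size.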

Model Refinement

Forward selection: start at the base (null) model. Choose the best predictor to add to the model, and repeat until you reach the optimal model, as judged by adjusted $R^2$, AIC or BIC.

Backward selection: start with the "full" model including all covariates.
1. Re-order, and select the variable with the smallest sequential F statistic or the largest p-value. This doesn't account for scientific importance.
2. If it is not statistically significant, remove it.
3. Repeat.

If you focus on a particular predictor, put it last in the ANOVA table. Its sequential F test is then adjusted for all the other predictors.
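Here is a sketch of forward selection scored by AIC. R's `step()` automates this kind of search; the helper functions below are hypothetical, stdlib-only stand-ins, not R's implementation. The least-squares fits solve the normal equations $X'X\beta = X'y$ by Gaussian elimination. The data are simulated, so that y depends on x1 and x2 but not on x3.

```python
import math
import random


def solve(a, b):
    """Solve the square system a @ beta = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    beta = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = m[r][n] - sum(m[r][c] * beta[c] for c in range(r + 1, n))
        beta[r] = s / m[r][r]
    return beta


def sse_of(cols, data, y):
    """SSE of y ~ intercept + the named columns, via the normal equations."""
    rows = [[1.0] + [data[c][i] for c in cols] for i in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(k)] for a in range(k)]
    xty = [sum(r[a] * yi for r, yi in zip(rows, y)) for a in range(k)]
    beta = solve(xtx, xty)
    return sum((yi - sum(bj * xj for bj, xj in zip(beta, r))) ** 2 for r, yi in zip(rows, y))


def aic(sse, n, p):
    return n * math.log(sse / n) + 2 * p     # least-squares AIC, up to a constant


def forward_selection(data, y):
    """Greedily add the predictor that lowers AIC most; stop when none does."""
    n = len(y)
    chosen = []
    best = aic(sse_of([], data, y), n, 1)   # null model: intercept only
    while True:
        candidates = [c for c in data if c not in chosen]
        if not candidates:
            return chosen
        scores = {c: aic(sse_of(chosen + [c], data, y), n, len(chosen) + 2) for c in candidates}
        c_best = min(scores, key=scores.get)
        if scores[c_best] >= best:
            return chosen
        chosen.append(c_best)
        best = scores[c_best]


rng = random.Random(1)
n = 40
data = {name: [rng.uniform(0, 10) for _ in range(n)] for name in ("x1", "x2", "x3")}
y = [2 * a - 3 * b + rng.gauss(0, 0.5) for a, b in zip(data["x1"], data["x2"])]
print(forward_selection(data, y))
```

AIC's small penalty means a useless predictor such as x3 can occasionally sneak in. Scoring with BIC would make that less likely.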

Outliers and Influential Points

Residuals are the differences between the actual and the fitted responses. Residuals vs fitted plots are overleaf. For MLR, plot each covariate against the residuals: look for clear points of interest, and transform the variables to reduce skew.

Large studentised residuals (R code: rstudent) mean the point is a potential outlier. Checking every data point means n tests, so use a Bonferroni correction: compare $|t_i|$ with the $t_{n-p-1}$ critical value at $\alpha^* = \alpha/(2n)$.

Leverages over $2p/n$ are potentially highly influential. Half-normal plots make it easier to see the highest values.

Cook's distance looks at the change in the fitted values between the fit using all observations and the fit with observation i removed. Large Cook's distances are potentially influential. The cut-off line comes from the F distribution and is only a rough guide.
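Here is a minimal sketch of Cook's distance for simple linear regression on hypothetical data, computed two ways:
- from its definition, $D_i = \sum_j(\hat y_j - \hat y_{j(i)})^2/(p\,MSE)$, by refitting without case i;
- from the equivalent shortcut $D_i = r_i^2\,h_{ii}/(p(1-h_{ii}))$.

```python
def fit(x, y):
    """Return (b0, b1) for the least-squares line y ~ x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    b1 = sum((a - xbar) * (b - ybar) for a, b in zip(x, y)) / sxx
    return ybar - b1 * xbar, b1


def cooks_two_ways(x, y, p=2):
    """Cook's distance from the shortcut formula and from refitting without case i."""
    n = len(x)
    xbar = sum(x) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    b0, b1 = fit(x, y)
    fitted = [b0 + b1 * a for a in x]
    e = [b - f for b, f in zip(y, fitted)]
    mse = sum(v ** 2 for v in e) / (n - p)
    h = [1 / n + (a - xbar) ** 2 / sxx for a in x]
    # r_i^2 * h_ii / (p (1 - h_ii)), where r_i^2 = e_i^2 / (MSE (1 - h_ii))
    shortcut = [(ei ** 2 / (mse * (1 - hi))) * hi / (p * (1 - hi)) for ei, hi in zip(e, h)]
    refit = []
    for i in range(n):
        c0, c1 = fit(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        # change in *all* fitted values when case i is dropped
        refit.append(sum((f - (c0 + c1 * a)) ** 2 for f, a in zip(fitted, x)) / (p * mse))
    return shortcut, refit


x = [1, 2, 3, 4, 5, 6, 7, 15]                 # hypothetical data
y = [1.2, 1.9, 3.2, 3.8, 5.1, 6.0, 7.2, 9.0]
shortcut, refit = cooks_two_ways(x, y)
print([round(d, 3) for d in shortcut])
```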

DFFITS: the change in the fitted value for an observation when that observation is removed from the model fit.

$DFFITS_i = \dfrac{\hat y_i - \hat y_{i(i)}}{\sqrt{MSE_{(i)}\, h_{ii}}} = t_i\left(\dfrac{h_{ii}}{1-h_{ii}}\right)^{0.5}$

where $t_i$ is the studentised residual.
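This sketch checks that the two DFFITS forms agree for simple linear regression on hypothetical data:
- the shortcut $t_i\sqrt{h_{ii}/(1-h_{ii})}$;
- the deleted-fit definition.

It then flags points with $|DFFITS_i| > 2\sqrt{p/n}$. The studentised residual uses the closed form $t_i = \hat\varepsilon_i\sqrt{(n-p-1)/(SSE(1-h_{ii}) - \hat\varepsilon_i^2)}$, which is equivalent to the $r_i$ formula in the Outliers recap.

```python
import math


def fit(x, y):
    """Return (b0, b1) for the least-squares line y ~ x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    b1 = sum((a - xbar) * (b - ybar) for a, b in zip(x, y)) / sxx
    return ybar - b1 * xbar, b1


def dffits_two_ways(x, y, p=2):
    """DFFITS from t_i * sqrt(h/(1-h)) and directly from the deleted fit."""
    n = len(x)
    xbar = sum(x) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    b0, b1 = fit(x, y)
    e = [b - (b0 + b1 * a) for a, b in zip(x, y)]
    sse = sum(v ** 2 for v in e)
    h = [1 / n + (a - xbar) ** 2 / sxx for a in x]
    # Closed form for the studentised residual t_i (no refitting needed)
    t = [ei * math.sqrt((n - p - 1) / (sse * (1 - hi) - ei ** 2)) for ei, hi in zip(e, h)]
    formula = [ti * math.sqrt(hi / (1 - hi)) for ti, hi in zip(t, h)]

    direct = []
    for i in range(n):
        xs, ys = x[:i] + x[i + 1:], y[:i] + y[i + 1:]
        c0, c1 = fit(xs, ys)
        mse_i = sum((b - (c0 + c1 * a)) ** 2 for a, b in zip(xs, ys)) / (n - 1 - p)
        change = (b0 + b1 * x[i]) - (c0 + c1 * x[i])     # yhat_i - yhat_i(i)
        direct.append(change / math.sqrt(mse_i * h[i]))
    return formula, direct


x = [1, 2, 3, 4, 5, 6, 7, 15]                 # hypothetical data
y = [1.2, 1.9, 3.2, 3.8, 5.1, 6.0, 7.2, 9.0]
formula, direct = dffits_two_ways(x, y)
cutoff = 2 * math.sqrt(2 / len(x))           # 2 sqrt(p/n) with p = 2
print("flagged:", [i for i, d in enumerate(formula) if abs(d) > cutoff])
```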


Random Help

Residuals vs fitted: check homoscedasticity (constant variance).

QQ plots: check for normality.

Shapiro-Wilk test: a test of normality. Only use it together with Q-Q plots, because in large samples it detects even mild non-normality. Null hypothesis: "the residuals are normally distributed". It gives a test statistic and a p-value.

Prediction interval example:

95% CI for Beta1

Exam Q's: a) Calculate the values in the ANOVA table (here n = 30 and p = 3).

$MS_{residuals} = (\text{residual standard error})^2 = 55.75^2 = 3108.062$

$DF_{residuals} = n - p = 30 - 3 = 27$

$SS_{residuals} = (n-p)\,MS_{residuals} = 27 \times 3108.062 = 83917.67$

The row directly above Residuals in the (sequential) ANOVA table has the same p-value as in the summary table. Its F value is special: it equals $t^2$ from the summary table. Here that row is Shots:

$Pr(>F)_{Shots} = 2.44 \times 10^{-5}$

$F_{Shots} = t^2 = 5.083^2 = 25.83689$

$MS_{Shots} = F_{Shots} \times MS_{residuals} = 25.83689 \times 3108.062 = 80302.66$

$DF_{Shots} = 1$

$SS_{Shots} = MS_{Shots} \times DF_{Shots} = 80302.66$

$MS_{addition} = F_{addition} \times MS_{residuals} = 100.9 \times 3108.062 = 313603.5$, where $F_{addition} = 100.9$ is the overall F statistic for both predictors together

$SS_{addition} = DF_{addition} \times MS_{addition} = 2 \times 313603.5 = 627207$

$SS_{Passes} = SS_{addition} - SS_{Shots} = 627207 - 80302.66 = 546904.3$

$DF_{Passes} = 1$

$MS_{Passes} = SS_{Passes}/DF_{Passes} = 546904.3$

$F_{Passes} = MS_{Passes}/MS_{residuals} = 546904.3/3108.062 = 175.96$

In general: Regression SS = (overall F × MSE) × regression df.

b) Are Attend and Employ significant?
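The ANOVA-table arithmetic in part a) can be reproduced in a few lines of Python. This simply re-does the hand calculation with the numbers given there: n = 30, p = 3, RSE = 55.75, t = 5.083 for Shots and overall F = 100.9. It does not fit any model, since the underlying data are not included.

```python
# Numbers taken from exam question a)
n, p = 30, 3
rse, t_shots, f_overall = 55.75, 5.083, 100.9

ms_res = rse ** 2                      # MS residuals = RSE^2
df_res = n - p                         # 27
ss_res = df_res * ms_res               # SS residuals

f_shots = t_shots ** 2                 # last row above Residuals: F = t^2
ms_shots = f_shots * ms_res            # df = 1, so SS_Shots = MS_Shots
ss_reg = f_overall * ms_res * (p - 1)  # Regression SS = (F x MSE) x regression df
ss_passes = ss_reg - ms_shots          # sequential SS for the first predictor
f_passes = ss_passes / ms_res          # df = 1

print(f"MS_res={ms_res:.4f}  SS_res={ss_res:.2f}")
print(f"F_Shots={f_shots:.5f}  SS_Shots={ms_shots:.2f}")
print(f"SS_Passes={ss_passes:.1f}  F_Passes={f_passes:.2f}")
```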
