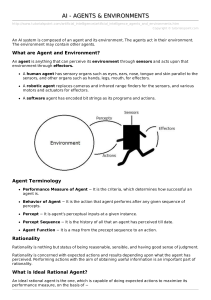

Chapter 2 Intelligent Agents

Introduction
• Agent: anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators.

A human agent has eyes, ears, and other organs for sensors, and hands, legs, and a vocal tract for actuators. A robotic agent might have cameras and infrared range finders for sensors and various motors for actuators. A software agent receives keystrokes, file contents, and network packets as sensory inputs and acts on the environment by displaying on the screen, writing files, and sending network packets.

Agents and Environment
• Percept: the agent's perceptual inputs at any given instant.
• Percept sequence: the complete history of everything the agent has ever perceived.
• An agent's choice of action at any given instant can depend on the entire percept sequence observed to date, but not on anything it hasn't perceived.
• Internally, the agent function for an artificial agent is implemented by an agent program.
• Agent function: an abstract mathematical description that maps any given percept sequence to an action.
• Agent program: a concrete implementation, running within some physical system.

• Figure 2.2 illustrates a vacuum-cleaner world with two locations.
• The vacuum agent perceives which square it is in and whether there is dirt in the square.
• It can choose to move left, move right, suck up the dirt, or do nothing.
• A partial tabulation of this agent function is shown in Figure 2.3.

• The obvious question, then, is this: how should the vacuum act?
• In other words, what makes an agent good or bad, intelligent or stupid?
• How does it know not to make a mistake?
• Should we monitor it at all times?
• What happens if it is put in a different environment?

Good Behavior: Rationality
• Rational agent: one that does the right thing. Conceptually speaking, every entry in the table for the agent function is filled out correctly.
• But what does it mean to do the right thing?
• We answer this question by considering the consequences of the agent's behavior.
• When an agent is placed in an environment, it generates a sequence of actions according to the percepts it receives.
• This sequence of actions causes the environment to go through a sequence of states. If that sequence is desirable, then the agent has performed well.
• The notion of desirability is captured by a performance measure that evaluates any given sequence of environment states.

Rationality
• What is rational at any given time depends on four things:
  1. The performance measure that defines the criterion of success.
  2. The agent's prior knowledge of the environment.
  3. The actions that the agent can perform.
  4. The agent's percept sequence to date.
• This leads to the following definition of a rational agent.

Rational Agent
For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.

Rationality
• Is the vacuum agent tabulated in Figure 2.3 a rational agent?
• Given what it knows about the environment, yes: it is a rational agent.
• The same agent would stop being rational if, once all the dirt is cleaned up, it oscillates needlessly back and forth while the performance measure penalizes each movement.
• Rationality is not the same as perfection (example: crossing the street).
• Rationality maximizes expected performance, whereas perfection maximizes actual performance.
• Information gathering: doing actions in order to modify future percepts.
• Our definition requires a rational agent not only to gather information but also to learn as much as possible from what it perceives.
• In extreme cases in which the environment is completely known a priori, the agent need not perceive or learn; it simply acts correctly.
• To the extent that an agent relies on the prior knowledge of its designer rather than on its own percepts, we say the agent lacks autonomy.
• A rational agent should be autonomous: it should learn what it can to compensate for partial or incorrect prior knowledge.

The Nature of Environments
• Task environments: the “problems” to which rational agents are the “solutions”.
• A task environment is specified by its PEAS description: Performance measure, Environment, Actuators, Sensors.

Properties of Task Environments
• Fully observable vs. partially observable
  ◦ Fully observable: the agent's sensors detect all aspects of the environment that are relevant to the choice of action.
  ◦ Partially observable: parts of the state are missing from the sensor data.
• Single agent vs. multi-agent
  ◦ Single agent: only one agent acts in the environment.
  ◦ Multi-agent: other agents in the environment may affect the agent's choice of actions.
• Episodic vs. sequential
  ◦ Episodic: the agent's experience is divided into episodes. In each episode the agent receives a percept and then performs a single action, and the next episode does not depend on the actions taken in previous episodes.
  ◦ Sequential: the current decision could affect all future decisions, so the agent must consider the future consequences of each action.
• Static vs. dynamic
  ◦ Static: the environment does not change while the agent is deliberating.
  ◦ Dynamic: the environment can change while the agent is deliberating.
• Discrete vs. continuous
  ◦ Discrete: the environment has a finite number of distinct states, percepts, and actions.
  ◦ Continuous: states, time, percepts, or actions range over continuous values.

Example task environments and their properties:

| Task Environment | Observable | Agents | Episodic vs. Sequential | Static vs. Dynamic | Discrete vs. Continuous |
|---|---|---|---|---|---|
| Chess | Fully | Multi | Sequential | Static | Discrete |
| Poker | Partially | Multi | Sequential | Static | Discrete |
| Image Analysis | Fully | Single | Episodic | Static | Continuous |
| Butler Robot | Partially | Single | Sequential | Dynamic | Continuous |
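To make the distinction between the agent function and the agent program concrete, here is a minimal Python sketch for the two-square vacuum world of Figure 2.2. It assumes the squares are named "A" and "B", that a percept is a (location, status) pair, and that the actions are the strings "Left", "Right", "Suck", and "NoOp"; these names and the table entries are illustrative, not copied from Figure 2.3. The table-driven program looks up the entire percept sequence, while the reflex program computes an action from the current percept alone and agrees with every entry in this partial table.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding: a percept is (location, status), e.g. ("A", "Dirty").
Percept = Tuple[str, str]

# Illustrative partial tabulation of the agent function: it maps whole
# percept sequences (not single percepts) to actions.
TABLE: Dict[Tuple[Percept, ...], str] = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("B", "Clean"),): "Left",
    (("B", "Dirty"),): "Suck",
    (("A", "Clean"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("A", "Dirty")): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
}


class TableDrivenAgent:
    """Agent program that looks up the whole percept sequence in a table."""

    def __init__(self, table: Dict[Tuple[Percept, ...], str]) -> None:
        self.table = table
        self.percepts: List[Percept] = []  # percept sequence to date

    def __call__(self, percept: Percept) -> str:
        self.percepts.append(percept)
        # Sequences missing from the partial table default to "NoOp"
        # (a choice made for this sketch only).
        return self.table.get(tuple(self.percepts), "NoOp")


def reflex_vacuum_agent(percept: Percept) -> str:
    """Compact agent program that decides from the current percept alone."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


if __name__ == "__main__":
    # Both programs agree on every sequence listed in the partial table.
    for sequence, expected in TABLE.items():
        agent = TableDrivenAgent(TABLE)
        actions = [agent(p) for p in sequence]
        assert actions[-1] == expected == reflex_vacuum_agent(sequence[-1])
    print("programs agree on", len(TABLE), "tabulated sequences")
```

A full table needs an entry for every possible percept sequence, so it grows without bound as the agent runs; that is why an agent program is usually written as a compact procedure rather than as the table itself.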
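The rationality discussion says the vacuum agent stops being rational if it oscillates needlessly once the dirt is gone. The sketch below illustrates that point with a small simulation. It assumes a performance measure that awards one point per clean square per time step and subtracts `move_cost` points for each Left or Right move; the measure, the `run` helper, and the `StoppingVacuumAgent` class are all hypothetical, and the stopping agent is only sensible here because dirt never reappears in this simulated world.

```python
from typing import Callable, Dict, Set, Tuple

Percept = Tuple[str, str]          # (location, status), e.g. ("A", "Dirty")
Agent = Callable[[Percept], str]   # maps a percept to an action


def reflex_vacuum_agent(percept: Percept) -> str:
    """Sucks if dirty, otherwise moves to the other square (never stops)."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


class StoppingVacuumAgent:
    """Hypothetical agent that remembers squares seen clean, then idles.

    Only sensible if dirt never reappears, as in the simulation below.
    """

    def __init__(self) -> None:
        self.known_clean: Set[str] = set()

    def __call__(self, percept: Percept) -> str:
        location, status = percept
        if status == "Dirty":
            return "Suck"
        self.known_clean.add(location)
        if self.known_clean == {"A", "B"}:
            return "NoOp"  # nothing left to do, so avoid needless moves
        return "Right" if location == "A" else "Left"


def run(agent: Agent, dirt: Dict[str, bool], start: str, steps: int,
        move_cost: int = 1) -> int:
    """Simulate the two-square world and return the performance score.

    Assumed performance measure: +1 per clean square per time step,
    minus move_cost for each Left or Right action.
    """
    dirt = dict(dirt)  # copy so the caller's dictionary is not modified
    location, score = start, 0
    for _ in range(steps):
        action = agent((location, "Dirty" if dirt[location] else "Clean"))
        if action == "Suck":
            dirt[location] = False
        elif action == "Left":
            location, score = "A", score - move_cost
        elif action == "Right":
            location, score = "B", score - move_cost
        score += sum(1 for d in dirt.values() if not d)  # clean squares now
    return score


if __name__ == "__main__":
    both_dirty = {"A": True, "B": True}
    for cost in (0, 1):
        print(f"move_cost={cost}:",
              "reflex", run(reflex_vacuum_agent, both_dirty, "A", 10, cost),
              "stopping", run(StoppingVacuumAgent(), both_dirty, "A", 10, cost))
```

Over 10 steps starting in square A with both squares dirty, the two agents both score 18 when moves are free. With `move_cost=1`, the reflex agent's needless oscillation drops its score to 10, while the stopping agent scores 17, so under that performance measure the oscillating behavior is no longer rational.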
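The PEAS specification and the table of task-environment properties can also be written down as plain data. The sketch below encodes a PEAS description of the vacuum world using only the elements listed in the vacuum-world slides, plus the four example rows of the property table. The class and field names are hypothetical, the vacuum's performance entry is an assumption (the slides do not state one), and each boolean records which side of a dichotomy applies (for example, `episodic=False` means sequential).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PEAS:
    """Task environment specification: Performance, Environment, Actuators, Sensors."""
    performance: Tuple[str, ...]
    environment: Tuple[str, ...]
    actuators: Tuple[str, ...]
    sensors: Tuple[str, ...]


# PEAS for the two-square vacuum world; the performance entry is an assumption.
VACUUM_PEAS = PEAS(
    performance=("clean squares over time (assumed measure)",),
    environment=("square A", "square B", "dirt"),
    actuators=("move left", "move right", "suck", "do nothing"),
    sensors=("current square", "dirt in current square"),
)


@dataclass(frozen=True)
class TaskEnvironment:
    """One row of the property table; each flag picks one side of a dichotomy."""
    name: str
    fully_observable: bool  # False means partially observable
    multi_agent: bool       # False means single agent
    episodic: bool          # False means sequential
    static: bool            # False means dynamic
    discrete: bool          # False means continuous

    def describe(self) -> str:
        """Render the row using the same words as the table."""
        return ", ".join([
            "Fully" if self.fully_observable else "Partially",
            "Multi" if self.multi_agent else "Single",
            "Episodic" if self.episodic else "Sequential",
            "Static" if self.static else "Dynamic",
            "Discrete" if self.discrete else "Continuous",
        ])


EXAMPLES: List[TaskEnvironment] = [
    TaskEnvironment("Chess", True, True, False, True, True),
    TaskEnvironment("Poker", False, True, False, True, True),
    TaskEnvironment("Image Analysis", True, False, True, True, False),
    TaskEnvironment("Butler Robot", False, False, False, False, False),
]

if __name__ == "__main__":
    for env in EXAMPLES:
        print(f"{env.name}: {env.describe()}")
```

Encoding each dichotomy as a boolean makes queries such as "which environments are partially observable?" a one-line filter over `EXAMPLES`.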