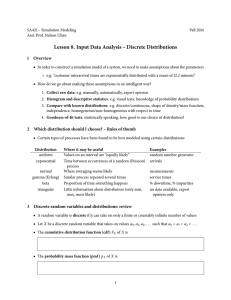

Statistics 2EA1: Random Variables & Probability Distributions


Random Variables

Each outcome of an experiment can be associated with a number by specifying a rule of association. Such a rule of association is called a random variable: a variable because different numerical values are possible, and random because the observed value depends on which of the possible experimental outcomes results.

Definition: For a given sample space S of some experiment, a random variable (rv) is any rule that associates a number with each outcome in S. In mathematical language, a random variable is a function whose domain is the sample space and whose range is the set of real numbers.

Random variables are customarily denoted by uppercase letters, such as X and Y, near the end of our alphabet. In contrast to our previous use of a lowercase letter, such as x, to denote a variable, we will now use lowercase letters to represent some particular value of the corresponding random variable. The notation X(s) = x means that x is the value associated with the outcome s by the rv X.

Example 3.1

Definition: Any random variable whose only possible values are 0 and 1 is called a Bernoulli random variable.

Example 3.2

Two Types of Random Variables

Definition: A discrete random variable is an rv whose possible values either constitute a finite set or else can be listed in an infinite sequence in which there is a first element, a second element, and so on.

A random variable is continuous if both of the following apply:

1. Its set of possible values consists either of all numbers in a single interval on the number line (possibly infinite in extent, e.g., from -∞ to ∞) or all numbers in a disjoint union of such intervals (e.g., [0, 10] ∪ [20, 30]).
2. No possible value of the variable has positive probability, that is, P(X = c) = 0 for any possible value c.

Example 3.3, Example 3.4

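
To make the idea of a random variable as a function on the sample space concrete, here is a minimal Python sketch. The experiment (tossing a coin twice) and the names `sample_space`, `X`, and `Y` are assumptions chosen for illustration; they are not taken from the numbered course examples.

```python
from itertools import product

# Sample space S for tossing a coin twice (hypothetical illustration).
# Each outcome s is a tuple such as ("H", "T").
sample_space = list(product("HT", repeat=2))


def X(s):
    """Random variable: the number of heads in outcome s."""
    return s.count("H")


def Y(s):
    """Bernoulli random variable: 1 if the first toss is a head, else 0."""
    return 1 if s[0] == "H" else 0


# X(s) = x: the value x that the rv X associates with each outcome s.
values_of_X = {s: X(s) for s in sample_space}

# Both sets of possible values are finite, so X and Y are discrete.
range_of_X = sorted(set(values_of_X.values()))   # [0, 1, 2]
range_of_Y = sorted({Y(s) for s in sample_space})  # [0, 1]
```

Both `X` and `Y` are defined on the same sample space, which reflects that many different random variables can be attached to one experiment.
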
Probability Distributions for Discrete Random Variables

The probability distribution for a discrete random variable X resembles the relative frequency distributions we constructed in Chapter 1. It is a graph, table, or formula that gives the possible values of X and the probability p(x) associated with each value. We must have

$$p(x) \ge 0 \quad \text{and} \quad \sum_{x} p(x) = 1$$

Definition: The probability distribution or probability mass function (pmf) of a discrete rv is defined for every number x by:

$$p(x) = P(X = x) = P(\text{all } s \in S : X(s) = x)$$

Example 3.8, Example 3.9, Example 3.10

Definition: Suppose p(x) depends on a quantity that can be assigned any one of a number of possible values, with each different value determining a different probability distribution. Such a quantity is called a parameter of the distribution. The collection of all probability distributions for different values of the parameter is called a family of probability distributions.

Example 3.12

The Cumulative Distribution Function

Definition: The cumulative distribution function (cdf) F(x) of a discrete rv X with pmf p(x) is defined for every number x by

$$F(x) = P(X \le x) = \sum_{y:\, y \le x} p(y)$$

For any number x, F(x) is the probability that the observed value of X will be at most x.

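
The pmf and cdf definitions can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the experiment (two tosses of a fair coin, with all outcomes equally likely) and the names `sample_space`, `X`, `pmf`, `p`, and `F` are hypothetical and do not come from the course examples.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Hypothetical experiment: toss a fair coin twice; X = number of heads.
sample_space = list(product("HT", repeat=2))


def X(s):
    return s.count("H")


# p(x) = P(X = x) = P(all s in S : X(s) = x).
# Assumes every outcome in S is equally likely.
counts = Counter(X(s) for s in sample_space)
pmf = {x: Fraction(n, len(sample_space)) for x, n in counts.items()}


def p(x):
    """pmf, defined for every number x (zero outside the possible values)."""
    return pmf.get(x, Fraction(0))


def F(x):
    """cdf: F(x) = P(X <= x), the sum of p(y) over all y <= x."""
    return sum(prob for y, prob in pmf.items() if y <= x)


# The two requirements on a pmf: p(x) >= 0 and the probabilities sum to 1.
assert all(prob >= 0 for prob in pmf.values())
assert sum(pmf.values()) == 1
```

Because `F` is defined for every number, not only for possible values of X, a call such as `F(1.5)` returns the same value as `F(1)`: the cdf of a discrete rv is a step function.
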
Example 3.13

Proposition: For any two numbers a and b with $a \le b$,

$$P(a \le X \le b) = F(b) - F(a^-)$$

where $a^-$ denotes the largest possible value of X that is strictly less than a. In particular, if X takes only integer values, then for any two integers a and b with $a \le b$,

$$P(a \le X \le b) = F(b) - F(a - 1)$$

Example 3.15

Expected Values

Let X be a discrete rv with set of possible values D and pmf p(x). The expected value or mean value of X, denoted by E(X) or $\mu_X$, is

$$E(X) = \mu_X = \sum_{x \in D} x \cdot p(x)$$

provided that

$$\sum_{x \in D} |x| \cdot p(x) < \infty$$

Expected Value E(X): the value that you would expect to observe on average if the experiment is repeated over and over again.

Examples: pages 110-111 (leave out Examples 3.19 and 3.20).

Expected Value of a Function

Proposition: If the rv X has a set of possible values D and pmf p(x), then the expected value of any function h(X), denoted by E[h(X)] or $\mu_{h(X)}$, is computed by

$$E[h(X)] = \sum_{D} h(x) \cdot p(x)$$

assuming that

$$\sum_{D} |h(x)| \cdot p(x) < \infty$$

Examples: pages 112-113.

Proposition (PROOF!):

$$E(aX + b) = a \cdot E(X) + b$$

The Variance of X

Definition: Let X have pmf p(x) and expected value $\mu$. Then the variance of X, denoted by V(X) or $\sigma_X^2$, is

$$V(X) = \sum_{D} (x - \mu)^2 \cdot p(x) = E[(X - \mu)^2]$$

The standard deviation (SD) of X is

$$\sigma_X = \sqrt{\sigma_X^2}$$

Example 3.24

A Shortcut Formula

Proposition (PROOF!):

$$V(X) = \sigma^2 = \left[\sum_{D} x^2 \cdot p(x)\right] - \mu^2 = E(X^2) - [E(X)]^2$$

Example 3.25

Rules of Variance

Proposition (PROOF!):

$$V(aX + b) = \sigma_{aX+b}^2 = a^2 \cdot \sigma_X^2 \quad \text{and} \quad \sigma_{aX+b} = |a| \cdot \sigma_X$$

Example 3.26
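
The formulas for interval probabilities, expected value, and variance can be checked numerically on a small pmf. The sketch below is a numerical check, not a proof, and it rests on assumptions: the pmf (number of heads in two tosses of a fair coin), the constants `a` and `b`, and the helper names `F` and `expect` are all hypothetical choices made for illustration, not values from the course examples.

```python
from fractions import Fraction
from math import sqrt

# Hypothetical pmf: number of heads in two tosses of a fair coin.
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}


def F(x):
    """cdf: F(x) = P(X <= x)."""
    return sum(p for y, p in pmf.items() if y <= x)


def expect(h=lambda x: x):
    """E[h(X)] = sum over D of h(x) * p(x); h defaults to the identity."""
    return sum(h(x) * p for x, p in pmf.items())


# Integer-valued X: P(a <= X <= b) = F(b) - F(a - 1), here with a = 1, b = 2.
interval_prob = F(2) - F(1 - 1)
assert interval_prob == pmf[1] + pmf[2]

mu = expect()                                    # E(X)
var_def = expect(lambda x: (x - mu) ** 2)        # V(X) = E[(X - mu)^2]
var_short = expect(lambda x: x ** 2) - mu ** 2   # E(X^2) - [E(X)]^2
assert var_def == var_short                      # shortcut formula agrees
sd = sqrt(var_def)                               # standard deviation

# Linear function aX + b with arbitrary constants.
a, b = 3, 5
mean_lin = expect(lambda x: a * x + b)
var_lin = expect(lambda x: (a * x + b - mean_lin) ** 2)
assert mean_lin == a * mu + b        # E(aX + b) = a E(X) + b
assert var_lin == a ** 2 * var_def   # V(aX + b) = a^2 V(X)
```

Using `Fraction` keeps every probability exact, so the equalities can be tested with `==` rather than with a floating-point tolerance. The shift `b` changes the mean but has no effect on the variance.
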