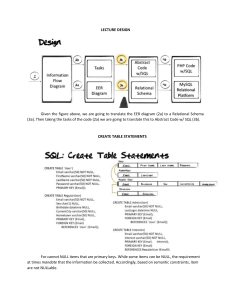

M 3.1: Database Design Flow

When examining the pieces which go into database design and implementation, it is useful to

keep in mind the overall flow of the process.

Requirements collection and analysis

o This important step is often downplayed in courses due to the necessity

of focusing on the main concepts of the course. This should in no way

minimize the importance of this step.

Conceptual Design

o The data requirements from the requirements collection are used in the

conceptual design phase to produce a conceptual schema for the

database.

Functional Analysis

o In parallel with the conceptual design, functional requirements are used

to determine the operations which will need to be applied to the

database. This process will not be discussed here as it is covered in other

courses.

Logical Design

o Starts implementation; conceptual schema transformed from high-level

data model to implementation data model; produces a database schema.

Physical Design

o Specifies internal storage, file organizations, indexes, etc. Produces

internal schema. This type of design is important for larger databases,

but is not needed when working with relatively small databases. Physical

design will not be covered in this course.

This module presents basic ER model concepts for conceptual schema design.

M 3.2: The Building Blocks of the ER Model

In the Entity-Relationship (ER) Model, data is described as entities, relationships,

and attributes. We will start by looking at entities and attributes.

An entity is the basic concept that is represented in an ER model. It is a thing or object in the

real world. An entity may be something with a physical existence, such as a student or a

building. An entity may also be something with a conceptual existence, such as a bank account

or a course at a university. The concept of an entity is similar to the concept of an object in C++

or Java.

Each entity has attributes, which are properties which describe the entity.

Attributes

There are many choices for attributes for the student entity. Some you might have considered

include name, age, height, weight, gender, hair color, eye color, photo, social security number,

student id, home address, home telephone, cell phone number, campus (or local) address,

campus telephone, major, class rank, spouse name, how many children (and names), whether

working on campus, car make and model, car license, etc.

There are, of course, many additional attributes which are not listed above. You might have

listed some of these additional attributes. Since a database is only representing some aspect of

the real world (sometimes referred to as a miniworld in the text), not all attributes we can

think of will be included in the database, but only those required for the miniworld the

database is representing. Which attributes to include are determined during this design step

and are directed by the requirements.

There are several types of attributes that can be used in an ER model. The types can be

classified as follows:

Composite vs. Simple (Atomic) Attributes: A composite attribute can be divided into

parts which represent more basic attributes. An example of this is an address, which

can be subdivided into street, city, state, and zip (of course there are additional

ways to subdivide an address). If an attribute cannot be further subdivided, it is

known as a simple or atomic attribute. If there is no need to look at the components

individually (such as the city part of an address), then the attribute (address) can be

treated as a simple attribute.

Single-Valued vs. Multivalued Attributes: Most attributes can take only one

value. These are called single-valued attributes. An example of a single-valued

attribute would be social security number for a student. An example of a

multivalued attribute would be names of dependents.

Stored vs. Derived Attributes: When two or more attribute values are related, it is

sometimes possible to derive the value of one given the value of the other. For

example, if age and birth date are both attributes of an entity (such as student), age

can be stored directly in the database, in which case it is a stored attribute. Rather

than being stored directly in the database, age can also be calculated from the birth

date and the current date. If this option is chosen, age is calculated from (or derived

from) birth date and is known as a derived attribute.

NULL Value: In some cases, an attribute does not have an applicable value. When

this is true, a special value, called the NULL value is used. This can be used in a few

cases. The first is when the value does not exist, for example the SSN of a spouse

when the person is not married. The second is when it is known that the value

exists, but is missing, such as a person's unknown birth date. Finally, NULL is used

when it is not known whether the value exists, for example the license plate number

of a person's car (since the person may not own a car).

Complex Attributes: Composite and multivalued attributes can be nested, e.g.,

Address(Street_Address(Number, Street, Apartment), City, State, Zip). These are

called complex attributes.
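
The attribute categories above can be sketched in code. The following is a minimal illustration, not part of the ER notation itself; the class names, field names, and the use of None to stand in for NULL are assumptions made for this example.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class Address:
    """Composite attribute: divisible into simpler component attributes."""
    street: str
    city: str
    state: str
    zip_code: str


@dataclass
class Student:
    ssn: str                          # simple, single-valued attribute
    birth_date: Optional[date]        # stored attribute; None stands in for NULL
    address: Address                  # composite attribute
    dependents: List[str] = field(default_factory=list)  # multivalued attribute
    spouse_ssn: Optional[str] = None  # NULL when the value does not apply

    def age(self, today: date) -> Optional[int]:
        """Derived attribute: computed from birth_date and the current date."""
        if self.birth_date is None:
            return None  # cannot derive age from an unknown birth date
        had_birthday = (today.month, today.day) >= (
            self.birth_date.month,
            self.birth_date.day,
        )
        return today.year - self.birth_date.year - (0 if had_birthday else 1)
```

Because age is computed on request instead of being stored, it cannot drift out of date the way a stored age would.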

Entity Types and Entity Sets

An entity is defined by its attributes. This is the type of data we want to collect about the entity.

As a very simple example, for a student entity, we may want to collect name, student id, and

address for each student. This would define the entity type, which can be thought of as a

template for the student entity. Each individual would, of course, have his/her own values for

the three attributes. The collection of all individual entities (i.e. the collection of all

entity instances) of a particular entity type in the database at any point in time is known as

an entity set or entity collection.

Keys and Domains

Keys

One important constraint that must be met by an entity type is called

the key constraint or uniqueness constraint. An entity type will have one attribute (or a

combination of attributes) whose values are unique for each individual entity in the entity set.

Such an attribute is called a key attribute. For example, in a student entity, a given student id

can be used as a key since the id will be assigned to one and only one student. The values of a

key attribute can be used to uniquely identify an entity instance. This means that no two entity

instances may have the same value for the key attribute. We will discuss keys further in this and

later modules.
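
As a rough illustration of the uniqueness constraint, the sketch below checks whether a set of attributes has distinct values across one snapshot of an entity set. The function name is_key and the sample student data are hypothetical. Note that a key is a constraint on every possible entity set, so a check like this can only show that an attribute is not a key; passing it does not prove that the attribute is one.

```python
from typing import Dict, Iterable, Sequence


def is_key(entity_set: Iterable[Dict[str, object]], attrs: Sequence[str]) -> bool:
    """Return True if no two entities share the same values for attrs."""
    seen = set()
    for entity in entity_set:
        value = tuple(entity[a] for a in attrs)
        if value in seen:
            return False  # two entity instances share this value
        seen.add(value)
    return True


# Hypothetical snapshot of a student entity set.
students = [
    {"student_id": "10001", "name": "Pat Lee"},
    {"student_id": "10002", "name": "Pat Lee"},
]

student_id_unique = is_key(students, ["student_id"])  # IDs differ
name_unique = is_key(students, ["name"])              # two students share a name
```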

Value Sets (Domains) of Attributes

Each simple attribute of an entity type is associated with a value set (or domain of values). This

indicates the set of allowable values which may be assigned to that attribute for each entity

instance. Value sets are normally not displayed on basic ER diagrams. For example, if we want

to include the weight of a student in the database, we may indicate that the weight will be in

pounds and we will store the weight rounded to the nearest integer. The integer values may

range from 50 to 500 pounds. Most basic domains are similar to the data types such as integer,

float, and string contained in most programming languages.
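
A value set can be thought of as a membership test on candidate values. The sketch below encodes the weight example (whole pounds from 50 to 500); the function name is an assumption made for illustration.

```python
def in_weight_domain(value: object) -> bool:
    """Check the example value set: whole pounds from 50 to 500 inclusive."""
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        return False
    return 50 <= value <= 500
```

A value such as 150.5 is rejected here because the example domain stores weight rounded to the nearest integer.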

M 3.3: Initial Design of Database

As indicated earlier, the database design starts with a set of requirements. We will work

through a simple example where we build an ER diagram from a set of requirements.

A simple set of requirements for the example is:

This database will contain data about certain aspects of a university.

1. The university needs to keep information about its students. It wants to store the

university assigned ID of the student. This is recorded as a five-digit number. The

university also wishes to keep the student's name. At this time, the name can be

stored as a full name, in first name-last name order. There is no need to break the

name down any further. The major program of the student needs to be recorded

using the five letter code assigned by the university. The student's class rank also

needs to be kept as a two-letter code (FR for freshman, SO for sophomore, etc.).

Finally, the university wants to keep track of the academic advisor assigned to the

student. The advisor will be a faculty member in the student's department and will

be identified by the faculty ID assigned to the advisor.

2. The university also needs to keep information about its faculty. It wants to store the

university assigned ID of the faculty member. This is recorded as a four-digit

number. The faculty member's name must also be kept and like the name of a

student it will be stored as a full name and will not need to be broken down any

further. Finally, each faculty member is assigned to a department and this will be

stored as a five letter code, which also represents the major program of a student.

3. Information about each department will also be kept. This includes the assigned

code which represents the department, the full department name, and a code for

the building in which the department office is located.

4. Course information must also be kept. This includes the course number which

consists of the code for the department offering the course and the number of the

course within the department. This is stored as a single item, such as CMPSC431.

The course name will also be kept. This name will be as shown in the university catalog

and may include abbreviations, such as Database Mgmt Sys. The credit hours earned

for the course will be kept, as will the code for the department offering the

course. The code will most often be the first part of the course number, but the

department code will be repeated as a separate item.

5. Finally, information must be maintained about each section of a course. A section ID

will be assigned to each section offered. This is a six digit number representing a

unique section - a specific offering of a specific course. For each section, the course

number, the semester offered, and the year offered must also be stored.

These requirements are admittedly not as comprehensive as they would be had a full

requirements study been done, but they will suffice for our simple example.

The next step is to use the requirements to determine the entities which should be included in

the design. For each entity, the attributes describing the entity should then be listed.

After this, the first part of the ER diagram will be drawn. Take a few minutes to develop the list

of entities and associated attributes on your own.

Then watch the video where we will work through this together and introduce the first part of

the ER diagram.

I encourage you to get in the habit of writing a "first draft" of your ER Diagram. Then take a

look at the draft and determine how the positioning and spacing of the diagram looks. Then

note modifications and adjustments you want to make to the visual presentation of the

diagram. Then draw a "clean" copy of the diagram, possibly using a tool such as Visio.

Sometimes you can draw a first draft followed by the final diagram. Other times you may need

to work through several drafts before producing the final copy.

Below is a final copy of the diagram for the draft copy shown on the white board in the above

video. It also includes a few additional points concerning ER diagrams.

ER Diagram and Additional Notes

Figure 3.1: ER Diagram - Part 1

There are a few things to note here. As I suggested, I took my initial draft from the white board

and adjusted it when I created the diagram. Also, I cheated a bit since I knew where the

diagram was heading in the next step and "prearranged" the placement here. Normally this

step and the next are taken together when drawing the draft diagram, so the initial draft would

include more than this draft did.

Note that in this diagram attribute names are reused for different entities (ID is an example of

this). This works fine from a "syntax" standpoint, but is not encouraged unless the ID values are

the same. In this case they are not, and it is better in most cases to give them unique names

such as Student_id, Faculty_id, etc.

As a reminder, the following conventions are used:

Entities are placed in a rectangular box. The entity name is included inside the box in

all capitals.

Attributes are placed in an oval. The attribute name is included inside the oval with

only the first letter capitalized. If multiple words are used for clarity, they are

separated by underscores. Words after the first are not capitalized. Attribute names

are underlined for attributes which are the key (or part of the key) for the entity.

Attributes are connected to the entity they represent by a line.
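
For readers who prefer code to diagrams, one possible reading of the five requirements is sketched below as entity types with their attributes. This is an interpretation of the requirements text, not a transcription of Figure 3.1. The attribute names, the choice of which attribute is marked as the key, and the decision to store IDs as strings are all assumptions.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Student:
    student_id: str   # key (assumed): five-digit university ID
    name: str         # full name, first name-last name order
    major: str        # five-letter department code
    class_rank: str   # two-letter code, e.g. FR or SO
    advisor: str      # faculty ID of the academic advisor


@dataclass(frozen=True)
class Faculty:
    faculty_id: str   # key (assumed): four-digit university ID
    name: str
    department: str   # five-letter department code


@dataclass(frozen=True)
class Department:
    code: str         # key (assumed)
    name: str
    building: str     # code for the building housing the department office


@dataclass(frozen=True)
class Course:
    course_number: str  # key (assumed), e.g. CMPSC431
    name: str           # catalog name, e.g. Database Mgmt Sys
    credit_hours: int
    department: str     # code of the offering department


@dataclass(frozen=True)
class Section:
    section_id: str     # key (assumed): six-digit section ID
    course_number: str
    semester: str
    year: int


def attribute_names(entity_type) -> list:
    """Return the attribute names of an entity type in declaration order."""
    return [f.name for f in fields(entity_type)]
```

Giving each ID attribute a distinct name (student_id, faculty_id, section_id) follows the naming suggestion made above.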

M 3.4: Relationship Types, Relationship Sets, Degree, and Cardinality

When looking at the preliminary design of entity types, it can be seen that there are some

implicit relationships among some of the entity types. In general, when an attribute of one

entity type refers to another entity type, some relationship exists between the two entities. In

the example database, a faculty member is assigned to a department. The department attribute

of the faculty entity refers to the department to which the faculty member is assigned. In this

module, we will work through how to categorize these relationships and then how to represent

them in an ER diagram.

In a pattern similar to entity types and entity instances, the term relationship type refers to a

relationship between entity types. The term relationship set refers to the set of all relationship

instances among the entity instances.

The degree of a relationship type is the number of entities which participate in the relationship.

The most common is a relationship between two entities. This is a relationship of degree two

and is called a binary relationship. Also fairly common are relationships among three entities

(relationships of degree three), which are called ternary relationships. These and other higher-degree relationships tend to be more complex and will be further discussed in the next module.

We will also discuss in the next module the possibility of a relationship between an entity and

itself. This is often called a unary relationship, and is referred to in the text as

a recursive or self-referencing relationship.

For the rest of this module, we will focus only on binary relationships. Relationship types normally

have constraints which limit the possible combinations of entities that may participate in the

relationship set. For example, the university may have a rule that each faculty member is placed

administratively in exactly one department. This constraint should be captured in the schema.

The two main types of constraints on binary relationships are cardinality and participation.

The cardinality ratio for a binary relationship specifies the maximum number of relationship

instances in which an entity instance can participate. In the above example of faculty and departments,

we can define a BELONGS_TO relationship. This is a binary relationship since it is between two

entities, FACULTY and DEPARTMENT. Each faculty member belongs to exactly one department.

Each department can have several faculty members. For the DEPARTMENT:FACULTY

relationship, we say that the cardinality ratio is 1:N. This means that each department has

several faculty (the "several" is represented by N, indicating no maximum number), while each

faculty belongs to at most one department. The cardinality ratios for binary relationships are

1:1, 1:N, and M:N. Note that the text also shows N:1, but this is usually converted to a 1:N by

reversing the order of the entities.

Note that so far we have considered only the maximum number of entity instances which can

participate in a relationship. We will wait until the next module to discuss whether it makes sense

to talk about a minimum number of instances which can participate.

An example of a 1:1 binary relationship would be if we wished to keep the department chair in

the database. To simplify, we will specify that the chair is a faculty member of the department,

which is true in the vast majority of the cases. In this case, the faculty would be the chair of at

most one department, and the department would have at most one chair. 1:1 binary

relationships are explored in more depth in the next sub-module.

If the relationship between two entities is such that an instance of entity one may be related to

several instances of entity two and also that an instance of entity two may be related to several

instances of entity one, we say that this is an M:N binary relationship. The use of M and N, rather

than using N twice, indicates that the number of related instances need not be the same in

both directions. We will look more closely at M:N relationships in the next module.
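
The three cardinality ratios can be made concrete with a small sketch that inspects a relationship set, given as pairs of related entity instances, and reports the largest participation seen on each side. The function name and sample data are hypothetical. Keep in mind that the cardinality ratio is a constraint taken from the requirements; data observed at one point in time can show that a ratio is violated but cannot establish the intended ratio.

```python
from collections import Counter
from typing import Hashable, Iterable, Tuple


def observed_cardinality(pairs: Iterable[Tuple[Hashable, Hashable]]) -> str:
    """Classify a binary relationship set by the participation seen on each side."""
    unique_pairs = set(pairs)
    left_counts = Counter(a for a, _ in unique_pairs)
    right_counts = Counter(b for _, b in unique_pairs)
    left_many = any(n > 1 for n in left_counts.values())    # a left entity has several partners
    right_many = any(n > 1 for n in right_counts.values())  # a right entity has several partners
    if left_many and right_many:
        return "M:N"
    if left_many:
        return "1:N"  # one left entity relates to many right entities
    if right_many:
        return "N:1"
    return "1:1"


# DEPARTMENT:FACULTY pairs for a BELONGS_TO relationship (made-up values).
belongs_to = [("CMPSC", "1001"), ("CMPSC", "1002"), ("MATH", "2001")]
ratio = observed_cardinality(belongs_to)
```

Reversing the order of each pair turns the reported N:1 into 1:N, which mirrors the conversion mentioned above.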

The next step is to examine the entities and their attributes to determine any relationships

which exist among the entities. For each relationship, the degree of the relationship should be

determined, as should the cardinality ratio of the relationship.

After this, the next part of the ER diagram will be drawn. Take a few minutes to develop the list

of relationships on your own. Be sure to determine both the degree and cardinality ratio. To

keep this simple at first, we will look at only 1:1 and 1:N binary relationships.

Then watch the video where we will work through this together and add the next part of the ER

diagram.

Hopefully this video reinforces the suggestion to develop the habit of writing a "first draft" of

your ER Diagram. The positioning and spacing is less of an issue when beginning work with only

the entities and attributes as we did in the earlier video. When you begin to include

relationships, the position of the entities becomes important to permit the relationships to be

added while keeping a clean look to the diagram. Note that the overall positioning of the

entities was not too bad in the video, but it would benefit from minor adjustments. The spacing

between entities in the draft developed in the video was not sufficient in a few cases. The

relationship diamond was too cramped between entity boxes. Making the lines longer would

make the cardinality labels (the 1 and the N) much easier to read.

The choice of names is not always straightforward. We will discuss some guidelines to consider

and naming suggestions in the next module.

Below is a final copy of the diagram for the draft copy shown on the white board in the above

video. The attributes are also included in the diagram below.

ER Diagram and Additional Notes

Figure 3.2: ER Diagram - Part 2

There are a few things to note with this diagram also. The first item is that on the board I used

lower case with an initial capital when I put the names in the relationship boxes. This does not

follow the convention used in the text where relationship names are entered in all caps. I

followed the text convention when I drew the final copy of the ER diagram.

Next, binary relationship names are normally chosen so that the ER diagram is read from left to

right and top to bottom. To make the diagram consistent with this guideline, I changed the

name of the relationship between student and department from MAJORS to MAJORS_IN.

Notice that a binary relationship can be "read" in either direction with a slight modification of

the name. Since the SECTION entity was below the COURSE entity in the diagram on the board,

I used the name of OFFERING for the relationship. Since I moved SECTION to the left of COURSE

in the final diagram, I changed the relationship name to OFFERING_OF since a section is a

specific offering of a course. Finally, I was going to change the name of the relationship

between DEPARTMENT and COURSE to OFFERS to make it read from top to bottom. I then

realized that I did not like the use of a form of "offer" twice, so I changed the name of the

relationship to OWNS.

Also note that the diagram on the board was somewhat cramped. The cardinality ratios were

appropriately placed on the participating edge, but it did not clearly show that it is customary

to place the cardinality ratio closer to the relationship diamond than to the entity rectangle.

As a reminder, the new conventions are listed first below. They are followed by conventions we

have used before.

Relationships are placed in a diamond. The relationship name is included inside the

diamond in all capitals.

For a binary relationship, the cardinality ratio value is placed on the edge between

the entity rectangle and the relationship diamond. It is placed closer to the

relationship diamond.

Entities are placed in a rectangular box. The entity name is included inside the box in

all capitals.

Attributes are placed in an oval. The attribute name is included inside the oval with

only the first letter capitalized. If multiple words are used for clarity, they are

separated by underscores. Words after the first are not capitalized. Attribute names

are underlined for attributes which are the key (or part of the key) for the entity.

Attributes are connected to the entity they represent by a line.

M 3.5: An Example of a 1:1 Binary Relationship

In the previous sub-module we proposed an example of a 1:1 binary relationship. The example

considered a desire to keep the department chair in the database. We added the simplification

that the chair is a faculty member of the department, which is true in the vast majority of the

cases. In this case, the faculty would be the chair of at most one department, and the

department would have at most one chair. We can call the relationship CHAIR_OF. Another

simplification is that each department has a chair (it is possible that the spot is vacant, but we

will ignore that for now). Of course, there would be many faculty who are not chair, but this

does not invalidate the 1:1 relationship.

This relationship will not be added to the ER diagram from the last sub-module. The ER diagram

below shows how this part would be included. It includes the new CHAIR_OF relationship with

the BELONGS_TO relationship included for context.

No new symbols are needed for this relationship. The cardinality ratio is shown as before, but in

this case both values are 1.
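
The two simplifications behind CHAIR_OF (each department has at most one chair, each faculty member chairs at most one department, and the chair belongs to the department chaired) can be checked with a short sketch. The dictionary layout and the function name are assumptions made for illustration.

```python
from typing import Dict


def valid_chair_of(chair_of: Dict[str, str], belongs_to: Dict[str, str]) -> bool:
    """Check the 1:1 CHAIR_OF relationship against BELONGS_TO.

    chair_of maps department code -> faculty ID of the chair.
    belongs_to maps faculty ID -> department code.
    """
    chairs = list(chair_of.values())
    # Dictionary keys already give each department at most one chair;
    # distinct values give each faculty member at most one department to chair.
    if len(chairs) != len(set(chairs)):
        return False
    # Simplification from the text: the chair is a member of the department chaired.
    return all(belongs_to.get(fac) == dept for dept, fac in chair_of.items())
```

Faculty members who appear in belongs_to but not in chair_of are simply not chairs, which does not invalidate the 1:1 relationship.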

Figure 3.3: ER Diagram Showing 1:1 Binary Relationship

M 4.1: Continued Design of a Database

For our example in the last module, we worked from a set of requirements which produced a

conceptual design which contained several entities and their associated attributes. It also

contained several 1:N relationships. Here, we expand the example. This starts by expanding the

requirements and then by adding the necessary parts to the design and ER diagram.

An expanded set of requirements for the example is given below. Note that only point #6 has

been added; the rest of the requirements remain the same.

This database will contain data about certain aspects of a university.

1. The university needs to keep information about its students. It wants to store the

university assigned ID of the student. This is recorded as a five-digit number. The

university also wishes to keep the student's name. At this time, the name can be

stored as a full name, in first name-last name order. There is no need to break the

name down any further. The major program of the student needs to be recorded

using the five letter code assigned by the university. The student's class rank also

needs to be kept as a two-letter code (FR for freshman, SO for sophomore, etc.).

Finally, the university wants to keep track of the academic advisor assigned to the

student. The advisor will be a faculty member in the student's department and will

be identified by the faculty ID assigned to the advisor.

2. The university also needs to keep information about its faculty. It wants to store the

university assigned ID of the faculty member. This is recorded as a four-digit

number. The faculty member's name must also be kept and like the name of a

student it will be stored as a full name and will not need to be broken down any

further. Finally, each faculty member is assigned to a department and this will be

stored as a five letter code, which also represents the major program of a student.

3. Information about each department will also be kept. This includes the assigned

code which represents the department, the full department name, and a code for

the building in which the department office is located.

4. Course information must also be kept. This includes the course number which

consists of the code for the department offering the course and the number of the

course within the department. This is stored as a single item, such as CMPSC431.

The course name will also be kept. This name will be as shown in the university catalog

and may include abbreviations, such as Database Mgmt Sys. The credit hours earned

for the course will be kept, as will the code for the department offering the

course. The code will most often be the first part of the course number, but the

department code will be repeated as a separate item.

5. Information must be maintained about each section of a course. A section ID will be

assigned to each section offered. This is a six digit number representing a unique

section - a specific offering of a specific course. For each section, the course number,

the semester offered, and the year offered must also be stored.

6. Finally, a transcript must be kept for each student. This must contain data which

includes the ID of the student, the ID of the section, and the grade earned by the

student in that section. Of course, there will be several instances for each student - one for each section that was taken by the student.

Although these requirements are slightly expanded, they are still not comprehensive. Again,

they will suffice for our expanded, but still relatively simple example.

Since the earlier requirements were not modified, and the only change was an additional

requirement (requirement #6), the next step is to use the modified requirements to determine

if any of the original entities and their attributes need to be modified. If so, the modifications

should be noted. Then any new entities which should be included in the design should be

noted. For each new entity, the attributes describing the entity should then be listed.

After this, an expanded ER diagram will be drawn. Take a few minutes to develop the list of

modifications as well as new entities and associated attributes on your own.

Then keep this list handy as you move to the next sub-module.

M 4.2: The M:N Relationship

Hopefully, as you looked at the new set of requirements, you realized that with the first five

requirements remaining unchanged, the entities and attributes from the last module will not

need to be modified. The relationships identified in the last module will also not need to be

modified. That leads to the sixth (the new) requirement. Will it dictate changes to any of the

earlier work with entities or attributes? Will new entities, attributes, and/or relationships need

to be created?

At first it seems that a new entity, TRANSCRIPT, should be created. It will have attributes

STUDENT_ID, SECTION_ID, and GRADE. After noting that a grade is associated with a specific

student in a specific section, realize that a student will be in many sections while in school and

each section will consist of many students.

This leads to the realization that TRANSCRIPT is different. If STUDENT_ID is chosen as the key

(remember that a key must be unique), SECTION_ID and GRADE would need to become

multivalued attributes since each student would have many sections and grades associated

with the STUDENT_ID. If SECTION_ID is chosen as the key, STUDENT_ID and GRADE would need

to become multivalued attributes since each section would have many students and grades

associated with the SECTION_ID. We will see in the next module that the relational model does

not allow attributes to be multivalued. Since that is the case, neither STUDENT_ID nor

SECTION_ID can be the key for a TRANSCRIPT entity. This will be further discussed in the

module on normalization later in the course.

Since a given student will take many sections over his/her time at the university, and since a

given section will contain many students, the relationship between student and section is

many-to-many. A many-to-many relationship is called an M:N relationship. Based on this, rather

than being an entity like we saw in the last module, the TRANSCRIPT "entity" is actually formed

from the relationship between the STUDENT entity and the SECTION entity. It will be shown as

a relationship (diamond) in the ER diagram.

What about the GRADE attribute? It will not be an attribute of the STUDENT entity since a given

student will have many grades. This would lead to GRADE being a multivalued attribute.

Similarly GRADE will not be an attribute of the SECTION entity since each section having many

students would again lead to GRADE being a multivalued attribute. It turns out that GRADE is an

attribute of the relationship. As such, the GRADE attribute will be shown in the ER diagram in an

oval which is connected to the diamond containing the TRANSCRIPT relationship. Note that

TRANSCRIPT is not a good name for a relationship. Even though I used that name in the video, a

more appropriate name for a relationship is used in the ER diagram presented after the

video. We will see in later modules how this relationship will actually become an entity in the

schema design.

What about keys for this relationship? Since it is represented in the diagram as a relationship with a

single attribute, GRADE, there is no actual key that is identified for the relationship (note that

GRADE does not qualify). As such, the GRADE is not underlined in the ER diagram. Later, as we

develop a schema design, we will develop this as an entity with the joint key of STUDENT_ID

and SECTION_ID. This is not stated at the ER diagram level, but will come out during schema

design.
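
To see why GRADE belongs to the relationship and why the pair of IDs identifies each instance, consider the sketch below. It stores one grade per (student ID, section ID) pair. The variable names and sample values are hypothetical, and the dictionary is only an illustration of the idea, not the schema that will be developed later.

```python
from typing import Dict, List, Tuple

# (student_id, section_id) -> grade; the pair identifies one relationship instance.
GradeMap = Dict[Tuple[str, str], str]

grade_of: GradeMap = {
    ("10001", "000101"): "A",
    ("10001", "000205"): "B",
    ("10002", "000101"): "A",
}


def transcript(grades: GradeMap, student_id: str) -> List[Tuple[str, str]]:
    """List (section_id, grade) pairs for one student, sorted by section ID."""
    return sorted(
        (section_id, grade)
        for (sid, section_id), grade in grades.items()
        if sid == student_id
    )
```

In the sample data, student 10001 appears in two pairs and section 000101 appears in two pairs, so neither ID alone identifies an instance, and the grade "A" repeats, so GRADE cannot serve as a key either.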

At this point, take a few minutes to sketch how you think this would look when added to the ER

diagram.

Then watch the video, "ER Diagram - Part 3," where we will work through this together and

update the ER diagram.

ER Diagram - Part 3

ER Diagram and Additional Notes

Figure 4.1: ER Diagram Showing Inclusion of M:N Relationship

There are a few things to note with this diagram. First, since grade is actually an attribute of the

relationship rather than an attribute of one of the entities, the grade attribute is attached to

the relationship diamond.

On the board I used the name TRANSCRIPT for the relationship. Not following my sample

diagram closely enough during the production of the video, I looked at the new requirement

instead. Since the requirement discusses a transcript, I used that name on the board. Note that

this is an entity-type name since "transcript" looks like an entity on first reading. Since this is

actually a relationship, I changed the name to the more relationship-appropriate name

ENROLLED_IN in the final diagram. This indicates that a student is (or was, depending on the

time we are looking at it) enrolled in a particular section.

Also on the board, I used N as the cardinality value on both sides of the transcript diamond and

noted that the N can represent a different value on the two sides. It just means "many" in this

context. This is common with many, but not all, authors even though the many-many

relationship is indicated by M:N. The authors of the text use M on one side and N on the other

as a reminder that the values can be different. I used this in the final diagram. Note as you

review the chapter that they do switch to using N on both sides when they introduce the (min,

max) cardinality notation later in the chapter.

We need no new symbols to represent the M:N relationship.

ER Diagram Naming Guidelines

The choice of names for entity types, attributes, and relationship types is not always

straightforward. We will discuss some guidelines to consider and naming suggestions. The

choice of names for roles will be discussed in the Recursive Relationships sub-module. As much

as possible, choose names that convey the meaning of the construct being named. The text

chooses to use singular (rather than plural) names for entity types since the name applies to

each individual entity instance in the entity type. Entity type and relationship type names are in

all caps, while attribute names and role names have only the first letter capitalized.

When looking at requirements for the database, some nouns lead to entity type names. Other

nouns describe these nouns and lead to attribute names. Verbs lead to the names of

relationship types.

When choosing names for binary relationships, attempt to choose names that make the ER

diagram readable from left to right and from top to bottom. Note that when it seems a

relationship type name should read from bottom to top, adjusting the name can usually allow

the reading to go from top to bottom.

M 4.3: Relationship Types of Degree

Higher than Two

In Module 3 we defined the degree of a relationship type as the number of entities which

participate in a relationship. So far, we have only looked at relationships of degree two, which

are called binary relationships. It is worth noting again that degree and cardinality should not

be confused. Binary relationships (degree two) can have a cardinality ratio of 1:1, 1:N, or M:N. Here we

examine relationships of degree three (ternary relationships). We will also look at the

differences between binary and higher-degree relationships. We will consider when to choose

each, and we will conclude by showing how to specify constraints on higher-degree

relationships.

Consider a store where the entities CUSTOMER, SALESPERSON, and PRODUCT have been

identified. Assume the store, such as an appliance store or a furniture store, sells higher priced

items where unlike other stores such as a grocery store, most customers buy only one or a few

items at a time from a salesperson who is on commission. The customer is identified by a

customer number, the product is identified by an item number, and the salesperson is

identified by a salesperson number. It is desirable to keep track of which items a customer

purchased on a particular day from which salesperson. Although not common, a customer may

buy more than one of a particular item on a given day. An example would be to buy two

matching chairs. Since that is a possibility, we want to record the quantity of a particular item

which is purchased as well as the date purchased. We can then define a ternary relationship,

we'll call it PURCHASE, between CUSTOMER, SALESPERSON, and PRODUCT. Note that other

names for the relationship will work, and may be preferable, depending on the actual

application. Also note that the three entities will have other attributes. To avoid clutter which

may detract from the main point being demonstrated here, only the key attribute is shown for

the three entities. How this is represented in ER diagram notation is shown in the figure below.

ER Diagram Representation of a Ternary Relationship

The ER diagram above shows how the three entities are related. Since the date of sale and the

quantity sold are both part of an individual purchase of the customer, salesperson, and product

(there may be several of these purchases over time) these two attributes are attached to the

PURCHASE relationship rather than to any of the individual entities.

It is possible to define binary relationships between the three entities. We can define an M:N

relationship between CUSTOMER and SALESPERSON. Let's call it BOUGHT_FROM. We can also

define an M:N relationship between CUSTOMER and PRODUCT and name the relationship

BOUGHT. Finally, we can define an M:N relationship between SALESPERSON and PRODUCT and

call it SOLD. A representation of this in ER diagram notation is shown below.

ER Diagram Representation of Three Binary Relationships

among the Three Entities

Are the three binary relationships, taken together, equivalent to the ternary relationship? At

first glance, the answer appears to be "yes." However, closer examination shows that the two

methods of representation are not exactly equal. If customer Fred buys two matching chairs

from salesperson Barney on November 15, 2020, that information can be captured in the

ternary relationship (PURCHASE in this case). In the three binary relationships we can capture

much, but not all, of the information. The fact that Fred bought items from Barney can be

captured in the BOUGHT_FROM relationship. The fact that Fred bought at least one chair can

be captured in the BOUGHT relationship. The fact that Barney sold chairs can be captured in the

SOLD relationship.

What is not clearly captured is that Barney sold the chairs to Fred. It is possible that Barney sold

something, say a table, to Fred. Barney sold chairs to someone, say Wilma. Fred bought the

chairs but not from Barney. Maybe he bought them from Betty. If you examine this closely, the set

of facts will generate the same information in the binary relationships as the sale in the last

paragraph did. Also, it would not be possible to capture the date of sale or quantity sold in the

same manner in three binary relationships. This should become even clearer in the next module

which discusses relational database design.
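
The loss of information can be checked with a small Python sketch. It takes the roles stated in the scenario (Fred and Wilma as customers, Barney and Betty as salespeople) and a set of purchases in which Fred did not buy chairs from Barney. The function names are hypothetical, and dates and quantities are left out to keep the focus on the three-way association.

```python
# Each purchase fact is a (customer, salesperson, product) triple.
purchases = {
    ("Fred", "Barney", "table"),
    ("Wilma", "Barney", "chair"),
    ("Fred", "Betty", "chair"),
}


def project_to_binary(triples):
    """Split ternary facts into the three binary relationships."""
    bought_from = {(c, s) for c, s, p in triples}
    bought = {(c, p) for c, s, p in triples}
    sold = {(s, p) for c, s, p in triples}
    return bought_from, bought, sold


def rejoin(bought_from, bought, sold):
    """Every triple that is consistent with all three binary relationships."""
    return {
        (c, s, p)
        for c, s in bought_from
        for c2, p in bought
        if c2 == c and (s, p) in sold
    }


bought_from, bought, sold = project_to_binary(purchases)
reconstructed = rejoin(bought_from, bought, sold)

# Triples the binary relationships suggest but that never happened.
spurious = reconstructed - purchases
```

Here spurious contains ("Fred", "Barney", "chair"): the binary relationships cannot distinguish this set of purchases from one in which Fred bought chairs from Barney.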

M 4.4: Recursive Relationships

In some cases, a single entity participates more than once in a relationship type. This happens

when the entity takes on two different roles in the relationship. The text refers to this as

a recursive relationship or self-referencing relationship. Looking at this further, this is a

relationship of degree one since there is only one entity involved in the relationship. A

relationship of degree one is known as a unary relationship. A cardinality can be assigned to

such a relationship depending on the number of entity instances that can participate in one role

and the number of entity instances that can participate in the other role.

As an example, consider the FACULTY entity. Assume that all department chairs are also faculty.

As with earlier assumptions, this is mostly true but not always. We will make it here for simplicity. If

dictated by the requirements, we can consider a SUPERVISES relationship where the chair

supervises the other faculty in the department. Since only one entity instance represents the

"chair side" of the relationship, but many entity instances represent the "other faculty in the

department" side of the relationship, the cardinality of the relationship is 1:N. This, then,

represents a 1:N unary relationship.

In a unary relationship, role names become important since we are trying to capture the role

played by the participating entity instances. We can define one role as chair, and the other

as regular faculty. There are probably better names for the roles (especially the second one).

Can you think of any?

This relationship will again not be added to the larger ER diagram. The ER diagram below shows

how this relationship would be captured. Note how the roles are depicted.

ER Diagram Representation of a Recursive Relationship

Figure 4.4: ER Diagram of Recursive Relationship
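
One way to picture a 1:N unary relationship is a collection in which each instance may refer to another instance of the same type. The Python sketch below is only an illustration: the faculty ids and names, the chair_id field, and the use of None for "no supervisor recorded" are all assumptions made for the example.

```python
# Hypothetical FACULTY instances. The chair_id value plays the "chair" role of
# SUPERVISES and refers to another instance of the same FACULTY entity type.
faculty = {
    "F1": {"name": "Ada", "chair_id": None},  # the chair (assumed: no supervisor recorded)
    "F2": {"name": "Bo", "chair_id": "F1"},
    "F3": {"name": "Cy", "chair_id": "F1"},
}


def supervised_by(chair_id):
    """Faculty ids playing the 'regular faculty' role for the given chair."""
    return sorted(fid for fid, row in faculty.items() if row["chair_id"] == chair_id)


supervisees_of_f1 = supervised_by("F1")
```

Each faculty instance holds at most one chair_id, while one chair can be referenced by many instances, which is the 1:N cardinality described above.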

M 4.5 Participation Constraints and

Existence Dependencies

In the last module, we looked at cardinality ratios (1:1, 1:N, and M:N). These

indicate the maximum number of entity instances which can participate in a particular

relationship. Is there a concern about the minimum number of instances which can (in this case

it would be must) participate?

The text discusses this concept by discussing participation constraints. The participation

constraint indicates whether in order to exist, an entity instance must be related to an instance

in another entity involved in the relationship. Since this constraint specifies

the minimum number of entities that can participate in the relationship, it is also called

the minimum cardinality constraint.

Participation constraints are split into two types - total and partial. Going back to the ER

diagram for the example database, FACULTY and DEPARTMENT were related by the

BELONGS_TO relationship. If there is a requirement that all faculty members must be assigned to a

department, then a faculty instance cannot exist unless it participates in at least one

relationship instance. This means that a faculty instance cannot be included unless it is related

to a department instance through a BELONGS_TO relationship instance.

This type of participation is called total participation, which means that every faculty

record must be related to a department record. Total participation is also called existence

dependency.

As a different example, consider that STUDENT and DEPARTMENT are related by the

MAJORS_IN relationship. If the requirement for students and majors specifies that a student is

not required to have a declared major until junior year, that means that not every student is

required to participate in the MAJORS_IN relationship at all times. This is an example of partial

participation, meaning that only some (part) of the students participate in the MAJORS_IN

relationship.
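
The difference between total and partial participation can be expressed as a simple check: under total participation, no entity instance may be missing from the relationship. The Python sketch below assumes relationship instances are stored as pairs. The non_participants helper and all ids are hypothetical.

```python
def non_participants(entity_ids, relationship_pairs, position):
    """Return the entity ids that appear in no relationship instance.

    position is the index (0 or 1) of this entity type within each pair.
    """
    participating = {pair[position] for pair in relationship_pairs}
    return set(entity_ids) - participating


faculty_ids = {"F1", "F2"}
belongs_to = {("F1", "D1"), ("F2", "D1")}  # (faculty, department)

student_ids = {"S1", "S2"}
majors_in = {("S1", "D1")}  # (student, department); S2 has not declared

# Total participation requires an empty result; partial participation does not.
faculty_missing = non_participants(faculty_ids, belongs_to, 0)
students_missing = non_participants(student_ids, majors_in, 0)
```

An empty faculty_missing set is consistent with total participation of FACULTY in BELONGS_TO. The undeclared student in students_missing is acceptable only because participation in MAJORS_IN is partial.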

The cardinality ratio and participation constraints taken together are known as the structural

constraints of a relationship. This is also called the (min, max) cardinality. This is further

discussed in the toggle at the end of this sub-module.

For ER diagrams, the text uses cardinality ratio/single-line/double-line notation. To keep the

example fairly simple, we will use Figure 4.1 as an example. This uses the cardinality ratio as we

did in Figure 4.1. In that figure, we also used a line (single-line) to connect entities and

attributes and to connect all entities and relationships. We will modify this to connect an entity

to a relationship in which it totally participates by using a double-line rather than a single line. If

the participation is partial, then we will leave the connection as a single line.

Figure 4.1: ER Diagram Showing Inclusion of M:N Relationship

This forces us to obtain additional information about relationships as the requirements are

gathered. Below is shown the ER diagram of Figure 4.1 modified to include double-line

connectors as required by the following assumptions.

As in the example above, for the BELONGS_TO relationship we will assume that all

faculty members must be assigned to a department. We will further assume that

every department must have at least one faculty member. This implies that there is

total participation in both directions. This means that there will be double-lines

connecting both entities to the relationship.

As in the second example above, we will assume that a student is not required to

declare a major until junior year. Since this indicates partial participation, there will

be only a single line connecting student to the MAJORS_IN relationship. We will

further assume that some departments may be "support departments" which do

not offer majors, so this is partial participation also. This means that there will be

single lines connecting both entities to the relationship.

To continue with the relationships, assume that each student must have an advisor,

but not all faculty advise students. So STUDENT totally participates in the ADVISED_BY

relationship and should be connected by a double line. Faculty, on the other hand,

only partially participates so will be connected by a single line.

For the OWNS relationship, we will assume that each department must own at least

one course and every course must be owned by a department. This again means

that there is total participation in both directions and there will be double-lines

connecting both entities to the relationship.

For the OFFERING_OF relationship, each section must be an offering of a

particular course, so there is total participation and a double-line in that direction.

To allow the possibility that a new course can be added without immediately being

scheduled, we will not require that every course will have a section associated with

it (although we assume that it will in the near future). This is then partial

participation and the connection is a single-line.

Finally, for the ENROLLED_IN relationship, we will allow for a student (probably a

new student) to be entered into the STUDENT entity if the student is not yet

enrolled in courses. This implies a partial participation in the relationship. Similarly,

we will allow a new section to be posted to the SECTION entity before any students

are enrolled. Again, this implies a partial participation in the relationship. Since both

sides have partial participation, the single lines will remain in place.

The revised ER diagram below shows participation constraints.

Figure 4.5: ER Diagram Showing Participation Constraints

An Example of (min, max) notation

We will start this example by again considering the BELONGS_TO relationship discussed above.

In the example, we assumed that all faculty members must be assigned to a department. We

will clarify that the faculty member can be assigned to only one department. We further

assumed that every department must have at least one faculty member. We will clarify that

most departments have several faculty members. Based on the assumptions, this gives

DEPARTMENT:FACULTY a cardinality ratio of 1:N. Since every faculty must be assigned to a

department, and every department must have at least one faculty member, this implies that

there is total participation in both directions. Total participation means that the minimum

cardinality must be at least one. Based on this, the (min, max) notation for the department side

of the relationship would be (1, N). The department must have at least one faculty member and

may have several faculty members. The (min, max) for the faculty side of the relationship is (1,

1). This indicates that the faculty member must be in at least one department and may be in at

most one department. This was represented in Figure 4.5 by using double lines to connect both

the FACULTY and DEPARTMENT entities to the BELONGS_TO relationship, indicating that the

minimum cardinality on both sides is one. In Figure 4.5, the 1 on the DEPARTMENT side

indicates that the maximum cardinality for FACULTY is one, while the N on the faculty side

indicates that the maximum cardinality for DEPARTMENT is N.

Using the (min, max) notation in an ER diagram is one of the many alternative notations

mentioned in the text. Using this notation for the BELONGS_TO relationship and the two

entities would look like the following. Note that the attributes are omitted to allow focus on the

notation.

Figure 4.6: ER Diagram Showing use of (min, max) Notation

The notation used in the text for total participation (the double line) indicates that the

minimum cardinality is one. What if the minimum cardinality is greater than one? This cannot

be directly represented by the text notation. If there is a requirement that each department

have at least three faculty assigned, that fact cannot be directly represented by the notation

used in the text (nor can it be represented in most notations). It can only represent a total or

partial participation - a minimum of zero or one. The (min, max) notation allows representation

of this. The (1, N) on the DEPARTMENT side would be replaced by (3, N), thereby indicating that

there must be at least three faculty in the department.
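
A (min, max) pair can be read as a bound on how many relationship instances each entity instance takes part in. The Python sketch below checks such bounds under that reading. The violates_min_max helper and the sample ids are assumptions for illustration, and passing None for the maximum stands for N (no upper limit).

```python
from collections import Counter


def violates_min_max(entity_ids, relationship_pairs, position, minimum, maximum=None):
    """Return the entity ids whose participation count is outside (minimum, maximum)."""
    counts = Counter(pair[position] for pair in relationship_pairs)
    bad = set()
    for entity_id in entity_ids:
        n = counts[entity_id]  # Counter gives 0 for ids that never participate
        if n < minimum or (maximum is not None and n > maximum):
            bad.add(entity_id)
    return bad


# BELONGS_TO instances as (faculty, department) pairs; all ids are invented.
belongs_to = {("F1", "D1"), ("F2", "D1"), ("F3", "D1"), ("F4", "D2")}
departments = {"D1", "D2"}
faculty = {"F1", "F2", "F3", "F4"}

# (3, N) on the DEPARTMENT side: each department needs at least three faculty.
dept_violations = violates_min_max(departments, belongs_to, 1, 3)
# (1, 1) on the FACULTY side: each faculty member is in exactly one department.
faculty_violations = violates_min_max(faculty, belongs_to, 0, 1, 1)
```

With this data, department D2 has only one faculty member and so fails the (3, N) requirement, while every faculty member satisfies (1, 1).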

A note is in order here about which side of the relationship should be used to place the various

values. This is not consistent across various notations. Back in the 1990s, the terms Look

Across and Look Here were introduced to indicate where the cardinality and participation

constraints were placed in the various notations. Looking again at the BELONGS_TO

relationship in Figure 4.5, the notation followed in the text uses Look Across for the cardinality

constraints. A faculty member can work for only one department. A department can have

many faculty. So the 1 for faculty is placed across the relationship diamond, on the

DEPARTMENT side, while the N for the department is placed across the relationship, on the

FACULTY side. Different notations use Look Here and would reverse the placement.

The text then switches to Look Here for the participation constraints. BELONGS_TO is not a good

relationship to demonstrate this since the minimum cardinality on both sides of the

relationship is one, so both entities are connected by double lines to the relationship. Looking

at the ADVISED_BY relationship, we see that participation is partial on one side and total on the

other side. Since a student must have an advisor, the STUDENT entity is connected by a double

line to the ADVISED_BY relationship. Since the double line is on the STUDENT side, that is a

Look Here notation. Similarly, some faculty do not have advisees, so that is partial participation

(minimum cardinality of zero). This means that the FACULTY entity is connected to the

relationship by a single line. Since this line is placed on the FACULTY side, it again shows the

Look Here guidelines of the notation.

Returning to Figure 4.6, the text uses Look Here when illustrating the (min, max) notation. A

faculty belongs to at least one and at most one department, so Look Here places the (1, 1) on

the FACULTY side. A department has at least one, but possibly many faculty, so Look Here

places the (1, N) on the DEPARTMENT side. As the text indicates, other conventions use Look

Across when using (min, max) notation.

To avoid confusion, we will stick with the primary notation used by the text. This is used in

Figure 4.5. Just be aware that if you are involved with creating or reading ER diagrams in the

future, be sure to check to see what notation is being used since it might be different from

what we are using here.

M 4.6: Design Choices, Topics Not

Covered, and Additional Notes

In section 3.7.3, the text covers design choices for ER conceptual design. It is sometimes

difficult to decide how to capture a particular concept in the miniworld. Should it be modeled

as an entity type, an attribute, a relationship type, or something else? Some general guidelines

are given in the text. It is important to again note that conceptual schema design overall, and

the production of an ER diagram should be thought of as an iterative process. An initial design

should be created and then refined until the most appropriate design is obtained. The text

suggests the following refinements.

In many cases, a concept is first modeled as an attribute and then refined into a

relationship when it is determined that the attribute is a reference to another entity

type. An example of this is looking at the attribute "Advisor" for the STUDENT entity

in sub-module 3.3. This attribute is a reference to the FACULTY entity type and is

captured as the ADVISED_BY relationship type in sub-module 3.4. The text then

indicates that in the notation used in the text, once an attribute is replaced by a

relationship, the attribute itself should be removed from the entity type to avoid

duplication and redundancy. This removal was done in the "complete" ER diagram

shown in Figure 3.2 in the text. However, this type of attribute was included in later

figures (e.g. Figure 3.8) which show the development of an ER diagram and would be

produced earlier in the design process. In the actual development process, Figure

3.2 would be produced by the end of the iterative process. The text shows it at the

beginning of the chapter so it can refer back to it during the chapter. We have not

removed those attributes in the diagrams so far produced. Many authors will not

remove those attributes, depending on the ER diagram notation being used. They

are removed in the diagram in the next module when the diagram is being used as

an example for illustrating the steps of an algorithm for mapping an ER diagram to a

relational model.

Sometimes an attribute is captured in several entity types. This occurred in the

example where Department Number was captured as an attribute in the FACULTY,

COURSE, and STUDENT (as Major) entity types. This is an attribute which

should be considered for "promotion" to its own entity type. Note that in the

example, DEPARTMENT had already been captured as its own entity type, so this

type of consideration did not apply to the example. If it had not already been

captured as an entity type, such a promotion would be considered based on this

guideline.

The reverse refinement can be considered in other cases. For example, suppose

DEPARTMENT was identified in the initial design and the only attribute identified

was Department_code. Further, assume that this was only identified as needed as

an attribute on one other entity type, say FACULTY. It should then be considered to

"demote" DEPARTMENT to an attribute of FACULTY.

In order to avoid additional complexity, a few topics that could be covered in this module are

intentionally not discussed in this course. Some are important in designing certain aspects of

very large databases. Others can be included in the design at the conceptual (ER model) level,

but will be "modified out" when the design is used to create a relational model. The intent here

is to develop a good understanding of the basics. If you are working on a design, possibly even

something you work on for a portion of your final project, and want to represent something

that just doesn't seem to fit into what we have covered here, please come back to the text and

explore the items we omitted.

Some specifics are:

Multivalued and composite attributes are not allowed in a relational schema. It is

usually better to modify the conceptual design to not include them.

Weak entity types (Section 3.5 in the text) can be handled by key selection in the

relational schema. We will handle them the same way as we handled other entity

types and relationships.

Whether or not an attribute will be derived is a design choice better made when

looking at the DBMS being used. This will be specified like other attributes in the ER

diagram.

It should again be pointed out that there are several alternate notations for drawing ER

diagrams. Some of the alternate notations are shown in Appendix A of the text. Most have both

good and not-so-good features. Often, it is what you become accustomed to using or what your

company has decided to use. It is not important in this course that you learn them all. You just

need to be aware that alternatives do exist.

We are not going to discuss (or use) UML Class Diagrams in this course. They are presented in

Section 3.8 in the text. You are likely already familiar with them and have seen a more detailed

presentation in earlier courses.

M 5.1: The Relational Database Model

and Relational Database Constraints

The relational data model was first introduced in 1970 in a (now) classic paper by Ted Codd

of IBM. The idea was appealing due to its simplicity and its underlying mathematical

foundation. It is based on the concept of a mathematical relation which, in simple terms, is a

table of values. The computers at that time did not have sufficient processing power to make

DBMSs built on this concept commercially viable due to the slow response time. Two research

relational DBMSs were built in the mid-1970s and became well known at the time: System R at

IBM and Ingres at the University of California Berkeley. During the 1980s, computer processing

power had improved to the point where relational DBMSs became feasible for many

commercial database applications and several commercial products were released, most

notably DB2 from IBM (based on System R), a commercial version of Ingres (which was faulted

for its poor user interface), and Oracle (from a new company named Oracle). These products

were well received, but at their initial release still could not be used for very large databases,

which required more processing power than was then available to run effectively on a relational DBMS. As

computer processing power kept improving, by the 1990s, most commercial database

applications were built on a relational DBMS.

Today, major RDBMSs include DB2 and Oracle as well as Sybase (from SAP) and SQL Server and

Microsoft Access (both from Microsoft). Open source systems are also available such as MySQL

and PostgreSQL.

The mathematical foundations for the relational model include the relational algebra which is

the basis for many implementations of query processing and optimization. The relational

calculus is the basis for SQL. Also included in the foundations are aspects of set theory as well

as the concepts of AND, OR, NOT and other aspects of predicate logic. This topic is covered in

much greater detail in Chapter 8 of the text. Please read this chapter if you are interested in

this topic, but it will not be covered in this introductory course.

M 5.1.1: Concepts and Terminology

The relational model represents the database as a collection of relations. A relation is similar to

a table of values, or a flat file of records. It is called a flat file since each record has a simple flat

structure. A relation and a flat file are similar, but there are some key differences which will be

discussed shortly. Note that a relational database will look similar to the example database

from Module 1.

When looking at a relation as a table of values, each row in the table represents a set of related

values. This is called a tuple in relational terminology, but in common practice, the formal term

tuple and the informal term row are used interchangeably.

The table name and the column names should be chosen to provide, or at least suggest, the

meaning of the values in each row. All values in a given column have the same data type.

Continuing with the more formal relational terminology, a column is called an attribute and

a table is called a relation. Here also, in common practice the formal and informal terms are

used interchangeably.

The data type which indicates the types of values which are allowed in each column is called

the domain of possible values.

More details and additional concepts are discussed below.

Domains

A domain defines the set of possible values for an attribute. A domain is a set of atomic

values. Atomic means that each value in the domain cannot be further divided as far as the

relational model is concerned. Part of this definition does rely on design issues. For example, a

domain of names which are to be represented as full names can be considered to be atomic in

one design because there is no need to further subdivide the name for this design. Another

design might require the first name and last name to be stored as separate items. In this design,

the first name would be atomic and the last name would be atomic, but the full name would

not be atomic since it is further divided in this design.

Each domain should be given a (domain) name, a data type, and a format. One example would

be phone_number as a domain name. The data type can be specified as a character string. The

format can be specified as ddd-ddd-dddd, where "d" represents a decimal digit. Note that there

are some further restrictions (based on phone company standards) which are placed on both

the first three digits (known as the area code) and the second set of three digits (known as the

exchange). These can be completely specified to reduce the chance of a typo-like error, but this

is usually not done.

Another example would be item_weight as a domain name. The data type could be an integer,

say from 0 to 500. It could also be a float from 0.0 to 500.0 if fractions are important, especially

at lower weights. An additional format specification would not be needed in this case.

However, in this case a unit of weight (pounds or kilograms) should be specified.

It is possible for several attributes in a relation to have the same domain. For example, many

database applications require a phone number to be stored. This can be assigned

the phone_number domain given above. In addition, it is becoming common for databases to

store home phone, work phone, and cell phone. In this case there would be three attributes. All

would use the same phone_number domain.
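
A domain can be thought of as a membership test: a value either belongs to the domain or it does not. The Python sketch below expresses the two example domains this way. The function names are hypothetical, the phone check covers only the ddd-ddd-dddd format (not the area code or exchange restrictions), and the integer version of item_weight is assumed.

```python
import re

# ddd-ddd-dddd, where each d is a decimal digit.
PHONE_FORMAT = re.compile(r"[0-9]{3}-[0-9]{3}-[0-9]{4}")


def in_phone_number_domain(value):
    """True if value is a character string in the ddd-ddd-dddd format."""
    return isinstance(value, str) and PHONE_FORMAT.fullmatch(value) is not None


def in_item_weight_domain(value):
    """True if value is an integer from 0 to 500 (the unit is fixed elsewhere)."""
    # bool is a subclass of int in Python, so it is excluded explicitly.
    return isinstance(value, int) and not isinstance(value, bool) and 0 <= value <= 500


# Three attributes can share one domain: the same check applies to each.
customer = {"home_phone": "717-555-0101", "work_phone": "717-555-0102", "cell_phone": "7175550103"}
phone_checks = {name: in_phone_number_domain(number) for name, number in customer.items()}
```

In this sample, the cell phone value fails the check because it lacks the hyphens required by the format.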

Relation Schema

If we skip the mathematical nomenclature, a relation schema consists of a relation name and a

list of attributes. Each attribute names a role played by some domain in the relation schema.

The degree (also known as arity) of a relation is the number of attributes in the relation. If a

relation is made up of five attributes, the relation is said to be a relation of degree five.

Relation State

The relation state (sometimes just relation) of a relation schema is defined by the set of rows

currently in a relation. If the degree of the relation is n, each row consists of an n-tuple of

values. Each value in the n-tuple is a member of the domain of the attribute which matches the

position of the value in the tuple. The exception is that a tuple value may contain the value

NULL, which is a special value. Sometimes, the schema is known as the relation intension and

state is known as the relation extension.
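
The distinction between schema and state can be sketched in Python as an attribute list (the intension) and a set of rows (the extension). The attribute names below are those of the COURSE relation in the Module 1 example database. The use of Python types as stand-ins for domains, the is_valid_tuple helper, and the second row are assumptions made for illustration, with None standing in for NULL.

```python
# Relation schema (intension): a relation name and an ordered list of attributes.
COURSE_NAME = "COURSE"
COURSE_ATTRIBUTES = [
    ("Number", str),
    ("Name", str),
    ("Credit_hours", int),
    ("Department", str),
]


def degree(attributes):
    """The degree (arity) is the number of attributes."""
    return len(attributes)


def is_valid_tuple(attributes, row):
    """True if row is an n-tuple whose values are NULL or in the matching domain."""
    if len(row) != len(attributes):
        return False
    return all(
        value is None or isinstance(value, py_type)
        for (_, py_type), value in zip(attributes, row)
    )


# Relation state (extension): the set of rows currently in the relation.
course_state = {
    ("CMPSC121", "Introduction to Programming", 3, "CMPSC"),
    ("MATH140", None, 4, "MATH"),  # invented row with a NULL Name
}
```

The schema changes rarely, while the state changes whenever rows are added, removed, or updated.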

The value NULL is assigned to a value in a tuple in three different cases. First, it is used when a

value does not apply. For example, many databases include information about a

customer's company in their sales record. Assume the database keeps track of both corporate

and individual customers. If the sale is personal (to an individual) and not on behalf of the

company, all information related to the customer's company would be given the value of NULL

in that record. A second case where NULL is used is when a value does not exist. An example is a

customer home phone. While the value does apply in general, many people no longer have land

line phones at home. If a customer does not have a home phone, that value would be stored as

NULL in the record. Finally, NULL is also used when it is unknown whether a value exists.

Consider again the phone example above. In the above example we knew that the customer did

not have a home phone. There is also a case where we don't know whether or not the

customer has a home phone. If the customer has a home phone, we do not know the number.

We would need to record a NULL value in this case also.

M 5.1.2: Characteristics of Relations

A relation can be viewed as similar to a file or a table. There are similarities, but there are

differences as well. Some of the characteristics which make a relation different are discussed

below.

Ordering of Tuples in a Relation

A relation is defined as a set of tuples. In mathematics, there is no order to elements of a set.

Thus, tuples in a relation are not ordered. This means that in a relation, the tuples may be in

any order - one ordering is as good as any other. There is no preference for any particular

order.

In a file, on the other hand, records are stored physically (on a disk for example), so there is

going to be an ordering of the records which indicates first, second, and so on until the last

record. Similarly, when we display a relation in a table, there is an order in which the rows are

displayed: first, second, etc. This is just one particular display of the relation and the next

display of the same relation may show the table with the tuples in a different order.

However, there is a restriction on relations that no two tuples may be identical. This is

different from a file, where duplicate records may exist. In many file uses, there are checks to

make sure that duplicate records do not exist, but the nature of a file does not place

restrictions on duplicate records. In a relational DBMS, duplicate tuples will not be allowed. No

additional software checks will need to be performed.
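
The set view of a relation can be sketched in Python, whose built-in set type has the same two properties: no ordering and no duplicates. The MATH140 course below is a made-up example, and the list named records is only a stand-in for a file of records.

```python
# A relation modelled as a set of tuples (Number, Name, Credit_hours).
course_a = {
    ("CMPSC121", "Introduction to Programming", 3),
    ("MATH140", "Calculus I", 4),  # hypothetical course
}

# The same tuples written in a different order, with one repeated.
course_b = {
    ("MATH140", "Calculus I", 4),
    ("CMPSC121", "Introduction to Programming", 3),
    ("MATH140", "Calculus I", 4),
}

same_relation = course_a == course_b  # ordering plays no role
tuple_count = len(course_b)           # the repeated tuple is stored once

# A file-like list, by contrast, keeps both the order and the duplicate.
records = [("MATH140", "Calculus I", 4), ("MATH140", "Calculus I", 4)]
record_count = len(records)
```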

Ordering of Values within a Tuple

The ordering of values in a tuple is important. The first value in a tuple indicates the value for

the first attribute, the second value in a tuple indicates the value for the second attribute, etc.

There is an alternative definition where each tuple is viewed as a set of ordered pairs, where

each pair is represented as (<attribute>, <value>). This provides self-describing data, since the

description of each value is included in the tuple. We will not use the alternative definition in

this course.
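
The two definitions can be compared in a short Python sketch. The attribute names and values come from the COURSE example used in this module; the variable names are illustrative only.

```python
attributes = ("Number", "Name", "Credit_hours", "Department")

# Definition used in this course: meaning depends on position.
positional = ("CMPSC121", "Introduction to Programming", 3, "CMPSC")

# Alternative definition: a set of (attribute, value) pairs.
self_describing = frozenset(zip(attributes, positional))

# Build the same pairs starting from the last attribute instead of the first.
reordered = frozenset(zip(reversed(attributes), reversed(positional)))

pairs_equal = self_describing == reordered                # order is irrelevant
tuples_equal = positional == tuple(reversed(positional))  # order matters
```

With the positional form, reversing the values produces a different tuple. With the pair form, the order in which the pairs are listed makes no difference.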

Values in the Tuples

Each value in a tuple must be an atomic value. This means that it cannot be subdivided within

the current relational design. Therefore, composite and multivalued attributes are not allowed.

This is sometimes called the flat relational model. This is one of the assumptions behind the

relational model. We will see this concept in more detail when we discuss normalization in a

later module. Multivalued attributes will be represented by using separate relations. In the

strictly relational model, composite attributes are represented by their simple component

attributes. Some extensions of the strictly relational model, such as the object-relational model,

allow complex-structured attributes. We will discuss this in later modules. For the next several

modules, we will assume that all attributes must be atomic.
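
As a minimal sketch of what "flat" means, the Python below starts from nested data with a composite Name and a multivalued Phones attribute, then produces two relations in which every value is atomic. The student data and attribute names are hypothetical.

```python
# Nested, non-flat data: Name is composite and Phones is multivalued.
students = [
    {"Id": 1, "Name": {"First": "Ann", "Last": "Lee"},
     "Phones": ["555-0100", "555-0101"]},
    {"Id": 2, "Name": {"First": "Bob", "Last": "Ray"}, "Phones": []},
]

# The composite attribute is replaced by its simple components.
student_rel = {
    (s["Id"], s["Name"]["First"], s["Name"]["Last"]) for s in students
}

# The multivalued attribute moves to a separate relation, one tuple per value.
student_phone_rel = {(s["Id"], p) for s in students for p in s["Phones"]}
```

A student with no phone simply has no tuples in the second relation.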

Interpretation (Meaning) of a Relation

A relation schema can be viewed as a type of assertion. As an example, consider the example

database presented in Module 1. The COURSE relation asserts that a course entity has Number,

Name, Credit_hours, and Department. Each tuple can be viewed as a fact, or an instance of the

assertion. For example, there is a COURSE with Number CMPSC121, Name "Introduction to

Programming", Credit_hours 3, and Department "CMPSC".

Some relations, such as COURSE, represent facts about entities. Other relations represent facts

about relationships, such as the TRANSCRIPT relation in the example database. The relational

model represents facts about both entities and relationships as relations. In the next major

section of this module, we will examine how different constructs in an ER diagram are

converted into relations.

M 5.1.3: Relational Model Constraints

There are usually many restrictions or constraints that should be put on the values stored in a

database. The constraints are directed by the requirements representing the miniworld that the

database is to represent. This sub-module discusses the restrictions that can be specified for a

relational database. The constraints can be divided into three main categories.

1. Constraints from the data model, called inherent constraints. These are model-based.

2. Constraints which are schema-based or explicit constraints. These are expressed in

the schemas of the data model, usually by specifying them in the DDL.

3. Constraints which cannot be expressed in either #1 or #2. They must be expressed in

a different way, often by the application programs. These are called semantic

constraints or business rules.

Constraints of type #1 are driven by the relational model itself and will not be further discussed

here. An example of a constraint of this type would be the fact that no relation can have two

identical tuples. Constraints of type #3 are often difficult to express and enforce within the data

model. They relate to the meaning and behavior of the attributes. These constraints are often

enforced by application programs that update the database. In some cases, this type of

constraint can be handled by assertions in SQL. We will discuss these in a future module.

An additional category of constraints is called data dependencies. These include functional

dependencies and multivalued dependencies. This category focuses on the quality of the

relational database design. Database normalization uses these. We will discuss normalization in

a later module.

The type #2 constraints, the schema-based constraints, are listed and discussed below.

Domain Constraints

Domain constraints indicate the requirement for the value of a particular attribute in each tuple

of the relation by specifying the domain for the attribute. In an earlier sub-module, we

discussed how domains are specified. Some data types associated with domains are the standard

data types for integers and real numbers, characters, Booleans, and both fixed-length and

variable-length strings. Other special data types are available. These include date, time,

timestamp, and others. This is further discussed in the next module.

It is also possible to further restrict data types, for example by taking an int data type and

restricting the range of integers which are allowed. It is also possible to provide a list of values

which are allowed in the attribute.
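
One way such restrictions might be written is with CHECK clauses in the DDL. The sketch below uses Python's built-in sqlite3 module. The table, the "level" attribute, the allowed range, and the allowed list of values are all assumptions chosen for illustration, and other DBMSs may express domains differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE course (
        number       TEXT,
        credit_hours INTEGER CHECK (credit_hours BETWEEN 1 AND 6),
        level        TEXT    CHECK (level IN ('UG', 'GR'))
    )
""")
conn.execute("INSERT INTO course VALUES ('CMPSC121', 3, 'UG')")  # within the domain

rejected = []
for row in [("X1", 12, "UG"),    # credit_hours outside the allowed range
            ("X2", 3, "PhD")]:   # level not in the list of allowed values
    try:
        conn.execute("INSERT INTO course VALUES (?, ?, ?)", row)
    except sqlite3.IntegrityError:
        rejected.append(row[0])

stored = conn.execute("SELECT COUNT(*) FROM course").fetchone()[0]
```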

Key Constraints and Constraints on NULL Values

We indicated above that in the definition of a relation, no two tuples may have the exact same

values for all their elements. It is often the case that many subsets of attributes will also have

the property that no two tuples in the relation will have the exact same values for the subset of

attributes. Any such set of attributes is called a superkey of the relation. A superkey specifies a

uniqueness constraint in that no two tuples in the relation will have the exact same values for

the superkey. Note that at least one superkey must exist; it is the subset which consists of all

attributes. This subset must be a superkey by the definition of a relation.

A superkey may have redundant attributes - attributes which are not needed to ensure the

uniqueness of the tuple. A key of a relation is a superkey where the removal of any attribute

from the set will result in a set of attributes which is no longer a superkey. More specifically, a

key will have the properties:

1. Two different tuples cannot have identical values for all attributes in the key. This is

the uniqueness property.

2. A key must be a minimal superkey. That means that it must be a superkey which

cannot have any attribute removed and still have the uniqueness property hold.

Note that this implies that a superkey may or may not be a key, but a key must be a superkey.

Also note that a key must satisfy the property that it is guaranteed to be unique as new tuples

are added to the relation.
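
The two properties can be checked against a particular set of tuples with a short Python sketch. The function names and the STUDENT data are hypothetical. Keep in mind that a key is a property of the schema: a check like this on one relation state can show that a set of attributes is not a key, but it cannot prove that uniqueness will hold for every tuple added later.

```python
from itertools import combinations

def is_superkey(rows, attrs):
    """True if no two rows agree on every attribute in attrs."""
    seen = {tuple(row[a] for a in attrs) for row in rows}
    return len(seen) == len(rows)

def is_key(rows, attrs):
    """True if attrs is a superkey and dropping any one attribute breaks uniqueness."""
    if not is_superkey(rows, attrs):
        return False
    return not any(
        is_superkey(rows, subset)
        for subset in combinations(attrs, len(attrs) - 1)
    )

# Hypothetical STUDENT tuples, for illustration only.
students = [
    {"Id": 1, "Email": "ann@example.edu", "Name": "Ann", "Major": "CMPSC"},
    {"Id": 2, "Email": "bob@example.edu", "Name": "Bob", "Major": "MATH"},
    {"Id": 3, "Email": "ann2@example.edu", "Name": "Ann", "Major": "MATH"},
]

results = {
    "all attributes": is_superkey(students, ("Id", "Email", "Name", "Major")),
    "Id+Name superkey": is_superkey(students, ("Id", "Name")),
    "Id+Name key": is_key(students, ("Id", "Name")),    # Name is redundant
    "Id key": is_key(students, ("Id",)),
    "Name superkey": is_superkey(students, ("Name",)),  # two students named Ann
}
```

Checking only the subsets with one attribute removed is enough, because any superset of a superkey is itself a superkey.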

In many cases, a relation schema may have more than one key. Each of the keys is called

a candidate key. One of the candidate keys is then selected to be the primary key of the

relation. The primary key will be underlined in the relation schema. If there are multiple

candidate keys, the choice of the primary key is somewhat arbitrary. Normally, a candidate key

with a single attribute, or only a small number of attributes, is chosen to be the primary key. The

other candidate keys are often called either unique keys or alternate keys.

One additional constraint that is applied to attributes specifies whether or not NULL values are

permitted for the attribute. Considering the example database from Module 1, if every student

must have a declared major, then the MAJOR attribute is constrained to be NOT NULL. If it is

acceptable for a student to at times not have a declared major, then the attribute is not so

constrained.
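
As a hedged sketch, the constraints in this subsection might be declared as follows using Python's built-in sqlite3 module. The Email attribute and the sample students are assumptions added for illustration; the example database itself may be defined differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE student (
        id    INTEGER PRIMARY KEY,  -- the candidate key chosen as primary key
        email TEXT UNIQUE,          -- another candidate key (alternate key)
        name  TEXT,
        major TEXT NOT NULL         -- every student must have a declared major
    )
""")
conn.execute("INSERT INTO student VALUES (1, 'ann@example.edu', 'Ann', 'CMPSC')")

def try_insert(row):
    """Return True if the row is accepted, False if the DBMS rejects it."""
    try:
        conn.execute("INSERT INTO student VALUES (?, ?, ?, ?)", row)
        return True
    except sqlite3.IntegrityError:
        return False

dup_primary = try_insert((1, "bob@example.edu", "Bob", "MATH"))   # id already used
dup_email = try_insert((2, "ann@example.edu", "Bob", "MATH"))     # email already used
null_major = try_insert((3, "cara@example.edu", "Cara", None))    # major is NULL
valid = try_insert((4, "dan@example.edu", "Dan", "MATH"))
```

If students were allowed to be undeclared, the NOT NULL clause on major would simply be omitted.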

Entity Integrity, Referential Integrity, and Foreign Keys

The entity integrity constraint requires that no tuple may have a NULL value in any attribute

which makes up the primary key. This follows from the fact that the primary key is used to

uniquely identify tuples in a relation.

Both entity integrity constraints and key constraints deal with a single relation. On the other

hand, referential integrity constraints are used between two relations. They are used to

maintain consistency between the tuples of the two relations. A referential integrity constraint

indicates that a tuple in one of the relations refers to a tuple in a second relation. Further, it

must refer to an existing tuple in the second relation. For example, in the example database,

the MAJOR attribute in the STUDENT relation refers to and must match the value in some tuple

in the CODE attribute of the DEPARTMENT relation.

More specifically, we need to define the concept of a foreign key. A set of attributes in Relation

One is a foreign key that references Relation Two if it satisfies:

1. The attributes of the foreign key in Relation One have the same domains as the

primary key attributes in Relation Two. The foreign key is said to reference Relation

Two.

2. The value of the foreign key in a tuple of Relation One must either occur as a value

of a primary key in Relation Two or must be NULL.

Note that it is possible for a foreign key to refer to the primary key in its own relation. This can

occur in a unary or recursive relationship.
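
The MAJOR and CODE example can be sketched with Python's built-in sqlite3 module. The remaining columns and the sample rows are assumptions for illustration. Note that SQLite only enforces foreign keys once the foreign_keys pragma is turned on for the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # enforcement is off by default in SQLite
conn.execute("CREATE TABLE department (code TEXT PRIMARY KEY, name TEXT)")
conn.execute("""
    CREATE TABLE student (
        id    INTEGER PRIMARY KEY,
        name  TEXT,
        major TEXT REFERENCES department(code)  -- foreign key
    )
""")
conn.execute("INSERT INTO department VALUES ('CMPSC', 'Computer Science')")

conn.execute("INSERT INTO student VALUES (1, 'Ann', 'CMPSC')")  # matches a department
conn.execute("INSERT INTO student VALUES (2, 'Bob', NULL)")     # NULL is permitted

try:
    conn.execute("INSERT INTO student VALUES (3, 'Cara', 'XYZ')")  # no such department
    dangling_rejected = False
except sqlite3.IntegrityError:
    dangling_rejected = True

student_count = conn.execute("SELECT COUNT(*) FROM student").fetchone()[0]
```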

Integrity constraints should be indicated on the relational database schema. Many of these

constraints can be specified in the DDL and automatically enforced by the DBMS.

M 5.1.4: Update Operations and Dealing with Constraint Violations

Operations applied to an RDBMS can be split into retrievals and updates. Queries are used to

retrieve information from a database. Retrievals will be discussed later. Retrieving information

does not violate any integrity constraints. There are three types of update operation:

1. Insert (sometimes referred to as create) is used to add a new tuple to the database.

2. Update (sometimes referred to as modify) is used to change the value of one or

more attributes in an existing tuple.

3. Delete is used to remove a tuple from the database.

Each of the three update operations can potentially violate integrity constraints as indicated

below. How the potential violations can be handled is also discussed.

Insert Operation

The insert operation provides a list of attribute values for a new tuple to be added to a relation.

This operation can violate:

1. Domain constraints if a value is supplied for an attribute which is not of the correct

data type or does not adhere to valid values in the domain.

2. Key constraints if the key value for the new tuple already exists in another tuple in

the relation.

3. Entity integrity if any part of the primary key of the new tuple contains the NULL

value.

4. Referential integrity if the value of any foreign key in the new tuple refers to a tuple

which does not exist in the referenced relation.

In most cases, when an insert operation violates any of the above constraints, the insert is

rejected. There are other options, but they are not commonly used.
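
The four checks and the "reject" policy can be sketched in plain Python. This is only an illustration of the logic, not how a DBMS implements it. The simplified STUDENT tuples (Id and Major only), the function names, and the assumption that Id must be an integer are all hypothetical.

```python
def insert_violations(new, students, dept_codes):
    """Return the constraints a proposed STUDENT tuple would violate."""
    problems = []
    if new["Id"] is None:
        problems.append("entity integrity")   # NULL in the primary key
    elif not isinstance(new["Id"], int):
        problems.append("domain")             # wrong data type for Id
    elif any(s["Id"] == new["Id"] for s in students):
        problems.append("key")                # key value already exists
    if new["Major"] is not None and new["Major"] not in dept_codes:
        problems.append("referential integrity")  # no such department
    return problems

def insert(new, students, dept_codes):
    """Add the tuple only if nothing is violated; otherwise reject it."""
    problems = insert_violations(new, students, dept_codes)
    if not problems:
        students.append(new)
    return problems

students = [{"Id": 1, "Major": "CMPSC"}]
dept_codes = {"CMPSC", "MATH"}

ok = insert({"Id": 2, "Major": "MATH"}, students, dept_codes)
dup = insert({"Id": 1, "Major": "MATH"}, students, dept_codes)
null_pk = insert({"Id": None, "Major": "MATH"}, students, dept_codes)
bad_type = insert({"Id": "abc", "Major": "MATH"}, students, dept_codes)
dangling = insert({"Id": 3, "Major": "XYZ"}, students, dept_codes)
```

Only the first tuple is added; each of the others is rejected for the reason reported.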

Delete Operation

The delete operation indicates which tuple should be deleted. This operation can only violate referential

integrity.

This violation happens when the tuple being deleted is referenced by foreign keys in other tuples

in the database. When deleting a tuple would result in a referential integrity violation, there are

three main options available:

1. Restrict - reject the deletion.

2. Cascade - try to propagate the deletion by deleting not only the tuple specified in