CCNP Wireless (642-742 IUWVN) Quick Reference

Jerome Henry

ciscopress.com

Table of Contents

Chapter 1 QoS for Wireless Applications
Chapter 2 VoWLAN Architecture
Chapter 3 VoWLAN Implementation
Chapter 4 Multicast over Wireless
Chapter 5 Video and High-Bandwidth Applications over Wireless


About the Author

Jerome Henry is a technical leader at Fast Lane. Jerome has more than 10 years of experience teaching technical Cisco courses in more than 15 countries and in 4 different languages, to audiences ranging from bachelor's degree students, to networking professionals, to Cisco internal system engineers. Jerome joined Fast Lane in 2006. Before this, he consulted and taught Heterogeneous Networks and Wireless Integration with the European team of Airespace, a company later acquired by Cisco whose products became Cisco's main wireless solution. He is a Certified Wireless Networking Expert (CWNE #45), CCIE Wireless (#24750), and CCNP Wireless, and has developed several Cisco courses focusing on wireless topics, including CUWSS, IAUWS, IUWNE, IUWMS, IUWVN, CWLBS, and the CWMN lab guide. With more than 20 IT industry certifications and more than 10,000 hours in the classroom, Jerome was awarded the IT Training Award Best Instructor silver medal in 2009. He is based in Cary, North Carolina.

About the Technical Reviewer

Denise Papier is a senior technical instructor at Fast Lane. Denise has more than 11 years of experience teaching technical Cisco courses in more than 15 countries, to audiences ranging from bachelor's degree students to networking professionals and Cisco internal system engineers. Focusing on her wireless experience, Denise joined Fast Lane in 2004. Before that, she taught the Cisco Academy Program and lectured on BSc (Hons) Information Security at various universities. She holds the CCNP Wireless certification and has developed several Cisco courses focusing on wireless topics (IUWNE, IAUWS, ACS, ISE, lab guides, and so on). She has more than 15 IT industry certifications, ranging from Cisco CCNP R&S and CCIP to Microsoft Certified System Engineer and Security Specialist, and CICSP (Cisco IronPort Certified Security Professional). She also has more than 5000 hours in the classroom and is a fellow member of the Learning and Performance Institute (LPI). She is based in the United Kingdom.

© 2012 Cisco Systems, Inc. All rights reserved. This publication is protected by copyright. Please see page 139 for more details.


Chapter 1

QoS for Wireless Applications

Quality of service (QoS) for wireless applications is an important topic. If it is misunderstood, it can lead to structural mistakes in wireless network deployments and to poor quality when QoS-dependent devices (for example, VoIP phones) are added. Expect to be tested extensively on this topic on the IUWVN exam. Make sure you understand both the concepts and their related configuration.

QoS Concepts

QoS is described as a tool for network convergence (that is, the efficient coexistence of VoIP, video, and data in the same network). QoS does not replace bandwidth. Instead, it ensures that all traffic gets the best treatment for its type in times of network congestion. QoS can be implemented in three different ways:

■ Best effort: This is basically “no implementation.” All traffic is treated the same way. When buffers are full, additional frames are dropped, regardless of what type of traffic they carry.

■ Integrated services (IntServ), also called “hard” QoS: Out-of-band control messages are used to check and reserve end-to-end bandwidth before packets are sent into the network. Resource Reservation Protocol (RSVP) and H.323 are both examples of IntServ QoS methods. Each node on the path must support IntServ, which is difficult to achieve in large IP networks.

■ Differentiated services (DiffServ), the most common method: Each type of traffic receives an importance value represented by a number. Each node on the path independently implements one or several prioritization techniques based on that number.


DiffServ is the method used in most IP networks and can be implemented with different techniques, as detailed in the following sections. You can use each alone or in combination with others.

Classification and Marking

The first step in a QoS approach is to identify the different types of traffic that traverse the network and classify them into categories, such as:

■ Internetwork control traffic: Control and Provisioning of Wireless Access Points (CAPWAP) Protocol messages or Enhanced Interior Gateway Routing Protocol (EIGRP) updates that must be transmitted for the network to function

■ Critical traffic: VoIP that cannot be delayed without impacting the call quality

■ Standard data traffic: Email, web browsing, and so on

■ Scavenger traffic: Traffic that is accepted but receives the lowest priority, such as peer-to-peer file downloads

There are several ways to classify traffic:

■ Deep packet inspection, which looks at the packet content at Layer 7 (for example, Cisco Network-Based Application Recognition [NBAR], an IOS function that can recognize applications)

■ Access control lists (ACL) based on the incoming interface, or on source or destination ports or addresses

■ Many other techniques

Once each traffic type is identified, mark each packet with a number that shows its priority value. This marking should be done as close to the packet source as possible. Some devices (IP phones, for example) can mark their own traffic.

You should decide where traffic is identified and marked, and from which point onward marking is trusted (this point is called the trust boundary). The trust boundary should be as close as possible to the point where the packet enters the network (for example, at the sending device network interface, or at the access switch where the device connects). You cannot always trust the marking applied by devices or users, or count on the access switch to perform the classification. In that case, you might have to move the trust boundary to the distribution switch.

To apply marking on Cisco IOS, you use the standard QoS configuration commands, called the Modular QoS CLI (MQC), which is a component of the IOS command set. You create a class map and specify one or several conditions that identify the traffic. With the keyword match-any, any single condition is enough to identify the traffic. With the keyword match-all, all conditions must match. Example 1-1 shows a class map.


Example 1-1 Class Map

Router(config)# access-list 101 permit tcp any host 192.168.1.1 eq 80
Router(config)# class-map match-any MyExample
Router(config-cmap)# match ip dscp 46
Router(config-cmap)# match ip precedence 5
Router(config-cmap)# match access-group 101
Router(config-cmap)# match protocol http
Router(config-cmap)# exit
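The difference between match-any and match-all can be modeled outside IOS. The following Python sketch is hypothetical and does not show how IOS evaluates class maps internally. Each condition is a predicate over a simplified packet record. The record's fields (dscp, proto, dst_ip, dst_port, app) are assumptions, and the conditions loosely mirror Example 1-1.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Packet:
    """Simplified packet record (hypothetical fields, for illustration only)."""
    dscp: int
    proto: str
    dst_ip: str
    dst_port: int
    app: str  # application name, as an NBAR-like classifier might report it


Condition = Callable[[Packet], bool]


def class_map(conditions: List[Condition], match_any: bool) -> Callable[[Packet], bool]:
    """Return a predicate that emulates 'class-map match-any' or 'class-map match-all'."""
    def matches(pkt: Packet) -> bool:
        results = [cond(pkt) for cond in conditions]
        return any(results) if match_any else all(results)
    return matches


# Conditions loosely mirroring Example 1-1.
conditions = [
    lambda p: p.dscp == 46,                      # match ip dscp 46
    lambda p: (p.dscp >> 3) == 5,                # match ip precedence 5 (first 3 bits)
    lambda p: (p.proto == "tcp" and p.dst_ip == "192.168.1.1"
               and p.dst_port == 80),            # match access-group 101
    lambda p: p.app == "http",                   # match protocol http
]

my_example = class_map(conditions, match_any=True)   # class-map match-any MyExample
my_strict = class_map(conditions, match_any=False)   # same conditions, match-all
```

Consider a DSCP 0 web packet sent to 192.168.1.1 port 80. It matches MyExample, because the ACL and protocol conditions are enough. It would fail a match-all version of the same class map, because its DSCP is not 46.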

Marking can be done at Layer 2 or Layer 3. At Layer 2, the priority tag can be inserted into the Class of Service (CoS) field. This field is the 802.1p user priority portion of the 4-byte 802.1Q tag, which is added to frames transiting on a trunk. The CoS field offers 3 bits and 8 values (from 0 [000] to 7 [111]).

CoS has two limitations. First, it is present only on frames that carry an 802.1Q VLAN tag: it is absent from frames sent on switch ports set to access mode, and from frames using the native VLAN on trunks. Second, it does not survive routing: a router removes the Layer 2 header before routing a packet, so the CoS value is lost. Its advantage is that it sits at a low layer and can be used efficiently by Layer 2 switches.
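The following Python sketch shows where the CoS value lives by packing the 4-byte 802.1Q tag. The tag is a 16-bit tag protocol identifier (0x8100) followed by 16 bits that hold the 3-bit CoS, the 1-bit CFI (renamed DEI in later revisions of 802.1Q), and the 12-bit VLAN ID. The function names are hypothetical. The point is that CoS exists only inside this tag, so it disappears whenever the tag is absent or stripped.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q tag


def build_dot1q_tag(cos: int, vlan_id: int, cfi: int = 0) -> bytes:
    """Pack the 4-byte 802.1Q tag: TPID, then CoS (3 bits), CFI/DEI (1 bit), VLAN ID (12 bits)."""
    if not 0 <= cos <= 7:
        raise ValueError("CoS is a 3-bit field (0-7)")
    if not 0 <= vlan_id <= 4095:
        raise ValueError("VLAN ID is a 12-bit field (0-4095)")
    tci = (cos << 13) | ((cfi & 1) << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def read_cos(tag: bytes) -> int:
    """Extract the 3-bit CoS (user priority) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci >> 13
```

For example, CoS 5 on VLAN 100 produces the bytes 81 00 A0 64. The top 3 bits of 0xA064 are 101, which is 5.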

At Layer 3, the Type of Service (ToS) field in the IP header can be used for marking. One way to use this field, called IP Precedence, uses 3 bits to copy the Layer 2 CoS value to Layer 3. This allows the QoS tag to survive routing. However, eight values may be too limited for advanced classification, and the ToS field contains 8 bits. Therefore, another way to use this field exists: Differentiated Services Code Point (DSCP).

DSCP uses 6 of the 8 bits, allowing for 64 QoS values. The last 2 bits of the ToS byte are used to inform the destination about congestion on the link. The 6 DSCP bits are used as follows:

■ The first 3 bits define a priority category (or class): Best Effort (BE) for class 000, Assured Forwarding (AF) for classes 001 to 100, and Expedited Forwarding (EF) for class 101. A fourth, special type, Class Selector (CS), is used when only these first 3 bits are set and the other DSCP bits are 0, so it maps perfectly to IP Precedence and CoS.

■ The next 2 bits define a drop probability (DP). A higher DP means a higher probability that the packet is dropped if congestion occurs. Therefore, within a class, a packet with a DP value of 2 is dropped before another packet of the same class with a DP of 1 or 0.

■ The last DSCP bit is usually set to 0.

Figure 1-1 shows the various tagging methods.


Figure 1-1 Marking Techniques [figure: 802.1Q/p tag with a 3-bit CoS field; IPv4 ToS byte read as 3-bit IP Precedence or as 6-bit DSCP (3-bit class + 2-bit drop probability); AF1 to AF4 = DSCP 10 to 38, EF = DSCP 46, CS3 = DSCP 24]
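The two readings of the ToS byte in Figure 1-1 can be expressed as bit operations. This Python sketch splits one ToS byte into the following fields (the function name is hypothetical):

■ The 3-bit IP Precedence view

■ The 6-bit DSCP, with its class and drop-probability bits

■ The 2 low-order congestion-notification bits

```python
def split_tos(tos: int) -> dict:
    """Split an IPv4 ToS byte into its IP Precedence, DSCP, and congestion bits."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("the ToS field is one byte")
    dscp = tos >> 2  # upper 6 bits
    return {
        "precedence": tos >> 5,                  # upper 3 bits (IP Precedence view)
        "dscp": dscp,
        "dscp_class": dscp >> 3,                 # first 3 DSCP bits
        "drop_probability": (dscp >> 1) & 0b11,  # next 2 DSCP bits
        "ecn": tos & 0b11,                       # last 2 bits, congestion notification
    }


print(split_tos(0xB8))  # EF (DSCP 46)
print(split_tos(0x28))  # AF11 (DSCP 10)
```

EF (DSCP 46) is carried as ToS byte 0xB8 (184), which reads as IP Precedence 5, class 101, and DP bits 11. AF11 (DSCP 10) is carried as ToS byte 0x28, with class 1 and DP 1.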


Note

Notice that the DP is valid only within a class: AF21 has a lower priority than AF32, because AF32 belongs to a higher class than AF21.

DSCP QoS tags are sometimes difficult to read. The DSCP value itself is usually represented in decimal. For example, DSCP 011010 is converted to decimal and usually written as DSCP 26. The initial 011 identifies it as an AF class (class 3).

To show the difference between the class and the drop probability more clearly, DSCP tags are also often written using the per-hop behavior (PHB) convention. You write the class type (AF in this example), then the class number in decimal (011 = 3), and then the DP value (01 = 1). The last bit is ignored, because it is always set to 0 anyway. Therefore, DSCP 26 can be written as PHB AF31; both are equivalent.

PHB is often used because it shows the DP value clearly. DSCP 26 (011010) and DSCP 28 (011100) might be difficult to compare, but expressed in PHB, they become AF31 and AF32. You can then see that AF31 has a higher priority than AF32. (Yes, higher. They both belong to the same class, but AF32 has a higher DP than AF31. If a packet must be dropped within the AF3 class, packets with the higher DP are dropped first.)

When all the DP bits are set to 0, the PHB naming convention uses CS. For example, 011000 could be written AF30, but is in fact written CS3. This reminds you that it has no DP bits set and maps perfectly to CoS or IP Precedence.

Tag 101110 is written DSCP 46, the decimal value of 101110. Because its class is 101, this tag is also called EF. EF is used for voice and usually has only one DP value (101110). For this reason, it is simply called EF and not EF53.
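The PHB naming rules above can be captured in a short Python function:

■ Class type, then class number, then DP value

■ CS when the DP bits are 0

■ EF for 101110

This sketch follows the conventions in this section. The function name is an assumption, and so is the fallback label for values that have no standard PHB name (such as DSCP 25).

```python
def dscp_to_phb(dscp: int) -> str:
    """Name a decimal DSCP value using the PHB convention (BE, CSx, AFxy, EF)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP is a 6-bit value (0-63)")
    cls = dscp >> 3            # first 3 bits: class
    dp = (dscp >> 1) & 0b11    # next 2 bits: drop probability
    last = dscp & 1            # last bit, normally 0
    if dscp == 0:
        return "BE"
    if dscp == 46:             # 101110: EF has a single DP value, so no digits
        return "EF"
    if dp == 0 and last == 0:
        return f"CS{cls}"      # no DP bits set: maps to IP Precedence / CoS
    if 1 <= cls <= 4 and last == 0:
        return f"AF{cls}{dp}"
    return f"DSCP {dscp}"      # hypothetical fallback: no standard PHB name


for value in (26, 28, 30, 24, 46, 10):
    print(value, dscp_to_phb(value))
```

This converts DSCP 26, 28, and 30 into AF31, AF32, and AF33, which makes the increasing drop probability within class 3 visible at a glance.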

All these numbers may be complex to master. Luckily, as a wireless engineer, you need to know only a limited number of tags: EF for voice, AF31 or CS3 for voice signaling, and a few other values for controller-related traffic (see Chapter 2, “VoWLAN Architecture,” and Chapter 4, “Multicast over Wireless”).

Congestion Management

Once traffic is marked, you can decide to prioritize some traffic in case of congestion (and drop traffic of lower priority). With the MQC, you usually define this behavior with the policy-map command followed by a name. You then call each class map created during the classification phase and decide which prioritization system to use.

Prioritization can be accomplished in many ways. Each way solves a specific network traffic problem and has a particular effect on network performance. There are two broad approaches to excess traffic:

■ Policing: Any technique that drops or re-marks packets when they exceed predefined burst limits is called policing. Policing can be applied inbound or outbound.

■ Shaping: This technique applies to outbound traffic only. It buffers packets when they exceed predefined burst limits, and sends them later if bandwidth use decreases.

Policing is suited to VoIP, because buffering voice would add delay. Shaping is better suited to applications that are not latency-sensitive (TCP, for example).

Beyond their policing or shaping aspect, each congestion management method prioritizes packets in a specific way:

■ Priority queuing (PQ) guarantees strict service of a queue: this queue is always served first, regardless of the number of packets in the other queues. This is good for the prioritized queue, but may starve the other queues.

■ Custom queuing (CQ) allocates a percentage of the bandwidth to each queue. This ensures good load balancing between queues, but no real prioritization.

■ Weighted fair queuing (WFQ) gives priority to smaller packets (low-bandwidth flows) and to packets with a higher ToS value, using an automatic classification algorithm. WFQ is implemented simply by entering the command fair-queue at the interface level. A common variant, class-based weighted fair queuing (CBWFQ), allows you to define classes and the amount of bandwidth each should get. Example 1-2 shows a policy map implementing CBWFQ.

Example 1-2 CBWFQ Policy Map

R1(config)# policy-map MyPolicy
R1(config-pmap)# class MyClass1
R1(config-pmap-c)# bandwidth percent 20
R1(config-pmap-c)# exit
R1(config-pmap)# class MyClass2
R1(config-pmap-c)# bandwidth percent 30
R1(config-pmap-c)# exit
R1(config-pmap)# class class-default
R1(config-pmap-c)# bandwidth percent 35
R1(config-pmap-c)# end
R1#

■ Low-latency queuing (LLQ), commonly used for VoIP, is a variation of CBWFQ. One queue is serviced in priority (in a PQ logic), but only up to a certain amount of bandwidth, so as not to starve the other queues. For the other queues, you define classes and amounts of bandwidth, and these queues are served in a CBWFQ manner. Notice that with LLQ, any packet in the priority queue that exceeds the allocated bandwidth is dropped (otherwise, you would be back to simple PQ). Example 1-3 shows a policy map implementing LLQ. The keyword priority is the marker of an LLQ policy.

Example 1-3 LLQ Policy Map

R1(config)# policy-map MyPolicy
R1(config-pmap)# class MyVoiceClass
R1(config-pmap-c)# priority percent 20
R1(config-pmap-c)# exit
R1(config-pmap)# class MyClass2
R1(config-pmap-c)# bandwidth percent 30
R1(config-pmap-c)# exit
R1(config-pmap)# class class-default
R1(config-pmap-c)# bandwidth percent 35
R1(config-pmap-c)# end
R1#
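The combined effect of priority and bandwidth in an LLQ policy can be approximated with a fluid model. The following Python sketch is a simplification, not the IOS scheduler. It assumes a single congested interval and works as follows:

■ It serves the priority class first, but caps it at its priority percent and drops the excess.

■ It shares the remaining capacity among the other classes in proportion to their bandwidth percent values.

■ It redistributes any share that a class does not need.

Real LLQ polices the priority queue only during congestion and schedules individual packets.

```python
def llq_allocate(link_kbps, priority_pct, priority_demand, class_pcts, class_demands):
    """Approximate one congested interval of an LLQ policy (fluid model).

    priority_pct and class_pcts mirror 'priority percent' and 'bandwidth percent'.
    Demands are offered loads in kbps.
    Returns (priority_sent, priority_dropped, sent_per_class).
    """
    # Strict-priority queue, policed to its configured share.
    cap = link_kbps * priority_pct / 100
    priority_sent = min(priority_demand, cap)
    priority_dropped = priority_demand - priority_sent

    # Share what is left among the CBWFQ classes, in proportion to their weights.
    # Bandwidth that a class does not need is redistributed to the others.
    remaining = link_kbps - priority_sent
    sent = {name: 0.0 for name in class_pcts}
    active = {name for name in class_pcts if class_demands.get(name, 0) > 0}
    while active and remaining > 1e-9:
        total_weight = sum(class_pcts[name] for name in active)
        share = {name: remaining * class_pcts[name] / total_weight for name in active}
        satisfied = {name for name in active if class_demands[name] - sent[name] <= share[name]}
        if not satisfied:
            # Every remaining class wants more than its share: give each its share.
            for name in active:
                sent[name] += share[name]
            remaining = 0.0
            break
        for name in satisfied:
            need = class_demands[name] - sent[name]
            sent[name] += need
            remaining -= need
        active -= satisfied
    return priority_sent, priority_dropped, sent
```

As an example, take a 1000-kbps link with the percentages of Example 1-4, and offer 300 kbps of voice. The model sends 200 kbps of voice and drops 100 kbps. Signaling gets its full 50 kbps, and class-default receives the remaining 750 kbps.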

There are also mechanisms for congestion avoidance. For example, weighted random early detection (WRED) drops more and more TCP packets as the link becomes congested, before it saturates. WRED relies on TCP to resend the lost packets and to reduce its window, which slows down the flow. This mechanism avoids link oversubscription, but it is suited only to TCP. To implement WRED, just use the keyword random-detect at the interface level.
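The early-drop behavior can be sketched as the classic RED drop curve. WRED applies this curve with different thresholds per IP Precedence (or DSCP) value. In this Python sketch, the following are all assumed example values, not recommendations:

■ The thresholds

■ The mark probability denominator

■ The PROFILES table

■ The weighting exponent of 9 used for the average queue depth

```python
def wred_drop_probability(avg_queue: float, min_th: float, max_th: float,
                          mark_prob_denominator: int) -> float:
    """Probability of dropping an arriving packet, given the average queue depth."""
    if avg_queue < min_th:
        return 0.0  # below the minimum threshold: never drop
    if avg_queue > max_th:
        return 1.0  # above the maximum threshold: drop every packet
    # Linear ramp from 0 at min_th up to 1/denominator at max_th.
    return (avg_queue - min_th) / (max_th - min_th) / mark_prob_denominator


def update_average(avg: float, current: float, weight_exp: int = 9) -> float:
    """Exponentially weighted average queue depth, with weight 1 / 2**weight_exp."""
    w = 1 / 2 ** weight_exp
    return avg * (1 - w) + current * w


# Hypothetical profiles, precedence: (min_th, max_th, denominator).
# Lower-precedence traffic starts being dropped at a shallower queue depth.
PROFILES = {0: (20, 40, 10), 5: (31, 40, 10)}


def drop_probability_for(precedence: int, avg_queue: float) -> float:
    min_th, max_th, denom = PROFILES[precedence]
    return wred_drop_probability(avg_queue, min_th, max_th, denom)
```

The "weighted" part comes from the per-precedence profiles. At an average depth of 30 packets, precedence 0 traffic already sees a 5 percent drop probability, whereas precedence 5 traffic is not dropped yet.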

For traffic using UDP (like VoIP), call admission control (CAC) allows only a certain number of concurrent calls, avoiding oversubscription of the queue allocated for this traffic. CAC is usually implemented on the platform managing the calls.
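A bandwidth-based view of CAC fits in a few lines of Python. This sketch is hypothetical. It assumes a fixed per-call bandwidth and admits a new call only if the voice queue can still carry it. The 80-kbps per-call figure used below is an assumption; the real value depends on the codec and the Layer 2 overhead.

```python
class CallAdmission:
    """Admit calls only while the voice queue has room for one more call."""

    def __init__(self, voice_kbps: float, per_call_kbps: float):
        self.max_calls = int(voice_kbps // per_call_kbps)
        self.active = 0

    def request_call(self) -> bool:
        if self.active < self.max_calls:
            self.active += 1
            return True
        return False  # rejected: admitting it would oversubscribe the voice queue

    def end_call(self) -> None:
        if self.active > 0:
            self.active -= 1


# Assumed: 20 percent of a 1000-kbps link reserved for voice, 80 kbps per call.
cac = CallAdmission(voice_kbps=200, per_call_kbps=80)
```

With these values, the third concurrent call is rejected instead of degrading the two calls already in progress. It is admitted once one of the existing calls ends.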

Another mechanism, header compression, reduces the 40-byte IP, UDP, and RTP headers of a voice packet to 2 or 4 bytes by simply indexing each packet. This technique works only on point-to-point links.
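The benefit of header compression depends on the payload size. This Python sketch computes the Layer 3 bandwidth of one voice stream before and after compression. It assumes 20-ms packetization (50 packets per second), a 160-byte G.711 payload, and a 20-byte G.729 payload. Layer 2 overhead is ignored, and the function name is hypothetical.

```python
IP_UDP_RTP_HEADER = 40  # 20 (IP) + 8 (UDP) + 12 (RTP) bytes


def voice_bandwidth_kbps(payload_bytes: int, header_bytes: int, pps: int = 50) -> float:
    """Layer 3 bandwidth of one voice stream in kbps (Layer 2 overhead ignored)."""
    return (payload_bytes + header_bytes) * 8 * pps / 1000


# Assumed codecs at 20-ms packetization (50 packets per second).
for codec, payload in (("G.711", 160), ("G.729", 20)):
    full = voice_bandwidth_kbps(payload, IP_UDP_RTP_HEADER)
    compressed = voice_bandwidth_kbps(payload, 2)
    print(f"{codec}: {full:.1f} kbps -> {compressed:.1f} kbps with a 2-byte header")
```

For G.729, the header is two-thirds of the packet, so compression cuts the stream from 24.0 to 8.8 kbps. For G.711, the gain is smaller (80.0 to 64.8 kbps).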

On slow-speed links, you can use link fragmentation and interleaving (LFI) to break large packets into smaller fragments and interleave small, urgent packets between them. This technique avoids delaying small packets behind large packets, but it adds overhead: each large packet becomes several small packets, each with its own header.
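The fragment size for LFI is usually derived from a target serialization delay. The following Python sketch assumes a 10-ms target, a commonly used value that is treated here as an assumption. It shows why large packets are a problem on slow links. At 64 kbps, a 1500-byte packet takes 187.5 ms to serialize. By contrast, 80-byte fragments take 10 ms each, at the cost of 19 fragment headers instead of one.

```python
import math


def serialization_delay_ms(frame_bytes: int, link_kbps: float) -> float:
    """Time to clock a frame onto the link, in ms (bits / kbps = ms)."""
    return frame_bytes * 8 / link_kbps


def lfi_fragment_bytes(link_kbps: float, target_delay_ms: float = 10.0) -> int:
    """Largest fragment whose serialization delay stays within the target."""
    return int(link_kbps * target_delay_ms / 8)


def fragments_needed(packet_bytes: int, fragment_bytes: int) -> int:
    """Number of fragments, each carrying its own header, for one large packet."""
    return math.ceil(packet_bytes / fragment_bytes)


size = lfi_fragment_bytes(64)
print(serialization_delay_ms(1500, 64), size, fragments_needed(1500, size))
```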

After creating your policy, you apply it at the interface level. On the Cisco MQC, you generally do so with the service-policy {input | output} command. Example 1-4 shows a complete but simple MQC policy that performs the following:

■ Marks voice traffic (RTP audio) and voice signaling (SIP or Skinny; see Chapter 2) properly

■ Uses LLQ for voice

■ Uses CBWFQ for voice signaling and all other traffic


Example 1-4 Complete MQC Policy Example

R1(config)# class-map match-any Voice
R1(config-cmap)# match protocol rtp audio
R1(config-cmap)# exit
R1(config)# class-map match-any Voice_signaling
R1(config-cmap)# match protocol sip
R1(config-cmap)# match protocol sccp
R1(config-cmap)# exit
R1(config)# policy-map MyVoicePolicy
R1(config-pmap)# class Voice
R1(config-pmap-c)# set ip dscp ef
R1(config-pmap-c)# priority percent 20
R1(config-pmap-c)# exit
R1(config-pmap)# class Voice_signaling
R1(config-pmap-c)# set ip dscp cs3
R1(config-pmap-c)# bandwidth percent 15
R1(config-pmap-c)# exit
R1(config-pmap)# class class-default
R1(config-pmap-c)# bandwidth percent 50
R1(config-pmap-c)# exit
R1(config-pmap)# exit
R1(config)# interface FastEthernet 0/1
R1(config-if)# service-policy output MyVoicePolicy
R1(config-if)# end
R1#

QoS techniques are used throughout the entire network:

■ At the network access layer, you use classification and marking, congestion management (policing or shaping), admission control, and congestion avoidance.

■ In the core of the network, you also use congestion management and congestion avoidance.

■ At the WAN edge of the network, you use the same techniques as at the access layer, plus header compression and LFI if needed.

As an IUWVN candidate, you are not expected to be a QoS expert, but you must have a good understanding of the basic QoS mechanisms. Keep in mind that QoS does not solve congestion issues. It simply helps safeguard the more important traffic when congestion occurs.

When implementing QoS in a corporate network, follow these steps:

■ Start by defining the objectives to be achieved with QoS.

■ Analyze the different traffic types and create matching classification, marking, and policies.

■ Always test before expanding the configuration to the entire network.

■ Once QoS is implemented, monitor the traffic to decide whether you need to change the policies.


Table 1-1 shows recommendations for common traffic type marking. You might need to create more or fewer classes depending on your implementation requirements, and you may also need to use another marking.

Note

AutoQoS is an automated tool. As such, it does not have the same knowledge of the network as a QoS expert. For complex deployments, seek the help of a QoS expert.

Table 1-1 Cisco QoS Standard Baseline

Application | L2 CoS | IP Precedence | PHB | DSCP
IP routing | 6 | 6 | CS6 | 48
Voice | 5 | 5 | EF | 46
Interactive video | 4 | 4 | AF41 | 34
Streaming video | 4 | 4 | CS4 | 32
Locally defined mission-critical data | 3 | 3 | - | 25
Call signaling | 3 | 3 | AF31/CS3 | 26/24
Transactional data | 2 | 2 | AF21 | 18
Network management | 2 | 2 | CS2 | 16
Bulk data | 1 | 1 | AF11 | 10
Scavenger | 1 | 1 | CS1 | 8
Best effort | 0 | 0 | 0 | 0
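Every row of Table 1-1 follows the same rule: the L2 CoS and IP Precedence values equal the first 3 bits of the DSCP value. This Python sketch encodes the table (using CS3 for call signaling) and checks that rule. The dictionary and function names are hypothetical. Note that the reverse mapping is not unique: in this table, for example, CoS 3 corresponds to DSCP 24, 25, or 26.

```python
# Table 1-1 as application: (L2 CoS, DSCP). CS3 (24) is used for call signaling.
BASELINE = {
    "IP routing": (6, 48),
    "Voice": (5, 46),
    "Interactive video": (4, 34),
    "Streaming video": (4, 32),
    "Locally defined mission-critical data": (3, 25),
    "Call signaling": (3, 24),
    "Transactional data": (2, 18),
    "Network management": (2, 16),
    "Bulk data": (1, 10),
    "Scavenger": (1, 8),
    "Best effort": (0, 0),
}


def dscp_to_cos(dscp: int) -> int:
    """CoS / IP Precedence equivalent: the first 3 bits of the 6-bit DSCP."""
    return dscp >> 3


mismatches = [app for app, (cos, dscp) in BASELINE.items() if dscp_to_cos(dscp) != cos]
print("rows breaking the rule:", mismatches)  # expected: an empty list
```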

In some cases, you can use Cisco AutoQoS to simplify your QoS implementation. AutoQoS is an intelligent macro: you enter one or two simple AutoQoS commands on a specific interface, and it enables the features and settings recommended for an application.


Wireless QoS

QoS is also used in the wireless world:

■ At the cell level, you can use wireless QoS to prioritize voice traffic over other traffic, and wireless CAC is added to limit the number of concurrent calls in the cell.

■ Between the access point (AP) and the controller, all traffic is encapsulated into CAPWAP, and you need to take care of the CoS and ToS values on the transmitted packets.

■ You may also need to manipulate QoS values for packets entering or leaving the controller.

DCF Mechanism

QoS in the wireless cell was implemented through the 802.11e amendment, ratified in 2005. Without 802.11e, media access works through the Distributed Coordination Function (DCF) you learned about in IUWNE. Each station that wants to send a frame has to wait. The waiting time is determined by two basic timers:

■ The short interframe space (SIFS), which is the smallest time space in use (before 802.11n)

■ The slot time (SlotTime), which is the unit in which stations count time, like the beat of a metronome

Table 1-2 shows the main values, which depend on the protocol you use.

Note

The DIFS, which is the standard silence between frames, is calculated as DIFS = SIFS + 2 × SlotTime.

Table 1-2 SIFS and SlotTime Values

Protocol | SIFS (Microseconds) | SlotTime (Microseconds) | DIFS (Microseconds)
802.11b | 10 | 20 | 50
802.11g/n | 10 | 9 | 28
802.11a/n | 16 | 9 | 34

You need to know two other interframe spaces:

■ The reduced interframe space (RIFS), a 2-microsecond silence used between frames during an 802.11n frame burst

■ The extended interframe space (EIFS), used when frames collide

The emitting station that detects the collision has to wait an EIFS before trying to send again. The EIFS is calculated as SIFS + (8 × ACK size in bytes) + preamble length + PLCP header length + distributed interframe space (DIFS).
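The interframe space formulas can be checked numerically. This Python sketch recomputes the DIFS column of Table 1-2 and the 802.11b EIFS. It assumes the 802.11b long-preamble values (144-microsecond preamble, 48-microsecond PLCP header) and a 14-byte ACK sent at 1 Mbps, so 8 × ACK bytes gives the ACK duration in microseconds. The function names are hypothetical.

```python
# (SIFS, SlotTime) in microseconds, from Table 1-2.
TIMERS = {"802.11b": (10, 20), "802.11g/n": (10, 9), "802.11a/n": (16, 9)}


def difs_us(sifs_us: int, slot_us: int) -> int:
    """DIFS = SIFS + 2 x SlotTime."""
    return sifs_us + 2 * slot_us


def eifs_80211b_us(ack_bytes: int = 14, preamble_us: int = 144, plcp_header_us: int = 48) -> int:
    """EIFS = SIFS + (8 x ACK) + preamble + PLCP header + DIFS, for 802.11b."""
    sifs, slot = TIMERS["802.11b"]
    ack_us = 8 * ack_bytes  # at 1 Mbps, one bit lasts one microsecond
    return sifs + ack_us + preamble_us + plcp_header_us + difs_us(sifs, slot)


for protocol, (sifs, slot) in TIMERS.items():
    print(protocol, "DIFS =", difs_us(sifs, slot))
print("802.11b EIFS =", eifs_80211b_us())
```

Under these assumptions, the 802.11b EIFS is 10 + 112 + 144 + 48 + 50 = 364 microseconds, more than seven times the DIFS.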


When a station needs to send with DCF, it picks a random number within a contention window (CW). The size of this window varies between a minimum (CWmin) and a maximum (CWmax). For 802.11b, CWmin is 31. For 802.11a/g/n, CWmin is 15. For all protocols, CWmax is 1023. For the first attempt to send a given frame, the station uses CWmin as the window. The number picked is called the backoff timer. The total time the station has to wait before sending is called the network allocation vector (NAV).

The station then waits for a DIFS and starts counting down from the chosen number, so initially, NAV = DIFS + backoff timer. At each step of the countdown, the station listens to the cell:

■ If no traffic is detected, the station waits a slot time and counts down by one.

■ If traffic is detected, the station tries to read the duration field of the 802.11 header. This field expresses, in microseconds, the time needed to send the frame, wait a SIFS, and receive an ACK frame for unicast transmissions. The station then increments its NAV to reflect the additional time needed for the other station's transmission.

For example, suppose an 802.11a station's countdown has reached 11 when another transmission is detected with a duration value of 90 microseconds (10 slot times). The station sets its NAV to 21 and restarts counting from there while the other station transmits. The process repeats during the countdown for every newly detected transmission.

When the countdown reaches 0, if no other transmission is detected, the station sends its frame. If the frame is multicast or broadcast, no acknowledgment is expected. If the frame is unicast, the receiver waits a SIFS and then sends back an acknowledgment (ACK) frame.

If no ACK is received, the emitter picks a new backoff timer from a contention window roughly twice as large as the previous one. It then waits an EIFS and restarts counting down. If, after several unsuccessful transmissions, the contention window reaches CWmax, the station keeps using CWmax until the frame is dropped or transmitted successfully.
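The contention window growth and the countdown extension described above can be sketched in Python. This sketch follows the simplified model in this section. The doubling rule CW = 2 × (CW + 1) - 1, capped at CWmax, is the usual way the window grows after each failure. The function names are hypothetical.

```python
import random


def next_cw(cw: int, cw_max: int = 1023) -> int:
    """Grow the contention window after a failed attempt: 2 x (CW + 1) - 1, capped at CWmax."""
    return min(2 * (cw + 1) - 1, cw_max)


def cw_sequence(cw_min: int, attempts: int, cw_max: int = 1023) -> list:
    """Contention windows used for successive attempts to send the same frame."""
    windows = [cw_min]
    for _ in range(attempts - 1):
        windows.append(next_cw(windows[-1], cw_max))
    return windows


def pick_backoff(cw: int, rng: random.Random) -> int:
    """Backoff timer, in slot times, drawn uniformly between 0 and CW inclusive."""
    return rng.randint(0, cw)


def extend_countdown(remaining_slots: int, duration_us: int, slot_us: int) -> int:
    """Add another station's announced duration, rounded up to whole slots."""
    return remaining_slots + -(-duration_us // slot_us)


print(cw_sequence(15, 8))            # 802.11a/g/n windows over 8 attempts
print(extend_countdown(11, 90, 9))   # the example from the text
```

For 802.11a/g/n, successive attempts use windows of 15, 31, 63, 127, 255, 511, and then 1023 until the frame is sent or dropped. The countdown example from the text (11 slots left, a 90-microsecond duration, and 9-microsecond slots) gives 11 + 10 = 21.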

Stations counting down attempt to read the duration field in other stations' frames to update their NAV, but this might not always be possible. The entire frame is sent at the same data rate, and the listening station might not be able to demodulate the sending station's frame. For example, the sending station may be close to the AP and use 54 Mbps with 64-QAM, while the listening station is at the edge of the cell and cannot demodulate anything faster than 6 Mbps.

The listening station may be able to use the PHY header instead. This header is sent at a slower rate than the 802.11 frame itself and also contains the duration of the frame, expressed in microseconds, or in bytes and transmission speed. However, the PHY header does not specify whether the frame is broadcast or unicast, so the listening station does not know whether a SIFS/ACK sequence is expected after the frame is sent. This limited information may result in collisions. VoWLAN cells are built small to mitigate this issue and to ensure that most stations detect other stations' frame headers.


802.11e and WMM

The default DCF mechanism does not differentiate traffic: small voice packets are sent the same way as large FTP packets. To improve this mechanism, the 802.11e amendment introduces QoS mechanisms and creates eight priority values, called user priorities (UP), with the same logic as the CoS values explained earlier. UPs are grouped two by two into access categories (AC), as shown in Table 1-3. Most systems apply QoS only at the AC level, not down to the UP sublevel.

Note

Notice that CoS 3 and 0 are in the same AC. (3 is excellent effort [EE], and 0 is “no tag,” which results in best-effort treatment.)

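Table 1-3 UPs and ACs (listed from highest to lowest priority)

CoS (802.1p) | 802.11e Code | AC Code | AC Name (and Purpose)
7 | NC | AC_VO | Platinum (voice)
6 | VO | AC_VO | Platinum (voice)
5 | VI | AC_VI | Gold (video)
4 | CL | AC_VI | Gold (video)
3 | EE | AC_BE | Silver (best effort)
0 | BE | AC_BE | Silver (best effort)
2 | - | AC_BK | Bronze (background)
1 | BK | AC_BK | Bronze (background)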
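Table 1-3 can be encoded as a lookup. This Python sketch maps each UP to its AC and compares priorities at the AC level, which is how most systems apply 802.11e QoS. The names are hypothetical. The sketch highlights two points: UP 0 outranks UP 2 even though 0 is numerically lower, and UP 3 and UP 0 share the same AC.

```python
UP_TO_AC = {
    7: "AC_VO", 6: "AC_VO",  # NC, VO  -> Platinum (voice)
    5: "AC_VI", 4: "AC_VI",  # VI, CL  -> Gold (video)
    3: "AC_BE", 0: "AC_BE",  # EE, BE  -> Silver (best effort)
    2: "AC_BK", 1: "AC_BK",  # -, BK   -> Bronze (background)
}
AC_ORDER = ["AC_VO", "AC_VI", "AC_BE", "AC_BK"]  # highest to lowest priority


def ac_rank(up: int) -> int:
    """0 for the highest-priority AC, 3 for the lowest."""
    return AC_ORDER.index(UP_TO_AC[up])


def outranks(up_a: int, up_b: int) -> bool:
    """True if user priority up_a is served in a higher AC than up_b."""
    return ac_rank(up_a) < ac_rank(up_b)
```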

When a QoS station needs to send, it first assigns the packet to an access category. The station then picks a backoff timer, but the CW is smaller for packets with higher priority, as shown in Table 1-4.


Note

Notice that most values are calculated from CWmin.

Table 1-4 CWmin and CWmax Values per AC

AC | 802.11b CWmin | 802.11b CWmax | 802.11a/g/n CWmin | 802.11a/g/n CWmax
AC_VO | 7 ((CWmin + 1) / 4 - 1) | 15 ((CWmin + 1) / 2 - 1) | 3 ((CWmin + 1) / 4 - 1) | 7 ((CWmin + 1) / 2 - 1)
AC_VI | 15 ((CWmin + 1) / 2 - 1) | 31 (CWmin) | 7 ((CWmin + 1) / 2 - 1) | 15 (CWmin)
AC_BE | 31 (CWmin) | 1023 (CWmax) | 15 (CWmin) | 1023 (CWmax)
AC_BK | 31 (CWmin) | 1023 (CWmax) | 15 (CWmin) | 1023 (CWmax)
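The values in Table 1-4 follow from each PHY's aCWmin and aCWmax. This Python sketch derives them with integer arithmetic, using an aCWmin of 31 for 802.11b, 15 for 802.11a/g/n, and an aCWmax of 1023. The function name is hypothetical.

```python
def edca_cw_defaults(a_cw_min: int, a_cw_max: int = 1023) -> dict:
    """Default (CWmin, CWmax) per access category, derived from the PHY aCWmin/aCWmax."""
    return {
        "AC_VO": ((a_cw_min + 1) // 4 - 1, (a_cw_min + 1) // 2 - 1),
        "AC_VI": ((a_cw_min + 1) // 2 - 1, a_cw_min),
        "AC_BE": (a_cw_min, a_cw_max),
        "AC_BK": (a_cw_min, a_cw_max),
    }


for phy, a_cw_min in (("802.11b", 31), ("802.11a/g/n", 15)):
    print(phy, edca_cw_defaults(a_cw_min))
```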

The station then waits an interframe space. This is not the DIFS, as with DCF, but the arbitration interframe space (AIFS). The AIFS is SIFS + AIFSN × SlotTime, where AIFSN (the AIFS number) is a number of slot times that depends on the queue used. AIFSN is 2 for AC_VO and AC_VI, 3 for AC_BE, and 7 for AC_BK. Notice that AIFS is always equal to or longer than DIFS (never shorter).

The station then counts down from the backoff timer chosen for that queue. When a queue's countdown reaches 0, its frame is sent. All queues count down in parallel inside the station. Because AIFS and CW are smaller for higher-priority queues, voice packets are more likely to be sent before packets of the other queues. This is true for countdowns inside the station, and between stations of the same cell.
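Combining AIFS and the initial backoff gives a rough idea of how quickly each AC gets access to an idle medium. This Python sketch makes the following assumptions:

■ 802.11a timers (16-microsecond SIFS, 9-microsecond slot)

■ The default AIFSN values above

■ The default 802.11a/g/n CWmin values (3, 7, 15, and 15)

It ignores collisions and other stations, so the results are averages for a single frame, not guarantees. The names are hypothetical.

```python
def aifs_us(sifs_us: int, slot_us: int, aifsn: int) -> int:
    """AIFS = SIFS + AIFSN x SlotTime, in microseconds."""
    return sifs_us + aifsn * slot_us


AIFSN = {"AC_VO": 2, "AC_VI": 2, "AC_BE": 3, "AC_BK": 7}
# Assumed default 802.11a/g/n CWmin per AC.
CW_MIN_OFDM = {"AC_VO": 3, "AC_VI": 7, "AC_BE": 15, "AC_BK": 15}


def mean_first_access_us(ac: str, sifs_us: int = 16, slot_us: int = 9) -> float:
    """AIFS plus the average initial backoff (CWmin / 2 slots) on an idle medium."""
    return aifs_us(sifs_us, slot_us, AIFSN[ac]) + CW_MIN_OFDM[ac] / 2 * slot_us


for ac in AIFSN:
    print(ac, aifs_us(16, 9, AIFSN[ac]), mean_first_access_us(ac))
```

On average, a voice frame waits about 47.5 microseconds (34 + 1.5 × 9), versus 110.5 microseconds for best effort (43 + 7.5 × 9).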

802.11e also defines transmit opportunities (TXOP), which the QoS AP advertises in its beacons in the EDCA Parameter Set information element. For each AC, the TXOP expresses a service period (SP): the duration, in units of 32 microseconds, during which a station can send for that AC. For example, the QoS AP may tell the cell that for the AC_VI queue, the service period is 3.008 ms and the TXOP is 4. This means that a station can send up to 4 video frames without stopping, per period of 3.008 ms.

TXOPs allow a station to send more than one frame once it gets access to the medium. Consider a station that is ready to send (NAV = 0), needs to send several frames, and knows that the TXOP allows these frames to be sent. That station sends them in a burst, called a contention-free burst (CFB). Because the TXOP is expressed as a time duration, the station must base the number of frames it can send on the data rate it plans to use. TXOPs are configurable on the AP and are usually higher for higher-priority ACs, so more frames can be sent in a burst.
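Because the TXOP is a duration, the number of frames a station can burst depends on the airtime of each frame. This Python sketch converts a TXOP limit from 32-microsecond units and counts how many frame/SIFS/ACK exchanges fit, with a SIFS between exchanges. The frame and ACK airtimes in the example (250 and 44 microseconds) are hypothetical values, not figures for a specific data rate.

```python
TXOP_UNIT_US = 32  # TXOP limits are expressed in units of 32 microseconds


def txop_limit_us(units: int) -> int:
    """Convert a TXOP limit from 32-microsecond units to microseconds."""
    return units * TXOP_UNIT_US


def frames_in_txop(txop_us: int, frame_us: int, ack_us: int, sifs_us: int) -> int:
    """Number of frame + SIFS + ACK exchanges that fit in one TXOP.

    n exchanges, separated by a SIFS, take n * (frame + SIFS + ACK) + (n - 1) * SIFS.
    """
    per_exchange = frame_us + sifs_us + ack_us
    return max(0, (txop_us + sifs_us) // (per_exchange + sifs_us))


limit = txop_limit_us(94)
print(limit, frames_in_txop(limit, frame_us=250, ack_us=44, sifs_us=16))
```

A limit of 94 units gives the 3.008-ms period used in the text. With the assumed airtimes, 9 exchanges fit (9 × 310 + 8 × 16 = 2918 microseconds).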


This queuing mechanism is different from the one used by non-QoS stations. Therefore, APs and stations implementing 802.11e do not use DCF, but another coordination function called Hybrid Coordination Function (HCF). 802.11e defines two submodes:

■ Enhanced Distributed Channel Access (EDCA): Similar in concept to DCF, with the addition of the features described earlier (ACs, AIFS, and TXOPs). EDCA performs the Enhanced Distributed Coordination Function (EDCF).

■ HCF Controlled Channel Access (HCCA): The AP takes control of the cell and decides which station has the right to send. This mode resembles an older, unused mode called Point Coordination Function (PCF). Just like PCF, HCCA has not been implemented by any vendor yet.

802.11e also improves power management mechanisms. With standard 802.11, a station saves battery power by sending a null

(empty) frame to the AP with the Power Management bit in the header set to 1. The AP then buffers subsequent traffic for that station.

The station wakes up at regular intervals to listen to the AP beacon, which contains a field called the Traffic Indication Map (TIM),

indicating the list of stations for which some traffic is buffered. Some beacons also contain a Delivery Traffic Indication Message

(DTIM), indicating that the AP has broadcast or multicast traffic that is going to be sent just after the beacon. The station should stay

awake to receive the broadcast/multicast frames. If the station finds its association ID (AID) in the TIM, it sends a special frame (PS-Poll) to the AP

to ask for the first buffered packet. The AP contends for medium access and sends the frame, indicating with the More Data bit in the

frame header if more packets are buffered. The process is repeated until the More Data bit is set to 0, showing that no more traffic is

buffered. This process consumes a lot of frames. 802.11e introduces Automatic Power Save Delivery (APSD), with two submodes:

■

With Scheduled APSD (S-APSD), the station and the AP negotiate a wakeup time. No further frame exchange is needed.

The AP recognizes when the station is going to wake up, and simply sends the buffered traffic in due time. S-APSD is not

implemented often.

■

With Unscheduled APSD (U-APSD), the station still informs the AP (with the Power Management bit) that it is going to doze mode, and still listens to the TIMs in the beacons. The station can then tell the AP that it is awake at any time, with any frame where the Power Management bit is set to 0 (no need for PS-Poll frames, and no need to inform the AP

just after a beacon). The AP then empties its buffer in a burst (no need for one trigger frame per buffered frame). U-APSD

is implemented often and dramatically increases the efficiency of the delivery mechanism. U-APSD not only improves

power save management (compared to PS-Poll), but also increases the cell call capacity (because there is less time wasted in

buffered frame management). With its flexible trigger mechanism, U-APSD also allows the voice client to synchronize the


transmission and reception of voice frames with the AP. The client can send a burst of frames, then go to power-save mode,

and then trigger the AP to send buffered frames as soon as the client’s receive buffer level gets low. This way, a voice client

wireless card can spend most of its time in power save mode, even during an active call.
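The difference in signaling between the two delivery methods can be sketched with a toy model. It ignores contention, ACKs, and timing, and only counts the frames the station sends to request delivery. The function names are hypothetical. The U-APSD side is simplified to "one trigger, then the whole buffer in a burst."

```python
from collections import deque


def legacy_ps_poll(buffered: list[str]) -> tuple[list[str], int]:
    """Legacy power save: one PS-Poll per buffered frame until More Data is 0."""
    queue = deque(buffered)
    delivered: list[str] = []
    polls = 0
    more_data = bool(queue)                 # the station's bit is set in the TIM
    while more_data:
        polls += 1                          # station sends a PS-Poll
        delivered.append(queue.popleft())   # AP contends and sends one frame
        more_data = bool(queue)             # More Data bit in that frame's header
    return delivered, polls


def uapsd(buffered: list[str]) -> tuple[list[str], int]:
    """U-APSD: one trigger frame, then the AP empties its buffer in a burst."""
    # Simplification: the station triggers only when the TIM shows buffered traffic
    triggers = 1 if buffered else 0
    return list(buffered), triggers
```

With five buffered voice frames, the legacy loop costs five PS-Polls, whereas U-APSD needs a single trigger, which can itself be an uplink voice frame.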

Bursts are built with block acknowledgments, also introduced by 802.11e: stations and APs negotiate the exchange of bursts of frames, and the entire burst is acknowledged once, with a single block ACK frame.

The Wi-Fi Alliance (WFA) certifies as Wireless Multimedia (WMM) systems that implement EDCA (with at least four AC queues,

TXOPs, AIFS, and the specific queue timers explained earlier). The Wi-Fi Alliance also certifies as WMM Power Save systems that

implement WMM and U-APSD. S-APSD and HCCA are not tested in the certification (that is, they can be implemented, but are not

needed for the WMM certification).

QoS-Enabled Cells

A WMM station or AP adds a 2-byte QoS control field to the end of its 802.11 header that mentions (among other parameters) the

UP for the current frame. The non-WMM station assumes that this field is part of the frame body and simply ignores it, allowing non-WMM stations to coexist with WMM stations.
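The following minimal Python decoder for the QoS Control field shows where the UP sits. It assumes the 802.11e bit layout (TID in bits 0-3, EOSP in bit 4, Ack Policy in bits 5-6, field sent little-endian) and the standard UP-to-AC mapping. The function name is hypothetical.

```python
# 802.11e user priority (UP) to WMM access category (AC)
UP_TO_AC = {1: "AC_BK", 2: "AC_BK", 0: "AC_BE", 3: "AC_BE",
            4: "AC_VI", 5: "AC_VI", 6: "AC_VO", 7: "AC_VO"}


def parse_qos_control(field: bytes) -> dict:
    """Decode the low-order bits of the 2-byte QoS Control field."""
    if len(field) != 2:
        raise ValueError("the QoS Control field is exactly 2 bytes")
    value = int.from_bytes(field, "little")
    tid = value & 0x0F                       # bits 0-3: TID; values 0-7 carry the UP
    return {
        "tid": tid,
        "eosp": bool(value & 0x10),          # bit 4: end of service period (U-APSD)
        "ack_policy": (value >> 5) & 0x3,    # bits 5-6
        "ac": UP_TO_AC.get(tid),             # None for TIDs 8-15 (not EDCA UPs)
    }
```

For example, the bytes 06 00 decode to UP 6, which lands in the AC_VO queue.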

The 802.11e/WMM cell also implements several other efficiency mechanisms:

■

In each beacon, a QoS Basic Service Set Information Element (QBSS-IE) informs the cell about the number of stations

currently associated with the AP, the percentage of the AP radio resources currently in use, and an estimate of the capacity left for new stations. This QBSS-IE allows WMM roaming stations to choose the best AP, based on signal and available capacity.

■

WMM stations can also (optionally) use a Traffic Specification (TSPEC) to describe the traffic they need to send and receive (UP and volume, upstream and downstream). The AP can notify the station whether that traffic can be admitted, and can subtract this traffic from the space left in the cell when it advertises the next QBSS IE (and the TXOP information in the beacon EDCA IE). The TSPEC is sent in a frame exchange called Add Traffic Specification (ADDTS). With EDCA, the station can ignore the AP answer. TSPEC is important because it is used for CAC in the controller-based solution.
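The QBSS-IE corresponds to what the 802.11e amendment calls the QBSS Load element. It has element ID 11 and a 5-byte body: a 2-byte station count, a 1-byte channel utilization scaled to 255, and a 2-byte available admission capacity in units of 32 µs per second. The minimal Python decoder below assumes that layout, with little-endian fields as in other 802.11 elements. The function name is hypothetical.

```python
import struct


def parse_qbss_load(element: bytes) -> dict:
    """Decode a 7-byte QBSS Load element (ID, length, and 5-byte body)."""
    # Little-endian: ID (1), length (1), station count (2), utilization (1), capacity (2)
    element_id, length, stations, utilization, capacity = struct.unpack("<BBHBH", element)
    if element_id != 11 or length != 5:
        raise ValueError("not an 802.11e QBSS Load element")
    return {
        "station_count": stations,
        # Channel utilization: 0-255, where 255 means the medium is always busy
        "channel_busy_pct": round(100 * utilization / 255, 1),
        # Capacity is in units of 32 us per second: x * 32 / 1,000,000 * 100 percent
        "admission_capacity_pct": capacity * 32 / 10_000,
    }
```

A roaming station could compare these values across candidate APs, together with signal strength, before choosing where to associate.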


Yet, 802.11e does not (and cannot) address issues related to frame sizes versus throughput. A lot of 802.11 cell time is spent in

overhead (interframe spaces, acknowledgments, 802.11 PHY and MAC headers). A small packet requires the same overhead as a

large packet. In proportion, time spent in overhead increases as the payload (packet size) decreases. As a result, the effective throughput decreases as the packet size decreases. Table 1-5 shows example maximum throughput values for some typical packet sizes.

Table 1-5 Typical Maximum Throughput for Common Packet Sizes

| Data Rate | Throughput for 300-Byte Packets | Throughput for 900-Byte Packets | Throughput for 1500-Byte Packets |
|---|---|---|---|
| 802.11g, 54 Mbps | 11.4 Mbps | 24.6 Mbps | 31.4 Mbps |
| 802.11b, 11 Mbps | 2.2 Mbps | 4.7 Mbps | 6 Mbps |

There is not much you can do to solve this issue. You have to be aware that throughput degrades as the packet size gets smaller. When

deploying a new application over a wireless network, always test to determine the real effective throughput.
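The effect of packet size can be estimated with a simple per-frame airtime model. The Python sketch below is an approximation under stated assumptions: an 802.11g-only cell with short slots (9 µs), a 10-µs SIFS, a 6-µs signal extension, no protection or RTS/CTS, no retries, an average backoff of CWmin/2 slots, 36 bytes of MAC header, LLC/SNAP, and FCS, and ACKs at 24 Mbps. It yields about 11.0, 23.6, and 30.5 Mbps for 300-, 900-, and 1500-byte packets. These values are close to, but not exactly, the 802.11g row of Table 1-5, because the assumptions behind the table are not stated. The function names are hypothetical.

```python
import math

# Assumptions: 802.11g-only cell, short slot, no protection, no RTS/CTS,
# no retries, ACK at 24 Mbps, average backoff of CWmin/2 slots.
SLOT_US, SIFS_US, SIGNAL_EXT_US = 9, 10, 6
DIFS_US = SIFS_US + 2 * SLOT_US            # 28 us
AVG_BACKOFF_US = 15 / 2 * SLOT_US          # CWmin = 15, so 7.5 slots on average
MAC_OVERHEAD_BYTES = 24 + 8 + 4            # MAC header + LLC/SNAP + FCS
ACK_BYTES = 14


def ofdm_tx_us(nbytes: int, rate_mbps: int) -> int:
    """Preamble, whole OFDM symbols, and the 2.4-GHz signal extension."""
    bits = 16 + 8 * nbytes + 6             # SERVICE field + frame + tail bits
    return 20 + 4 * math.ceil(bits / (4 * rate_mbps)) + SIGNAL_EXT_US


def effective_throughput_mbps(payload_bytes: int, rate_mbps: int = 54,
                              ack_rate_mbps: int = 24) -> float:
    """Payload bits per microsecond over one DIFS-backoff-DATA-SIFS-ACK cycle."""
    cycle_us = (DIFS_US + AVG_BACKOFF_US
                + ofdm_tx_us(payload_bytes + MAC_OVERHEAD_BYTES, rate_mbps)
                + SIFS_US + ofdm_tx_us(ACK_BYTES, ack_rate_mbps))
    return 8 * payload_bytes / cycle_us    # bits per microsecond == Mbps


if __name__ == "__main__":
    for size in (300, 900, 1500):
        print(size, f"{effective_throughput_mbps(size):.1f} Mbps")
```

The fixed part of the cycle (DIFS, backoff, preamble, SIFS, ACK) stays the same for every frame, which is why small packets lose so much efficiency.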

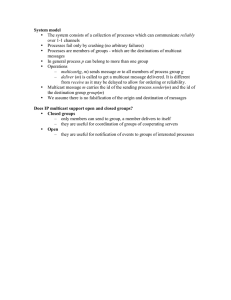

QoS Marking Between APs and Controllers

QoS needs to be controlled when frames are sent between the controller and the APs. For each WLAN, you can define a QoS level that determines the maximum AC allowed on the cell. Packets transmitted between the APs and the controller should reflect the QoS tag of the incoming packet, without exceeding the policy configured for the WLAN. Figure 1-2 summarizes the QoS tag control mechanism used between APs and controllers. You need to understand and remember its principles.


(Diagram: WMM and non-WMM clients, an AP on an access port, and a controller on a trunk, linked by CAPWAP. Labels: "DSCP value copied unchanged"; "DSCP copied to 802.11e, capped to WLAN QoS maximum"; "802.11e copied to DSCP, capped to WLAN QoS maximum"; "802.11e copied to 802.1p, capped to WLAN QoS maximum"; "802.1p capped to WLAN QoS maximum"; "WLAN with no 802.1p QoS mapping or incoming packet with no QoS".)

Figure 1-2 QoS Between APs and WLCs

Spend some time memorizing the principles displayed in this figure. A key element needed to understand the process is that the controller

usually connects to a switch through a trunk interface. An AP (in local mode) connects to a switch through an interface in access mode.

Therefore, 802.1Q (and 802.1p/CoS) is only available on the trunk link to the controller, not on the access link to the AP.

When a packet reaches the controller, the controller always keeps the original DSCP marking unchanged. This is true for wired packets sent to the AP (DSCP inside the CAPWAP encapsulated frame and DSCP in the outer CAPWAP header are the DSCP


value in the incoming packet) and for packets received from the AP (the original DSCP value is maintained inside the CAPWAP

packet). However, the controller always caps the outer 802.1p value to the QoS level set for the destination WLAN. For example, if the incoming packet requests 802.1p 5 / DSCP 46 (EF) and the WLAN maximum is AC_BE [802.1p 3], the packet sent to the AP shows an inner and outer DSCP of 46, but an outer 802.1p of 3. If the requested QoS level is lower than the WLAN maximum, the requested QoS level is granted.

This logic implies that you should set a QoS policy on your switch to handle this discrepancy. (Trust the CoS tag and re-mark the DSCP tag accordingly, or do the opposite if you trust your customers' requested QoS levels.)

The AP also keeps the original DSCP marking unchanged (inside the CAPWAP encapsulated packet or the 802.11 frame). But the AP always checks the WLAN policy, and caps the 802.11 UP (toward the cell) or the outer CAPWAP DSCP (toward the controller) to the WLAN maximum. (Because the AP is on an access port, the AP cannot use an 802.1p tag.) For example, if the AP receives a frame from the cell with UP 6 and DSCP 46 (EF) and the WLAN maximum is AC_BE [802.1p 3], the packet is forwarded from the AP toward the controller with the same inner UP 6 and DSCP 46 (EF), but the outer DSCP is capped to the WLAN maximum, which is AF21 (DSCP 18), the DSCP equivalent of 802.1p 3.

The H-REAP is a special case because it may reside on a trunk and switch traffic locally. It follows both logics: it caps 802.1p when using an 802.1Q tag (keeping DSCP unchanged), and caps DSCP when traffic is sent untagged (native VLAN or access port).
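The capping rules above can be summarized in a short Python sketch. It uses the caps listed in Table 1-7 later in this chapter, and the text's example of EF voice on a WLAN capped at 802.1p 3 (Silver). The function names are hypothetical. The sketch also simplifies by taking the requested level from the inner DSCP and comparing DSCP values numerically. That works for the standard markings used here but not for arbitrary DSCP values.

```python
from typing import Optional

# Caps per WLAN QoS profile, copied from Table 1-7:
# (maximum outer DSCP written by the AP, maximum outer 802.1p written by the WLC)
WLAN_CAPS = {
    "platinum": (46, 5),
    "gold": (34, 4),
    "silver": (18, 3),
    "bronze": (8, 1),
}


def wlc_to_ap(dscp: int, cos: int, profile: str) -> dict:
    """Controller toward AP: DSCP copied unchanged inside and outside; 802.1p capped."""
    _, max_cos = WLAN_CAPS[profile]
    return {"inner_dscp": dscp, "outer_dscp": dscp, "outer_cos": min(cos, max_cos)}


def ap_to_wlc(up: int, dscp: int, profile: str) -> dict:
    """AP toward controller: inner UP and DSCP kept; outer CAPWAP DSCP capped."""
    max_dscp, _ = WLAN_CAPS[profile]
    # Simplification: the requested level is the inner DSCP, compared numerically
    return {"inner_up": up, "inner_dscp": dscp, "outer_dscp": min(dscp, max_dscp)}


def hreap_local(dscp: int, cos: Optional[int], profile: str) -> dict:
    """H-REAP local switching: cap 802.1p on tagged traffic, DSCP on untagged traffic."""
    max_dscp, max_cos = WLAN_CAPS[profile]
    if cos is not None:                               # 802.1Q-tagged VLAN: DSCP untouched
        return {"dscp": dscp, "cos": min(cos, max_cos)}
    return {"dscp": min(dscp, max_dscp), "cos": None}  # native VLAN or access port
```

For the text's example, wlc_to_ap(46, 5, "silver") keeps DSCP 46 inside and outside but lowers the outer 802.1p to 3, and ap_to_wlc(6, 46, "silver") keeps UP 6 and DSCP 46 inside while the outer DSCP becomes 18.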

This AP-to-wireless LAN controller (WLC) tagging behavior assumes that you configure a QoS level on your WLAN QoS tab (Silver is the default) and that you also enable wireless-to-wired mapping on the controller, from Wireless > QoS Profiles, for the QoS level chosen for the WLAN. Without wireless-to-wired mapping, or if the incoming packet does not have any QoS tag (non-WMM wireless client or non-QoS wired source), no QoS is applied between the controller and the AP (and the AP uses the DCF queue to forward the frame to the cell).


Cisco, IETF, and IEEE QoS Policies

The highest 802.1p value is 7 and is kept for emergencies. The IEEE therefore states that the first value you should use for your most urgent traffic, voice, should be 6. This is why voice is UP 6 in 802.11e. The IETF and Cisco take the view that the network must be up before voice can be forwarded, so CAPWAP traffic and routing updates should have a higher priority than user traffic, even voice. In a Cisco network, CAPWAP control is tagged CS6 (802.1p 6), and voice EF (802.1p 5). Translation is done automatically for voice at the controller and AP levels when you choose the default 802.1p mapping for the Platinum queue. (You see UP 6, but get 802.1p 5 and EF.) The same logic applies to the other queues, as specified in Table 1-6.

Table 1-6 Cisco QoS Mapping

| Traffic Type | Cisco Unified Communications IP DSCP | Cisco Unified Communications 802.1p UP | IEEE 802.11e UP (Seen in Wireless Cell and/or on WLC QoS Profile) | Notes |
|---|---|---|---|---|
| Network control | 56 | 7 | N/A | Reserved for network control only |
| Internetwork control | 48 | 6 | 7 (AC_VO) | CAPWAP control |
| Voice | 46 (EF) | 5 | 6 (AC_VO) | Controller: Platinum QoS profile |
| Video | 34 (AF41) | 4 | 5 (AC_VI) | Controller: Gold QoS profile |
| Voice control | 24 (CS3) | 3 | 4 (AC_VI) | |
| Best effort | 0 (BE) | 0 | 3 (AC_BE), 0 (AC_BE) | Controller: Silver QoS profile |
| Background (Cisco AVVID Gold background) | 18 (AF21) | 2 | 2 (AC_BK) | |
| Background (Cisco AVVID Silver background) | 10 (AF11) | 1 | 1 (AC_BK) | Controller: Bronze QoS profile |


You should memorize Table 1-6. These values mean that you should leave the default mapping as it appears on the QoS profile pages.

If you change Platinum mapping to 802.1p 5, you will in fact get 802.1p 4! Notice that automatic mapping does not occur on the

autonomous AP. Figure 1-3 shows the mapping configuration pages on the controller and the autonomous AP.

Figure 1-3 QoS Mapping Pages in the WLC and Autonomous AP
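The controller translation behaves like a lookup table. This Python sketch uses values copied from Table 1-6, and its names are hypothetical. It shows why the default profile values (6, 5, 3, and 1) produce the Cisco 802.1p values 5, 4, 0, and 1, and why setting Platinum to 5 yields 802.1p 4.

```python
# 802.11e UP configured on a WLC QoS profile -> (Cisco DSCP, Cisco 802.1p), per Table 1-6
UP_TO_WIRED = {
    7: (48, 6),   # internetwork control / CAPWAP control (CS6)
    6: (46, 5),   # voice, Platinum (EF)
    5: (34, 4),   # video, Gold (AF41)
    4: (24, 3),   # voice control (CS3)
    3: (0, 0),    # best effort, Silver
    0: (0, 0),    # best effort
    2: (18, 2),   # background (AF21)
    1: (10, 1),   # background, Bronze (AF11)
}

# Default 802.1p values shown on the Wireless > QoS > Profiles pages
PROFILE_DEFAULT_UP = {"platinum": 6, "gold": 5, "silver": 3, "bronze": 1}


def wired_marking(profile_up: int) -> tuple[int, int]:
    """Return the (DSCP, 802.1p) actually used on the wire for a configured UP."""
    return UP_TO_WIRED[profile_up]
```

Looking up 6 returns (46, 5), the EF voice marking. Looking up 5 returns (34, 4), which is what happens if you "correct" Platinum to 5 by hand.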

When building your QoS policy, remember that standard CAPWAP control uses CS6 and that CAPWAP data QoS level depends

on the WLAN policy. When an AP first joins a controller and gets its configuration, this initial CAPWAP control exchange is sent

as best effort (no QoS tag). 802.1X/EAP traffic (between the AP, the WLC, and the RADIUS server) uses CS4. Exchanges between

controllers (for mobility messages or Radio Resource Management [RRM]), and multicast/broadcast traffic forwarded from the

controller to any WLAN through the APs, are sent as best effort (no QoS tag).


CAPWAP does not represent a large volume of traffic. The discovery process consumes 203 bytes (97-byte request and 106-byte

response), the join phase 3000 bytes, and the configuration phase approximately 6000 bytes. Subsequent CAPWAP control traffic

represents 0.35 Kbps per AP. RRM represents 396 bytes exchanged every 60 seconds between controller pairs, and 2660 bytes every

180 seconds for updates. Each initial RRM contact consumes 1400 bytes, and each RRM parameter change 375 bytes per changed

AP. CAPWAP itself adds 60 bytes to each data frame (14 bytes for CAPWAP information, and 46 bytes for the outer Layer 4, 3, and

2 header).
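The figures above are easy to scale to a real deployment. This short Python sketch turns them into aggregate numbers. The function names are hypothetical, and the 200-byte and 1400-byte frame sizes are illustrative. By this arithmetic, 500 APs generate about 175 kbps of steady-state CAPWAP control traffic, and an RRM controller pair exchanges roughly 171 bps of periodic updates. The 60-byte encapsulation represents about 23% of an encapsulated 200-byte voice frame, but only about 4% of an encapsulated 1400-byte frame.

```python
CONTROL_BPS_PER_AP = 350     # 0.35 kbps of steady-state CAPWAP control per AP
CAPWAP_OVERHEAD_BYTES = 60   # 14 bytes of CAPWAP + 46 bytes of outer L4/L3/L2 headers


def control_load_bps(ap_count: int) -> int:
    """Steady-state CAPWAP control traffic for a given number of APs."""
    return ap_count * CONTROL_BPS_PER_AP


def rrm_bps_per_controller_pair() -> float:
    """Periodic RRM exchange: 396 bytes every 60 s plus 2660 bytes every 180 s."""
    return 396 * 8 / 60 + 2660 * 8 / 180


def capwap_overhead_ratio(frame_bytes: int) -> float:
    """Share of each encapsulated frame taken by the CAPWAP encapsulation."""
    return CAPWAP_OVERHEAD_BYTES / (frame_bytes + CAPWAP_OVERHEAD_BYTES)
```
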

CAPWAP control traffic should be kept with its CS6 QoS tag. Nevertheless, you may face customers worried about the impact of

CAPWAP traffic on the network, and who want to re-mark CAPWAP control with a lower QoS value. Although this is unnecessary

in most networks and not recommended, Example 1-5 shows a way to re-mark and rate-limit CAPWAP control traffic based on its

destination port. (The controller management interface IP address is 10.10.10.10 in this example.)

Example 1-5

Policy to Re-Mark and Rate-Limit CAPWAP Control Traffic

R1(config)# access-list 110 permit udp any host 10.10.10.10 eq 5246

R1(config)# class-map match-all CAPWAPCS6

R1(config-cmap)# match access-group 110

R1(config-cmap)# match dscp cs6

R1(config-cmap)# exit

R1(config)# policy-map CAPWAPCS6

R1(config-pmap)# class CAPWAPCS6

R1(config-pmap-c)# set dscp cs3

R1(config-pmap-c)# police 8000 conform-action transmit exceed-action set-dscp-transmit 24

R1(config-pmap-c)# exit

R1(config-pmap)# exit

R1(config)# interface FastEthernet 0

R1(config-if)# service-policy output CAPWAPCS6

R1(config-if)# end

R1#
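The police 8000 line in Example 1-5 behaves like a single-rate token bucket, refilled at 8000 bps (1000 bytes per second). This Python sketch models that behavior under assumptions. The class name is hypothetical. The burst size of 1500 bytes is a placeholder, because the example relies on the platform default burst. Each packet is classified as conform or exceed when it arrives. In Example 1-5, a conforming packet is transmitted (already re-marked CS3 by set dscp cs3), and an exceeding packet is re-marked DSCP 24 and transmitted.

```python
from dataclasses import dataclass, field


@dataclass
class SingleRatePolicer:
    """Token-bucket policer with one rate (CIR), one burst (Bc), and two results."""
    cir_bps: int                                   # committed rate, as in "police 8000"
    bc_bytes: int = 1500                           # burst size: assumed, not from Example 1-5
    tokens: float = field(init=False, default=0.0)
    last_t: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        self.tokens = float(self.bc_bytes)         # the bucket starts full

    def offer(self, t: float, size_bytes: int) -> str:
        """Classify a packet arriving at time t (in seconds) as conform or exceed."""
        refill = (t - self.last_t) * self.cir_bps / 8   # CIR converted to bytes per second
        self.tokens = min(float(self.bc_bytes), self.tokens + refill)
        self.last_t = t
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes              # conforming packets consume tokens
            return "conform"
        return "exceed"                            # exceeding packets leave the bucket as is


# Actions configured in Example 1-5 for each result
ACTIONS = {"conform": "transmit (already re-marked CS3 by set dscp cs3)",
           "exceed": "set-dscp-transmit 24"}
```

Two 1000-byte packets arriving together conform and then exceed. One second later, the bucket has refilled and the next 1000-byte packet conforms again.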


Configuring QoS on Controllers

You can define the maximum QoS level expected for a WLAN from the WLAN QoS configuration tab. You can choose Platinum,

Gold, Silver, or Bronze for the WLAN. In the same tab, you can enable or disable WMM. WMM is allowed by default, which means

that the AP uses WMM for that WLAN (QoS information in the frame headers, QBSS IE in the beacons, TSPEC used in CAC).

WMM and non-WMM stations are allowed to join the cell. You can disable WMM, or change it to mandatory (so that non-WMM

stations can no longer associate).

On the same page, you can configure QoS parameters for the 7920 phone. The 7920 is an old 802.11b phone that does not support

WMM. Cisco developed a proprietary QBSS IE to help this phone roam (called Cisco pre-standard draft 6 QBSS IE, sometimes

called CCX QBSS IE v1, and available in the WLAN QoS configuration tab as 7920 Client CAC), before the 802.11e amendment

was released. This information element was located in AP beacons exactly where the 802.11e QBSS IE is located today. Therefore,

you cannot enable 7920 Client CAC if you enable WMM (which activates the QBSS IE in the AP beacons). When 802.11e was

published, Cisco moved the proprietary QBSS IE deeper in the frame and updated the 7920 phone firmware (2.01 and later) to make

the phone aware of the new location, allowing both the Cisco proprietary QBSS and the 802.11e QBSS to coexist on the same AP.

This new mode is called 7920 AP CAC in the controller interface (CCX QBSS IE v2 in frame captures), and is compatible with

WMM. Enable 7920 AP CAC if you have 7920 phones, but only on the 2.4-GHz band. (The 7920 is 802.11b only.)

After defining the QoS level for the WLAN, you can navigate to Wireless > QoS > Profiles, click each profile (Platinum, Gold,

Silver, and Bronze), and activate the 802.1p mapping for each profile. Leave the default mapping (6, 5, 3, and 1, respectively),

knowing that this IEEE value will automatically be translated into the Cisco-recommended mapping (5, 4, 0, and 1, respectively).

From the same page, you can set a maximum bandwidth per user for the WLANs that use this QoS profile. You can set a maximum bandwidth value for TCP packets (average data rate and burst data rate) or UDP packets (average real-time rate and burst real-time rate). Figure 1-4 shows the main QoS items you need to configure on a controller.


Figure 1-4 QoS-Related Items on a Controller


Your next stop can be on the Wireless > 802.11a/n | 802.11b/g/n > Media page, where you can configure wireless CAC for voice or

video, as shown in Figure 1-4. From the Wireless > 802.11a/n | 802.11b/g/n > EDCA parameters page, you can also refine the way

WMM allocates resources to QoS stations. The default mode, WMM, suits general deployments (data, or a large proportion of

data along with some voice). You can change the CWMin, CWMax, AIFS, and TXOPs allocated to each AC by changing the EDCA

Profile to Voice Optimized (most resources are allocated to voice, and few resources to other ACs), Voice and Video Optimized,

or SpectraLink Voice Priority. The AP prioritizes frames containing the SpectraLink Radio Protocol, protocol 119, because some

SpectraLink phones are not WMM. See Chapter 3, “VoWLAN Implementation,” for more details about these pages.

As part of your QoS configuration, you can also control the bandwidth allocated to users on Webauth WLANs. This is accomplished

by first creating a QoS role from Wireless > QoS > Roles. Create a new role, click Apply, and then click the role name to edit its properties and set a bandwidth limit for users with that role. You can set a maximum bandwidth value for TCP packets (average data rate and burst data rate) or UDP packets (average real-time rate and burst real-time rate). Then, when creating local users on the controller, from Security > AAA > Local Net Users, you can check the Guest User Role check box and select the QoS role you created. The user bandwidth will be limited as defined in the QoS role. Notice that this function is available only for users of Webauth WLANs. Figure 1-5 shows the WebAuth user QoS profile configuration on a controller.


Figure 1-5 WebAuth User QoS Profile Configuration on a Controller


Notice that you can configure all QoS items from Cisco Wireless Control System (WCS) through controller templates.

Configuring QoS on Autonomous APs

QoS on autonomous APs is slightly different from QoS on controllers. Some items are similar in concept to the controller parameters.

For example, from the Services > QoS > Access Categories tab, you can fine-tune the AP EDCA parameters. You can manually set

the CWMin, CWMax, TXOP, and AIFSN values for each AC. You can also choose the WFA default values (equivalent to the WMM EDCA configuration on the WLC) or an optimized voice mode (equivalent to the voice-optimized EDCA configuration on the WLC). When choosing the voice mode, the AP changes the EDCA parameters, but also starts sending probe responses every 10 ms (called gratuitous probe responses). This feature is useful when phones discover WLANs by passively listening to AP beacons. The standard beacon interval is 102.4 ms. By sending unsolicited probe responses every 10 ms, the AP expedites WLAN

discovery. The AP also starts prioritizing voice traffic, using an LLQ logic. Figure 1-6 shows the Access Categories tab.
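A rough comparison shows how much gratuitous probe responses speed up passive discovery. This Python sketch makes two simplifying assumptions. First, the phone scans three channels in turn and needs to hear only one beacon or probe response per channel. Second, frames arrive uniformly within their interval, so the average wait is half the interval. The channel count and function name are illustrative. Under these assumptions, the scan takes about 154 ms on average (307 ms worst case) with 102.4-ms beacons, versus 15 ms (30 ms worst case) with 10-ms probe responses.

```python
TU_US = 1024                                  # one 802.11 time unit, in microseconds
BEACON_INTERVAL_MS = 100 * TU_US / 1000       # default 100 TU = 102.4 ms
GRATUITOUS_PROBE_MS = 10                      # voice mode on the autonomous AP


def passive_scan_wait_ms(interval_ms: float, channels: int) -> tuple:
    """(average, worst case) wait to hear one frame on each channel, scanned in turn."""
    worst = channels * interval_ms            # just missed the frame on every channel
    return worst / 2, worst                   # uniform arrival: half the interval on average


if __name__ == "__main__":
    for label, interval in (("beacons", BEACON_INTERVAL_MS),
                            ("gratuitous probes", GRATUITOUS_PROBE_MS)):
        avg, worst = passive_scan_wait_ms(interval, channels=3)
        print(f"{label}: about {avg:.1f} ms on average, {worst:.1f} ms worst case")
```
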


Figure 1-6 Autonomous AP Access Categories Tab


At the bottom of the same page, you can configure CAC to allow voice traffic to use up to a configurable maximum percentage of

the AP radio bandwidth. New calls are not allowed if their addition makes the overall voice traffic in the cell exceed the configurable

value. A percentage of that value is kept for roaming users (so that users roaming to the cell do not get disconnected even if the

maximum allowed bandwidth is already in use by local calls). Notice that roaming bandwidth is taken from the CAC maximum

bandwidth (for example, if CAC Max Channel Capacity is set to 75% [default value] and Roam Channel Capacity to 6% [default value], the actual bandwidth available for local calls is 69% [75 - 6]).
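The admission rule described above can be sketched as follows. The sketch assumes that each call consumes a known percentage of the channel capacity. How the AP estimates that per-call figure is outside its scope, and the function name is hypothetical. Local calls are limited to the CAC maximum minus the roaming reserve, while roaming calls may also use the reserve.

```python
def cac_decision(in_use_pct: float, call_pct: float, roaming: bool,
                 max_pct: float = 75.0, roam_pct: float = 6.0) -> bool:
    """Admit a call if total voice load stays within the applicable ceiling.

    Local calls may only use max_pct - roam_pct (69% with the defaults);
    roaming calls may also use the roam_pct reserve, up to max_pct.
    """
    ceiling = max_pct if roaming else max_pct - roam_pct
    return in_use_pct + call_pct <= ceiling
```

With 66% of the channel already carrying voice, a new 5% local call is refused (71% > 69%), but the same call roaming in from another cell is accepted (71% <= 75%).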

You can further configure this LLQ from Services > QoS > Streams, as shown in Figure 1-7. Bandwidth allocation for LLQ on

an IOS AP (which is a Layer 2 device) differs slightly from LLQ on a router. On the autonomous AP, LLQ means that as soon as a

packet arrives in the AP buffer for the queue set for LLQ, the packet is sent regardless of what other packets may be present in the

other queues. In that sense, it is a form of PQ. Bandwidth limitation for that queue is achieved by combining two other parameters:

■

Retries: Packets sent from the AP radio may fail (collisions). You can define for the LLQ queue how many times the AP

should try resending a packet (default is three attempts) before dropping it.

■

Rate: Lower down in the page, you can configure what rates should be used for LLQ packets. You can define Nominal rates

(rates the AP should try first), Non-Nominal rates (rates the AP should try if none of the nominal rates succeeds), and Disable

rates (rates that should not be used for LLQ).
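The LLQ behavior on the autonomous AP can be sketched as a strict-priority loop with a per-packet attempt budget. This is a simplified model under stated assumptions, not the IOS implementation. The LLQ queue is always drained first. Each LLQ packet gets up to three transmission attempts (the default retry setting above) before it is dropped, and other traffic gets a single attempt. Rate selection (nominal, non-nominal, and disabled rates) is not modeled. The function name and the try_send callback are hypothetical.

```python
from collections import deque
from typing import Callable


def drain(llq: deque, others: deque, try_send: Callable[[str], bool],
          llq_attempts: int = 3) -> list:
    """Serve the LLQ queue before any other queue; log each packet's outcome."""
    log = []
    while llq or others:
        if llq:                               # strict priority: LLQ always goes first
            pkt = llq.popleft()
            # any() stops at the first successful attempt
            sent = any(try_send(pkt) for _ in range(llq_attempts))
        else:
            pkt = others.popleft()
            sent = try_send(pkt)              # simplification: one attempt only
        log.append((pkt, "sent" if sent else "dropped"))
    return log
```

A voice packet that keeps failing is dropped after its third attempt instead of delaying the rest of the voice queue indefinitely.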


Figure 1-7 Autonomous AP LLQ Configuration


From the Services > QoS > Advanced page, shown in Figure 1-8, you can configure parameters that are close to concepts present on

controllers, but configured with a different logic. You can enable or disable WMM for each radio. (On WLCs, WMM is configured at

the WLAN level.) You can also choose to apply the AVVID Priority mapping, which translates 802.11e UP 6 into 802.1p CoS 5. This

parameter is disabled by default (which means that 802.11e UP 6 translates into 802.1p CoS 6 by default). You need to enable this

parameter for the autonomous AP to behave like the controller and CAPWAP AP, translating the Voice UP 6 into the IETF/Cisco-recommended wired QoS level of CoS 5. Notice that this translation is valid only for voice traffic (not for the other ACs).

From the same page, you can configure the AP to prioritize voice traffic, regardless of any other QoS configuration on the AP, by

checking the QoS Element for Wireless Phones check box. Enabling this feature makes the AP automatically prioritize voice traffic over its radio interfaces in a PQ logic (again, regardless of any other QoS configuration on the AP). The AP also starts adding a QBSS IE to its beacons, the Cisco QBSS version 2. If you check the Dot11e check box, the AP also includes the 802.11e QBSS IE. Figure 1-8 shows the QoS > Advanced page.


Figure 1-8 Autonomous AP QoS > Advanced Page


You can configure some parameters on autonomous APs that you cannot configure on controllers. For example, you can configure a

QoS policy to mark/prioritize traffic (just as you would on a router with the MQC, which is not possible on a controller). You do so

from Services > QoS > QoS Policies. From that page, you can define a new policy, providing a name and selecting (from drop-down

lists) the type of traffic that should be targeted (based on IP Precedence, DSCP value, or by targeting protocol 119). Lower down on

the page, you can decide which 802.11e user priority to apply to this traffic (useful for traffic sent out of the AP wireless interfaces).

You can also configure a traffic bandwidth limitation. (Make sure to take into account the interface bandwidth before configuring

such limitation, because the policy allows you to set a limit of up to 2 Gbps, which is too high for any radio or wired interface.) At the

bottom of the page, you can apply the policy to the wired or wireless interface (or to VLANs if VLANs are configured on the AP), in

the incoming or outgoing direction. You can apply the same policy at several locations. When checking the resulting policy from the

CLI, you see the classic class-map, policy-map, and service-policy sequence explained earlier in this chapter.

Configuring QoS on Routers and Switches

On routers, you can configure QoS using the MQC as explained earlier in this chapter. QoS configuration differs slightly on switches

because switch buffers are built differently from router buffers (and switches aim at moving frames between interfaces at hardware

speed). On multilayer switches (Layer 2 and Layer 3 switches that can switch frames and route packets), start by enabling QoS

support with the mls qos global configuration command. On each interface, decide whether incoming QoS tags should be trusted.

For ports to CAPWAP APs (all modes), trust DSCP. This is true even for H-REAPs on trunks, because H-REAPs always get their

management interface on the native (untagged) VLAN and use this untagged VLAN to communicate with the controller (therefore,

CAPWAP control has DSCP tag CS6, but no 802.1p tag). For ports to controllers, trust CoS (unless you do not want to cap your customers' requested QoS levels, which is uncommon).

Switch buffers commonly have four hardware egress queues. You can configure these queues in shaped mode with the command srr-queue bandwidth shape: each queue is limited to a fixed fraction of the interface bandwidth, even when the link is otherwise idle, and excess traffic waits in the queue (and is dropped when the queue fills up). Shape weights are inverse ratios: a weight of w limits the queue to 1/w of the interface bandwidth. (For example, srr-queue bandwidth shape 4 4 4 4 allocates 25% of the interface bandwidth to each queue.) You can also use shared mode, just like on routers, with the command srr-queue bandwidth share: each queue is guaranteed a proportion of the interface bandwidth (its weight divided by the sum of the weights), and can use bandwidth that the other queues leave unused. You can use shape and share together. The shape command takes precedence; share is ignored, except for queues whose shape weight is 0. In that case, share is used for that queue.


You can also give queue 1, which by default matches voice traffic, priority treatment with the command priority-queue out. When you combine these three commands (shape, share, priority-queue), the result is effectively LLQ for voice. Example 1-6 is a typical configuration for a port to a controller. Notice that the standard spanning-tree elements were added. (The port is a trunk, only the VLANs needed by the controller are allowed, PortFast is enabled to bring the port up without waiting for spanning tree to detect whether a switch is connected, and BPDU guard is added to disable the port if a switch is connected in place of the controller.)

Example 1-6

Switch Configuration for Controller Ports

switch(config)# mls qos

switch(config)# interface gigabitethernet 0/1

switch(config-if)# switchport trunk encapsulation dot1q

switch(config-if)# switchport mode trunk

switch(config-if)# switchport trunk allowed vlan 10,20,30,100

switch(config-if)# spanning-tree portfast trunk

switch(config-if)# spanning-tree bpduguard enable

switch(config-if)# mls qos trust cos

switch(config-if)# srr-queue bandwidth share 10 10 60 20

switch(config-if)# srr-queue bandwidth shape 10 0 0 0

switch(config-if)# priority-queue out

switch(config-if)# end
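The following Python sketch shows how the queue settings in Example 1-6 combine. It assumes Catalyst 3560/3750-style SRR semantics, so verify them on your platform. A shape weight w > 0 limits the queue to 1/w of the port, a shape weight of 0 leaves the queue in shared mode, and priority-queue out turns queue 1 into the expedite queue, so its SRR weights are ignored. For shared queues, the sketch reports only their relative share among themselves. The function name is hypothetical.

```python
def srr_allocation(shape: list[int], share: list[int], priority_queue: bool) -> dict:
    """Describe how each of the four egress queues is served (simplified SRR model)."""
    result = {}
    shared = []
    for q in range(1, 5):
        if priority_queue and q == 1:
            result[q] = ("priority", None)          # expedite queue, weights ignored
        elif shape[q - 1] > 0:
            result[q] = ("shaped", 1 / shape[q - 1])  # inverse weight = cap on the port
        else:
            shared.append(q)
    total = sum(share[q - 1] for q in shared)
    for q in shared:
        # Relative share among the shared-mode queues only
        result[q] = ("shared", share[q - 1] / total)
    return result
```

Applied to Example 1-6 (shape 10 0 0 0, share 10 10 60 20, priority-queue out), queue 1 is served first as the priority queue, and queues 2, 3, and 4 split the remaining bandwidth in a 10:60:20 ratio. Without priority-queue out, queue 1 would instead be shaped to 1/10 of the port.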

Example 1-7 is a typical configuration for a port to a CAPWAP AP.

Example 1-7

Switch Configuration for AP Ports

switch(config)# mls qos

switch(config)# interface gigabitethernet 0/2

switch(config-if)# switchport access vlan 50

switch(config-if)# switchport mode access

switch(config-if)# spanning-tree portfast

switch(config-if)# spanning-tree bpduguard enable

switch(config-if)# mls qos trust dscp

switch(config-if)# srr-queue bandwidth share 10 10 60 20

switch(config-if)# srr-queue bandwidth shape 10 0 0 0

switch(config-if)# priority-queue out

switch(config-if)# end


The IOS AP may be on an access port or a trunk. For an IOS AP on an access port, you need to trust DSCP because there is no CoS.

For an IOS AP on a trunk port, you can trust DSCP, just like for other APs. If all WLANs are mapped to VLANs (that is, all WLAN

traffic is tagged with 802.1Q/802.1p when sent to the wired interface), you can also trust CoS, which may be useful if the switch is

only Layer 2 capable. You can check the QoS configuration on the switch with the show mls qos interface command.

Other important QoS elements configured on switches are the CoS-to-DSCP and the DSCP-to-CoS maps. When you trust CoS, the

Layer 3 switch checks the incoming frame DSCP value and rewrites this value if it does not match the expected DSCP value for the

trusted CoS. The same logic applies in reverse when you trust DSCP (CoS is rewritten). Therefore, whenever you trust CoS or DSCP,

you should verify the CoS-to-DSCP map and the DSCP-to-CoS map with the show mls qos maps [cos-dscp | dscp-cos] command.

If the default values do not match your requirements (for example, CoS 5 is often mapped by default to DSCP 40 instead of the recommended DSCP 46 for voice traffic), you can change the mapping. To change the DSCP value you want for a trusted CoS value, use the mls qos map cos-dscp command. For this command, you provide eight DSCP values, which match the eight trusted CoS values (from 0 to 7) in order. To change the CoS value you want for a trusted DSCP value, use the mls qos map dscp-cos command. For this command, you provide the DSCP value you trust, add the keyword to, and type the CoS value you want for that trusted DSCP value.

Example 1-8 shows a CoS-to-DSCP and DSCP-to-CoS map change.

Example 1-8 CoS-to-DSCP and DSCP-to-CoS Changes

switch(config)# mls qos

switch(config)# mls qos map cos-dscp 0 8 16 26 32 46 48 56

switch(config)# mls qos map dscp-cos 26 to 3

switch(config)# mls qos map dscp-cos 46 to 5

switch(config)# end
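The effect of Example 1-8 can be sketched in Python by applying the same map commands to in-memory copies of the maps. This is a hypothetical parser, not a Cisco tool: it assumes default maps of CoS n to DSCP 8n and DSCP d to CoS d // 8, understands only the two map command forms used in the example, and leaves out the global mls qos and end lines because they do not change a map.

```python
# Hypothetical sketch: apply "mls qos map" commands to copies of the maps.

def apply_map_commands(commands):
    cos_to_dscp = [8 * cos for cos in range(8)]       # assumed default map
    dscp_to_cos = [dscp // 8 for dscp in range(64)]   # assumed default map
    for line in commands:
        words = line.split()
        if words[:4] == ["mls", "qos", "map", "cos-dscp"]:
            values = [int(w) for w in words[4:]]
            if len(values) != 8:
                raise ValueError("cos-dscp needs exactly eight DSCP values")
            cos_to_dscp = values  # value at index n is the DSCP for CoS n
        elif words[:4] == ["mls", "qos", "map", "dscp-cos"] and "to" in words:
            split = words.index("to")
            cos = int(words[split + 1])
            for dscp in words[4:split]:  # one or more trusted DSCP values
                dscp_to_cos[int(dscp)] = cos
        else:
            raise ValueError(f"unsupported command: {line!r}")
    return cos_to_dscp, dscp_to_cos


example_1_8 = [
    "mls qos map cos-dscp 0 8 16 26 32 46 48 56",
    "mls qos map dscp-cos 26 to 3",
    "mls qos map dscp-cos 46 to 5",
]
cos_to_dscp, dscp_to_cos = apply_map_commands(example_1_8)
print(cos_to_dscp[5], cos_to_dscp[3])    # 46 26
print(dscp_to_cos[46], dscp_to_cos[26])  # 5 3
```

After these commands, a port trusting CoS 5 writes DSCP 46 (EF) instead of the default DSCP 40.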

Be aware that there are fewer possible CoS values (8) than possible DSCP values (64). Therefore, when you trust DSCP, several DSCP values translate to the same CoS value. When you trust CoS, each CoS value translates to a single default DSCP value, which again limits the range of values you will see in your network. Table 1-7 shows the maximum values applied when frames transit through controllers and APs. When an incoming packet exceeds the maximum QoS level defined for the target WLAN (listed in the first column), the AP and WLC cap the outer QoS header as shown. The AP caps the outer DSCP marking, and the WLC caps the outer CoS marking.
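The loss of detail described above can be checked with a few lines of Python. This sketch assumes default maps of CoS n to DSCP 8n and DSCP d to CoS d // 8 rather than custom maps; it groups all 64 DSCP values by the CoS they are rewritten to, then follows one value through a DSCP-trusting hop and a CoS-trusting hop.

```python
from collections import defaultdict

# Assumed default maps (see lead-in).
COS_TO_DSCP = [8 * cos for cos in range(8)]
DSCP_TO_COS = [dscp // 8 for dscp in range(64)]

# Trusting DSCP: group the 64 DSCP values by the CoS they are rewritten to.
groups = defaultdict(list)
for dscp in range(64):
    groups[DSCP_TO_COS[dscp]].append(dscp)
print({cos: len(values) for cos, values in sorted(groups.items())})
# {0: 8, 1: 8, 2: 8, 3: 8, 4: 8, 5: 8, 6: 8, 7: 8}

# Round trip: AF 21 (DSCP 18) -> CoS 2 -> DSCP 16 (CS 2) at a CoS-trusting hop.
cos = DSCP_TO_COS[18]
print(cos, COS_TO_DSCP[cos])  # 2 16

# Only eight distinct DSCP values can come out of a CoS-trusting port.
print(sorted(set(COS_TO_DSCP)))  # [0, 8, 16, 24, 32, 40, 48, 56]
```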


Table 1-7 Capped Values for Each AC

AC          Max DSCP Value on APs    Max CoS Value on WLCs
Platinum    EF (DSCP 46)             5
Gold        AF 41 (DSCP 34)          4
Silver      AF 21 (DSCP 18)          3
Bronze      CS 1 (DSCP 8)            1
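The capping behavior in Table 1-7 amounts to a ceiling per WLAN QoS level. The following Python sketch is a simplification, not the AP or WLC algorithm: it assumes that exceeding the cap can be judged by comparing DSCP or CoS values numerically, it does not model how the AP derives the requested DSCP from the client's 802.11e UP, and the names CAPS, cap_outer_dscp, and cap_outer_cos are hypothetical.

```python
# Cap values from Table 1-7: WLAN QoS level -> (max outer DSCP at the AP,
# max outer CoS at the WLC). Names are hypothetical.
CAPS = {
    "platinum": (46, 5),  # EF
    "gold": (34, 4),      # AF 41
    "silver": (18, 3),    # AF 21
    "bronze": (8, 1),     # CS 1
}


def cap_outer_dscp(wlan_qos, requested_dscp):
    """Outer CAPWAP DSCP the AP uses, capped by the WLAN QoS level.

    Simplification: DSCP values are compared numerically.
    """
    return min(requested_dscp, CAPS[wlan_qos][0])


def cap_outer_cos(wlan_qos, requested_cos):
    """Outer CoS the WLC uses, capped by the WLAN QoS level."""
    return min(requested_cos, CAPS[wlan_qos][1])


print(cap_outer_dscp("silver", 46))  # 18: EF traffic is capped to AF 21
print(cap_outer_dscp("gold", 18))    # 18: below the cap, left unchanged
print(cap_outer_cos("silver", 5))    # 3
```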

When these capped packets reach the switch, the untrusted value is rewritten. Table 1-8 shows standard equivalents for DSCP-to-CoS and CoS-to-DSCP re-marking. In each entry, the first value is the trusted value and the second is the re-marked value. For example, the entry EF (DSCP 46) -> 5 in the first column shows that when DSCP is trusted and the incoming packet carries a DSCP value of 46 (EF), the packet's Layer 2 CoS value is rewritten as CoS 5, regardless of the original CoS value.

Table 1-8 Common DSCP-to-CoS and CoS-to-DSCP Re-Marking on Switches

When DSCP Is Trusted               When CoS Is Trusted
(Trusted DSCP -> Re-Marked CoS)    (Trusted CoS -> Re-Marked DSCP)
EF (DSCP 46) -> 5                  5 -> EF (DSCP 46)
AF 41 (DSCP 34) -> 4               4 -> AF 41 (DSCP 34)
AF 21 (DSCP 18) -> 2               3 -> AF 31 (DSCP 26)
CS 1 (DSCP 8) -> 1                 1 -> AF 11 (DSCP 10)

From these tables, you can see that a frame coming from the wireless space with UP 6 in a WLAN whose QoS level is set to Silver will have an AF 21 DSCP value (on the outer CAPWAP packet) when leaving the AP, which a switch trusting DSCP will translate as 802.1p 2 on a trunk. The same type of packet coming from the wired side through the controller will get 802.1p 3, which a switch trusting CoS will translate as AF 31. This means that the same AC may not get the same outer marking, depending on the direction of the packet.
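The asymmetry can be reproduced by chaining the two tables. This Python sketch assumes that the client's UP 6 would otherwise be carried as EF (DSCP 46), that the wired-side packet arrives at the WLC with CoS 5, that the switch port facing the AP trusts DSCP, and that the port facing the WLC trusts CoS. The function names are hypothetical, and only the Table 1-8 rows listed are supported.

```python
# Values from Tables 1-7 and 1-8 for a Silver WLAN.
SILVER_AP_MAX_DSCP = 18   # AF 21, outer DSCP cap at the AP
SILVER_WLC_MAX_COS = 3    # outer CoS cap at the WLC

# Switch re-marking rows from Table 1-8 (only these rows are supported).
DSCP_TRUSTED_TO_COS = {46: 5, 34: 4, 18: 2, 8: 1}
COS_TRUSTED_TO_DSCP = {5: 46, 4: 34, 3: 26, 1: 10}


def upstream(requested_dscp):
    """Client -> AP -> switch port trusting DSCP: returns (DSCP, CoS)."""
    dscp = min(requested_dscp, SILVER_AP_MAX_DSCP)
    return dscp, DSCP_TRUSTED_TO_COS[dscp]


def downstream(requested_cos):
    """Wired side -> WLC -> switch port trusting CoS: returns (DSCP, CoS)."""
    cos = min(requested_cos, SILVER_WLC_MAX_COS)
    return COS_TRUSTED_TO_DSCP[cos], cos


print(upstream(46))   # (18, 2): AF 21 and 802.1p 2
print(downstream(5))  # (26, 3): AF 31 and 802.1p 3
```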


Chapter 2

VoWLAN Architecture

Quality of service (QoS) is a key element in a Voice over WLAN (VoWLAN) architecture. You also need to understand how VoWLAN networks are built, the types and volumes of frames that VoWLAN devices exchange, and the proper way to design VoWLAN cells. Design is key knowledge expected from a CCNP Wireless professional. Too many VoWLAN networks were built by simply adding VoWLAN phones to a wireless layout deployed with data traffic in mind. You need to clearly understand what makes an effective WLAN for voice support. After mastering the design and deployment phase, you will be ready to configure your wireless devices and make your VoWLAN a success.

Voice Architecture

Early analog telephone systems were quite simple. One cable pair was connected to the phone speaker, and another pair to the microphone. Both pairs ran from the end-user location to a nearby board where an operator managed calls. The user pressed a lever to make a light blink on the operator board. The operator connected a speaker to the user's microphone line connector on the board, and a microphone to the user's speaker line, which allowed the operator and the user to talk. When the user named the destination to be reached, the operator connected the user's microphone line connector to the destination's speaker connector on the same board, and the user's speaker line connector to the destination's microphone connector. If the destination was remote, the operator connected the user line to another operator closer to the destination, and the chain repeated until the user was connected to the final destination.

During the 20th century, automated central office (CO) switches appeared and replaced operators, performing the same connection functions with mechanical switches instead of human action. As communications developed, more complex features were added to determine the best route to a destination, manage the availability and load of the links between CO switches (called trunks), and so on. Trunks became digital so that more traffic could be sent over the same physical circuit. (The digital-to-analog conversion was done in the CO switch; the end-user system stayed analog.)


Corporations started installing smaller versions of CO switches (called private branch exchanges [PBX]) inside their facilities to manage internal calls (and to avoid paying a fee for these calls). PBXs often gained additional functions specific to enterprise needs (call parking, voicemail, music on hold, and so forth).

Several drivers pushed the transition to VoIP. A major concern was cost: dedicating an entire circuit to each call made telephony very expensive. VoIP allows operators to use a single link to carry several calls.

VoIP also offers many advanced features that are more complex to implement with analog systems, such as the following:

■ Advanced routing: The ability to choose the best path to any destination based on link speed, cost, or any other criterion.

■ Unified messaging: Messages can be managed from IP-based applications that can be reached from anywhere in the world.

■ Application integration: For example, the ability to receive information on the phone screen through XML.

Because most corporations already have an IP network, VoIP is often seen as just adding a new function to an existing infrastructure, not as a dramatic change. The phone itself is digital (and is called an IP phone), and specialized servers (called application servers) can be added to provide additional features, such as video telephony or conferencing. The IP phone connects, using IP-based protocols, to a communications manager platform that provides the phone with an extension number and performs call management functions (call admission or rejection based on destination or user profile, call setup and routing to the destination, ongoing call monitoring, and call termination). The VoIP world also includes specialized devices that do not exist in analog telephony. For example, the gatekeeper (or call agent) provides call admission control (CAC), bandwidth control, and management. The gateway translates between protocols (for example, between VoIP and the standard public switched telephone network [PSTN]). The multipoint control unit (MCU) provides real-time connectivity for participants in conference calls. The communications manager platform can perform one or several of these functions. The communications manager can be centralized, which allows for easier management but also creates a single point of failure. In case of failure, a simplified local backup system using Survivable Remote Site Telephony (SRST) can provide minimum functions to maintain part of the VoIP system. The communications manager can also be distributed across several locations, which improves resilience to failures but also increases system complexity.


Protocols are needed to communicate between VoIP systems. Of the many protocols, you need to know four of them:

■ H.323 is a suite of protocols developed by the ITU for audio and video communications. It is often described as an “umbrella of protocols” because it contains several subprotocols that handle particular functions (for example, H.225 for call signaling and Registration, Admission, and Status [RAS], to establish, maintain, and manage calls; or H.245 to negotiate each endpoint's capabilities and channels). H.323 is the most widely used protocol in the VoIP world.

■ Media Gateway Control Protocol (MGCP) is an example of a master-slave protocol, in which the endpoint (or media gateway [MG]) is entirely controlled by the MG controller (MGC), down to the tone to play when the user presses a key on the phone.

■ Session Initiation Protocol (SIP), developed by the IETF, is (in contrast) very distributed. Each endpoint (user agent [UA]) uses technologies from the Internet (DNS, MIME, HTTP, and so on) to establish and maintain sessions. SIP is so flexible that