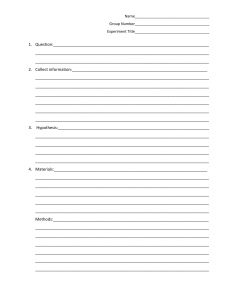

Business research method
Human resource management (Graphic Era Deemed to be University)

Important Questions with Answers
Research Methodology (2810006)

1. Prepare a research plan for the marketing manager of XYZ Automobile, who wants to know the satisfaction level of customers across India who recently purchased a newly introduced car.

Answer: Format of the Research Proposal:
1. Problem Definition
2. Relevant Review of Literature
3. Research Objectives, Research Hypotheses
4. Research Methodology:
- Sources of Data Collection (Primary/Secondary)
- Research Design (Exploratory/Descriptive/Causal)
- Sampling Technique (Probability/Non-Probability Sampling)
- Sample Size
- Sample Units (Customers, Employees, Retailers)
- Contact Method for Data Collection (Personal Interview/Mail/Electronic/Telephonic)
- Research Instrument for Data Collection (Questionnaire/Observation Form/CCTV Camera)
- Sampling Area (Rajkot/Ahmedabad)
5. Scope of the Study
6. Limitations of the Study
7. Proposed Chapter Scheme of the Research Report
8. Time Schedule
9. Rationale/Significance of the Study
10. Bibliography/References
11. Appendices (Questionnaire)

2. What is a Research Problem? State the main issues which should receive the attention of the researcher. Give examples to illustrate your answer.

Answer: Problem definition is the first and most crucial step in the research process.
- Its main function is to decide what you want to find out about.
- The way you formulate a problem determines almost every step that follows.
Sources of research problems
Research in social sciences revolves around four Ps:
- People: a group of individuals
- Problems: examine the existence of certain issues or problems relating to their lives; ascertain the attitude of a group of people towards an issue
- Programs: evaluate the effectiveness of an intervention
- Phenomena: establish the existence of a regularity

Considerations in selecting a research problem:
These help to ensure that your study will remain manageable and that you will remain motivated.
1. Interest: A research endeavor is usually time consuming and involves hard work and possibly unforeseen problems. Select a topic of great interest to you in order to sustain the required motivation.
2. Magnitude: It is extremely important to select a topic that you can manage within the time and resources at your disposal. Narrow the topic down to something manageable, specific and clear.
3. Measurement of concepts: Make sure that you are clear about the indicators and measurement of concepts (if used) in your study.
4. Level of expertise: Make sure that you have an adequate level of expertise for the task you are proposing, since you need to do the work yourself.
5. Relevance: Ensure that your study adds to the existing body of knowledge, bridges current gaps and is useful in policy formulation. This will help you to sustain interest in the study.
6. Availability of data: Before finalizing the topic, make sure that data are available.
7. Ethical issues: How ethical issues can affect the study population, and how ethical problems can be overcome, should be thoroughly examined at the problem formulation stage.

3. What is Research? Explain with a diagram the different steps of a research process.
Answer: Research is the systematic and objective identification, collection, analysis, dissemination, and use of information for the purpose of improving decisions related to the identification and solution of problems and opportunities.
The process of research has the following steps:

4. What is hypothesis? Explain characteristics of good hypothesis and different types of hypotheses.

Answer: Hypothesis:
a. A statement which is accepted temporarily as true and on the basis of which the research work proceeds.
b. A tentative assumption drawn from knowledge and theory, which is used as a guide in the investigation of other facts and theories that are yet unknown.
c. It states what the researchers are looking for. Hence, a hypothesis looks forward.
d. It is a tentative supposition, provisional guess or generalization which seems to explain the situation under observation.

Characteristics of a Good Hypothesis
A good hypothesis is one that fulfils its intended purposes and is up to the mark. Some important points are:
a. A good hypothesis should be stated in the simplest possible terms. This is also called the principle of economy (parsimony). It should be clear and precise.
b. A good hypothesis is in agreement with the observed facts. It should be based on original data derived directly.
c. It should be so designed that its test will provide an answer to the original problem which forms the primary purpose of the investigation.
d. A hypothesis should state the relationship between variables if it happens to be a relational hypothesis.

Different Forms of Hypothesis:
Depending on purpose and use, hypotheses are divided into various types. Some that appear frequently in the literature are:
a. Null Hypothesis: This form of hypothesis states that there is no significant difference between the variables.
This type of hypothesis is generally stated in mathematical form and is used in statistical tests of hypotheses. Here, two groups are tested under the assumption that they are equal. It is denoted by H0.
b. Alternative Hypothesis: The alternative hypothesis includes the possible values of the population parameter that are not included in the null hypothesis. It states that there is a difference between the procedures and is denoted by H1.

5. What is research design? Explain features, objectives and methods used in different research designs.

Answer: Research Design: A research design is a framework or blueprint for conducting the marketing research project. It details the procedures necessary for obtaining the information needed to structure or solve marketing research problems.

| | Exploratory Research | Descriptive Research | Causal Research |
| --- | --- | --- | --- |
| Objectives | Discovery of ideas and insights | Describe the market characteristics | Determine cause and effect relationships |
| Characteristics | Flexible, versatile; the front end of the research design | Marked by the prior formulation of specific hypotheses; preplanned and structured design | Manipulation of one or more independent variables; control of other mediating variables |
| Methods | Expert surveys, pilot surveys, secondary data, qualitative research | Secondary data, quantitative analysis, surveys, panels, observation | Experiments |

6. Explain various types of Descriptive Research Designs.

Answer:
1. Cross Sectional Design
2. Longitudinal Design

1. Cross Sectional Design: Involves the collection of information from any given sample of population elements only once. In single cross-sectional designs, there is only one sample of respondents and information is obtained from this sample only once.
In multiple cross-sectional designs, there are two or more samples of respondents, and information from each sample is obtained only once. Often, information from different samples is obtained at different times. Cohort analysis consists of a series of surveys conducted at appropriate time intervals, where the cohort serves as the basic unit of analysis. A cohort is a group of respondents who experience the same event within the same time interval.
2. Longitudinal Design: A fixed sample (or samples) of population elements is measured repeatedly on the same variables. A longitudinal design differs from a cross-sectional design in that the sample or samples remain the same over time.

7. Describe Qualitative vs. Quantitative Research.

Answer:

| | Qualitative Research | Quantitative Research |
| --- | --- | --- |
| Objectives | To gain a qualitative understanding of the underlying reasons and motivations | To quantify the data and generalize the results from the sample to the population of interest |
| Sample | Small number of non-representative cases | Large number of representative cases |
| Data Collection | Unstructured | Structured |
| Data Analysis | Non-statistical | Statistical |
| Outcome | Develop an initial understanding | Recommend a final course of action |

8. Suggest sources of secondary data.

Answer: Secondary data may be written or non-written.
Company/Organisation data (internal): Financial accounts; Sales data; Prices; Product development; Advertising expenditure; Purchase of supplies; Human resources records; Customer complaint logs
Company/Organisation data (external): Company information is available from a variety of sources, e.g.: Biz@advantage; www.whowhere.com; www.hoovers.com (12,000 companies, USA and others); Australian Stock Exchange (www.asx.com.au); AGSM Annual reports; Kompass; Dun & Bradstreet (www.dnb.com); Fortune 500
Possible documentary data?
Journals and books; Case study materials; Committee minutes; AIRC documentation; Hansard transcripts; Mailing list discussions; Web-site content; Advertising banners
Multiple source: geographically-based; time-series based
Censuses and surveys: national censuses: -usa.gov; www.hist.umn.edu/~rmccaa/IPUMSI

9. Explain Focused Group Discussions. What is the function of a focus group? Explain the advantages and disadvantages of focus groups.

Answer: The focus group is a panel of people (typically 6 to 10 participants), led by a trained moderator, who meet for 90 minutes to 2 hours. The facilitator or moderator uses group dynamics principles to focus or guide the group in an exchange of ideas, feelings, and experiences on a specific topic.
Focus groups are often unique in research due to the research sponsor's involvement in the process.
- Most facilities permit sponsors to observe the group in real time, drawing their own insights from the conversations and nonverbal signals observed.
- Many facilities also allow the client to supply the moderator with topics or questions generated by those observing in real time. (This option is generally not available in an individual depth interview, other group interviews, or survey research.)
Focus groups typically last about two hours. A mirrored window allows observation of the group without interfering with the group dynamics. Some facilities allow for product preparation and testing, as well as other creative exercises. As sessions become longer, activities are needed to bring out deeper feelings, knowledge, and motivations.
Functions of Focus Group:
- To generate ideas for product development
- To understand the consumer vocabulary
- To reveal the consumer's motivations, likes, dislikes and methods of use
- To validate quantitative research findings

Advantages of Focus Groups:
- Synergism: 1 + 1 = 3
- Snowballing: a comment from one participant gives ideas to others
- Stimulation
- Security: the individual is protected in a group
- Spontaneity: on-the-spot discussion, not pre-planned
- Specialization
- Scientific scrutiny
- Structure
- Speed

Disadvantages of Focus Groups:
- Misuse
- Misjudge
- Moderation
- Messy
- Misrepresentation

10. In business research, errors occur other than sampling error. What are the different non-sampling errors?

Answer:
- Non-sampling errors can be attributed to sources other than sampling, and they may be random or nonrandom. They include errors in problem definition, approach, scales, questionnaire design, interviewing methods, and data preparation and analysis. Non-sampling errors consist of non-response errors and response errors.
- Non-response error arises when some of the respondents included in the sample do not respond.
- Response error arises when respondents give inaccurate answers or their answers are misrecorded or misanalyzed.

Non-Sampling Error / Response Error / Researcher Error:
- Surrogate Information Error
- Measurement Error
- Population Definition Error
- Sampling Frame Error
- Data Analysis Error

Non-Sampling Error / Response Error / Interviewer Error:
- Respondent Selection Error
- Questioning Error
- Recording Error
- Cheating Error

Non-Sampling Error / Response Error / Respondent Error:
- Inability Error
- Unwillingness Error

11. Enlist the different methods of conducting a survey.

Answer: The survey approach is most suited for gathering descriptive information.
Structured surveys use formal lists of questions asked of all respondents in the same way. Unstructured surveys let the interviewer probe respondents and guide the interview according to their answers.

Survey research may be direct or indirect.
Direct approach: The researcher asks direct questions about behaviours and thoughts, e.g. "Why don't you eat at McDonald's?"
Indirect approach: The researcher might ask: "What kind of people eat at McDonald's?" From the response, the researcher may be able to discover why the consumer avoids McDonald's. It may suggest factors of which the consumer is not consciously aware.

Advantages:
- Can be used to collect many different kinds of information.
- Quick and low cost compared with the observation and experimental methods.

Limitations:
- Respondents may be reluctant to answer questions asked by unknown interviewers about things they consider private.
- Busy people may not want to take the time.
- Respondents may try to help by giving pleasant answers.
- Respondents may be unable to answer because they cannot remember or never gave a thought to what they do and why.
- Respondents may answer in order to look smart or well informed.

Mail Questionnaires:
Advantages:
- Can be used to collect large amounts of information at a low cost per respondent.
- Respondents may give more honest answers to personal questions on a mail questionnaire.
- No interviewer is involved to bias the respondent's answers.
- Convenient for respondents, who can answer when they have time.
- A good way to reach people who often travel.
Limitations:
- Not flexible.
- Takes longer to complete than a telephone or personal interview.
- Response rate is often very low.
- The researcher has no control over who answers.
Telephone Interviewing:
- A quick method.
- More flexible, as the interviewer can explain questions not understood by the respondent.
- Depending on the respondent's answers, the interviewer can skip some questions and probe more on others.
- Allows greater sample control.
- Response rate tends to be higher than for mail.
Drawbacks:
- Cost per respondent is higher.
- Some people may not want to discuss personal questions with an interviewer.
- The interviewer's manner of speaking may affect the respondent's answers.
- Different interviewers may interpret and record responses in a variety of ways.
- Under time pressure, data may be entered without actually interviewing.

Personal Interviewing:
It is very flexible and can be used to collect large amounts of information. Trained interviewers can hold the respondent's attention and are available to clarify difficult questions. They can guide interviews, explore issues, and probe as the situation requires. Personal interviews can be used with any type of questionnaire and can be conducted fairly quickly. Interviewers can also show actual products, advertisements and packages, and observe and record respondents' reactions and behaviour.
This takes two forms:
- Individual: intercept interviewing
- Group: focus group interviewing

Individual intercept interviewing: Widely used in tourism research.
- Allows the researcher to reach known people in a short period of time.
- The only method of reaching people whose names and addresses are unknown.
- Involves talking to people at homes, offices, on the street, or in shopping malls.
- The interviewer must gain the interviewee's cooperation.
- Time involved may range from a few minutes to several hours (for longer surveys, compensation may be offered).
- Involves the use of judgmental sampling, i.e. the interviewer has guidelines as to whom to "intercept", such as 25% under age 20 and 75% over age 60.
Drawbacks:
- Room for error and bias on the part of the interviewer, who may not be able to correctly judge age, race, etc.
- The interviewer may be uncomfortable talking to certain ethnic or age groups.

12. Define projective techniques. Explain with illustration four different types of projective techniques.

Answer: Because researchers are often looking for hidden or suppressed meanings, projective techniques can be used within the interview structures. Some of these techniques include:
- Word or picture association: Participants match images, experiences, emotions, products and services, even people and places, to whatever is being studied. "Tell me what you think of when you think of Kellogg's Special K cereal."
- Sentence completion: Participants complete a sentence. "Complete this sentence: People who buy over the Internet..."
- Cartoons or empty balloons: Participants write the dialog for a cartoon-like picture. "What will the customer comment when she sees the salesperson approaching her in the new-car showroom?"
- Thematic Apperception Test: Participants are confronted with a picture and asked to describe how the person in the picture feels and thinks.
- Component sorts: Participants are presented with flash cards containing component features and asked to create new combinations.
- Sensory sorts: Participants are presented with scents, textures, and sounds, usually verbalized on cards, and asked to arrange them by one or more criteria.
- Laddering or benefit chain: Participants link functional features to their physical and psychological benefits, both real and ideal.
- Imagination exercises: Participants are asked to relate the properties of one thing/person/brand to another. "If Crest toothpaste were a college, what type of college would it be?"
- Imaginary universe: Participants are asked to assume that the brand and its users populate an entire universe; they then describe the features of this new world.
- Visitor from another planet: Participants are asked to assume that they are aliens confronting the product for the first time, and then describe their reactions, questions, and attitudes about purchase or retrial.
- Personification: Participants imagine inanimate objects with the traits, characteristics, features and personalities of humans. "If brand X were a person, what type of person would brand X be?"
- Authority figure: Participants imagine that the brand or product is an authority figure and describe the attributes of the figure.
- Ambiguities and paradoxes: Participants imagine a brand as something else (e.g., a Tide dog food or Marlboro cereal), describing its attributes and position.
- Semantic mapping: Participants are presented with a four-quadrant map where different variables anchor the two axes; they place brands, product components, or organizations within the four quadrants.
- Brand mapping: Participants are presented with different brands and asked to talk about their perceptions, usually in relation to several criteria. They may also be asked to spatially place each brand on one or more semantic maps.

13. When is observation as a method of data collection used in research? What are its strengths and limitations as a method of data collection?

Answer: Observation is the gathering of primary data through the investigator's own direct observation of relevant people, actions and situations, without asking the respondent. For example:
- A hotel chain sends observers posing as guests into its coffee shop to check on cleanliness and customer service.
- A food service operator sends researchers into competing restaurants to learn menu item prices, check portion sizes and consistency, and observe point-of-purchase merchandising.
- A restaurant evaluates possible new locations by checking out locations of competing restaurants, traffic patterns and neighborhood conditions.
Observation can yield information which people are normally unwilling or unable to provide. For example, observing numerous plates containing uneaten portions of the same menu items indicates that the food is not satisfactory.

Types of Observation:
1. Structured: for descriptive research
2. Unstructured: for exploratory research
3. Disguised Observation
4. Undisguised Observation
5. Natural Observation
6. Contrived Observation

Observation Methods:
1. Personal Observation
2. Mechanical Observation
3. Audit
4. Content Analysis
5. Trace Analysis

Advantages:
- Observational methods permit measurement of actual behavior rather than reports of intended or preferred behavior.
- There is no reporting bias, and potential bias caused by the interviewer and the interviewing process is eliminated or reduced.
- Certain types of data can be collected only by observation.
- If the observed phenomenon occurs frequently or is of short duration, observational methods may be cheaper and faster than survey methods.

Limitations:
- The reasons for the observed behavior may not be determined, since little is known about the underlying motives, beliefs, attitudes, and preferences.
- Selective perception (bias in the researcher's perception) can bias the data.
- Observational data are often time-consuming and expensive to collect, and it is difficult to observe certain forms of behavior.
- In some cases, the use of observational methods may be unethical, as in observing people without their knowledge or consent.
It is best to view observation as a complement to survey methods, rather than as being in competition with them.

14. What are experiments? What is experimental Design? Explain the three types of most widely accepted experimental research designs.

Answer: The process of examining the truth of a statistical hypothesis relating to some research problem is known as an experiment. For example, we can conduct an experiment to examine the usefulness of a certain newly developed drug. Experiments can be of two types, viz. absolute experiments and comparative experiments. If we want to determine the impact of a fertilizer on the yield of a crop, it is a case of an absolute experiment; but if we want to determine the impact of one fertilizer as compared to the impact of some other fertilizer, our experiment will be termed a comparative experiment. Often, we mean comparative experiments when we talk of designs of experiments.

An experimental design is a set of procedures specifying: the test units and how these units are to be divided into homogeneous subsamples; what independent variables or treatments are to be manipulated; what dependent variables are to be measured; and how the extraneous variables are to be controlled.

Experimental Research Designs:
Pre-experimental designs do not employ randomization procedures to control for extraneous factors: the one-shot case study, the one-group pretest-posttest design, and the static-group design.
In true experimental designs, the researcher can randomly assign test units to experimental groups and treatments to experimental groups: the pretest-posttest control group design, the posttest-only control group design, and the Solomon four-group design.
Quasi-experimental designs result when the researcher is unable to achieve full manipulation of scheduling or allocation of treatments to test units but can still apply part of the apparatus of true experimentation: time series and multiple time series designs.
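The random assignment of test units that distinguishes true experimental designs can be illustrated with a short Python sketch. This is only an illustration under stated assumptions: the store names, the two group labels and the helper `randomly_assign` are hypothetical and do not come from the notes.

```python
import random


def randomly_assign(test_units, groups=("experimental", "control"), seed=None):
    """Shuffle the test units, then deal them out to the groups in turn.

    Hypothetical helper: because chance alone decides who lands in which
    group, extraneous factors tend to be spread evenly across groups.
    """
    rng = random.Random(seed)
    shuffled = list(test_units)
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for index, unit in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(unit)
    return assignment


# Hypothetical test units: ten retail stores.
stores = [f"store_{i}" for i in range(1, 11)]
assignment = randomly_assign(stores, seed=42)
for group, units in assignment.items():
    print(group, units)
```

A pre-experimental design would skip the shuffle and let the groups form on their own, which is why it cannot control for extraneous factors in the same way.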
A statistical design is a series of basic experiments that allows for statistical control and analysis of external variables: randomized block design, Latin square design, and factorial designs.

15. What is primary and secondary data? Write its advantages and disadvantages.

Answer:
- Primary data are originated by a researcher for the specific purpose of addressing the problem at hand. The collection of primary data involves all six steps of the marketing research process.
- Secondary data are data that have already been collected for purposes other than the problem at hand. These data can be located quickly and inexpensively.

Advantages of Primary Data:
1. Reliability
2. Specific to the problem at hand

Disadvantages of Primary Data:
1. Time consuming
2. Costly

[Table: Advantages and Disadvantages of Secondary Data]

16. What do you understand by extraneous variables? Discuss some of the extraneous variables that affect the validity of experiment.

Answer: Extraneous variables are all variables other than the independent variables that affect the response of the test units, e.g., store size, store location, and competitive effort.
Some of the extraneous variables that affect the validity of experiments:
- History refers to specific events that are external to the experiment but occur at the same time as the experiment.
- Maturation (MA) refers to changes in the test units themselves that occur with the passage of time.
- Testing effects are caused by the process of experimentation. Typically, these are the effects on the experiment of taking a measure on the dependent variable before and after the presentation of the treatment.
- The main testing effect (MT) occurs when a prior observation affects a later observation.
- In the interactive testing effect (IT), a prior measurement affects the test unit's response to the independent variable.
- Instrumentation (I) refers to changes in the measuring instrument, in the observers or in the scores themselves.
- Statistical regression effects (SR) occur when test units with extreme scores move closer to the average score during the course of the experiment.
- Selection bias (SB) refers to the improper assignment of test units to treatment conditions.
- Mortality (MO) refers to the loss of test units while the experiment is in progress.

17. What are the evaluating factors used for secondary data sources? Also explain how each of the five factors influences on evaluation of the secondary sources.

Answer: Criteria for evaluating Secondary Data:
- Specifications: Methodology Used to Collect the Data
- Error: Accuracy of the Data
- Currency: When the Data Were Collected
- Objective(s): The Purpose for Which the Data Were Collected
- Nature: The Content of the Data
- Dependability: Overall, How Dependable Are the Data

18. Explain Internal and External source of Secondary data.

Answer:

19. Discuss various methods of observation.

Answer:
1. Personal Observation: A researcher observes actual behavior as it occurs. The observer does not attempt to manipulate the phenomenon being observed but merely records what takes place. For example, a researcher might record traffic counts and observe traffic flows in a department store.
2. Mechanical Observation:
Do not require respondents' direct participation:
- The AC Nielsen audimeter
- Turnstiles that record the number of people entering or leaving a building
- On-site cameras (still, motion picture, or video)
- Optical scanners in supermarkets
Do require respondent involvement:
- Eye-tracking monitors
- Pupilometers
- Psychogalvanometers
- Voice pitch analyzers
- Devices measuring response latency
3. Audit: The researcher collects data by examining physical records or performing inventory analysis. Data are collected personally by the researcher. The data are based upon counts, usually of physical objects. Retail and wholesale audits conducted by marketing research suppliers were discussed in the context of syndicated data.
4. Content Analysis: The objective, systematic, and quantitative description of the manifest content of a communication. The unit of analysis may be words, characters (individuals or objects), themes (propositions), space and time measures (length or duration of the message), or topics (subject of the message). Analytical categories for classifying the units are developed and the communication is broken down according to prescribed rules.
5. Trace Analysis: Data collection is based on physical traces, or evidence, of past behavior.
- The number of different fingerprints on a page was used to gauge the readership of various advertisements in a magazine.
- The position of the radio dials in cars brought in for service was used to estimate the share of listening audience of various radio stations.
- The age and condition of cars in a parking lot were used to assess the affluence of customers.

20. What is measurement? What are the different measurement scales used in research? What are the essential differences among them? Explain in brief with example.

Answer: Measurement means assigning numbers or other symbols to characteristics of objects.
Basic Scales of Data Measurement:
1. Nominal Scale
2. Ordinal Scale
3. Interval Scale
4. Ratio Scale

1. Nominal Scale: The numbers serve only as labels or tags for identifying and classifying objects.
When used for identification, there is a strict one-to-one correspondence between the numbers and the objects. The numbers do not reflect the amount of the characteristic possessed by the objects. The only permissible operation on the numbers in a nominal scale is counting. Only a limited number of statistics, all of which are based on frequency counts, are permissible, e.g., percentages and mode.
2. Ordinal Scale: A ranking scale in which numbers are assigned to objects to indicate the relative extent to which the objects possess some characteristic. One can determine whether an object has more or less of a characteristic than some other object, but not how much more or less. Any series of numbers can be assigned that preserves the ordered relationships between the objects. In addition to the counting operation allowable for nominal scale data, ordinal scales permit the use of statistics based on centiles, e.g., percentile, quartile, median.
3. Interval Scale: Numerically equal distances on the scale represent equal values in the characteristic being measured. It permits comparison of the differences between objects. The location of the zero point is not fixed. Both the zero point and the units of measurement are arbitrary. It is not meaningful to take ratios of scale values. Statistical techniques that may be used include all of those that can be applied to nominal and ordinal data, and in addition the arithmetic mean, standard deviation, and other statistics commonly used in marketing research.
4. Ratio Scale: Possesses all the properties of the nominal, ordinal, and interval scales. It has an absolute zero point. It is meaningful to compute ratios of scale values. All statistical techniques can be applied to ratio data.

21.
Explain comparative and non-comparative scaling techniques in brief.

Comparative scales involve the direct comparison of stimulus objects. Comparative scale data must be interpreted in relative terms and have only ordinal or rank order properties.

Comparative Scaling Techniques:
1. Paired Comparison Technique: A respondent is presented with two objects and asked to select one according to some criterion. The data obtained are ordinal in nature. Paired comparison scaling is the most widely used comparative scaling technique. With n brands, n(n - 1)/2 paired comparisons are required. Under the assumption of transitivity, it is possible to convert paired comparison data to a rank order.
2. Rank Order Scale: Respondents are presented with several objects simultaneously and asked to order or rank them according to some criterion. It is possible that the respondent may dislike the brand ranked 1 in an absolute sense. Furthermore, rank order scaling also results in ordinal data. Only (n - 1) scaling decisions need be made in rank order scaling.
3. Constant Sum Scale: Respondents allocate a constant sum of units, such as 100 points, to attributes of a product to reflect their importance. If an attribute is unimportant, the respondent assigns it zero points. If an attribute is twice as important as some other attribute, it receives twice as many points. The sum of all the points is 100; hence the name of the scale.

In non-comparative scales, each object is scaled independently of the others in the stimulus set. The resulting data are generally assumed to be interval or ratio scaled.

Non-Comparative Scaling Techniques:
1. Continuous Rating Scale: Respondents rate the objects by placing a mark at the appropriate position on a line that runs from one extreme of the criterion variable to the other. The form of the continuous scale may vary considerably.
2.
Itemised Rating Scale: The respondents are provided with a scale that has a number or brief description associated with each category. The categories are ordered in terms of scale position, and the respondents are required to select the specified category that best describes the object being rated. The commonly used itemized rating scales are the Likert, semantic differential, and Stapel scales. a. Likert Scale: The Likert scale requires the respondents to indicate a degree of agreement or disagreement with each of a series of statements about the stimulus objects. b. Semantic Differential Scale: The semantic differential is a seven-point rating scale with end points associated with bipolar labels that have semantic meaning. The negative adjective or phrase sometimes appears at the left side of the scale and sometimes at the right. This controls the tendency of some respondents, particularly those with very positive or very negative attitudes, to mark the rightor left-hand sides without reading the labels. Individual items on a semantic differential scale may be scored on either a -3 to +3 or a 1 to 7 scale. c. Stapel Scale: The Stapel scale is a unipolar rating scale with ten categories numbered from -5 to +5, without a neutral point (zero). This scale is usually presented vertically. The data obtained by using a Stapel scale can be analyzed in the same way as semantic differential data. Research Methodology (2810006) Downloaded by kan AR (kaanvivek@gmail.com) 21 lOMoAR cPSD| 17744894 22. Discuss major criteria for evaluating a measurement tool. Answer: 1. Reliability: Consistency of the instrument is called as Reliability. Reliability can be defined as the extent to which measures are free from random error, XR. If XR = 0, the measure is perfectly reliable. If the same scale/instrument is used and we use the scale/instrument for the similar conditions then the measured data should be consistent (same). 
Systematic error does not affect reliability, but random error does. So, for better reliability, the scale should be free from random errors. If random error = 0, the scale is most reliable.
Methods of Reliability:
In test-retest reliability, respondents are administered identical sets of scale items at two different times and the degree of similarity between the two measurements is determined. If the association or correlation between the test and retest results is high, we can conclude that the scale is highly reliable. The problems with this method are: it is often impractical, and sometimes impossible (e.g., mall intercept), to administer the same scale to the same respondents twice; the interval between test and retest influences reliability; and attitudes may change between test and retest.
In alternative-forms reliability, two equivalent forms of the scale are constructed and the same respondents are measured at two different times, with a different form being used each time. The two forms, A and B, are similar to each other and the same group is measured. If the correlation or association between the two forms is high, the scale has high reliability. Problem: constructing two equivalent scales is difficult and time consuming.
Internal consistency reliability determines the extent to which different parts of a summated scale are consistent in what they indicate about the characteristic being measured. In split-half reliability, the items on the scale are divided into two halves and the resulting half scores are correlated. The scale contains multiple items and we split them into two equal halves. If there are 20 items in total, we make two instruments of 10 items each and check their association or correlation; if it is high, the scale is highly reliable.
2. Validity: A measure has validity if it measures what it is supposed to measure.
For example, a study of how much consumers like the taste of food in a restaurant should have questions related to taste only; such an instrument is valid. If it includes questions about the service provided, it is an invalid scale for measuring liking of taste in a restaurant. An instrument is valid if it is free from systematic and random errors. The validity of a scale may be defined as the extent to which differences in observed scale scores reflect true differences among objects on the characteristic being measured, rather than systematic or random error. Perfect validity requires that there be no measurement error (XO = XT, XR = 0, XS = 0), i.e., the observed score equals the true score and both random and systematic error are zero.
Methods of Checking Validity:
Face or Content validity: Does the scale look like it measures what we want to measure? It is a subjective but systematic evaluation of how well the content of a scale represents the measurement task at hand. For example, if a person recalls an advertisement when shown it, we can conclude that he or she has been exposed to it earlier as well. Content validity can be improved by having other researchers critique the measurement instrument.
Criterion validity reflects whether a scale performs as expected in relation to other variables selected (criterion variables) as meaningful criteria. It has two forms: concurrent validity and predictive validity.
Concurrent Validity: It is demonstrated where a test correlates well with a measure that has previously been validated. The two measures may be for the same construct, or for different, but presumably related, constructs. Concurrent validity applies to validation studies in which the two measures are administered at approximately the same time. For example, an employment test may be administered to a group of workers and then the test scores can be correlated with the ratings of the workers' supervisors taken on the same day or in the same week.
The resulting correlation would be a concurrent validity coefficient.
Predictive Validity: If a scale can correctly predict the respondent's future behavior, it has high predictive validity. For example, after using an intention-to-buy scale, we can check the respondent's actual purchase of the product later. In the employment test example, predictive validity differs only in that the time between taking the test and gathering supervisor ratings is longer, i.e., several months or years. Predictive validity would be the best choice for validating an employment test, because employment tests are designed to predict performance.
Construct validity refers to whether a scale measures or correlates with the theorized psychological or scientific construct that it purports to measure. In other words, it is the extent to which what was to be measured was actually measured. It addresses the question of what construct or characteristic the scale is, in fact, measuring. Construct validity includes convergent, discriminant, and nomological validity.
Convergent validity is the extent to which the scale correlates positively with other measures of the same construct. For example, if research on 300 people finds the average age to be 20, and a second study on 45 people carried out to verify (validate) it finds the average age to be 19.9 or 20.1, the measure has high convergent validity.
Discriminant validity is the extent to which a measure does not correlate with other constructs from which it is supposed to differ. Responses should differ for different constructs. If respondents' attitudes towards theft security and fire security come out the same, the scale lacks discriminant validity.
Nomological validity is the extent to which the scale correlates in theoretically predicted ways with measures of different but related constructs.
3. Generalizability: It is the degree to which a study based on a sample applies to a universe of generalizations.
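As an illustration of the split-half reliability check described under Reliability, the following is a minimal sketch rather than a prescribed procedure. The response data are hypothetical, the function names are invented for this example, and splitting the items into odd- and even-positioned halves is an assumption (the notes only say the items are divided into two equal halves).

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def split_half_reliability(responses):
    """Correlate the two half scores of a multi-item scale.

    responses: one row per respondent, one column per scale item.
    Assumption: the halves are formed from alternating items.
    """
    first_half = [sum(row[0::2]) for row in responses]   # items 1, 3, ...
    second_half = [sum(row[1::2]) for row in responses]  # items 2, 4, ...
    return pearson_r(first_half, second_half)


# Hypothetical data: 5 respondents answering a 4-item, 5-point scale.
responses = [
    [4, 5, 4, 5],
    [2, 1, 2, 2],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [1, 2, 1, 1],
]

r = split_half_reliability(responses)
print(f"Split-half correlation: {r:.3f}")
```

A correlation close to 1 between the two half scores suggests the halves are consistent with each other, which is the sense in which the notes call the scale "highly reliable".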
23. Explain 10 step questionnaire design process.
Answer:
24. Explain the reasons for sampling. Elaborate the process of sampling.
Answer:
Reasons for Sampling:
• Lower cost
• Greater accuracy of results
• Greater speed of data collection
• Availability of population elements
Process of Sampling:
1. Define the Population: The target population is the collection of elements or objects that possess the information sought by the researcher and about which inferences are to be made. The target population should be defined in terms of elements, sampling units, extent, and time. An element is the object about which or from which the information is desired, e.g., the respondent. A sampling unit is an element, or a unit containing the element, that is available for selection at some stage of the sampling process. Extent refers to the geographical boundaries. Time is the time period under consideration.
2. Determining the Sampling Frame: A sampling frame is a list of the population elements from which the sample is drawn. Example: a telephone directory, a directory of GIDC. If such a list is not available, the researcher has to prepare it.
3. Selecting the Sampling Technique: Probability or Non-Probability Sampling techniques can be used.
4. Determining the Sample Size: Important qualitative factors in determining the sample size are:
• the importance of the decision
• the nature of the research
• the number of variables
• the nature of the analysis
• sample sizes used in similar studies
• resource constraints
5. Execute the sampling process
25. Discuss various probability and non-probability sampling techniques with their strengths and weaknesses.
Answer:
1. Non-Probability Sampling: Sampling techniques that do not use chance selection procedures. Rather, they rely on the personal judgment of the researcher.
2. Probability Sampling: A sampling procedure in which each element of the population has a fixed probabilistic chance of being selected for the sample.
1. Non-Probability Sampling Techniques:
a. Convenience Sampling
b. Judgmental Sampling
c. Quota Sampling
d. Snowball Sampling
a. Convenience sampling attempts to obtain a sample of convenient elements. Often, respondents are selected because they happen to be in the right place at the right time. Examples:
• use of students and members of social organizations
• mall intercept interviews without qualifying the respondents
• department stores using charge account lists
• "people on the street" interviews
Advantages: Least expensive, least time consuming and most convenient
Disadvantages: Selection bias, non-representative samples, not useful for descriptive or causal research
b. Judgmental sampling is a form of convenience sampling in which the population elements are selected based on the judgment of the researcher. Examples:
• test markets
• purchase engineers selected in industrial marketing research
Advantages: Low cost, less time consuming and convenient
Disadvantages: Subjective selection, does not allow generalizations
c. Quota sampling may be viewed as two-stage restricted judgmental sampling. The first stage consists of developing control categories, or quotas, of population elements. In the second stage, sample elements are selected based on convenience or judgment.
Advantages: Sample can be controlled for certain characteristics
Disadvantages: Selection bias, no assurance of representativeness
d. In snowball sampling, an initial group of respondents is selected, usually at random.
After being interviewed, these respondents are asked to identify others who belong to the target population of interest. Subsequent respondents are selected based on the referrals.
Advantages: Can estimate rare characteristics
Disadvantages: Time consuming
2. Probability Sampling Techniques:
a. Simple Random Sampling
b. Systematic Sampling
c. Stratified Random Sampling
d. Cluster Sampling
a. Simple Random Sampling: Each element in the population has a known and equal probability of selection. Each possible sample of a given size (n) has a known and equal probability of being the sample actually selected. This implies that every element is selected independently of every other element.
Advantages: Easily understood, results projectable
Disadvantages: Difficult to construct sampling frame, expensive, lower precision, no assurance of representativeness
b. Systematic Sampling: The sample is chosen by selecting a random starting point and then picking every i-th element in succession from the sampling frame. The sampling interval, i, is determined by dividing the population size N by the sample size n and rounding to the nearest integer. When the ordering of the elements is related to the characteristic of interest, systematic sampling increases the representativeness of the sample. If the ordering of the elements produces a cyclical pattern, systematic sampling may decrease the representativeness of the sample. For example, there are 100,000 elements in the population and a sample of 1,000 is desired. In this case the sampling interval, i, is 100. A random number between 1 and 100 is selected. If, for example, this number is 23, the sample consists of elements 23, 123, 223, 323, 423, 523, and so on.
Advantages: Can increase representativeness, easier to implement than SRS, sampling frame not necessary
Disadvantages: Can decrease representativeness
c. Stratified Random Sampling: A two-step process in which the population is partitioned into subpopulations, or strata. The strata should be mutually exclusive and collectively exhaustive in that every population element should be assigned to one and only one stratum and no population elements should be omitted. Next, elements are selected from each stratum by a random procedure, usually SRS. A major objective of stratified sampling is to increase precision without increasing cost. The elements within a stratum should be as homogeneous as possible, but the elements in different strata should be as heterogeneous as possible. The stratification variables should also be closely related to the characteristic of interest. Finally, the variables should decrease the cost of the stratification process by being easy to measure and apply.
In proportionate stratified sampling, the size of the sample drawn from each stratum is proportionate to the relative size of that stratum in the total population. In disproportionate stratified sampling, the size of the sample from each stratum is proportionate to the relative size of that stratum and to the standard deviation of the distribution of the characteristic of interest among all the elements in that stratum.
Advantages: Includes all sub-populations, precision
Disadvantages: Difficult to select relevant stratification variables, not feasible to stratify on many variables, expensive
d. Cluster Sampling: The target population is first divided into mutually exclusive and collectively exhaustive subpopulations, or clusters. Then a random sample of clusters is selected, based on a probability sampling technique such as SRS. For each selected cluster, either all the elements are included in the sample (one-stage) or a sample of elements is drawn probabilistically (two-stage).
Elements within a cluster should be as heterogeneous as possible, but clusters themselves should be as homogeneous as possible. Ideally, each cluster should be a small-scale representation of the population. In probability proportionate to size sampling, the clusters are sampled with probability proportional to size. In the second stage, the probability of selecting a sampling unit in a selected cluster varies inversely with the size of the cluster.
Types of Cluster Sampling:
1. One Stage Cluster Sampling
2. Two Stage Cluster Sampling
3. Multi Stage Cluster Sampling
Advantages: Easy to implement and cost effective
Disadvantages: Imprecise, difficult to compute and interpret results
26. Discuss various qualitative factors to be considered while deciding sample size.
Answer:
Factors to be considered while deciding sample size:
1) Importance of the decision
2) Nature of the research
3) Number of variables
4) Nature of the analysis
5) Sample sizes used in similar studies
6) Resource constraints
27. Discuss the process of hypothesis testing in brief.
Answer:
Process of Hypothesis Testing:
1) Formulate the null and alternate hypotheses
2) Select an appropriate test statistic
3) Choose the level of significance (alpha)
4) Collect data and calculate the test statistic
5) Determine the critical value
6) Compare the test statistic with the critical value and make the decision
7) Draw the conclusion
28. Define Type-I and Type-II errors with suitable example.
Answer:
Type I Error: A Type I error occurs when the sample results lead to the rejection of the null hypothesis when it is in fact true. The probability of a Type I error is denoted by alpha (α) and is also called the level of significance.
Type II Error: A Type II error occurs when, based on the sample results, the null hypothesis is not rejected when it is in fact false. The probability of a Type II error is denoted by beta (β).
Unlike α, which is specified by the researcher, the magnitude of β depends on the actual value of the population parameter (proportion).
29. What is parametric and non parametric test? Explain with one example from each?
Answer:
Parametric Tests: Parametric methods make assumptions about the underlying distribution from which sample populations are selected. In a parametric test, a sample statistic is obtained to estimate the population parameter. Because this estimation process involves a sample, a sampling distribution and a population, certain parametric assumptions are required to ensure all components are compatible with each other.
Parametric tests are based on models with some assumptions. If the assumptions hold good, these tests offer a more powerful tool for analysis. A parametric test usually assumes certain properties of the parent population from which we draw samples. Assumptions such as observations coming from a normal population, the sample size being large, and assumptions about population parameters like mean and variance must hold good before parametric tests can be used. But there are situations when the researcher cannot or does not want to make such assumptions. In such situations we use statistical methods for testing hypotheses which are called non-parametric tests, because such tests do not depend on any assumption about the parameters of the parent population. Besides, most non-parametric tests assume only nominal or ordinal data, whereas parametric tests require measurement equivalent to at least an interval or ratio scale. As a result, non-parametric tests need more observations than parametric tests to achieve the same size of Type-I and Type-II errors.
Parametric tests are based on the assumption of normality: the source of the data is considered to be normally distributed. Popular parametric tests are the t-test, z-test and F-test.
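To make the t-test concrete, the sketch below follows the hypothesis-testing steps from question 27 for a one-sample t-test. It is an illustrative example only: the satisfaction scores, the hypothesized mean of 7, and the function name are hypothetical, and the critical value 2.262 is the standard two-tailed t-table value for α = 0.05 with 9 degrees of freedom.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(sample, mu0):
    """t statistic for H0: population mean equals mu0."""
    n = len(sample)
    standard_error = stdev(sample) / sqrt(n)  # stdev uses n - 1
    return (mean(sample) - mu0) / standard_error


# Step 1: H0: mean satisfaction = 7, H1: mean satisfaction != 7.
mu0 = 7

# Step 4: hypothetical satisfaction scores from 10 respondents.
scores = [7, 8, 6, 9, 7, 8, 7, 6, 8, 9]
t_stat = one_sample_t(scores, mu0)

# Steps 3 and 5: alpha = 0.05, two-tailed, df = n - 1 = 9.
t_critical = 2.262

# Step 6: compare the test statistic with the critical value.
reject_h0 = abs(t_stat) > t_critical

print(f"t = {t_stat:.3f}, critical value = {t_critical}")
print("Reject H0" if reject_h0 else "Fail to reject H0")
```

The test is parametric because the comparison with the t-table value is only justified if the scores can be assumed to come from a normally distributed population.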
Non-Parametric Tests: As the name implies, non-parametric tests do not require parametric assumptions because interval data are converted to rank-ordered data. Handling of rank-ordered data is considered a strength of non-parametric tests.
When practical data follow a normal distribution, one can estimate parameters such as the mean and variance and use the standard tests, which are known as parametric tests. Practical data may, however, be non-normal, and it may not be possible to estimate the parameters of the data. The tests used in such situations are called non-parametric tests. Since these tests do not depend on a particular distribution or on parameters, they are also called distribution-free tests.
Non-parametric tests require less calculation, because there is no need to compute parameters. Also, these tests can be applied to very small samples, more specifically during pilot studies in market research.
A parametric test technique makes use of one or more values obtained from sample data, together with assumptions about the population, to arrive at a probability statement about the hypothesis. No such assumptions are made in the case of non-parametric tests. In a statistical test, two kinds of assertions are involved: an assertion directly related to the purpose of the investigation, and other assertions needed to make a probability statement. The former is the assertion to be tested and is technically called a hypothesis, whereas the set of all other assertions is called the model. When we apply a test without a model, it is a distribution-free or non-parametric test. Non-parametric tests do not make an assumption about the parameters of the population and thus do not make use of the parameters of the distribution.
In other words, under non-parametric tests we do not assume that a particular distribution is applicable, or that a certain value is attached to a parameter of the population. Popular non-parametric tests are the Chi-Square Test, Kolmogorov-Smirnov Test, Run Test, Median Test, Mann-Whitney U Test, Wilcoxon Signed Rank Test and Kruskal-Wallis Test.
30. Explain coding, editing, and tabulation of a data.
Answer:
Coding: Before statistical analysis is possible, a researcher has to prepare the data for analysis. This preparation is done by data coding. Coding of data is probably the most crucial step in the analytical process. Codes or categories are tags or labels for allocating units of meaning to the descriptive or inferential information compiled during a study. In coding, each answer is identified and classified with a numerical score or other symbolic characteristic for processing the data in computers. While coding, researchers generally select a convenient way of entering the data in a spreadsheet. The data can be conveniently exported or imported to other software such as SPSS and SAS. Each item of information (question) occupies a column in the spreadsheet, with the specific answers to the questions entered in rows. Thus, each row of the spreadsheet gives one respondent's answers under the column heads.
Rules that guide the establishment of category sets:
– Appropriate to the research problem and purpose
– Exhaustive
– Mutually exclusive
– Derived from one classification principle
Raw data, particularly qualitative data, can be very interesting to look at, but do not express any relationship unless analysed systematically. Qualitative data are textual, non-numerical and unstructured.
There are two methods of creating codes.
• The first one is used by an inductive researcher who may not want to pre-code any datum until he/she has collected it.
• This type of coding approach is popularly known among researchers as the 'grounded approach of data coding'.
• The other one, the method preferred by Miles and Huberman, is to create a provisional 'start list' of codes prior to fieldwork.
• That list comes from the conceptual framework, list of research questions, hypotheses, problem areas and/or key variables that the researcher brings to the study.
Editing: Editing is the checking of the questionnaire for suspicious, inconsistent, unintelligible, and incomplete answers that become visible from careful study of the questionnaire. Field editing is a good way to deal with answering problems in the questionnaire and is usually done by the supervisor on the same day the interviewer has conducted the interview. Editing on the same day of the interview has various advantages. In the case of any inconsistent or illogical answer, the supervisor can ask the interviewer about it, and the interviewer can answer well because he or she has just conducted the interview and the possibility of forgetting the incident is minimal. The interviewer can then recall and discuss the answer and situation with the supervisor. In this manner, a logical answer to the question may be arrived at. Field editing can also identify any fault in the interview process in time, so that it can be rectified without much delay. In some interview techniques, such as the mail technique, field editing is not possible. In such a situation, in-house editing is done, which rigorously examines the questionnaire for any deficiency, and a team of experts edits and codes the data.
Editing:
• Detects errors and omissions
• Corrects them when possible
• Certifies that minimum data quality standards are achieved
Editing guarantees that data are:
– accurate
– consistent with other information
– uniformly entered
– complete
– arranged to simplify coding and tabulation
Field Editing:
– translation of ad hoc abbreviations and symbols used during data collection
– validation of the field results
Central Editing
Tabulation of Data: A table is commonly understood as an orderly and systematic presentation of numerical data in columns and rows. The main object of a table is to arrange the physical presentation of numerical facts in such a manner that the attention of the reader is automatically directed to the relevant information.
General Purpose Tables: The general purpose table is informative. Its main aim is to present a comprehensive set of data in such an arrangement that any particular item can be easily located.
Special Purpose Tables: Special purpose tables are relatively small in size and are designed to present as effectively as possible the findings of the particular study for which they are designed.
31. Explain the different components of a research report.
Answer:
After conducting any research, the researcher needs to draft the work. Drafting is a scientific procedure and needs systematic and careful articulation. When the organization for which the researcher is conducting the research has provided guidelines for preparing the research report, one has to follow them. However, if there is no guideline available from the sponsoring organization, the researcher can follow a reasonable pattern of presenting a written research report. There is no single universally accepted guideline for the organization of a written report; it depends on the type of target group that the report addresses.
However, the following is a format for the written report. Although this format presents a broad guideline for report organization, in practice the researcher has the flexibility to include or exclude items from the given list.
1. Title Page
2. Letter of Transmittal
3. Letter of Authorization
4. Table of Contents (including list of figures, tables and charts)
5. Executive Summary
• Objective
• Concise statement of the methodology
• Results
• Conclusions
• Recommendations
6. Body
• Introduction
• Research Objective
• Research Methodology (Sample, Sample Size, Sample Technique, Scaling Technique, Data Source, Research Design, Instrument for Data Collection, Contact Methods, Test Statistics, Field Work)
• Results and findings
• Conclusions and Recommendations
• Limitations of the research
7. Appendix
• Copies of data collection forms
• Statistical output details
• General tables that are not included in the body
• Bibliography
• Other required support material
32. Explain Univariate, Bivariate and Multivariate analysis with examples.
Answer:
Empirical testing typically involves inferential statistics. This means that an inference will be drawn about some population based on observations of a sample representing that population. Statistical analysis can be divided into several groups:
• Univariate statistical analysis tests hypotheses involving only one variable.
Univariate analysis is the simplest form of quantitative (statistical) analysis. The analysis is carried out with the description of a single variable and its attributes for the applicable unit of analysis. For example, if the variable age was the subject of the analysis, the researcher would look at how many subjects fall into each age category.
Univariate analysis contrasts with bivariate analysis (the analysis of two variables simultaneously) and multivariate analysis (the analysis of multiple variables simultaneously). Univariate analysis is used primarily for descriptive purposes, while bivariate and multivariate analysis are geared more towards explanatory purposes. Univariate analysis is commonly used in the first stages of research, in analyzing the data at hand, before being supplemented by more advanced, inferential bivariate or multivariate analysis.
A basic way of presenting univariate data is to create a frequency distribution of the individual cases, which involves presenting the number of cases observed in the sample for each attribute of the variable studied. This can be done in a table format, with a bar chart or a similar form of graphical representation, for example a frequency distribution table for the variable "age".
There are several tools used in univariate analysis; their applicability depends on whether we are dealing with a continuous variable (such as age) or a discrete variable (such as gender).
In addition to frequency distribution, univariate analysis commonly involves reporting measures of central tendency (location). This involves describing the way in which quantitative data tend to cluster around some value. In univariate analysis, the measure of central tendency is an average of a set of measurements, the word average being variously construed as (arithmetic) mean, median, mode or other measure of location, depending on the context.
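The frequency distribution and the measures of central tendency described above can be sketched for a single variable as follows. This is a minimal illustration using Python's standard library; the list of ages is hypothetical data invented for the example.

```python
from collections import Counter
from statistics import mean, median, mode

# Hypothetical ages of 8 respondents (a single variable).
ages = [21, 23, 23, 25, 30, 23, 27, 25]

# Frequency distribution: number of cases observed for each value.
frequency = Counter(ages)
for age in sorted(frequency):
    print(f"age {age}: {frequency[age]} case(s)")

# Measures of central tendency (location).
age_mean = mean(ages)
age_median = median(ages)
age_mode = mode(ages)

print(f"mean = {age_mean}, median = {age_median}, mode = {age_mode}")
```

Which of the three averages is reported depends on the level of measurement: the mode only needs nominal data, the median needs at least ordinal data, and the mean needs interval or ratio data.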
Another set of measures used in univariate analysis, complementing the study of central tendency, involves studying the statistical dispersion. Those measurements look at how the values are distributed around values of central tendency. The dispersion measures most often involve studying the range, interquartile range, and the standard deviation.
• Bivariate statistical analysis tests hypotheses involving two variables.
Bivariate analysis is one of the simplest forms of quantitative (statistical) analysis. It involves the analysis of two variables (often denoted as X, Y), for the purpose of determining the empirical relationship between them. In order to see if the variables are related to one another, it is common to measure how those two variables simultaneously change together (see also covariance).
Bivariate analysis can be helpful in testing simple hypotheses of association and causality, checking to what extent it becomes easier to know and predict a value for the dependent variable if we know a case's value on the independent variable (see also correlation).
Bivariate analysis can be contrasted with univariate analysis, in which only one variable is analysed and the purpose is descriptive. Subgroup comparison, the descriptive analysis of two variables, can sometimes be seen as a very simple form of bivariate analysis (or as univariate analysis extended to two variables). The major differentiating point between univariate and bivariate analysis, in addition to looking at more than one variable, is that the purpose of a bivariate analysis goes beyond the simply descriptive: it is the analysis of the relationship between the two variables.
Types of analysis
Common forms of bivariate analysis involve creating a percentage table, a scatterplot graph, or the computation of a simple correlation coefficient.
For example, a bivariate analysis intended to investigate whether there is any significant difference in the earnings of men and women might involve creating a table of percentages of the population within various categories, using categories based on gender and earnings:

Earnings            Men    Women
under $20,000       47%    52%
$20,000–$50,000     45%    47%
over $50,000         8%     1%

The types of analysis that are suited to particular pairs of variables vary in accordance with the level of measurement of the variables of interest (e.g., nominal/categorical, ordinal, interval/ratio). Bivariate analysis is a simple (two-variable) variant of multivariate analysis (where multiple relations between multiple variables are examined simultaneously).

Steps in Conducting Bivariate Analysis
Step 1: Define the nature of the relationship: whether or not the values of the independent variable relate to the values of the dependent variable.
Step 2: Identify the type and direction, if applicable, of the relationship.
Step 3: Determine if the relationship is statistically significant and generalizable to the population.
Step 4: Identify the strength of the relationship, i.e. the degree to which the values of the independent variable explain the variation in the dependent variable.

• Multivariate statistical analysis tests hypotheses and models involving multiple (three or more) variables or sets of variables.

Multivariate statistics is a form of statistics encompassing the simultaneous observation and analysis of more than one outcome variable. The application of multivariate statistics is multivariate analysis. Methods of bivariate statistics, for example simple linear regression and correlation, are not special cases of multivariate statistics, because only one outcome variable is involved.
Multivariate statistics concerns understanding the different aims and background of each of the different forms of multivariate analysis, and how they relate to each other. The practical implementation of multivariate statistics to a particular problem may involve several types of univariate and multivariate analysis in order to understand the relationships between variables and their relevance to the actual problem being studied.

In addition, multivariate statistics is concerned with multivariate probability distributions, in terms of both:
• how these can be used to represent the distributions of observed data;
• how they can be used as part of statistical inference, particularly where several different quantities are of interest to the same analysis.

There are many different models, each with its own type of analysis:
1. Multivariate analysis of variance (MANOVA) extends the analysis of variance to cover cases where there is more than one dependent variable to be analyzed simultaneously: see also MANCOVA.
2. Multivariate regression analysis attempts to determine a formula that can describe how elements in a vector of variables respond simultaneously to changes in others. For linear relations, regression analyses here are based on forms of the general linear model.
3. Principal components analysis (PCA) creates a new set of orthogonal variables that contain the same information as the original set. It rotates the axes of variation to give a new set of orthogonal axes, ordered so that they summarize decreasing proportions of the variation.
4. Factor analysis is similar to PCA but allows the user to extract a specified number of synthetic variables, fewer than the original set, leaving the remaining unexplained variation as error.
The extracted variables are known as latent variables or factors; each one may be supposed to account for covariation in a group of observed variables.
5. Canonical correlation analysis finds linear relationships between two sets of variables; it is the generalized (i.e. canonical) version of bivariate correlation.
6. Redundancy analysis is similar to canonical correlation analysis but allows the user to derive a specified number of synthetic variables from one set of (independent) variables that explain as much variance as possible in another (dependent) set. It is a multivariate analogue of regression.
7. Correspondence analysis (CA), or reciprocal averaging, finds (like PCA) a set of synthetic variables that summarize the original set. The underlying model assumes chi-squared dissimilarities among records (cases). There is also canonical (or "constrained") correspondence analysis (CCA) for summarizing the joint variation in two sets of variables (like canonical correlation analysis).
8. Multidimensional scaling comprises various algorithms to determine a set of synthetic variables that best represent the pairwise distances between records. The original method is principal coordinates analysis (based on PCA).
9. Discriminant analysis, or canonical variate analysis, attempts to establish whether a set of variables can be used to distinguish between two or more groups of cases.
10. Linear discriminant analysis (LDA) computes a linear predictor from two sets of normally distributed data to allow for classification of new observations.
11. Clustering systems assign objects into groups (called clusters) so that objects (cases) from the same cluster are more similar to each other than objects from different clusters.

33. Explain in brief the following and their specific application in data presentation

a. Cross Tabulation
The cross tabulation approach is especially useful when the data are in nominal form.
Under it we classify each variable into two or more categories and then cross-classify the variables in these subcategories. Then we look for interactions between them, which may be symmetrical, reciprocal or asymmetrical. A symmetrical relationship is one in which the two variables vary together, but we assume that neither variable is due to the other. A reciprocal relationship exists when the two variables mutually influence or reinforce each other. An asymmetrical relationship is said to exist if one variable (the independent variable) is responsible for another variable (the dependent variable).

The cross-classification procedure begins with a two-way table which indicates whether or not there is an interrelationship between the variables. This sort of analysis can be further elaborated, in which case a third factor is introduced into the association by cross-classifying the three variables. By doing so we find a conditional relationship in which factor X appears to affect factor Y only when factor Z is held constant. The correlation, if any, found through this approach is not considered a very powerful form of statistical correlation, and accordingly we use other methods when the data are ordinal, interval or ratio data.

b. Pareto diagrams
Pareto analysis is a statistical technique in decision making that is used for the selection of a limited number of tasks that produce a significant overall effect. It uses the Pareto principle: the idea that by doing 20% of the work, 80% of the advantage of doing the entire job can be generated. Or, in terms of quality improvement, a large majority of problems (80%) are produced by a few key causes (20%). Pareto analysis is a formal technique useful where many possible courses of action are competing for attention.
In essence, the problem-solver estimates the benefit delivered by each action, then selects a number of the most effective actions that deliver a total benefit reasonably close to the maximal possible one.

Pareto analysis is a creative way of looking at causes of problems because it helps stimulate thinking and organize thoughts. However, it can be limited by its exclusion of possibly important problems which may be small initially, but which grow with time. It should be combined with other analytical tools such as failure mode and effects analysis and fault tree analysis.

This technique helps to identify the top portion of causes that need to be addressed to resolve the majority of problems. Once the predominant causes are identified, tools like the Ishikawa (fishbone) diagram can be used to identify the root causes of the problems. While it is common to refer to Pareto as "20/80", under the assumption that, in all situations, 20% of causes determine 80% of problems, this ratio is merely a convenient rule of thumb; it is not, and should not be considered, an immutable law of nature.

The application of Pareto analysis in risk management allows management to focus on those risks that have the most impact on the project.

c. Box Plots
In descriptive statistics, a box plot or boxplot (also known as a box-and-whisker diagram or plot) is a convenient way of graphically depicting groups of numerical data through their five-number summaries: the smallest observation (sample minimum), lower quartile (Q1), median (Q2), upper quartile (Q3), and largest observation (sample maximum). A boxplot may also indicate which observations, if any, might be considered outliers. Boxplots display differences between populations without making any assumptions about the underlying statistical distribution: they are non-parametric.
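As a rough sketch, the five-number summary behind a box plot can be computed with Python's standard library. The data values, the choice of method="inclusive" for the quartiles, and the 1.5 × IQR rule used to flag possible outliers are all assumptions made for this illustration; other quartile conventions give slightly different values.

```python
from statistics import quantiles

# Hypothetical sample (illustrative values only).
data = [2, 4, 4, 5, 7, 8, 9, 11, 12, 30]

# Quartiles Q1, Q2 (median) and Q3; "inclusive" is one of several conventions.
q1, q2, q3 = quantiles(data, n=4, method="inclusive")

# Five-number summary: minimum, Q1, median, Q3, maximum.
five_number = (min(data), q1, q2, q3, max(data))

# Assumed outlier rule: points more than 1.5 * IQR beyond the quartiles.
iqr = q3 - q1
low_fence = q1 - 1.5 * iqr
high_fence = q3 + 1.5 * iqr
outliers = [x for x in data if x < low_fence or x > high_fence]

print("five-number summary:", five_number)
print("possible outliers:", outliers)
```

The box is drawn from Q1 to Q3 with a line at the median, and the whiskers extend towards the minimum and maximum (or to the fences, when outliers are plotted separately).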
The spacings between the different parts of the box help indicate the degree of dispersion (spread) and skewness in the data, and identify outliers. Boxplots can be drawn either horizontally or vertically.

d. Mapping

e. Stem and leaf diagram
A maths test is marked out of 50. The marks for the class are shown below:

7, 36, 41, 39, 27, 21
24, 17, 24, 31, 17, 13
31, 19, 8, 10, 14, 45
49, 50, 45, 32, 25, 17
46, 36, 23, 18, 12, 6

This data can be more easily interpreted if we represent it in a stem and leaf diagram. This stem and leaf diagram shows the data above:

Stem | Leaf
  0  | 6 7 8
  1  | 0 2 3 4 7 7 7 8 9
  2  | 1 3 4 4 5 7
  3  | 1 1 2 6 6 9
  4  | 1 5 5 6 9
  5  | 0
Key: 2 | 1 means 21

The stem and leaf diagram is formed by splitting the numbers into two parts, in this case tens and units. The tens form the 'stem' and the units form the 'leaves'. This information is given to us in the Key. It is usual for the numbers to be ordered. So, for example, the row with stem 2 shows the numbers 21, 23, 24, 24, 25 and 27 in order.

f. Histogram
In statistics, a histogram is a graphical representation showing a visual impression of the distribution of data. It is an estimate of the probability distribution of a continuous variable and was first introduced by Karl Pearson. A histogram consists of tabular frequencies, shown as adjacent rectangles erected over discrete intervals (bins), with an area equal to the frequency of the observations in the interval. The height of each rectangle is therefore equal to the frequency density of the interval, i.e., the frequency divided by the width of the interval. The total area of the histogram is equal to the number of data points. A histogram may also be normalized to display relative frequencies. It then shows the proportion of cases that fall into each of several categories, with the total area equaling 1.
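The relationship between frequency, bin width and frequency density can be checked with a short Python sketch that uses the maths test marks from the stem and leaf example. The bin edges are an illustrative assumption; the last bin is deliberately made twice as wide as the others (it simply extends past the maximum mark of 50) to show why the height is the density rather than the raw count.

```python
# Marks from the maths test example (30 pupils, marked out of 50).
marks = [7, 36, 41, 39, 27, 21, 24, 17, 24, 31, 17, 13, 31, 19, 8,
         10, 14, 45, 49, 50, 45, 32, 25, 17, 46, 36, 23, 18, 12, 6]

# Assumed bin edges; each bin covers left <= mark < right.
edges = [0, 10, 20, 30, 40, 60]

frequencies = []
densities = []
for left, right in zip(edges, edges[1:]):
    width = right - left
    frequency = sum(1 for m in marks if left <= m < right)
    frequencies.append(frequency)
    densities.append(frequency / width)  # height of the rectangle
    print(f"{left:>2}-{right:<2} frequency={frequency} density={frequency / width:.2f}")

# Area of each rectangle = height * width = frequency of that bin.
widths = [right - left for left, right in zip(edges, edges[1:])]
total_area = sum(d * w for d, w in zip(densities, widths))

# Normalized version: relative frequency densities, total area 1.
relative_area = sum(d / len(marks) * w for d, w in zip(densities, widths))
print("total area:", total_area, "normalized area:", relative_area)
```

The last two bins both contain six marks, but the wider bin is drawn half as tall, so the two rectangles have equal area.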
The categories are usually specified as consecutive, non-overlapping intervals of a variable. The categories (intervals) must be adjacent, and are often chosen to be of the same size. The rectangles of a histogram are drawn so that they touch each other to indicate that the original variable is continuous.

Histograms are used to plot the density of data, and often for density estimation: estimating the probability density function of the underlying variable. The total area of a histogram used for probability density is always normalized to 1. If the lengths of the intervals on the x-axis are all 1, then a histogram is identical to a relative frequency plot.

An alternative to the histogram is kernel density estimation, which uses a kernel to smooth samples. This will construct a smooth probability density function, which will in general more accurately reflect the underlying variable.

g. Pie charts
A pie chart (or a circle graph) is a circular chart divided into sectors, illustrating proportion. In a pie chart, the arc length of each sector (and consequently its central angle and area) is proportional to the quantity it represents. When angles are measured with 1 turn as the unit, a number of percent is identified with the same number of centiturns. Together, the sectors create a full disk. It is named for its resemblance to a pie which has been sliced. The sizes of the sectors are calculated by converting between percentages and degrees or by the use of a percentage protractor. The earliest known pie chart is generally credited to William Playfair's Statistical Breviary of 1801.

The pie chart is perhaps the most widely used statistical chart in the business world and the mass media.
However, it has been criticized, and some recommend avoiding it, pointing out in particular that it is difficult to compare different sections of a given pie chart, or to compare data across different pie charts. Pie charts can be an effective way of displaying information in some cases, in particular if the intent is to compare the size of a slice with the whole pie, rather than to compare the slices with one another. Pie charts work particularly well when the slices represent 25 to 50% of the data, but in general, other plots such as the bar chart or the dot plot, or non-graphical methods such as tables, may be better suited to representing certain information.

34. Explain the following terms with reference to research methodology:

a. Concept and Construct: Concepts and constructs are both abstractions, the former from our perceptions of reality and the latter from some invention that we have made. A concept is a bundle of meanings or characteristics associated with certain objects, events, situations and the like. Constructs are images or ideas developed specifically for theory building or research purposes. Constructs tend to be more abstract and complex than concepts. Both are critical to thinking and research processes, since one can think only in terms of meanings we have adopted. Precision in concepts and constructs is particularly important in research, since we usually attempt to measure meaning in some way.

b. Deduction and Induction: Both deduction and induction are basic forms of reasoning. While we may emphasize one over the other from time to time, both are necessary for research thinking. Deduction is reasoning from generalizations to specifics that flow logically from the generalizations. If the generalizations are true and the deductive form valid, the conclusions must also be true.
Induction is reasoning from specific instances or observations to some generalization that is purported to explain the instances. The specific instances are evidence and the conclusion is an inference that may be true.

c. Operational definition and Dictionary definition: Dictionary definitions are those used in most general discourse to describe the nature of concepts through word reference to other familiar concepts, preferably at a lower abstraction level. Operational definitions are established for the purposes of precision in measurement. With them we attempt to classify concepts or conditions unambiguously and use them in measurement. Operational definitions are essential for effective research, while dictionary definitions are more useful for general discourse purposes.

d. Hypothesis and Proposition: A proposition is a statement about concepts that can be evaluated as true or false when compared to observable phenomena. A hypothesis is a proposition made as a tentative statement configured for empirical testing. This further distinction permits the classification of hypotheses for different purposes, e.g., descriptive, relational, correlational, causal, etc.

e. Theory and Model: A theory is a set of systematically interrelated concepts, constructs, definitions, and propositions advanced to explain and predict phenomena or facts. Theories differ from models in that their function is explanation and prediction, whereas a model’s purpose is representation. A model is a representation of a system constructed for the purpose of investigating an aspect of that system, or the system as a whole. Models are used with equal success in applied or theoretical work.

f.
Scientific method and scientific attitude: The characteristics of the scientific method are confused in the literature, primarily because of the numerous philosophical perspectives one may take when “doing” science. A second problem stems from the fact that the emotional characteristics of scientists do not easily lend themselves to generalization. For our purposes, however, the scientific method is a systematic approach involving hypothesizing, observing, testing, and reasoning processes for the purpose of problem solving or amelioration. The scientific method may be summarized with a set of steps or stages, but these only hold for the simplest problems. In contrast to the mechanics of the process, the scientific attitude reflects the creative aspects that enable and sustain the research from preliminary thinking to discovery and on to the culmination of the project. Imagination, curiosity, intuition, and doubt are among the predispositions involved. One Nobel physicist described this aspect of science as doing one’s utmost with no holds barred.

g. Concept and Variable: Concepts are meanings abstracted from our observations; they classify or categorize objects or events that have common characteristics beyond a single observation (see a). A variable is a concept or construct to which numerals or values are assigned; this operationalization permits the construct or concept to be empirically tested. In informal usage, a variable is often used as a synonym for the construct or property being studied.

35. Explain the characteristics of good research.

Answer:
1. The purpose of the research should be clearly defined and common concepts should be used.
2. The research procedure used should be described in sufficient detail to permit another researcher to repeat the research for further advancement, keeping the continuity of what has already been attained.
3.
The procedural design of the research should be carefully planned to yield results that are as objective as possible.
4. The researcher should report, with complete frankness, flaws in the procedural design and estimate their effects upon the findings.
5. The analysis of data should be adequate to reveal its significance, and the methods of analysis used should be appropriate. The validity and reliability of the data should be checked carefully.
6. Conclusions should be confined to those justified by the data of the research and limited to those for which the data provide an adequate basis.
7. Greater confidence in research is warranted if the researcher is experienced, has a good reputation in research and is a person of integrity.

In other words, we can state the qualities of good research as under:
1. Good research is systematic: It means that research is structured with specified steps to be taken in a specified sequence in accordance with a well-defined set of rules. The systematic character of research does not rule out creative thinking, but it certainly does reject the use of guessing and intuition in arriving at conclusions.
2. Good research is logical: This implies that research is guided by the rules of logical reasoning, and the logical processes of induction and deduction are of great value in carrying out research. Induction is the process of reasoning from a part to the whole, whereas deduction is the process of reasoning from some premise to a conclusion which follows from that very premise. In fact, logical reasoning makes research more meaningful in the context of decision making.
3. Good research is empirical: It implies that research is related basically to one or more aspects of a real situation and deals with concrete data that provide a basis for the external validity of research results.
4.
Good research is replicable: This characteristic allows research results to be verified by replicating the study, thereby building a sound basis for decisions.

36. Explain one tailed and two tailed tests of significance with example.

Answer:
One Tailed Test: A statistical test in which the critical area of a distribution is one-sided, so that it is either greater than or less than a certain value, but not both. If the sample that is being tested falls into the one-sided critical area, the null hypothesis is rejected in favour of the alternative hypothesis. The one-tailed test gets its name from testing the area under one of the tails (sides) of a normal distribution, although the test can be used with other, non-normal distributions as well.

An example of when one would want to use a one-tailed test is the error rate of a factory. Let’s say a label manufacturer wants to make sure that errors on labels are below 1%. It would be too costly to have someone check every label, so the factory selects random samples of the labels and tests whether errors exceed 1% at whatever level of significance it chooses. This represents the implementation of a one-tailed test.

Example
Suppose we wanted to test a manufacturer's claim that there are, on average, 50 matches in a box. We could set up the following hypotheses:

H0: µ = 50, against H1: µ < 50 or H1: µ > 50

Either of these two alternative hypotheses would lead to a one-sided test. Presumably, we would want to test the null hypothesis against the first alternative hypothesis, since it would be useful to know if there are likely to be fewer than 50 matches, on average, in a box (no one would complain if they got the correct number of matches in a box or more).
Yet another alternative hypothesis could be tested against the same null, leading this time to a two-sided test:

H0: µ = 50, against H1: µ ≠ 50

Here, nothing specific can be said about the average number of matches in a box; only that, if we could reject the null hypothesis in our test, we would know that the average number of matches in a box is likely to be less than or greater than 50.

Two Tailed Test: A statistical test in which the critical area of a distribution is two-sided and which tests whether a sample is either greater than or less than a certain range of values. If the sample that is being tested falls into either of the critical areas, the null hypothesis is rejected in favour of the alternative hypothesis. The two-tailed test gets its name from testing the area under both of the tails (sides) of a normal distribution, although the test can be used with other, non-normal distributions.

An example of when one would want to use a two-tailed test is at a candy production/packaging plant. Let’s say the candy plant wants to make sure that the number of candies per bag is around 50. The factory is willing to accept between 45 and 55 candies per bag. It would be too costly to have someone check every bag, so the factory selects random samples of the bags, and tests whether the average number of candies exceeds 55 or is less than 45 at whatever level of significance it chooses.

37. Explain null and alternate hypothesis with example.

Answer:
Null Hypothesis: A type of hypothesis used in statistics that proposes that no statistical significance exists in a set of given observations. The null hypothesis attempts to show that no variation exists between variables, or that a single variable is no different from zero. It is presumed to be true until statistical evidence nullifies it in favour of an alternative hypothesis. The null hypothesis assumes that any kind of difference or significance you see in a set of data is due to chance.

For example, Chuck sees that his investment strategy produces higher average returns than simply buying and holding a stock. The null hypothesis claims that there is no difference between the two average returns, and Chuck has to assume this until the evidence shows otherwise. Refuting the null hypothesis would require showing statistical significance, which can be found using a variety of tests. If Chuck conducts one of these tests and finds that the difference between his returns and the buy-and-hold returns is significant, he can then reject the null hypothesis.

Alternate Hypothesis: The alternative hypothesis, H1, is a statement of what a statistical hypothesis test is set up to establish.
For example, in a clinical trial of a new drug, the alternative hypothesis might be that the new drug has a different effect, on average, compared to that of the current drug. We would write H1: the two drugs have different effects, on average. The alternative hypothesis might also be that the new drug is better, on average, than the current drug. In this case we would write H1: the new drug is better than the current drug, on average.

The final conclusion once the test has been carried out is always given in terms of the null hypothesis. We either "Reject H0 in favour of H1" or "Do not reject H0". We never conclude "Reject H1", or even "Accept H1". If we conclude "Do not reject H0", this does not necessarily mean that the null hypothesis is true; it only suggests that there is not sufficient evidence against H0 in favour of H1. Rejecting the null hypothesis, then, suggests that the alternative hypothesis may be true.

Summary:
1. A null hypothesis is a statistical hypothesis which is the original or default hypothesis, while any hypothesis other than the null is called an alternative hypothesis.
2. A null hypothesis is denoted by H0, while an alternative hypothesis is denoted by H1.
3. The alternative hypothesis is adopted only when the null hypothesis is rejected.
4. The null hypothesis is the default prediction (no effect or no difference), while the alternative hypothesis covers all other outcomes aside from the null.
5. Both the null and alternative hypotheses are necessary in statistical hypothesis testing in scientific, medical, and other research studies.
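To make the difference between one-tailed and two-tailed conclusions concrete, here is a minimal sketch of a z-test for the matchbox example (H0: µ = 50). The sample mean, the population standard deviation, the sample size and the 5% significance level are all hypothetical values assumed for this illustration, and a z-test presumes that the standard deviation is known.

```python
from math import sqrt
from statistics import NormalDist

# Hypothetical inputs (assumed for illustration, not taken from the notes).
mu_0 = 50.0          # value claimed under H0
sample_mean = 49.4   # observed average matches per box
sigma = 2.0          # assumed known population standard deviation
n = 36               # number of boxes sampled
alpha = 0.05         # chosen level of significance

# Test statistic: distance of the sample mean from mu_0 in standard errors.
z = (sample_mean - mu_0) / (sigma / sqrt(n))

standard_normal = NormalDist()

# One-tailed test, H1: mu < 50 (critical area in the lower tail only).
p_one_tailed = standard_normal.cdf(z)

# Two-tailed test, H1: mu != 50 (critical area split between both tails).
p_two_tailed = 2 * standard_normal.cdf(-abs(z))

reject_one_tailed = p_one_tailed < alpha
reject_two_tailed = p_two_tailed < alpha

for name, p, reject in [("one-tailed", p_one_tailed, reject_one_tailed),
                        ("two-tailed", p_two_tailed, reject_two_tailed)]:
    decision = "Reject H0 in favour of H1" if reject else "Do not reject H0"
    print(f"{name}: z = {z:.2f}, p = {p:.4f} -> {decision}")
```

With these assumed numbers the one-tailed test rejects H0 while the two-tailed test does not, because the two-tailed test spreads the same significance level over both tails. The choice between the two should be made before looking at the data.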