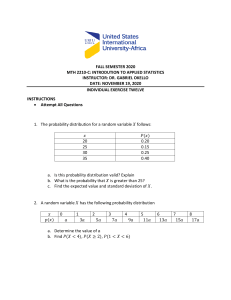

STAT6110/STAT3110

Statistical Inference

Topic 1- Probability and random samples

Jun Ma

Topic 1

Semester 1, 2020

Jun Ma (Topic 1)

STAT6110/STAT3110 Statistical Inference

Semester 1, 2020

Details

Unit Convenor: Jun Ma

- Location: 526 Level 5, 12 Wally’s Walk
- Phone: 9850 8548
- Email: jun.ma@mq.edu.au
- Consultation: Monday 1-3pm

Tutor: Sophia Shen

- Email: Sophia.Shen@mq.edu.au

Unit Outline

Topic 1: Probability and random samples

Topic 2: Large sample probability concepts

Topic 3: Estimation concepts

Topic 4: Likelihood

Topic 5: Estimation methods

Topic 6: Hypothesis testing concepts

Topic 7: Hypothesis testing methods

Topic 8: Bayesian inference

Statistical inference

This unit is about the theory behind Statistical Inference

Statistical inference is the science of drawing conclusions on the basis

of numerical information that is subject to randomness

The core principle is that information about a population can be

obtained using a “representative” sample from that population

A “representative” sample requires that the sample has been taken at

random from the population

To model variability in random samples we use probability models

This means we need probability concepts to study statistical inference

Population and Sample

[Figure: a sample (e.g. 300 adults chosen at random) is drawn from the population (e.g. all adults in a population of interest). Inferences based on the sample (e.g. at least one-third of adults have high cholesterol) are extrapolated to the population.]

Topic 1 Outline: Probability and random samples

Populations and random samples

Probability and relative frequency

Probability and set theory

Probability axioms

Random variables and probability distributions

Joint probability distributions

Independence

Common probability distributions including the normal distribution

Sampling variation and statistical inference

Probability and random samples

We usually interpret probability to be the long-run frequency with

which an event occurs in repeated trials

We can then model random variation in our sample using the

probabilistic variation in repeated samples from the population

This leads to the Frequentist approach to statistical inference, which

is the most common approach and will be our main focus in this unit

There is also another approach called Bayesian statistical inference,

which is based on a different interpretation of probability (we will do

one lecture on this later in the unit)

Relative frequency

Consider N “samples” taken in identical fashion from a population of

interest

Consider an event of interest that could possibly occur in each of

these samples

Let f_N be the number of samples in which the event occurred

Then f_N / N is called the relative frequency with which the event occurred

The probability of the event is then the limit of this relative frequency

$$\text{probability} = \lim_{N \to \infty} \frac{f_N}{N}$$
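The limiting behaviour of the relative frequency can be illustrated with a small simulation. This is only a sketch: the true event probability of 0.3, the seed and the function name `relative_frequency` are assumptions made for illustration and are not part of the unit material.

```python
import random

def relative_frequency(n_samples, event_prob=0.3, seed=0):
    """Return f_N / N for an event whose (assumed) true probability is event_prob."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    # f_N counts the samples in which the event occurred
    f_n = sum(rng.random() < event_prob for _ in range(n_samples))
    return f_n / n_samples

# The relative frequency should settle near 0.3 as N grows
for n in (10, 100, 10_000, 100_000):
    print(n, relative_frequency(n))
```

For small N the relative frequency can be far from 0.3, while for large N it is typically very close, which is the long-run frequency interpretation in action.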

Set theory

A rigorous description of probability theory uses concepts from set

theory

A set is a collection of objects

An element of a set is a member of this collection

If ω is an element of a set Ω we write ω ∈ Ω

A is a subset of a set Ω, written A ⊂ Ω, if ω ∈ A implies ω ∈ Ω

Set operations

Denote a union as A ∪ B, which means that ω ∈ A ∪ B if and only if ω ∈ A or ω ∈ B.

Denote an intersection as A ∩ B, which means that ω ∈ A ∩ B if and only if ω ∈ A and ω ∈ B.

Denote a complement of A as A^c (or Ā), so that ω ∈ A^c means that ω ∈ Ω but ω ∉ A.

Outcomes, sample spaces and events

Random samples involve uncertainty or variability

The term outcome refers to a given realisation of this sampling

process

The set of all possible outcomes is referred to as the sample space

A subset of outcomes in the sample space is called an event

An event can be interpreted as an observation that could occur in our

sample e.g. a coin toss yields a head

Each event has a probability assigned to it reflecting the “chance”

that it will occur

Example

Suppose our sample consists of two individuals for whom we record

whether or not a particular infection is present or absent

Denote presence or absence of the infection by 1 and 0, respectively

One possible outcome is that both individuals have the infection,

denoted by (1, 1)

The sample space is the set of all possible outcomes, that is, all

possible pairs of infection statuses for the two individuals

Ω = {(0, 0), (0, 1), (1, 0), (1, 1)}

The event “there is exactly one infected individual in the sample” is

denoted by the subset of the sample space {(0, 1), (1, 0)}

Probability and sets

Since events are defined mathematically as sets, we can use set

operations to construct new events from existing events

Consider two events E1 and E2 , then the new event E1 ∩ E2 is

interpreted as the event that both E1 and E2 occur

The new event E1 ∪ E2 is interpreted as the event that either E1 or E2

or both occur

The new event E1^c is interpreted as the event that E1 does not occur

The empty set ∅ is interpreted as an impossible event

If E1 ∩ E2 = ∅ then E1 and E2 are called mutually exclusive events

with the interpretation that the two events cannot both occur

Example (cont.)

The event “either 1 or 2 individuals in the sample are infected”

corresponds to the event union

{(0, 1), (1, 0)} ∪ {(1, 1)} = {(0, 1), (1, 0), (1, 1)}

The event “both 1 and 2 individuals in the sample are infected”

corresponds to the event intersection

{(0, 1), (1, 0)} ∩ {(1, 1)} = ∅

which is impossible since these events are mutually exclusive
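The event operations in this example map directly onto set operations in code. The following is a minimal sketch using Python's built-in `set` type; the variable names are chosen here for illustration only.

```python
from itertools import product

# Sample space: all pairs of infection statuses (0 = absent, 1 = present)
omega = set(product((0, 1), repeat=2))

# Events are subsets of the sample space
exactly_one = {w for w in omega if sum(w) == 1}   # {(0, 1), (1, 0)}
both_infected = {w for w in omega if sum(w) == 2}  # {(1, 1)}

union = exactly_one | both_infected         # "either 1 or 2 individuals are infected"
intersection = exactly_one & both_infected  # empty set: mutually exclusive events
complement = omega - exactly_one            # "not exactly one infected"

print(sorted(union))
print(intersection)
print(sorted(complement))
```

An empty intersection is how mutually exclusive events show up in this representation.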

Valid probabilities

Probability is a function of events, or a function of subsets of the

sample space

Consider an event E that is a subset of the sample space Ω

Then Pr(E) denotes the probability that event E will occur

The function “Pr” is allowed to be any function of subsets of the

sample space that satisfies certain requirements that make it a valid

probability

Any valid probability must satisfy the following intuitively natural

requirements, called axioms

Axioms of probability

1. The probability of any event E is a number between 0 and 1 inclusive. That is,

   0 ≤ Pr(E) ≤ 1

2. The probability of a certain event is 1 and the probability of an impossible event is 0. That is,

   Pr(Ω) = 1 and Pr(∅) = 0

3. If two events E1 and E2 are mutually exclusive, so they cannot both occur, the probability that either event occurs is the sum of their respective probabilities. That is,

   if E1 ∩ E2 = ∅ then Pr(E1 ∪ E2) = Pr(E1) + Pr(E2)

Probability properties

Many properties follow from the probability axioms. For example:

1. If A ⊂ B, then P(A) ≤ P(B)

2. P(A^c) = 1 − P(A)

3. P(A ∪ B) = P(A) + P(B) − P(A ∩ B)

These types of properties can be illustrated using a Venn diagram similar

to those on slide 10 (see also tutorial)

Example (cont.) - 3 probability assignments

| Event | Probability 1 | Probability 2 | Probability 3 |
|---|---|---|---|
| ∅ | 0 | 0 | 0 |
| {(0, 0)} | 0.9025 | 0.3025 | 0.3000 |
| {(0, 1)} | 0.0475 | 0.2475 | 0.3000 |
| {(1, 0)} | 0.0475 | 0.2475 | 0.3000 |
| {(1, 1)} | 0.0025 | 0.2025 | 0.3000 |
| {(0, 0), (0, 1)} | 0.9500 | 0.5500 | 0.6000 |
| {(0, 0), (1, 0)} | 0.9500 | 0.5500 | 0.6000 |
| {(0, 0), (1, 1)} | 0.9050 | 0.5050 | 0.6000 |
| {(0, 1), (1, 0)} | 0.0950 | 0.4950 | 0.6000 |
| {(0, 1), (1, 1)} | 0.0500 | 0.4500 | 0.6000 |
| {(1, 0), (1, 1)} | 0.0500 | 0.4500 | 0.6000 |
| {(0, 0), (0, 1), (1, 0)} | 0.9975 | 0.7975 | 0.9000 |
| {(0, 0), (0, 1), (1, 1)} | 0.9525 | 0.7525 | 0.9000 |
| {(0, 0), (1, 0), (1, 1)} | 0.9525 | 0.7525 | 0.9000 |
| {(0, 1), (1, 0), (1, 1)} | 0.0975 | 0.6975 | 0.9000 |
| Ω | 1 | 1 | 1 |

Example (cont.)

The probability axioms are only satisfied for probability assignments 1

and 2. Probability assignment 3 is invalid because

Pr(Ω) = Pr({(0, 0), (0, 1), (1, 0), (1, 1)}) = 1 ≠ Pr({(0, 0)}) + Pr({(0, 1)}) + Pr({(1, 0)}) + Pr({(1, 1)}) = 1.2

Consider event E1 “exactly one individual is infected” and event E2

“the first individual is infected”

E1 = {(0, 1), (1, 0)}

E2 = {(1, 0), (1, 1)}

E1 ∩ E2 = {(1, 0)}

Using property 3 from slide 18, Pr(E1 ∪ E2 ) is

0.0950+0.0500−0.0475 = 0.0975 or 0.4950+0.4500−0.2475 = 0.6975

In each case the calculation agrees with the probability assigned to

the event {(0, 1), (1, 0), (1, 1)}
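The validity check for the three probability assignments can be automated. In the sketch below, assignments 1 and 2 are rebuilt by summing the tabulated single-outcome probabilities, which reproduces the tabulated values for the larger events. Assignment 3 is entered as tabulated, where the value depends only on the number of outcomes in the event. The helper names `from_outcomes` and `satisfies_axioms` are hypothetical.

```python
from itertools import combinations, product

# Sample space for two individuals: 0 = infection absent, 1 = present
OMEGA = tuple(product((0, 1), repeat=2))
# All 16 events (subsets of the sample space)
EVENTS = [frozenset(c) for r in range(len(OMEGA) + 1)
          for c in combinations(OMEGA, r)]

def from_outcomes(point_probs):
    """Build Pr(E) for every event by summing single-outcome probabilities."""
    return {e: sum(point_probs[w] for w in e) for e in EVENTS}

assignment_1 = from_outcomes(dict(zip(OMEGA, (0.9025, 0.0475, 0.0475, 0.0025))))
assignment_2 = from_outcomes(dict(zip(OMEGA, (0.3025, 0.2475, 0.2475, 0.2025))))
# Assignment 3 as tabulated: the value depends only on the size of the event
assignment_3 = {e: (0.0, 0.3, 0.6, 0.9, 1.0)[len(e)] for e in EVENTS}

def satisfies_axioms(pr, tol=1e-9):
    """Check the three axioms for a function pr defined on all events."""
    if any(not (-tol <= p <= 1 + tol) for p in pr.values()):
        return False                      # axiom 1: 0 <= Pr(E) <= 1
    if abs(pr[frozenset(OMEGA)] - 1) > tol or abs(pr[frozenset()]) > tol:
        return False                      # axiom 2: Pr(omega) = 1, Pr(empty) = 0
    for a, b in combinations(EVENTS, 2):  # axiom 3: additivity for disjoint events
        if not (a & b) and abs(pr[a | b] - (pr[a] + pr[b])) > tol:
            return False
    return True

for name, pr in (("1", assignment_1), ("2", assignment_2), ("3", assignment_3)):
    print("assignment", name, "valid:", satisfies_axioms(pr))
```

Assignment 3 fails the additivity check, for instance on the disjoint pair {(0, 0), (0, 1)} and {(1, 0), (1, 1)}, whose probabilities sum to 1.2 rather than Pr(Ω) = 1.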

Random variables

A random variable is a function of outcomes in the sample space

It can be thought of as an uncertain quantity that takes on values

with particular probabilities

A random variable that can take on only a discrete set of values is referred to as a discrete random variable

A random variable that can take on a continuum of values is referred

to as a continuous random variable

For example: the gender of a randomly sampled individual is discrete

while their cholesterol level is continuous

Random variables and probabilities

Statements about a random variable taking on a particular value or

having a value in a particular range are events

For a random variable X and a given number x, statements such as

X = x and X ≤ x are events

We can therefore assign probabilities Pr(X = x) and Pr(X ≤ x) to

such events

A general convention is that random variables are denoted by

upper-case letters, while the values that they can take on are

denoted by lower-case letters

This distinction will be important in subsequent lectures

Probability distributions

The probability distribution for a random variable is a rule for

assigning a probability to any event stating that the random variable

takes on a specific value or lies in a specific range

There are various ways to specify the probability distribution of a

random variable

We will use 3 functions for specifying the probability distribution of a

random variable

1. Cumulative distribution function (or simply called distribution function)

2. Probability function

3. Probability density function

These are not the only functions that can be used to specify a

probability distribution, but they are the only ones we will use

Cumulative distribution function

The cumulative distribution function of a random variable X is a function F_X(x) such that

$$F_X(x) = \Pr(X \le x)$$

for any value x

Any valid cumulative distribution function must therefore satisfy the following three properties:

(i) $\lim_{x \to \infty} F_X(x) = 1$

(ii) $\lim_{x \to -\infty} F_X(x) = 0$

(iii) $F_X(x_1) \ge F_X(x_2)$ where $x_1 \ge x_2$

Probability function

For a discrete random variable X , the probability function is a

function that gives the probability that the random variable will equal

any specific value

The probability function is

$$f_X(x) = \Pr(X = x)$$

where x is any number in the set of possible values that X can take on

For any discrete random variable, $\sum_x f_X(x) = 1$, where the summation is taken over all possible values that X can take on

Probability density function

For a continuous random variable X, the probability density function is the derivative of the cumulative distribution function

$$f_X(x) = \frac{d}{dx} F_X(x)$$

It specifies the probability that a continuous random variable will fall into any given range through the relationship

$$\Pr(l \le X \le u) = \int_l^u f_X(x)\,dx$$

It must therefore always integrate to 1 over the range (−∞, ∞)

The probability density function does not give the probability that a

continuous random variable is equal to a specific value

For a continuous random variable it is always the case that

Pr(X = x) = 0 (can you think why?)
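The link between a density and range probabilities can be checked numerically. The sketch below assumes an exponential density with rate 1 purely as a convenient example (it is not taken from these slides), and uses a simple midpoint rule for the integral; `integrate`, `exp_pdf` and `exp_cdf` are illustrative names.

```python
import math

def exp_pdf(x, rate=1.0):
    """Density of an exponential distribution (assumed example density)."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0

def exp_cdf(x, rate=1.0):
    """Cumulative distribution function matching exp_pdf."""
    return 1.0 - math.exp(-rate * x) if x >= 0 else 0.0

def integrate(f, lower, upper, steps=10_000):
    """Midpoint-rule approximation to the integral of f over [lower, upper]."""
    width = (upper - lower) / steps
    return sum(f(lower + (i + 0.5) * width) for i in range(steps)) * width

prob_range = integrate(exp_pdf, 0.5, 2.0)             # Pr(0.5 <= X <= 2)
prob_point = integrate(exp_pdf, 1.0, 1.0)             # Pr(X = 1): zero-width range
total = integrate(exp_pdf, 0.0, 50.0, steps=200_000)  # approximately the whole range

print(prob_range, exp_cdf(2.0) - exp_cdf(0.5))
print(prob_point)
print(total)
```

The zero-width integral gives one way to see why Pr(X = x) = 0 for a continuous random variable: the probability of a range shrinks to zero as the range shrinks to a single point.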

Attributes of probability distributions: Expectation

The probability distribution of a random variable has various

attributes that summarise the way the random variable tends to

behave

The expectation, or mean, of a random variable is the average value

that the random variable takes on

For discrete random variables the expectation is

$$E(X) = \sum_x x f_X(x)$$

where the summation is over all possible values of the random variable X

Attributes of probability distributions: Expectation (cont.)

For continuous random variables the expectation is given by

$$E(X) = \int_{-\infty}^{\infty} x f_X(x)\,dx$$

Since the sum or integral of a linear function yields a linear function of the sum or integral, expectations possess an important linearity property, namely, for constants c0 and c1

$$E(c_0 + c_1 X) = c_0 + c_1 E(X)$$

Attributes of probability distributions: Variance

The variance of a random variable is a measure of the degree of

variation that a random variable exhibits

It is defined as

$$\mathrm{Var}(X) = E\left[\left(X - E(X)\right)^2\right] = E(X^2) - E(X)^2$$

for both continuous and discrete random variables

Unlike expectations, the linearity property does not hold for variances, but is replaced by the equally important property

$$\mathrm{Var}(c_0 + c_1 X) = c_1^2\,\mathrm{Var}(X)$$

Attributes of probability distributions: Percentiles

Percentiles are another important attribute

For α ∈ (0, 1), the α-percentile of a probability distribution is the

point below which 100α% of the distribution falls

The α-percentile of a probability distribution with cumulative

distribution function FX (x) is the point pα that satisfies

FX (pα ) = α

For example, the 0.5 percentile, called the median, is the point below

which half of the probability distribution lies

The 0.25 and 0.75 percentiles, called quartiles, specify the points below which one-quarter and three-quarters of the distribution lie

Other percentiles of a probability distribution will also be of interest,

particularly when we come to discuss confidence intervals in

subsequent topics.

Example (cont.)

Define a random variable T to be the number infected in the sample

of 2 people

T is a discrete random variable since its possible values are 0, 1 and 2

The table gives the value of T for each outcome in the sample space

The table also gives the probability distribution of T under the

probability assignment 1 discussed earlier

| t | Event T = t | f_T(t) | F_T(t) |
|---|---|---|---|
| 0 | {(0, 0)} | 0.9025 | 0.9025 |
| 1 | {(0, 1), (1, 0)} | 0.0950 | 0.9975 |
| 2 | {(1, 1)} | 0.0025 | 1 |

E(T) = 0 × 0.9025 + 1 × 0.0950 + 2 × 0.0025 = 0.1

Var(T) = (0² × 0.9025 + 1² × 0.0950 + 2² × 0.0025) − 0.1² = 0.095
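The expectation and variance of T can be reproduced from its probability function. The sketch below also checks the linearity property of expectations and the scaling property of variances, using the arbitrary constants c0 = 3 and c1 = 2 (chosen here for illustration). The helper names are hypothetical.

```python
# Probability function of T under probability assignment 1
pmf_T = {0: 0.9025, 1: 0.0950, 2: 0.0025}

def expectation(pmf):
    """E(X) = sum of x * f(x) over all possible values x."""
    return sum(x * p for x, p in pmf.items())

def variance(pmf):
    """Var(X) = E(X^2) - E(X)^2."""
    return sum(x ** 2 * p for x, p in pmf.items()) - expectation(pmf) ** 2

def cdf(pmf, x):
    """F(x) = Pr(X <= x)."""
    return sum(p for value, p in pmf.items() if value <= x)

# Distribution of c0 + c1 * T with c0 = 3 and c1 = 2 (illustrative constants)
c0, c1 = 3, 2
pmf_transformed = {c0 + c1 * t: p for t, p in pmf_T.items()}

print(expectation(pmf_T), variance(pmf_T), cdf(pmf_T, 1))
print(expectation(pmf_transformed), c0 + c1 * expectation(pmf_T))
print(variance(pmf_transformed), c1 ** 2 * variance(pmf_T))
```

The last two lines print matching pairs up to floating-point rounding, in line with E(c0 + c1 X) = c0 + c1 E(X) and Var(c0 + c1 X) = c1² Var(X).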

Conditional probability

The probability of an event might change once we know that some other event has occurred; in that case the event depends on the other event

For two events E1 and E2, the conditional probability that E1 occurs given that E2 has occurred is denoted Pr(E1 | E2) and is defined as

$$\Pr(E_1 \mid E_2) = \frac{\Pr(E_1 \cap E_2)}{\Pr(E_2)}$$

This is defined only for events E2 that are not impossible, so that Pr(E2) ≠ 0 in the denominator

It does not make sense for us to condition on the occurrence of an

impossible event

Independence

A property that applies to both events and random variables

Using the definition of conditional probability, two events E1 and E2

are independent events if

Pr(E1 | E2) = Pr(E1)

The occurrence of the event E2 does not affect the probability of

occurrence of the event E1 (and vice versa)

We can re-express this definition by saying that E1 and E2 are

independent events if they satisfy the multiplicative property

Pr(E1 ∩ E2) = Pr(E1) Pr(E2)

Independent random variables

Statistical inference makes more use of the concept of independence

when applied to random variables

Consider two random variables X1 and X2 , with cumulative

distribution functions F1 (x1 ) and F2 (x2 )

X1 and X2 are said to be independent random variables if

Pr(X1 ≤ x1 | X2 ≤ x2 ) = Pr(X1 ≤ x1 ) = F1 (x1 )

Pr(X2 ≤ x2 | X1 ≤ x1 ) = Pr(X2 ≤ x2 ) = F2 (x2 )

where x1 and x2 are in the range of possible values of X1 and X2

Knowing the value of one random variable does not affect the

probability distribution of the other

Independent random variables (cont.)

Like independence of events, independence of random variables can

be defined using the multiplicative property

Pr({X1 ≤ x1 }∩{X2 ≤ x2 }) = Pr(X1 ≤ x1 ) Pr(X2 ≤ x2 ) = F1 (x1 )F2 (x2 )

We can see from this form that independence of random variables is

defined in terms of independence of the two events X1 ≤ x1 and

X2 ≤ x2

Joint probability distributions

The above discussion introduces us to the concept of the joint

probability distribution of two random variables

This generalises the definition of a probability distribution for a single random variable to define a distribution for two or more random variables

The joint probability distribution of two random variables is a rule

for assigning probabilities to any event stating that the two random

variables simultaneously take on specific values or lie in specific ranges

Like the probability distribution of a single random variable, the joint

probability distribution can be characterised by various functions

Joint cumulative distribution function

The first such function is a generalisation of the cumulative

distribution function

Consider the shorthand notation

Pr(X1 ≤ x1 , X2 ≤ x2 ) ≡ Pr({X1 ≤ x1 } ∩ {X2 ≤ x2 })

Then the joint cumulative distribution function of two random

variables X1 and X2 is the function of two variables

FX1 ,X2 (x1 , x2 ) = Pr(X1 ≤ x1 , X2 ≤ x2 )

So independence of two random variables is equivalent to their joint

cumulative distribution function factoring into the product of their

individual cumulative distribution functions

Joint probability function

The joint probability function of two discrete random variables X1

and X2 is the function of two variables

fX1 ,X2 (x1 , x2 ) = Pr(X1 = x1 , X2 = x2 )

The multiplicative property for independence of two discrete random

variables can equivalently be expressed in terms of their joint

probability function

That is, two discrete random variables X1 and X2 are independent if

fX1 ,X2 (x1 , x2 ) = f1 (x1 )f2 (x2 )

where f1 (x1 ) and f2 (x2 ) are the probability functions of X1 and X2

Joint probability density function

The joint probability density function of two continuous random variables X1 and X2 is the function of two variables

$$f_{X_1,X_2}(x_1, x_2) = \frac{\partial^2}{\partial x_1\,\partial x_2} F_{X_1,X_2}(x_1, x_2)$$

where the symbol ∂ means partial differentiation of a multivariable

function, rather than the symbol d used in univariable differentiation

The joint probability density function specifies the probability that the

two continuous random variables will simultaneously fall into any two

given ranges through the relationship

$$\Pr(l_1 \le X_1 \le u_1,\; l_2 \le X_2 \le u_2) = \int_{l_1}^{u_1} \int_{l_2}^{u_2} f_{X_1,X_2}(x_1, x_2)\,dx_2\,dx_1$$

Correlation and covariance

The covariance of X and Y is defined as

$$\mathrm{Cov}(X, Y) = E\left[\left(X - E(X)\right)\left(Y - E(Y)\right)\right] = E(XY) - E(X)E(Y)$$

We say that X and Y are uncorrelated when Cov(X , Y ) = 0, i.e.

when

E(XY ) = E(X )E(Y )

Being uncorrelated random variables is a weaker property than being

independent random variables

Independent implies uncorrelated but not vice versa

Covariance is a generalisation of variance

Cov(X , X ) = Var(X )

Correlation and covariance (cont.)

A measure of the extent to which two random variables depart from

being uncorrelated is the correlation

$$\mathrm{Corr}(X, Y) = \frac{\mathrm{Cov}(X, Y)}{\sqrt{\mathrm{Var}(X)\,\mathrm{Var}(Y)}}$$

Correlation is scaled such that it always lies between −1 and 1, with 0

corresponding to being uncorrelated

It is important in studying the linear relationship between two

variables, with the extremes of −1 and 1 corresponding to a perfect

negative and positive linear relationship, respectively

Although being uncorrelated implies that there is no linear

relationship between two variables, it does not preclude that some

other relationship exists. This is another reason why independence is

a stronger property than being uncorrelated

Correlation example

Suppose (X, Y) can be either

(2, 2) with 10% probability,

(−1, 1) with 40% probability,

(1, −1) with 40% probability,

(−2, −2) with 10% probability.

The random variables X and Y are certainly dependent, since if we know what one of them is, we can figure out what the other one is too.

[Figure: plot of the four possible (X, Y) points, with axes X and Y.]

Correlation example (cont.)

On the other hand, E[XY], E[X] and E[Y] are all zero; for instance,

E[XY] = 10% × 2 × 2 + 40% × (−1) × 1 + 40% × 1 × (−1) + 10% × (−2) × (−2)

= 0.4 − 0.4 − 0.4 + 0.4

= 0;

so the correlation between X and Y is zero

X and Y are uncorrelated but not independent
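The two claims in this example, zero covariance but no independence, can be verified directly from the joint probability function. This is a minimal sketch; the variable names are chosen here for illustration.

```python
# Joint probability function of (X, Y) from the example
joint = {(2, 2): 0.1, (-1, 1): 0.4, (1, -1): 0.4, (-2, -2): 0.1}

e_x = sum(p * x for (x, y), p in joint.items())
e_y = sum(p * y for (x, y), p in joint.items())
e_xy = sum(p * x * y for (x, y), p in joint.items())
cov = e_xy - e_x * e_y  # Cov(X, Y) = E(XY) - E(X)E(Y)

# Independence would require Pr(X = 2, Y = 2) = Pr(X = 2) * Pr(Y = 2)
p_x2 = sum(p for (x, y), p in joint.items() if x == 2)
p_y2 = sum(p for (x, y), p in joint.items() if y == 2)
p_joint = joint[(2, 2)]

print("Cov(X, Y) =", cov)
print("Pr(X=2, Y=2) =", p_joint, "but Pr(X=2) Pr(Y=2) =", p_x2 * p_y2)
```

The covariance is zero while the joint probability 0.1 differs from the product 0.01, so the multiplicative property fails.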

Independent random samples

The main use of the concept of independence in this unit is for

modelling a random sample from a population

We will often use a collection of n random variables to represent n

observations in a random sample and assume that these observations

are independent

For a random sample, independence means that one observation does

not affect the probability distribution of another observation

n random variables X = (X1, ..., Xn) are (mutually) independent if their joint cumulative distribution function factors into the product of their n individual cumulative distribution functions, or likewise for the joint density or probability functions

$$F_{\mathbf X}(\mathbf x) = \Pr(X_1 \le x_1, \dots, X_n \le x_n) = \prod_{i=1}^{n} F_i(x_i), \qquad f_{\mathbf X}(\mathbf x) = \prod_{i=1}^{n} f_i(x_i)$$

Independence example

Random variable T0 is 1 if only one individual is infected and 0

otherwise

Random variable T1 is 1 if the first individual is infected and 0

otherwise

Random variable T2 is 1 if the second individual is infected and 0

otherwise

Consider events T0 = 1, T1 = 1, T2 = 1, denoted as E0, E1, E2

E0 = {(0, 1), (1, 0)} and Pr(E0) = 0.095 based on Table 1

Likewise we have E1 = {(1, 0), (1, 1)} and Pr(E1) = 0.05, as well as E2 = {(0, 1), (1, 1)} and Pr(E2) = 0.05

Conditional probability

$$\Pr(E_1 \mid E_0) = \frac{\Pr(E_1 \cap E_0)}{\Pr(E_0)} = \frac{\Pr(\{(1, 0)\})}{0.095} = \frac{0.0475}{0.095} = 0.5$$

Independence example (cont.)

Thus, given we know exactly one person is infected, it is equally likely

to be individual 1 or 2

E0 and E1 are not independent events since Pr(E1 | E0) ≠ Pr(E1)

Knowledge that there is one infected individual provides information

about whether individual 1 is infected

On the other hand, T1 = 1 and T2 = 1 are independent events

Pr(E1 ∩ E2 ) = Pr({(1, 1)}) = 0.0025 = 0.05 × 0.05 = Pr(E1 ) Pr(E2 )

The same process can be followed for any other values of the random variables T1 and T2 to show that

Pr(T1 = t1, T2 = t2) = Pr(T1 = t1) Pr(T2 = t2) for t1 = 0, 1 and t2 = 0, 1

That is, the random variables T1 and T2 are independent random

variables
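The checks in this example can be carried out over all values of T1 and T2 at once. The sketch below uses the single-outcome probabilities of probability assignment 1; the helper `pr` and the event functions `e0` and `e1` are hypothetical names.

```python
# Single-outcome probabilities under probability assignment 1
point_probs = {(0, 0): 0.9025, (0, 1): 0.0475, (1, 0): 0.0475, (1, 1): 0.0025}

def pr(event):
    """Probability of the set of outcomes for which event(outcome) is true."""
    return sum(p for outcome, p in point_probs.items() if event(outcome))

def e0(w):
    return sum(w) == 1   # exactly one individual infected (T0 = 1)

def e1(w):
    return w[0] == 1     # first individual infected (T1 = 1)

# Conditional probability Pr(E1 | E0) = Pr(E1 and E0) / Pr(E0)
cond_e1_given_e0 = pr(lambda w: e0(w) and e1(w)) / pr(e0)

# Multiplicative property for every pair of values (t1, t2)
factorises = all(
    abs(pr(lambda w: w == (t1, t2))
        - pr(lambda w: w[0] == t1) * pr(lambda w: w[1] == t2)) < 1e-12
    for t1 in (0, 1) for t2 in (0, 1)
)

print("Pr(E1 | E0) =", cond_e1_given_e0, "vs Pr(E1) =", pr(e1))
print("T1 and T2 independent:", factorises)
```

Pr(E1 | E0) differs from Pr(E1), so E0 and E1 are dependent, while the joint probability function of T1 and T2 factorises for all four value pairs.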

Common probability distributions

Probability distributions commonly used in statistical inference are

based on a simple and flexible function for fX (x) or FX (x)

In subsequent lectures we will use many common probability

distributions

All of these are summarised in the accompanying document “Common

Probability Distributions” (which will be reviewed in the lecture)

Common discrete distributions include: binomial, Poisson, geometric,

negative binomial and hypergeometric distributions

Common continuous distributions include: normal, exponential, gamma, uniform, beta, t, χ² and F distributions

Normal distribution

The most important distribution for statistical inference

In large samples it unifies many statistical inference tools

The large sample concepts will be considered in Topics 2 and 3

For now we will simply review some of the key features

Consider a continuous random variable X with µ = E(X) and σ² = Var(X)

X has a normal distribution, written X ∼ N(µ, σ²), if the probability density function of X has the form

$$f_X(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right), \qquad x \in (-\infty, \infty)$$

Standard normal distribution

The cumulative distribution function F_X(x) has no convenient closed form and needs to be calculated numerically

This is done using a special case, called the standard normal distribution, which is the N(0, 1) distribution

Let the standard normal cumulative distribution function be

$$\Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} \exp\left(-\frac{u^2}{2}\right) du$$

Then the cumulative distribution function associated with any other normal distribution is

$$F_X(x) = \Phi\left(\frac{x - \mu}{\sigma}\right)$$

The α-percentile of the standard normal distribution is z_α, where

$$\Phi(z_\alpha) = \alpha \quad \text{or} \quad z_\alpha = \Phi^{-1}(\alpha)$$
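In practice Φ and its inverse are evaluated numerically by software. As a sketch, the Python standard library offers `math.erf` and `statistics.NormalDist`. The identity Φ(x) = ½(1 + erf(x/√2)) used below is a standard result rather than something stated on these slides, and the example values µ = 10 and σ = 2 are arbitrary.

```python
import math
from statistics import NormalDist

def phi(x):
    """Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def normal_cdf(x, mu, sigma):
    """CDF of N(mu, sigma^2) obtained by standardising: Phi((x - mu) / sigma)."""
    return phi((x - mu) / sigma)

# alpha-percentile of the standard normal: z_alpha = Phi^{-1}(alpha)
z_975 = NormalDist().inv_cdf(0.975)

print(phi(0.0))                    # median of N(0, 1) is 0
print(normal_cdf(13.0, 10.0, 2.0))  # same as Phi(1.5)
print(z_975)                        # roughly 1.96
```

`NormalDist(mu, sigma)` takes the standard deviation, not the variance, as its second argument, which is worth keeping in mind given the N(µ, σ²) notation used here.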

Standard normal distribution - percentiles

[Figure: normal probability density curves, plotted as probability density against x. One panel shows x from −4 to 4 with density values from 0.0 to 0.4; the other marks µ − 2σ, µ and µ + 2σ on the x axis and 1/(σ√(2π)) on the density axis.]

Bivariate normal distribution

The bivariate normal distribution is a joint probability distribution

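Consider two normally distributed random variables X and Y with Corr(X, Y) = ρ

We call µ the mean vector and Σ the variance-covariance matrix

$$\boldsymbol\mu = \begin{pmatrix} \mu_X \\ \mu_Y \end{pmatrix} \quad \text{and} \quad \Sigma = \begin{pmatrix} \sigma_X^2 & \rho\sigma_X\sigma_Y \\ \rho\sigma_X\sigma_Y & \sigma_Y^2 \end{pmatrix}$$

Then X and Y have a bivariate normal distribution, written

$$\mathbf X \sim N_2(\boldsymbol\mu, \Sigma) \quad \text{where} \quad \mathbf X = \begin{pmatrix} X \\ Y \end{pmatrix}$$

if their joint probability density function is of the form

$$f_{X,Y}(x, y) = f_{\mathbf X}(\mathbf x) = \frac{1}{2\pi\sigma_X\sigma_Y\sqrt{1 - \rho^2}} \exp\left(-\frac{1}{2}(\mathbf x - \boldsymbol\mu)^T \Sigma^{-1} (\mathbf x - \boldsymbol\mu)\right)$$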

Multivariate normal distribution

Generalisation of the normal distribution, giving the joint distribution

of a k × 1 vector of random variables X = (X1 , . . . , Xk )T

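The joint probability density function is

$$f_{\mathbf X}(\mathbf x) = \left((2\pi)^k \det(\Sigma)\right)^{-\frac{1}{2}} \exp\left(-\frac{1}{2}(\mathbf x - \boldsymbol\mu)^T \Sigma^{-1} (\mathbf x - \boldsymbol\mu)\right), \qquad \mathbf x \in \mathbb{R}^k$$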

where det(Σ) is the matrix determinant of Σ

µ = (µ1 , . . . , µk )T is called the mean vector

The k × k matrix Σ is called the variance-covariance matrix and must

be a non-negative definite matrix

Its main use in this unit is as the distribution of estimators in large

samples – more on this in later topics

Inference example

We will now consider how to use a probability model for the sampling

variation in a simple introductory example

Example: Assessment of disease prevalence in a population

- We are interested in the proportion of a population that has a particular disease, called θ
- We sample n individuals at random from the population
- We observe the number of individuals who have the disease
- We assume our sample is truly random and not biased, i.e. assume we have not systematically over- or under-sampled diseased individuals
- How would we use the sample to make inferences about θ?

Inference about the population

The population prevalence θ is considered to be a fixed constant

Our goal is to use the sample to estimate this unknown constant and

also to place some appropriate uncertainty limits around our estimate

The starting point is the natural estimate of the unknown population prevalence, that is, the observed proportion in our sample

By using the observed sample prevalence to make inferences about

the disease prevalence in the population, we are extrapolating from

the sample to the population

The reason why such sampling and extrapolation is necessary is that

we can’t assess the entire population

Sampling variation

How much do we “trust” the observed sample prevalence as an

estimate of the population prevalence?

The answer depends on the sampling variation

Sampling variability reflects the extent to which the sample

prevalence tends to vary from sample to sample

If our sample included n = 1000 individuals we would “trust” the

observed sample prevalence more than if our sample included n = 100

individuals

Consider a plot of repeated samples with different sample sizes

Figure 1: Results from 10 prevalence studies with sample size 100, and 10 prevalence studies with sample size 1000.

[Plot: sample prevalence (%) on the horizontal axis, from 5 to 25, with results shown separately for sample size 1000 and sample size 100.]
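A comparison of this kind can be mimicked by simulation. The sketch below is not the code behind Figure 1: the true prevalence θ = 0.15, the seed and the function names are assumptions made for illustration. It simulates repeated prevalence studies and compares the spread of the sample prevalence for the two sample sizes.

```python
import random
from statistics import mean, pstdev

def sample_prevalence(n, theta, rng):
    """Proportion diseased among n individuals sampled independently."""
    return sum(rng.random() < theta for _ in range(n)) / n

def simulate(n, theta=0.15, studies=10, seed=1):
    """Sample prevalences from `studies` repeated studies of size n (theta is assumed)."""
    rng = random.Random(seed)
    return [sample_prevalence(n, theta, rng) for _ in range(studies)]

small_studies = simulate(100)    # 10 studies with n = 100
large_studies = simulate(1000)   # 10 studies with n = 1000
print([round(100 * p, 1) for p in small_studies])
print([round(100 * p, 1) for p in large_studies])

# With many repetitions the difference in sampling variation is clear
spread_small = pstdev(simulate(100, studies=200))
spread_large = pstdev(simulate(1000, studies=200))
print(spread_small, spread_large)
```

The standard deviation of the sample prevalence across studies is noticeably smaller for n = 1000, which matches the intuition that larger samples deserve more "trust".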

Probability model

In order to quantify our “trust” in the sample prevalence, we need

some way of describing its variability

This can be done using a probability model

In this example the binomial distribution provides a natural model for the way the sampling has been carried out assuming:

- n is fixed, not random
- individuals are sampled independently

We then have a probability model for the observed number of diseased individuals X and the sample prevalence

$$P = \frac{X}{n}$$

Binomial model

$$\Pr(X = x) = \frac{n!}{(n - x)!\,x!}\,\theta^{x} (1 - \theta)^{n - x}, \qquad x = 0, \dots, n$$

or

$$\Pr(P = p) = \frac{n!}{(n - pn)!\,(pn)!}\,\theta^{pn} (1 - \theta)^{n - pn}, \qquad pn = 0, \dots, n$$

We can use this distribution to quantify our trust in the sample

prevalence as an indication of the population prevalence, particularly

using the distribution’s mean and variance

We can also use this model to calculate a confidence interval, which

is an important summary of our “trust” in the sample

We will come back to this in Topic 3, after discussing the large

sample normal approximation to the binomial distribution and some

key estimation concepts
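The mean and variance of the binomial model can be computed directly from its probability function. The sketch below uses the illustrative values n = 100 and θ = 0.15 (assumptions, not values from the slides) and compares the results with the standard binomial results E(X) = nθ and Var(X) = nθ(1 − θ), which give E(P) = θ and Var(P) = θ(1 − θ)/n for the sample prevalence.

```python
from math import comb

def binomial_pmf(x, n, theta):
    """Pr(X = x) = n! / ((n - x)! x!) * theta^x * (1 - theta)^(n - x)."""
    return comb(n, x) * theta ** x * (1 - theta) ** (n - x)

n, theta = 100, 0.15  # illustrative values only
pmf = [binomial_pmf(x, n, theta) for x in range(n + 1)]

mean_x = sum(x * p for x, p in enumerate(pmf))
var_x = sum(x * x * p for x, p in enumerate(pmf)) - mean_x ** 2

# Sample prevalence P = X / n, using the linearity and scaling properties
mean_p = mean_x / n
var_p = var_x / n ** 2

print(sum(pmf))                          # probabilities sum to 1
print(mean_x, n * theta)                 # E(X) = n * theta
print(var_x, n * theta * (1 - theta))    # Var(X) = n * theta * (1 - theta)
print(mean_p, var_p)
```

Because Var(P) = θ(1 − θ)/n shrinks as n grows, the model quantifies why a larger sample gives a more trustworthy sample prevalence.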
