The Internet of Medical

Things (IoMT)

Scrivener Publishing

100 Cummings Center, Suite 541J

Beverly, MA 01915-6106

Advances in Learning Analytics for Intelligent Cloud-IoT Systems

Series Editors: Dr. Souvik Pal and Dr. Dac-Nhuong Le

Scope: The role of adaptation, learning analytics, computational intelligence, and data analytics in the

field of Cloud-IoT Systems is becoming increasingly essential and intertwined. The capability of an

intelligent system depends on various self-decision making algorithms in IoT Devices. IoT based smart

systems generate a large amount of data (big data) that cannot be processed by traditional data processing

algorithms and applications. Hence, this book series involves different computational methods incorporated

within the system with the help of Analytics Reasoning and Sense-making in Big Data, which is centered

in the Cloud and IoT-enabled environments.

The series seeks volumes that are empirical studies, theoretical and numerical analysis, and novel research

findings. The series encourages cross-fertilization of highlighting research and knowledge of Data Analytics,

Machine Learning, Data Science, and IoT sustainable developments.

Please send proposals to:

Dr. Souvik Pal

Department of Computer Science and Engineering

Global Institute of Management and Technology

Krishna Nagar

West Bengal, India

souvikpal22@gmail.com

Dr. Dac-Nhuong Le

Faculty of Information Technology, Haiphong University, Haiphong, Vietnam

huongld@hus.edu.vn

Publishers at Scrivener

Martin Scrivener (martin@scrivenerpublishing.com)

Phillip Carmical (pcarmical@scrivenerpublishing.com)

The Internet of Medical

Things (IoMT)

Healthcare Transformation

Edited by

R.J. Hemalatha

D. Akila

D. Balaganesh

and

Anand Paul

This edition first published 2022 by John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, USA

and Scrivener Publishing LLC, 100 Cummings Center, Suite 541J, Beverly, MA 01915, USA

© 2022 Scrivener Publishing LLC

For more information about Scrivener publications please visit www.scrivenerpublishing.com.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or

transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, except as permitted by law. Advice on how to obtain permission to reuse material from this title

is available at http://www.wiley.com/go/permissions.

Wiley Global Headquarters

111 River Street, Hoboken, NJ 07030, USA

For details of our global editorial offices, customer services, and more information about Wiley products visit us at www.wiley.com.

Limit of Liability/Disclaimer of Warranty

While the publisher and authors have used their best efforts in preparing this work, they make no representations or warranties with respect to the accuracy or completeness of the contents of this work and specifically disclaim all warranties, including without limitation any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives, written sales materials, or promotional statements for this work. The fact that an organization, website, or product is referred to in this work as a citation and/or potential source of further information does not mean that the publisher and authors endorse the information or services the organization, website, or product may provide or recommendations it may make. This work is sold with the understanding that the publisher is not engaged in rendering professional services. The advice and strategies contained herein may not be suitable for your situation. You should consult with a specialist where appropriate. Neither the publisher nor authors shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages. Further, readers should be aware that websites listed in this work may have changed or disappeared between when this work was written and when it is read.

Library of Congress Cataloging-in-Publication Data

ISBN 978-1-119-76883-8

Cover image: Pixabay.Com

Cover design by Russell Richardson

Set in size of 11pt and Minion Pro by Manila Typesetting Company, Makati, Philippines

Printed in the USA


Contents

Preface

1 In Silico Molecular Modeling and Docking Analysis in Lung

Cancer Cell Proteins

Manisha Sritharan and Asita Elengoe

1.1 Introduction

1.2 Methodology

1.2.1 Sequence of Protein

1.2.2 Homology Modeling

1.2.3 Physiochemical Characterization

1.2.4 Determination of Secondary Models

1.2.5 Determination of Stability of Protein Structures

1.2.6 Identification of Active Site

1.2.7 Preparation of Ligand Model

1.2.8 Docking of Target Protein and Phytocompound

1.3 Results and Discussion

1.3.1 Determination of Physiochemical Characters

1.3.2 Prediction of Secondary Structures

1.3.3 Verification of Stability of Protein Structures

1.3.4 Identification of Active Sites

1.3.5 Target Protein-Ligand Docking

1.4 Conclusion

References

2 Medical Data Classification in Cloud Computing Using Soft

Computing With Voting Classifier: A Review

Saurabh Sharma, Harish K. Shakya and Ashish Mishra

2.1 Introduction

2.1.1 Security in Medical Big Data Analytics

2.1.1.1 Capture

2.1.1.2 Cleaning


2.1.1.3 Storage

2.1.1.4 Security

2.1.1.5 Stewardship

2.2 Access Control–Based Security

2.2.1 Authentication

2.2.1.1 User Password Authentication

2.2.1.2 Windows-Based User Authentication

2.2.1.3 Directory-Based Authentication

2.2.1.4 Certificate-Based Authentication

2.2.1.5 Smart Card–Based Authentication

2.2.1.6 Biometrics

2.2.1.7 Grid-Based Authentication

2.2.1.8 Knowledge-Based Authentication

2.2.1.9 Machine Authentication

2.2.1.10 One-Time Password (OTP)

2.2.1.11 Authority

2.2.1.12 Global Authorization

2.3 System Model

2.3.1 Role and Purpose of Design

2.3.1.1 Patients

2.3.1.2 Cloud Server

2.3.1.3 Doctor

2.4 Data Classification

2.4.1 Access Control

2.4.2 Content

2.4.3 Storage

2.4.4 Soft Computing Techniques for Data Classification

2.5 Related Work

2.6 Conclusion

References

3 Research Challenges in Pre-Copy Virtual Machine Migration

in Cloud Environment

Nirmala Devi N. and Vengatesh Kumar S.

3.1 Introduction

3.1.1 Cloud Computing

3.1.1.1 Cloud Service Provider

3.1.1.2 Data Storage and Security

3.1.2 Virtualization

3.1.2.1 Virtualization Terminology

3.1.3 Approach to Virtualization


3.1.4 Processor Issues

3.1.5 Memory Management

3.1.6 Benefits of Virtualization

3.1.7 Virtual Machine Migration

3.1.7.1 Pre-Copy

3.1.7.2 Post-Copy

3.1.7.3 Stop and Copy

3.2 Existing Technology and Its Review

3.3 Research Design

3.3.1 Basic Overview of VM Pre-Copy Live Migration

3.3.2 Improved Pre-Copy Approach

3.3.3 Time Series–Based Pre-Copy Approach

3.3.4 Memory-Bound Pre-Copy Live Migration

3.3.5 Three-Phase Optimization Method (TPO)

3.3.6 Multiphase Pre-Copy Strategy

3.4 Results

3.4.1 Finding

3.5 Discussion

3.5.1 Limitation

3.5.2 Future Scope

3.6 Conclusion

References

4 Estimation and Analysis of Prediction Rate of Pre-Trained Deep

Learning Network in Classification of Brain Tumor MRI Images

Krishnamoorthy Raghavan Narasu, Anima Nanda,

Marshiana D., Bestley Joe and Vinoth Kumar

4.1 Introduction

4.2 Classes of Brain Tumors

4.3 Literature Survey

4.4 Methodology

4.5 Conclusion

References

5 An Intelligent Healthcare Monitoring System for Coma Patients

Bethanney Janney J., T. Sudhakar, Sindu Divakaran,

Chandana H. and Caroline Chriselda L.

5.1 Introduction

5.2 Related Works

5.3 Materials and Methods

5.3.1 Existing System


5.3.2 Proposed System

5.3.3 Working

5.3.4 Module Description

5.3.4.1 Pulse Sensor

5.3.4.2 Temperature Sensor

5.3.4.3 Spirometer

5.3.4.4 OpenCV (Open Source Computer Vision)

5.3.4.5 Raspberry Pi

5.3.4.6 USB Camera

5.3.4.7 AVR Module

5.3.4.8 Power Supply

5.3.4.9 USB to TTL Converter

5.3.4.10 EEG of Comatose Patients

5.4 Results and Discussion

5.5 Conclusion

References

6 Deep Learning Interpretation of Biomedical Data

T.R. Thamizhvani, R. Chandrasekaran and T.R. Ineyathendral

6.1 Introduction

6.2 Deep Learning Models

6.2.1 Recurrent Neural Networks

6.2.2 LSTM/GRU Networks

6.2.3 Convolutional Neural Networks

6.2.4 Deep Belief Networks

6.2.5 Deep Stacking Networks

6.3 Interpretation of Deep Learning With Biomedical Data

6.4 Conclusion

References

7 Evolution of Electronic Health Records

G. Umashankar, Abinaya P., J. Premkumar, T. Sudhakar

and S. Krishnakumar

7.1 Introduction

7.2 Traditional Paper Method

7.3 IoMT

7.4 Telemedicine and IoMT

7.4.1 Advantages of Telemedicine

7.4.2 Drawbacks

7.4.3 IoMT Advantages with Telemedicine

7.4.4 Limitations of IoMT With Telemedicine


7.5 Cyber Security

7.6 Materials and Methods

7.6.1 General Method

7.6.2 Data Security

7.7 Literature Review

7.8 Applications of Electronic Health Records

7.8.1 Clinical Research

7.8.1.1 Introduction

7.8.1.2 Data Significance and Evaluation

7.8.1.3 Conclusion

7.8.2 Diagnosis and Monitoring

7.8.2.1 Introduction

7.8.2.2 Contributions

7.8.2.3 Applications

7.8.3 Track Medical Progression

7.8.3.1 Introduction

7.8.3.2 Method Used

7.8.3.3 Conclusion

7.8.4 Wearable Devices

7.8.4.1 Introduction

7.8.4.2 Proposed Method

7.8.4.3 Conclusion

7.9 Results and Discussion

7.10 Challenges Ahead

7.11 Conclusion

References

8 Architecture of IoMT in Healthcare

A. Josephin Arockia Dhiyya

8.1 Introduction

8.1.1 On-Body Segment

8.1.2 In-Home Segment

8.1.3 Network Segment Layer

8.1.4 In-Clinic Segment

8.1.5 In-Hospital Segment

8.1.6 Future of IoMT?

8.2 Preferences of the Internet of Things

8.2.1 Cost Decrease

8.2.2 Proficiency and Efficiency

8.2.3 Business Openings


8.2.4 Client Experience

8.2.5 Portability and Nimbleness

8.3 IoMT Progress in COVID-19 Situations: Presentation

8.3.1 The IoMT Environment

8.3.2 IoMT Pandemic Alleviation Design

8.3.3 Man-Made Consciousness and Large Information

Innovation in IoMT

8.4 Major Applications of IoMT

References

9 Performance Assessment of IoMT Services and Protocols

A. Keerthana and Karthiga

9.1 Introduction

9.2 IoMT Architecture and Platform

9.2.1 Architecture

9.2.2 Devices Integration Layer

9.3 Types of Protocols

9.3.1 Internet Protocol for Medical IoT Smart Devices

9.3.1.1 HTTP

9.3.1.2 Message Queue Telemetry Transport

(MQTT)

9.3.1.3 Constrained Application Protocol

(CoAP)

9.3.1.4 AMQP: Advanced Message Queuing

Protocol (AMQP)

9.3.1.5 Extensible Message and Presence Protocol

(XMPP)

9.3.1.6 DDS

9.4 Testing Process in IoMT

9.5 Issues and Challenges

9.6 Conclusion

References


10 Performance Evaluation of Wearable IoT-Enabled Mesh

Network for Rural Health Monitoring

G. Merlin Sheeba and Y. Bevish Jinila

10.1 Introduction

10.2 Proposed System Framework

10.2.1 System Description

10.2.2 Health Monitoring Center

10.2.2.1 Body Sensor


10.2.2.2 Wireless Sensor Coordinator/Transceiver

10.2.2.3 Ontology Information Center

10.2.2.4 Mesh Backbone-Placement and Routing

10.3 Experimental Evaluation

10.4 Performance Evaluation

10.4.1 Energy Consumption

10.4.2 Survival Rate

10.4.3 End-to-End Delay

10.5 Conclusion

References

11 Management of Diabetes Mellitus (DM) for Children

and Adults Based on Internet of Things (IoT)

Krishnakumar S., Umashankar G., Lumen Christy V., Vikas

and Hemalatha R.J.

11.1 Introduction

11.1.1 Prevalence

11.1.2 Management of Diabetes

11.1.3 Blood Glucose Monitoring

11.1.4 Continuous Glucose Monitors

11.1.5 Minimally Invasive Glucose Monitors

11.1.6 Non-Invasive Glucose Monitors

11.1.7 Existing System

11.2 Materials and Methods

11.2.1 Artificial Neural Network

11.2.2 Data Acquisition

11.2.3 Histogram Calculation

11.2.4 IoT Cloud Computing

11.2.5 Proposed System

11.2.6 Advantages

11.2.7 Disadvantages

11.2.8 Applications

11.2.9 Arduino Pro Mini

11.2.10 LM78XX

11.2.11 MAX30100

11.2.12 LM35 Temperature Sensors

11.3 Results and Discussion

11.4 Summary

11.5 Conclusion

References


12 Wearable Health Monitoring Systems Using IoMT

Jaya Rubi and A. Josephin Arockia Dhivya

12.1 Introduction

12.2 IoMT in Developing Wearable Health Surveillance System

12.2.1 A Wearable Health Monitoring System with

Multi-Parameters

12.2.2 Wearable Input Device for Smart Glasses Based

on a Wristband-Type Motion-Aware Touch Panel

12.2.3 Smart Belt: A Wearable Device for Managing

Abdominal Obesity

12.2.4 Smart Bracelets: Automating the Personal Safety

Using Wearable Smart Jewelry

12.3 Vital Parameters That Can Be Monitored Using Wearable

Devices

12.3.1 Electrocardiogram

12.3.2 Heart Rate

12.3.3 Blood Pressure

12.3.4 Respiration Rate

12.3.5 Blood Oxygen Saturation

12.3.6 Blood Glucose

12.3.7 Skin Perspiration

12.3.8 Capnography

12.3.9 Body Temperature

12.4 Challenges Faced in Customizing Wearable Devices

12.4.1 Data Privacy

12.4.2 Data Exchange

12.4.3 Availability of Resources

12.4.4 Storage Capacity

12.4.5 Modeling the Relationship Between Acquired

Measurement and Diseases

12.4.6 Real-Time Processing

12.4.7 Intelligence in Medical Care

12.5 Conclusion

References


13 Future of Healthcare: Biomedical Big Data Analysis and IoMT

Tamiziniyan G. and Keerthana A.

13.1 Introduction

13.2 Big Data and IoT in Healthcare Industry

13.3 Biomedical Big Data Types


13.3.1 Electronic Health Records

13.3.2 Administrative and Claims Data

13.3.3 International Patient Disease Registries

13.3.4 National Health Surveys

13.3.5 Clinical Research and Trials Data

13.4 Biomedical Data Acquisition Using IoT

13.4.1 Wearable Sensor Suit

13.4.2 Smartphones

13.4.3 Smart Watches

13.5 Biomedical Data Management Using IoT

13.5.1 Apache Spark Framework

13.5.2 MapReduce

13.5.3 Apache Hadoop

13.5.4 Clustering Algorithms

13.5.5 K-Means Clustering

13.5.6 Fuzzy C-Means Clustering

13.5.7 DBSCAN

13.6 Impact of Big Data and IoMT in Healthcare

13.7 Discussions and Conclusions

References

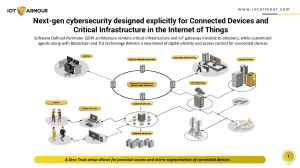

14 Medical Data Security Using Blockchain With Soft

Computing Techniques: A Review

Saurabh Sharma, Harish K. Shakya and Ashish Mishra

14.1 Introduction

14.2 Blockchain

14.2.1 Blockchain Architecture

14.2.2 Types of Blockchain Architecture

14.2.3 Blockchain Applications

14.2.4 General Applications of the Blockchain

14.3 Blockchain as a Decentralized Security Framework

14.3.1 Characteristics of Blockchain

14.3.2 Limitations of Blockchain Technology

14.4 Existing Healthcare Data Predictive Analytics Using Soft

Computing Techniques in Data Science

14.4.1 Data Science in Healthcare

14.5 Literature Review: Medical Data Security in Cloud

Storage

14.6 Conclusion

References


15 Electronic Health Records: A Transitional View

Srividhya G.

15.1 Introduction

15.2 Ancient Medical Record, 1600 BC

15.3 Greek Medical Record

15.4 Islamic Medical Record

15.5 European Civilization

15.6 Swedish Health Record System

15.7 French and German Contributions

15.8 American Descriptions

15.9 Beginning of Electronic Health Recording

15.10 Conclusion

References


Index


Preface

It is a pleasure for us to put forth this book, The Internet of Medical Things (IoMT): Healthcare Transformation. Digital technologies have entered various sectors of our daily lives and have been successful in influencing and shaping our day-to-day activities. The Internet of Medical Things is one such discipline; it attracts a lot of interest because it connects various medical devices and allows them to communicate with one another over a network, forming a system of advanced smart devices. This book introduces IoMT in the healthcare sector, covering the latest technological implementations at the diagnostic as well as the therapeutic level. The security and privacy of health records are a major concern, and several solutions are discussed in this book. IoMT provides significant advantages for people's wellbeing by increasing quality of life and reducing medical expenses. IoMT plays a major role in maintaining a smart healthcare system, as securing the privacy of health records in turn makes the healthcare sector more secure and reliable. Artificial Intelligence is another enabling technology that helps IoMT build smart defensive mechanisms for a variety of applications, such as assisting doctors in almost every area of their expertise, including clinical decision-making. Through Machine Learning and Deep Learning techniques, the system can learn normal and abnormal decisions using the data generated by health workers and professionals and the feedback of patients. This book demonstrates how connectivity between medical devices and sensors is streamlining clinical workflow management and leading to an overall improvement in patient care, both inside care facility walls and in remote locations. The book is a collection of state-of-the-art approaches to applications of IoMT in various healthcare sectors. It will be very beneficial for new researchers and practitioners working in the field who want to quickly learn the best methods for IoMT.


• Chapter 1 concentrates on the study of three-dimensional (3-D) models of lung cancer cell line proteins (epidermal growth factor receptor (EGFR), K-Ras oncogene protein, and tumor suppressor (TP53)). The models were generated, and their binding affinities with curcumin, ellagic acid, and quercetin were assessed through local docking.

• Chapter 2 focuses on cloud computing and electronic health record (EHR) services, in which sensitive patient information must be encrypted before it is outsourced in order to protect its confidentiality. The chapter also covers the effective use of cloud data, such as keyword search and data sharing, and the challenging problems associated with the concept of soft computing.

• Chapter 3 elucidates cloud computing concepts, security concerns in clouds and data centers, live migration and its importance for cloud computing, and the role of virtual machine (VM) migration in cloud computing. It provides a holistic view of the pre-copy migration technique and explores ways of reducing downtime and migration time. This chapter compares different pre-copy algorithms and evaluates their parameters to provide a better solution.

• Chapter 4 concentrates on Deep Learning, which has gained interest in various fields such as image classification, self-driving cars, natural language processing, and healthcare applications. The chapter focuses on solving complex problems in a more effective and efficient manner. It explains how deep learning techniques are useful for predicting and classifying brain tumor cells. Pre-trained neural networks such as AlexNet, GoogLeNet, and ResNet-101 are trained on the datasets, and their performance is analysed in detail. ResNet-101 achieved the highest accuracy.

• Chapter 5 illustrates an intelligent healthcare monitoring system for coma patients that examines the patient's vital signs on a continuous basis, detects patient movement, and sends updated information to the doctor and central station through IoMT. Consistent tracking and observation of these health issues improves medical assurance and allows for tracking coma events.


• Chapter 6 details the Deep Learning process, which resembles human functions in processing and defining patterns used for decision-making. Deep learning algorithms are mainly designed and developed using neural networks that learn, without supervision, from unstructured data. Biomedical data possess time and frequency domain features for analysis and classification. Thus, deep learning algorithms are used for interpretation and classification of biomedical big data.

• Chapter 7 discusses how electronic health records automate and streamline the clinician's workflow and make the process easy. They can generate the complete history of the patient and assist in further treatment, which helps the patient recover more effectively. Electronic health records are designed according to the needs of the sector in which they are implemented. The main aims of electronic health records are to make them available to the concerned person wherever they are, to reduce the workload of maintaining clinical book records, and to use the details for research purposes with the concerned person's acknowledgement.

• Chapter 8 elaborates on the technical architecture of IoMT in relation to biomedical applications. These concepts are widely used to educate people about medical applications of IoMT. The chapter also gives a detailed study of the future scope of IoMT in healthcare.

• Chapter 9 provides knowledge on the different performance assessment techniques and the types of protocols that best suit data transfer and increase safety. The chapter identifies the protocol that helps save energy and is most useful for the customer. It will help researchers select the best IoT protocol for healthcare applications. Testing tools and frameworks provide the knowledge needed to assess the protocols.

• Chapter 10 addresses the issue of a Health Monitoring Centre (HMC) in rural areas. The HMC continuously monitors and records the physiological parameters of the patients in care using wearable biosensors. Elderly patients suffering from chronic diseases are monitored periodically or continuously under the care of a physician. To enhance the performance of the system, a smart and intelligent mesh backbone is integrated for fast transmission of critical medical data to a remote health IoT cloud server.

• Chapter 11 concentrates on Diabetes Mellitus (DM), which is one of the most widely recognized dangerous illnesses for all age groups in the world. Patients need to make the best individualized choices about the day-to-day management of their diabetes. A noninvasive glucose sensor is used to measure the patient's glucose value from the fingertip, and other sensors are also connected to the patient to collect relevant data. A fully functional IoT-based eHealth platform that integrates humanoid robot assistance for diabetes has been designed successfully. The platform supports a constantly connected network between patients and their caretakers across physical distance, thereby improving patients' engagement with their caretakers while limiting the cost, time, and effort of conventional periodic clinic visits.

• Chapter 12 explores the concepts of wearable health monitoring systems using IoMT technology. Additionally, this chapter provides a brief review of the challenges and applications of the customized wearable healthcare systems that are trending these days. The basic idea is to study in detail the recent developments in IoMT technologies, their drawbacks, and related future advancements. The recent innovations, implications, and key issues are discussed in the context of the framework.

• Chapter 13 provides knowledge on biomedical big data analysis, which has a huge impact on personalized medicine. Some challenges in big data analysis, such as data acquisition, data accuracy, and data security, are discussed. Huge volumes of healthcare data can be managed by integrating biomedical data management. This chapter provides brief information on the different software used to manage data in the healthcare domain. The impact of big data and IoMT in healthcare will enhance data analytics research.

• Chapter 14 concentrates on blockchain, which is a highly secure and decentralized networking platform of multiple computers called nodes. Predictive analysis, soft computing (SC), optimization, and data science are becoming increasingly important. In this chapter, the authors investigate privacy issues around large medical data sets stored in the remote cloud. Their proposed framework ensures data privacy, integrity, and access control over the shared data with better efficiency. It reduces the turnaround time for data sharing, improves the decision-making process, and reduces the overall cost while providing better security of electronic medical records.

• Chapter 15 discusses the evolution of the electronic health record, starting with the history of the health record system in the Egyptian era, when the first health record was written, all the way to the modern computerized health record system. This chapter also covers the various documentation procedures for health records that were followed from ancient times onward and by other civilizations around the world.

We thank the chapter authors most profusely for their contributions written during the pandemic.

R. J. Hemalatha

D. Akila

D. Balaganesh

Anand Paul

January 2022

1

In Silico Molecular Modeling and Docking

Analysis in Lung Cancer Cell Proteins

Manisha Sritharan1 and Asita Elengoe2*

1 Department of Science and Biotechnology, Faculty of Engineering and Life Sciences, University of Selangor, Bestari Jaya, Selangor, Malaysia

2 Department of Biotechnology, Faculty of Science, Lincoln University College, Petaling Jaya, Selangor, Malaysia

Abstract

In this study, three-dimensional (3D) models of lung cancer cell line proteins [epidermal growth factor receptor (EGFR), K-ras oncogene protein, and tumor suppressor (TP53)] were generated, and their binding affinities with curcumin, ellagic acid, and quercetin were assessed through local docking. First, SWISS-MODEL was used to build the lung cancer cell line protein models, which were then visualized with the PyMOL software. Next, the ExPASy ProtParam proteomics server was used to evaluate the physical and chemical parameters of the protein structures. The protein models were then validated using the PROCHECK, ProQ, ERRAT, and Verify3D programs. Lastly, the protein models were docked with curcumin, ellagic acid, and quercetin using the BSP-SLIM server. All three protein models were adequate and of exceptional quality. Curcumin showed binding energies with EGFR, K-ras oncogene protein, and TP53 of 5.320, 2.730, and 1.633 kcal/mol, respectively. Ellagic acid showed binding energies with EGFR, K-ras oncogene protein, and TP53 of −2.892, 0.921, and 0.054 kcal/mol, respectively. Quercetin showed binding energies with EGFR, K-ras oncogene protein, and TP53 of −1.249, −1.154, and −0.809 kcal/mol, respectively. EGFR had the strongest binding with ellagic acid, while K-ras oncogene protein and TP53 had the strongest interaction with quercetin. All these potential drug candidates can be further assessed through laboratory experiments to identify their function.

Keywords: EGFR, K-ras, TP53, curcumin, ellagic acid, quercetin, docking
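The ranking stated in the abstract can be checked directly from the reported numbers. The short Python sketch below is an illustration only, not the authors' workflow: it assumes the usual docking convention that a lower (more negative) binding energy indicates stronger binding, and the names `binding_energy` and `strongest_binder` are hypothetical. The values are copied from the abstract.

```python
# Binding energies (kcal/mol) as reported in the abstract.
# Assumption: lower (more negative) energy means stronger binding.
binding_energy = {
    "curcumin": {"EGFR": 5.320, "K-ras": 2.730, "TP53": 1.633},
    "ellagic acid": {"EGFR": -2.892, "K-ras": 0.921, "TP53": 0.054},
    "quercetin": {"EGFR": -1.249, "K-ras": -1.154, "TP53": -0.809},
}


def strongest_binder(protein):
    """Return (ligand, energy) for the ligand with the lowest energy."""
    ligand = min(binding_energy, key=lambda name: binding_energy[name][protein])
    return ligand, binding_energy[ligand][protein]


if __name__ == "__main__":
    for protein in ("EGFR", "K-ras", "TP53"):
        ligand, energy = strongest_binder(protein)
        print(f"{protein}: {ligand} ({energy:.3f} kcal/mol)")
```

Under that assumption, the script selects ellagic acid for EGFR and quercetin for both K-ras and TP53, which agrees with the conclusion stated in the abstract.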

*Corresponding author: asitaelengoe@yahoo.com

R. J. Hemalatha, D. Akila, D. Balaganesh and Anand Paul (eds.) The Internet of Medical Things (IoMT):

Healthcare Transformation, (1–22) © 2022 Scrivener Publishing LLC


1.1 Introduction

Lung cancer is the leading cause of cancer deaths in both men and women worldwide. According to a World Health Organization (WHO) survey, lung cancer caused 19.1 deaths per 100,000 in Malaysia, or 4,088 deaths per year (3.22% of all deaths) [1]. Moreover, 1.69 million deaths due to lung cancer were recorded worldwide in 2015. Furthermore, a study in the UK estimated that there will be 23.6 million new cases of cancer worldwide each year by 2030 [1]. The main cause of lung cancer deaths is smoking; almost 8% of people died because of it. The second cause is exposure to secondhand smoke. Thus, it is very clear that smoking is the leading risk factor for lung cancer. However, not everyone who gets lung cancer is a smoker: many people with lung cancer are former smokers, while many others never smoked at all. Radiation exposure, an unhealthy lifestyle, secondhand smoke, air pollution, genetic markers, prolonged inhalation of asbestos and chemicals, and other factors can also cause lung cancer in non-smokers [2].

Most lung cancer signs do not appear until the cancer has spread, although some people with early lung cancer do have symptoms. Generally, the symptoms of lung cancer are a cough that does not go away and instead gets worse, shortness of breath, chest pain, feeling tired or weak, and new onset of wheezing; some lung cancers can even cause syndromes [3]. A number of tests can be conducted to look for cancerous cells: an X-ray image of the lung that could disclose an abnormal mass or nodule, a CT scan to reveal small lesions in the lungs that may not be detected on X-ray, blood investigations, sputum cytology, and tissue biopsy [4]. Lung cancer treatments currently in use include adjuvant therapy, which may involve radiation, chemotherapy, targeted therapy, or immunotherapy.

Small-cell lung carcinoma (SCLC) and non–small-cell lung carcinoma (NSCLC) are the two main clinicopathological classes of lung cancer. They are also known as bronchogenic carcinomas because they arise from the bronchi within the lungs [4]. Lung cancer is believed to arise after a series of continuous pathological changes (preneoplastic lesions), which are very often discovered accompanying lung cancers as well as in the respiratory mucosa of smokers. Apart from that, the genes involved in lung cancer are epidermal growth factor receptor (EGFR), KRAS, MET, LKB1, BRAF, ALK, RET, and tumor suppressor gene (TP53) [5].


The three most common genes in lung cancer are EGFR, KRAS, and TP53, and the structure of these genes is explored in this study. EGFR is a transmembrane protein with cytoplasmic kinase activity that transduces essential growth factor signaling from the extracellular milieu to the cell. According to da Cunha Santos, more than 60% of NSCLCs express EGFR, which has become an important target for the treatment of these tumors [6]. In addition, the KRAS mutation is the most common oncogenic driver mutation in patients with NSCLC and confers a poor prognosis in the metastatic setting, making it an important target for drug development. It is difficult to treat patients with KRAS mutations since there is no targeted therapy yet [7]. TP53 mutations are the most common mutations found in lung cancer, and their frequency increases with tobacco consumption [8]. In this study, three compounds (curcumin, ellagic acid, and quercetin) were used for docking with the three mutant proteins. Curcumin has an excellent safety profile and targets a range of diseases, with solid evidence at the molecular level. Thus, improvements in formulation can aid in developing it as a therapeutic drug [9]. Next, ellagic acid can bind to cancer cells to inactivate them, and it has been reported to be effective against cancer in rats and mice [10]. Quercetin is a plant pigment (flavonoid) with antioxidant and anti-inflammatory effects. It has been shown to inhibit the proliferation of cancer cells, according to Pao-Chen Kuo et al. [11].

Bioinformatics is a multidisciplinary field that creates methods and software tools for storing, extracting, organizing, and interpreting biological data. Analyzing biological data draws on a combination of bioinformatics with computer science, statistics, physics, chemistry, mathematics, and engineering. The field is currently growing rapidly because computational methods are cheap and considerably faster than experimental approaches. Computational biology tools for protein modeling (e.g., SWISS-MODEL, EasyModeller, and MODELLER), molecular dynamics simulation (e.g., GROMACS and AMBER), and docking (e.g., AutoDock version 4.2, AutoDock Vina, SwissDock, and HADDOCK) help in designing structure-based drugs by allowing the interaction between target proteins (cancer cell proteins) and ligands (phytocomponents) to be studied.

The aim of this research is to generate three-dimensional (3D) models of lung cancer cell line proteins (EGFR, K-ras oncogene protein, and TP53) and to estimate their binding affinities with curcumin, ellagic acid, and quercetin via a docking approach.


1.2 Methodology

1.2.1 Sequence of Protein

The complete amino acid sequences of EGFR (GI: 110002567), K-ras oncogene protein (GI: 186764), and TP53 (GI: 1233272225) were obtained from the National Center for Biotechnology Information (NCBI). EGFR consists of 464 amino acids, K-ras oncogene protein contains 188 amino acids, and TP53 consists of 346 amino acids.
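As a minimal sketch of this step, the snippet below parses a FASTA-formatted record and reports the sequence length, which is the kind of check used to confirm the residue counts quoted above. The record shown is a hypothetical toy fragment, not one of the actual NCBI entries, and the function name `parse_fasta` is an illustrative assumption.

```python
from typing import Dict


def parse_fasta(text: str) -> Dict[str, str]:
    """Parse FASTA-formatted text into a {header: sequence} dictionary."""
    records: Dict[str, str] = {}
    header = None
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        if line.startswith(">"):
            header = line[1:]  # drop the leading '>'
            records[header] = ""
        elif header is not None:
            records[header] += line.upper()
    return records


# Hypothetical toy record for illustration only (NOT a real NCBI entry).
toy_fasta = """>toy_protein illustrative fragment
MTEYKLVVVG
AGGVGKSALT
"""

records = parse_fasta(toy_fasta)
lengths = {name: len(seq) for name, seq in records.items()}
for name, n in lengths.items():
    print(f"{name}: {n} amino acids")
```

In practice the same function could be applied to the FASTA files downloaded from NCBI for the three proteins.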

1.2.2 Homology Modeling

At present, 3D models of EGFR, K-ras oncogene protein, and TP53 are not available in the Protein Data Bank (PDB). The models were therefore built with SWISS-MODEL [12] and then visualized with PyMOL [13].

1.2.3 Physiochemical Characterization

The physical and chemical characteristics of the protein structures were analyzed using the ExPASy ProtParam proteomics tool [14]. Hydrophobic and hydrophilic residues were predicted by Color Protein Sequence (ColorSeq) analysis [15]. Furthermore, the ESBRI program [16] was used to reveal salt bridges in the protein structures, while the Cys_Rec program was used to count the number of disulfide bonds [17].
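The descriptors named in this subsection can be illustrated with a short sketch. The code below is not the ProtParam implementation; it is a simplified approximation assuming the Kyte-Doolittle hydropathy scale for GRAVY and the commonly used per-residue contributions at 280 nm (TYR 1490, TRP 5500, cystine 125) for the extinction coefficient. The toy sequence and all function names are hypothetical.

```python
# Kyte-Doolittle hydropathy values (assumed scale for the GRAVY calculation).
KYTE_DOOLITTLE = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5,
    "Q": -3.5, "E": -3.5, "G": -0.4, "H": -3.2, "I": 4.5,
    "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8, "P": -1.6,
    "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}


def gravy(seq: str) -> float:
    """Grand average of hydropathicity: mean hydropathy over all residues."""
    return sum(KYTE_DOOLITTLE[aa] for aa in seq) / len(seq)


def charged_residues(seq: str) -> tuple:
    """Return (-R, +R): counts of ASP + GLU and of ARG + LYS."""
    negative = seq.count("D") + seq.count("E")
    positive = seq.count("R") + seq.count("K")
    return negative, positive


def extinction_coefficient(seq: str, cystines: int = 0) -> int:
    """Approximate molar extinction coefficient at 280 nm (M^-1 cm^-1).

    `cystines` is the number of disulfide-bonded CYS pairs (an input the
    caller must supply; it is not inferred from the sequence here).
    """
    return 1490 * seq.count("Y") + 5500 * seq.count("W") + 125 * cystines


# Hypothetical toy sequence, not one of the proteins studied in this chapter.
toy_sequence = "MTEYKLVVVGAGGVGKSALT"
neg_r, pos_r = charged_residues(toy_sequence)
print(f"GRAVY = {gravy(toy_sequence):.3f}")
print(f"-R = {neg_r}, +R = {pos_r}")
print(f"Extinction coefficient = {extinction_coefficient(toy_sequence)}")
```

A negative GRAVY value indicates an overall hydrophilic sequence and a positive value an overall hydrophobic one, which is how the GRAVY column of a ProtParam report is usually read.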

1.2.4 Determination of Secondary Models

The secondary structural properties were determined using the Self-Optimized Prediction Method with Alignment (SOPMA) [18].

1.2.5 Determination of Stability of Protein Structures

PROCHECK was used to evaluate the protein models [19]. The ProQ [20], ERRAT [21], and Verify3D [22] programs were used for further validation.

1.2.6 Identification of Active Site

The 3D models of EGFR, K-ras oncogene protein, and TP53 were submitted to the active site prediction server [23] in order to identify their binding sites.


1.2.7 Preparation of Ligand Model

The tertiary structures of quercetin, curcumin, and ellagic acid are not openly accessible. The complete chemical structures of quercetin, curcumin, and ellagic acid were therefore obtained from PubChem, National Center for Biotechnology Information (2017) [24].

1.2.8 Docking of Target Protein and Phytocompound

The 3D structure of EGFR was docked with quercetin, curcumin, and ellagic acid using the BSP-Slim server [25]. The best docking complex model was chosen based on the lowest binding score. The same docking method was carried out between the other two protein models and the phytocompounds (quercetin, curcumin, and ellagic acid).

1.3 Results and Discussion

1.3.1 Determination of Physiochemical Characters

The isoelectric point (pI) computed for EGFR (pI > 7) indicates a basic character, while the pI values for K-ras and TP53 (pI < 7) indicate an acidic character. The molecular weights of EGFR, K-ras oncogene protein, and TP53 are 50,343.70, 21,470.62, and 38,532.60 Da, respectively. The extinction coefficient, which describes how much light a protein absorbs at a specific wavelength, was calculated from the TYR, TRP, and CYS residues: 38,305 M⁻¹ cm⁻¹ for EGFR, 12,170 M⁻¹ cm⁻¹ for K-ras oncogene protein, and 43,025 M⁻¹ cm⁻¹ for TP53. In addition, −R denotes the number of negatively charged residues (ASP + GLU) and +R the number of positively charged residues (ARG + LYS) in the amino acid sequence. The totals of −R and +R for each protein model are given in Table 1.1. According to the instability index of ExPASy ProtParam, EGFR is classified as stable because its instability index is less than 40, while K-ras oncogene protein and TP53 are categorized as unstable because their instability indices are greater than 40. The instability index is 35.56 for EGFR, 43.95 for K-ras oncogene protein, and 80.17 for TP53. The negative grand average of hydropathicity (GRAVY) values of EGFR, K-ras oncogene protein, and TP53 denote their hydrophilic nature (Table 1.1). Moreover, EGFR, K-ras, and TP53 have more polar residues (41.52%, 53.33%, and 45.29%) than non-polar residues (26.74%, 30.0%, and 27.35%), as determined using Color Protein Sequence.
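The stability classification described above follows a single threshold rule, sketched here with the instability index values reported in the text. The dictionary layout and the function name `classify_stability` are illustrative assumptions; only the threshold of 40 and the three index values come from this chapter.

```python
# Threshold used in the text: below 40 is classed as stable, otherwise unstable.
INSTABILITY_THRESHOLD = 40.0

# Instability index values as reported in this section.
instability_index = {
    "EGFR": 35.56,
    "K-ras oncogene protein": 43.95,
    "TP53": 80.17,
}


def classify_stability(index: float) -> str:
    """Label a protein as 'stable' or 'unstable' from its instability index."""
    return "stable" if index < INSTABILITY_THRESHOLD else "unstable"


stability = {name: classify_stability(v) for name, v in instability_index.items()}
for name, label in stability.items():
    print(f"{name}: instability index {instability_index[name]} -> {label}")
```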

Table 1.1 Physiochemical characters of EGFR, K-ras, and TP53 proteins as determined by ExPASy ProtParam program.

Property                            EGFR         KRAS         TP53
Length                              460          180          340
Molecular weight (Da)               50,343.70    21,470.62    38,532.60
pI                                  7.10         8.18         5.64
−R                                  49           29           41
+R                                  49           31           33
Extinction coefficient (M⁻¹ cm⁻¹)   38,305       12,170       43,025
Instability index                   35.56        43.95        80.17
Aliphatic index                     72.91        77.18        63.99
GRAVY                               −0.269       −0.559       −0.592

Furthermore, the structure and function of a protein can be affected by salt bridges, and disruption of salt bridges reduces protein stability [26]. Salt bridges are also associated with regulation, molecular recognition, oligomerization, flexibility, domain motions, and thermostability [27, 28]. A greater number of arginine residues in the protein model enhances the stability of the protein. This happens through the electrostatic interactions between their guanidinium groups [29]. Hence, all the protein models were considered to be in similarly stable conditions. The output of the Cys_Rec server shows that the number of disulfide bonds is 42 in EGFR, 5 in K-ras oncogene protein, and 11 in TP53 (Table 1.2).

1.3.2 Prediction of Secondary Structures

Results from the SOPMA analysis show that random coils are dominant among the secondary structure components in the protein models (Figure 1.1). The proportion of alpha helix in EGFR, K-ras oncogene protein, and TP53 is shown in Table 1.3.

The analysis indicated that EGFR, K-ras oncogene protein, and TP53 contain 15, 11, and 10 α-helices, respectively. Table 1.4 gives the details of the longest and shortest alpha helices of all the protein models.
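To make the quantities in Tables 1.3 and 1.4 concrete, the following sketch computes the percentage of each secondary structure state and the helix segment lengths from a per-residue state string. It assumes the four-state letter coding used in the table headers (h, e, t, c); the state string itself is a hypothetical toy example, not SOPMA output for any of the three proteins.

```python
from collections import Counter
from itertools import groupby

# Four-state coding assumed from the table headers (Hh, Ee, Tt, Cc).
STATE_NAMES = {
    "h": "alpha helix",
    "e": "extended strand",
    "t": "beta turn",
    "c": "random coil",
}


def composition(states: str) -> dict:
    """Percentage of residues assigned to each secondary structure state."""
    counts = Counter(states.lower())
    total = len(states)
    return {name: 100 * counts.get(code, 0) / total
            for code, name in STATE_NAMES.items()}


def helix_segments(states: str) -> list:
    """Lengths of consecutive runs of helix residues, in sequence order."""
    return [len(list(run)) for code, run in groupby(states.lower()) if code == "h"]


# Hypothetical per-residue prediction string for illustration only.
toy_states = "cchhhhhhcceeeetthhhc"

percentages = composition(toy_states)
segments = helix_segments(toy_states)
print(percentages)
print(f"{len(segments)} helices, longest {max(segments)}, shortest {min(segments)}")
```

The number of helices, together with the longest and shortest segment, corresponds to the kind of summary given in Table 1.4.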

1.3.3 Verification of Stability of Protein Structures

The PROCHECK server was used to verify the stereochemical quality and geometry of the protein models through Ramachandran plots (Figure 1.2). All the protein structures had more than 80% of their residues in the most favored regions (Table 1.5), so the quality of these models was assessed to be high and reliable. PROCHECK analysis also disclosed that a few residues, TYR265 and GLU51 for EGFR and LYS180 for K-ras oncogene protein, were located away from the energetically favored regions of the Ramachandran plot. No residues were found in the disallowed region for the TP53 protein model.

Thus, the stereochemical interpretation of the backbone phi/psi dihedral angles indicated that EGFR, K-ras oncogene protein, and TP53 have a low percentage of residues outside the favored regions. Moreover, ProQ was used to validate the quality of the models by means of the Levitt-Gerstein (LG) score and the MaxSub score. The LG and MaxSub scores of all the protein models were within the range expected for a good model (Table 1.5).

Table 1.2 The number of disulfide bonds as quantitated by the Cys_Rec prediction program.

Protein    Cys_Rec    Score
EGFR       Cys_9      −13.0
           Cys_13     39.2
           Cys_17     100.1
           Cys_25     98.3
           Cys_26     104.2
           Cys_30     104.1
           Cys_34     90.5
           Cys_42     48.2
           Cys_45     56.0
           Cys_54     58.2
           Cys_58     49.5
           Cys_85     55.7
           Cys_89     54.9
           Cys_101    50.4
           Cys_105    44.0
           Cys_120    63.0
           Cys_123    73.3
           Cys_127    75.3
           Cys_131    61.6
           Cys_156    33.0
           Cys_264    45.3
           Cys_293    43.8
           Cys_300    56.5
           Cys_304    46.8
           Cys_309    66.0
           Cys_317    65.6
           Cys_320    60.2
           Cys_329    49.1
           Cys_333    42.2
           Cys_349    42.2
           Cys_352    32.9
           Cys_356    62.9
           Cys_365    70.2
           Cys_373    54.2
           Cys_376    54.8
           Cys_385    35.8
           Cys_389    41.2
           Cys_411    78.8
           Cys_414    85.1
           Cys_418    84.4
           Cys_422    26.5
           Cys_430    3.7
KRAS       Cys_12     −28.5
           Cys_51     −74.2
           Cys_80     −72.6
           Cys_118    −56.4
           Cys_185    −15.2
TP53       Cys_124    −19.4
           Cys_135    −1.6
           Cys_141    −17.9
           Cys_176    −9.1
           Cys_182    −45.1
           Cys_229    −54.4
           Cys_238    1.6
           Cys_242    −5.5
           Cys_275    −34.4
           Cys_277    −51.5
           Cys_339    −32.8

ERRAT analysis is used to assess protein models, typically those determined by X-ray crystallography. The ERRAT value depends on the statistics of non-bonded atomic interactions in the 3D protein structure. A model is generally accepted as high quality if the score is greater than 50%. K-ras oncogene protein had the highest ERRAT score, 94.767, and therefore the highest quality among the three protein models. The scores for EGFR and TP53 were 88.010 and 90.374, respectively (Figure 1.3).

The Verify3D server showed that 98.59%, 100.00%, and 92.96% of the residues in EGFR, K-ras oncogene protein, and TP53, respectively, had an average 3D-1D score above 0.2. This indicates that each sequence is compatible with its protein model (Figure 1.4). The energy-minimized EGFR, K-ras oncogene protein, and TP53 models therefore satisfied the standard criteria for protein model evaluation, and docking analysis with the ligands was carried out.
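The acceptance checks described above can be summarized in a small sketch that applies the thresholds to the reported scores. The ERRAT cutoff of 50 comes from the text; the Verify3D cutoff of 80% of residues is an assumption added here for illustration and is not stated in this chapter. The dictionary layout and function name are likewise hypothetical.

```python
ERRAT_MIN = 50.0     # from the text: scores above 50% indicate high quality
VERIFY3D_MIN = 80.0  # ASSUMPTION: minimum % of residues with 3D-1D score above 0.2

# Scores reported in this section for each protein model.
validation_scores = {
    "EGFR": {"errat": 88.010, "verify3d": 98.59},
    "K-ras oncogene protein": {"errat": 94.767, "verify3d": 100.00},
    "TP53": {"errat": 90.374, "verify3d": 92.96},
}


def passes_validation(scores: dict) -> bool:
    """True if a model clears both the ERRAT and the Verify3D cutoffs."""
    return scores["errat"] > ERRAT_MIN and scores["verify3d"] >= VERIFY3D_MIN


accepted = {name: passes_validation(s) for name, s in validation_scores.items()}
best_errat = max(validation_scores, key=lambda name: validation_scores[name]["errat"])

print(accepted)
print(f"Highest ERRAT score: {best_errat}")
```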

Figure 1.1 SOPMA plots for (a) EGFR, (b) K-ras oncogene protein, and (c) TP53.

Table 1.3 Secondary structure of the EGFR, K-ras oncogene protein, and TP53.

Secondary structure     EGFR     KRAS     TP53
Alpha helix (Hh)        16.81    43.62    18.79
Extended strand (Ee)    16.81    21.81    18.21
Beta turn (Tt)          3.23     7.45     3.18
Random coil (Cc)        64.44    27.13    59.83

Table 1.4 Composition of α-helix EGFR, K-ras oncogene protein, and TP53.

Amino acid    Longest alpha helix    Residues    Shortest alpha helix    Number of residues
EGFR          α14                    14          α3, α6, α11, α15        1
KRAS          α11                    20          α1, α10                 1
TP53          α5                     11          α7                      1

Figure 1.2 Ramachandran plots (phi versus psi, in degrees) for (a) EGFR, (b) K-ras oncogene protein, and (c) TP53. Residues labelled in the plots: TYR 265, ASN 322, GLU 51, and LYS 180.

Table 1.5 Validation of the EGFR, K-ras oncogene protein, and TP53.

Measure                             EGFR     KRAS     TP53
Ramachandran plot statistics (%)
  Most favored                      90.6     90.5     92.9
  Additionally allowed              8.6      8.9      7.1
  Generously allowed                0.3      0.0      0.0
  Disallowed                        0.6      0.6      0.0
Goodness factor
  Dihedral angles                   −0.27    −0.12    −0.16
  Covalent forces                   0.02     0.04     0.07
  Overall average                   −0.14    −0.04    −0.06
ProQ
  LG score                          3.814    4.094    4.417
  MaxSub                            0.302    0.474    0.454

Figure 1.3 ERRAT plots (error value versus residue number at window center) for (a) EGFR, (b) K-ras oncogene protein, and (c) TP53. Overall quality factors: (a) 88.010, (b) 94.767, and (c) 90.374.

1.3.4 Identification of Active Sites

For EGFR, K-ras oncogene protein, and TP53, the BSP-Slim server was used to obtain the active site volume and the residues that form the active site pocket (Table 1.6). The pocket volumes for EGFR, K-ras, and TP53 were 837 Å³, 718 Å³, and 647 Å³, respectively.

1.3.5 Target Protein-Ligand Docking

In the study by Murugesan et al. [30], plant compounds from the methanolic leaf extract of Vitex negundo were docked successfully with the cyclooxygenase-2 (COX-2) enzyme. The phytocompounds had a better interaction than aspirin and ibuprofen, with good binding energies and docking results.

Figure 1.4 Verify 3D plots (average score and raw score per residue) for (a) EGFR, (b) K-ras oncogene protein, and (c) TP53.

In addition, four components [1,3-Dioxolane, 2-(3-bromo-5,5,5-trichloro-2,2-dimethylpentyl)-; Butanoic acid, 2-hydroxy-2-methyl-, methyl ester; DL-3,4-Dimethyl-3,4-hexanediol; and Pantolactone] from Moringa concanensis had good binding affinity with brain cancer receptors. Their binding energies, the lowest obtained, were −3.90, −2.75, −3.05, and −4.15 kcal/mol [31].

According to the study by Deepa et al., plant compounds from the ethanolic leaf extract of Vitex negundo [(4S)-2-Methyl-2-phenylpentane-1,4-diol, 7-Methoxy-2,3-dihydro-2-phenyl-4-quinolone, 3-(tert-Butoxycarbonyl)-6-(3-benzoylprop-2-yl)phenol, (3R,4S)-4-(methylamino)-1-phenylpent-1-en-3-ol, and (2S,1'S)-1-Benzyl-2-[1'-(dibenzylamino)ethyl]aziridine] were docked with glucosamine-6-phosphate synthase. They had the lowest and most negative binding energies (−36.53, −33.57, −35.90, −33.88, and −37.65 kcal/mol) [32].

Table 1.6 Predicted active sites of the EGFR, K-ras oncogene protein, and TP53.

Protein                   Volume (Å³)    Residues forming the pocket
EGFR                      837            GLY100, ALA101, ASP102, SER103, TYR104, GLU105, MET106, GLU107,
                                         GLU108, LYS113, LYS115, LYS116, CYS117, GLU118, GLY119, PRO120,
                                         CYS121, ARG122, LYS123, VAL124, ASN149, THR151, SER152, SER154,
                                         THR185, LYS187, GLU188, THR190, ASN210, GLU212, ILE213, ARG215,
                                         LYS242, CYS99
K-ras oncogene protein    718            GLY10, ALA11, CYS12, GLY13, VAL14, GLY15, LYS16, ASP33, PRO34,
                                         THR35, GLU37, LEU56, ASP57, THR58, ALA59, GLY60, GLN61, GLU62,
                                         GLU63, SER65, ARG68, MET72, ALA83, ASN86, LYS88, SER89, GLU91,
                                         ASP92, ILE93, HIE94, HIE95, TYR96, ARG97, GLU98, GLN99, ILE100,
                                         ARG102, VAL103
TP53                      647            GLU107, ASN109, THR11, PRO128, TYR129, GLN13, GLU130, PRO131,
                                         PRO132, GLU133, VAL134, GLY135, SER136, ASP137, CYS138, THR139,
                                         THR140, ILE141, HIE142, TYR143, TYR16, GLY17, ASN177, SER178,
                                         PHE18, ARG19, LEU20, GLY21, PHE22, LEU23, HIE24, TYR35, ASN40,
                                         MET42, THR49, CYS50, PRO51, GLN53, LEU54, TRP55, VAL56, ASP57,
                                         THR59, PRO60, PRO61, THR64

According to the study by Kasilingam and Elengoe, apigenin was successfully docked with p53, caspase-3, and MADCAM1 using the BSP-Slim server. Apigenin was the plant compound, while p53, caspase-3, and MADCAM1 were the target proteins in a lung cancer cell line. Apigenin bound strongly with p53, caspase-3, and MADCAM1 at the lowest binding energies (4.611, 5.750, and 5.307 kcal/mol, respectively) [33].

In the study by Ashwini et al., coumarin, camptothecin, epigallocatechin, quercetin, and gallic acid were screened for potential binding with caspase-3 (the target protein) in a human cervical cancer cell line (HeLa). Coumarin had the strongest interaction with caspase-3, with the lowest binding energy (−378.3 kJ/mol), and could therefore be a potential anti-cancer drug. Gallic acid, in contrast, had the weakest interaction with caspase-3, with a binding energy of −181.3 kJ/mol. The docking was carried out using Hex 8.0.0 docking software [34].

The study by Chakrabarty et al. demonstrated that 1-hexanol and 1-octen-3-ol suppressed the enzyme activity of Ach (PDB id: 2CKM) and BACE1 (PDB id: 4IVT). Ach and BACE1 are proteins implicated in Alzheimer's disease. 1-Hexanol and 1-octen-3-ol are plant compounds derived from the leaf extract of Lantana camara (L.). Glide Standard Precision (SP) ligand docking was performed to determine the binding energy. The results show that 1-hexanol and 1-octen-3-ol bound strongly with Ach at −2.291 and −2.465 kJ/mol, respectively, whereas they had the lowest binding affinity with BACE1 at −0.948 and −1.267 kJ/mol, respectively. 1-Octen-3-ol may have the potential to be an effective drug against Alzheimer's disease because it had a better interaction with both enzymes (Ach and BACE1) than 1-hexanol [35].

In the study by Supramaniam and Elengoe, glycyrrhizin was successfully docked with p53, NF-kB-p105, and MADCAM1 using the BSP-Slim server. Glycyrrhizin was the plant compound, while p53, NF-kB-p105, and MADCAM1 were the target proteins in a breast cancer cell line. Glycyrrhizin bound strongly with p53, NF-kB-p105, and MADCAM1 at the lowest binding energies (−4.040, −5.127, and −5.251 kcal/mol, respectively). Therefore, glycyrrhizin could be a potential drug in breast cancer treatment [36].

According to the study by Elengoe and Sebestian, models of p53, adenomatous polyposis coli (APC), and EGFR were generated using a homology modeling approach. These target proteins were docked successfully with plant compounds such as allicin, epigallocatechin-3-gallate, and gingerol, which served as the ligands in the docking process. Among the three target proteins, p53 had the most stable interaction with allicin, docking at the lowest binding energy of 4.968. However, the other target proteins also had good docking scores [37].

In this study, EGFR was successfully docked with quercetin, curcumin, and ellagic acid by using the BSP-Slim server. The same target protein-phytocompound docking method was repeated with K-ras oncogene protein and TP53. The most suitable docking complex was selected based on the lowest binding energy (ΔGbind). The docking results showed that EGFR interacted most strongly with ellagic acid, which had the most favorable (lowest) energy value (−2.892 kcal/mol) compared with curcumin and quercetin (Table 1.7). K-ras oncogene protein interacted most strongly with quercetin, which had the lowest energy (−1.154 kcal/mol) compared with curcumin and ellagic acid. Likewise, the strongest interaction for TP53 was with quercetin, which had the lowest energy of the three compounds (−0.809 kcal/mol) according to the docking analysis.

Table 1.7 Docking result of the EGFR, K-ras oncogene protein, and TP53.

Protein                   Compounds       Binding energy (kcal/mol)
EGFR                      Curcumin        5.320
                          Ellagic acid    −2.892
                          Quercetin       −1.249
K-ras oncogene protein    Curcumin        2.730
                          Ellagic acid    0.921
                          Quercetin       −1.154
TP53                      Curcumin        1.633
                          Ellagic acid    0.054
                          Quercetin       −0.809
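The selection rule used here, choosing the complex with the lowest binding energy for each protein, can be sketched in a few lines. The energies below are the values listed in Table 1.7; the nested dictionary and the name `best_ligand` are illustrative assumptions and not part of the BSP-Slim output format.

```python
# Binding energies in kcal/mol, taken from Table 1.7.
docking_energies = {
    "EGFR": {"curcumin": 5.320, "ellagic acid": -2.892, "quercetin": -1.249},
    "K-ras oncogene protein": {"curcumin": 2.730, "ellagic acid": 0.921, "quercetin": -1.154},
    "TP53": {"curcumin": 1.633, "ellagic acid": 0.054, "quercetin": -0.809},
}

# For each protein, pick the ligand with the lowest (most favorable) energy.
best_ligand = {
    protein: min(energies, key=energies.get)
    for protein, energies in docking_energies.items()
}

for protein, ligand in best_ligand.items():
    energy = docking_energies[protein][ligand]
    print(f"{protein}: {ligand} ({energy:.3f} kcal/mol)")
```

Ranking by the lowest energy treats more negative values as stronger predicted binding, which is the convention followed throughout this section.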

1.4 Conclusion

In summary, EGFR was successfully docked with curcumin, ellagic acid, and quercetin, and the same docking simulation approach was performed for K-ras oncogene protein and TP53. Among the three protein models, EGFR had the strongest interaction with ellagic acid, which gave the lowest energy value, while K-ras oncogene protein and TP53 had the strongest interaction with quercetin, for which the binding energy was the lowest. Consequently, the results of this study will aid in designing a suitable structure-based drug. However, wet-lab experiments must be carried out to verify the results of this study.

References

1. Cancer Research UK, Worldwide cancer statistics, 2012, https://www.cancer

researchuk.org/health-professional/cancer-statistics/worldwide-cancer#

collapseZero.


2. American Cancer Society, Lung cancer prevention and early detection, 2016,

https://www.cancer.org/cancer/lung-cancer/prevention-and-early-detection/

signs-and-symptoms.html.

3. American Cancer Society, Causes, risk factors and prevention, 2016, https://

www.cancer.org/cancer/non-small-cell-lung-cancer/causes-risks-prevention/

what causes.

4. Mayo Clinic, Lung cancer, 2018, https://www.mayoclinic.org/diseases-conditions/

lung-cancer/diagnosis-treatment/drc-20374627.

5. El-Telbany, A. and Patrick, C.M., Cancer genes in lung cancer. Genes Cancer,

M3, 7–8, 467–480, 2012.

6. Santos, D.C., Sheperd, F.A., Tsao, M.S., EGFR mutations and lung cancer.

Annu. Rev. Pathol.: Mechanisms of Disease, 6, 49–69, 2016.

7. Bhattacharya, S., Socinski, M.A., T.F., KRAS mutant lung cancer: progress

thus far on an elusive therapeutic target. Clin. Transl. Med., 4, 35, 2015.

8. Halverson, A.R., Silwal-Pandit, L., Meza-Zepeda, L.A. et al., TP53 mutation spectrum in smokers and never smoking lung cancer patients. Front.

Genet., 7, 85, 2016.

9. Basnet, P. and Skalko-Basnet, N., Curcumin: An anti-inflammatory molecule from a curry spice on the path to cancer treatment. Molecules, 6, 6,

4567–4598, 2011.

10. Healthline, Why ellagic acid is important?, 2020, https://www.healthline.com/health/ellagic-acid.

11. Kuo, P.-C., Liu, H.-F., Chao, J.-I., Survivin and p53 modulate quercetin-induced cell growth inhibition and apoptosis in human lung carcinoma cells.

J. Biol. Chem., 279, 53, 55875–55885, 2004.

12. Biasini, M., Bienert, S., Waterhouse, A., Arnold, K., Studer, G., Schmidt,

T. et al., SWISS-MODEL: Modelling protein tertiary and quaternary

structure using evolutionary information. Nucleic Acids Res., 42, W2528, 2014.

13. Delano, W.L., The PyMOL molecular graphics system, 2001, http://www.

pymol.org.

14. Gasteiger, E., Hoogland, C., Gattiker, A. et al., Protein identification and

analysis tools on the ExPASy server, in: The proteomics protocols handbook,

J.M. Walker (Ed.), Humana Press, Totowa, 2015.

15. Prabi, L.G., Color protein sequence analysis, 1998, https://npsaprabi.ibcp.fr/cgi-bin/npsa_automat.pl?page=/NPSA/npsa_color.html.

16. Costantini, S., Colonna, G., Facchiano, A.M., ESBRI: a web server for evaluating salt bridges in proteins. Bioinformation, 3, 137–138, 2008.

17. Roy, S., Maheshwari, N., Chauhan, R. et al., Structure prediction and functional characterization of secondary metabolite proteins of Ocimum.

Bioinformation, 6, 8, 315–319, 2011.

18. Geourjon, C. and Deleage, G., SOPMA: significant improvements in protein

secondary structure prediction by consensus prediction from multiple alignments. Comput. Appl. Biosci., 11, 681–684, 1995.


19. Laskowski, R.A., MacArthur, M.W., Moss, D.S. et al., PROCHECK: a program to check the stereo chemical quality of protein structures. J. Appl.

Cryst., 26, 283–291, 1993.

20. Wallner, B. and Elofsson, A., Can correct protein models be identified?

Protein Sci., 12, 1073–1086, 2003.

21. Colovos, C. and Yeates, T.O., Verification of protein structures: patterns of

non-bonded atomic interactions. Protein Sci., 2, 1511–1519, 1993.

22. Eisenberg, D., Luthy, R., Bowie, J.U., VERIFY3D: assessment of protein models with three-dimensional profiles. Methods Enzymol., 277, 396–404, 1997.

23. Jayaram, B., Active site prediction server, 2004, http://www.scfbio-iitd.res.in/

dock/ActiveSite.jsp.

24. National Center for Biotechnology Information, Pubchem., 2017, https://

pubchem.ncbi.nlm.nih.gov/.

25. Hui, S.L. and Yang, Z., BSP-SLIM: A blind low-resolution ligand-protein

docking approach using theoretically predicted protein structures. Proteins,

80, 93–110, 2012.

26. Kumar, S., Tsai, C.J., Ma, B. et al., Contribution of salt bridges toward protein

thermo-stability. J. Biomol. Struct. Dyn., 1, 79–86, 2000.

27. Kumar, S. and Nussinov, R., Salt bridge stability in monomeric proteins.

J. Mol. Biol., 293, 1241–1255, 2009.

28. Kumar, S. and Nussinov, R., Relationship between ion pair geometries and

electrostatic strengths in proteins. Biophys. J., 83, 1595–1612, 2002.

29. Parvizpour, S., Shamsir, M.S., Razmara, J. et al., Structural and functional

analysis of a novel psychrophilic b-mannanase from Glaciozyma Antarctica

PI12. J. Comput. Aided Mol. Des., 28, 6, 685–698, 2014.

30. Murugesan, D., Ponnusamy, R.D., Gopalan, D.K., Molecular docking study

of active phytocompounds from the methanolic leaf extract of Vitex negundo against cyclooxygenase-2. Bangladesh J. Pharmacol., 9, 2, 146–53, 2014.

31. Balamurugan, V. and Balakrishnan, V., Molecular docking studies of

Moringa concanensis Nimmo leaf phytocompounds for brain cancer. Res. Rev.:

J. Life Sci., 8, 1, 26–34, 2018.

32. Santhanakrishnan, D., Sipriya, N., Chandrasekaran, B., Studies on the phytochemistry, spectroscopic characterization and antibacterial efficacy of

Salicornia Brachiata. Int. J. Pharm. Pharm. Sci., 6, 6430–6432, 2014.

33. Kasilingam, T. and Elengoe, A., In silico molecular modeling and docking of

apigenin against the lung cancer cell proteins. Asian J. Pharm. Clin. Res., 11,

9, 246–252, 2018.

34. Ashwini, S., Varkey, S.P., Shantaram, M., Insilico docking of polyphenolic

compounds against Caspase 3-HeLa cell line protein. Int. J. Drug Dev. Res., 9,

28–32, 2017.

35. Chakrabarty, N., Hossain, A., Barua, J., Kar, H., Akther, S., Al Mahabub, A.,

Majumder, M., Insilico molecular docking of some isolated selected compounds of lantana camera against Alzheimer’s disease. Biomed. J. Sci. Tech.

Res., 12, 2, 9168–9171, 2018.


36. Supramaniam, G. and Elengoe, A., In silico molecular docking of glycyrrhizin

and breast cancer cell line proteins, in: Plant-Derived Bioactives, 1, 575–589,

2020.

37. Elengoe, A. and Sebestian, E., In silico molecular modelling and docking of

allicin, epigallocatechin-3-gallate and gingerol against colon cancer cell proteins. Asia Pac. J. Mol. Biol. Biotechnol., 4, 2851–67, 2020.

2

Medical Data Classification in Cloud Computing Using Soft Computing With Voting Classifier: A Review

Saurabh Sharma1*, Harish K. Shakya1† and Ashish Mishra2‡

1Dept. of CSE, Amity School of Engineering & Technology, Amity University (M.P.), Gwalior, India

2Department of CSE, Gyan Ganga Institute of Technology and Sciences, Jabalpur, India

Abstract

In the current context, a tele-medical system is a rising medical service in which health professionals use telecommunication technology to treat, evaluate, and diagnose a patient. The data in the healthcare system comprise medical data sets that are both sophisticated and large in number (X-ray, fMRI data, scans of the lungs, brain, etc.). It is impossible to manage such medical data collections with typical hardware and software. Therefore, a practical approach that balances privacy protection and data exchange is required. Several approaches have been proposed to address these issues, but most studies focus on only a narrow problem with a single concept. This review paper analyzes the data protection research carried out in cloud computing systems and also looks at the major difficulties that conventional solutions confront. This analysis helps researchers to better address existing issues in protecting the privacy of medical data in the cloud system.

Keywords: Medical data, soft computing, fuzzy, cloud computing, data privacy,

SVM, FCM

*Corresponding author: saurabhgyangit@gmail.com

†Corresponding author: hkshakya@gwa.amity.edu

‡Corresponding author: ashish.mish2009@gmail.com

R. J. Hemalatha, D. Akila, D. Balaganesh and Anand Paul (eds.) The Internet of Medical Things (IoMT):

Healthcare Transformation, (23–44) © 2022 Scrivener Publishing LLC


2.1 Introduction

There are many definitions of the Electronic Health Record (EHR), such as an electronic record that holds patient information in a health record system operated by healthcare providers [1]. Although EHRs have a positive effect on healthcare services, their adoption in many healthcare institutions globally, particularly in poor nations, is delayed by numerous common problems. Patient data security has been a concern since the beginning of medical history and remains an important issue today. Rooted in the idea of confidentiality, the Hippocratic Oath has long been an honored principle in clinical and medical ethics. Protecting the privacy and confidentiality of patient information is of the highest importance, and security underpins trust. Medical record security generally involves privacy and confidentiality [2]. Cloud computing provides the option of accessing massive amounts of patient information in a short period of time, which makes it easier for an unauthorized person to obtain patient records. This concern is captured in the observation that “illegal access to traditional medical records (paper-based) has always been conceivable, but computer introduction increases a little problem to a large problem.”

Cloud computing is a model for convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction [4]. It is among the newest and most comprehensive solutions in the world of IT. Its major purpose is to share resources among users over the Internet or an intranet [5]. A cloud service provider (CSP) platform is intended to be affordable, automatically scalable, multi-tenant, and secure.

2.1.1 Security in Medical Big Data Analytics

Big data is complex and unwieldy by its very nature and requires providers to take a close look at their approaches to collecting, storing, analyzing, and presenting their data to staff, business partners, and patients.

What are some of the most challenging tasks for organizations starting a big data analytics program, and how can they overcome these problems to reach their clinical and financial goals?

2.1.1.1 Capture

All data comes from somewhere, but regrettably for many healthcare providers, it does not always come from a source with flawless data management habits. Collecting clean, comprehensive, precise, and correctly structured data for

use in multiple systems is a constant battle for organizations, many of which are not on the winning side of the conflict.

In a recent investigation at an ophthalmology clinic, EHR data matched patient-reported data in only 23.5% of records. When patients reported three or more eye problems, their EHR data did not agree at all.
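As a rough illustration of how such an agreement figure could be computed, the following Python sketch compares the symptom set recorded in the EHR with the set reported by each patient and returns the fraction of patients whose records match exactly. The function name agreement_rate, the record layout, and the toy data are assumptions made for this example; they are not taken from the cited investigation, whose matching criteria are not described here.

```python
from typing import Dict, Set


def agreement_rate(ehr: Dict[str, Set[str]], reported: Dict[str, Set[str]]) -> float:
    """Fraction of patients whose EHR symptom set equals the self-reported set.

    Only patients present in both sources are compared (an assumption of
    this sketch; a real study would define its own matching rules).
    """
    shared = ehr.keys() & reported.keys()
    if not shared:
        raise ValueError("no patients in common between the two sources")
    matches = sum(1 for pid in shared if ehr[pid] == reported[pid])
    return matches / len(shared)


# Toy records, invented for illustration only (not the clinic's data).
ehr_records = {
    "p1": {"blurry vision"},
    "p2": {"eye pain"},
    "p3": set(),
    "p4": {"redness"},
}
patient_reports = {
    "p1": {"blurry vision"},
    "p2": {"eye pain", "itching", "redness"},
    "p3": {"glare"},
    "p4": {"redness", "itching"},
}

rate = agreement_rate(ehr_records, patient_reports)
print(f"agreement: {rate:.1%}")  # prints "agreement: 25.0%"
```

Exact set equality is a deliberately strict criterion; a looser measure, such as the overlap between the two sets, might be more appropriate depending on how the data will be used.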

Poor EHR usability, convoluted workflows, and an incomplete understanding of why big data must be captured properly can all contribute to quality problems that afflict data throughout its life cycle.

Providers can begin to improve their data capture routines by prioritizing

valuable data types for their specific projects, by enlisting the data management and integrity expertise of professional health information managers,

and by developing clinical documentation improvement programs to train