Support Vector Machines

Binary Classification

(

0 yi = +1

< 0 yi = 1

Given training data (xi, yi) for i = 1 . . . N , with

xi Rd and yi { 1, 1}, learn a classifier f (x)

such that

f (x i )

i.e. yif (xi) > 0 for a correct classification.

Linear separability

linearly

separable

not

linearly

separable

Linear classifiers

A linear classifier has the form

f (x) = w>x + b

X2

is the normal to the line, and b the bias

f (x) < 0

•

is known as the weight vector

• in 2D the discriminant is a line

•

f (x) = 0

f (x) > 0

X1

Linear classifiers

A linear classifier has the form

f (x) = w>x + b

f (x) = 0

• in 3D the discriminant is a plane, and in nD it is a hyperplane

For a K-NN classifier it was necessary to `carry’ the training data

For a linear classifier, the training data is used to learn w and then discarded

Only w is needed for classifying new data

Frank Rosenblatt

19228-1971

The Perceptron Classifier

Given linearly separable data xi labelled into two categories yi = {-1,1} ,

find a weight vector w such that the discriminant function

f (x i ) = w > x i + b

separates the categories for i = 1, .., N

• how can we find this separating hyperplane ?

The Perceptron Algorithm

sign(f (xi)) xi

Write classifier as f (xi) = w̃>x̃i + w0 = w>xi

w+

where w = (w̃, w0), xi = (x̃i, 1)

• Initialize w = 0

w

• Cycle though the data points { xi, yi }

• if xi is misclassified then

• Until all the data is correctly classified

+(Green+points+are+misclassified)+

error

The white arrow should point

to a half plane of blue points

1

0.5

−1

−1

1

0.5

0

−0.5

2

4

−1

−1

−0.5

−0.5

0

0

0.5

0.5

1

1

Illustra(on+of+two+steps+in+the+Percep(on+learning+algorithm+(Bishop+4.7)+

1

0.5

1

0

0.5

error

0.5

1

0

0

0

−0.5

−0.5

−0.5

−0.5

1

−1

−1

1

0.5

0

−0.5

3

−1

−1

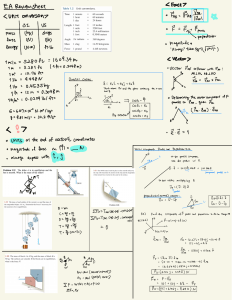

What is the best w?

• maximum margin solution: most stable under perturbations of the inputs

X

>

x) + b

w

b

||w||

Support Vector

wTx + b = 0

Support Vector Machine

i yi (xi

Support Vector

linearly separable data

f (x) =

i

support vectors

SVM – sketch derivation

• Since w>x + b = 0 and c(w>x + b) = 0 define the same

plane, we have the freedom to choose the normalization

of w

´

=

³

w > x+

||w||

x

´

=

2

||w||

• Choose normalization such that w>x++b = +1 and w>x +

b = 1 for the positive and negative support vectors respectively

x

• Then the margin is given by

w ³

. x+

||w||

w

Margin = 2

||w||

Support Vector

Support Vector Machine

linearly separable data

Support Vector

wTx + b = 1

wTx + b = 0

wTx + b = -1

SVM – Optimization

1

1

´

1 for i = 1 . . . N

if yi = +1

for i = 1 . . . N

if yi = 1

• Learning the SVM can be formulated as an optimization:

2

max

subject to w>xi+b

||w||

w

• Or equivalently

³

min ||w||2 subject to yi w>xi + b

w

• This is a quadratic optimization problem subject to linear

constraints and there is a unique minimum

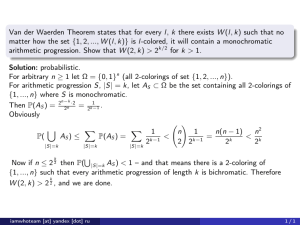

The problem statement

1, i = 1, . . . , N

1

(w) = wT w

2

Given a set of training data {(xi , di ), i = 1, . . . , N }, minimize

subject to the constraint that

di (wT xi + b)

3

The problem statement

1, i = 1, . . . , N

1

(w) = wT w

2

i

T

N

X

i=1

T

i j di dj xi xj

i di

xi + b)

i di xi

i (di (w

N

X

i=1

i di

T

xi + b

N X

N

1X

2 i=1 j=1

+

N

X

i=1

1)

Given a set of training data {(xi , di ), i = 1, . . . , N }, minimize

subject to the constraint that

di (wT xi + b)

N

X

i=1

Jw = 0 = w

1

J(w, b, ) = wT w

2

Looks like a job for LAGRANGE MULTIPLIERS!

So that

and

N

X

i=1

i di w

Jb = 0 =

N

X

i=1

Now for the DUAL PROBLEM

1

J(w, b, ) = wT w

2

N

X

i=1

Note that from (4) third term is zero. Using Eq. (3):

Q( ) =

Lets enjoy the moment!

i

(3)

(4)

DUAL PROBLEM

i

max Q( ) =

Subject to constraints

i s,

N

X

i=1

i

N

X

i=1

=0

N X

N

1X

2 i=1 j=1

i di

T

i j di dj xi xj

0, i = 1, . . . , N

N

X

i di xi

get the w from

5

This is easier to solve than the original. Furthermore it only depends

on the training samples {(xi , di ), i = 1, . . . , N }.

Once you have the

w=

i=1

b=1

w T xs

and the b from a support vector that has di = 1,

Almost done ...

KERNEL FUNCTIONS

6

Now the big bonus occurs because all the machinery we have developed will work if we map the points xi to a higher dimensional space,

provided we observe certain conventions.

wi i (x) + b = 0

2 (x), . . . ,

m1 (x))

0 (x)

= 1.

Let (xi ) be a function that does the mapping. So the new hyperplane

is

N

X

i=1

1 (x),

For simplicity in notation define

(x) = ( 0 (x),

where m1 is the new dimension size and by convention

i (x)

T

j (x)

Then all the work we did with x works with (x). The only issue is

that instead of xiT xj we have a Kernel function, K(xi , xj ) where

K(xi , xj ) =

and Kernel functions need to have certain nice properties. : )

Examples

Polynomials

(xiT xj + 1)p