Developing a Computer Science Concept Inventory for Introductory Programming.



Ricardo Caceffo
Institute of Computing
State University of Campinas
Campinas, SP, Brasil
rec@ic.unicamp.br

Steve Wolfman
Computer Science
University of British Columbia
Vancouver, BC, Canada
wolf@cs.ubc.ca

Kellogg S. Booth
Computer Science
University of British Columbia
Vancouver, BC, Canada
ksbooth@cs.ubc.ca

Rodolfo Azevedo
Institute of Computing
State University of Campinas
Campinas, SP, Brasil
rodolfo@ic.unicamp.br

ABSTRACT

A Concept Inventory (CI) is a set of multiple-choice questions used to reveal students' misconceptions related to some topic. Each available choice (besides the correct choice) is a distractor that is carefully developed to address a specific misunderstanding, that is, a common but incorrect way of thinking. In computer science introductory programming courses, the development of CIs is still beginning, with many topics requiring further study and analysis. We identify, through analysis of open-ended exams and instructor interviews, introductory programming course misconceptions related to function parameter use and scope, variables, recursion, iteration, structures, pointers, and boolean expressions. We categorize these misconceptions and define high-quality distractors founded in words used by students in their responses to exam questions. We discuss the difficulty of assessing introductory programming misconceptions independent of the syntax of a language and we present a detailed discussion of two pilot CI questions related to parameters: an open-ended question (to help identify new misunderstandings) and a multiple-choice question with suggested distractors that we identified.

Keywords

concept inventory, data structures, misconceptions

1. INTRODUCTION

A Concept Inventory (CI) is a multiple-choice questionnaire used to reveal students' misconceptions related to a subject or topic. For each question, every answer choice aside from the right one is a distractor designed to address a specific misunderstanding: a wrong yet common way of thinking among students.

In this paper, we discuss our experience developing a CI for introductory programming courses that cover topics related to basic programming skills, such as variables, conditional commands, loops and iterations, procedures and functions, basic sorting algorithms, vectors and matrix representations, and elementary structures. These topics are far from exhaustively studied in the CI literature. Our study is part of a multi-institutional project to develop and validate CIs for introductory programming courses that can be used with a peer instruction approach.

2. RELATED WORK

Herman et al. [7] identify a CI as a standardized test that has been statistically validated. Unlike a typical classroom exam, a CI is not comprehensive. It only covers critical concepts for a topic. Each concept is tested multiple times to ensure the CI's validity and reliability [7]. A CI can be used by an instructor to adjust material to students' needs. The idea of a CI was originally applied to fundamental physics: the Force Concept Inventory (FCI) [8] was created to identify students' common misunderstandings about Newton's Laws of Force.

CIs can also be used to measure the impact of educational methodologies, such as comparing traditional and interactive engagement educational methods [5]. This is achieved using pre- and post-tests at the beginning and end of the term to determine the learning gain of each student through the course, taking into account any initial knowledge students may have had when the course started.

Some studies reported in the literature [3, 10] developed CIs based on the flowchart development process proposed by Almstrum et al. [1]. The first step in developing a CI is identification of fundamental concepts through synthesis of various sources (experts, textbooks, papers, etc.). This often follows a pre-defined path, passing through the development of open-ended questions, pilot multiple-choice questions, a student-based investigation, a trial period, and a statistical analysis, leading to final deployment of the CI. Because CI development is costly, novel approaches are under development, such as the open-source approach of Porter et al. [12] and the CS1 exam developed by Tew & Guzdial that uses pseudo-code questions [14].

Permission to make digital or hard copies of all or part of this work for personal or

classroom use is granted without fee provided that copies are not made or distributed

for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than

ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission

and/or a fee. Request permissions from permissions@acm.org.

SIGCSE ’16, March 02-05, 2016, Memphis, TN, USA

© 2016 ACM. ISBN 978-1-4503-3685-7/16/03...$15.00

DOI: http://dx.doi.org/10.1145/2839509.2844559


A number of initiatives in Computer Science have created,

validated, and applied CIs. Although different methodologies were used, Computer Science CIs can be categorized

into two groups: (1) misconception identification, related

primarily to topics and themes that emerge from students’

misconceptions, and (2) where after identifying misconceptions a pilot CI is developed and validated.

Misconceptions in computer science have been identified

and CI questions proposed for binary search trees (2 questions) [3, 10], digital logic (19 questions) [7], hash tables (1

question) [10], and operating systems (10 questions) [16].

Misconceptions but no questions have been identified for

Boolean logic [6], heaps [3], loops and iteration [9], memory

models and allocation [4, 9], object concepts [9], parameter

scope [4], and recursion [4].

Another approach, proposed by Sorva [13], allows learners

to interact and cognitively engage with a Visual Program

Simulation (VPS). Sorva mapped from the literature 162

misconceptions and categorized them as General, VarAssign

(variables, assignment and expression evaluation), Control

(flow of control during execution), Calls (subprogram invocations and parameter passing), Recursion, Refs (references

and pointers) and OOP (Object-Oriented Programming).

3.

Students were to identify the local and global variables and

describe the expected outputs from printf statements. The

second question asked students to write a C program to input an integer and print all of its divisors. The third question asked students to write a C program to input a year and

print whether or not it was a leap year. The fourth question asked students to write a C program to verify whether

an input n is Pythagorean (n is Pythagorean iff there are

integers a and b such that a2 + b2 = n).

The first question in Exam 2 presented a C function that

correctly implemented Insertion Sort and received as a parameter an int array to be ordered. Students were asked to

create a Date structure composed of year, month, and day

fields, and then change the function to sort an array of Date

structures. The second question asked students to write a

C function to receive an int matrix and verify whether it

followed some pre-defined rules (e.g., the first and last rows

should contain the number 0). The third question asked

students to write a C function to receive an array and two

int pointer parameters and return via the first pointer the

average value of the array and via the second pointer an

element closest to the average value. The fourth question

asked students to write a recursive C function to calculate

the floor of the logarithm base 2 of an int.

Based on exam papers students submitted, we identified

misconceptions and collected them into initial categories that

grouped similar items. We eliminated misconceptions that

fell outside of the scope of our investigation.

METHODOLOGY

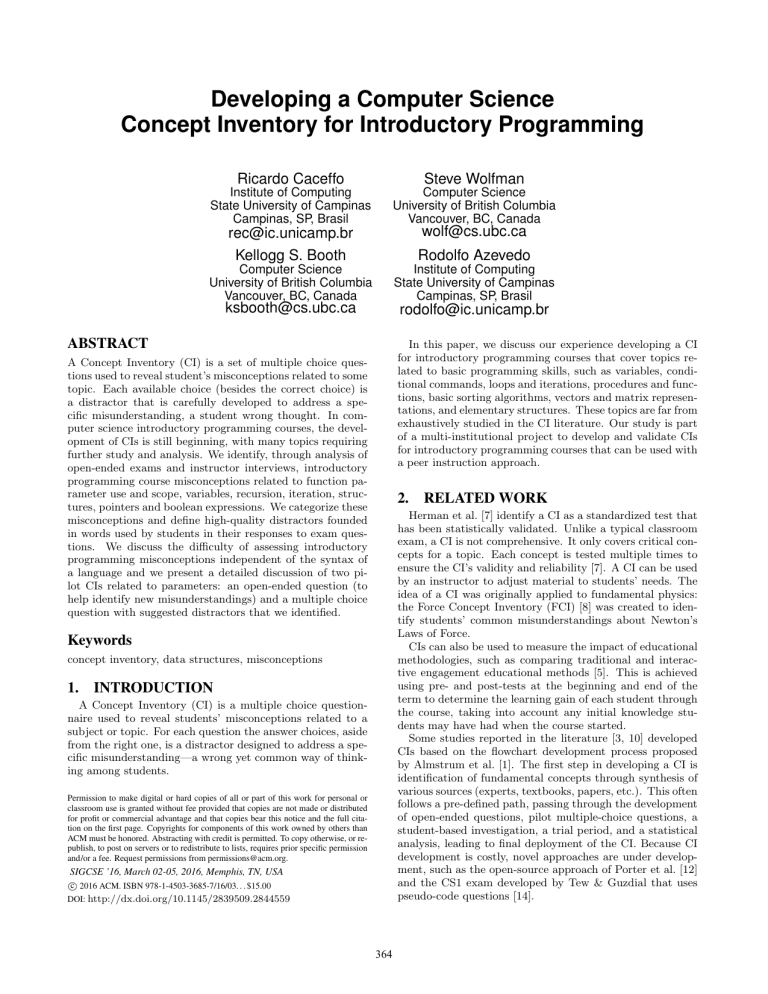

We looked at an introductory algorithm and computer

programming course at the State University of Campinas

(Brasil), a mandatory course for all engineering students.

More than 500 students enrol each semester and attend four

50-minute lectures (theoretical classes) and two 50-minute

labs (practical classes) every week. Each week students are

given a practical problem related to theoretical concepts seen

in lecture. They then develop a C programming language

program to solve the problem. This usually requires an additional four out-of-class hours each week. We developed a

CI for the course following an approach similar to Almstrum

et al. [1], which is depicted in Figure 1.

3.2

Instructor Interviews

We conducted semi-structured interviews [11] with 5 course

instructors. We asked them about the main misconceptions

they believed students in the course had and we asked them

to comment on our exam analysis findings. They were also

asked to identify and separate higher-level critical thinking

misconceptions from lower-level syntax misconceptions and

explain how they might apply our CI in their course.

Based on these interviews, we refined misconception categories to reflect the main topics necessary for correct understanding in an introductory programming course. We believe a comprehensive CI for an introductory programming

course must have questions from each of these categories.

Figure 1: CI development methodology, based on

Almstrum et al. [1]

3.3

Development of Pilot Items

We developed open-ended and multiple-choice questions

covering the categories of misconceptions identified in earlier steps, with distractors for each multiple-choice question

drawn from examples in students’ exam papers. These were

used in pilot studies to test the validity of the CI.

Key steps included identification of student misconceptions through analysis of exam papers and instructor interviews, and development of open-ended and multiple-choice

questions for CI items that reflect the misconceptions.

3.1

4.

MISCONCEPTIONS

We identified seven misconception categories. Each category has one or more misconceptions for which we found

evidence in the exams or in our interviews with instructors. Categories represent similar types of misconceptions

and thus offer opportunities to identify linkages between

misconceptions by providing multiple distractors from the

category in a single CI item. For each category we describe

below the type of misconceptions that characterize it and we

give examples drawn from our analysis of exam papers and

our interviews with instructors. We provide the percentage

and number of instances we found of each misconception.

Exam Analysis

We analysed two course exams, each having four questions. The exams were taken individually by 66 and 60 students in the same term, at the end of the second and fourth

months, respectively. We classified student’s errors in exams

according to misunderstandings that might explain incorrect

answers.

The first question in Exam 1 presented a C program with

two functions each called from inside a loop within main().

365

Categories are described in decreasing order of their prevalence.

We also identified an eighth category, which arose in the

interviews, regarding differences between conceptual misunderstandings and misunderstandings about the specific syntax of a construct in a particular programming language.

We discuss that after the seven that arose from students’

exams.


fore be inappropriate for a CI. We plan to test this in a

multiple choice format and will discard the item if it elicits

few incorrect responses. If it is popular, we will follow up

with think-aloud interviews to assess whether students really

choose the distractor for the reasons we heard in instructor

interviews.

Distractor (c) was identified in both exams. Some students replaced the caller-provided value for a parameter with

a value drawn from some other source. For example, in

Exam 2, some students began a function that received a parameter that was a matrix of integers by creating a loop to

read all matrix elements with scanf("%d", &x). In interviews, some instructors identified cases in which students

started functions by initializing parameter variables with

hard-coded numbers, losing the original values that had been

passed.

4.1 Function Parameter Use and Scope

This was by far the largest source of misconceptions, identified in both exams and instructor interviews. Students did

not understand function calls and parameter passing. We

identified three misconceptions in this category.

Call by reference vs. call by value semantics (44%

– 29/66 in Exam 1 and 5% – 3/60 in Exam 2). The

association between actual parameters (arguments) and formal parameters depends on the particular mechanism used

by a language. C uses call-by-value exclusively for all parameters, yet on both exams students thought if they changed

the content of a formal parameter inside a called function,

the actual parameter variable located outside in the caller

function would also change.
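The call-by-value behaviour described above can be illustrated with a minimal sketch. The function names below are hypothetical and are not taken from the exam; the second pair shows the pointer-based alternative a C programmer would use when the caller's variable really should change.

```c
/* Hypothetical helper: receives a COPY of the caller's value. */
void set_to_ten(int n) {
    n = 10;   /* changes only the formal parameter (the local copy) */
}

/* Hypothetical helper: receives the ADDRESS of the caller's variable. */
void set_to_ten_via_pointer(int *n) {
    *n = 10;  /* writes through the pointer into the caller's variable */
}

/* Returns what the caller still sees after the by-value call. */
int value_after_by_value_call(void) {
    int x = 1;
    set_to_ten(x);
    return x;   /* x is unchanged: C passed a copy */
}

/* Returns what the caller sees after passing the address of x. */
int value_after_pointer_call(void) {
    int x = 1;
    set_to_ten_via_pointer(&x);
    return x;   /* x was modified through the pointer */
}
```

A student holding the misconception would expect both functions to return 10; in C only the pointer version does.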

4.2 Variables, Identifiers, and Scope

On Exam 1 students did not understand declarations of

variables or rules for assignment and scope in a program.

This category excludes parameters, as explained in the previous section.

Out of scope assignment (33% – 22/66). Variables

declared inside functions were not considered local to the

function. Students classified variables from one function as

being part of another function. Local variables were used as

if they were global.

Parameters as local variables (17% – 11/66). On

Exam 1 we identified cases in which students did not consider function parameters to be local variables. This misconception is a distinct category because it is specific to the

scope of function parameters.

Function names (6% – 4/66). Students classified function names in function prototypes as global variables (they

are global, but they are not variables).

Initialization of formal parameters (12% – 7/60).

On Exam 2, students didn’t appear to understand how parameters work, specifically, how they are initialized. Students apparently did not realize that function calls initialize

parameters so they sometimes gave parameters specific values either by explicit assignment or from an external source

at the beginning of function execution, rather than using the

caller’s supplied values.
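As a rough illustration of this misconception, the sketch below contrasts a function that uses the caller-supplied parameter with one that overwrites it at the start of execution. Both functions and the hard-coded value 3 are hypothetical examples, not student code from the exams.

```c
/* Uses the values supplied by the caller, as intended. */
int sum_row(const int row[], int n) {
    int total = 0;
    for (int i = 0; i < n; i++) {
        total += row[i];
    }
    return total;
}

/* Misconception sketch: the parameter n is re-initialized with a
   hard-coded number, so the value passed by the caller is lost. */
int sum_row_overwriting_n(const int row[], int n) {
    n = 3;   /* discards the caller's n */
    int total = 0;
    for (int i = 0; i < n; i++) {
        total += row[i];
    }
    return total;
}
```

With a four-element row {1, 2, 3, 4} and n = 4, the first function sums all four elements while the second silently sums only the first three.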

Candidate Distractors: Distractors include (a) using

non-variables as variables, (b) using local variables as global

variables, (c) using global variables as local variables, and

(d) having multiple variables with the same name but declared in different scopes (e.g., global and local variables

with the same name).

In the first question of Exam 1, students were asked to

identify the local and global variables inside a program.

Some identified non-variables, such as function names in prototype declarations, as variables (distractor (a)), some identified local variables as global (distractor (b)), while others

identified global variables as local variables (distractor (c)).

In the same question, students analysed a program that had

a loop within the main function that used an int variable i

as iterator. The loop had a call to a function that accessed

an int global variable also named i. Some students got confused and thought the value of i inside the function was the

value of the local variable i inside main, instead of the value

of the global variable i (distractor (d)).
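The situation behind distractor (d) can be sketched as follows. This is an assumed reconstruction, not the exam program itself: the names read_i and sum_seen_by_callee and the values are hypothetical, and the loop is placed in an ordinary function rather than in main.

```c
int i = 100;   /* global variable named i */

/* No local i is declared here, so i refers to the global variable. */
int read_i(void) {
    return i;
}

/* The loop counter is a local i that hides the global i inside this
   function only; it has no effect on what read_i() sees. */
int sum_seen_by_callee(void) {
    int total = 0;
    for (int i = 0; i < 3; i++) {
        total += read_i();   /* the callee reads the global i each time */
    }
    return total;
}
```

A student who believes the callee sees the caller's local i would predict 0 + 1 + 2 = 3; the function actually returns 300.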

Candidate Distractors: Possible distractors cover situations where (a) parameters are used as if passed by reference, (b) parameters are accessed outside their scope, and

(c) the original parameter value is changed by an external

source such as scanf().

Most instances of errors matching distractor (a) were

in the first question of Exam 1, in which students had to

print (with printf) a local variable after calling a function that receives

the variable as a parameter and changes its value. Another

instance of distractor (a) was in the third question of Exam

2, in which students were to implement a function based on

the following prototype:

void f(double v[], int n, double *a, double *b)

4.3 Recursion

On Exam 2 students had three misconceptions about the

application of recursion in problem-solving.

Students were expected to calculate the average value of the elements of v and store it at the memory address pointed to

by a. Some students instead declared a local variable x, set

it to be the average value, and then set a = &x, perhaps

expecting that some variable in the caller function corresponding to a would change.

Distractor (b) was proposed by instructors in interviews

but was never observed in student responses. It may there-

Bounded recursion (15% – 9/60). The expression for

a return value had an embedded recursive call with a parameter that was larger than the parameter to the current

invocation, leading to infinite recursion because the level of recursion increases indefinitely.


Stopping condition (12% – 7/60). Appropriate checks

to terminate recursion when a base case is encountered were

not provided.

as for (int i = 1; i < n; i++) but added inside the loop

another increment i++ of the iterator i (distractor (d)).
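The effect of an extra increment inside the loop body can be seen in a small sketch. The function names are hypothetical, the functions count divisors instead of printing them so the result is easy to check, and the bound i <= n (so that n counts as its own divisor) is an assumption of this sketch.

```c
/* Counts the divisors of n, assuming n >= 1. */
int count_divisors(int n) {
    int count = 0;
    for (int i = 1; i <= n; i++) {
        if (n % i == 0) {
            count++;
        }
    }
    return count;
}

/* Misconception sketch: a second i++ in the body means the loop
   only ever tests i = 1, 3, 5, ... and skips every even candidate. */
int count_divisors_double_increment(int n) {
    int count = 0;
    for (int i = 1; i <= n; i++) {
        if (n % i == 0) {
            count++;
        }
        i++;   /* improper extra update of the loop counter */
    }
    return count;
}
```

For n = 12 the first function finds the six divisors 1, 2, 3, 4, 6, and 12, while the second tests only odd candidates and finds just 1 and 3.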

Self-reference (7% – 4/60). Functions required to be

recursive did not call themselves.

On Exam 2 students did not understand how to declare

and use C-style structs.

Candidate Distractors: Possible distractors are: (a) a

recursive function without a call to itself, (b) a recursive

call that grows its parameter and thus leads to an infinite

recursive loop, (c) lack of a stopping condition, and (d) use

of an improper stopping condition.

Examples of these distractors were identified in the fourth question of Exam 2, in which students were asked to implement a

recursive function int logRec (int n) that calculates the

floor of the logarithm base 2 of n. Some students wrote

most of the function code correctly, but didn’t insert a recursive call to the function itself (distractor (a)); other students inserted the correct stopping condition (n == 1) but

made the recursive call with an increased n (e.g., logRec

(n+1)), leading to infinite recursion (distractor (b)); other students didn’t insert the stopping condition (distractor (c));

and some students inserted an incorrect stopping condition

(e.g., while n == 1), which is an instance of distractor (d).
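For reference, one possible correct implementation of logRec is sketched below. It is not the exam's official answer key; using n <= 1 instead of n == 1 as the stopping condition is an assumption added here so that invalid inputs (n < 1) terminate instead of recursing forever.

```c
/* Floor of the base-2 logarithm of n, intended for n >= 1.
   For n < 1 the logarithm is undefined; this sketch returns 0. */
int logRec(int n) {
    if (n <= 1) {               /* stopping condition (base case) */
        return 0;
    }
    return 1 + logRec(n / 2);   /* recursive call on a SMALLER argument */
}
```

Each distractor corresponds to breaking one line of this function: removing the recursive call (a), calling logRec(n + 1) so the argument grows (b), deleting the base case (c), or replacing the if with an unsuitable test (d).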

Accessing fields (27% – 16/60). Students tried to use

the struct as an atomic unit (e.g., to compare it to a number

or to another struct), without accessing any of the struct’s

inner fields.

4.5 Structures

Multi-key sorting (17% – 10/60). Given an array of

Date structs, students created three variants of the insertion

sort function that had been provided and sorted the array

first by year, then by month, and finally by day. They instead should have made a single multi-key sort function.

Candidate Distractors: A possible distractor relates

to the use of the whole struct when only a field is needed.

For example, on the first question of Exam 2, students

needed to compare two Date structs (a and b) with day, month,

and year fields. Instead of comparing the structs through

their fields (e.g., a.day > b.day, a.month > b.month, and

so on) the distractor would compare the entire structs using

the statement a > b.
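A field-by-field comparison, combined with a single multi-key insertion sort, might look like the sketch below. The Date layout follows the exam description (year, month, and day fields); the names date_cmp and insertion_sort_dates are hypothetical, and the exam's original insertion sort code is not reproduced here.

```c
typedef struct {
    int year;
    int month;
    int day;
} Date;

/* Returns a negative, zero, or positive value when a is earlier than,
   equal to, or later than b. Fields are compared in key order. */
int date_cmp(Date a, Date b) {
    if (a.year != b.year) {
        return a.year - b.year;
    }
    if (a.month != b.month) {
        return a.month - b.month;
    }
    return a.day - b.day;
}

/* One insertion sort over the whole array, using the multi-key comparison. */
void insertion_sort_dates(Date v[], int n) {
    for (int i = 1; i < n; i++) {
        Date key = v[i];
        int j = i - 1;
        while (j >= 0 && date_cmp(v[j], key) > 0) {
            v[j + 1] = v[j];   /* shift later dates one slot to the right */
            j--;
        }
        v[j + 1] = key;
    }
}
```

Note that C rejects a > b for struct operands at compile time, so the distractor is a conceptual error that the compiler would also flag.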

4.4 Iteration

On Exam 1 students seemed unsure when iteration should

be used or how the number of loop iterations is managed.

4.6 Pointers

On Exam 2 students did not understand the concepts of

memory addresses and pointers, nor why and how pointers

should be used.

Basic loop behavior (14% – 9/66). Students used a

loop to perform some calculation but had a call to printf()

for the result value that appeared inside the loop, not outside the loop.

Returning values through pointers (3% – 2/60).

Students created a local variable containing the value to be

returned by a function and assigned its memory address to

the pointer parameter, rather than dereferencing the pointer

parameter to assign the value to be returned to a memory

location external to the function.

Logic of looping count (14% – 9/66). Students did

not pass all required values (from 1 to n) to an auxiliary

function. Instead, they only called the function once, without any looping structure.

Assign values to pointers (12% – 7/60). Students

assigned to a pointer variable a specific value instead of a

memory address.

Candidate Distractors: Possible distractors are (a) premature final result calculation or reporting before the loop

is over, (b) solving a problem that requires iteration without

having a loop, (c) improper initialization of the loop counter,

and (d) improper updating of the loop counter.

An instance of distractor (a) was identified in the fourth

question of Exam 1, in which students were supposed to

verify whether an integer n is Pythagorean. The correct answer

involves a loop to check all possible pairs of numbers. Some

students inserted inside the loop a printf stating whether n

was or was not Pythagorean before testing all possible number combinations. In the same question, some students implemented an auxiliary boolean function that receives three

parameters a, b, and n and verifies whether the condition for n

to be Pythagorean is satisfied for the parameters a and

b, but some of those students only called the function once,

without making a loop to check all possibilities for a and b

(distractor (b)).
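A loop-based check that avoids both distractors could be sketched as follows. The function name is hypothetical, and two assumptions are made that the exam text does not state: a and b are taken to be positive integers, and n is small enough that a*a + b*b fits in an int.

```c
/* Returns 1 if n = a*a + b*b for some integers a, b >= 1, else 0.
   The negative verdict is produced only after ALL pairs were tried. */
int is_pythagorean(int n) {
    for (int a = 1; a * a <= n; a++) {
        for (int b = a; a * a + b * b <= n; b++) {
            if (a * a + b * b == n) {
                return 1;   /* a witness pair was found */
            }
        }
    }
    return 0;   /* reached only when every candidate pair failed */
}
```

Distractor (a) corresponds to reporting "not Pythagorean" inside the inner loop as soon as one pair fails; distractor (b) corresponds to testing a single pair with no loop at all.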

An instance of distractor (c) was identified in the second

question of Exam 1, in which students were asked to print all

numbers that are divisors of some integer n. Some students

initialized a for loop with i = n instead of i = 1. In the

same question, some students correctly coded the iteration

Candidate Distractors: Possible distractors are: (a)

assigning a value to an address, (b) assigning an address to

a value, and (c) assigning to a pointer an invalid address.

For example, in the third question of Exam 2, students

were to write a function that receives two double* parameters

a and b and changes the values pointed to by a and b to

some calculated double x. Some students assigned x to an

address, for example a = x (distractor (a)); some assigned

the address of x to the value pointed to by a using the statement *a = &x (distractor (b)); and others assigned to the

pointer the address of x using a = &x, which would point

to an invalid address after the function returns (distractor

(c)). The expected answer was *a = x.
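A possible implementation of the exam function, written against the prototype given in Section 4.1, is sketched below. It assumes n >= 1; the helper abs_diff is hypothetical and is used only to avoid depending on the math library.

```c
/* Hypothetical helper: absolute difference of two doubles. */
static double abs_diff(double p, double q) {
    return (p > q) ? (p - q) : (q - p);
}

/* Stores the average of v[0..n-1] in *a and an element of v closest
   to that average in *b. Assumes n >= 1. */
void f(double v[], int n, double *a, double *b) {
    double sum = 0.0;
    for (int i = 0; i < n; i++) {
        sum += v[i];
    }
    double x = sum / n;

    *a = x;   /* dereference: the value lands in the caller's variable */

    double closest = v[0];
    for (int i = 1; i < n; i++) {
        if (abs_diff(v[i], x) < abs_diff(closest, x)) {
            closest = v[i];
        }
    }
    *b = closest;
}
```

The incorrect variants differ only in the assignment line: a = x and *a = &x mix values with addresses (and draw compiler diagnostics), while a = &x compiles but changes only the local copy of the pointer, so the caller never sees the result.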

4.7 Boolean Expressions

Students did not understand boolean expressions’ meanings or their evaluation.

Truth tables (12% – 8/66). Incorrect use of the ||

and && operators.


choice pilot items based on categories of misunderstandings.

In open-ended questions students can write the answers in

their own words, allowing discovery of new misconceptions.

Multiple-choice questions have the advantage of being easier

to analyse than are open-ended questions, but they require

creation of distractor options to address each identified misconception.

We explored the open-ended questions approach during

the exam analysis described in Section 3.1. We opted to

create both new open-ended questions (to identify new misconceptions) and multiple-choice pilot items. Due to space

limitations we present here only two questions, which relate to the topic Function Parameter Use and Scope

as examples to illustrate our approach. Question 1 (Q1)

is an open-ended question, to help identify new misconceptions related to the topic. Question 2 (Q2) is a multiple-choice question that has distractors for the misunderstandings already identified in our study.

Candidate Distractors: Possible distractors cover (a)

translation from English sentences to incorrect Boolean expressions, which don’t have the expected precedence order

or semantics; and (b) attempts to create a Boolean expression without boolean operators (e.g., without ‘and’ (&&) and

‘or’ (||)).

An instance of distractor (a) was identified in the third

question of Exam 1, in which students were asked to verify whether

a number n represents a leap year. Leap years are multiples

of 400 or they are multiples of 4 that are not multiples

of 100. Some students were not able to correctly translate

this sentence to a Boolean expression. For example, some

used only && or only || operators, without including parentheses

to delimit the precedence order. Other students tried to

solve the problem through a sequence of if statements, not using any

Boolean operators at all. Although not technically wrong,

this workaround does not serve the purpose of the

exercise (distractor (b)).
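The leap year rule quoted above translates directly into a parenthesized Boolean expression. In the sketch below, is_leap_year follows that rule; is_leap_year_only_or is a hypothetical illustration of a faulty translation that uses only ||, and is not a transcription of any student answer.

```c
/* Multiple of 400, or multiple of 4 that is not a multiple of 100. */
int is_leap_year(int year) {
    return (year % 400 == 0) || ((year % 4 == 0) && (year % 100 != 0));
}

/* Faulty translation (hypothetical): every condition joined with ||,
   so any multiple of 4 is accepted, including 1900. */
int is_leap_year_only_or(int year) {
    return (year % 400 == 0) || (year % 4 == 0) || (year % 100 != 0);
}
```

The year 1900 separates the two versions: it is a multiple of 100 but not of 400, so the correct expression rejects it while the faulty one accepts it.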

Q1. Function usage. Write a program in C that asks

the user to enter two int numbers, a and b. Then, if a is

greater than or equal to b, calculate and print a-b. Otherwise,

calculate and print b-a. You must write one (and only one)

auxiliary (helper) function that performs the subtraction.

4.8 Syntax vs. Conceptual Understanding

In our interviews, instructors raised concerns about distinguishing between learning/conceptual misconceptions and

highly language-specific syntactic misconceptions. For example, could one or more CI items distinguish between a

student who does not grasp the concept of pointer addressing and one who understands the concept but not the C

programming language syntax for pointer addressing? The

consensus of the instructors who were interviewed was that

the nature of the subject (programming) makes it hard to

formulate conceptual questions entirely independent of the

particular programming language being used. This conclusion is similar to findings in the literature [9].

Another concern raised by instructors focused on the typical use of CIs as pre- and post-tests. Unlike the Force

Concept Inventory [8], where college students have everyday experience of basic Newtonian force concepts and may

even have studied them, many students’ first contact with

most programming concepts happens in college introductory

programming courses. A CI that assumes knowledge of programming language syntax at the start of the term would not

be effective; it could even frighten students. To address this

issue, we propose an approach similar to that discussed by

Porter et al. [12], where the pre-course CI consists of conceptual questions, not related to programming language syntax,

and the post-course CI has those same questions, but with

additional specific programming language questions added.

This approach is related to Commonsense Computing, an

attempt to get at deep computing concepts in ways accessible to people with no computing background [15].

This leads to the development, for the same misunderstanding, of two types of CI questions: conceptual or analogy questions, accessible to all students at any point in

the course, and technical or language-dependent questions,

where knowledge of the chosen programming language syntax is required. This approach supports the measurement of

learning gains; it is still possible to compare the initial subset of questions that are in both the pre- and post-course

tests. Developing such questions remains a goal.


Discussion: This open-ended question was developed to

help find new misconceptions related to function parameters’ use and scope. Requiring the student to write

exactly one auxiliary function tests whether the student understands

how parameter passing works. This question can also help

identify misconceptions related to variable assignment and

scope and boolean expression evaluation.
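One way a solution to Q1 could be structured is sketched below. The single helper is subtract; the function q1_result is a hypothetical stand-in for the decision that would live in main() after reading a and b with scanf, and is separated out here only so the logic can be checked without console input.

```c
/* The one auxiliary function required by Q1. */
int subtract(int x, int y) {
    return x - y;
}

/* Hypothetical stand-in for the body of main(): the same helper is
   called with the arguments in either order, so the formal parameters
   x and y are bound to different actual values on each path. */
int q1_result(int a, int b) {
    if (a >= b) {
        return subtract(a, b);
    }
    return subtract(b, a);
}
```

Because only one helper is allowed, a correct answer has to rely on argument order at the call site, which is exactly the parameter-passing behaviour the question is designed to probe.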

Q2. Scope of variables. The following code includes a function that adds five to a number. This is called on x in the main function.

    int addFiveToNumber (int n) {
        int c = 0;
        // Insert a Line Here
        return c;
    }

    int main ( ) {
        int x = 0;
        x = addFiveToNumber(x);
    }

The correct line to be inserted is:

(a) scanf ("%d", n);
(b) n = n + 5;
(c) c = n + 5;
(d) c = x + 5;

Discussion: Aside from the right answer (option (c)), each

other option matches a specific distractor presented in Section 4.1: option (a) relates to the change of the original

parameter value by an external source; option (b) relates to

the misunderstanding that parameters are passed by reference; and option (d) relates to the misunderstanding that

a variable can be accessed outside its scope. Our interest in

piloting this question is how attractive the distractors are.

5. PILOT TEST QUESTIONS

As Almstrum et al. propose [1], the next step in this

kind of research is the creation of open-ended or multiple-


For example, the scanf() distractor does not include any

addition of 5. Will students avoid it for that reason? As we

further develop the question, we will tailor it to try to elicit

the actual misconceptions that students hold, based on our

experience with the pilot exam.
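To make the behaviour of the Q2 options concrete, the sketch below completes the function with option (c) and, for contrast, with option (b). The name addFiveOptionB is hypothetical. Options (a) and (d) are described only in comments because they are not valid C in this context.

```c
/* Option (c): the result is computed from the parameter and returned. */
int addFiveToNumber(int n) {
    int c = 0;
    c = n + 5;
    return c;
}

/* Option (b): only the local copy n is updated; c is still 0 when it
   is returned, so the caller receives 0 regardless of the argument. */
int addFiveOptionB(int n) {
    int c = 0;
    n = n + 5;
    return c;
}

/* Option (a), scanf("%d", n), passes an int where scanf expects an
   int pointer, which is undefined behaviour.
   Option (d), c = x + 5, does not compile: x is local to main and is
   not in scope inside addFiveToNumber. */
```

With x = 0 in main, option (c) leaves x equal to 5 after the call, while option (b) leaves it equal to 0.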


[4] K. Goldman, P. Gross, C. Heeren, G. L. Herman,

L. Kaczmarczyk, M. C. Loui, and C. Zilles. Setting

the scope of concept inventories for introductory

computing subjects. Trans. Comput. Educ.,

10(2):5:1–5:29, June 2010.

[5] R. Hake. Interactive-engagement versus traditional

methods: A six-thousand-student survey of mechanics

test data for introductory physics courses. Am. J.

Phys., 66(1):64–74, January 1998.

[6] G. L. Herman, M. C. Loui, L. Kaczmarczyk, and

C. Zilles. Describing the what and why of students’

difficulties in Boolean logic. Trans. Comput. Educ.,

12(1):3:1–3:28, Mar. 2012.

[7] G. L. Herman, M. C. Loui, and C. Zilles. Creating the

digital logic concept inventory. In Proceedings of the

41st ACM Technical Symposium on Computer Science

Education, SIGCSE ’10, pages 102–106, New York,

NY, USA, 2010. ACM.

[8] D. Hestenes, M. Wells, and G. Swackhamer. Force

Concept Inventory. Phys. Teach., 30(3):141–158,

March 1992.

[9] L. C. Kaczmarczyk, E. R. Petrick, J. P. East, and

G. L. Herman. Identifying student misconceptions of

programming. In Proceedings of the 41st ACM

Technical Symposium on Computer Science Education,

SIGCSE ’10, pages 107–111, New York, NY, USA,

2010. ACM.

[10] K. Karpierz and S. A. Wolfman. Misconceptions and

concept inventory questions for binary search trees and

hash tables. In Proceedings of the 45th ACM Technical

Symposium on Computer Science Education, SIGCSE

’14, pages 109–114, New York, NY, USA, 2014. ACM.

[11] J. Lazar, J. H. Feng, and H. Hochheiser. Research

Methods in Human-Computer Interaction. Wiley

Publishing, 2010.

[12] L. Porter, C. Taylor, and K. C. Webb. Leveraging

open source principles for flexible concept inventory

development. In Proceedings of the 2014 Conference

on Innovation & Technology in Computer Science

Education, ITiCSE ’14, pages 243–248, New York,

NY, USA, 2014. ACM.

[13] J. Sorva. Visual Program Simulation in Introductory

Programming Education. PhD thesis, Aalto University,

Finland, 2012.

[14] A. E. Tew and M. Guzdial. The FCS1: A language

independent assessment of CS1 knowledge. pages

111–116, 2011.

[15] T. VanDeGrift, D. Bouvier, T.-Y. Chen,

G. Lewandowski, R. McCartney, and B. Simon.

Commonsense computing (episode 6): Logic is harder

than pie. pages 76–85, 2010.

[16] K. C. Webb and C. Taylor. Developing a pre- and

post-course concept inventory to gauge operating

systems learning. In Proceedings of the 45th ACM

Technical Symposium on Computer Science Education,

SIGCSE ’14, pages 103–108, New York, NY, USA,

2014. ACM.

6. CONCLUSIONS AND FUTURE WORK

We have begun to identify misconceptions appropriate to

introductory computing courses using the C programming

language that cover concepts related to basic programming

skills, such as variables, conditional commands, loops, functions, pointers, and structs. Through a careful exam analysis, we first identified the main misunderstandings associated with each topic. Then, aided by instructor interviews,

we categorized the misconceptions, extracting the distractors. We are now applying these data to generate a pilot

CI, composed of 21 open-ended and multiple-choice questions, classified either as conceptual or analogy questions or

as programming language-dependent questions.

The CI is available at http://edu.ic.unicamp.br/caceffo.

In future work, we plan to test our pilot CI to statistically validate it and use think-aloud interviews to identify

new, not-yet-addressed misconceptions. We plan to apply

the conceptual/analogy questions as a pre-course test, and

to also include them in the post-course test as a means to

measure learning.

Another approach that we plan to take is an adaptation

of the Peer Instruction methodology [2], called CSPI (Computer Science Peer Instruction). Currently in development,

CSPI addresses Computer Science-specific needs, for example, supporting the use of classroom clickers to test specific

misunderstandings of programming concepts such as a program’s execution flow control. We plan to apply the CI

that we develop to measure the CSPI’s educational impact

in CS1 courses. Future work will continue the multicultural

approach in the work to date, with the CI application tested

in universities from different countries, with different native

languages, and different student backgrounds.

7. ACKNOWLEDGMENTS

This research was supported in part by the São Paulo Research Foundation (FAPESP) under grants #2014/07502-4

and #2015/08668-6 and by the Natural Science and Engineering Research Council of Canada (NSERC) under grant

RGPIN 116412-11.

8. REFERENCES

[1] V. L. Almstrum, P. B. Henderson, V. Harvey,

C. Heeren, W. Marion, C. Riedesel, L.-K. Soh, and

A. E. Tew. Concept inventories in computer science

for the topic discrete mathematics. SIGCSE Bull.,

38(4):132–145, June 2006.

[2] C. H. Crouch and E. Mazur. Peer instruction: Ten

years of experience and results. American Journal of

Physics, 69:970–977, 2001.

[3] H. Danielsiek, W. Paul, and J. Vahrenhold. Detecting

and understanding students’ misconceptions related to

algorithms and data structures. In Proceedings of the

43rd ACM Technical Symposium on Computer Science

Education, SIGCSE ’12, pages 21–26, New York, NY,

USA, 2012. ACM.
