Chapter 5: Discrete Random Variables & Their Probability Distributions

Discrete Random Variable = random variable that assumes countable values

Continuous Random Variable = random variable whose values are not countable; it can assume any value in 1 or more intervals (e.g. money, weight, time, length)

Probability Distribution of a Discrete Random Variable:

Table columns: x, P(x), x², x²P(x)

Two Characteristics of a Probability Distribution:

1. 0 ≤ P(x) ≤ 1 for each value of x

2. Σ P(x) = 1

Mean of discrete random variable: μ=Σ xP(x)

Standard Deviation of a Discrete Random Variable: σ = √(Σ x²P(x) − μ²)
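A short Python sketch of the mean and standard deviation of a discrete random variable. The distribution below is made up for illustration, and the standard deviation uses the common shortcut σ = √(Σ x²P(x) − μ²), which is an assumption that matches the x²P(x) table column.

```python
import math

# Hypothetical probability distribution: value x -> P(x)
dist = {0: 0.1, 1: 0.2, 2: 0.4, 3: 0.3}

# The two characteristics of a probability distribution
assert all(0 <= p <= 1 for p in dist.values())
assert math.isclose(sum(dist.values()), 1.0)

# Mean: sum of x * P(x)
mu = sum(x * p for x, p in dist.items())

# Sum of x^2 * P(x), the last column of the table
sum_x2_p = sum(x**2 * p for x, p in dist.items())

# Standard deviation via the shortcut formula (assumed form)
sigma = math.sqrt(sum_x2_p - mu**2)

print(f"mean = {mu:.2f}, sd = {sigma:.4f}")
```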

4 Conditions of Binomial Experiment:

1. “n” identical trials - repeated under identical conditions

2. 2 outcomes only - “success” or “failure”

3. Probability of the outcomes remains constant

4. Trials are independent (one outcome does not affect another)

Binomial Probability Formula: the probability of exactly x successes in n trials

P(x) = nCx p^x q^(n−x)

n = number of trials, p = prob of success, q = 1 − p = prob of failure

Mean of binomial distribution: μ = np

Standard deviation of a binomial distribution: σ = √(npq)

p = 0.50: distribution is symmetric

p < 0.50: distribution is skewed to the right

p > 0.50: distribution is skewed to the left

Larger SD = x can assume values over a larger range about the mean
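The binomial quantities in this chapter can be sketched in Python as follows. The values n = 10 and p = 0.3 are hypothetical, and `binomial_pmf` is an illustrative helper name, not part of any library.

```python
import math

def binomial_pmf(x, n, p):
    """Probability of exactly x successes in n independent trials."""
    q = 1 - p  # probability of failure
    return math.comb(n, x) * p**x * q**(n - x)

n, p = 10, 0.3  # hypothetical experiment

prob_3 = binomial_pmf(3, n, p)        # P(x = 3)
mean = n * p                          # mean = np
sd = math.sqrt(n * p * (1 - p))       # sd = sqrt(npq)

# Probabilities over all possible x values should add up to 1
total = sum(binomial_pmf(x, n, p) for x in range(n + 1))

print(f"P(3) = {prob_3:.4f}, mean = {mean}, sd = {sd:.4f}")
```

Because p < 0.50 here, the resulting distribution is skewed to the right.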

Chapter 6: Continuous Random Variables and the Normal Distribution

Characteristics of the Probability Distribution of a Continuous Random Variable:

1. The probability that x assumes a value in any interval lies in the range 0 to 1

2. The total probability of all the (mutually exclusive) intervals within which x can assume a value is 1.0

P(a ≤ x ≤ b) = area under the curve between a and b

The Normal Probability Distribution (Bell-Shaped)

1. Total area under curve is 1.0

2. Curve is symmetric about the mean

3. The 2 tails of the curve extend indefinitely (the area beyond μ ± 3σ is very close to 0)

Z values: represent the distance between the mean and x in terms of the standard deviation

P(z assumes a single value) = 0

Use the table to find the area to the LEFT of any z value

Area to the right of z = 1 − (area to the left of z)

If z > 3.49, the area to the left is approx. 1.0

If z < −3.49, the area to the left is approx. 0.0

Standardizing a Normal Distribution:

Convert x value to a z value: z = (x − µ)/σ

Mean (µ) and SD (σ) are the parameters of the normal distribution

Z value for an x value greater than the mean is positive (+)

Z value for an x value smaller than the mean is negative (−)

Finding an x Value for a Normal Distribution when µ, σ and z are known: x = µ + zσ
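A minimal sketch of standardizing and un-standardizing in Python. It assumes z = (x − µ)/σ (the rearrangement of x = µ + zσ) and uses `math.erf` in place of the printed z table; the numbers µ = 50, σ = 10, x = 65 are hypothetical.

```python
import math

def z_score(x, mu, sigma):
    """Distance between x and the mean, in standard deviations."""
    return (x - mu) / sigma

def area_left_of_z(z):
    """Area under the standard normal curve to the left of z
    (the quantity a z table reports)."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

mu, sigma = 50, 10            # hypothetical normal distribution
z = z_score(65, mu, sigma)    # x above the mean gives a positive z

left = area_left_of_z(z)      # P(x < 65)
right = 1 - left              # P(x > 65)
x_back = mu + z * sigma       # recover x from z

print(f"z = {z}, left = {left:.4f}, right = {right:.4f}, x = {x_back}")
```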

Normal Distribution as an Approximation to Binomial Distribution:

When np > 5 and nq > 5

1. Compute µ and σ for the binomial distribution: µ = np and σ = √(npq)

2. Convert the discrete random variable into a continuous random variable

Continuity Correction Factor: addition of 0.5 and/or subtraction of 0.5 from the value(s) of x when the normal distribution is used as an approximation to the binomial distribution

SUBTRACT 0.5 from the LOWER LIMIT of the interval

ADD 0.5 to the UPPER LIMIT of the interval

3. Compute the required probability using the normal distribution
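The three steps above can be sketched in Python and compared against the exact binomial probability. The function names and the example (n = 30, p = 0.5, interval 12 to 18) are hypothetical choices for illustration.

```python
import math

def phi(z):
    """Area to the left of z under the standard normal curve."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def normal_approx(n, p, lower, upper):
    """Approximate P(lower <= x <= upper) for a binomial variable."""
    q = 1 - p
    if not (n * p > 5 and n * q > 5):
        raise ValueError("np and nq must both exceed 5")
    mu = n * p                      # step 1: mean
    sigma = math.sqrt(n * p * q)    # step 1: standard deviation
    z_lo = (lower - 0.5 - mu) / sigma   # step 2: subtract 0.5 from lower limit
    z_hi = (upper + 0.5 - mu) / sigma   # step 2: add 0.5 to upper limit
    return phi(z_hi) - phi(z_lo)        # step 3: normal probability

def exact_binomial(n, p, lower, upper):
    """Exact P(lower <= x <= upper) from the binomial formula."""
    return sum(math.comb(n, x) * p**x * (1 - p)**(n - x)
               for x in range(lower, upper + 1))

approx = normal_approx(30, 0.5, 12, 18)
exact = exact_binomial(30, 0.5, 12, 18)
print(f"approx = {approx:.4f}, exact = {exact:.4f}")
```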

Selection error - sampling frame is not representative of the population

Nonresponse error - people do not respond

Response error - people do not provide correct answers

Voluntary response error - participation is voluntary

Simple - each sample of the same size has the same probability of being selected

Systematic - randomly select one member from the first k units (k = pop. size / intended sample size), then every kth member after it is used

Stratified - divide the population into strata (when the pop. differs in characteristics), then sample from each stratum

Cluster - divide the population into (geographical) clusters, randomly select some of the clusters, then sample from the selected clusters
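As one illustration of these sampling methods, here is a minimal Python sketch of systematic sampling. The population of 100 numbered units and the helper name `systematic_sample` are hypothetical, and the sketch assumes the population size is a multiple of the sample size.

```python
import random

def systematic_sample(population, sample_size, rng):
    """Pick a random start among the first k units, then take every kth unit."""
    k = len(population) // sample_size   # k = pop. size / intended sample size
    start = rng.randrange(k)             # random member among the first k
    return population[start::k][:sample_size]

population = list(range(1, 101))         # hypothetical units numbered 1..100
sample = systematic_sample(population, 10, random.Random(0))
print(sample)
```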

ELEMENT OR MEMBER - of a sample or population is the specific subject or object about which the information is being collected

VARIABLE - a characteristic under study that assumes different values for different elements

OBSERVATION OR MEASUREMENT - the value of a variable for an element

DATA SET - collection of observations on one or more variables

Relative frequency of a class = f / Σf

Sum of relative frequencies is always 1.0

Percentage of a class = (relative frequency) × 100%

Class width = lower limit of next class − lower limit of current class

Class midpoint = (upper limit + lower limit) ÷ 2

Class boundary = the midpoint of the upper limit of one class and the lower limit of the next class (800.5 to 1000.5 for the class 801-1000)

Approximate class width = (largest value − smallest value) ÷ number of classes

Cumulative frequency: add the frequency of a class to the frequencies of all preceding classes (16 + 9 = 25 | 16 + 9 + 1 = 26)

Cumulative relative frequency = cumulative frequency of a class / total observations (16/30, 25/30)

Cumulative percentage = (cumulative relative frequency) × 100%
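The frequency-table quantities above can be sketched in Python. The first three frequencies (16, 9, 1) and the class 801-1000 echo the examples in these notes; the fourth frequency (4, chosen so the total is 30) and the other class limits are assumptions for illustration.

```python
from itertools import accumulate

# Class limits (lower, upper) and frequencies; partly hypothetical
classes = [(601, 800), (801, 1000), (1001, 1200), (1201, 1400)]
freqs = [16, 9, 1, 4]
total = sum(freqs)

rel_freq = [f / total for f in freqs]          # f / sum of f
percentage = [100 * r for r in rel_freq]       # relative frequency x 100%
cum_freq = list(accumulate(freqs))             # running totals
cum_rel_freq = [c / total for c in cum_freq]
cum_percentage = [100 * c for c in cum_rel_freq]

# Width, midpoint, and boundaries for the class 801-1000
lower, upper = classes[1]
width = classes[2][0] - lower                  # next lower limit - this lower limit
midpoint = (upper + lower) / 2
lower_boundary = (classes[0][1] + lower) / 2   # between 800 and 801
upper_boundary = (upper + classes[2][0]) / 2   # between 1000 and 1001

print(cum_freq, width, midpoint, lower_boundary, upper_boundary)
```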

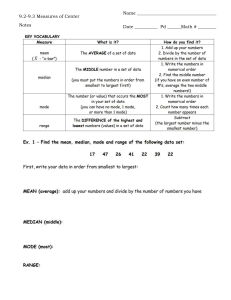

Mean (ungrouped data): μ = Σx/N (population), x̄ = Σx/n (sample)

Mean (grouped data, e.g. frequency table): μ = Σmf/N (population), x̄ = Σmf/n (sample)

Frequency Distribution Table: f m f2 mf m2f (m=midpoint, f=frequency of a class)

Mean is sensitive to outliers

Weighted mean (ungrouped): Σxw / Σw
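A short Python sketch of the three means in this section (ungrouped, grouped, weighted). All data values are hypothetical.

```python
# Ungrouped data: sum of x divided by the number of values
data = [4, 8, 15, 16, 23, 42]
mean = sum(data) / len(data)

# Grouped data: sum of (midpoint x frequency) divided by total frequency
midpoints = [5, 15, 25]
freqs = [2, 5, 3]
grouped_mean = sum(m * f for m, f in zip(midpoints, freqs)) / sum(freqs)

# Weighted mean: sum of (x times w) divided by the sum of the weights
values = [3.0, 4.0, 2.0]
weights = [3, 4, 1]
weighted_mean = sum(x * w for x, w in zip(values, weights)) / sum(weights)

print(mean, grouped_mean, weighted_mean)
```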

Median for ungrouped data: value of the middle term in a ranked data set

Median is not influenced by outliers, so it is preferred over the mean when the data contain outliers

Mode: the value that occurs with the highest frequency (a data set can have no mode, one mode, or many)

Negatively skewed = highest on right, tail to the left; order from left to right: mean, median, mode (at the peak)

Positively skewed = highest on left, tail to the right; order from left to right: mode (at the peak), median, mean

Symmetric: identical on both sides of central point

Uniform/Rectangular: Same for each class

The sum of the deviations of the x values from the mean is always 0. Σ (x−μ)=0

Range: Largest value – smallest value (influenced by outliers)

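Variance (for Ungrouped Data): σ² = Σ(x − μ)² / N (population), s² = Σ(x − x̄)² / (n − 1) (sample)

Standard deviation (Ungrouped Data): σ = √σ², s = √s²

Coefficient of variation: CV = (σ/μ) × 100% (population), CV = (s/x̄) × 100% (sample)

Expresses standard deviation as a % of the mean and compares variability for 2 different data sets that have different units of measure (e.g. years, $)

Variance (Grouped Data): σ² = Σf(m − μ)² / N (population), s² = Σf(m − x̄)² / (n − 1) (sample)

Standard deviation (Grouped Data): σ = √σ², s = √s²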
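A minimal Python sketch of these measures of dispersion, using the standard library `statistics` module. It assumes the usual definitions (divide by N for a population, by n − 1 for a sample); the data values are hypothetical.

```python
import math
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]   # hypothetical ungrouped data

pop_var = statistics.pvariance(data)     # population variance (divide by N)
pop_sd = statistics.pstdev(data)         # population standard deviation
sample_var = statistics.variance(data)   # sample variance (divide by n - 1)
sample_sd = statistics.stdev(data)       # sample standard deviation

# Coefficient of variation: SD as a percentage of the mean
cv = pop_sd / statistics.mean(data) * 100

# Grouped data, treated as a population: m = class midpoint, f = frequency
midpoints = [5, 15, 25]
freqs = [2, 5, 3]
N = sum(freqs)
grouped_mean = sum(m * f for m, f in zip(midpoints, freqs)) / N
grouped_var = sum(f * (m - grouped_mean) ** 2
                  for m, f in zip(midpoints, freqs)) / N
grouped_sd = math.sqrt(grouped_var)

print(pop_var, pop_sd, cv, grouped_var, grouped_sd)
```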

Standard deviation tells how closely the values of a data set are clustered around the mean (lower value = values spread over a smaller range around the mean, higher value = values spread over a larger range)

Values for the variance and SD are never negative (if no variation, values = 0) and they are sensitive to outliers

Population parameters: a numerical measure (mean, median, mode, variance and SD) calculated for a population data set

Sample statistics: a summary measure calculated for a sample data set

Chebyshev’s Theorem: for any number k greater than 1, at least (1 − 1/k²) of the values for any distribution lie within k standard deviations of the mean (use mean and standard deviation)

k = (distance between the mean and a point) / SD (k > 1)

Distance between the mean and each of the two given points must be the same

Gives minimum area (in %) under the distribution curve between two points

1. Find the distance between the mean and the 2 given points

2. Divide the distance by the standard deviation to get “k”

3. Substitute k into the formula
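The three steps above can be sketched in Python, assuming the standard form of Chebyshev's bound, 1 − 1/k². The function name and the example values (mean 100, SD 10, points 80 and 120) are hypothetical.

```python
import math

def chebyshev_min_fraction(mean, sd, lower, upper):
    """Minimum fraction of values between two points equally far from the mean."""
    dist_lower = mean - lower
    dist_upper = upper - mean
    # Step 1: both points must be the same distance from the mean
    if not math.isclose(dist_lower, dist_upper):
        raise ValueError("points must be equidistant from the mean")
    # Step 2: divide the distance by the standard deviation
    k = dist_upper / sd
    if k <= 1:
        raise ValueError("k must be greater than 1")
    # Step 3: substitute k into 1 - 1/k^2
    return 1 - 1 / k**2

fraction = chebyshev_min_fraction(100, 10, 80, 120)
print(f"at least {fraction:.0%} of values lie between 80 and 120")
```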

Empirical rule: only applies to bell-shaped distribution

68% of observations lie within interval (μ ±1σ)

95% of observations lie within interval (μ ±2σ)

99.7% of observations lie within interval (μ ±3σ)

Quartiles: divide a ranked data set into 4 equal parts

Rank the data set in increasing order; with an odd number of values, the median stands alone

Q1 = value of the middle term among the ranked observations that are less than the median

Q2 = value of the middle term in a ranked data set (median)

Q3 = value of the middle term among the ranked observations that are greater than the median

IQR = Q3 − Q1 (not affected by outliers)

Lower inner fence = Q1 − 1.5 IQR (values outside the inner fences but inside the outer fences = mild outliers)

Upper inner fence = Q3 + 1.5 IQR

Lower outer fence = Q1 − 3 IQR (values outside the outer fences = extreme outliers)

Upper outer fence = Q3 + 3 IQR
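A Python sketch of quartiles, IQR, and the outlier fences, following the method in these notes (the median stands alone when the number of values is odd). Other software may use different quartile conventions; the data set is hypothetical.

```python
def median(sorted_vals):
    """Middle term of an already ranked list."""
    n = len(sorted_vals)
    mid = n // 2
    if n % 2:
        return sorted_vals[mid]
    return (sorted_vals[mid - 1] + sorted_vals[mid]) / 2

def quartiles(values):
    """Return (Q1, Q2, Q3) as medians of the lower half, all data, upper half."""
    s = sorted(values)
    n = len(s)
    half = n // 2
    lower_half = s[:half]
    upper_half = s[half + 1:] if n % 2 else s[half:]  # drop the median if n is odd
    return median(lower_half), median(s), median(upper_half)

data = [1, 3, 4, 6, 7, 8, 9, 11, 40]   # hypothetical data with one large value
q1, q2, q3 = quartiles(data)
iqr = q3 - q1

inner = (q1 - 1.5 * iqr, q3 + 1.5 * iqr)   # inner fences
outer = (q1 - 3 * iqr, q3 + 3 * iqr)       # outer fences

extreme = [x for x in data if x < outer[0] or x > outer[1]]
mild = [x for x in data
        if (x < inner[0] or x > inner[1]) and x not in extreme]

print(q1, q2, q3, iqr, inner, outer, mild, extreme)
```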

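The kth percentile: Pk = value of the (kn/100)th term in a ranked data set

Always round kn/100 up to the next higher whole number

Percentile rank of xi = (number of values less than xi / total number of values) × 100%

Gives the % of values in a data set that are less than xi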
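A minimal Python sketch of percentiles and percentile rank. It assumes the common textbook definitions (the kth percentile is the value of the (kn/100)th ranked term, rounded up, and the percentile rank is the share of values below xi); the function names and data are hypothetical.

```python
import math

def kth_percentile(values, k):
    """Value of the (k*n/100)th term in the ranked data, position rounded up."""
    ranked = sorted(values)
    position = math.ceil(k * len(ranked) / 100)  # 1-based position
    position = max(position, 1)                  # guard against position 0
    return ranked[position - 1]

def percentile_rank(values, xi):
    """Percentage of values in the data set that are less than xi."""
    below = sum(1 for v in values if v < xi)
    return 100 * below / len(values)

data = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]   # hypothetical data

p25 = kth_percentile(data, 25)      # position 2.5 rounds up to the 3rd term
rank_70 = percentile_rank(data, 70)
print(p25, rank_70)
```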

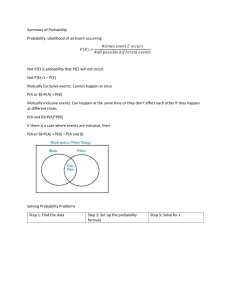

Chapter 4: Probability

Simple event - an event that includes only one of the final outcomes of an experiment

Compound event - a collection of more than one outcome for an experiment

Two properties of probability

1. The probability of an event always lies in the range 0 to 1: 0 ≤ P(A) ≤ 1

2. The sum of the probabilities of all simple events (final outcomes) of an experiment is always 1: ΣP(Ei) = 1

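Classical Probability: applied for an experiment where all outcomes are equally likely

Classical Probability Rule for a simple event: P(Ei) = 1 / total number of outcomes for the experiment

Classical Probability Rule for a compound event: P(A) = number of outcomes favorable to A / total number of outcomes for the experiment

Relative frequency as an approximation of probability (needed when outcomes are not equally likely): P(A) = f/n, where f = number of times A occurred in n repetitions

More repetitions bring the relative frequency closer to the actual probability (law of large numbers)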

Subjective probability: based on subjective judgement, experience, info, belief

Marginal probability: prob. of a single event w/o consideration of any other event (divide the row or column total by the grand total)

Conditional probability of an event: prob. that the event will occur given that another event has already occurred; P(A|B) = P(A and B) / P(B)

Conditions for independence of events: P(A|B) = P(A) or P(B|A) = P(B) (the occurrence of 1 event does not affect the occurrence of the other)

If the conditional and marginal probabilities are equal, the events are independent
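A small Python sketch of marginal probability, conditional probability, and the independence check, computed from a two-way table. The table of counts and its labels are made up for illustration.

```python
import math

# Hypothetical two-way table of counts: (gender, answer) -> count
counts = {
    ("male", "yes"): 30, ("male", "no"): 20,
    ("female", "yes"): 30, ("female", "no"): 20,
}
grand_total = sum(counts.values())

def marginal(index, label):
    """Row or column total divided by the grand total."""
    subtotal = sum(c for key, c in counts.items() if key[index] == label)
    return subtotal / grand_total

p_male = marginal(0, "male")
p_yes = marginal(1, "yes")

# Joint probability, then conditional probability P(male | yes)
p_male_and_yes = counts[("male", "yes")] / grand_total
p_male_given_yes = p_male_and_yes / p_yes

# Independent if the conditional probability equals the marginal probability
independent = math.isclose(p_male_given_yes, p_male)
print(p_male, p_yes, p_male_given_yes, independent)
```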

1. Two events (each with nonzero probability) cannot be both mutually exclusive and independent

a. Mutually exclusive events are always dependent

b. Independent events are never mutually exclusive

2. Dependent events may or may not be mutually exclusive

Mutually exclusive events cannot occur together

Complementary events: P(A) + P(Ā) = 1

Ā = all outcomes of an experiment that are not in A (if we already know P(A), we can find the prob. of the complementary event) [1 − P(A) = P(Ā)]

2 complementary events = always mutually exclusive

To find joint probability:

Multiplication rule for independent events: P(A and B) = P(A) x P(B)

Multiplication rule for dependent events: P(A and B) = P(A) x P(B|A) or P(B) x P(A|B)

Joint probability of two mutually exclusive events (cannot occur together): P(A and B) = 0

Addition rule for mutually nonexclusive events (can occur together):

P(A or B) = P(A) + P(B) – P(A and B)

Addition rule for mutually exclusive events:

P(A or B) = P(A) + P(B)

P(neither A nor B) = 1 – P(A or B)
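The multiplication, addition, and complement rules can be checked in Python by enumerating a small sample space. The experiment (one roll of a fair die) and the events A, B, C are hypothetical; note that the "neither" probability is the complement of P(A or B).

```python
from fractions import Fraction

sample_space = {1, 2, 3, 4, 5, 6}        # one roll of a fair die

def prob(event):
    """Classical probability: favorable outcomes / total outcomes."""
    return Fraction(len(event & sample_space), len(sample_space))

A = {2, 4, 6}    # even number
B = {4, 5, 6}    # number greater than 3
C = {1}          # mutually exclusive with A

# Multiplication rule for dependent events: P(A and B) = P(A) x P(B|A)
p_b_given_a = prob(A & B) / prob(A)
p_a_and_b = prob(A) * p_b_given_a

# Addition rule for events that can occur together
p_a_or_b = prob(A) + prob(B) - p_a_and_b

# Neither A nor B is the complement of (A or B)
p_neither = 1 - p_a_or_b

# Mutually exclusive events: joint probability is 0, so probabilities just add
p_a_and_c = prob(A & C)
p_a_or_c = prob(A) + prob(C)

# Complement rule
p_not_a = 1 - prob(A)

print(p_a_and_b, p_a_or_b, p_neither, p_a_and_c, p_a_or_c, p_not_a)
```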

n factorial: the product of all the integers from n down to 1: n! = n(n−1)(n−2)(n−3) … 1; 0! = 1 (always)

Combinations: gives the number of ways x elements can be selected from n elements: nCx = n! / (x!(n − x)!)

n = total items

x = # of items selected out of n

n is always ≥ x

Counting rule: if an experiment has three steps with m, n, and k possible outcomes, total outcomes for the experiment = m x n x k
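A brief Python sketch of factorials, combinations, and the counting rule using the standard `math` module. The combinations helper assumes the usual formula n! / (x!(n − x)!), and the numbers are hypothetical.

```python
import math

# Factorials: n! = n(n-1)(n-2)...1, and 0! = 1
five_factorial = math.factorial(5)
zero_factorial = math.factorial(0)

def combinations_count(n, x):
    """Number of ways to select x elements from n elements (n >= x)."""
    if x > n:
        raise ValueError("n must be at least x")
    return math.factorial(n) // (math.factorial(x) * math.factorial(n - x))

ways = combinations_count(5, 2)

# Counting rule: three steps with m, n, and k outcomes give m x n x k outcomes
steps = [2, 6, 3]                 # e.g. coin toss, die roll, 3-way spinner
total_outcomes = math.prod(steps)

print(five_factorial, zero_factorial, ways, total_outcomes)
```

The built-in `math.comb(n, x)` returns the same count as the helper and can be used directly.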