#CLUS

Troubleshooting Cisco DNA SD-Access from API and Maglev

Parthiv Shah, Technical Leader, Escalation

Akshay Manchanda, Technical Leader, TAC

BRKARC-2016


Agenda

• Cisco DNA Architecture Overview

• Maglev Based Troubleshooting: Installation/Services Debugging, Log Collection, ISE and Cisco DNA Center Integration, Device Discovery/Provisioning

• API Based Troubleshooting: How to Access, Problem and Solution

© 2019 Cisco and/or its affiliates. All rights reserved. Cisco Public


Cisco Webex Teams

Questions?

Use Cisco Webex Teams to chat with the speaker after the session

How

1 Find this session in the Cisco Live Mobile App

2 Click “Join the Discussion”

3 Install Webex Teams or go directly to the team space

4 Enter messages/questions in the team space

Webex Teams will be moderated by the speaker until June 16, 2019.

cs.co/ciscolivebot#BRKARC-2016

Objectives and Assumptions

Objectives

After completing this module you will:

• Understand the Basic DNA Architecture Overview

• Understand Cisco DNAC Maglev Based Troubleshooting

• Understand Cisco DNAC API Based Troubleshooting

Assumptions

The audience is assumed to have:

• Working knowledge of APIC-EM and PKI.

• Working knowledge of Routing/Switching and Cisco Fabric architecture.

Note: This session will not cover Cisco Fabric or ISE troubleshooting.

Cisco DNA Architecture Overview

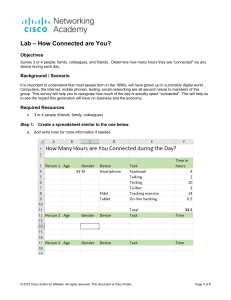

The Cisco DNA Center Appliance

Fully Integrated Automation & Assurance

Cisco DNA Center Platform (DN2-HW-APL)

Centralized Deployment - Cloud Tethered

• Built-In Telemetry Collectors (FNF, SNMP, Syslog, etc)

• Built-In Contextual Connectors (ISE/pxGrid, IPAM, etc)

• Multi-Node High Availability (3 Node, Automation)

• RBAC, Backup & Restore, Scheduler, APIs

1RU Server (Small form factor)

• UCS 220 M5S: 64-bit x86

• vCPU: 44 core (2.2GHz) / 56C / 112C

• RAM: 256GB DDR4

• Control Disks: 2 x 480GB SSD RAID1

• System Disks: 6 x 1.9TB SSD M-RAID

• Network: 2 x 10GE SFP+

• Power: 2 x 770W AC PSU

DNAC 1.2 Scale: Per Node

• 5,000 Nodes (1K Devices + 4K APs)

• 25,000 Clients (Concurrent Hosts)

DNAC 1.3 Scale: Per Node

• Please refer to the DNAC 1.3 Data Sheet

Single Appliance for Cisco DNAC (Automation + Assurance)

Cisco DNA Solution

Diagram: Cisco DNA Center sits above the Cisco Enterprise Portfolio.

• Cisco DNA Center: Simple Workflows (DESIGN, PROVISION, POLICY, ASSURANCE)

• Platform components: Identity Services Engine, Network Control Platform, Network Data Platform

• Cisco Enterprise Portfolio: Routers, Switches, Wireless Controllers, Wireless APs

Cisco DNA Center

Cisco SD-Access – Key Components

• Cisco DNA Center Appliance: Design | Policy | Provision | Assurance (API)

• ISE Appliance: Identity & Policy, Cisco Identity Services Engine (API)

• Automation: NCP, Network Control Platform (API)

• Assurance: NDP, Network Data Platform (API)

• Protocols shown toward the Fabric: NETCONF, SNMP, SSH, AAA, RADIUS, EAPoL, HTTPS, NetFlow, Syslogs

• Fabric devices: Cisco Switches | Cisco Routers | Cisco Wireless

Cisco DNA Center and ISE integration

Identity and Policy Automation

• Cisco Identity Services Engine: Authentication and Authorisation Policies, Groups and Policies

• Integration interfaces: pxGrid, REST APIs

• Cisco DNA Center: Fabric Management, Policy Authoring, Workflows

• Campus Fabric

Cisco DNA Center and ISE integration

ISE node roles in SD-Access

Diagram labels:

• Cisco DNA Center: Admin/Operate, REST, pxGrid

• ISE nodes: ISE-PAN (Config Sync), ISE-PSN, ISE-PXG, ISE-MNT

• Endpoints and infrastructure: Users, Devices, Things, Network Devices

• Authorisation Policy: If Employee then VN/SGT-10; If Contractor then VN/SGT-20; If Things then VN/SGT-30

• pxGrid Exchange Topics: TrustSecMetaData (SGT Name: Employee = SGT-10; SGT Name: Contractor = SGT-20; ...), SessionDirectory*

• Context example: Bob with Win10 on CorpSSID

Cisco DNA Center Solution Basic Pre-requisite

Hardware

• Supported Cisco DNA Center Appliance (DN2-HW-APL / DN2-HW-APL-L / DN2-HW-APL-XL)

• Supported switch/router/WLC/AP models

Software

• Check each platform for the recommended IOS-XE software version

• Check licenses for the planned platforms

• Recommended ISE and Cisco DNA Center software

Underlay/Overlay

• IP address plan for Cisco DNA Center and ISE

• Check that the underlay network/routing is configured correctly and devices are reachable

• Reachability to the Internet – direct or proxy connection

• Access to an NTP server

• Make sure the Cisco DNA Center appliance clock is close to real time (check using CIMC)

Cisco DNA Center Troubleshooting

Cisco DNA Center

SD-Access 4 Step Workflow

Design

• Global Settings

• Site Profiles

• DDI, SWIM, PNP

• User Access

Provision / Policy

• Fabric Domains

• Virtual Networks

• CP, Border, Edge

• FEW, OTT WLAN

• ISE, AAA, Radius

• Endpoint Groups

• Group Policies

• External Connect

Assurance

• Health Dashboard

• 360° Views

• FD, Node, Client

• Path Traces

Planning & Preparation

Installation & Integration

Cisco DNA Center – Maglev Logical Architecture

Diagram layers, top to bottom:

• App Stack 1, App Stack 2, ... App Stack N

• APIs, SDK & Packaging Standards

• Maglev Services

• IaaS (Baremetal, ESXi, AWS, OpenStack etc)

Cisco SD-Access (Fusion) Package Services

| Service | Description |
| --- | --- |
| apic-em-event-service | Trap events and host discovery; these rely on SNMP traps, which are handled here |
| apic-em-inventory-manager-service | Provides communication service between inventory and discovery service |
| apic-em-jboss-ejbca | Certificate authority; enables controller authority on the DNAC |
| apic-em-network-programmer-service | Configures devices. Critical service to check during provisioning |
| apic-em-pki-broker-service | PKI Certificate authority |
| command-runner-service | Responsible for Command Runner related tasks |
| distributed-cache-service | Infrastructure |
| dna-common-service | DNAC-ISE integration task |
| dna-maps-service | Maps related services |
| dna-wireless-service | Wireless |
| identity-manager-pxgrid-service | DNAC-ISE integration task |
| ipam-service | IP Address manager |
| network-orchestration-service | Critical during provisioning orchestration |
| orchestration-engine-service | Orchestration service |
| pnp-service | PNP tasks |
| policy-analysis-service | Policy related |
| policy-manager-service | Policy related |
| postgres | Core database management system |
| rbac-broker-service | RBAC |
| sensor-manager | Sensor related |
| site-profile-service | Site profiling |
| spf-device-manager-service | Core service during provisioning phase |
| spf-service-manager-service | Core service during provisioning phase |
| swim-service | SWIM |

Assurance Services

| Service | Description |
| --- | --- |
| cassandra | Database |
| collector-agent | Collector agents |
| collector-manager | Collector manager |
| elasticsearch | Search |
| ise | ISE data collector |
| kafka | Communication service |
| mibs-container | SNMP MIBs |
| netflow-go | NetFlow data collector |
| pipelineadmin, pipelineruntime-jobmgr, pipelineruntime-taskmgr, pipelineruntime-taskmgr-data, pipelineruntime-taskmgr-timeseries | Various pipelines and task manager |
| snmp | SNMP collector |
| syslog | Syslog collector |
| trap | Trap collector |

Base Services

| Service | Description |
| --- | --- |
| cassandra | Core database |
| catalogserver | Local catalog server for updates |
| elasticsearch | Elasticsearch container |
| glusterfs-server | Core filesystem |
| identitymgmt | Identity management container |
| influxdb | Database |
| kibana-logging | Kibana logging collector |
| kong | Infrastructure service |
| maglevserver | Infrastructure |
| mongodb | Database |
| rabbitmq | Communication service |
| workflow-server, workflow-ui, workflow-worker | Various update workflow tasks |

Most Commonly Used Maglev CLI

$ maglev

Usage: maglev [OPTIONS] COMMAND [ARGS]...

Tool to manage a Maglev deployment

Options:

--version

Show the version and exit.

-d, --debug

Enable debug logging

-c, --context TEXT Override default CLI context

--help

Show this message and exit.

Commands:

backup

Cluster backup operations

catalog

Catalog Server-related management operations

completion

Install shell completion

context

Command line context-related operations

cronjob

Cluster cronjob operations

job

Cluster job operations

login

Log into the specified CLUSTER

logout

Log out of the cluster

maintenance

Cluster maintenance mode operations

managed_service

Managed-Service related runtime operations

node

Node management operations

package

Package-related runtime operations

restore

Cluster restore operations

service

Service-related runtime operations

system

System-related management operations

system_update_addon

System update related runtime operations

system_update_package System update related runtime operations


$ magctl

Usage: magctl [OPTIONS] COMMAND [ARGS]...

Tool to manage a Maglev deployment

Options:

--version Show the version and exit.

-d, --debug Enable debug logging

--help Show this message and exit.

Commands:

api API related operations

appstack AppStack related operations

completion Install shell completion

disk Disk related operations

glusterfs GlusterFS related operations

iam Identitymgmt related operations

job Job related operations

logs Log related operations

maglev Maglev related commands

node Node related operations

service Service related operations

tenant Tenant related operations

token Token related operations

user User related operations

workflow Workflow related operations

Troubleshooting topics: Bring-up Issues, Collecting Logs, Integrating ISE, Discovery Issues, Provisioning Issues

Cisco DNA Center Services are not coming up

Have patience: bring-up takes 120 to 180 minutes

• Check network connectivity

• Check NTP/DNS server reachability

• Check whether any specific service is not coming up

• During install or update, use the GUI. Avoid console login and do not run any system related commands

Install Failure

If you are unable to run maglev/magctl commands after install:

• Check the RAID configuration and install error messages.

• USB 3.0 is recommended for installation.

• Avoid KVM, USB 2.0, or NFS mount methods for installation.

• Use a Windows 10 or Linux/Mac based system to burn the ISO image.

• Check for Error or Exception in the following log files:

• /var/log/syslog

• /var/log/maglev_config_wizard.log
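The log check above can be sketched in Python. This is a minimal illustration, assuming the two log paths from the slide are readable by the current user; the helper name `find_problem_lines` and the choice to print only the last 20 matches are illustrative, not part of any Cisco tooling.

```python
import re
from pathlib import Path

# Log files named on the slide.
LOG_FILES = ["/var/log/syslog", "/var/log/maglev_config_wizard.log"]

# Case-insensitive match for the two keywords the slide asks for.
PATTERN = re.compile(r"error|exception", re.IGNORECASE)


def find_problem_lines(text):
    """Return (line_number, line) pairs that mention Error or Exception."""
    return [
        (number, line)
        for number, line in enumerate(text.splitlines(), start=1)
        if PATTERN.search(line)
    ]


if __name__ == "__main__":
    for name in LOG_FILES:
        try:
            # errors="replace" keeps the scan going if the log has odd bytes.
            text = Path(name).read_text(errors="replace")
        except OSError as exc:
            print(f"{name}: cannot read ({exc})")
            continue
        hits = find_problem_lines(text)
        print(f"{name}: {len(hits)} matching line(s)")
        for number, line in hits[-20:]:  # show only the most recent matches
            print(f"  {number}: {line}")
```

Matching is deliberately broad, so expect some noise; the point is to shortlist lines worth reading, not to classify them.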


Package Status – GUI / CLI

How to Check Package Status from GUI

System Settings > App Management: Packages & Updates

System Settings > Software Updates > Installed Apps

How to Check Package Status from CLI

maglev package status

Check for any status other than “DEPLOYED”

Check for “Failed”
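As a rough sketch, the same check can be applied to saved `maglev package status` output. The column layout assumed here (a `NAME` header, a dashed rule, then one package per line with the status in the trailing columns) is inferred from the sample later in this deck; the version `1.0.0` in the sample text and the function name are hypothetical.

```python
def unhealthy_packages(status_output):
    """Return names of packages whose line is not DEPLOYED or mentions a failure."""
    problems = []
    for line in status_output.splitlines():
        tokens = line.split()
        # Skip blanks, the context banner, the column header and the dashed rule.
        # (Assumption: the banner line starts with "maglev-", as in the deck's sample.)
        if not tokens or tokens[0] == "NAME" or line.startswith(("-", "maglev-")):
            continue
        rest = [token.upper() for token in tokens[1:]]
        failed = any("FAIL" in token or "ERROR" in token for token in rest)
        if failed or "DEPLOYED" not in rest:
            problems.append(tokens[0])
    return problems


# Hypothetical sample in the assumed layout.
SAMPLE = """\
NAME                DEPLOYED     AVAILABLE  STATUS
--------------------------------------------------
assurance           1.0.0        -          DEPLOYED
network-visibility  2.1.1.60067  -          UPGRADE_ERROR - TimeoutError
"""

if __name__ == "__main__":
    print(unhealthy_packages(SAMPLE))
```

If the real output uses different columns, only the skip rules and the status test need adjusting.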


Verify H/W profile complies with requirements

Verify sufficient disk and memory available

Verify that the number of CPUs is at least 88 and the memory is at least 256 GB.
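A minimal sketch of this hardware check on a Linux host, using only the Python standard library. The 5% `slack` is an assumption added here, because the kernel typically reports slightly less memory than is physically installed; the thresholds are the ones stated above.

```python
import os

MIN_CPUS = 88          # minimum CPU count from the slide
MIN_MEMORY_GB = 256    # minimum memory from the slide


def read_memory_gb(meminfo_text):
    """Return MemTotal from /proc/meminfo-style text, converted from kB to GB (2**20 kB)."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1]) / (1024 * 1024)
    raise ValueError("MemTotal not found")


def meets_requirements(cpu_count, memory_gb, slack=0.05):
    """Compare against the minimums; `slack` is an assumed allowance for
    memory reserved by firmware and the kernel."""
    return cpu_count >= MIN_CPUS and memory_gb >= MIN_MEMORY_GB * (1 - slack)


if __name__ == "__main__":
    cpus = os.cpu_count() or 0
    try:
        with open("/proc/meminfo") as handle:
            memory = read_memory_gb(handle.read())
    except (OSError, ValueError):
        memory = 0.0
    verdict = "OK" if meets_requirements(cpus, memory) else "below minimum"
    print(f"CPUs={cpus} memory={memory:.1f} GB -> {verdict}")
```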


Check Health Status of Cisco DNAC Cluster

Should show Result as SUCCESS

Troubleshooting – Kubernetes & Docker

Docker health check

The "Active" line should show as "running".
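To illustrate, the "Active" line can be checked programmatically. This sketch assumes the health check is the output of `systemctl status docker` (the slide shows only a screenshot), saved or captured as text; the function name is illustrative.

```python
import re

# Matches e.g. "   Active: active (running) since ..."
ACTIVE_LINE = re.compile(r"^\s*Active:\s*(\S+)\s*\((\w+)\)", re.MULTILINE)


def docker_is_running(status_text):
    """Return True only when the Active line reads 'active (running)'."""
    match = ACTIVE_LINE.search(status_text)
    if match is None:
        return False
    return match.group(1) == "active" and match.group(2) == "running"


if __name__ == "__main__":
    import subprocess

    try:
        result = subprocess.run(
            ["systemctl", "status", "docker"],
            capture_output=True, text=True, check=False,
        )
        print("docker running:", docker_is_running(result.stdout))
    except OSError as exc:  # systemctl not available on this host
        print("could not run systemctl:", exc)
```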


Package Update

Package Update Troubleshooting

2-Step Update Process – System Update and Application Package update

Fail to download packages:

• Check connectivity to the Internet

• Internet connectivity is mandatory while the update is downloading

Fail to install packages:

• Internet connectivity is mandatory during install

• Check whether any failure is displayed in the GUI

• Check the status from the CLI for any error

Package Update Ordering

https://www.cisco.com/c/en/us/td/docs/cloud-systems-management/network-automation-and-management/dna-center/1-1/rn_release_1_1_2_2/b_dnac_release_notes_1_1_2_2.html#task_nj3_nww_qcb

Proxy Setting check

If a proxy server is configured, check the proxy server setting

Check the Parent Catalog server and Repository

System Update Check

maglev system_updater update_info

Failure Output

• Displays the current and new version

• Failure state and sub-state

• Progress percentage

To Check the live log during update

$ magctl service logs -rf system-updater | lql

$ magctl service logs -rf workflow-worker | lql


Package Mapping – GUI vs. CLI

| CLI Package Name | GUI Display Name |
| --- | --- |
| application-policy | Automation - Application Policy |
| assurance | Assurance - Base |
| automation-core | NCP - Services |
| base-provisioning-core | Automation - Base |
| command-runner | Command Runner |
| core-network-visibility | Network Controller Platform |
| device-onboarding | Automation - Device Onboarding |
| image-management | Automation - Image Management |
| iwan | IWAN |
| migration-support | (no GUI display name listed) |
| ncp-system | NCP - Base |
| ndp | Network Data Platform |
| ndp-base-analytics | Network Data Platform - Base Analytics |
| ndp-platform | Network Data Platform - Core |
| ndp-ui | Network Data Platform - Manager |
| network-visibility | Network Controller Platform |
| path-trace | Assurance - Path Trace |
| sd-access | Automation - SD Access |
| system | System Or Infrastructure |
| waas | Automation - WAAS |
| sensor-automation | Automation - Sensor |
| sensor-automation | Assurance - Sensor |

Package Deploy Failure and Recovery

$ maglev package status

maglev-1 [main - https://kong-frontend.maglev-system.svc.cluster.local:443]

NAME DEPLOYED AVAILABLE STATUS

----------------------------------------------------------------------------------

network-visibility 2.1.1.60067 UPGRADE_ERROR - maglev_workflow.workflow.exceptions.TaskCallableExecutionError: (1516326117.1073043, 1516327147.0490577, 'TimeoutError', 'Timeout of 1020 seconds has expired while watching for k8s changes for apic-em-jboss-ejbca ')

Find the package name:

$ maglev catalog package display network-visibility | grep fq

fqn: network-visibility:2.1.1.60067

Delete the package:

$ maglev catalog package delete network-visibility:2.1.1.60067

Ok

Undeploying the failed package: do not use this, as it can be destructive and the database can be lost.

$ maglev package undeploy network-visibility

Undeploying packages 'network-visibility:2.1.1.60067'

Package will start getting undeployed momentarily

Pull the package again:

$ maglev catalog package pull network-visibility:2.1.1.60067

Package pull initiated

Use "maglev catalog package status network-visibility:2.1.1.60067" to monitor the progress of the operation

Once the above steps are completed, go to the GUI, download the package again and install it, or use “maglev package deploy <>”.

High Availability

High Availability(HA) Overview

• Minimizes downtime for the Cisco DNAC cluster

• An HA cluster consists of multiple nodes that communicate and share/replicate information to ensure high system availability, reliability, and scalability

• Cisco DNAC HA is limited to 3 nodes (active-active)

• Can handle a maximum of one node failure

• Components scaled as part of HA:

• Managed Service Addons: RabbitMQ, Kong, Cassandra DB, Mongo DB, Postgres DB, GlusterFS, Elasticsearch, Minio

• Maglev Core Service Addons: Maglevserver, Identity Management, agent, fluent-es, keepalived, platform-ui

• K8S Components: kube-apiserver, etcd, calico, kube-controller-manager, kube-dns, kube-proxy, kube-scheduler

Creation of 3 node cluster

Diagram: a single node (Cisco DNAC1 on Switch 1) expands to three nodes (Cisco DNAC1, DNAC2 and DNAC3 on Switch 1, Switch 2 and Switch 3).

Cluster nodes MUST be on the same version

To configure node 2, point it to node 1 as the first step of the software install

Repeat the same for node 3 after node 2 completes installation

Redistributing services through System 360 enables the cluster to act as a single unit

Install Initial Cisco DNA Center Node

Components on the initial node: Kong, Fusion Services, NDP Services, CatalogServer, MaglevServer, WorkflowServer, GlusterFS, RabbitMQ, DockerRegistry, WorkflowWorker, MongoDB, Cassandra, Kubernetes, Docker

Install Additional Cisco DNA Center Nodes

Diagram: Kong, GlusterFS, RabbitMQ, MongoDB, Cassandra, Kubernetes and Docker appear on all three nodes. Fusion Services, NDP Services, CatalogServer, MaglevServer, WorkflowServer, DockerRegistry and WorkflowWorker appear once.

Distribute Services

Diagram: after distribution, Kong, Fusion Services, NDP Services, GlusterFS, RabbitMQ, MongoDB, Cassandra, Kubernetes and Docker appear on all three nodes. MaglevServer, CatalogServer, WorkflowServer, DockerRegistry and WorkflowWorker each appear once, spread across the nodes.

Bringing up Cisco DNA Center 3 node cluster

• Always ensure the seed Cisco DNA Center node is up and running before adding other cluster nodes

• After forming the cluster, make sure that all the nodes are in the Ready state when you run the ‘kubectl get nodes’ command from the CLI.

• Enable HA only after confirming that the 3-node cluster is successfully formed and operational with the full stack deployed.

• DO NOT try to add two nodes in parallel; add nodes sequentially.
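The Ready-state check in the list above could be scripted along these lines. This is a sketch that parses the standard `kubectl get nodes` table (NAME, STATUS, ROLES, AGE, VERSION); the node addresses and version string in the sample are made up for illustration.

```python
def nodes_not_ready(kubectl_output):
    """Return the names of nodes whose STATUS column does not include 'Ready'."""
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    not_ready = []
    for line in lines[1:]:  # first non-blank line is the column header
        fields = line.split()
        if len(fields) < 2:
            continue
        name, status = fields[0], fields[1]
        # STATUS can be a comma-separated list, e.g. "Ready,SchedulingDisabled".
        if "Ready" not in status.split(","):
            not_ready.append(name)
    return not_ready


# Hypothetical output for a 3-node cluster with one node down.
SAMPLE = """\
NAME         STATUS     ROLES    AGE   VERSION
192.0.2.11   Ready      master   10d   v1.7.5
192.0.2.12   Ready      master   10d   v1.7.5
192.0.2.13   NotReady   master   10d   v1.7.5
"""

if __name__ == "__main__":
    print(nodes_not_ready(SAMPLE))
```

An empty result means every listed node reported Ready; it does not by itself confirm that all three nodes are present, so the node count is worth checking too.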


Cisco DNA Center settings after second node install

Enable Service Distribution does not show up after the second node is installed, because HA requires 3 nodes.

Cisco DNA Center settings after third node install

Enable Service Distribution shows up after the third node is installed, because HA requires 3 nodes.

Enabling HA using CLI

$ maglev service nodescale refresh

Scheduled update of service scale (task_id=afeca07f-5a87-410a-be48-3eef76b08db6)


Enable Service Distribution

Service Distribution happened


Check services on each node


Automation Behavior on node failure

Diagram: Cisco DNAC1, DNAC2 and DNAC3 attached to Switch 1, Switch 2 and Switch 3.

When a node fails, automation services are automatically redistributed

Redistribution currently takes 25 minutes (unplanned)

Restoring a failed node (RMA) also requires redistribution of services (25 minutes; can be a planned outage)

Link failure: no significant delay in redistribution of services when the link comes back up

Failure of two nodes will bring the cluster down
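The one-failure limit follows from simple majority arithmetic. The sketch below assumes a majority-quorum model (as used by clustered stores such as etcd); the deck states the outcome but not the formula, so treat the formula as an assumption.

```python
def quorum_size(nodes):
    """Smallest number of nodes that forms a strict majority."""
    return nodes // 2 + 1


def tolerated_failures(nodes):
    """How many nodes can fail while a majority is still available."""
    return nodes - quorum_size(nodes)


if __name__ == "__main__":
    for count in (1, 2, 3):
        print(f"{count} node(s): quorum={quorum_size(count)}, "
              f"tolerated failures={tolerated_failures(count)}")
```

For 3 nodes the quorum is 2, so one failure is survivable and a second is not. A 2-node cluster tolerates no failures under this model, which is consistent with HA requiring 3 nodes.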


UI Notification on HA failure

Persistent notification of failure:

1. Node

2. Services

3. Interfaces


Node Failure UI Notifications

Node down notification

The 2nd and 3rd nodes will form a quorum

The UI won’t be available until services are distributed


Service Failure UI Notifications

Nodes are up but one or more services are down

Service Failure UI Notifications

Some services are pending and not ready

Cluster Link Failure Notifications

Some services show status as NodeLost

Node down

Cluster Link Came Up

The banner changes from Node Lost to Services Temporarily Disrupted. When all the services are up, this banner should also go away.

Node down

Cluster Link Came Up

Node Up

Fully restored, so the banner is gone

Network Link failure

No Impact but No Notifications


Remove a node from cluster (RMA use case)

• If one of the nodes in the cluster is in a failed state and has not recovered after several hours, remove it from the cluster by running: $ maglev node remove <node_ip>

Gracefully removing a node

• If for any reason the customer wants to remove one of the active nodes in the cluster, use the following steps:

• Move the services on the given host to another node by issuing: $ maglev node drain <node_ip>

• Once all services are up and running, power down the node and remove it from the cluster: $ maglev node remove <node_ip>

HA Commands Cheat Sheet

HA commands:

• maglev service nodescale status

• maglev service nodescale refresh

• maglev service nodescale progress

• maglev service nodescale history

• maglev node remove <node_ip>

• maglev node allow <node_ip>

• maglev cluster node display (check that all 3 nodes are available)

Troubleshooting topics: Bring-up Issues, Collecting Logs, Integrating ISE, Discovery Issues, Provisioning Issues

UI Debugging from Browser

Use Browser Debugging mode to find out API or GUI related Errors

For Chrome/Firefox Browsers

• Enable debugging mode by going to Menu > More Tools > Developer mode

• Select Console from the top menu

• For clarity, clear the existing log

• Run the task from the Cisco DNA Center GUI

• Capture a screenshot of the console to identify the API/error details

UI Debugging from Browser

Firebug is another tool for debugging.

• Install the Firebug add-on in the Firefox browser

• Enable the Firebug add-on

• Launch Firebug and go to Console

• Run the task; Firebug captures detailed API information and the related operation

The console shows the POST/GET operation and API name, and the task success/fail code.

Live Log - Service

Log Files:

• To follow/tail the current log of any service:

magctl service logs -r -f <service-name>

Example: magctl service logs -r -f spf-service-manager-service

Note: remove -f to display the current logs on the terminal

• To get the complete logs of any service:

• Get the container_id using:

docker ps | grep <service-name> | grep -v pause | cut -d' ' -f1

• Get logs using: docker logs <container_id>
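For readers less familiar with the shell pipeline above, here is a Python sketch of the same filtering logic applied to captured `docker ps` text: keep lines that mention the service, drop the Kubernetes pause containers, and take the first space-delimited field. The container IDs and image names in the sample are invented.

```python
def container_ids(docker_ps_output, service_name):
    """Mimic: docker ps | grep <service> | grep -v pause | cut -d' ' -f1"""
    ids = []
    for line in docker_ps_output.splitlines():
        if service_name in line and "pause" not in line:
            ids.append(line.split(" ")[0])
    return ids


# Hypothetical `docker ps` lines: one service container and its pause container.
SAMPLE = (
    'abc123def456   example/spf-service-manager-service:1   "/bin/sh"   Up 2 hours   '
    "k8s_spf-service-manager-service_demo\n"
    'fedcba654321   example/pause-amd64:3.0   "/pause"   Up 2 hours   '
    "k8s_POD_spf-service-manager-service_demo\n"
)

if __name__ == "__main__":
    for container_id in container_ids(SAMPLE, "spf-service-manager-service"):
        # The ID would then be passed to: docker logs <container_id>
        print(container_id)
```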


Check Service Log in GUI

Click on Kibana Icon

Click on Service Counts


Monitoring / Log Explorer / Workflow

System Settings > System 360: Tools

https://<dnacenter_ip>/dna/systemSettings

Cisco DNA Center’s Monitoring Dashboard


Monitoring Cisco DNA Center Memory, CPU & Bandwidth

Check Service Log using Log Explorer

Log Messages


Changing Cisco DNA Center Logging Levels

How to Change the Logging Level

• Navigate to the Settings page: System Settings > Settings > Debugging Levels

• Select the service of interest

• Select the new logging level

• Set how long Cisco DNA Center should keep this logging level change

• Intervals: 15 / 30 / 60 minutes or forever

Required information to report an issue

• RCA file

• SSH to the server as the maglev user: ssh -p 2222 maglev@<dnacenter_ip_address>

• Run: rca

• Copy the generated file from an external server using scp/sftp: scp -P 2222 maglev@<dnacenter_ip_address>:<rca_filename> .

Important: for a 3-node cluster, the RCA must be captured from each of the 3 nodes individually

• Error screenshot from the UI

Sample rca session:

[Sun Feb 11 14:26:00 UTC] maglev@10.90.14.247 (maglev-master-1)

$ rca

===============================================================

Verifying ssh/sudo access

===============================================================

[sudo] password for maglev: <passwd>

Done

mkdir: created directory '/data/rca'

changed ownership of '/data/rca' from root:root to maglev:maglev

===============================================================

Verifying administration access

===============================================================

[administration] password for 'admin': <passwd>

User 'admin' logged into 'kong-frontend.maglevsystem.svc.cluster.local' successfully

===============================================================

RCA package created on Sun Feb 18 14:26:14 UTC 2018

===============================================================

2018-02-18 14:26:14 | INFO | Generating log for 'date'...

tar: Removing leading `/' from member names

/etc/cron.d/

/etc/cron.d/.placeholder

/etc/cron.d/clean-elasticsearch-indexes

/etc/cron.d/clean-journal-files

• API debug log captured using browser debugging mode
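Because the RCA has to be collected from every node of a 3-node cluster, it can help to generate the copy commands up front. The sketch below only builds `scp` argument lists; the node addresses and RCA file paths are hypothetical placeholders, and the per-node file name prefix is an illustrative choice to stop bundles overwriting each other.

```python
import posixpath

SSH_PORT = "2222"   # port used for SSH/SCP to the appliance, as on the slide
USER = "maglev"


def rca_copy_commands(rca_files, destination="."):
    """Build one scp command per node.

    rca_files maps node IP -> path of the RCA bundle generated on that node.
    """
    commands = []
    for ip, remote_path in rca_files.items():
        local_name = f"{ip}-{posixpath.basename(remote_path)}"
        commands.append([
            "scp", "-P", SSH_PORT,
            f"{USER}@{ip}:{remote_path}",
            posixpath.join(destination, local_name),
        ])
    return commands


if __name__ == "__main__":
    # Hypothetical nodes and bundle names.
    example = {
        "192.0.2.11": "/data/rca/node1-example.tar.gz",
        "192.0.2.12": "/data/rca/node2-example.tar.gz",
        "192.0.2.13": "/data/rca/node3-example.tar.gz",
    }
    for command in rca_copy_commands(example):
        print(" ".join(command))
```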


Troubleshooting topics: Bring-up Issues, Collecting Logs, Integrating ISE, Discovery Issues, Provisioning Issues

Cisco DNA Center – ISE Integration

Administration > pxGrid Services

• The pxGrid service should be enabled on ISE.

• SSH needs to be enabled on ISE.

• Superadmin credentials are used to establish trust for SSH/ERS communication. By default, the ISE super admin has ERS credentials.

• ISE CLI and UI user accounts must use the same username and password.

• The ISE admin certificate must contain the ISE IP or FQDN in either the subject name or SAN.

• The DNAC system certificate must contain the DNAC IP or FQDN in either the subject name or SAN.

• The pxGrid node should be reachable from DNAC on the eth0 IP of ISE.

• Bypass the proxy for DNAC on the ISE server.

Cisco DNA Center – ISE Integration Workflow

After trust establishment, check the subscriber status in ISE pxGrid: Offline, Pending approval, Online

Trust Status on Cisco DNA Center

Identity source status: (Under System360)

• AAA server Status (Settings – Auth/Policy Server)

• INIT

• INPROGRESS

• Available/Unavailable (PxGRID state)

• ACTIVE

• TRUSTED/UNTRUSTED

• FAILED

• RBAC_FAILURE


Troubleshooting

ISE - Cisco DNA Center Integration

Checking pxGrid service status

• Login to ISE server using SSH

• Run “show application status ise” to check for the services running.

Increasing log level to debug

• Go to Administration > Logging > Debug Log Config

• Select the ISE server and click Edit

• Find pxGrid, ERS, and Infrastructure Service in the list.

Click the Log Level button and select the Debug level

Troubleshooting

ISE - Cisco DNA Center Integration

On Cisco DNA Center check

On ISE check logs

•

network-design-service

•

•

identity-manager-pxGrid-service

•

•

Cisco DNA Center common-service

•

ERS

pxGrid

Infrastructure Service logs

Example Error:

2017-08-01 05:24:36,794 | ERROR | pool-1-thread-1

| identity-manager-pxGrid-service |

c.c.e.i.u.pxGridConfigurationUtils | An error occurred while retrieving pxGrid endpoint

certificate. Request: PUT https://bldg24-ise1.cisco.com:9060/ers/config/endpointcert/

certRequest HTTP/1.1, Response: HttpResponseProxy{HTTP/1.1 500 Internal Server Error

[Cache-Control: no-cache, no-store, must-revalidate, Expires: Thu, 01 Jan 1970 00:00:00 GMT,

Set-Cookie: JSESSIONIDSSO=9698CC02E88780EC4415A6DE80C37355; Path=/; Secure; HttpOnly, Set-Cookie: APPSESSIONID=03A609099AD604812984C6DF27CF7A19; Path=/ers; Secure; HttpOnly, Pragma:

no-cache, Date: Tue, 01 Aug 2017 05:24:36 GMT, Content-Type: application/json;charset=utf-8,

Content-Length: 421, Connection: close, Server: ] ResponseEntityProxy{[Content-Type:

application/json;charset=utf-8,Content-Length: 421,Chunked: false]}} |
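When scanning service logs for errors like the one above, it can help to split each entry into its pipe-delimited fields. The following is a minimal sketch, assuming the field order seen in the example (timestamp, level, thread, service, logger class, message); the `LogRecord` and `parse_log_line` names are illustrative and not part of any Cisco tooling.

```python
from typing import NamedTuple, Optional


class LogRecord(NamedTuple):
    timestamp: str
    level: str
    thread: str
    service: str
    logger: str
    message: str


def parse_log_line(line: str) -> Optional[LogRecord]:
    """Split one pipe-delimited log entry into its six leading fields."""
    # maxsplit=5 keeps any '|' characters inside the message text intact.
    parts = [p.strip() for p in line.split("|", 5)]
    if len(parts) < 6:
        return None  # not a complete log entry
    return LogRecord(*parts)


# Shortened copy of the example error shown on the slide.
sample = (
    "2017-08-01 05:24:36,794 | ERROR | pool-1-thread-1 | "
    "identity-manager-pxGrid-service | c.c.e.i.u.pxGridConfigurationUtils | "
    "An error occurred while retrieving pxGrid endpoint certificate."
)

record = parse_log_line(sample)
if record is not None and record.level == "ERROR":
    print(record.service, "->", record.message)
```

Filtering on `record.level` and `record.service` narrows a large log file down to the integration-related errors.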


Troubleshooting

ISE - Cisco DNA Center Integration

How To Capture ISE Log bundle:

• Go to Operations > Download Logs

• Select ISE server

• Select any additional log to be captured

• Select Encryption and create bundle

• Download bundle


Troubleshooting roadmap: Bring-up Issues, Collecting Logs, Integrating ISE, Discovery Issues, Provisioning Issues

Step 1

Verify all devices are green after Discovery


Step 2

Check that all devices are in the Managed state


New Configuration after Discovery

FE250#show archive config differences flash:underlay system:running-config

!Contextual Config Diffs:

+device-tracking tracking

+device-tracking policy IPDT_MAX_10

+limit address-count 10

+no protocol udp

+tracking enable

+crypto pki trustpoint TP-self-signed-1978819505

+enrollment selfsigned

+subject-name cn=IOS-Self-Signed-Certificate-1978819505

+revocation-check none

+rsakeypair TP-self-signed-1978819505

+crypto pki trustpoint 128.107.88.241

+enrollment mode ra

+enrollment terminal

+usage ssl-client

New RSA keys are created.

Secure connection to Cisco DNA Center using the interface 1 IP address as the certificate name.

See Notes for Complete Configurations


Troubleshooting – Discovery/Inventory

• Check for IP address reachability from DNAC to the device.

• Check the username/password configuration in Settings.

• Check whether the telnet/SSH option is properly selected.

  • Check using manual telnet/SSH to the device from DNAC or any other client.

• Check that the SNMP community configuration matches on the switch and DNAC.

• Discovery View will provide additional information.

Services Involved on DNA:

apic-em-inventory-manager-service
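The reachability and telnet/SSH checks above can also be scripted from any client that has Python. This is a minimal sketch using only the standard library: it tests whether a TCP connection to port 22 (SSH) or 23 (Telnet) can be opened. It does not log in or validate credentials, and the function names are illustrative.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_management_ports(host: str, timeout: float = 3.0) -> dict:
    """Probe the SSH (22) and Telnet (23) ports of one device."""
    return {
        "ssh": tcp_port_open(host, 22, timeout),
        "telnet": tcp_port_open(host, 23, timeout),
    }
```

Calling `check_management_ports` with a device management IP returns a dictionary such as `{"ssh": True, "telnet": False}`. A `False` for the protocol selected in the discovery job points to a reachability or transport-configuration problem rather than a credential problem.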



Verifying Config Push

• While Cisco DNA Center is evolving to use NETCONF and YANG APIs, at this time it pushes most configuration over SSH.

• The exact configuration commands can be seen with “show history all”:

FE2050#show history all

CMD: 'enable' 13:29:55 UTC Tue Jan 16 2018

CMD: 'terminal length 0' 13:29:55 UTC Tue Jan 16 2018

CMD: 'terminal width 0' 13:29:55 UTC Tue Jan 16 2018

CMD: 'show running-config' 13:29:55 UTC Tue Jan 16 2018

CMD: 'config t' 13:29:56 UTC Tue Jan 16 2018

CMD: 'no ip domain-lookup' 13:29:56 UTC Tue Jan 16 2018

CMD: 'no ip access-list extended DNA Center_ACL_WEBAUTH_REDIRECT' 13:29:57 UTC Tue Jan 16 2018

*Jan 16 13:29:57.023: %DMI-5-SYNC_NEEDED: Switch 1 R0/0: syncfd: Configuration change requiring

running configuration sync detected - 'no ip access-list extended DNA

Center_ACL_WEBAUTH_REDIRECT'. The running configuration will be synchronized to the NETCONF

running data store.

CMD: 'ip tacacs source-interface Loopback0' 13:29:57 UTC Tue Jan 16 2018

CMD: 'ip radius source-interface Loopback0' 13:29:57 UTC Tue Jan 16 2018

CMD: 'cts role-based enforcement vlan-list 1022' 13:29:57 UTC Tue Jan 16 2018
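To review what was pushed, the `CMD:` entries can be pulled out of saved `show history all` output. The sketch below assumes the line format shown above (`CMD: '<command>' <time> <zone> <date>`); the function names are illustrative.

```python
import re
from typing import List, Tuple

# Matches lines such as: CMD: 'config t' 13:29:56 UTC Tue Jan 16 2018
CMD_RE = re.compile(r"^CMD: '(?P<cmd>.*)' (?P<time>\d{2}:\d{2}:\d{2} .+)$")


def extract_commands(output: str) -> List[Tuple[str, str]]:
    """Return (timestamp, command) pairs; syslog lines are skipped."""
    commands = []
    for line in output.splitlines():
        match = CMD_RE.match(line.strip())
        if match:
            commands.append((match.group("time"), match.group("cmd")))
    return commands


def config_commands(output: str) -> List[str]:
    """Return only the commands issued after the first 'config t'."""
    cmds = [cmd for _, cmd in extract_commands(output)]
    if "config t" not in cmds:
        return []
    return cmds[cmds.index("config t") + 1:]


sample = """CMD: 'enable' 13:29:55 UTC Tue Jan 16 2018
CMD: 'config t' 13:29:56 UTC Tue Jan 16 2018
CMD: 'no ip domain-lookup' 13:29:56 UTC Tue Jan 16 2018
*Jan 16 13:29:57.023: %DMI-5-SYNC_NEEDED: Switch 1 R0/0: syncfd: Configuration change detected
CMD: 'ip radius source-interface Loopback0' 13:29:57 UTC Tue Jan 16 2018
"""

for command in config_commands(sample):
    print(command)
```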


AAA Configuration

The AAA server (ISE) is now used to authenticate device logins:

FE2050#show running-config | sec aaa

aaa new-model

aaa group server radius dnac-group

server name dnac-radius_172.26.204.121

ip radius source-interface Loopback0

aaa authentication login default group dnac-group local

aaa authentication enable default enable

aaa authentication dot1x default group dnac-group

aaa authorization exec default group dnac-group local

aaa authorization network default group dnac-group

aaa authorization network dnac-cts-list group dnac-group

aaa accounting dot1x default start-stop group dnac-group

aaa server radius dynamic-author

client 172.26.204.121 server-key cisco123

FE2050#show aaa servers

RADIUS: id 1, priority 1, host 172.26.204.121, auth-port 1812, acct-port 1813

State: current UP, duration 546s, previous duration 0s

Dead: total time 0s, count 0

Platform State from SMD: current UNKNOWN, duration 546s, previous duration 0s

SMD Platform Dead: total time 0s, count 0

AAA server up and running from IOSd
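The state check on `show aaa servers` can be automated when many fabric devices need to be verified. This is a minimal sketch, assuming the output layout shown above, where a `RADIUS:` line is followed by a `State: current ...` line; the function name is illustrative.

```python
import re

SERVER_RE = re.compile(
    r"^RADIUS: id (\d+), priority (\d+), host (\S+), "
    r"auth-port (\d+), acct-port (\d+)"
)
# Anchored at line start so 'Platform State from SMD' lines do not match.
STATE_RE = re.compile(r"^State: current (\w+), duration (\d+)s")


def radius_server_states(output: str) -> dict:
    """Map each RADIUS host to the state on the first 'State:' line after it."""
    states = {}
    host = None
    for raw in output.splitlines():
        line = raw.strip()
        match = SERVER_RE.match(line)
        if match:
            host = match.group(3)
            continue
        match = STATE_RE.match(line)
        if match and host is not None:
            states[host] = match.group(1)
            host = None
    return states


sample = """RADIUS: id 1, priority 1, host 172.26.204.121, auth-port 1812, acct-port 1813
State: current UP, duration 546s, previous duration 0s
Dead: total time 0s, count 0
Platform State from SMD: current UNKNOWN, duration 546s, previous duration 0s
SMD Platform Dead: total time 0s, count 0
"""

print(radius_server_states(sample))
```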

Global Cisco TrustSec (CTS) Configurations

TrustSec authorization should use the cts-list AAA servers:

cts authorization list cts-list

For SGT policy enforcement, if the switch has to enforce access control:

cts role-based enforcement

cts role-based enforcement vlan-list <VLANs>

Global AAA configuration for all IOS switches:

aaa new-model

!

aaa authentication dot1x default group ise-group

aaa authorization network default group ise-group

aaa authorization network cts-list group ise-group

aaa accounting dot1x default start-stop group ise-group

!

aaa server radius dynamic-author

client <ISE_IP> server-key cisco

!

radius server ise

address ipv4 <ISE_IP> auth-port 1812 acct-port 1813

pac key <PAC_Password>

!

aaa group server radius ise-group

server name ise

!


ISE and ‘Network Device’ Transact Securely Using PAC keys

Switch authenticates with Cisco ISE for Secure EAP FAST Channel

Switch# cts credential id <device_id> password <cts_password>

RADIUS PAC* keys are pushed by ISE over the RADIUS EAP-FAST channel. The switch (IOS) uses the PAC to talk to ISE securely and to retrieve the Environmental Data and the TrustSec Egress Policy.

bldg24-edge-3650-1#show cts pacs

AID: 5079AA777CC3205E5D951003981CBF95

PAC-Info:

PAC-type = Cisco Trustsec

AID: 5079AA777CC3205E5D951003981CBF95

I-ID: FDO1947Q1F1

A-ID-Info: Identity Services Engine

Credential Lifetime: 15:30:58 PST Mon May 28 2018

PAC-Opaque:

000200B800010211000400105079AA777CC3205E5D951003981CBF950006009C0003

0100C25BAEC6DC8B90034431914E48C335DC000000135A95A90900093A8087E1E4

7B8EA12456005D6E38C41F69C19F86B884B370177982EB65469F1E5F6B2B6D96B7

1C99DA19B240FE080757F8F8BBD543AE830A5959EA4A999C310CE1FEC427213AA

552406796C8DDDA695DBCF08FB3473249DCC025598D27CD280E4D01E7877F14C6

F211CC3BAB5E3B836A6B42A9C5EE4E0E6F997549D10561

Refresh timer is set for 11w3d


Environmental Data

Switch# show cts environment-data
CTS Environment Data
====================
Current state = COMPLETE
Last status = Successful
Local Device SGT:
  SGT tag = 2-00:TrustSec_Infra_SGT
Server List Info:
Installed list: CTSServerList1-0001, 1 server(s):
 *Server: 10.1.1.222, port 1812, A-ID 3E465B9E3F4E012E6AD3159B403B5004
          Status = DEAD
          auto-test = TRUE, keywrap-enable = FALSE, idle-time = 60 mins, deadtime = 20 secs
Multicast Group SGT Table:
Security Group Name Table:
    0-00:Unknown
    2-00:TrustSec_Infra_SGT
    10-00:Employee_FullAccess
    20-00:Employee_BYOD
    30-00:Contractors
    100-00:PCI_Devices
    110-00:Web_Servers
    120-00:Mail_Servers
    255-00:Unregist_Dev_SGT
Environment Data Lifetime = 86400 secs
Last update time = 21:57:24 UTC Thu Feb 4 2016
Env-data expires in   0:23:58:00 (dd:hr:mm:sec)
Env-data refreshes in 0:23:58:00 (dd:hr:mm:sec)
Cache data applied           = NONE
State Machine is running


If CTS is not Configured, Verify the Device is a NAD


Configuration Issues

Configuration not pushed to the network device:

• Check the device state. The device should be Reachable and Managed.

• Otherwise, debug the inventory issue.


% 10.9.3.0 overlaps with Vlan12


Fix the configuration on the device

(config)#no vrf definition Campus

Navigate to Device inventory

Select the device and click “Resync”


Loopback 0

If you are using Automated Underlay, skip this setup. This is only required for Manual Underlay configuration.

interface Loopback0
 ip address <>
 ip router isis

Don’t forget to select the device and click “Resync”


SD-Access Fabric Provisioning

Fabric Edge Configuration

LISP configuration

VRF/VLAN configuration

SVI configuration

Interface configuration


SDA Provisioning – Workflow

Start Provisioning from UI

Services involved: NB API, SPF Service, Orchestration Engine, SPF Device, Messaging, Network Programmer

Workflow steps, in order:

• Pre-Process-Cfs-Step: Determine all the namespaces this config applies to

• Validate-Cfs-Step: Validate whether this config is consistent and conflict free

• Process-Cfs-Step: Persist the data and take a snapshot for all namespaces in a single transaction

• Target-Resolver-Cfs-Step: Determine the list of devices this config should go to

• Translate-Cfs-Step: Per device, convert the config to the config that needs to go to the device

• Deploy-Rfs-Task: Convert the config to a Bulk Provisioning Message to send it to NP

• Rfs-Status-UpdaterTask: Update the device config status based on the response from NP

• Rfs-Merge-Step: Update the task with an aggregate merged message

• Complete


SDA Provisioning – Task Status Check

Click on Show Task Status

Click on View Target Device List

Check the status

Click on See Details


VLAN and VRF Configuration

FE2050#show run | beg vrf

vrf definition BruEsc

rd 1:4099

!

address-family ipv4

route-target export 1:4099

route-target import 1:4099

exit-address-family

vrf definition DEFAULT_VN

rd 1:4099

!

address-family ipv4

route-target export 1:4099

route-target import 1:4099

exit-address-family

One VRF per VN

FE2050#show run | sec vlan

ip dhcp snooping vlan 1021-1024

vlan 1021

name 192_168_1_0-BruEsc

vlan 1022

name 192_168_100_0-BruEsc

vlan 1023

name 192_168_200_0-DEFAULT_VN

cts role-based enforcement vlan-list 1021-1023

One VLAN per IP Address Pool

DHCP Snooping and CTS are enabled


Closed Authentication Configuration

IBNS 2.0 Template

Interface Configuration

template DefaultWiredDot1xClosedAuth

dot1x pae authenticator

switchport access vlan 2047

switchport mode access

switchport voice vlan 4000

mab

access-session closed

access-session port-control auto

authentication periodic

authentication timer reauthenticate server

service-policy type control subscriber PMAP_ D

FE2051#show run int gi 1/0/1

switchport mode access

device-tracking attach-policy IPDT_MAX_10

authentication timer reauthenticate server

dot1x timeout tx-period 7

dot1x max-reauth-req 3

source template DefaultWiredDot1xClosedAuth

spanning-tree portfast


Troubleshooting – Device / Fabric Provision Issues

Services involved:

• orchestration-engine-service

• spf-service-manager-service

• spf-device-manager-service

• apic-em-network-programmer-service


Cisco SD-Access Fabric Troubleshooting

DHCP

DHCP Packet Flow in Campus Fabric

Diagram labels: B, FE1, BDR, DHCP (flow steps 1 to 5)

1. The DHCP client generates a DHCP request and broadcasts it on the network.

2. The FE uses DHCP Snooping to add its RLOC as the remote ID in Option 82 and sets giaddr to the Anycast SVI. Using DHCP Relay, the request is forwarded to the Border.

3. The DHCP server replies with an offer to the Anycast SVI.

4. The Border uses the remote ID in Option 82 to forward the packet.

5. The FE installs the DHCP binding and forwards the reply to the client.

DHCP Binding on Fabric Edge

FE#show ip dhcp snooping binding
MacAddress          IpAddress   Lease(sec)  Type           VLAN  Interface
------------------  ----------  ----------  -------------  ----  ------------------------
00:13:a9:1f:b2:b0   10.1.2.99   691197      dhcp-snooping  1021  TenGigabitEthernet1/0/23

FE#debug ip dhcp snooping ?
  H.H.H       DHCP packet MAC address
  agent       DHCP Snooping agent
  event       DHCP Snooping event
  packet      DHCP Snooping packet
  redundancy  DHCP Snooping redundancy

debug ip dhcp snooping
  Shows detail on DHCP snooping and the insertion of the Option 82 remote circuit.

debug ip dhcp server packet
  Shows detail on the relay function: insertion of giaddr and relaying to the server.

debug dhcp detail
  Adds additional detail with regard to LISP in the DHCP debugs.


Received DHCP Discover

015016: *Feb 26 00:07:35.296: DHCP_SNOOPING: received new DHCP packet from input interface

(GigabitEthernet4/0/3)

015017: *Feb 26 00:07:35.296: DHCP_SNOOPING: process new DHCP packet, message type: DHCPDISCOVER,

input interface: Gi4/0/3, MAC da: ffff.ffff.ffff, MAC sa: 00ea.bd9b.2db8, IP da: 255.255.255.255, IP

sa: 0.0.0.0, DHCP ciaddr: 0.0.0.0, DHCP yiaddr: 0.0.0.0, DHCP siaddr: 0.0.0.0, DHCP giaddr: 0.0.0.0,

DHCP chaddr: 00ea.bd9b.2db8, efp_id: 374734848, vlan_id: 1022

Adding Relay Information Option

015018: *Feb 26 00:07:35.296: DHCP_SNOOPING: add relay information option.

015019: *Feb 26 00:07:35.296: DHCP_SNOOPING: Encoding opt82 CID in vlan-mod-port format

015020: *Feb 26 00:07:35.296: :VLAN case : VLAN ID 1022

015021: *Feb 26 00:07:35.296: VRF id is valid

015022: *Feb 26 00:07:35.296: LISP ID is valid, encoding RID in srloc format

015023: *Feb 26 00:07:35.296: DHCP_SNOOPING: binary dump of relay info option, length: 22 data:

0x52 0x14 0x1 0x6 0x0 0x4 0x3 0xFE 0x4 0x3 0x2 0xA 0x3 0x8 0x0 0x10 0x3 0x1 0xC0 0xA8 0x3 0x62

015024: *Feb 26 00:07:35.296: DHCP_SNOOPING: bridge packet get invalid mat entry: FFFF.FFFF.FFFF,

packet is flooded to ingress VLAN: (1022)

015025: *Feb 26 00:07:35.296: DHCP_SNOOPING: bridge packet send packet to cpu port: Vlan1022.

Option 82

0x3 0xFE = 3FE = VLAN ID 1022

0x4 = Module 4, 0x3 = Port 3

0x0 0x10 0x3 = 001003 = LISP Instance-id 4099

0xC0 0xA8 0x3 0x62 = RLOC IP 192.168.3.98
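The byte arithmetic behind these Option 82 values can be reproduced with a short script. The sketch below decodes the binary dump from the debug output above. The sub-option layout it assumes (circuit ID carrying VLAN, module, and port; remote ID carrying a 3-byte LISP instance ID, a 1-byte address family, and a 4-byte RLOC) is inferred from the slide annotations and this one sample, so treat it as illustrative rather than as a complete decoder.

```python
import ipaddress


def parse_hex_dump(dump: str) -> bytes:
    """Convert a dump such as '0x52 0x14 0x1' into raw bytes."""
    return bytes(int(token, 16) for token in dump.split())


def decode_option82(raw: bytes) -> dict:
    """Decode circuit ID and remote ID using the layout assumed above."""
    if raw[0] != 0x52:
        raise ValueError("not a relay information option (82)")
    body = raw[2:2 + raw[1]]  # raw[1] is the option length
    result = {}
    i = 0
    while i + 2 <= len(body):
        sub_type, sub_len = body[i], body[i + 1]
        value = body[i + 2:i + 2 + sub_len]
        if sub_type == 1:
            # Circuit ID: <type> <len> <vlan: 2 bytes> <module> <port>
            result["vlan"] = int.from_bytes(value[2:4], "big")
            result["module"] = value[4]
            result["port"] = value[5]
        elif sub_type == 2:
            # Remote ID: <type> <len> <instance-id: 3 bytes> <afi> <rloc: 4 bytes>
            result["lisp_instance_id"] = int.from_bytes(value[2:5], "big")
            result["rloc"] = str(ipaddress.IPv4Address(value[6:10]))
        i += 2 + sub_len
    return result


dump = ("0x52 0x14 0x1 0x6 0x0 0x4 0x3 0xFE 0x4 0x3 0x2 0xA 0x3 0x8 "
        "0x0 0x10 0x3 0x1 0xC0 0xA8 0x3 0x62")
print(decode_option82(parse_hex_dump(dump)))
```

For the dump above, the decoded values match the annotations: VLAN 1022, module 4, port 3, LISP instance ID 4099, and RLOC 192.168.3.98.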

Continue with Option 82

015026: *Feb 26 00:07:35.297: DHCPD: Reload workspace interface Vlan1022 tableid 2.
015027: *Feb 26 00:07:35.297: DHCPD: tableid for 1.1.2.1 on Vlan1022 is 2
015028: *Feb 26 00:07:35.297: DHCPD: client's VPN is Campus.
015029: *Feb 26 00:07:35.297: DHCPD: No option 125
015030: *Feb 26 00:07:35.297: DHCPD: Option 125 not present in the msg.
015031: *Feb 26 00:07:35.297: DHCPD: Option 125 not present in the msg.
015032: *Feb 26 00:07:35.297: DHCPD: Sending notification of DISCOVER:
015033: *Feb 26 00:07:35.297: DHCPD: htype 1 chaddr 00ea.bd9b.2db8
015034: *Feb 26 00:07:35.297: DHCPD: circuit id 000403fe0403
015035: *Feb 26 00:07:35.297: DHCPD: table id 2 = vrf Campus
015036: *Feb 26 00:07:35.297: DHCPD: interface = Vlan1022
015037: *Feb 26 00:07:35.297: DHCPD: class id 4d53465420352e30

Circuit ID

0x3 0xFE = 3FE = VLAN ID 1022

0x4 = Module 4, 0x3 = Port 3

Sending Discover to DHCP server

015040: *Feb 26 00:07:35.297: DHCPD: Looking up binding using address 1.1.2.1
015041: *Feb 26 00:07:35.297: DHCPD: setting giaddr to 1.1.2.1.
015042: *Feb 26 00:07:35.297: DHCPD: BOOTREQUEST from 0100.eabd.9b2d.b8 forwarded to 192.168.12.240.
015043: *Feb 26 00:07:35.297: DHCPD: BOOTREQUEST from 0100.eabd.9b2d.b8 forwarded to 192.168.12.241.

1.1.2.1 is the Anycast Gateway IP address.


Forwarding ACK

015089: *Feb 26 00:07:35.302: DHCPD: Reload workspace interface LISP0.4099 tableid 2.

015090: *Feb 26 00:07:35.302: DHCPD: tableid for 1.1.7.4 on LISP0.4099 is 2

015091: *Feb 26 00:07:35.302: DHCPD: client's VPN is .

015092: *Feb 26 00:07:35.302: DHCPD: No option 125

015093: *Feb 26 00:07:35.302: DHCPD: forwarding BOOTREPLY to client 00ea.bd9b.2db8.

015094: *Feb 26 00:07:35.302: DHCPD: Forwarding reply on numbered intf

015095: *Feb 26 00:07:35.302: DHCPD: Option 125 not present in the msg.

015096: *Feb 26 00:07:35.302: DHCPD: Clearing unwanted ARP entries for multiple helpers

015097: *Feb 26 00:07:35.303: DHCPD: src nbma addr as zero

015098: *Feb 26 00:07:35.303: DHCPD: creating ARP entry (1.1.2.13, 00ea.bd9b.2db8, vrf Campus).

015099: *Feb 26 00:07:35.303: DHCPD: egress Interfce Vlan1022

015100: *Feb 26 00:07:35.303: DHCPD: unicasting BOOTREPLY to client 00ea.bd9b.2db8 (1.1.2.13).

015101: *Feb 26 00:07:35.303: DHCP_SNOOPING: received new DHCP packet from input interface (Vlan1022)

015102: *Feb 26 00:07:35.303: No rate limit check because pak is routed by this box

015103: *Feb 26 00:07:35.304: DHCP_SNOOPING: process new DHCP packet, message type: DHCPACK, input

interface: Vl1022, MAC da: 00ea.bd9b.2db8, MAC sa: 0000.0c9f.f45d, IP da: 1.1.2.13, IP sa: 1.1.2.1,

DHCP ciaddr: 0.0.0.0, DHCP yiaddr: 1.1.2.13, DHCP siaddr: 0.0.0.0, DHCP giaddr: 1.1.2.1, DHCP chaddr:

00ea.bd9b.2db8, efp_id: 374734848, vlan_id: 1022


Client Adding to Device Tracking

015104: *Feb 26 00:07:35.304: DHCP_SNOOPING: binary dump of option 82, length: 22 data:

0x52 0x14 0x1 0x6 0x0 0x4 0x3 0xFE 0x4 0x3 0x2 0xA 0x3 0x8 0x0 0x10 0x3 0x1 0xC0 0xA8 0x3 0x62

015105: *Feb 26 00:07:35.304: DHCP_SNOOPING: binary dump of extracted circuit id, length: 8 data:

0x1 0x6 0x0 0x4 0x3 0xFE 0x4 0x3

015106: *Feb 26 00:07:35.304: DHCP_SNOOPING: binary dump of extracted remote id, length: 12 data:

0x2 0xA 0x3 0x8 0x0 0x10 0x3 0x1 0xC0 0xA8 0x3 0x62

015107: *Feb 26 00:07:35.304: actual_fmt_cid OPT82_FMT_CID_VLAN_MOD_PORT_INTF global_opt82_fmt_rid

OPT82_FMT_RID_DEFAULT_GLOBAL global_opt82_fmt_cid OPT82_FMT_CID_DEFAULT_GLOBAL cid: sub_option_length 6

015108: *Feb 26 00:07:35.304: DHCP_SNOOPING: opt82 data indicates local packet

015109: *Feb 26 00:07:35.304: DHCP_SNOOPING: opt82 data indicates local packet

015117: *Feb 26 00:07:35.405: DHCP_SNOOPING: add binding on port GigabitEthernet4/0/3 ckt_id 0

GigabitEthernet4/0/3

015118: *Feb 26 00:07:35.405: DHCP_SNOOPING: added entry to table (index 1125)

015119: *Feb 26 00:07:35.405: DHCP_SNOOPING: dump binding entry: Mac=00:EA:BD:9B:2D:B8 Ip=1.1.2.13 Lease=21600

Type=dhcp-snooping Vlan=1022 If=GigabitEthernet4/0/3

015120: *Feb 26 00:07:35.406: No entry found for mac(00ea.bd9b.2db8) vlan(1022) GigabitEthernet4/0/3

015121: *Feb 26 00:07:35.406: host tracking not found for update add dynamic

(1.1.2.13, 0.0.0.0, 00ea.bd9b.2db8) vlan(1022)

Client Added to Device Tracking

015122: *Feb 26 00:07:35.406: DHCP_SNOOPING: remove relay information option.

015123: *Feb 26 00:07:35.406: platform lookup dest vlan for input_if: Vlan1022, is NOT tunnel, if_output: Vlan1022, if_output->vlan_id: 1022, pak->vlan_id: 1022

015124: *Feb 26 00:07:35.406: DHCP_SNOOPING: direct forward dhcp replyto output port: GigabitEthernet4/0/3.


Available APIs and DNA Platform Troubleshooting

What is an API (Application Programming Interface)?


What Are Representational State Transfer (RESTful) APIs?

Application A and Application B exchange requests and responses using the HTTP methods GET, POST, PUT, and DELETE.

The data format of the payload is JSON (JavaScript Object Notation):

{ "title": "A Wrinkle in Time", "author": "Madeleine L'Engle" }


Different Ways for Consuming API’s

• DNA Center Platform as a Service

• DNA Center API Tester

• Native RESTful clients such as RESTLET and Postman

• Native scripting in any programming language, such as Python, Java, or C


Method 1: DNA Center Platform as a Service

Enable the REST API bundle from DNA Center

Enable the REST API bundle to start making REST calls.


Access the API’s from the Developer Toolkit


List of available API’s


Discovery API Call from DNAC Platform

Get Discovery by Index Range

Make a REST call from the DNA Center GUI itself.

Discovery API Call from DNAC Platform (Cont)

{

"response": [

{

"name": "C9800-CL",

"discoveryType": "Range",

"ipAddressList": "10.122.145.235-10.122.145.235",

"deviceIds": "36f02621-5b65-4c15-8374-8f9e5b1e72ee",

"userNameList": "admin",

"passwordList": "NO!$DATA!$",

"ipFilterList": "",

"enablePasswordList": "NO!$DATA!$",

"snmpRoCommunity": "",

"protocolOrder": "ssh",

"discoveryCondition": "Complete",

"discoveryStatus": "Inactive",

"timeOut": 5,

"numDevices": 1,

"retryCount": 3,

"isAutoCdp": false,

"globalCredentialIdList": [

"c39a97e7-54c1-4a4a-a9d8-15d0ec142f30",

"47658146-ed68-4208-b208-bb01060236b2"

],

"preferredMgmtIPMethod": "None",

"netconfPort": "830",

"id": "133"

}

],

"version": "1.0"

}

You can also retrieve a discovery by its ID.
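When the response is saved from the API tool, a few lines of Python can reduce it to the fields that matter for troubleshooting. This is a minimal sketch: the field names come from the response shown above, the sample is a trimmed copy of it, and `summarize_discoveries` is an illustrative name.

```python
import json


def summarize_discoveries(body: str) -> list:
    """Reduce a discovery response to its key troubleshooting fields."""
    data = json.loads(body)
    rows = []
    for discovery in data.get("response", []):
        rows.append({
            "id": discovery.get("id"),
            "name": discovery.get("name"),
            "condition": discovery.get("discoveryCondition"),
            "status": discovery.get("discoveryStatus"),
            "devices": discovery.get("numDevices"),
        })
    return rows


# Trimmed copy of the response shown on the slide.
sample = """
{
  "response": [
    {
      "name": "C9800-CL",
      "discoveryType": "Range",
      "protocolOrder": "ssh",
      "discoveryCondition": "Complete",
      "discoveryStatus": "Inactive",
      "numDevices": 1,
      "id": "133"
    }
  ],
  "version": "1.0"
}
"""

for row in summarize_discoveries(sample):
    print(row)
```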


Method 2: Using DNA Center API Tester

Get Device Provisioning Config

Part 1- find the Device ID

DNA API Tester URL:

https://<Cisco DNA Center IP Address>/dna/apitester

Copy the Device ID to use in the next API call


Get Device Provisioning Config

Part 2- Find the Provisioning Config Status based on the Device ID and the flag IsLatest: true


Method 3: Using Native REST Tools like RESTLET

Authenticate by Generating a Token

https://developer.cisco.com/docs/dna-center/#!generating-and-using-an-authorization-token/generating-and-using-an-authorization-token

{

"Token": "eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJzdWIiOiI1YjhlZmE4OGZjNGE5YjAwODlkZmM3ZDIiLCJhdXRoU291cmNlIjoiaW50ZXJuYWwiLCJ0ZW5hbnROYW1lIjoiVE5UMCIsInJvbGVzIjpbIjViOGVmYTg2ZmM0YTliMDA4OWRmYzdkMSJdLCJ0ZW5hbnRJZCI6IjViOGVmYTg1ZmM0YTliMDA4OWRmYzdjZiIsImV4cCI6MTU1NzE3NDYwMywidXNlcm5hbWUiOiJhZG1pbiJ9.JlkLC2igDCdkqFEQ1wQjow4eaoYqi_ApfbEl8aMIhY"

}
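The returned token is a JSON Web Token, so its middle segment can be decoded to see the username and expiry, which helps when API calls start failing with authorization errors. The sketch below decodes the payload without verifying the signature, so it is suitable for inspection only. The demo token is built locally for illustration and is not the one from the slide.

```python
import base64
import json
import time


def jwt_claims(token: str) -> dict:
    """Decode the payload segment of a JWT (signature is NOT verified)."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


def is_expired(token: str, now=None) -> bool:
    """Compare the 'exp' claim (seconds since epoch) with the current time."""
    now = time.time() if now is None else now
    return jwt_claims(token)["exp"] <= now


def _segment(obj: dict) -> str:
    """Helper for the demo only: encode a dict as an unpadded JWT segment."""
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).decode().rstrip("=")


# Made-up token for demonstration; the signature part is a placeholder.
demo_token = ".".join([
    _segment({"typ": "JWT", "alg": "HS256"}),
    _segment({"username": "admin", "exp": 1557174603}),
    "signature-placeholder",
])

print(jwt_claims(demo_token)["username"], is_expired(demo_token))
```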


Getting All VLAN ID’s in the Fabric and Underlay

https://<DNAC IP/FQDN>/dna/intent/api/v1/topology/vlan/vlan-names
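For native scripting, the token request and the VLAN-names GET above can be prepared with the Python standard library. This is a sketch under stated assumptions: the token path `/dna/system/api/v1/auth/token` and the `X-Auth-Token` header follow the public Intent API documentation and may differ on older releases, and `dnac.example.com` is a placeholder host. The functions only build the requests; nothing is sent.

```python
import base64
import urllib.request

# Assumption: token endpoint path as documented for the Intent API.
TOKEN_PATH = "/dna/system/api/v1/auth/token"
# Path taken from the URL shown above.
VLAN_NAMES_PATH = "/dna/intent/api/v1/topology/vlan/vlan-names"


def build_token_request(host: str, username: str, password: str) -> urllib.request.Request:
    """Prepare the POST that exchanges Basic credentials for a token."""
    creds = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{host}{TOKEN_PATH}",
        data=b"",
        method="POST",
        headers={
            "Authorization": f"Basic {creds}",
            "Content-Type": "application/json",
        },
    )


def build_get_request(host: str, path: str, token: str) -> urllib.request.Request:
    """Prepare an authenticated GET that carries the token in X-Auth-Token."""
    return urllib.request.Request(
        f"https://{host}{path}",
        method="GET",
        headers={"X-Auth-Token": token, "Accept": "application/json"},
    )


token_req = build_token_request("dnac.example.com", "admin", "secret")
vlan_req = build_get_request("dnac.example.com", VLAN_NAMES_PATH, "token-from-first-call")
print(token_req.get_method(), token_req.full_url)
print(vlan_req.get_method(), vlan_req.full_url)
```

To actually send a request, pass it to `urllib.request.urlopen` together with an SSL context that trusts the DNA Center certificate, then read the `Token` field from the JSON response of the first call.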


Find the GET Request for pulling the Templates


Run the GET template API from the DNA Center

This is the URL that you’ll define in Postman to send the GET request.


Running a GET to Cisco DNA Center

Use “Inherit auth from parent” to send the actual GET request.

Every template has a templateId that you can later use to query one specific template.


Verify the template from Cisco DNA Center


Getting the details of a template using API GET

This is the templateId we found before.

The response gives us all the details of the template.


Creating a project using API

This is the URL to which you need to send your POST to create the project.


Creating a project using API

This is the model that Cisco DNA Center expects in the POST. If you check the model, you’ll see whether fields are mandatory or optional.

Creating a project using API

Now you can send your POST to the URL you found, using the model schema described in Cisco DNA Center.

If successful, you’ll see a taskId, a URL, and a version number.


Project created in Cisco DNA Center

The project is created.

You can check the template-programmer logs to see how it was created.


Defining and running the API POST for a template

Verify the URL to which you need to send your POST to create the template.


Defining and running the API POST for a template

Send the POST using the variables described in the method. Replace ${projectid} with the real project ID.

In this case I’m creating a template named “postman-created-template” that will be part of the project “postman-template-name”.


Verifying template under the defined project


How DNA Center Uses APIs Internally

Kong is the backend API server for DNA Center

Diagram: the Inventory, SPF Service, and Topology Service components connect through the Kong service.


Check the API’s currently used by individual services

$ magctl appstack status | grep kong

$ magctl api routes | grep pool


Using Chrome Developer Tools to Troubleshoot Issues

Launching Developer Tools on a Browser


Network API calls on Developer Tools


Checking a Specific API Call from Developer Tools


Saving the API requests for a particular session


Understanding Certificates and Common Issues Hit due to Improper Certificates

Operations on DNA Center That Make Use of Certificates

• Identity Services Engine (ISE) / IP Address Manager (IPAM) integration

• Software Image Management (SWIM) / Plug and Play (PnP) / LAN Automation

• Wireless LAN Controller for Assurance


Understanding the Key Fields of a Certificate

Issuer: who issued the certificate

Subject: to whom the certificate was issued

Subject Alternative Name (SAN): alternate identities for which the certificate is valid


Understanding the Chain of Trust in Certificates


Verifying Certificate from Cisco DNA Center GUI

Screenshot callouts: Step-1, Step-2, Step-3 (fields 1 to 4)

Make sure all interface IPs and the VIP are included in the SAN field of the DNAC certificate.


Checking Certificate from Cisco DNA Center CLI

$ echo | openssl s_client -showcerts -servername <Cisco DNA Center IP Address> -connect <Cisco DNA Center IP Address>:443 2>/dev/null | openssl x509 -inform pem -noout -text
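Once the certificate text has been captured with the command above, the SAN check can be scripted. This is a minimal sketch that reads the `X509v3 Subject Alternative Name` section of `openssl x509 -text` output and reports which required IP addresses are missing. The sample text uses made-up documentation addresses, and the function names are illustrative.

```python
import re


def san_entries(openssl_text: str) -> dict:
    """Extract DNS and IP entries from the SAN section of `openssl x509 -text`."""
    lines = openssl_text.splitlines()
    for i, line in enumerate(lines):
        if "X509v3 Subject Alternative Name" in line and i + 1 < len(lines):
            san_line = lines[i + 1]  # entries are printed on the following line
            return {
                "dns": re.findall(r"DNS:([^,\s]+)", san_line),
                "ip": re.findall(r"IP Address:([^,\s]+)", san_line),
            }
    return {"dns": [], "ip": []}


def missing_ips(openssl_text: str, required_ips: list) -> list:
    """Return the required IPs that are not present in the SAN."""
    present = set(san_entries(openssl_text)["ip"])
    return [ip for ip in required_ips if ip not in present]


# Made-up sample using documentation addresses, not real appliance output.
sample = """        X509v3 extensions:
            X509v3 Subject Alternative Name:
                DNS:dnac.example.com, IP Address:192.0.2.10, IP Address:192.0.2.11
"""

print(missing_ips(sample, ["192.0.2.10", "192.0.2.12"]))
```

Pass every interface IP and the VIP as `required_ips`; any address returned is missing from the certificate's SAN.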


How to Revert to Self Signed Certificate on DNA Center

Create the OpenSSL request configuration file:

cd /home/maglev
vi request.conf

[req]
distinguished_name = req_distinguished_name
x509_extensions = v3_req
prompt = no
[req_distinguished_name]
C = IN
ST = MH
L = Mumbai
O = CUSTOMER
OU = MyDivision
CN = DOMAIN
[v3_req]
basicConstraints = critical, CA:TRUE
keyUsage = keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names
[alt_names]
IP.1 = X.X.X.X
IP.2 = 172.20
<esc>
:wq

1. Generate the certificate:

sudo openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout key.pem -out cert.pem -config request.conf -extensions 'v3_req'

2. Verify the IPs in the certificate:

openssl x509 -inform pem -text -noout -in cert.pem

Download the cert.pem and key.pem files from DNA Center and upload them to DNA Center.
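Because a mistyped or truncated address in `[alt_names]` produces a certificate that fails later checks, the section can be generated and validated with a short script. This is a minimal sketch: `render_alt_names` is an illustrative helper, and the addresses in the usage example are documentation placeholders.

```python
import ipaddress


def render_alt_names(ip_addresses: list) -> str:
    """Render an OpenSSL [alt_names] section, validating each IP first."""
    lines = ["[alt_names]"]
    for index, ip in enumerate(ip_addresses, start=1):
        # Raises ValueError for malformed input such as '172.20'.
        ipaddress.ip_address(ip)
        lines.append(f"IP.{index} = {ip}")
    return "\n".join(lines) + "\n"


# Placeholder addresses; substitute every interface IP and the VIP.
print(render_alt_names(["192.0.2.10", "192.0.2.11"]))
```

The rendered text can be pasted over the `[alt_names]` section of the configuration file before running the `openssl req` command.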


Upload the Certificate on the DNA Center


Post Certificate Change Checks

• ISE and DNA Center integration

• WLC Assurance: manually install the new DNA Center certificate on the WLC


Pushing the New DNA Center Certificate on WLC

(Cisco Controller) >show network assurance summary

Server url............................. https://xx.xx.xx.xx
Wsa Service............................ Enabled
wsa Onchange Mode...................... Enabled
wsa Sync Interval...................... Fixed
wsa Subscription Topics................ all
NAC Data Publish Status:
    Last Error.......................... Wed Apr 25 07:54:01 2018 Peer certificate cannot be authenticated with given CA certificates, SSL certificate problem: unable to get local issuer certificate
    Last Success........................ None
    JWT Token Config.................... Not Available
    JWT Last Success.................... None
    JWT Last Failure.................... None
Sensor Backhaul settings:
    Ssid................................ Not Configured
    Authentication...................... Open
Sensor provisioning:
    Status.............................. Disabled
    Interface Name...................... None
    WLAN ID............................. None
    SSID................................ None

(Cisco Controller) >

• Log in to the DNA Center over SSH on port 2222

• Copy the token from the output of the command below:

$ cat .maglevconf

• Generate the .pem file. The file needs to be transferred to the WLC.

$ curl http://<DNAC IP address>/ca/pem > dna_cert.pem

• Configure the WLC

(Cisco Controller) >config network assurance url <DNAC IP address>

(Cisco Controller) >config network assurance id-token <the token generated in DNAC>

• Transfer the DNAC-generated .pem file to the WLC through FTP, TFTP, or SFTP. This can be done from the WLC GUI or CLI.

From the WLC GUI:

Commands > Download File >

File-Type: NA-Serv-CA Certificate
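
A minimal sketch of checking captured `show network assurance summary` output for the certificate error shown on this slide, which can help confirm whether the new certificate has taken effect on the WLC. The function names are hypothetical, and the sample is an excerpt of the slide's output with the wrapped "Last Error" line joined.

```python
import re


def parse_summary(output):
    """Map each dotted 'key.... value' line to a dict entry (first one wins)."""
    fields = {}
    for line in output.splitlines():
        match = re.match(r"^\s*(.+?)\.{3,}\s*(.*)$", line)
        if match:
            fields.setdefault(match.group(1).strip(), match.group(2).strip())
    return fields


def has_certificate_error(output):
    """True if the 'Last Error' field mentions a certificate problem."""
    return "certificate" in parse_summary(output).get("Last Error", "").lower()


# Excerpt of the output on the slide.
SAMPLE_SUMMARY = """\
Server url............................. https://xx.xx.xx.xx
Wsa Service............................ Enabled
NAC Data Publish Status:
    Last Error.......................... Wed Apr 25 07:54:01 2018 Peer certificate cannot be authenticated with given CA certificates, SSL certificate problem: unable to get local issuer certificate
    Last Success........................ None
"""

print(parse_summary(SAMPLE_SUMMARY)["Last Success"])
print(has_certificate_error(SAMPLE_SUMMARY))
```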

Validate DNA Center Networking

Check the IP addresses assigned to DNA Center and the virtual IP addresses

$ ip a | grep enp

$ etcdctl get /maglev/config/cluster/cluster_network
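
A minimal sketch of listing the addresses per interface so they can be compared with the cluster configuration. It assumes the one-line-per-address output of `ip -o -4 addr show` rather than `ip a | grep enp`. The function name, interface names, and addresses are illustrative placeholders.

```python
import ipaddress


def parse_ipv4_addresses(ip_output):
    """Map interface name to its IPv4 addresses from `ip -o -4 addr show`."""
    result = {}
    for line in ip_output.splitlines():
        parts = line.split()
        # Expected layout: "<index>: <ifname> inet <addr>/<prefix> ..."
        if len(parts) >= 4 and parts[2] == "inet":
            address = ipaddress.ip_interface(parts[3])
            result.setdefault(parts[1], []).append(address)
    return result


# Sample lines; trailing lifetime fields are omitted for brevity.
SAMPLE_IP_OUTPUT = """\
1: lo    inet 127.0.0.1/8 scope host lo
2: enp9s0    inet 192.0.2.10/24 brd 192.0.2.255 scope global enp9s0
2: enp9s0    inet 192.0.2.20/32 scope global enp9s0
3: enp10s0    inet 198.51.100.10/24 brd 198.51.100.255 scope global enp10s0
"""

for name, addresses in parse_ipv4_addresses(SAMPLE_IP_OUTPUT).items():
    if name.startswith("enp"):
        print(name, [str(a) for a in addresses])
```

In this sample the second address on `enp9s0` stands in for a virtual IP. On a real cluster, a virtual IP would be expected only on the node that currently holds it.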

Check the Intra-Cluster link details

$ etcdctl get /maglev/config/node-<DNAC IP address>/network | python -m json.tool

Validate High