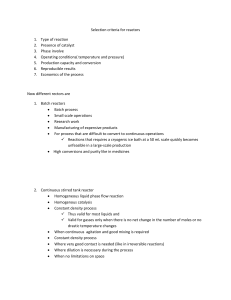

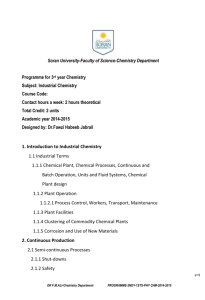

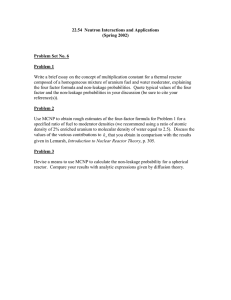

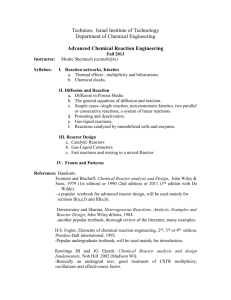

Chemical Projects Scale Up: How to go from Laboratory to Commercial Joe M. Bonem Table of Contents Cover Title page Copyright Introduction 1: Potential Problems With Scale-up Abstract Equipment Related Mode Related Theoretical Considerations Thermal Characteristics Safety Considerations Recycle Considerations Regulatory Requirements Project Focus Considerations Take Home Message 2: Equipment Design Considerations Abstract Introduction Reactor Scale-up Laboratory Batch Reactor to Larger Batch Reactor (Pilot Plant or Commercial) Laboratory Batch Reactor to a Commercial Continuous Stirred Tank Reactor Laboratory Batch Reactor to a Commercial Tubular Reactor Laboratory Tubular Reactor to a Commercial Tubular Reactor Reactor Scale-up Example Summary of Considerations for Reactor Scale-up Supplier’s Scale-ups Volatile Removal Scale-up Example Problem 2-2 Summary of Key Points for Scale-up of Volatiles Removal Equipment Other Scale-ups Using the Same Techniques Solid–Liquid Separation Example Problem 2-3 3: Developing Commercial Process Flow Sheets Abstract 4: Thermal Characteristics for Reactor Scale-up Abstract 5: Safety Considerations Abstract Use of New Chemicals Change in Delivery Mode for Chemicals Impact of a New Development on Shared Facilities Reaction By-Products That Might be Produced Storage and Shipping Considerations Step-Out Designs 6: Recycle Considerations Abstract Why Have a Recycle System in a Pilot Plant? 
Impurity Buildup Considerations 7: Supplier’s Equipment Scale-up Abstract Agitator and Mixer Scale-up Indirect Heated Dryer Summary of Working With Suppliers of Specialty Equipment 8: Sustainability Abstract Long-Term Changes in Existing Technology Long-Term Availability and Cost of Feeds and Catalysts Long-Term Availability and Cost of Utilities Disposition of Waste and By-Product Streams Operability and Maintainability of Process Facilities Summary 9: Project Evaluation Using CAPEX and OPEX Inputs Abstract Introduction CAPEX and OPEX Project Timing and Early Design Calculations 10: Emerging Technology Contingency (ETC) Abstract Application Contributors/Reviewers 11: Other Uses of Study Designs Abstract Identifying High Cost Parts of CAPEX and OPEX Identifying Areas Where Unusual or Special Equipment Is Required Identifying Areas for Future Development Work Identifying the Size-limiting Equipment Identifying Areas Where There Is a Need for Consultants Summary 12: Scaling Up to Larger Commercial Sizes Abstract Summary 13: Defining and Mitigating Risks Abstract Techniques for Definition and Mitigation of Risk Required Manpower Resources Reasonable Schedules Cooperative Team Spirit and Culture 14: Typical Cases Studies Abstract Successful Case Studies Unsuccessful Case Studies Production of an Inorganic Catalyst Development of a New Polymerization Platform Utilization of a New and Improved Catalyst in a Gas Phase Polymerization Process Inadequate Reactor Scale-up Utilization of a New and Improved Stabilizer The Less Than Perfect Selection of a Diluent Epilogue: Final Words and Acknowledgments Index Copyright Elsevier Radarweg 29, PO Box 211, 1000 AE Amsterdam, Netherlands The Boulevard, Langford Lane, Kidlington, Oxford OX5 1GB, United Kingdom 50 Hampshire Street, 5th Floor, Cambridge, MA 02139, United States Copyright © 2018 Elsevier Inc. All rights reserved. 
Library of Congress Cataloging-in-Publication Data
A catalog record for this book is available from the Library of Congress

British Library Cataloguing-in-Publication Data
A catalogue record for this book is available from the British Library

ISBN: 978-0-12-813610-2

For information on all Elsevier publications visit our website at https://www.elsevier.com/books-and-journals

Publisher: Susan Dennis
Acquisition Editor: Kostas KI Marinakis
Editorial Project Manager: Emily Thomson
Production Project Manager: Maria Bernard
Designer: Victoria Pearson
Typeset by Thomson Digital

Introduction

When one considers writing a book, there are two questions that must be answered. The first question is “Is there a need for such a book?” Tied up in this question is “Who is the audience and what is their experience level?” The second question is “What knowledge do I have that would allow me to write such a book?”

For this book, the answer to the first question is that one of the aims of industrial research, as well as academic research, is to convert a discovery into a viable, useful product. Essentially all research and/or development work is done with the goal of eventually commercializing the product or process so that it may:
• Create increased profits or turn a money-losing venture into one that makes a profit by a new process, process modification, or new catalyst.
• Create a new chemical or modification of an existing chemical that can increase company profitability.
• Create a pharmaceutical drug, which can extend life, cure diseases, or make life more comfortable.

With these goals in mind, the idea of a book began to evolve. The title is self-explanatory. It is basically a book that describes “how we get there from here.” The job of getting there is often assigned to a chemical engineer with in-depth knowledge of research or in-depth knowledge of plant operations and/or process design.
However, it is likely that they will have only limited experience in taking a discovery from the laboratory to commercial operation.

On the one hand, this is not a book for experts who are seeking a new and exotic way to scale up an unusual reactor design. The audience for this book is the “inexperienced engineer.” He could be an engineer who is not experienced in either research or plant engineering or who has minimal experience in both, such as a new college graduate. On the other hand, the book also provides insight of value to either a researcher with no plant engineering experience or a plant engineer/process designer with no research experience. The audience for the book might have a need to understand the basics in one of the following:
• Reactor scale-up using simplified first-order kinetics.
• The inherent risks in increasing the size of a reactor where an exothermic reaction is being conducted.
• How to organize for a successful scale-up.

In addition, the book provides techniques to understand how suppliers of specialized equipment, such as agitators, dryers, or centrifuges, scale up from their pilot plant data. Although one might think that the primary purpose of such a book is technical, it is important to know at an early stage of any development whether a successful scale-up will produce an economically successful project. The book includes chapters that discuss how a project can be evaluated at an early point in the research phase. The book also includes several examples of successful and unsuccessful scale-up projects. Ways to build successful development teams are also discussed.

The techniques and approaches discussed in this book were developed based on many years of experience in heavy industry, namely the oil refining and petrochemical business.
Although creating a new pharmaceutical drug was mentioned as a goal of going from the laboratory to commercial production, some might question whether these techniques apply to the pharmaceutical business. The development of a commercial process to produce a laboratory-based product is often done with a goal to reduce the cost of manufacturing the product. With the pressure on the pharmaceutical industry today to reduce the cost of their products, it would seem that cost reduction is a high priority. As a general rule, the cost of a product can be reduced when the mode of producing the chemical is changed from batch to continuous and when the scale of production is increased. That is what this book is about: Going from batch bench-scale operations to commercial size continuous operation. Terminology is always important when attempting to communicate concepts. As this book developed, I became aware that there were certain concepts that were second nature to me that I needed to explain. The terminology is described in the following paragraphs. Batch operations are those that are conducted by adding reactants to a vessel and keeping the vessel at a specific temperature for a period of time. During this period of time, there will be no flow of material into or out of the vessel. The vessel contents are then removed, and the reactants and products are separated. These batch operations are normally reactions or extractions, but they could be any unit operation. The art of cooking is filled with batch operations. On the other hand, continuous operations are those where reactants are always going into the vessel and material is always flowing out. Thus while the average particle in a continuous reactor may have the same residence time as that in a batch reactor, there will be some that have much shorter residence times and some with much longer residence times. Most operations in refineries and chemical plants are continuous. 
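
The spread in residence times described above can be illustrated with a short calculation. The sketch below is not taken from the book: it assumes an idealized, perfectly mixed continuous vessel, for which the textbook residence time distribution is exponential, E(t) = (1/τ)·exp(−t/τ). The function name `fraction_leaving_before` and the 60-minute mean residence time are hypothetical and chosen only for illustration.

```python
import math


def fraction_leaving_before(t, tau):
    """Fraction of material in an ideal, perfectly mixed continuous vessel
    whose residence time is shorter than t, given mean residence time tau.

    Assumes the exponential residence time distribution of an ideal
    stirred vessel; a real vessel may deviate from this.
    """
    if tau <= 0:
        raise ValueError("tau must be positive")
    if t < 0:
        raise ValueError("t must be non-negative")
    return 1.0 - math.exp(-t / tau)


tau = 60.0  # hypothetical mean residence time, minutes

# In an ideal batch vessel every particle stays exactly the batch time.
# In the ideal continuous vessel the residence times are spread out:
short = fraction_leaving_before(0.5 * tau, tau)         # leaves in under half the mean
long_ = 1.0 - fraction_leaving_before(2.0 * tau, tau)   # stays more than twice the mean

print(f"Leaves in less than 0.5*tau: {short:.1%}")
print(f"Stays longer than 2*tau:     {long_:.1%}")
```

Under these assumptions, roughly 39% of the material leaves in less than half the mean residence time and roughly 14% stays for more than twice the mean, even though the average is the same as the batch time.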
The distinction among bench scale, pilot plant, and commercial size is often based on production capacity. Bench scale has to do with operations (normally batch operations) that are conducted on such a small scale that they can be done on the laboratory counter (bench) or in a fume hood. They are generally operated only during normal office hours. Pilot plant operations are generally continuous and conducted in facilities that are separate from the laboratory. A typical pilot plant might be housed in a separate building with a DCS (Distributed Control System), be a scaled-down version of the commercial plant, and produce 50–500 lb/h of product. The pilot plant will often operate full time (24 h a day and 7 days a week). Some of the equipment might be batch. The commercial plant is very similar to the pilot plant except that essentially all facilities will be continuous.

In writing this book, it was necessary to define a starting point and an ending point for the project that is being commercialized. I have defined these points as described below:
• The starting point is the point where a single individual or small group working in a laboratory seems to have made a discovery significant enough that additional resources are assigned to the project. At this point in project development, the research is advanced to the point that a process designer is assigned at least part time to the project. The process designer will be a chemical engineer who has designed commercial plants and/or solved operating plant problems. This process designer will have two functions: he will help uncover other areas of research that must be completed to allow a firm process design to be developed; he will also be involved in developing a study design and cost estimate to allow an economic evaluation of the project. This aspect of project development is often overlooked.
However, the development of the study design at an early point allows for the modification of the design as the project progresses, as well as development of the economics along the way. It is conceivable that one of these economic evaluations might cause the project development to look unattractive. This will normally bring cries of foul: “We should have waited until we developed more data to make this evaluation.” However, it is likely that a good study design and economic evaluation will determine the feasibility of the project no matter how much additional data are required. Chapter 9 describes the study design in more detail.
• The true ending point is when the plant, plant modification, or new catalyst/chemical utilization has been successfully implemented. When one considers what is meant by a successful startup or successful utilization of a new chemical or catalyst, a definition of this state of success is required. The successful completion of a project (new plant or new catalyst) is defined as the point where the project is either meeting the economic projections or a clear path is available for reaching this point in three to six months.

This book deals with the initial steps of a new development through the initiation of detailed design. The last three steps in this implementation process (detailed engineering design, construction, and plant startup) have been described thoroughly by others and thus receive only minimal attention in this book. Therefore, I have defined the ending point for a project that consists of construction of a new plant or physical modification of an existing plant as the completion of the front-end engineering work. At this point, all the aspects of scale-up and chemical engineering design will be essentially finished and a detailed engineering design will have been initiated. For the case where a new catalyst/chemical is being utilized, the end point is when the chemical is successfully utilized in the commercial plant.
The second question deals with the question of having sufficient knowledge to write such a book. In answering this question, I reviewed my career. My career progressed through several stages of learning what chemical engineering was all about. These stages of learning, in addition to the academic world, consisted of time spent doing conceptual process design (including scale-up), process evaluation based on conceptual design, detailed process design, serving as an owner’s representative during detailed engineering, and facilities startup. Along the way, I made four observations: • I wanted to stay in technical work rather than progress as a manager. • The technical work was of interest if it was challenging and provided value added for my clients or the company that I was working for. • The commercialization of technology is potentially one of the more challenging aspects of chemical engineering. Unfortunately, it is an area that often is treated superficially. I learned first-hand the risk of failing to “peel back layers of the onion” when considering a new technology development. As layers of the onion are peeled back, more and more questions, which need to be considered, are uncovered. • I also learned that a pragmatic solution based on theoretically correct relationships is much better than a beautiful theoretically correct solution that does not work because of its complexity. Thus, there are some in the chemical engineering field, who will look at this book and consider it overly simplified. The primary defense against this charge is that the concepts given here have been proven to work. In addition to the practicality of the approaches described herein, they have the advantage of being easily understood. An engineer or chemist working in today’s industrial environment rarely has time to fully understand a complicated approach. This is particularly true when the marginal benefit of the complicated approach is minimal. 
Lord Ernest Rutherford once proclaimed “A theory that you cannot explain to a bartender is probably no good.” The goal of this book is to provide a package of information that allows one to visualize how a technology development can flow from the laboratory to commercial operation and to show how simple concepts can be used to scale up from laboratory bench scale or pilot plant data to a commercial plant. Fig. I.1 presents a very simplified sketch of the technology development process. It shows the development of the process or new raw material, along with product development, only as single lines. More detail for process development is given in Fig. 9.1. In addition, the product development is an important step and must be completed in synchronization with the process development.

Figure I.1 Parallel paths of commercial development. (Concept of need to combine process development with product development. May not apply to all development projects.)

The approach proposed in this book works whether the new technology is simple or complicated. The new technology may seem to be a simple replacement that does not require what might be considered an elaborate stepwise procedure. On first pass, it might seem that most of the details shown later in this book can be omitted. For example, if a new additive is proposed to replace one that is not performing well, it might seem that some or most of the proposed steps can be eliminated. As trivial as this may sound, all the steps suggested in this book will be applicable. In contrast, the new technology may well be the development of a new process to replace an out-of-date process or a new process to make a new product. The new process/new product is obviously a much more complicated-sounding development. However, the same process described in this book is applicable regardless of how complicated or simple the innovation is.
As a personal example, when I was assigned to a project to make a simple change in an additive, I found out the hard way that in the process industry no change is simple. This failure is described in Chapter 14.

It is my hope that this book will be helpful to new engineers working in all phases of research (chemicals, oil production, refining, pharmaceuticals, and so on) and to those responsible for commercialization of new technology. The book will also be of importance to the laboratory chemist who is developing a new technology or the experienced plant engineer assigned to the project who has no research experience.

Commercialization of new technology is often referred to as “scale-up.” While this term has the connotation of larger equipment, it can also be applied to new technology associated with a chemical or catalyst change. Regardless of a successful laboratory product or process demonstration, there will almost always be a step to expand the production of a product or use of a chemical/catalyst from laboratory size quantities to commercial sized quantities. This step is referred to as “scale-up.” In addition, this term is often used to address the additional steps that must be added to the laboratory demonstration to allow commercialization. For example, a process demonstrated in the laboratory using reagent grade feedstock will likely require a step in the scaled-up process to purify the commercially available feedstock. The term scale-up is well known in the chemical/refining, food, and pharmaceutical industries.

A second term that is often used is scale-up ratio. This is generally the ratio of commercial production to that in the pilot plant or laboratory. The scale-up ratio can also be associated with the ratio of equipment capacities or sizes, which may be slightly different from the production scale-up ratio. There may be multiple scale-ups involved in going from laboratory production and/or a laboratory process to a fully commercial venture.
These scale-ups can consist of:
• Scaling up from bench scale equipment to pilot plant equipment.
• Scaling up from bench scale equipment to commercial size equipment.
• Scaling up from pilot plant equipment to commercial size equipment.
• Scaling up from a small commercial size plant to a large commercial plant.
• Scaling up a single piece of equipment, such as an indirect heated dryer or extruder, from pilot plant size to commercial size equipment.
• Scaling up from bench scale studies to use a new catalyst in either a pilot plant or commercial plant.

Each of these scale-up steps requires an outlay of money and also involves some risk. When considering scale-up to commercial plants, the outlay of money and resources will be significant. In addition, the building of a commercial plant often involves a commitment to supplying product to a customer at a specified time. Thus, the importance of the scale-up becomes obvious.

When considering the current chemical engineering academic environment and the wide diversity of chemical engineering education and employment opportunities, the areas of process design, process scale-up, and commercial development are often overlooked. This leaves the chemical engineer assigned to a new technology development struggling with how to proceed on this important step. Because the transfer of technology from the laboratory to commercial operation often fails, the question “Why did it fail?” is worth asking. Given the importance of scale-up, the risks of an improper scale-up can be classified into the eight categories shown below:
• Equipment related: Scale-up will always involve larger equipment and possibly a change in equipment design, such as from a jacketed vessel to an exchanger pump-around loop.
• Mode related: A typical scale-up may well involve a change in equipment mode, such as a change from a batch reactor to a continuous reactor.
• Theoretical considerations: While theoretical conclusions and considerations have generally been the regime of the laboratory chemists, the scale-up should consider whether anything may have been missed or erroneous conclusions drawn. This will also be a good time to consider simplified kinetic relationships and their possible relationships to the scale-up. An example of a failed scale-up associated with inadequate consideration of theory is given in Chapter 14.
• Thermal characteristics: Scale-up of a reactor or other vessel where heat transfer is occurring will always require consideration due to the potentially reduced area-to-volume ratio as the reactor is scaled up.
• Safety considerations: While safety is always a consideration, it is more so as the equipment size is increased. Larger equipment will contain more potential energy and more toxic chemical that could be released in the event of a containment failure.
• Recycle considerations: Several factors require that recycle facilities be included in commercial facilities, where it may not be as important in laboratory or pilot plant facilities.
• Regulatory requirements: This area might include products, process, and by-product disposal.
• Project focus considerations: The project team must maintain focus on the development and the economic aspects of the process.

These failure modes are covered in more detail in various chapters in the book.

The available literature deals with scale-up from a “unit operations” standpoint and with the “approval process” as separate entities. Dealing with scale-up from a “unit operations” approach has to do with how data and equipment from a laboratory bench scale or a pilot plant can be used to design a larger facility. The “approval process” is the process by which permission and funding are granted for taking the next step in process development.
It usually occurs at a point where significantly increased manning and/or funds are required in order to proceed further. There are several books and articles that deal with unit operations scale-up, such as heat transfer scale-up, mixing scale-up, and reactor scale-up. Separately the literature also covers items, such as the stepwise approval process that would be required to continue with the development work, as additional research is required, a pilot plant is developed, and a commercial plant is proposed. This book covers both of these areas (unit operations scale-up and a stepwise approval process) in a fashion such that an engineer with no experience or with only experience in one domain, such as research or plant engineering can in a single source have an adequate description of what is required to allow the project to go from the laboratory to commercialization. This book also deals with three aspects of scale-up that are not discussed in depth in the literature. These are as follows: • The development of a “study design” from a limited amount of data. This study design will be adequate to develop both CAPEX (Capital Expenditures or Investment) and OPEX (Operating Expenses). The development of these data will allow understanding of the economics for a new research or development project. The purpose of the economic study is to determine whether the research should proceed to the next step. • Potential problems associated with scale-up. • Simplified approaches to allow development and/or confirmation of commercial equipment design based on bench scale data or pilot plant data. This is often the province of the equipment supplier’s engineering staff, but the owner’s engineer needs to understand how this scale-up is done. The chemical engineer is uniquely qualified to handle these three aspects of project development. 
The training and experience of chemical engineers will allow them to develop either a preliminary study design at an early stage of the development or a detailed process design at a later stage. The chemical engineer will understand the development of a preliminary cost estimate and the process for making a decision to proceed with the project based on that estimate. In addition, he will understand the risks involved in a scale-up. In developing this study design or the detailed process design, the chemical engineer has the tools to take data from the smaller scale equipment (bench or pilot plant) and use first principles of chemical engineering to develop the equipment sizing for the larger plant. While one approach to the sizing of equipment for the larger plant might be to just use the production ratio as a scale-up factor, this will often result in equipment for the larger plant that is oversized and more expensive than necessary. A more fundamental approach based on first principles is to scale up the equipment based on an empirical constant determined in the smaller equipment and the driving force determined for the larger plant. This approach is described in more detail in Chapter 2.

When considering scale-up from either bench scale to pilot plant/commercial plant or pilot plant to commercial plant, CAPEX (Capital Expenditure) becomes an important factor. This differs from bench scale work, where manpower cost and availability concerns play dominant roles. Probably the most important question to answer when considering a pilot plant is “How much of the proposed commercial plant should be included in the pilot plant?” Obviously, this question has to be answered for each specific commercialization project.
For example, if one considers the recycle fractionation tower in a process containing a reactor step, the answer depends on which by-products may be produced in the reactor. If the by-products are either nonexistent or well known, it will likely be possible to simulate the recycle fractionation tower with computer technology rather than building an expensive piece of equipment. Besides cost, each piece of equipment adds to the complexity of operating the pilot plant. It is also possible that the envisioned commercial plant will include an alumina or molecular sieve dryer in the recycle system. This might be included in the design to remove water from the system prior to startup. In addition, the presence of this dryer will likely serve to remove any polar impurities that are present. On the other hand, if the reaction by-products are unknown or if their production rate is unknown, it may be necessary to include the recycle fractionation tower in the pilot plant. Another driving force for the installation of the recycle fractionation tower might be the cost of the raw materials. By recycling expensive raw materials, the operating cost of a pilot plant can likely be reduced.

Similar logic might apply to specialized equipment used to transform the product into a form for shipping or subsequent processing. This equipment might consist of drying, solid–liquid separation, or packaging facilities. While a small-scale version of the anticipated equipment required for the commercial plant is usually available and could be installed in a pilot plant, careful consideration should be given to whether it is necessary to do so. For example, consider a sludge concentrating process that is being piloted. The envisioned process consists of a centrifuge followed by a direct heated dryer. The centrifuge has been pilot tested at the supplier’s pilot plant and found to have the capability of concentrating the sludge from 20 to 50 wt.% solids.
The dryer can be pilot tested either by using a pilot scale centrifuge followed by the pilot scale dryer or by making a blend of 50 wt.% sludge to serve as feed to the dryer. The latter will have the lower pilot plant CAPEX, with a slightly higher scale-up risk. It is important to make this evaluation as opposed to simply telling the designers that the pilot plant should look like the commercial plant.

Another type of decision that must be made prior to the start of the pilot unit design or bench scale development program is the scale-up ratio. This is usually the scale-up ratio for going from the pilot plant to a commercial plant, since bench scale results are almost always obtained on relatively small equipment. When considering the pilot plant size, the smaller unit might have a production rate that is 1/10, 1/100, or 1/1000 of that of the commercial plant. The scale-up ratio depends on several factors, and there is likely no general rule that can be used to set the size of the pilot plant. Some of the factors that can enter into a decision on size are as follows:
• Use of pilot plant data: A pilot plant that is used strictly for kinetic data can be very small and still give reliable data, and it may only require a reactor of the appropriate design (CSTR or plug flow reactor). For this case, a complete pilot plant will not be necessary. At the other extreme is a pilot plant whose primary function is to supply relatively large quantities of product for customer evaluation. In this case, a relatively large full product line will be necessary.
• Degree to which the process will be studied: Some pilot plants are built with the goal of understanding the process further. Studying and improving a process or developing a new process requires a relatively large pilot plant. For example, if reactor fouling is to be studied, the reactor in the pilot plant must be large enough that wall effects are not significant. It is often desirable to study mixing scale-up.
This can best be done in a large pilot plant.
• Raw material cost: A pilot plant that utilizes expensive raw materials and does not allow recovery of the raw materials should generally be small to minimize the raw materials cost.
• Detailed design considerations: Detailed design considerations, such as available space, utilization of existing vent or flare connections, and utilization of spare equipment, might also help define the size of the pilot plant.

Although the four factors listed are not an all-inclusive list, they illustrate the multifaceted task of defining a scale-up ratio for going from a pilot plant to a full-sized commercial unit.

The book does not deal with the commercial aspects of product development, that is, those aspects associated with product trials, business considerations, and regulatory clearances. The book is aimed at the chemist or chemical engineer who is working on the development of a new process/product and has very limited knowledge of how to determine whether the project should proceed, what should be considered in a stepwise scale-up procedure, and how some key pieces of equipment should be scaled up. While sequentially following the guidelines presented in this book does not guarantee a successful scale-up, skipping any of the steps will almost certainly lead to failure or a scale-up that is less successful than it could be.

I have included a brief summary at the end of each chapter. The goal of this summary is to enhance the learning from the chapter contents. It provides a quick look at what I think the reader should “take home” from the chapter. This summary for many of the chapters takes the form of “lessons learned.” We learn from both successes and failures. In fact, we often learn more from failures than successes. One other editorial matter is pronoun gender.
With the large number of highly qualified female engineers, operators, and mechanics, one is inclined to no longer use gender-specific terms, such as manpower, manning, men, or he. To avoid awkward expressions, such as peoplepower, I have chosen to use the male gender and ask the reader to recognize that I am referring to both male and female.

1 Potential Problems With Scale-up

Abstract

A crafty operations manager once told a conference room full of new engineers: “If over the years, we had saved one half the amount of money that the projects engineered by people like you claimed to save, the company would be a lot better off financially.” Although not all the projects that he had in mind were scaled up from the results obtained from the laboratory or pilot plant, several of them were. This cynical statement illustrates that not all scale-ups go as planned or forecasted. If this is true, then any book on scale-up must provide answers to the question of what happened or why the scale-up was not successful. There are several reasons why scale-ups fail.

Keywords: completeness of pilot plant; computational fluid dynamics; driving force; equipment; safety; thermal considerations; study designs

There are several reasons why scale-ups fail.
These reasons were mentioned in the introduction and can be summarized as follows:

• Equipment Related: Scale-up will always involve larger equipment and possibly a change in equipment design, such as a change from a jacketed vessel to a pump-around exchanger loop.
• Mode Related: A typical scale-up may well involve a change in equipment mode such as a change from a batch reactor to a continuous reactor.
• Theoretical Considerations: Although theoretical conclusions and considerations have generally been the realm of the laboratory chemists, the scale-up should consider whether anything may have been missed or erroneous conclusions drawn.
• Thermal Characteristics: Scale-up of a reactor or any other vessel where heat transfer is occurring will always require consideration due to the potentially reduced area-to-volume ratio.
• Safety Considerations: Although safety is always a consideration, it is more so as the equipment size is increased.
• Recycle Considerations: Several factors require that recycle facilities be included in commercial facilities, although they may not be as important in laboratory or pilot plant facilities.
• Regulatory Requirements: Regulation might include products, process, and by-product disposal.
• Project Focus Considerations: The project team must maintain focus on the development and the economic aspects of the process.

The next few paragraphs add detail to these eight general causes of scale-up failure.

Equipment Related

Determining the equipment size often involves a change in the type of equipment. For example, bench-scale work is often conducted as a batch process, wherein each reactant has the same amount of residence time. However, in a commercial design, it is almost always desirable to have a continuous flow reactor. This type of reactor is often a stirred reactor and is simulated as a perfectly mixed vessel.
In this case, some of the reactants leave the reactor almost instantly and some remain in the reactor for a time much greater than the average residence time. This factor by itself will increase the residence time requirements in the commercial plant relative to the bench scale.

Whether equipment is of the same type or not, the results from a laboratory or a pilot plant can generally be scaled up by making judicious use of the following generalized Eq. (1.1):

$$R = C_1 \cdot C_2 \cdot DF \qquad (1.1)$$

where
R = The kinetic rate of the process.
C1 = A constant determined in the laboratory, pilot plant, or a smaller commercial plant. Generally, this constant will not change as the process is scaled up to the larger rate.
C2 = A constant that is related to the equipment’s physical attributes such as area, volume, L/D ratio, mixer speed, mixer design, exchanger design, or any other physical attribute. This constant will be a strong function of the scale-up ratio. As discussed later, it is not always identical to the scale-up ratio.
DF = The driving force that relates to a difference between an equilibrium value and an actual value. This might be temperature driving force, reactant concentration driving force, or volatiles concentration driving force. The driving force will always have the form of the actual value minus an equilibrium value.

The process described by Eq. (1.1) could be heat transfer, volatile stripping, reaction, or any process step wherein equilibrium is not reached instantaneously. The kinetic rate is usually expressed in terms of the value of interest (conversion, heat transfer, or volatiles concentration) per unit time. If the required rate is expressed in absolute units (for example, BTU/h), it will be much lower in the laboratory or a pilot plant than it is in the commercial plant. As defined earlier, the ratio of the rate in the commercial plant to that in the smaller plant is the scale-up ratio. Although this Eq.
(1.1) might be considered a simplification of the complexity of scale-up, it illustrates the basic criteria that must be considered. The following well-known simplified heat transfer equation is a form of this generalized Eq. (1.1):

$$Q = U \cdot A \cdot \Delta T_{\ln} \qquad (1.2)$$

where
Q = The kinetic rate or, in the case of heat transfer, the rate of heat removal/addition expressed in heat units (BTU or calories) per unit time (normally hours).
U = The constant comparable to C1 or, in this case, the heat transfer coefficient.
A = The heat transfer area, which is the constant comparable to C2.
∆T_ln = The temperature driving force or, in this case, the “log delta T” (the log-mean temperature difference).

The use of Eq. (1.1) allows consideration of the various aspects of equipment-related scale-up from several angles. This concept is explained in greater detail in Chapter 2. When considering this concept as defined by Eq. (1.1), several questions need to be considered. The overriding question is always: what is the scale-up ratio? It can be defined in multiple ways: production rate, heat removal rate, volatile removal rate, etc. It should be noted that the scale-up ratio is always defined using a time element. Some of the other questions that need to be considered are as follows:

• What is the required ratio of the kinetic value R? This may be the ratio of the production rates between the smaller facilities and the larger facility. However, when considering the example of a heat exchanger, the ratio of heat exchanged (Q) may be more or less than the production rate ratio, because the inlet and outlet temperatures may be different in a commercial unit than in a bench scale or a pilot plant. Another possibility to be considered is a jacketed reactor with a pump-around cooling loop.
In such a scenario, due to the reduced area-to-volume ratio (A/V) in the jacketed reactor of the larger unit, the actual Q on the external pump-around heat exchanger may be higher than that which would be calculated by a direct ratio of total heat exchanged in the two reactors. The key concept is that the actual scale-up should be based on the unit operation of interest rather than a simple ratio of production rates.
• Is it really reasonable to assume that C1 does not change between the smaller and larger facility? Generally, the answer is that this is a reasonable assumption. However, when considering problems with scale-up, this is an area that must be considered. A real-life example of the change of C1 was a vertical heat exchanger in a commercial plant being used to condense propylene. This exchanger was scaled up to a higher capacity while maintaining C1 (the heat transfer coefficient, U) constant at the value demonstrated in the smaller facility. During the detailed design, the tube’s length-to-diameter (L/D) ratio was increased to avoid using more tubes and having a larger diameter exchanger. This was done to minimize the actual plot plan area. However, this caused the average thickness of the condensate layer that formed on the outside of the tubes to increase. This created a higher resistance to heat flow and resulted in a lower heat transfer coefficient than in the smaller plant. In addition, careful consideration of the scalability of C1 for fixed bed processes such as purge bins, reactors, or adsorption units is required.
• What is the driving force (DF) in the commercial facility compared to the laboratory, the pilot plant, or a smaller commercial facility? In many cases, the driving force is constant between the two sizes. However, in some cases such as heat transfer or stripping of volatiles, it will be different in the commercial plant than in the laboratory or pilot plant.
The most obvious need to consider this difference is when a batch reactor in the laboratory must be scaled up to a continuously operated reactor either in a commercial plant or a pilot plant. This is discussed in more detail in Chapter 2.

Scaling up to a larger facility that requires a different type of equipment requires a different consideration. The first thing to do is to recognize that this is likely a problem. Once it is recognized that there is a potential problem, then approaching it from first principles will often be helpful. The concept of first principles is defined as an approach that moves as far as reasonably possible away from a strictly empirical approach and toward one that uses basic chemical engineering principles. Eq. (1.2) is an example of this as applied to heat transfer. In this equation, the driving force (DF) and C2 (heat transfer area) are based on chemical engineering principles. C1 is based on empirical evidence (the heat transfer coefficient) in the smaller plant and/or pilot plant. As indicated earlier, there is some risk in using the empirical heat transfer coefficient from the smaller plant or pilot plant. However, using existing data from these smaller plants is a more practical approach than trying to estimate the heat transfer coefficient based on fundamentals. On the other hand, scaling up the required heat transfer area based only on production rate would be considered a strictly empirical approach and should be replaced with the concept of using first principles to the extent that it is practical.

An example of change in equipment might be a pilot plant with a fluid bed dryer used to remove volatiles from a solid. In the commercial facility, it is desirable to reduce nitrogen consumption by using a multiple counter-current staged device such as a purge bin. When considering Eq. (1.1), it can be converted to Eq.
(1.3) as follows:

$$R = K \cdot DF \qquad (1.3)$$

where
K = A combined constant that incorporates the solid area and porosity, as well as the mass transfer coefficient.

In this example, it is likely that the driving force and constants will change. Thus returning to the laboratory or pilot plant to simulate a closer approach to what is desirable for the commercial facility is mandatory, unless other data are available. One of the examples of a failed scale-up that was related to a change in equipment is included in Chapter 14.

Mode Related

Mode is defined as a manner of acting or doing. When discussing scale-up, changing the mode of equipment is usually associated with a change from batch to continuous operations. This change in mode occurs frequently as a process or product is commercialized. It is generally associated with a reactor mode change from batch to continuous. Thus this discussion of change in mode will use reactor scale-up as the basis. However, the approach and conclusions will also fit other unit operations.

The type of equipment used in a commercial plant is likely to be significantly different from that used in a bench-scale experiment. For example, a chemist at a major producer of polypropylene used a coke bottle to polymerize propylene. Before considering reactor scale-up, the two different types of continuous reactor models need to be considered. These two approaches are the Plug Flow Assumption (PFA) model and the Continuous Stirred Tank Reactor (CSTR) model. These are described as follows:

• The plug flow model or PFA: In this model, each fluid particle or solid particle is assumed to have the same residence time. A typical example of this is a tubular reactor. The tubular reactor can be a tube(s) filled with a solid catalyst or be an empty tube(s). The actual approach to the PFA depends on the type of flow regime in the tube. The flow pattern in a tube packed with catalyst allows the closest approach to the PFA.
Turbulent flow in an empty tube also provides a close approach to the PFA. However, laminar flow in an empty tube deviates from the PFA because of the parabolic velocity pattern in the tube. In laminar flow, the velocity is highest at the centerline of the tube and approaches zero at the wall of the tube. Laminar flow in empty tubes can create the largest deviation from plug flow. At high conversions, this deviation can cause the design of plug flow reactors in laminar flow to be 30% larger than those with true plug flow. Flow patterns in plug flow reactors are described further in Chapter 2.

A laboratory batch reactor can be described with a PFA model, because all reactants have the same residence time. In a commercial tube reactor, the reactant concentration varies with the longitudinal position in the tube. In a batch reactor, the reactant concentration varies with time. The time in the batch reactor and length in the tube reactor can be easily related. If one considers a first-order batch reactor that is at a constant reaction temperature, the rate of reaction is as shown in the following Eq. (1.4):

$$R = C_1 \cdot C_2 \cdot M_i \qquad (1.4)$$

Then by integration and substitution, Eq. (1.5) can be obtained as follows:

$$\ln\left(\frac{M_o}{M_i}\right) = K \, \theta_i \qquad (1.5)$$

where
R = The rate of reactant conversion.
M = The reactant concentration. The subscripts indicate the starting concentration and the concentration after θ minutes.
C1 = The reaction rate constant.
C2 = A constant that includes vessel size and necessary unit conversion factors.

Now in a commercial tubular reactor with either turbulent flow or laminar flow with packing, the residence time (θ) is simply related to the actual physical position (Li) and can be defined by the following Eq. (1.6):

$$\theta_i = \theta_t \cdot \frac{L_i}{L_t} \qquad (1.6)$$

where in Eqs. (1.4) through (1.6)
R = The reaction rate, mols/minute.
Mi = Reactant concentration at any time, mols/ft³.
Mo = Reactant concentration at the start, mols/ft³.
θt = Total reaction time, minutes.
θi = Reaction time at any point in time, minutes.
K = A constant with the units, 1/minutes.
Lt = Total length of tubular reactor, feet.
Li = Linear position in tubular reactor, feet from inlet.

• The CSTR: This model assumes that the reactor is perfectly mixed, and therefore the concentration of the reactants is the same at any point in the tank. This means that the concentration of the reactants in the feed is immediately diluted to that in the tank. The concentration of reactants leaving the reactor will be the same as at any point in the reactor. Thus compared to the PFA reactor, the reaction is carried out at a much lower concentration of reactants. If the concentration of reactants is lower, the volume of the reactor must be increased. The increase in volume associated with a CSTR is a function of reactor conversion. As the reactor conversion is increased, the resulting reduction in reactant concentration in the reactor is greater than at lower conversion. Thus as the design conversion is increased in the continuous commercial facility, the required reactor volume is increased. This increase in required volume can be mitigated by using multiple reactors in series.

Another variation of the CSTR is a fluid bed reactor. In a fluid bed reactor, agitation and heat removal are both accomplished by a circulating gas stream. The gas is at a high enough rate, such that the solid particles are lifted up from the bed and suspended in the flowing gas without large amounts being entrained with the circulating gas. Because the gas is at a lower temperature than the reaction, it serves to remove the heat of reaction. The heat removal provided by the gas may be enhanced by utilizing a gas that contains a liquid that can be vaporized. Heat transfer in a fluid bed can also be enhanced by the installation of heat exchange tubes. When considering the reaction, a fluid bed reactor should be simulated as a CSTR.
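
The volume penalty of a CSTR relative to plug flow, and its mitigation by reactors in series, can be sketched numerically. The following is a minimal sketch, assuming first-order, isothermal, constant-density kinetics (the case behind Eqs. (1.4) and (1.5)). The function names and the conversions tried are illustrative assumptions, not values from the text.

```python
import math


def tau_plug_flow(k, conversion):
    """Residence time for plug flow (or batch), first-order kinetics.

    Follows from ln(Mo/Mi) = k * theta with Mi = Mo * (1 - conversion).
    """
    return math.log(1.0 / (1.0 - conversion)) / k


def tau_cstr_series(k, conversion, n_tanks=1):
    """Total residence time for n equal-volume CSTRs in series.

    Each tank obeys M_out = M_in / (1 + k * tau_tank) for first-order kinetics.
    """
    tau_tank = ((1.0 - conversion) ** (-1.0 / n_tanks) - 1.0) / k
    return n_tanks * tau_tank


def volume_ratio(conversion, n_tanks=1):
    """CSTR train volume divided by plug flow volume at equal throughput.

    The rate constant cancels, so an arbitrary value of 1.0 is used.
    """
    k = 1.0  # assumed value; it drops out of the ratio
    return tau_cstr_series(k, conversion, n_tanks) / tau_plug_flow(k, conversion)


if __name__ == "__main__":
    for x in (0.5, 0.9, 0.99):  # illustrative design conversions
        for n in (1, 3):
            print(f"conversion={x:.2f}, tanks={n}: "
                  f"V_cstr / V_plug = {volume_ratio(x, n):.2f}")
```

Under these assumptions the ratio grows with design conversion and shrinks as tanks are added in series, which matches the qualitative argument above. Other reaction orders would give different numbers.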
Although, certainly, reactor flow patterns are much more complicated than described by the PFA or CSTR models, these describe the two regimes most encountered when considering scale-up. There are other more detailed approaches for determining flow patterns in reactors (such as Computational Fluid Dynamics (CFD)). However, an approach considering these two basic models (CSTR and PFA) will suffice for the examples and calculations involved with most industrial reactor systems, and these approaches are far less complicated than the CFD techniques.

The previous discussion covered the two basic reactor models. These models provide a basis for scale-up from either bench scale or pilot plant scale as the mode is changed from batch to continuous. These models can be used to analyze the following areas during the process development period:

• Kinetics/Reactor Volume/Stages Considerations: Kinetics determined from batch bench scale must be adjusted for the loss of effective residence time in CSTRs or plug flow reactors at laminar conditions. The actual details of the scale-up will depend on the styles of reactors being used and considered. This will be covered in Chapter 2.
• Thermal Considerations: Thermal considerations consist of both steady-state heat removal/addition during the reaction period and the dynamic operations of heating/cooling prior to reaction initiation or in the case of a loss of temperature control.
• Mixing Considerations: Mixing considerations are determined by the goal of the mixing and the characteristics of the material in the reactor. Although a mixer supplier may do the detailed agitator design, the type of mixing is usually specified by the designer.
• Exact location of each nozzle: Nozzle placement for a commercial reactive system can determine the effective kinetics, reactor fouling, or particle formation. For a batch reactor, it is likely to be easier to get almost instantaneous distribution of the catalyst throughout the reactor.
Due to size and limits on injection rate, this may not be the case for a large commercial reactor.

Each of these is discussed in more detail in Chapter 2. It must be recognized that each of these items is important, and short cuts will likely lead to improper scale-up. A thorough analysis will contribute to a successful scale-up.

The design and detailed scale-up of reactors have been discussed in several books. The purpose of this book is to discuss what items should be considered when scaling up reactors rather than to offer a new perspective on the detailed scale-up of the reactors. These considerations and the proposed approach also apply to other types of unit operations, for example, a purge bin dryer or a fixed bed adsorption column. These might be scaled up from a bench-scale experiment or from a pilot plant. Adequate modeling of the batch facility will allow estimation of C1, which can be assumed to be constant in designing the continuous commercial unit.

Theoretical Considerations

Although the idea of a scale-up error associated with an incorrect theoretical approach might seem unlikely, it can easily happen. It generally happens because observations in the bench-scale experiments that seem unusual are explained by an incorrect hypothesis. A real-life example of this is as follows:

A bench-scale reaction was being conducted at a constant temperature and pressure in the liquid phase. The reactant was a volatile hydrocarbon. The product of the reaction was a solid without any vapor pressure. It was observed that the use of a less volatile hydrocarbon diluent increased the reaction kinetics by 30%–50% when compared to the use of reagent grade hexane at the same temperature and pressure. The concentration of the reactant was adjusted to keep the pressure constant. It was hypothesized that this improvement in reaction kinetics was due to an impurity in the less volatile diluent, which created a more mobile catalyst site and enhanced reaction kinetics.
This hypothesis was accepted without any thought given to alternative hypotheses or the difficulty of purifying and recycling the less volatile diluent. The process design and subsequent construction of the plant was based on the use of the less volatile diluent. The application of the phase rule, as shown in the following Eq. (1.7), and a consideration of alternative explanations would have been of value.

$$F = C - P + 2 \qquad (1.7)$$

where
F = degrees of freedom (temperature, pressure, and composition).
C = number of components in the mixture.
P = number of phases present.

In the example being considered, C = 2 (reactant and diluent) and P = 2 (liquid and vapor); thus the degrees of freedom will be equal to 2. These have already been specified as temperature and pressure. Therefore the compositions of the liquid and vapor phases are fixed. If time had been taken for additional considerations as described, it might have been recognized that a more theoretically correct and simpler hypothesis would have been that, at constant pressure and temperature, there would be an increased concentration of the volatile reactant in the liquid phase. This by itself would increase the kinetic rate of the system.

This example illustrates the following several scale-up considerations:

• The value of carefully considered potential alternative hypotheses.
• The need to carefully consider complex proposed theoretical explanations. Simple explanations are usually the most likely.
• The need for a study design to allow the process developers to understand and visualize potential problems with the entire process.

More details on this example are provided in Chapter 14.

Other considerations that are classified as theoretical errors are those that fall into the covert category, that is, they are important but are either overlooked or assumed to be unimportant at the early stage of the project.
Some examples of these types of errors are as follows:

• Design of piping that is in a two-phase flow either in steady-state operations or during startup or shut down. Although the final design of this piping would normally be handled at a later stage of the engineering design, it is likely of value to determine the impact of this critical design at an early point. If this design is critical and is not considered at an early stage, the detailed designer may be forced to provide a less than optimum design, which can cause flow in the pipe to be in an undesirable regime.
• Flare elevation and location may be important at an early stage for processes that involve a high release rate to the flare. Failure to adequately consider this at an early stage may lock in a flare location and later force the final flare design to be very tall to enable the ground-level radiation to be at an acceptable level.

Thermal Characteristics

Although the basic thermodynamics do not change as the process is scaled up, the change in equipment dimensions may change characteristics such as A/V ratio, heat transfer coefficient, or fouling of heat transfer surfaces. In addition, if the reaction conversion is increased, the amount of heat that must be removed will also increase. Because the majority of reactions are exothermic, scale-up problems associated with thermal considerations usually manifest themselves as what is often referred to as a “temperature runaway.” The propensity for a “temperature runaway” in an exothermic reaction might never be observed in the bench-scale reactor due to the high area-to-volume ratio (A/V) of the reactor. However, when the reactor is scaled up, the reduced A/V ratio will increase the probability of such an event. For example, if a bench-scale or pilot plant reactor is scaled up by a factor of 50 (V2/V1 = 50), the A/V ratio will decrease by roughly 75% if the reactor height-over-diameter ratio is maintained constant.
This can be illustrated in the mathematics described as follows:

Given: Reactor H/D = 3 for both the smaller and larger reactors. Set the A/V ratio = R1 and R2, where the subscripts denote the smaller and larger reactors, respectively. Then, for a cylindrical vessel, taking A as the wall area,

$$R = \frac{A}{V} = \frac{\pi D H}{\pi D^2 H / 4} = \frac{4}{D} \qquad (1.8)$$

Therefore

$$\frac{R_2}{R_1} = \frac{D_1}{D_2} \qquad (1.9)$$

At constant H/D, the volume is proportional to D³. With a scale-up ratio of 50, it can therefore be shown that the ratio of the diameters (D1/D2) will equal 50^(−1/3), or about 0.27, giving a value for R2/R1 = 0.27 or a decrease in A/V of approximately 73%, or roughly three-quarters.

In addition to heat removal capability, a batch reactor in the laboratory or pilot plant can be quickly brought up to reaction temperature. This is likely not to be true in a commercial batch reactor because of the reduced A/V ratio. This could lead to undesirable reactions in the commercial reactor because it heats up more slowly than the smaller reactor.

Although this discussion on thermal considerations in scale-up has been based on exothermic reactions, similar considerations apply to endothermic reactions, wherein heat has to be added to keep the reactor at the reaction temperature.

Safety Considerations

As processes are scaled up, the amount of explosive or toxic material increases in proportion to the volume of the vessels being utilized. This can impact the safety of the commercial process in the following three ways:

• The size of an explosive vapor cloud formed if a vessel leak occurs will be larger and the probability of finding an ignition source will increase.
• The probability of an unconfined vapor cloud explosion increases as the amount of material increases when going from pilot plant size to commercial size.
• If the material in the vessels is toxic, there is a greater toxicity risk, because more material will be released.

The safe operation of a commercial size unit is often more difficult than that of a bench-scale or pilot plant unit. This does not mean that safety is not a consideration for smaller units. Unsafe operation or unsafe procedures in small units have the potential for personnel injury and equipment destruction.
However, because of the three aforementioned aspects, there are several reasons that the larger units require an enhanced focus on safety. These reasons are as follows:

• There is always a larger volume of chemicals stored in commercial units. This creates the increased possibility for a catastrophic explosion or fire.
• The larger equipment will create a greater load on the safety release system, which will require more consideration in the design and operations phases of this equipment.
• There is a greater potential for a temperature runaway in the reactor associated with a larger facility because of thermal considerations, as discussed earlier.
• Inadequate review of facility changes can occur in bench scale, pilot plant scale, and commercial scale. Safety problems associated with these inadequately reviewed changes tend to have a larger impact on commercial facilities.
• Buildup of impurities in the commercial recycle due to unknown side reactions may cause safety-related problems (corrosion or internal explosions). This potential may not have been apparent in the bench or pilot plant because there was no recycle system or it was not operated for long enough periods of time to create a buildup of side reaction products.
• Diluent selection may be made with minimal thought about toxicity. This may not be a problem in a bench-scale operation because of the fume hoods and small volume of chemicals used. However, in commercial facilities, a leak of the toxic diluent may be a threat to safety.
• Unfortunately, in a commercial plant due to economic pressures, there will be a greater tendency to expedite and bypass steps in operating procedures.

The risks associated with most of these can and will be mitigated by what most operating companies refer to as “management of change” and project review procedures. However, as was the case at the fertilizer plant in West, Texas, larger facilities do have increased risks.
In this episode, a routine fire turned into a disaster when large amounts of ammonium nitrate stored onsite were ignited and exploded.

It will not be possible or desirable to analyze all the potential safety problems during the early stages of project development. Many of these should not be considered until and if the project enters the process design phase. The four areas that will require careful consideration in the early project development phase are as follows:

• Ensuring that the facilities to prevent temperature runaways are always adequate and operable. Although this to some extent is a PSM (Process Safety Management) issue, the development and design of the facilities must include means to test these facilities without creating a plant shutdown.
• Doing a careful study of the potential for side reactions and buildup of potentially dangerous impurities in a recycle or vent stream. This will include the possibility that air that might leak into a vacuum system might build up in a vent stream and form an explosive mixture. In some developments, air (oxygen) is used as a reactant. This introduces safety concerns not only in a recycle or vent stream, but also in the reactor. If it is possible that internal explosions due to the presence of oxygen may occur, it may be desirable to engage a testing company to assist in sizing the required vent.
• Reviewing the amount and type of material that is being stored and looking for ways to minimize the amount of dangerous and toxic chemicals stored onsite and offsite.
• Making a careful assessment of new chemicals that are being used or familiar chemicals that are being used under different operating conditions. For example, if a new catalyst developed in the laboratory is to be introduced to a process, the exact composition of the catalyst and potential side reactions must be considered. Various handbooks and Material Safety Data Sheets (MSDS) provide data on compounds to be used.
Another example illustrates the potential for safety-related problems associated with changes in operating conditions. Although ethylene is a well-known chemical, if operating conditions are changed (higher temperatures and pressures), this change by itself can cause ethylene to undergo an explosive decomposition reaction.

Recycle Considerations

Essentially all bench-scale and many pilot plant designs do not have the capability to recycle. Thus the operation of these facilities does not provide knowledge to determine the impact of impurities or inerts that might build up in a commercial size plant with recycle equipment.

Almost all commercial operations have capabilities to allow recycle of unreacted chemicals and/or reaction diluent. Bench-scale laboratory facilities rarely have these facilities. Although pilot unit operations may have recycle facilities, they often are not operated or do a poor job of simulating what will happen in the commercial facility. If there are reaction by-products that have a similar volatility to the recycle reactants or diluent, they will build up in the recycle stream. It is likely that they will be a reaction impurity and cause the reaction to become less efficient or have other detrimental effects on the reaction. Many times, the run period of a pilot plant will not be long enough to cause buildup of these impurities.

Another aspect of commercial operation is operating under vacuum. If the commercial unit has a drum that is under vacuum, small amounts of air will be pulled into the process. This air will concentrate with the most volatile chemical (diluent, reactants, products, or by-products) and be purged to a vent or flare. It is important that the composition of this vent not be in the explosive range. If it is in the explosive range, it could be ignited by the flare or by a lightning strike.
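
The point made earlier in this section, that a pilot plant run may be too short to reveal impurity buildup in a recycle stream, can be illustrated with a simple mass balance. This is a minimal sketch, assuming a well-mixed recycle loop of fixed inventory, a by-product generated at a constant rate, and removal only through a purge stream taken at the loop concentration. All names and numbers below are hypothetical and are not taken from the text.

```python
import math


def steady_state_fraction(generation_kg_h, purge_kg_h):
    """Impurity mass fraction at which purge removal balances generation."""
    return generation_kg_h / purge_kg_h


def time_constant_h(inventory_kg, purge_kg_h):
    """Characteristic buildup time of the loop, in hours."""
    return inventory_kg / purge_kg_h


def impurity_fraction(t_h, generation_kg_h, purge_kg_h, inventory_kg):
    """Impurity mass fraction after t_h hours, starting from a clean loop.

    Solves d(x)/dt = generation/inventory - (purge/inventory) * x with x(0) = 0.
    """
    steady = steady_state_fraction(generation_kg_h, purge_kg_h)
    tau = time_constant_h(inventory_kg, purge_kg_h)
    return steady * (1.0 - math.exp(-t_h / tau))


if __name__ == "__main__":
    # Hypothetical loop: 0.5 kg/h by-product, 50 kg/h purge, 20,000 kg inventory.
    gen, purge, inventory = 0.5, 50.0, 20000.0
    for label, hours in (("short pilot run", 48.0), ("extended operation", 2160.0)):
        frac = impurity_fraction(hours, gen, purge, inventory)
        print(f"{label} ({hours:.0f} h): {100.0 * frac:.3f} wt.% impurity")
```

With these assumed numbers the short run reaches only a small part of the steady-state level, so an impurity that matters commercially could go unnoticed. A real system would also need the by-product's volatility and any reaction of the impurity taken into account.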
Regulatory Requirements

Regulatory requirements that are not adequately considered can delay projects or even prevent them from ever being started up. Although this book does not cover these requirements in detail, one should be aware that they fall into the categories of product regulations, process safety/hazards, and by-product disposal. New chemicals might be covered by various governmental regulations. Process safety and hazards are covered by OSHA regulations. By-product disposal is covered by “cradle-to-grave” environmental regulations.

Project Focus Considerations

To confirm that a research project is really of value, economics must be considered. The economics can be developed from the Capital Investment (CAPEX) and the Operating Cost (OPEX). Developing these economics at an early stage avoids the risk of going ahead with a research project that will not be financially attractive. Although it can be argued that this step cannot be undertaken until the basic research is finished, a minimal amount of bench-scale data combined with first principles of engineering allows CAPEX and OPEX to be determined with sufficient accuracy to decide whether to proceed with the research and project development efforts.

Although the focus of the project team is on the details of the experiments, project design, and project execution, the team must also keep confirming that the project is economically viable. The best way to do this is to execute a study design using first principles. The study design should be done at an early stage of the project, as soon as work force is available. Even though the experimental work in a bench-scale apparatus or pilot unit is still underway, it is not only possible but mandatory to focus on economics using a study design.
If the viability of the project is not continually evaluated, at some point during the expenditure of money management will realize that the economics of the project are not what was originally thought, and the project will be dropped after a significant expenditure. This potential problem can be resolved or highlighted by using first principles of chemical engineering to prepare a study design and develop investment and operating cost estimates for the project. The design, cost estimates, and economic feasibility can be modified as additional development work is completed. The development of this study design is discussed in Chapter 9. In addition, Chapter 11 discusses other uses of this study design.

Take Home Message

This chapter has dealt with potential problems associated with scale-up. It is hoped that the items highlighted in the chapter will serve as a reminder to the development team that no scale-up is a simple “just drop it in and go.” There are many potential pitfalls and speed bumps along the way. Now that the potential problems with scale-up have been highlighted and summarized, the next few chapters deal with each of these areas and discuss the actions that can be taken to mitigate the risk in each of them.

2 Equipment Design Considerations

Abstract

Chapter 1 discusses several scale-up failure modes. Several of these (equipment related, mode changes, and thermal considerations) involve the process design of equipment. This chapter deals with the various aspects of process design that involve scale-up from bench scale or pilot plant scale to commercial scale. The approach will be considered by some to be too empirical and not scientific enough. It is presented as an attempt to reach a reasonable compromise between industrial time pressures and the need to be as theoretically correct as possible.
In addition, there are certainly design details that are not covered but will normally be covered in the detailed process and/or mechanical design.

Keywords: catalyst efficiency; computational fluid dynamics; CSTR reactor; driving force; kinetics; nozzle design; residence time distribution; tubular reactor

Introduction

Chapter 1 discusses several scale-up failure modes. Several of these (equipment related, mode changes, and thermal considerations) involve the process design of equipment. This chapter deals with the various aspects of process design that involve scale-up from bench scale or pilot plant scale to commercial scale. The approach will be considered by some to be too empirical and not scientific enough. It is presented as an attempt to reach a reasonable compromise between industrial time pressures and the need to be as theoretically correct as possible. In addition, there are certainly design details that are not covered but will normally be covered in the detailed process and/or mechanical design.

When considering equipment design based on laboratory bench-scale or pilot plant experiments, the focus is generally on reaction, mixing, extraction, solid–liquid separation, heat transfer, and removal of the volatile component. Scale-up of this equipment can be lumped together into the following three areas:

• Reactor scale-up, including mixing and heat transfer.
• Removal of the volatile using equipment with or without agitation.
• Solid–liquid separation.

Extraction is really a combination of reactor scale-up (vessel design with no reaction) and solid–liquid separation. Liquid–liquid separation can be treated in a similar fashion to solid–liquid separation. They both involve settling, which can be done in a vessel or in equipment with enhanced settling capability such as a centrifuge.
Other types of unit operations such as distillation, fluid flow, vapor–liquid separation, and vapor–solid separation can generally be designed from first principles without bench-scale or pilot plant experiments. The operations on which scale-up is focused have in common that they are kinetically limited and can be described by Eq. (2.1), which was also described in the Introduction and Chapter 1.

$$R = C_1 \times C_2 \times DF \tag{2.1}$$

Although the definitions of the various terms were covered earlier, they are repeated here for clarity. In the aforementioned equation,

• R = The kinetic rate, which could be heat transfer, removal of volatile, reaction, or any process step wherein equilibrium is not reached instantaneously. Typically, the rate will be expressed in absolute units such as BTU/h or lbs/h. As defined earlier, the ratio of the rate in the commercial plant to that in the pilot plant or bench scale is the scale-up ratio. The scale-up ratio can also be used to define the increase in capacity associated with a larger commercial plant relative to a smaller commercial plant.
• C1 = A constant determined in the laboratory, pilot plant, or smaller commercial plant. Generally, this constant will not change as the process is scaled up to the larger rate.
• C2 = A constant that is related to the equipment’s physical attributes such as area, volume, L/D ratio, mixer speed, mixer design, exchanger design, or any other physical attribute. This constant will be a strong function of the scale-up ratio. However, as discussed later, it is not always identical to the scale-up ratio.
• DF = The driving force that relates to a difference between an equilibrium value and an actual value. This might be temperature driving force, reactant concentration driving force, or volatiles concentration driving force. The driving force will normally have the form of the actual value minus an equilibrium value.
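The interplay between the scale-up ratio, C2, and the driving force can be made concrete with a few lines of arithmetic. This is a minimal sketch that assumes Eq. (2.1) is the simple product R = C1 × C2 × DF, as the definitions above read, and that C1 is unchanged on scale-up. The function names and the heat transfer numbers are hypothetical.

```python
def rate(c1, c2, driving_force):
    """Kinetic rate from Eq. (2.1), assumed here to be R = C1 * C2 * DF."""
    return c1 * c2 * driving_force


def required_c2_ratio(scale_up_ratio, df_small, df_large):
    """C2(large) / C2(small) needed to reach the scale-up ratio.

    Assumes C1 is the same in both units, so
    R_large / R_small = (C2_large / C2_small) * (DF_large / DF_small).
    """
    return scale_up_ratio * df_small / df_large


if __name__ == "__main__":
    # Hypothetical heat transfer case: C1 is a heat transfer coefficient,
    # C2 is exchanger area, DF is the temperature difference.
    # A 100x rate increase with the driving force doubled from 20 to 40 deg F
    # needs only 50x the area.
    print(required_c2_ratio(100.0, df_small=20.0, df_large=40.0))
```

The example shows why the C2 ratio is not always identical to the scale-up ratio: any change in driving force between the small and large units shifts the physical size that is required.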
In the case of solid–liquid settling, the C1 term is somewhat more complicated and involves the difference in density between the solid particles and the liquid, the diameter of the particles, and the liquid viscosity. In this case, the driving force would be the gravitational force, which might be enhanced by the use of a centrifuge. In all of the scale-up cases considered, it is mandatory to know both a C1 that remains constant as the process is scaled up and a C2 that is representative of the increase in physical size. The value of C2 will not always be equal to the production scale-up factor, because changes in the driving force will allow a C2 ratio that is different from the production scale-up factor.

Reactor Scale-up

The purpose of this book is not to provide a detailed technique for every phase involved in the scale-up of reactors, but to point out what needs to be considered and to recommend a route to success in these areas. It is highly probable that many of the areas mentioned will require assistance from equipment suppliers. In addition, because the focus of the book is on scale-up concepts, I have chosen to deal with first-order reactions that have no competing or secondary reactions. Certainly, this is only one part of the vast field of reaction engineering. There are reactions that are second and third order. In addition, there are chemical reactions that are equilibrium limited rather than rate limited. Perhaps one of the more complicated reaction systems is one wherein the products react further to produce an undesirable by-product. None of these more complicated systems is specifically covered. However, the concepts described in this section are applicable to all of them. For example, a heterogeneous commercial continuous perfectly mixed reacting system that produces both a desirable product and an undesirable by-product may have a higher yield of the desirable product than a batch bench-scale reactor.
It is possible that the residence time distribution (RTD) and kinetics may cause a lower amount of by-product to be formed in the commercial reactor. This possibility can be predicted by developing kinetic relationships from batch reactor data and the use of the concepts described in this chapter. In the process development stage, even before the commercial reactor design is selected, consideration must be given to the reactants, catalyst, and diluent. In the reaction that is being scaled up, it will be important to use the same precise material as is planned for use in the commercial facilities regardless of what reactor design will be selected. If a recycle system is planned, buildup of impurities or inerts may create a different reactant concentration, which should be simulated in the bench-scale laboratory facilities. This can be done using a two-step procedure. The reactor concentration of reactants, diluents, inerts, and impurities with a recycle system in place should first be estimated during the study design. The second step will involve conducting the reaction using this reactor composition. An example of this is the bulk polymerization of propylene to produce polypropylene. Even polymerization-grade propylene (containing 99.5% propylene) contains small amounts of propane, which can only be removed by super fractionation or more expensive removal treatments. The normal technique is to allow the propane to build up to the optimum economic concentration and maintain this concentration by purging some of the unreacted propylene stream that contains propane. A typical optimum concentration of propylene in the reactor with these conditions might be 90%. Thus to fully simulate the anticipated conditions in the commercial plant, the laboratory batch runs might be conducted with a reactor feed concentration of 95% propylene rather than polymerization-grade propylene at 99.5% propylene. 
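The relationship between feed purity and reactor propylene concentration in this example can be checked with a one-line mass balance. The sketch below assumes a per-pass propylene conversion of 50%, treats propane as inert, and excludes the solid polymer from the liquid composition. These assumptions and the function name are illustrative, not taken from a specific plant design.

```python
def reactor_propylene_fraction(feed_propylene_frac, conversion):
    """Liquid-phase propylene mass fraction in the reactor.

    Basis: 1 lb of reactor feed. Propane passes through unreacted;
    converted propylene becomes solid polymer and is not counted
    as part of the liquid phase.
    """
    propylene_left = feed_propylene_frac * (1.0 - conversion)
    propane = 1.0 - feed_propylene_frac
    return propylene_left / (propylene_left + propane)


if __name__ == "__main__":
    for feed in (0.995, 0.95):
        x = reactor_propylene_fraction(feed, conversion=0.5)
        print(f"feed {feed:.1%} propylene -> reactor liquid {x:.1%} propylene")
```

On this basis a 95% propylene feed gives roughly 90% propylene in the reactor liquid, whereas polymerization-grade feed would give about 99%, which is why laboratory runs on fresh polymerization-grade propylene do not represent the commercial reactor with recycle.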
A feed concentration of 95% propylene will produce a reactor concentration of 90% propylene, assuming a propylene conversion of 50%. If the kinetics are well known, it will be possible to adjust the kinetics for the presence of the increased level of propane. However, there might also be some unanticipated effects because of the lower concentration of propylene, which can only be determined by actual experimental results at the anticipated reactor propylene concentration.

When considering laboratory reactor scale-up, the first step is to decide the directional mode of the scale-up. Although one can visualize other possibilities, the following four are the main ones of interest:

1. Scaling up from a laboratory batch reactor to a pilot plant or commercial batch reactor.
2. Scaling up from a laboratory batch reactor to a pilot plant or commercial Continuous Stirred Tank Reactor (CSTR).
3. Scaling up from a laboratory batch reactor to a continuous pilot plant or a continuous commercial reactor, wherein both are operating in the plug flow mode and can be simulated using the Plug Flow Assumption (PFA).
4. Scaling up from a continuous laboratory plug flow reactor to a continuous pilot plant or a continuous commercial reactor, wherein both are operating in the plug flow mode (PFA).

These are the most likely and most frequently encountered cases. Of course, there are also scale-ups from a continuous pilot plant operating in the CSTR or plug flow mode to a commercial reactor operating in a similar mode. These are essentially scale-ups of heat transfer and agitator design. The concepts discussed in the four scale-up scenarios above are applicable to scaling up from a pilot plant to a commercial plant or from a small commercial plant to a larger one. In considering reactor scale-up, there are three main areas that must be considered, which are reactor mode, mixing, and heat transfer.
Not all of these areas need to be considered in each of the aforementioned cases. Table 2.1 illustrates what should be considered for each case.

Table 2.1

| Scale-up Mode (a) | RTD | Mixing | Heat Transfer | Nozzle |
|---|---|---|---|---|
| Batch to batch | | X | X | X |
| Batch to CSTR | X | X | X | X |
| Batch to tubular: laminar flow | X | X | X | X |
| Batch to tubular: laminar flow in packed bed | | X | X | X |
| Batch to tubular: turbulent flow | | X | X | X |
| Tubular to tubular: laminar flow | X | X | X | X |
| Tubular to tubular: laminar flow in packed bed | | X | X | X |
| Tubular to tubular: turbulent flow | | X | X | X |

(a) Scale-up mode is the description of moving from the smaller facility to a larger one. For example, “Batch to Batch” involves moving from a bench-scale batch reactor to a commercial batch reactor and will involve considering mixing, heat transfer, and nozzle design and location.

Some explanation of the table is necessary. An “X” indicates that this variable needs to be considered in the design of the larger reactor, whether it is a commercial or pilot plant reactor. The “Scale-up Mode” indicates the type of reactor transition. The four most important considerations are as follows:

• RTD is an acronym for Residence Time Distribution. RTD provides a calculation technique to evaluate the bypassing effect in a CSTR that reduces the effective residence time. As shown in the table, it is not significant for batch-to-batch scale-up, or for scale-up to a tubular reactor when the reactor contains packing or the flow is in the turbulent regime. However, it must be considered in the other modes.
• Mixing is the desire to have a similar mixing pattern in the larger reactor.
• Heat Transfer deals with the design considerations that ensure that the likely reduced area-to-volume (A/V) ratio of the larger reactor is taken into account. It includes the phases of reactor startup, steady-state operation, and potential loss of temperature control (temperature runaway). Heat transfer considerations are covered in more detail in Chapter 4.
• Nozzle is the design consideration that deals with the desire to obtain good dispersion of the catalyst and/or reactants at the inlet to the reactor.

An example of the use of this table would be the scale-up of a laboratory batch reactor to a commercial-size CSTR. Each of the areas shown in Table 2.1 must be considered in the design. In a CSTR, because some of the reactants leave the reactor immediately and some significant amount remains in the reactor for as long as 4 to 5 times the average residence time, the RTD must be considered. Evaluation of mixing patterns often requires the assistance of a mixer supplier and possibly the use of enhanced simulation techniques such as Computational Fluid Dynamics (CFD). CFD is a mathematical technique that allows the flow pattern at every point in a reactor to be predicted from the reactor and mixer designs. It is of great value in predicting the flow pattern at the reactant and/or catalyst feed injection points. The size, configuration, and location of the feed and/or catalyst nozzles must be carefully considered. It is likely that the reactants in the bench-scale reactor were pressured into the reactor at high velocity and thus became mixed almost instantaneously in the small reactor. This is rarely possible in a larger reactor. The four scale-up possibilities are discussed in turn in the following sections.

Laboratory Batch Reactor to Larger Batch Reactor (Pilot Plant or Commercial)

Although this might seem like a relatively easy type of scale-up, there are some areas that must be considered. As indicated in Table 2.1, consideration of the RTD is not required because the larger reactor is also a batch reactor. However, it is inadequate to just adjust the reactor size for the larger batch requirements. The areas that must be considered are discussed in the following paragraphs.

The mixing patterns in the larger reactor should be similar to those in the laboratory. This requires a significant amount of consideration.
The first step is generally to maintain the same height-to-diameter ratio (H/D) and the same agitator diameter to tank diameter ratio (DA/DT). Although this sounds highly desirable, it often leads to conflicting dimensions and conclusions. For example, similar mixing patterns can mean either of the following:

• Equal agitator tip speed: This might be a good criterion for reactions that involve a solid that is easily destroyed by high tip speeds. However, it may mean that the power-to-volume ratio (P/V) will be lower in the larger reactor than in the smaller reactor.
• Equal power-to-volume (P/V) ratios: If the selected target similarity between the smaller and larger reactors is P/V, then the angular velocity will be lower in the larger reactor unless changes are made in the agitator diameter to tank diameter ratio or the design of the impeller. However, because the larger vessel has a larger diameter agitator, the tip speed will be greater in the larger vessel.

Because of the potential confusion in scaling up an agitator, it is often desirable to collaborate with a mixer supplier. In addition, mathematical and computer techniques such as CFD allow an accurate representation of the mixing pattern in the reactors. These approaches are described in more detail in Chapter 7.

For the batch reactor to batch reactor scale-up, there are also thermal characteristics that must be considered. Temperature control may be more difficult in the larger reactor due to the reduction in the A/V ratio. As the diameter of a vessel is increased, the volume increases faster than the surface area, and the A/V ratio is reduced. This reduction in A/V ratio causes the larger reactor to heat up or cool down at a slower rate unless the difference is considered in the design. A laboratory batch reactor can be brought up to reaction temperature quickly relative to a commercial-size reactor.
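The conflict between the two agitator criteria, and the loss of A/V ratio with size, can be seen from the usual proportionalities. The sketch below is an illustration only. It assumes geometric similarity and fully turbulent mixing with a constant power number, so that power scales as N³D⁵, volume as D³, and tip speed as ND, and it counts only the cylindrical wall as heat transfer area. The function names and sizes are hypothetical, and a mixer supplier would normally refine any such estimate.

```python
def scale_agitator(n_small_rpm, d_small, d_large, rule):
    """Return (speed_rpm, tip_speed_ratio, p_per_v_ratio) for the larger agitator.

    Ratios are large/small. Assumes geometric similarity and constant power
    number (turbulent regime): P ~ N^3 D^5, V ~ D^3, tip speed ~ N D.
    rule is "tip_speed" or "power_per_volume".
    """
    s = d_large / d_small  # linear scale factor
    if rule == "tip_speed":
        n_large = n_small_rpm / s                   # N * D held constant
    elif rule == "power_per_volume":
        n_large = n_small_rpm * s ** (-2.0 / 3.0)   # N^3 * D^2 held constant
    else:
        raise ValueError("rule must be 'tip_speed' or 'power_per_volume'")
    speed_ratio = n_large / n_small_rpm
    tip_speed_ratio = speed_ratio * s
    p_per_v_ratio = speed_ratio ** 3 * s ** 2
    return n_large, tip_speed_ratio, p_per_v_ratio


def wall_area_to_volume(diameter):
    """A/V for the wall of a cylinder: (pi*D*H) / (pi*D^2*H/4) = 4/D."""
    return 4.0 / diameter


if __name__ == "__main__":
    # Hypothetical 10x linear scale-up from a 0.5 ft to a 5 ft diameter vessel
    # (agitator scaled in proportion), starting at 100 rpm.
    for rule in ("tip_speed", "power_per_volume"):
        n, tip, pv = scale_agitator(100.0, 0.5, 5.0, rule)
        print(f"{rule}: {n:.1f} rpm, tip speed x{tip:.2f}, P/V x{pv:.2f}")
    print("A/V small:", wall_area_to_volume(0.5), "1/ft; large:", wall_area_to_volume(5.0), "1/ft")
```

Under these assumptions, holding tip speed constant over a tenfold increase in diameter cuts P/V to about one tenth, while holding P/V constant roughly doubles the tip speed. The wall A/V ratio falls by the same factor of ten, which is the reason heat-up and cool-down are slower in the larger vessel.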
If the vessel is filled with reactants and the rate of temperature change is slower than in the laboratory, side reactions may occur in the commercial facility that did not occur in the smaller reactor. In addition to side reactions, the slower change in temperature may lead to a product with a different morphology. An example of failure to adequately consider the change in A/V ratio is discussed in Chapter 14. In addition, the thermal characteristics are discussed in greater detail in Chapter 4.

It should be stressed that essentially all reactions have a heat of reaction. The heat of reaction needs to be determined and considered along with the reduced A/V ratio in the design. Because of the larger A/V ratio in a laboratory reactor, the development chemist may not believe that there is a heat of reaction associated with the process being developed. Literature review, mathematical determination from heats of formation, or a more careful investigation in the laboratory may be required to determine the heat of reaction.

The nozzle design is often a critical area when a batch reactor is scaled up. In a laboratory batch reactor, the reactants can be injected into the reactor quickly and at a high velocity into a well-agitated area. The catalyst or reactants are often flushed into the reactor to aid in their dispersion. If the reaction is rapid, the actual commercial reactant nozzle design will be critical. Such items as injection velocity, injection location, and the need for a flush must be considered. The design of a critical nozzle for injecting a catalyst or reactive chemical into a reactor might require consideration of the following:

• Velocity: A high velocity of 8–20 fps might be required at all flow rates to ensure adequate dispersion. This might require the design of a nozzle with a variable-size opening to control the velocity at low flow rates.
Another alternative would be the use of a flush to increase the velocity.
• Injection point: The reactive material should be injected into an area that has maximum turbulence. That is, “dead zones” should be identified and avoided. This may require the use of a nozzle that extends into the vessel. A nozzle that is flush with the vessel wall will tend to inject the material into a stagnant zone.

Laboratory Batch Reactor to a Commercial Continuous Stirred Tank Reactor

Because of investment and operating costs, heat transfer requirements, and minimal required operating attention, the commercial reactor is often a CSTR. As indicated earlier, this reactor model assumes a perfectly mixed reactor, wherein the concentration of reactants and catalyst is the same at any point in the reactor and is equal to the outlet concentration. This assumption is generally considered adequate for the calculations involved. The differences between a laboratory batch reactor and a commercial CSTR will almost always require that adjustments be made to the batch reactor results. In addition to the areas (mixing, heat transfer, and nozzle design/location) discussed for scaling up a batch reactor to a larger batch reactor, this scale-up requires additional considerations. A batch reactor may have a driving force (DF) that varies during the course of the reaction. However, in a CSTR conducting the same reaction, there will be a fixed driving force that is almost always lower than the average driving force in the batch reactor. In addition, the concept of RTD must be considered if a CSTR is used. The two cases to be used as examples are as follows:

• In the first case, assume that one is scaling up a batch reactor in which a reaction occurs between two liquids, such as the following:

$$A + B \rightarrow \text{products} \tag{2.2}$$

The concentration of the reactants (the driving force, DF) starts high and diminishes with time as the reaction occurs.
When this same reaction occurs in a perfectly mixed vessel (CSTR), the concentration of each reactant at any spot in the reactor is equal to its concentration leaving the reactor, which will be much lower than the feed concentration or the average concentration in the batch reactor. Thus achieving the same conversion in a CSTR will require more residence time, which translates into a larger reactor or multiple reactors in series.
• In the second case, consider scaling up a bulk (no diluent) batch polymerization reactor, wherein the product and catalyst are insoluble solids. In this batch polymerization, each catalyst particle stays in the reactor for exactly the same period of time. In a commercial CSTR, some of the catalyst and reactants leave the reactor with a minimal amount of residence time. Conversely, some of the catalyst and reactants have a very long residence time. This spread is referred to as the RTD. Because of the RTD, the CSTR requires an increased residence time to operate at the same catalyst efficiency as the batch reactor.

When considering the first case, assume that the reaction in the batch reactor is first order with respect to each of the two components shown in Eq. (2.2). Fig. 2.1 shows the effect on reactor volume of the change from a batch reactor to a continuous reactor as a function of overall conversion in a single reactor. As noted in this figure, as the conversion is increased, the ratio of the volume required in a CSTR to that required in a batch reactor increases. In a commercial plant, this impact is sometimes mitigated by providing the additional volume or installing additional reactors in series.

Figure 2.1 Relative volume ratio of CSTR/PFA versus fractional conversion.

For the second case, as an example to help understand the difference between a batch reactor and a CSTR, we will look at bulk (no diluent) polymerization of propylene.
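Before turning to the polymerization example, the volume penalty in the first case can be estimated from standard ideal-reactor design equations. The sketch below assumes a reaction that is first order in each of two reactants (second order overall), an equimolar feed, constant density, and isothermal operation, and it ignores batch turnaround time. The basis of the curve in Fig. 2.1 is not stated in the text, so these numbers illustrate the trend rather than reproduce the figure. Function names are hypothetical.

```python
def batch_time_group(conversion):
    """Dimensionless k*C0*t for a batch or plug flow reactor.

    Rate = k*CA*CB with CA0 = CB0 = C0 gives k*C0*t = X / (1 - X).
    """
    return conversion / (1.0 - conversion)


def cstr_time_group(conversion):
    """Dimensionless k*C0*tau for a single ideal CSTR.

    The whole reactor runs at outlet concentration, so
    k*C0*tau = X / (1 - X)^2.
    """
    return conversion / (1.0 - conversion) ** 2


def cstr_to_batch_volume_ratio(conversion):
    """Ratio of required residence times (and hence volumes) at equal throughput."""
    return cstr_time_group(conversion) / batch_time_group(conversion)


if __name__ == "__main__":
    for x in (0.5, 0.8, 0.9, 0.95):
        print(f"conversion {x:.0%}: CSTR/batch volume ratio = {cstr_to_batch_volume_ratio(x):.1f}")
```

Under these assumptions the ratio is simply 1/(1 − X), so a single CSTR needs about twice the volume at 50% conversion and about ten times the volume at 90% conversion, which is why reactors in series are often used for high-conversion service.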
An example of a batch reactor and how the results can be scaled up to a CSTR will help to illustrate these concepts. Liquid propylene is often polymerized in a batch mode in the laboratory using the technique shown in Fig. 2.2. To start the polymerization, the reactor is filled with propylene; thereafter, alkyl is added, and the reactor is heated to the desired reaction temperature. A fixed amount of catalyst is injected as quickly as possible, and the reaction is allowed to continue for a fixed amount of time. It is then shut down, and the amount of polypropylene is measured. The efficiency of the catalyst, expressed as pounds of polypropylene per pound of catalyst, is then calculated. As shown in Fig. 2.2, the polymerization reactor is maintained at a constant temperature and pressure by a combination of coolant and propylene flow. If the pressure and hence the temperature begin to decrease, the flow rate of propylene will be increased. The increase in propylene flow will increase the concentration in the liquid phase, causing the reaction rate to increase. In addition, the temperature and the pressure will increase. Because it is a batch reactor and all the catalyst is added at the start, each catalyst particle has the same residence time.

In the polymerization of propylene, the catalyst serves as a template for the polymer particle. A simplified way to visualize this is that the polypropylene particle grows around the catalyst particle. If the catalyst particle is spherical, then the polymer particle will be spherical. The polymerization proceeds with propylene being polymerized and increasing the thickness of the layer of polypropylene on the catalyst particle. As the particle grows, the catalyst becomes less accessible to the propylene, resulting in a decay of catalyst activity. At the end of a fixed period of time, the reaction is terminated, and the efficiency of the catalyst is determined by drying and weighing the polymer.
As mentioned earlier, additional propylene is added during the polymerization to maintain pressure. Because the reactor is at constant pressure and temperature and there is no diluent, the concentration of propylene is constant. In addition, each catalyst particle is present for the same amount of time.

Figure 2.2 Batch polymerization of propylene.

In the commercial CSTR, the reaction temperature and concentration of propylene will be constant. The concentration of the catalyst, in the reactor outlet and at any point in the reactor, will also be constant after an initial short startup period. However, the RTD of the catalyst particles will be very broad as opposed to the narrow RTD in a batch reactor. This is shown graphically in Fig. 2.3.

Figure 2.3 Catalyst particle RTD: fraction with residence time less than t/T versus residence time t/T.

When considering the CSTR at the same residence time as in the batch reactor, this difference in RTD will reduce the efficiency of the catalyst relative to that obtained in a laboratory batch reactor by about 10%. However, the amount of reduction depends on the catalyst decay factor. The catalyst decay factor is an exponential factor that allows for the fact that as residence time increases, the thickness of the polymer coating on the catalyst increases, and the porosity decreases. This change in reactor style (batch reactor to a CSTR) can be compensated for by increasing reactor volume or installing reactors in series. The performance in the batch reactor can be described by manipulating Eq. (2.1) to obtain Eq. (2.3).

$$CE = K \times M \times T^{0.75} \tag{2.3}$$

where
CE = The catalyst efficiency, lbs of polymer/lb catalyst.
K = The simplified polymerization rate constant, ft³/lbs-h.
M = The concentration of propylene, lbs/ft³.
T = The residence time, hours.

The exponent 0.75 represents the catalyst decay factor. It can be determined by multiple batch polymerizations at variable residence times. In Eq.
(2.3), if the catalyst efficiency (CE) is known, the polymerization rate constant can be calculated from the performance in the batch reactor. This polymerization rate constant, along with the concentration of propylene, the residence time, and the catalyst RTD factor, can then be used to predict performance in the scaled-up commercial CSTR.

Although the rate of addition of reactants and/or catalyst may not seem important, this variable may well have an impact. In the commercial continuous reactor, the concentrations of reactant and catalyst will be the same throughout the reactor and equal to the outlet concentration. As indicated earlier in the first case of a laboratory batch reactor, the concentrations of the reactants may vary over the time of the reaction. If the reactants and catalyst are added quickly in the batch reactor, the concentrations will have a predictable decay rate. However, if they are added slowly over time, the concentration versus reaction time relationship may be radically different. In an extreme case, if the reactants are added at the same rate as they are being converted, the concentration of the reactant might remain constant. This addition rate difference in the laboratory batch reactor could cause a change in the following:

• The amount of by-product produced by competing reactions.
• The morphology of a solid being produced.
• Other unanticipated differences.

When considering this possibility, the equation discussed in this chapter and repeated here can be utilized:

$$R = C_1 \times C_2 \times DF \tag{2.1}$$

As an example, when considering the aforementioned possibilities, a change in either C1 (a constant related to the speed of the undesirable reaction) or the DF (driving force) could cause a change in morphology or by-product formation. From a scale-up standpoint, the key is to keep this variable (C1) and the driving force (DF) constant.
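The batch-to-CSTR prediction described with Eq. (2.3) can be sketched numerically. The code below is an illustration under stated assumptions, not the author's procedure: it takes Eq. (2.3) to be CE = K·M·T^0.75, treats each catalyst particle in the CSTR as a small batch reactor at constant propylene concentration, and averages the batch efficiency over the exponential RTD of an ideal CSTR, which gives CE = K·M·τ^0.75·Γ(1.75). The function names and the laboratory numbers are hypothetical.

```python
import math

DECAY_EXPONENT = 0.75  # catalyst decay factor quoted with Eq. (2.3)


def batch_efficiency(k, m, t, n=DECAY_EXPONENT):
    """Catalyst efficiency (lb polymer / lb catalyst), assuming CE = K*M*T^n."""
    return k * m * t ** n


def rate_constant_from_batch(ce, m, t, n=DECAY_EXPONENT):
    """Back-calculate K from a measured batch catalyst efficiency."""
    return ce / (m * t ** n)


def cstr_fraction_younger_than(t_over_tau):
    """Cumulative RTD of an ideal CSTR: fraction with residence time < t."""
    return 1.0 - math.exp(-t_over_tau)


def cstr_efficiency(k, m, tau, n=DECAY_EXPONENT):
    """RTD-averaged efficiency in an ideal CSTR with mean residence time tau.

    integral_0^inf K*M*t^n * (1/tau)*exp(-t/tau) dt = K*M*tau^n * Gamma(n + 1)
    """
    return k * m * tau ** n * math.gamma(n + 1.0)


if __name__ == "__main__":
    # Hypothetical laboratory result: 20,000 lb/lb after 2 h at 30 lb/ft3 propylene.
    k = rate_constant_from_batch(ce=20000.0, m=30.0, t=2.0)
    ce_cstr = cstr_efficiency(k, m=30.0, tau=2.0)
    print(f"K = {k:.1f} ft3/lb-h")
    print(f"CSTR efficiency at the same residence time: {ce_cstr:.0f} lb/lb")
    print(f"CSTR/batch ratio: {ce_cstr / 20000.0:.3f}")
    print(f"fraction of catalyst younger than one mean residence time: "
          f"{cstr_fraction_younger_than(1.0):.2f}")
```

With a decay exponent of 0.75, this idealized averaging gives a CSTR efficiency roughly 8% below the batch value at equal residence time, which is of the same order as the reduction of about 10% quoted in the text. A different decay exponent changes the penalty, consistent with the statement that the reduction depends on the catalyst decay factor.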
A similar problem might occur if the initial catalyst injection rate to a bench-scale or pilot plant scale batch reactor is increased. If the reaction is exothermic, the rate of temperature rise may be a function of the initial catalyst injection rate. Varying this injection rate may create a different temperature–time profile. If the temperature–time and/or the concentration–time profiles are important, they must be considered in the design of the commercial plant using a CSTR. In the CSTR, with continuous catalyst injection, these profiles will not exist. One must therefore consider whether they existed in the batch reactor and, if so, whether they had an effect on the reaction rate or product quality.

Another difference that might occur between the laboratory batch reactor and the commercial CSTR stems from the current tendency for time and cost pressures in a business environment to take priority over accurate results. For example, if a liquid phase catalytic reaction can be carried out in a low-volatility diluent in the laboratory, there will not be a need for high-pressure equipment. This might be desirable from a cost, construction time, and/or safety standpoint. However, using this low-volatility diluent may not be desirable in the commercial plant. This raises the question of how results obtained at low pressure can be adequately translated into results that are valid for the higher-pressure operation. There will be unknowns as the reaction pressure is increased. It is likely that the difference in reaction pressure will lead to differences in the concentration of the reactant, which will change the rate of reaction. While this change in rate of reaction can be accurately predicted from the change in concentration of the reactant, the change in reaction rate may affect the morphology of solids and/or by-product formation.
Although there may be other areas that need to be considered when scaling up a laboratory batch reactor to a commercial CSTR, these are the primary ones. In summary, when scaling up from a laboratory batch reactor to a commercial CSTR, one should consider, in addition to the factors mentioned for scaling up from a laboratory batch reactor to a larger batch reactor, the following:

• The RTD of the catalyst and/or reactant.
• The fact that the concentration of reactants in a CSTR is equal to the outlet concentration, which may be much lower than the average concentration that existed in the batch reactor.
• The concentration of catalyst or reactant, which may vary as a function of reaction time in the batch reactor.
• The temperature–time profile that may exist in the laboratory batch reactor and is not likely to exist, or is likely to be radically different, in a CSTR.

Laboratory Batch Reactor to a Commercial Tubular Reactor

Commercial or pilot plant tubular reactors are essentially a piece of pipe or tubing that may or may not be jacketed for heat removal/addition. Most of the concepts discussed earlier apply to this mode of scale-up. In the tubular reactor, there is normally no agitator; therefore mixing is not a criterion. However, the flow regime (turbulent or laminar) is important, as discussed later. The aspect of heat transfer is also important in the commercial reactor design. Heat transfer in the commercial or pilot plant tubular reactor is accomplished by the following:

• Jacketed tubes: Jacketed tubes can be utilized to remove the heat of reaction or, in the case of an endothermic reaction, to add heat. The reactor is normally a long tube of moderate diameter, which provides sufficient area for heat transfer and sufficient residence time to achieve the target reactant conversion. In theory, it would be possible to have multiple parallel tubes to provide the desired residence time.
This approach (multiple small diameter tubes) would provide a larger area-to-volume ratio and, hence, more heat transfer area for a given residence time. Flow distribution can become an issue with this approach, especially if either reactants or products have a tendency to foul and restrict flow. Generally, however, this approach is not utilized.

• Conversion control: This technique can be used with or without external heat transfer. In this technique, the primary control of temperature is reactant conversion. If external heat transfer is not used, this technique will almost always result in a temperature that varies with time and/or position of the reactants along the tube. Assuming that the reaction being considered is exothermic, the temperature increases as the reacting mass continues through the tube. At some point, additional chilled reactants are added, which both replenish the depleted reactant concentration and cool the reacting mass. Thus the heat of reaction is removed by the sensible heat associated with the fresh feed injection. For some reactions conducted in tubes, a secondary control of the temperature is the rate of catalyst addition. That is, for an exothermic reaction experiencing higher than desired temperatures, the conversion can be reduced by reducing the catalyst flow, which will reduce the reaction temperature. Conversely, for an endothermic reaction under a similar scenario of higher than desired temperature, an increase in reaction rate will cause a reduction in temperature. Essentially, all reactions of interest are exothermic. Either of these cases will have a slow response and a long lag time. This will cause fluctuations in the reactor temperature, as described in Fig. 2.4.

• The nozzle location and design are both important for this type of commercial reactor. The reactant nozzle locations will be determined by heat balances around the reactor section.
These heat balances will help determine where the chilled reactants should be injected to minimize the temperature spikes along the tube. The nozzle must be designed to achieve a good radial distribution of the chilled feed.

• The injection of cold or hot reactant, diluent, or catalyst can also be used to boost the concentration of reactant or catalyst, as well as to cool or heat the reacting mass. This type of temperature control will result in a temperature profile similar to that described in the previous case.

Figure 2.4 Temperature profile in adiabatic tubular reactor.

As in the CSTR, the RTD of the catalyst and/or reactants is important. However, unlike the CSTR, a commercial tubular reactor might have an RTD for which the PFA is a good assumption, or one that deviates widely from it, depending on several factors. The various alternatives for a commercial tubular reactor are shown in Table 2.2.

Table 2.2 Commercial Reactor

| Case | Flow Regime | Catalyst Bed | Model |
|------|-------------|----------------|---------|
| 1 | Turbulent | Not applicable | PFA |
| 2 | Laminar | Yes | PFA |
| 3 | Laminar | No | NON-PFA |

A tubular reactor containing a catalyst bed with a laminar flow regime will be in true plug flow. However, without the catalyst bed, the laminar flow regime will deviate greatly from the PFA. A tubular reactor in turbulent flow can also be modeled using the PFA whether or not there is a catalyst bed in the tube. These differences in flow pattern are shown in Fig. 2.5.

Figure 2.5 Flow patterns in tubular reactor.

For the commercial tubular reactor in turbulent flow or containing a bed of catalyst, the reaction results should be very similar to those obtained in the laboratory batch reactor. That is, equal residence times in the commercial reactor and the laboratory batch reactor should provide equal conversions if all other factors are the same. In addition, mixing is not a consideration. Thus the only areas of scale-up consideration are the heat transfer and nozzle design, which were discussed earlier.
However, for a tubular reactor in laminar flow, the RTD will differ greatly from the PFA and, hence, from the laboratory batch reactor’s results at the same residence time. A typical velocity profile in a tubular reactor in laminar flow is shown in Fig. 2.6. This parabolic velocity distribution results in a variable reactant velocity and a nonplug flow RTD.

Figure 2.6 Typical laminar flow in tubular reactor.

Laboratory Tubular Reactor to a Commercial Tubular Reactor

As described earlier, a tubular reactor (or a similar type of reactor) is designed so that reactants enter one end of the tube continuously, and the reacting mass consisting of the products and reactants exits the other end. Most of the scale-up considerations have been discussed earlier. The remaining questions are associated with the correct approach to modeling the reactor over a combination of regimes. Table 2.3 shows the different scale-up possibilities for a laboratory tubular reactor to a commercial reactor. These possibilities are for tubular reactors without packing (catalyst or adsorbent, for example). If the tubes are filled with packing, the scale-up can be treated as one from turbulent flow regardless of whether the flow is in the laminar regime or not. Generally, turbulent reactors are considered to be plug flow reactors. This will become clearer as each case is discussed.

Table 2.3

| Case Number | Laboratory Regime | Commercial Regime |
|-------------|---------------------------|---------------------------|
| 1 | Continuous turbulent flow | Continuous turbulent flow |
| 2 | Continuous turbulent flow | Continuous laminar flow |
| 3 | Continuous laminar flow | Continuous laminar flow |
| 4 | Continuous laminar flow | Continuous turbulent flow |

In Case 1, it can be assumed that if the turbulent flow pattern is maintained in the commercial facility, then both laboratory and commercial facilities act as true plug flow reactors. Thus equivalent results should be obtained if the reaction conditions (residence time, temperature, etc.)
are maintained constant between the laboratory and commercial facilities.

Case 2 will occur in only very limited conditions. For a low-viscosity fluid, increasing the diameter of the tubular reactor while the fluid velocity is maintained constant will almost always increase the Reynolds number, ensuring that the flow regime stays in the turbulent zone. An exception to this is a reaction that produces a viscous product in the melt phase (low-density polyethylene in a tubular reactor, for example). The commercial design may require operation at higher conversion and, hence, higher viscosity than in the laboratory reactor. The higher viscosity may result in a Reynolds number low enough that laminar flow develops at some point in the reactor. Another possible exception is the case wherein the tubular reactor diameter is decreased to achieve a higher A/V ratio. This reduction in diameter at constant velocity will cause a reduction in the Reynolds number, which may cause the flow regime to enter the laminar region.

Case 3 represents the most frequently encountered alternative. When considering scale-up of laboratory reactors that are operating in the laminar flow regime, the key question is the RTD of the reacting material in the smaller laboratory unit (bench scale or pilot plant) compared to the larger commercial unit. A typical flow pattern for a reactor in laminar flow is shown in Fig. 2.6. For laminar flow in a tube as shown in Fig. 2.6, an analysis of the fully developed laminar flow can be made. It can be shown that the velocity at any point is as follows:

(2.4)

(2.5)

where
VO = The laminar flow velocity at any point in the tube, ft/s.
R = The radius of the tube, ft.
r = The radial distance from the centerline of the tube to the point in question, ft.
∆P = The pressure drop over the length of the tube, lbs/ft².
l = The length of the tube, ft.
μ = The fluid viscosity, lbs-s/ft².
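The velocity profile described above can be explored numerically. The following is a minimal sketch, assuming that Eq. (2.4) takes the standard Hagen–Poiseuille form v = ∆P (R² − r²) / (4 μ l) for fully developed laminar flow; the function names and the numerical inputs are hypothetical and chosen only for illustration. It also evaluates the laminar flow RTD that follows from a parabolic profile, F = 1 − (θO/θX)², which is a textbook result and not necessarily the exact form used in this chapter.

```python
import math


def laminar_velocity(r, R, dP, length, mu):
    """Velocity (ft/s) at radial distance r in fully developed laminar flow.

    Assumed form (standard Hagen-Poiseuille, not taken from the text):
        v = dP * (R**2 - r**2) / (4 * mu * length)
    Units: dP in lbf/ft^2, mu in lbf-s/ft^2, R, r and length in ft.
    """
    return dP * (R**2 - r**2) / (4.0 * mu * length)


def mean_velocity(R, dP, length, mu, n=10_000):
    """Flow-weighted average velocity from midpoint integration over annular rings."""
    dr = R / n
    flow = 0.0
    for i in range(n):
        r = (i + 0.5) * dr
        flow += laminar_velocity(r, R, dP, length, mu) * 2.0 * math.pi * r * dr
    return flow / (math.pi * R**2)


def fraction_younger_than(theta_x, theta_axis):
    """Fraction of the flow whose residence time is less than theta_x.

    Follows from the parabolic profile: F = 1 - (theta_axis / theta_x)**2.
    Nothing leaves faster than the fluid travelling along the centerline.
    """
    if theta_x <= theta_axis:
        return 0.0
    return 1.0 - (theta_axis / theta_x) ** 2


# Hypothetical inputs: 0.1 ft diameter tube, 100 ft long, viscous fluid (roughly 100 cP).
R, dP, length, mu = 0.05, 200.0, 100.0, 2.0e-3

v_axis = laminar_velocity(0.0, R, dP, length, mu)
v_mean = mean_velocity(R, dP, length, mu)
theta_axis = length / v_axis   # residence time at the centerline, s
theta_avg = length / v_mean    # average residence time, s

print(f"centerline velocity = {v_axis:.3f} ft/s, mean velocity = {v_mean:.3f} ft/s")
print(f"theta_avg / theta_axis = {theta_avg / theta_axis:.3f}")
print(f"fraction leaving before the average residence time = "
      f"{fraction_younger_than(theta_avg, theta_axis):.2f}")
```

Under these assumptions the mean velocity is half the centerline velocity, so fixing the average residence time also fixes the centerline residence time, and with it the whole distribution. That is the basis of the Case 3 argument.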
This description of laminar flow can be used to estimate both the RTD and the average residence time. The basis for estimating these parameters is the velocity at the axis. Eq. (2.4) can be used to estimate that the velocity at the centerline of the tube is as follows:

(2.6)

Manipulation of this equation allows development of the average residence time, average velocity, and RTD as follows:

(2.7)

(2.8)

where
θA = Average residence time.
θO = Residence time at axis.
θX = Residence time at a distance x from centerline.
F = The fraction of fluid that has a residence time less than θX.

When considering scale-up from the smaller reactor to the larger reactor with both in the laminar flow regime, the distribution of residence times will be the same if the two reactors have the same residence time (velocity) at the centerline. When considering Eqs. (2.7) and (2.8), this means that if the average residence time is the same between the small and large reactors, the residence time at the centerline will be the same, and the RTD will be the same. Thus as far as the reaction depends on residence time and/or RTD, we can assume that in this case these variables are identical.

Case 4 is a somewhat hypothetical case and is unlikely to occur. However, if it does occur, the larger reactor operating in the turbulent flow regime might have a higher conversion than the smaller reactor operating in the laminar flow regime. This is because of the uniformity of the residence time in the turbulent flow larger reactor compared to the parabolic velocity profile in the smaller reactor, as shown in Fig. 2.6.

Reactor Scale-up Example

Problem 2-1

A laboratory test in a batch reactor similar to that shown in Fig. 2.2 showed that a new catalyst would have a catalyst efficiency (lbs of polypropylene/lb of catalyst) of 2500 with a residence time of 30 min at 140°F. The polymerization was conducted in pure propylene.
Because of the morphology of the catalyst, the shell of polymer that builds up on the catalyst creates a barrier to propylene flow and causes a reduction in instantaneous catalyst efficiency. This causes the catalyst efficiency to be proportional to residence time to the 0.75 power. In the commercial plant, the concentration of propylene in the reactor will be less than in the batch reactor. This is because propylene is difficult to separate from propane, and the conversion in the reactor is not 100%. Thus propylene containing propane must be recycled. Management desires to know what catalyst efficiency can be expected in the commercial plant at the following reactor conditions:

Reactor Temperature = 140°F
Reactor Residence Time (θ) = 60 min or 1 h
Concentration of Propylene in the Reactor = 90 wt%

Using Eq. (2.3), the kinetic constant can be calculated from the given batch data as follows:

(2.9)

(2.10)

The commercial reactor is a CSTR, which will have a broad RTD, whereas the batch reactor had a narrow RTD. In addition, the concentration of propylene in the commercial reactor is lower than in the batch reactor. Thus calculations are required to accurately predict the catalyst efficiency in the commercial reactor. These calculations must take into account the following:

• The commercial reactor is a CSTR with a broad RTD. As such, calculations must include the “bypassing” effect of the catalyst particles.
• The concentration in the reactor is only 90% propylene due to the buildup of propane in the recycle system.
• The average residence time is 1 h instead of 30 min.

With the exception of the correction for the CSTR, all other data are available or can be easily calculated as follows:

(2.11)

(2.12)

(2.13)

The only unknown at this point is “f,” which represents the factor associated with the broad RTD of the catalyst particles.
The bypassing effect associated with the broad RTD of the catalyst will cause “f” to be less than 1, resulting in a lower catalyst efficiency than would be expected based on the batch reactor results. This factor (f) can be calculated as follows:

Step 1: Break the average residence time into several small time increments, as described in Eq. (2.14).

(2.14)

where
n = The number of increments.
θ = The average residence time.

Because the calculations will be performed using computers and modern spreadsheets, n should be at least 200.

Step 2: Calculate the fraction of the catalyst particles that are in the reactor in each time increment as follows:

(2.15)

(2.16)

where
Wi = Fraction of catalyst that has a residence time less than Ti/θ.
Ti = The residence time of the catalyst increment.

Step 3: Calculate the amount of catalyst in the time increment by substitution in Eq. (2.15) as follows:

(2.17)

Step 4: Calculate the polymer produced in each increment using Eq. (2.18).

(2.18)

where
∆P = The polymer produced in the time between T1 and T2.

In Eq. (2.18), the units are actually lbs of polymer/lb of total catalyst. Eq. (2.18) can be numerically integrated using a spreadsheet to give a predicted catalyst efficiency in the commercial plant of 3400 lbs of polymer/lb of catalyst. If the concentration of propylene and the impact of a CSTR versus a batch reactor had been ignored, the calculated catalyst efficiency would have been 4200 lbs of polymer/lb of catalyst. If a plant test had been done with management expecting an efficiency of 4200 and the actual efficiency was 17% below this, the plant test might have been considered an unsuccessful scale-up, and work might have been initiated to determine the cause of the failure to achieve the anticipated efficiency. In fact, the problem was that the calculation of the anticipated catalyst efficiency ignored the differences between the batch and continuous reactors.
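Steps 1 to 4 can be sketched in a few lines of code. The sketch below rests on several assumptions that are not confirmed by this section: that Eq. (2.3) has the form CE = K × C × θ^0.75 × f, with C the propylene weight fraction and θ in hours; that the catalyst follows the ideal CSTR distribution W = 1 − exp(−T/θ); and that the summation is carried out to 20 average residence times. All function and variable names are hypothetical.

```python
import math


def cstr_rtd_factor(exponent=0.75, n=200, span=20.0):
    """RTD factor f for an ideal CSTR relative to a single residence time.

    The average residence time (normalized to 1.0) is split into n increments.
    The sum runs out to `span` average residence times so that the catalyst
    left in the truncated tail is negligible.
    """
    dt = 1.0 / n
    total = 0.0
    for i in range(int(span * n)):
        t1, t2 = i * dt, (i + 1) * dt
        # Fraction of catalyst whose residence time lies between t1 and t2,
        # from the assumed ideal-CSTR distribution W = 1 - exp(-T/theta).
        weight = math.exp(-t1) - math.exp(-t2)
        t_mid = 0.5 * (t1 + t2)
        # Relative polymer made by this catalyst fraction (rate ~ T**0.75).
        total += weight * t_mid**exponent
    return total


# Batch data from the problem statement.
ce_batch = 2500.0      # lb polymer / lb catalyst
theta_batch = 0.5      # h (30 min)
conc_batch = 1.0       # pure propylene, weight fraction

# Commercial CSTR conditions from the problem statement.
theta_cstr = 1.0       # h
conc_cstr = 0.90       # propylene weight fraction

# Kinetic constant from the assumed form of Eq. (2.3), with f = 1 for the batch reactor.
k = ce_batch / (conc_batch * theta_batch**0.75)

f = cstr_rtd_factor()
ce_uncorrected = k * conc_batch * theta_cstr**0.75      # ignores concentration and RTD
ce_commercial = k * conc_cstr * theta_cstr**0.75 * f    # includes both corrections

print(f"K = {k:.0f}, f = {f:.3f}")
print(f"uncorrected CE = {ce_uncorrected:.0f}, corrected CE = {ce_commercial:.0f}")
```

With these assumptions the uncorrected estimate reproduces the 4200 figure quoted above, and the corrected estimate comes out near 3,500, close to but not identical with the 3400 reported from the spreadsheet. The gap presumably reflects details of the original integration that are not shown here.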
Summary of Considerations for Reactor Scale-up

Reactor scale-up touches on all phases of chemical engineering. The following list is provided as a reminder of the items discussed in the consideration of reactor scale-up:

• This book only covers scale-up of single first-order reactions.
• It is important to know the chemistry and kinetics of the reaction.
• It is important to be able to translate kinetics from a batch reactor to a continuous reactor. This will require knowledge regarding modeling reactor RTD and flow regime.
• Shortcut calculation procedures can lead to unreasonable management expectations.
• Heat transfer is almost always a consideration when a reactor is scaled up from the laboratory batch reactor to a commercial size reactor. This subject is covered in more detail in Chapter 4.
• Reactant and catalyst injection nozzles and nozzle location require careful attention.
• Supplier’s assistance to obtain the desirable mixing pattern is important for the design of a CSTR. However, the vendor will require input from the designer and/or SME (Subject Matter Expert).

Supplier’s Scale-ups

Most engineering or operating companies rely almost completely on the supplier’s scale-up know-how regarding several equipment items. The supplier’s know-how is almost always very empirical and is based on experience with other projects. These other projects may or may not be adequate prototypes for the project under consideration. The types of equipment that fall into this category, besides agitators/mixers, are drying equipment and solid–liquid separation equipment. The next two sections deal with an approach that is somewhat more theoretical than that used by suppliers. They are included to give the reader some knowledge of the theoretical approach to scaling up this type of equipment.
Volatile Removal Scale-up

Removal of volatiles from a solid can be conducted in fluid beds, plug flow purge bins, or various kinds of mechanically assisted dryers. The term volatile means material that can be removed from a solid by heating, application of a vacuum, and/or passing a non-condensing gas through a bed of solids and/or over the surface of the solid. The volatile can be either a gas or a liquid at normal atmospheric conditions. Eq. (2.1), described earlier and shown below, can be used to scale up various kinds of volatile removal equipment.

$$R = C_1 \, C_2 \, DF \tag{2.1}$$

When this concept is used for removal of volatiles from a solid, the following definitions can be used:

$$DF = X_O - X_E \tag{2.19}$$

where, for the case of removal of volatiles,
R = dX/dθ = The rate of volatiles removed from the solid, %/min.
C1 = A constant that is related to the type of equipment and mass transfer coefficient. As discussed later, this mass transfer coefficient is a semi-empirical value that can be determined by laboratory experiments.
C2 = A constant that is necessary to convert the units to %/min.
XO = The actual concentration of the volatile at any point in the equipment, %.
XE = The equilibrium concentration of the volatile in the solid at the same point in the equipment, %.

The equilibrium concentration in the solid is based on the concentration of the volatile in the gas at the point of interest. As an aid in calculation, the concentrations are usually expressed on a dry basis, that is, lbs of volatiles/100 lbs of dry solids. Eqs. (2.1) and (2.19) can be combined and rearranged to give Eq. (2.20) as follows:

$$\frac{dX}{d\theta} = C_1 \, C_2 \, (X_O - X_E) \tag{2.20}$$

Eq. (2.20) is normally simplified by combining constants as shown in Eq. (2.21), where K represents the combined constants.

$$\frac{dX}{d\theta} = K \, (X_O - X_E) \tag{2.21}$$

For the purpose of considering scale-up of this type of equipment, the scenario of removing volatiles from an organic polymer will be used. The principles apply to any operation in which volatile material is removed from a solid.
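To illustrate how the driving force controls the result, the sketch below integrates the rate expression for the special case of a constant equilibrium concentration. It assumes the combined-constant form dX/dθ = −K (X − XE), written with a minus sign because X here is the volatiles content remaining in the solid. The function names and all numerical values are hypothetical. In a real purge bin XE varies along the bed, so this closed form is only an illustration.

```python
import math


def volatiles_remaining(x0, xe, k, theta):
    """Volatiles content after time theta for constant XE (first-order approach to XE)."""
    return xe + (x0 - xe) * math.exp(-k * theta)


def time_to_reach(x0, x_target, xe, k):
    """Residence time needed to go from x0 to x_target when XE is constant."""
    if x_target <= xe:
        raise ValueError("target is at or below equilibrium and cannot be reached")
    return math.log((x0 - xe) / (x_target - xe)) / k


# Hypothetical values: concentrations in wppm, K in 1/min.
x0, x_target, k = 1000.0, 25.0, 0.15

t_zero_eq = time_to_reach(x0, x_target, xe=0.0, k=k)    # equilibrium ignored
t_with_eq = time_to_reach(x0, x_target, xe=20.0, k=k)   # equilibrium close to the target

print(f"time with XE = 0:  {t_zero_eq:.1f} min")
print(f"time with XE = 20: {t_with_eq:.1f} min")
```

Even a modest equilibrium concentration lengthens the required time noticeably once the target approaches XE, because the driving force (X − XE) shrinks toward zero near the end of the removal.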
Examples of where these principles might be used are as follows:

• Removal of volatiles from waste solids.
• Using superheated steam to remove volatiles from solids or liquids.
• Removal of volatiles in the manufacturing of pharmaceuticals.
• Removal of volatile material to meet flash point specifications prior to shipping.
• Removal of volatile material in an extruder designed for “degassing.”
• Removal of water in the process of drying lumber.

Much of the following discussion centers around a commercial size continuous plug flow purge bin. A purge bin is a stripping vessel in which gas and solids flow countercurrent. However, the principles discussed apply to other types of equipment just as well. The principles also apply to batchwise removal of volatiles. One must simply make sure that the model being developed fits the actual equipment and mode of operation. For example, a purge bin model with countercurrent gas and solid flow would not be applicable to a mechanically assisted dryer that utilizes cocurrent gas and solid flow. However, the countercurrent model will be applicable to a mechanically assisted dryer in which the solid and gas flows are countercurrent, although C1 will likely differ between the purge bin and the mechanically assisted dryer.

As indicated earlier, the important part of this scale-up approach is to utilize equations and values that do not change when the capacity of the equipment is increased or, if they do change, to incorporate the change in a theoretically correct manner. For example, in Eq. (2.20), C1 should not change unless the type of equipment or the type of solid changes. If a Thermal Gravimetric Analyzer (TGA) is used to study removal of volatiles in the laboratory and a purge bin is used in the pilot plant or commercial plant, there will be a large difference in C1. This is due to the fact that the solids in a TGA apparatus consist of a thin bed.
In the purge bin, by contrast, they form a bed with an H/D (height-to-diameter) ratio of 2 to 6. The TGA apparatus approaches single particle stripping kinetics, whereas a purge bin is governed more by bed stripping kinetics. This is shown schematically in Fig. 2.7.

Figure 2.7 Single particle diffusion versus bed diffusion.

When considering the solid by itself, the value of C1 will depend on the porosity and morphology of the solid. This can be illustrated by comparing a polymer granule prior to extrusion to the same polymer after it is extruded into pellets. Before extrusion, the granule has a great number of pores. Each pore has surface area that is accessible to the flowing gas, thus creating a large amount of surface area. After extrusion, the pellets are dense particles whose only accessible area is the exterior surface of the particle. A solid with a lower porosity would be expected to have a lower value of C1. A solid with a low porosity has very little surface area, and the mass transfer coefficient is generally limited by the very slow diffusion through the solid. On the other hand, a solid with a high porosity has a large amount of surface area, as well as a very short diffusion path. Thus it will have a high mass transfer coefficient.

In the unit operation of removing volatiles from solids, the mass transfer is dependent on the rate-limiting step. This can be either the rate of evaporation from the surface and through the gas film or the rate of diffusion through the particle, whichever is slower. The previous discussion has centered on the rate of diffusion or mass transfer through the particle and bed. The removal can also be limited by the evaporation of the volatile from the surface. This rate can be limited by the concentration of the volatile in the gas that flows countercurrent to the solids in a purge bin. For example, if a recirculating gas stream is used, the inlet gas stream is likely to contain volatiles.
The volatiles in the exiting solid cannot be lower than the equilibrium concentration that is based on the concentration of the volatiles in the recirculating incoming gas.

The TGA apparatus or similar equipment will be of value in distinguishing the impact of polymer morphology on C1, because this impact will be observed in single particle diffusion. This impact is shown in Fig. 2.8 for two different polymers in a laboratory TGA apparatus. Polymer A has a surface area (ft²/ft³) twice that of polymer B. In this figure, the gas rate is high enough that the equilibrium content of the volatile in the polymer can be assumed to be zero.

Figure 2.8 Volatiles content versus time.

Even though the TGA apparatus is of value in determining how the morphology of the polymer affects the volatile removal rate in the laboratory, it is of limited value for scaling up to larger equipment. If a TGA apparatus is being used to scale up from a bench-scale experiment to a pilot plant or commercial purge bin, it is unlikely that the C1 value in the TGA and purge bin will be the same even if the morphology of the polymer is the same. This is because, as mentioned earlier and shown in Fig. 2.7, the TGA is an apparatus that allows an approach to single particle diffusion. Most solvent removal applications are bed diffusion limited. However, if one compares a bench-scale purge bin to a larger unit, with both removing volatiles from a polymer with the same morphology, the C1 values will be very close, assuming that comparable gas rates are used. This is shown in Fig. 2.9. In this figure, the y-axis represents the bed mass transfer coefficient. The figure illustrates scaling the bed mass transfer coefficient from a bench-scale unit 6 in. in diameter to a pilot plant unit 24 in. in diameter.

Figure 2.9 Comparison of pilot plant data to bench-scale data for polymer 1.

The gas rate that is used in this correlation is the percentage of minimum fluidization velocity.
It is believed that this provides a value that is representative of the gas–solid contacting and back mixing that occurs in the purge bin.

Obviously, a purge bin is not the only kind of drying equipment in commercial use. There are different types of equipment supplied by different companies. All of the equipment can be scaled up from supplier pilot plant test data to full-size commercial units using the concepts described here. These concepts and the necessary interaction with the supplier are described in more detail in Chapter 7.

The driving force will often change as the scale of production is increased. In addition, the driving force will change as the concentration of the volatiles in the solid changes. However, these changes do not create problems, because they can be easily calculated from the equilibrium and material balance relationships. The relationship between the concentration of volatiles in the gas phase and the equilibrium value of the volatile in the solid (XE) can be determined either experimentally or by the use of theoretical relationships, as described in the following paragraphs. Although Eq. (2.21) can be simplified and integrated directly if XE is assumed to be equal to zero, this is a dangerous assumption and will almost always lead to an inadequate design. It is imperative that equilibrium be considered in designing a volatiles removal system.

There have been several approaches described in the literature relating to the equilibrium of volatiles in the amorphous region of solids. Many of these approaches deal with organic volatiles and polymers. The following paragraphs are based on this type of system. However, the concepts apply to any system for which the required data are available. When considering volatile–polymer systems, the amorphous region in an organic polymer is the region that is not crystalline.
Polymer chemists describe the amorphous region as one wherein the polymer chain has minimal organization compared to the crystalline region, which is highly organized. This organization of the crystalline region limits the amount of volatile (solvent or monomer) that can be dissolved. For the purpose of this discussion, solvents or monomers are assumed to be soluble in the amorphous region, but only very slightly soluble in the crystalline region.

The classic approach to determining the equilibrium concentration of a volatile solvent or monomer in the amorphous region of any polymer is the Flory–Huggins relationship. This is described as follows:

(2.22)

where
P = Partial pressure of the volatile.
VP = Vapor pressure of the volatile at the temperature of the polymer.
V1 = Volume fraction of the volatile in the amorphous region.
V2 = Volume fraction of the polymer in the amorphous region.
M1 = Molar volume of the volatile. This is obtained by dividing the molecular weight by the density.
M2 = Molar volume of the polymer. This is obtained in the same fashion as M1.
U = Polymer–volatile interaction parameter, which is dimensionless.

For the removal of volatiles from polymers (and most solids), several simplifying assumptions can be made.

where
C = A constant to convert wppm on a dry basis to volume fraction.
XEA = The equilibrium concentration in the amorphous region in wppm.

Then with the appropriate substitution and simplification, Eq. (2.22) can be expressed as:

(2.23)

As shown in Eq. (2.23), if the polymer–volatile interaction parameter (U) is known, the equilibrium concentration in the amorphous region can be determined for any temperature and partial pressure of the volatile. This relationship can be used both for correlating experimental data and for estimating equilibrium relationships when no experimental data exist. If no experimental data are available, Eq.
(2.23) can be utilized if values of the polymer–volatile interaction parameter (U) are known or can be determined. Typical values of the interaction parameter range from 0.35 to 0.55. This parameter should be related to the difference in polymer and volatile solubility parameters. One equation that has been proposed to evaluate the interaction parameter is shown as follows:

(2.24)

where
Z = The lattice constant in the range of 0.25–0.45, which is dimensionless.
V1 = The molar volume of the volatile, cc/gm-mol.
S = The solubility parameter for the polymer and volatile, (cal/cc)^0.5.
R = The gas constant, cal/(gm-mol-K).
T = Absolute temperature, K.

If these equations are valid, literature data on solubility parameters for polymers and volatiles can be used to predict the equilibrium relationships. However, the prediction of equilibrium from the Flory–Huggins relationship is complicated by the fact that these relationships only predict the equilibrium in the amorphous region. Predicting the equilibrium concentration in the total polymer requires a determination of the crystallinity, in addition to the interaction parameter. For some polymers, the approximate crystallinity is known. For polyolefins, it is in the range of 70%–90%. Therefore in the case of a polyolefin with a crystallinity of 85%, the approximate equilibrium concentration in the total polymer is as follows:

(2.25)

where
C = The % crystallinity of the polymer.

While there is work in progress at several industrial and academic institutions to refine this approach, this area is not addressed further. This limitation of the Flory–Huggins relationship can be overcome by obtaining experimental data. Eqs. (2.23) and (2.25) can be transformed (by algebra and by substituting the equilibrium concentration in the total polymer (XE) for that in the amorphous region (XEA)) to Eq. (2.26) shown below.

(2.26)

The “A” and “B” constants can be determined from two to three experimental points at a single temperature.
These experimental runs are generally conducted in equipment in which an inert gas (nitrogen) containing the volatile is circulated through a bed of the solid. After sufficient time to reach equilibrium between the solid and the gas, the composition of each phase is measured. A comparison of Eqs. (2.23) and (2.26) would indicate that in theory “B” should equal 1. However, this is rarely the case.

In summary, the equilibrium of a volatile (solvent or monomer) in a polymer can be determined in the following three ways:

• Purely experimental, wherein two or three data points are fitted to Eq. (2.26).
• Purely theoretical, using the Flory–Huggins relationship and knowing that it only predicts the solubility in the amorphous region. This might be of most value for predicting the equilibrium concentration of a solvent in a synthetic elastomer or other highly amorphous material.
• Semi-theoretical, wherein the equilibrium concentration in the amorphous region is predicted using the Flory–Huggins relationship and then reduced using a factor based on literature or experimental values of crystallinity to obtain the equilibrium value of the volatile in the total polymer.

Which of the three methods should be used depends on the status of the project. In the early stage of project development, the semi-theoretical method will probably be sufficient to develop study designs. For detailed design, it is probably of value to use both the semi-theoretical and the experimental methods. To enhance the understanding of the approach to the scale-up of a volatiles removal operation, the following example problem is provided.

Example Problem 2-2

Problem Statement

Estimate the residence time required in a purge bin to remove hexane from 20,000 pph of polypropylene. The concentration of hexane is initially 1000 ppm by weight, and the hexane must be removed to a level of 25 ppm by weight.
Pure nitrogen is to be used as the stripping gas flowing in a countercurrent direction to the solids flow. The rate of pure nitrogen entering the purge bin at the bottom is 250 pph; the purge bin is at a temperature of 200°F, and the pressure is 17.7 psia.

Given: Three data points from a laboratory experiment to determine the equilibrium are as follows:

| Concentration in Polymer, wppm Dry Basis | Concentration in Gas Phase, Mol Fraction |
| --- | --- |
| 285 | 0.015 |
| 57 | 0.005 |
| 14.8 | 0.002 |

Step 1: To obtain an equilibrium relationship between the hexane in the polymer and the hexane in the vapor phase, the data given above can be curve fit, and the following relationship developed: (2.27) (2.28)

where
VP = The vapor pressure of the volatile at the temperature of the purge bin, psia.
π = The total pressure on the purge bin, psia.
Y = The mol fraction of hexane in the gas at a given point.
XE = The equilibrium concentration of hexane in the polymer, wppm on a dry basis.

Step 2: Calculate the outlet gas composition from a material balance as shown in Fig. 2.10.

Figure 2.10 Material balance concept.

Step 3: Transform Eq. (2.21) into Eq. (2.29). (2.29)

where
K = 0.15 1/min. The purge bin constant for removing hexane from polypropylene as demonstrated in the bench-scale purge bin.
θ = The required residence time in the purge bin, minutes.

Step 4: Split the reduction in volatiles to be achieved into 50 to 200 segments (dX), and starting from the gas outlet point, perform a material and kinetic balance around each segment as shown in Fig. 2.11 and Step 5. The accuracy of the calculation is directly related to the number of segments used. With computer technology, it is easy to utilize a large number of segments.

Figure 2.11 Calculation of Kθ.

Step 5: Considering Fig. 2.11, the value of X can be taken as the inlet concentration going into the segment. This will be the outlet value of X from the previous segment. XE will be the concentration in equilibrium with the outlet gas from the segment.
Using these values and the value of dX of the segment, the value of Kθ can be calculated for this segment.

Step 6: Calculate the value of Kθ for each segment.

Step 7: Continue this process until the value of (X−dX) for a segment is equal to the outlet volatiles specification.

Step 8: Sum up the individual Kθs. Then divide this summation by the equipment-related value of “K” to obtain the residence time required to accomplish the desired volatiles removal. The value of “K” can be determined from similar pieces of equipment or from tests using a purge bin in a pilot plant or in a bench-scale experiment. In the example given here, the residence time required in the purge bin is 34 min if the “K” is 0.15 1/min.

The residence time required as calculated in Steps 1–8 along with the desired H/D ratio, the bulk density of the solid, and the appropriate solid–vapor disengaging dimensions will determine the size of the purge bin. Once the diameter of the purge bin is fixed, the gas rate can be confirmed by comparing it to the desirable levels of fluidization velocities. If it is not in the desirable range, the gas rate and/or H/D ratio should be adjusted, and the calculations repeated.

The reverse of this process can be used for laboratory or pilot plant results to determine the value of “K.” If the actual residence time in a pilot plant or bench-scale purge bin used for experimental work is known, this work can be used to determine the value of “K.” The summation of Kθ from the calculations described in Steps 1–8 can be divided by θ to arrive at an experimentally determined value of “K.”

While the discussion here considers the detailed calculations for a purge bin, it was also indicated that the same techniques could be used for mechanically aided dryers once it is determined if the gas flow is cocurrent or countercurrent. These techniques can then be used to determine the “K” value from pilot plant data and scaled up to a commercial unit.
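The segment-by-segment procedure of Steps 1–8 can be sketched in Python. This is only a minimal sketch under stated assumptions, not the author's spreadsheet. The equations cited in the example are not reproduced in this text, so the sketch assumes that the three equilibrium points follow a power law XE = a·Y^b (fitted in log–log space), that the drying rate is first order in the driving force, dX/dθ = −K(X − XE), and that the molecular weights of hexane and nitrogen are 86.18 and 28.01. All function and variable names are illustrative.

```python
import math

# Equilibrium data from the problem statement:
# (hexane in polymer, wppm dry basis; hexane in gas, mol fraction)
EQUILIBRIUM_DATA = [(285.0, 0.015), (57.0, 0.005), (14.8, 0.002)]


def fit_power_law(data):
    """Fit XE = a * Y**b by least squares on ln(XE) versus ln(Y).

    The power-law form is an ASSUMPTION made for this sketch.
    """
    ln_y = [math.log(y) for _, y in data]
    ln_x = [math.log(x) for x, _ in data]
    n = len(data)
    mean_y = sum(ln_y) / n
    mean_x = sum(ln_x) / n
    b = (sum((u - mean_y) * (v - mean_x) for u, v in zip(ln_y, ln_x))
         / sum((u - mean_y) ** 2 for u in ln_y))
    a = math.exp(mean_x - b * mean_y)
    return a, b


def purge_bin_residence_time(x_in=1000.0, x_out=25.0, polymer_pph=20000.0,
                             n2_pph=250.0, k_per_min=0.15, segments=200,
                             mw_volatile=86.18, mw_n2=28.01):
    """Sum K*theta over equal dX segments, starting at the gas outlet."""
    a, b = fit_power_law(EQUILIBRIUM_DATA)
    n2_mol = n2_pph / mw_n2                 # lb-mol/h of nitrogen
    dx = (x_in - x_out) / segments          # wppm removed in each segment
    k_theta_total = 0.0
    x = x_in                                # solids concentration entering the segment
    for _ in range(segments):
        # Gas leaving this segment carries all volatile stripped below it.
        volatile_mol = (x - x_out) * 1e-6 * polymer_pph / mw_volatile
        y = volatile_mol / (n2_mol + volatile_mol)
        xe = a * y ** b                     # equilibrium with the segment outlet gas
        # ASSUMED first-order rate: dX/dtheta = -K * (X - XE)
        k_theta_total += dx / (x - xe)
        x -= dx
    return k_theta_total / k_per_min, k_theta_total


theta_min, k_theta = purge_bin_residence_time()
print(f"Sum of K*theta = {k_theta:.2f}, residence time = {theta_min:.1f} min")
```

Under these assumptions the sketch should give a residence time of roughly 34 min, in line with the value quoted in the example. Increasing `segments` refines the sum, which mirrors the remark in Step 4 about accuracy and the number of segments.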
A fluid bed dryer can be approached using similar techniques. A fluid bed dryer consists of a vessel in which the solid particles are suspended in the circulating gas as described in the discussion of fluid bed reactors. Because of the high degree of agitation and back mixing in a fluid bed dryer, it is considered to be a perfectly mixed vessel and simulated as a CSTR. In a fluid bed dryer, the circulating gas provides energy to fluidize the bed, a source of heat, and an inert gas that provides the driving force to remove volatiles from the solid. The fluid bed dryer can be modeled using Eq. (2.21) in a technique similar to that described for a purge bin. However, because the fluid bed is considered to be a perfectly mixed vessel, the outlet concentration of the volatile in the outlet gas determines the equilibrium concentration in the outlet solids. A typical fluid bed dryer, in which the equilibrium concentration of the volatile in the polymer (XE) is greater than zero, often consists of multizones as shown in Fig. 2.12. This provides a method for a fluid bed to approach a purge bin in effective residence time. Figure 2.12 Segmented fluid bed dryer. In the case of a multicompartment fluid bed dryer, the equilibrium concentration of the volatile in the solid leaving each zone can be approximated using one of the three approaches to equilibrium discussed earlier and the concentration of the volatile in the total gas leaving the dryer. The rate of drying in each zone is described by Eq. (2.21) described earlier. Because each zone in the fluid bed dryer is simulated as a perfectly mixed vessel, the actual concentration of the volatile in the solid (X) is the concentration leaving each zone. To estimate the residence time required in each zone, the steps are as follows: Step 1: Divide the inlet concentration of the volatiles minus the outlet concentration by the number of physical zones. This will be dX for each zone. 
Step 2: Estimate the required fluidizing gas rate based on heat/material balances and the required fluidizing velocity.

Step 3: Knowing the concentration of volatile in the inlet gas and the volatiles removed from the solid, estimate the concentration of the volatiles in the total gas leaving the dryer.

Step 4: Calculate the XE based on the concentration of volatiles in the total gas leaving the dryer. This XE will be the same for each zone.

Step 5: Manipulate Eq. (2.21) to give Eq. (2.30) as follows: (2.30)

Step 6: Repeat this for each physical zone and sum these to give the total residence time.

The residence time calculated in Steps 1 to 6, along with the desired bed height, bulk density, and the appropriate solid–vapor disengaging dimensions, will determine the size of the fluid bed. Depending on the stage of the project, it may be desirable to optimize the dryer residence time by manipulating the amount of drying done in each zone. For example, doing more drying in the initial zone and less in subsequent zones will likely reduce the dryer size. However, this consideration may not be necessary at an early stage of project development.

Summary of Key Points for Scale-up of Volatiles Removal Equipment

• The equilibrium between the solid and the volatile must always be considered.
• A scale-up relationship from bench-scale or pilot plant facilities to larger equipment consists of two key items. There should be an equilibrium relationship between the vapor and solid, which can be developed by either experimental data or the Flory–Huggins theory. Then a value of a mass transfer coefficient that can remain constant between the bench-scale or pilot plant facilities and the commercial operation should be developed. Careful consideration should be given before assuming that the mass transfer coefficient will be constant.
• A spreadsheet that allows integration of material balance, mass transfer, and equilibrium relationships should be utilized. The accuracy of this spreadsheet will be enhanced as the number of calculation stages is increased.

The discussion has centered around using the modified Eq. (2.1) in an application to remove volatiles from a polymer (polypropylene) using a purge bin. However, the same techniques can be utilized for the following:
• Removing volatiles from any solid.
• Mechanically aided dryers and fluid bed dryers or other types of dryers.

Other Scale-ups Using the Same Techniques

Although the above technique was developed for removing volatiles from solids, it can be slightly modified and used for other scale-up approaches. For example, an analysis and scale-up of a crystallization process can be done using Eq. (2.1). The definitions given at the start of this chapter are valid for this equation when used to analyze a crystallization operation. The driving force (DF) is the same. That is, it is given by: (2.31)

where
XO = The actual concentration of the crystallizing solid in the liquid, %.
XE = The equilibrium concentration of the solid in the liquid at the temperature of the liquid, %.

An analysis similar to that done for either the purge bin or the fluid bed dryer can then be performed.

Solid–Liquid Separation

Although not every chemical engineering process utilizes solid–liquid separation, many of them do. Examples where solid–liquid separators are used include polymer production, waste treatment facilities, water purification, lubrication oil production, production of oil from oil sands, and removal of color bodies. While there are many different kinds of solid–liquid separation equipment, a centrifuge has been used here to illustrate how Eq. (2.1) can be used in scaling up solid–liquid separation equipment. When considering this equation as it relates to a centrifuge, it should be realized that the detailed design of the centrifuge may be much more complicated than shown by this analysis.
The detailed analysis can best be performed by the centrifuge supplier and its engineering staff. They will use proprietary correlations that are likely not available in the open literature. However, it will be helpful for the engineers from the Engineering and Construction and/or Operating Company to understand some of the basic concepts of centrifuge scale-up. The concept of working with the supplier’s engineers is covered in Chapter 7.

Centrifuges are basically settling devices that can be analyzed by Stokes Law as follows: (2.32)

where
VS = settling rate under the force of gravity, ft/s.
d = particle diameter, ft.
g = gravitational constant, 32.2 ft/s².
ρ = density of solid (S) and liquid (L), lbs/ft³.
μ = viscosity of fluid, lbs/ft-s.

As indicated in the definition of terms, the settling rate given by Eq. (2.32), which can be calculated if the physical properties are known, is based on the force of gravity alone. A centrifuge operates at a high rotational speed, which creates a centrifugal force that serves as an additional gravitational force, thus increasing the settling rate. The enhanced gravitational constant is often referred to as a “G force.” The “G force” is calculated by dividing the centrifugal acceleration plus the gravitational constant (32.2 ft/s²) by the gravitational constant. For example, a “G force” of 10 will increase the effective gravitational constant and the settling velocity by a factor of 10, with all else being constant.

When scaling up from a bench-scale or pilot plant size centrifuge, the diameter of the centrifuge bowl is increased to provide more settling capacity. As the diameter of the centrifuge is increased, the distance that the solids must settle is also increased. The rotational speed of the centrifuge is increased to compensate for this. This can be expressed mathematically as shown in Eqs. (2.33)–(2.35). (2.33) (2.34) (2.35)

where
θA = Settling time available, minutes.
θR = Settling time required, minutes.
V = The volume of liquid in the centrifuge, ft³. Q = The volumetric flow leaving the centrifuge, ft³/min. r = Radius of the centrifuge bowl, ft. VS = Settling velocity, ft/min. The enhanced gravitational constant (G-force) can be expressed mathematically if the rotational speed and radius of the centrifuge are known. Eq. (2.36) shows that the settling rate based on Stokes Law is proportional to rω². (2.36) (2.37) where r = The radius of the bowl of the centrifuge, feet. ω = The angular velocity, radians/s. RPM = The angular velocity in revolutions/min While the “G” force should include the gravitational force, and be represented by (r*ω²+g), this is often simplified by leaving out the “g” term, because this is a very small part of the total settling force. Stokes Law gives settling velocities accurately if the Reynolds Number is such that the settling is in laminar flow. This is likely the case with small particles and high viscosity liquids. This scenario is often the case when a centrifuge is used to obtain adequate solid–liquid separation. Other correlations are required if the settling rate is such that the flow is in the intermediate or turbulent region. However, Stokes Law is generally considered valid, because the application of centrifuges is normally for small particles and/or high viscosity liquids. The enhanced settling factor (rω²) provides a simplified technique to scale-up pilot plant data to a commercial operation. However, the scale-up requires some equivalent criteria such as similar particle diameter, similar particle morphology, similar physical properties of liquid, and similar centrifuge length-to-radius ratio. In addition, the maximum rotational velocity and maximum commercial centrifuge diameter must be known. As indicated earlier, the supplier’s engineering staff will be heavily involved in this scale-up. For example, consider the following scale-up. 
Example Problem 2-3

Problem Statement

Determine the maximum settling capacity and, hence, the number of commercial size solid bowl centrifuges based on pilot plant data of a similar model.

Given

Pilot Plant Data
• Rate of Liquid (Centrate) = 50 ft³/h at full capacity, as determined by the maximum rate at which no significant solids are present in the centrate.
• Centrifuge diameter = 1 ft
• Liquid volume as % of calculated centrifuge volume = 80%. The shaft and internals are assumed to be 20% of the actual volume.
• Rotational Velocity = 500 RPM
• Length to diameter ratio = 3

Required Commercial Operating Needs
• Rate of Liquid (Centrate) = 100,000 ft³/h
• Maximum commercial centrifuge diameter = 4 ft
• Liquid volume as % of calculated volume = 80%. The shaft and internals are assumed to be 20%.
• Length to diameter ratio = 3

Problem Approach

Calculate the rotational velocity required to provide the necessary settling velocity at the maximum centrifuge diameter of 4 ft.

Pilot Plant Calculations and Comments

(2.38) (2.39)

In the pilot plant, the solids must settle from the centerline of the bowl (feed injection point) to the wall in less than 2.3 min as defined in Eq. (2.38). This equation represents the settling time (residence time) available in the pilot plant centrifuge. The required settling time can be calculated using Stokes Law (assuming that the settling flow is in the laminar region) and the centrifugal force created by the rotational speed of the centrifuge. The centrifugal force on the particle is also a function of radial position. It increases from 0 at the centerline of the bowl (radius in Eq. (2.36) = 0) to a maximum at the wall, where the radius equals the bowl radius. This factor is taken into account by using the average centrifugal force. These relationships were combined in Eq. (2.36) described earlier. Eq. (2.36) can be split into the enhanced settling velocity factor (r*ω²/2) and Stokes Law represented by “K” in Eq.
(2.41) as follows: (2.40) (2.41) Because for the pilot plant, the centrifuge capacity was determined as the rate at which significant solids were just beginning to be entrained with the centrate, we can say that at this point in the pilot plant, the required residence time was equal to the available residence time. The K value can be calculated as shown in the following steps: Step 1: Calculate the required settling velocity (VR) in the pilot plant. This will be the available settling distance divided by the available time to settle θA. (2.42) Eq. (2.42) represents the required settling rate based on residence time and radius. Step 2: Calculate the “K” from the pilot plant using Eq. (2.40) and by setting the required settling velocity (VR) equal to the available settling velocity (VA) as follows: (2.43) (2.44) The “K” represents the scale-up factor (C1), which should not change unless the properties of the solid or liquid change. It is recognized that as shown in Eq. (2.41), “K” could be calculated directly from physical properties. However, the particle diameter that should be used is often questionable for the following reasons: • The diameter may be difficult to measure. • The particles may have a wide particle size distribution and/or form clusters. • The particles may not be solid uniform spheres. They may have a large amount of porosity and/or have a surface morphology that makes the particles very “fuzzy.” This will change the settling rate by changing the particle density. Commercial Calculations In the commercial plant, because the settling is scaled up from the pilot plant or bench scale, and if there is no change in morphology or physical properties, the “K” value will not change between the pilot plant and the commercial plant. The physical meaning of the “K” value is defined by Eq. (2.41). As shown in this equation, it is a combination of particle size, difference in solid and liquid densities, and viscosity of the liquid. 
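The pilot-plant determination of “K” described above, together with the commercial calculation described next, can be sketched in Python. This is a hedged sketch, not the author's worked solution, because Eqs. (2.38)–(2.54) are not reproduced in this text. It assumes that the available settling time is the liquid volume divided by the centrate rate, that the liquid volume is 80% of the cylinder volume with L/D = 3, that the settling distance is the bowl radius, that the settling velocity is K·(rω²/2), and that “K” is reduced by 15% for conservatism. All function names are illustrative.

```python
import math


def liquid_volume_ft3(diameter_ft, l_over_d=3.0, liquid_fraction=0.80):
    """Liquid holdup: assumed fraction of the cylindrical bowl volume."""
    length_ft = l_over_d * diameter_ft
    return liquid_fraction * math.pi / 4.0 * diameter_ft ** 2 * length_ft


def omega_rad_per_s(rpm):
    """Convert revolutions per minute to radians per second."""
    return 2.0 * math.pi * rpm / 60.0


def available_time_min(q_ft3_per_h, diameter_ft):
    """Settling (residence) time available, minutes."""
    return liquid_volume_ft3(diameter_ft) / (q_ft3_per_h / 60.0)


def pilot_k(q_ft3_per_h, diameter_ft, rpm):
    """Back out K from pilot data, assuming required time = available time."""
    radius = diameter_ft / 2.0
    v_required = radius / available_time_min(q_ft3_per_h, diameter_ft)  # ft/min
    return v_required / (radius * omega_rad_per_s(rpm) ** 2 / 2.0)


def required_rpm(k, q_ft3_per_h, diameter_ft):
    """Bowl speed needed to settle the solids at the requested centrate rate."""
    radius = diameter_ft / 2.0
    v_required = radius / available_time_min(q_ft3_per_h, diameter_ft)
    omega = math.sqrt(2.0 * v_required / (k * radius))
    return omega * 60.0 / (2.0 * math.pi)


def max_throughput_ft3_per_h(k, diameter_ft, rpm):
    """Largest centrate rate one machine can handle at a fixed bowl speed."""
    radius = diameter_ft / 2.0
    v_settle = k * radius * omega_rad_per_s(rpm) ** 2 / 2.0  # ft/min
    time_required = radius / v_settle                         # min
    return liquid_volume_ft3(diameter_ft) / time_required * 60.0


k_pilot = pilot_k(q_ft3_per_h=50.0, diameter_ft=1.0, rpm=500.0)
k_design = 0.85 * k_pilot   # ASSUMED 15% conservatism
rpm_needed = required_rpm(k_design, 100_000.0, 4.0)
capacity = max_throughput_ft3_per_h(k_design, 4.0, 2000.0)
machines = math.ceil(100_000.0 / capacity)
print(f"pilot settling time = {available_time_min(50.0, 1.0):.2f} min")
print(f"speed for one 4 ft machine = {rpm_needed:.0f} rpm")
print(f"machines needed at 2000 rpm = {machines}")
```

With these assumptions the pilot-plant settling time comes out at about 2.3 min, and a bowl speed limited to 2000 rpm leads to three 4 ft machines, which agrees with the example. Without the 15% reduction in “K” the same sketch would give a smaller count, so the conservatism factor is worth reviewing with the supplier.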
In the commercial plant, the required rotational velocity of the bowl can be calculated using the value of “K,” the bowl diameter, the length of the bowl, and the centrate rate. With the centrifuge diameter set at 4 ft (assumed to be the maximum commercially available model), the required angular velocity can be calculated as follows: (2.45) (2.46) (2.47)

Step 4: Knowing the required settling rate, calculate the centrifuge rotational velocity based on “K” calculated in Eq. (2.44). It will probably be of value to introduce some conservatism by reducing the calculated value of “K” by 15% or 20%. (2.48) (2.49) (2.50)

The centrifuge supplier will have information that will help decide the accuracy of this approach and whether the standard models available can perform at this rotational speed. To illustrate this point, assume that the standard centrifuge speed for this size is only 2000 rpm (210 radians/s). The maximum throughput for this centrifuge can then be calculated as follows:

Step 1: Calculate the Centrifuge Settling Rate (VS) (2.51) (2.52) (2.53) (2.54)

Thus 3 centrifuges would be required to handle the design rate of 100,000 ft³/h.

It could be questioned if this simplified approach added value to a project as compared to just sending a centrifuge supplier an RFQ (request for quote). This approach does provide value as a project is developed. Some of the value might occur in the following areas:
• During the study design, this approach will allow the designer to estimate the number of centrifuges that will be required.
• During the front-end design and bid evaluation, this approach will allow confirmation of the proposed bids.
• For new products or new processes, the physical properties of the liquid or solid may change as the process research continues even as the study design or front-end work is evolving. This approach provides a means to test the changes against the proposed design.
• This approach may also be of value during the start-up phase or problem-solving phase to allow estimation of the impact of changes in process conditions or product attributes on centrifuge performance.

The chapter provides know-how on scaling up reactors, drying equipment, and solid–liquid separating devices from both bench scale and pilot plant scale to commercial scale. Although the techniques presented may be considered empirical or “black box,” they work. The value is in the theoretically correct pragmatic nature of the approach rather than the scientific beauty. The key to this approach is to find a constant that is scalable, described in this chapter as C1, C2, or K, and then use the commercial design driving force to estimate equipment size for the full-size unit.

3 Developing Commercial Process Flow Sheets

Abstract

When dealing with the conversion of a laboratory bench-scale process to either a commercial or pilot plant design, the concept of stages arises. Each individual unit operation is basically a stage. For example, a process may have a reaction stage, a product purification stage, a recycle stage, and a solid–liquid separation stage. Whether the process is being conducted in a small-scale laboratory vessel, a pilot plant, or a commercial plant, the goal of the stages will be similar. However, the equipment being used in the staging is often very different between the larger and smaller units. In addition, bench-scale work often utilizes the same vessel for various stages. This approach is unlikely in a continuous pilot plant or commercial unit. Thus in designing a scaled-up continuous unit, the focus should be on the goal of the stage rather than the equipment used in the bench scale.

Keywords

commercial design vs bench scale design reactor design (CSTR or PF) product purification development

When dealing with the conversion of a laboratory bench-scale process to either a commercial or pilot plant design, the concept of stages arises.
Each individual unit operation is basically a stage. For example, a process may have a reaction stage, a product purification stage, a recycle stage, and a solid–liquid separation stage. Whether the process is being conducted in a small-scale laboratory vessel, a pilot plant, or a commercial plant, the goal of the stages will be similar. However, the equipment being used in the staging is often very different between the larger and smaller units. In addition, bench-scale work often utilizes the same vessel for various stages. This approach is unlikely in a continuous pilot plant or commercial unit. Thus in designing a scaled-up continuous unit, the focus should be on the goal of the stage rather than the equipment used in the bench scale. When considering bench-scale processes, one often observes that the operations themselves are conducted as batch operations, whether the operation is a reaction or a purification step. If there is more than a single step involved, they are referred to as stages such as first-stage reactor and/or second-stage reactor. A single-stage reactor and a purification stage might be referred to as the reactor stage and the wash stage, respectively. It is likely that these stages would be conducted in the same laboratory vessel. In a continuous commercial plant or a continuous pilot plant, each stage (reactor, purification, etc.) will be conducted in a separate vessel. Probably the greatest separation between a process designer/engineer and a bench chemist occurs in this area. The process engineer thinks in terms of a continuous process, wherein each stage has a unique piece of equipment associated with it. The bench chemist thinks in terms of staged batch processes, with generally all of them done in a single vessel. This isolation in thinking is nowhere clearer than when considering reactors. When considering a development that requires multiple reactions, the process designer thinks in terms of at least one vessel for each reaction. 
The bench chemist thinks in terms of multiple reactions per vessel. A single bench-scale vessel might be used for multiple purposes for several reasons:
• It seems like the right thing to do because there are multiple reactions involved.
• It is convenient. A single reactor can be used for multiple reactions as opposed to multiple reactors that might be required in a continuous process. These multiple reactors might take up excessive bench area in the laboratory and require purchasing of several reactors. The reactors in series will also require a lot of operating attention.
• With batch reactors, there is no concern with the bypassing that will occur in a continuous stirred tank reactor, that is, the initial reaction can be conducted, and the product washed and thereafter made available for a second reaction to be conducted in the same vessel.
• In a multistage continuous reaction process, unreacted reactants will flow into the downstream reactors due to the bypassing effect. This might create undesirable reactions in the second or downstream reaction steps. This potential problem can be eliminated in the bench scale by using the initial reactor as a wash drum to remove unreacted raw materials and then conducting the subsequent reaction in the same vessel.

As the process engineer begins to think in terms of reactor design for a commercial plant, he will realize that the design for a continuous commercial reactor can be based on the plug flow reactor model or the Continuous Stirred Tank Reactor (CSTR) model. Although these descriptions were given earlier in Chapter 1, they are summarized herein as follows:
• The plug flow model or plug flow assumption (PFA): In the perfect embodiment of this model, each fluid particle or solid particle is assumed to have the same residence time. A typical example of this is the tubular reactor that was discussed previously. The tube can be filled with a solid catalyst or be an empty tube. The actual approach to the PFA depends on the type of flow regime in the tube. The flow pattern in a tube packed with catalyst allows the closest approach to the PFA. Turbulent flow in an empty tube also provides a close approach to the PFA. However, laminar flow in an empty tube deviates from the PFA because of the parabolic velocity pattern in the tube. In the laminar flow regime, the velocity is highest at the centerline of the tube and approaches zero at the wall of the tube. With this velocity flow pattern, the reactants will have different residence times. Laminar flow in empty tubes can create a large deviation from the PFA.
• The CSTR: This model assumes that the reactor is perfectly mixed, and therefore the concentration of the reactants is the same at any point in the tank. This means that the reactants in the feed are immediately dispersed, and their concentration becomes that in the tank. Now if the concentration of reactants leaving the reactor is the same in either the PFA model or the CSTR model, the average concentration of reactants at any point in the reactor will be higher in the PFA reactor than in the CSTR model. Another variation of the CSTR is a fluid bed reactor. In a fluid bed reactor, agitation and heat removal are accomplished by the circulation of a gas through the bed. This gas is normally a mixture of an inert gas (nitrogen) and the reactants. More discussion of the fluid bed reactor was provided earlier in Chapter 2.

Either reactor design (PFA or CSTR) may also include the use of a diluent such as hexane or, in the case of a gas fluid bed, nitrogen. The utilization of a diluent has the following advantages:
• It dilutes the incoming reactants, mitigating the chance of reactor hot spots.
• It provides a limited “heat sink,” that is, in the case of a loss of temperature control in the reactor, the diluent provides a means to absorb some of the excess heat.
• If the product “C” or “E” in the reactions described by Eqs.
(3.1) and (3.2) is a solid, it mitigates the risk of the material in the reactor becoming too viscous. • It reduces the reactor vapor pressure in a liquid reactor if either the reactants or product has a high vapor pressure. Now consider the two-step reactor process to make compound “E” as follows: (3.1) (3.2) To the bench-scale chemist, it is obvious and simple how this two-step reaction with intermediate wash steps or distillation should be done. The reaction described by Eq. (3.1) is conducted first. If the exact stoichiometric amounts of “A” and “B” are used, and the reaction goes to completion, then product “C” can either be reacted with “D” in the same vessel or it can be transferred to another vessel prior to conducting the reaction. If the exact stoichiometric amounts of “A” and “B” are not used, or if the reaction to produce “C” does not go to completion, then consideration must be given to the potential harmfulness of either of the excess of “A” or “B” on the reaction described by Eq. (3.2). If the residual of one of these reactants is harmful to the second reaction, then “C” must be separated from the mixture before the second reaction can be conducted. This separation will likely be done by some sort of phase separation or by a distillation process. Whatever the separation process is, it will likely be conducted in the same vessel as the initial reaction. If distillation is used, it will be done in a batch mode. 
It is also likely in this mode that the recovery of “C” and eventually production of “E” will be sacrificed to maintain a high purity of “C.” If the product “C” forms a separate phase, either solid or liquid, the phase consisting of the residual “A” and “B” can be siphoned off leaving “C” in the vessel to participate in the second reaction to produce “E.” Even in the case of phase separation, it is likely that “C” will have to be washed to remove residual “A” and/or “B.” It is also likely then that “E” must be purified to separate it from the unreacted reactants and from the by-products. For the production of “E” in the bench scale, all of these steps may be done in a single vessel and possibly over a period of multiple days. The first step in the development of a commercial plant design is the development of a process flow diagram. At this first step, the process development engineer will begin studying the process as developed in the laboratory. The first conceptual process design will look something similar to Fig. 3.1. Figure 3.1 Original conceptual flow diagram. At some point after looking at Fig. 3.1, the process designer will realize that to design a plant to produce the product “E” in a mode that duplicates the laboratory will be both expensive and cumbersome. While each process is different, some of the questions that should be asked in the process flow sheet development stage of the example are as follows: • How important is the separation of “C” from the residual reactants A and B? Would it be acceptable to allow the residual reactants to react with “D” and then separate the products of these undesirable reactions in the final purification of “E”? • Can the concern associated with an undesirable reaction in the second reactor be eliminated by the addition of an impurity, which inhibits the undesirable reaction, but not the desired reaction? 
• Can the two reactions be conducted in a reactor that approaches plug flow with an intermediate withdrawal point that allows feeding a continuous distillation column with “C” flowing back to the second half of the plug flow reactor? This is shown conceptually in Fig. 3.2.
• The conceptual design shown in Fig. 3.2 is essentially a continuous PFA reactor duplicating the batch bench-scale results. If this appears feasible, does such a reactor exist that would allow duplicating bench-scale conditions?

Figure 3.2 Possible flow sheet.

An examination of Fig. 3.1 relative to Fig. 3.2 illustrates the difference in thinking between the bench chemist and the process designer. It also illustrates the role of the process designer in the development of the commercial process flow diagram.

Another possibility is shown in Fig. 3.3. In this process flow, the reactors consist of well-mixed vessels that will have the attributes of a CSTR, as discussed earlier and in Chapter 2. In Fig. 3.3, multiple reactors are used to approach the results obtained in a laboratory batch reactor. Fig. 3.4 illustrates this impact. In this figure, it is assumed that at time equals zero, a tracer is injected instantaneously into the reaction system. A single reactor and three reactors in series are then compared assuming that they act similar to perfect CSTRs. The total reactor volume is the same in either case, that is, each of the three reactors is assumed to have one-third of the volume of the single reactor. These two systems are compared to a plug flow reactor, which will match the performance of a laboratory batch reactor. When the number of displacements of the reactor is equivalent to one, the tracer leaves the plug flow reactor completely. At the same displacement, about 63% of the tracer has left the single reactor, and 58% has left the series reactors. The figure also shows that the difference is much more pronounced at the early stages. The primary purpose of the additional reactors is to reduce the amount of bypassing.
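The tracer comparison can be checked with a short Python sketch. It assumes ideal, equal-volume CSTRs in series and a pulse of tracer injected at time zero, for which the fraction escaped after θ reactor displacements is 1 − e^(−Nθ) Σ (Nθ)^i / i! summed over i = 0 to N − 1. The function name is illustrative and is not taken from the book.

```python
import math


def fraction_escaped(n_tanks, displacements):
    """Fraction of a pulse tracer that has left n equal ideal CSTRs in series.

    `displacements` is time divided by the mean residence time of the
    whole train, so the total volume is the same for any n_tanks.
    """
    z = n_tanks * displacements
    remaining = math.exp(-z) * sum(z ** i / math.factorial(i)
                                   for i in range(n_tanks))
    return 1.0 - remaining


for theta in (0.2, 0.5, 1.0):
    single = fraction_escaped(1, theta)
    series = fraction_escaped(3, theta)
    print(f"displacements = {theta:.1f}: single CSTR {single:.1%}, "
          f"three in series {series:.1%}")
```

At one displacement the ideal model gives about 63% escaped for the single reactor and about 58% for three reactors in series, close to the values read from Fig. 3.4. At 0.2 displacements the gap is far larger, roughly 18% against 2%, which illustrates why the bypassing difference is most pronounced at early times.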
Figure 3.3 Use of CSTRs to approach batch reactor. Figure 3.4 Percentage of tracer escaped versus reactor displacements. There is another type of staged process that does not require scaling up. Fractionation towers, phase settling drums, and extraction columns, as well as other equipment can be designed from first principles without a detailed analysis of scale-up. The science of designing this type of equipment has advanced to the point that this type of equipment can be designed using well-documented correlations. In summary, this chapter was included in the book to illustrate two principles as follows: • The difference in viewpoints between the process designer/engineer and the bench-scale chemist. The process designer/engineer will look at what the bench chemist is doing in the laboratory and say, “There is no way that I can just design larger equipment to do that on a commercial basis.” The bench chemist will look at what the process designer is proposing (Fig. 3.2) and say, “Why not just do like we do in the laboratory?” • The development of the process flow sheet for the commercial plant is much more than just copying what is being done in the bench-scale process. It requires an understanding of the chemistry in the bench scale and innovation in developing a reliable process flow diagram for the commercial plant. 4 Thermal Characteristics for Reactor Scale-up Abstract The consideration of thermal characteristics in reactor scale-up involves the concept of utilizing data from a bench scale or a pilot plant reactor to design a full size commercial reactor that has the capability to remove/add heat to maintain the temperature control. Maintaining control covers both static and dynamic conditions wherein an upset creates unexpected conditions. Although the book covers scale-up, the thermal characteristics scale-up will always involve an exothermic or an endothermic reaction. 
Keywords A/V ratio avoiding temperature "runaways" base loading commercial reactor startups heat of reaction The consideration of thermal characteristics in reactor scale-up involves the concept of utilizing data from a bench scale or a pilot plant reactor to design a full size commercial reactor that has the capability to remove/add heat to maintain the temperature control. Maintaining control covers both static and dynamic conditions wherein an upset creates unexpected conditions. Although the book covers scale-up, the thermal characteristics scale-up will almost always involve an exothermic or an endothermic reaction even if the bench scale chemist has not observed any heat of reaction. When discussing the thermal characteristics of a specific reaction, it should be recognized that essentially all reactions have a heat of reaction. In addition, 85%–90% of the reactions are exothermic, that is, heat must be removed to maintain a constant temperature. The thrust of this chapter is primarily directed at exothermic reactions because they make up the majority of the group. When scaling up from a laboratory bench reactor or a pilot plant reactor to a continuous commercial reactor, the thermal characteristics must be considered. The consideration of thermal characteristics includes items such as: • Determining the heat of reaction associated with the reaction. • Determining the impact of the decreased area-to-volume (A/V) ratio associated with the larger reactor. • Determining the potential for the loss of temperature control in the larger reactor. • Analyzing the reactor startup to ensure that there are no negative impacts on temperature versus time profile during startups. • Analyzing the temperature distribution in the reactor with respect to time and location. The heat of reaction can be determined by both laboratory techniques and literature references. 
One of the keys to avoiding scale-up mistakes associated with the heat of reaction is to make sure that one determines the heat of reaction. Although this statement may sound obvious, laboratory chemists often downplay this variable because they did not notice any heat of reaction in the bench-scale reactor. Essentially all reactions have a heat of reaction. In addition, as mentioned earlier, most of these reactions are exothermic reactions (they evolve heat). In the laboratory, the heat of reaction for either an exothermic or an endothermic reaction is often not observed, because the reactor has a large A/V ratio, is often not well insulated, and is provided with a system to quickly remove or add heat. These factors may cause the laboratory chemist to indicate that the heat of reaction is essentially zero. A heat of reaction that approaches zero will be very unusual, and in addition, the factors that cause the heat of reaction to go unobserved in the small equipment may also cause the heat of reaction for an exothermic reaction to be underestimated. Because of these factors, additional work will be required to ensure that an adequate heat of reaction is determined.

The heat of reaction can be measured experimentally using two different techniques. Both techniques require very accurate temperature measuring devices and maintaining a reaction temperature that does not vary more than 1 or 2°F. In one technique, a dynamic approach is used. In this approach, a very well-insulated adiabatic (no heat added or removed) calorimeter is used as a batch reactor. The heat of reaction is determined by operating at a relatively low conversion and measuring the amount of temperature change. As the calorimeter is being operated in an adiabatic mode, any temperature change is associated with the heat of reaction.
Although it is beyond the scope of this book to provide details on such a calorimeter, the procedures must include: • Techniques to determine what is the “base loading” of the calorimeter must be developed. The base loading is the amount of heat generated that goes into heating the vessel itself. This is the heat that is generated by the reaction, but rather than heating the process fluid, it heats the vessel. For an endothermic reaction, the base loading will still be important and must be determined. • Determining the heat of reaction experimentally in this adiabatic mode requires highly accurate reactor temperature measurements. The heat of reaction is determined by how much the temperature of the reactor increases or decreases at a very low conversion rate. The low conversion rate allows both the assumption of a constant specific heat and a constant reactor temperature to be valid. • The amount of the reactants converted must be determined accurately. • A second experimental technique for determining the heat of reaction is to again use a well-insulated continuous reactor in a static mode. This reactor is cooled/heated by a heat transfer media. Highly accurate temperature instruments and an accurate flow meter are used to measure the flow rate and inlet and outlet temperatures of the heat exchange media. This along with determination of the reactor conversion allows determination of the heat of reaction. As the reactor is maintained at a constant temperature by the cooling or heating media, the dynamic change in vessel temperature is not a factor. Another technique for determining the heat of reaction is to use literature data that give the heat of formation of the components involved in the reaction. Classical thermodynamics indicates that the heat of reaction is the heat of formation of the products minus the heat of formation of the reactants. 
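The literature route can be sketched in a few lines. The example below applies "products minus reactants" to the methane combustion case worked in this chapter. The function name and data layout are hypothetical; the heats of formation are the chapter's 64°F values, entered with a negative sign on the assumption that the usual convention applies (heat released on formation is negative), and the molecular weight of methane is rounded to 16 as in the text.

```python
# Heats of formation in BTU/lb-mol at 64 °F (values from the methane example;
# the negative sign convention is an assumption stated in the lead-in).
HEAT_OF_FORMATION = {
    "CH4": -32_202.0,
    "O2": 0.0,  # element in its standard state, zero by definition
    "CO2": -169_290.0,
    "H2O": -104_132.0,
}


def heat_of_reaction(reactants: dict, products: dict, hf: dict = HEAT_OF_FORMATION) -> float:
    """Heat of reaction = sum(n * Hf) of products minus sum(n * Hf) of reactants.

    reactants / products map species name -> stoichiometric coefficient.
    A negative result means the reaction is exothermic.
    """
    def side_total(side: dict) -> float:
        return sum(coeff * hf[species] for species, coeff in side.items())

    return side_total(products) - side_total(reactants)


if __name__ == "__main__":
    # CH4 + 2 O2 -> CO2 + 2 H2O
    dh = heat_of_reaction({"CH4": 1, "O2": 2}, {"CO2": 1, "H2O": 2})
    print(f"Heat of reaction: {dh:,.0f} BTU/lb-mol of methane")
    print(f"Per pound of methane (MW = 16): {dh / 16:,.1f} BTU/lb")
```

The same function can be reused for any reaction once the heats of formation are adjusted to the actual reaction temperature.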
For example, consider the combustion of methane:

$$\mathrm{CH_4 + 2\,O_2 \rightarrow CO_2 + 2\,H_2O} \tag{4.1}$$

The heats of formation of the compounds and elements at 64°F are as follows:

CH4 = −32,202 BTU/lb-mol
O2 = 0 BTU/lb-mol by definition
CO2 = −169,290 BTU/lb-mol
H2O = −104,132 BTU/lb-mol

Thus, the heat of reaction at 64°F can be calculated as follows:

$$\Delta H_R = \left[(-169{,}290) + 2(-104{,}132)\right] - \left[(-32{,}202) + 2(0)\right] = -345{,}352\ \text{BTU/lb-mol} \tag{4.2}$$

where ∆HR is the heat of reaction/combustion in BTU/lb-mol for methane at 64°F. This can also be expressed in BTU/lb of methane by dividing the result of Eq. (4.2) by 16, which gives −21,584 BTU/lb methane.

The heats of formation for common chemicals are readily available in textbooks. In addition, the heats of formation can be estimated by several different techniques using group contribution methods. The calculation of the heat of reaction from the heats of formation also requires a modification to allow for the fact that very few reactions are conducted at 64°F. This modification can best be made by adjusting the heats of formation to the reaction temperature by using the specific heat of each reactant or product and the difference in temperature between 64°F and the reaction temperature. If the new temperature is not too far from 64°F, the adjustment is usually small and may not be required for preliminary studies.

Once the heat of reaction is known, the heat generated can be calculated knowing the amount of reactants converted. The basic concept of heat removal expressed in Eq. (4.3) shown below can then be used to evaluate the needs.

$$Q = U\,A\,\Delta T_{\ln} \tag{4.3}$$

where Q is the rate of heat removed or added; U is the heat transfer coefficient, which will be a function of the type of heat removal device that is utilized and will likely be radically different from the coefficient experienced in the laboratory; A is the heat transfer area; and ΔT_ln (Ln ∆T) is the log mean temperature difference between the cooling/heating media and the reactor. Although the same relationship as shown in Eq.
(4.3) applies to both the laboratory and the commercial plants, the scale-up from a small reactor to a larger reactor will reduce the A/V ratio unless changes are made to the heat exchanger design. A is the heat transfer area as shown in Eq. (4.3) and V is the reactor volume. The impact of A/V ratio was discussed in a preliminary fashion in Chapter 1. As a reactor is scaled up with a constant H/D ratio and with no additional heat exchange facilities such as internal coils or an external pump-around exchanger being added, the ratio of heat exchange A/V will always decrease. Unless the temperature of the heat exchange fluid is changed, it is likely that the reactor temperature cannot be controlled whether the reaction is exothermic or endothermic.

An example of this might be a scale-up of an exothermic batch CSTR that is scaled up by a factor of 100, that is, the commercial production rate is 100 times the rate in the pilot plant or bench-scale reactor. It is desirable to keep the residence time and reactor H/D at the same value as that in the smaller reactor. For this example, only thermal characteristics are being considered. As the reactor volume (residence time) is proportional to the vessel diameter cubed (D³) and the surface area for heat transfer is proportional to the vessel diameter squared (D²), it is shown below that the A/V ratio is reduced by a factor of 4.64 when the reactor is scaled up by a factor of 100.

$$\frac{A}{V} \propto \frac{D^2}{D^3} = \frac{1}{D} \tag{4.4}$$

Thus, we can conclude that the A/V ratio is proportional to the reciprocal of the diameter. Now when considering the scale-up from bench-scale reactor to commercial reactor with a constant H/D ratio, the following derivation can be developed:

$$\frac{C}{B} = \frac{1/D_C}{1/D_B} = \frac{D_B}{D_C} \tag{4.5}$$

As the scale-up ratio is proportional to the volume ratio:

$$\frac{V_C}{V_B} = \left(\frac{D_C}{D_B}\right)^3 = 100 \tag{4.6}$$

$$\frac{C}{B} = \frac{D_B}{D_C} = \left(\frac{1}{100}\right)^{1/3} = \frac{1}{4.64} \approx 0.215 \tag{4.7}$$

where C is the A/V ratio of the commercial reactor, B is the A/V ratio of the bench-scale reactor, DC is the diameter of the commercial reactor, DB is the diameter of the bench-scale reactor, and VC and VB are the corresponding reactor volumes.
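The cube-root relationship in Eqs. (4.4)–(4.7) is easy to tabulate for other scale-up factors. The following is a minimal sketch with hypothetical function names, assuming geometric similarity (constant H/D) and heat transfer through the vessel wall only, with no coils or external exchangers added.

```python
def diameter_factor(scale_up: float) -> float:
    """Commercial diameter / bench diameter when volume grows by `scale_up` at constant H/D."""
    return scale_up ** (1.0 / 3.0)


def area_to_volume_factor(scale_up: float) -> float:
    """Commercial A/V divided by bench A/V.

    Since A ~ D^2 and V ~ D^3, A/V ~ 1/D, so the factor is scale_up^(-1/3).
    """
    return 1.0 / diameter_factor(scale_up)


if __name__ == "__main__":
    for scale in (10, 100, 1000):
        print(
            f"scale-up x{scale:>4}: diameter x{diameter_factor(scale):.2f}, "
            f"A/V falls to {area_to_volume_factor(scale):.1%} of the bench value"
        )
```

For a factor of 100 the diameter grows by about 4.6 and the A/V ratio falls to roughly one-fifth of the bench value, which is the shortfall that extra heat transfer area or a colder coolant has to make up.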
Thus in the commercial plant, the A/V ratio will be only about 20% of that in the bench-scale reactor. Thus unless heat removal/addition requirements are very low, it will be necessary to enhance the heat removal capabilities by design changes such as modifying the temperature of the coolant or heating medium, or providing internal coils or an external pump-around circuit to increase the surface area for heat transfer.

In addition, the potential for a loss of temperature control (temperature runaway) in an exothermic reaction is much higher in a commercial reactor than in a laboratory or pilot plant reactor. A temperature runaway occurs when a slight increase in reactor temperature triggers an increase in reaction rate, which, unless sufficient excess capacity is provided in the cooling system, causes a further increase in temperature. In the design of the reactor cooling system, this must be considered. In a smaller unit (bench scale or pilot plant), the temperature runaways are generally not a problem because of the inherent excess capacity of the cooling system and heat transfer area.

As an example of this, consider the case of an exothermic non-reversible reaction. The steps in this consideration are shown below:

For a temperature runaway to occur, the rate of heat generation with respect to reactor temperature must be greater than the rate of heat removal with respect to reactor temperature. This can be expressed mathematically as given in Eq. (4.8).

$$\frac{dQ_g}{dT} > \frac{dQ_r}{dT} \tag{4.8}$$

where dQg/dT is the rate that heat generated increases with temperature and dQr/dT is the rate that heat removal increases with temperature. The rate that heat is generated in BTU/hr can be expressed as shown in Eq. (4.9). This is a typical Arrhenius equation.

$$Q_g = K\,e^{-B/RT} \tag{4.9}$$

In Eq.
(4.9), K is a constant that depends on reactor conditions, reactant concentrations, reactor volume, and heat of reaction; B is a constant that describes how the reaction rate changes with temperature; R is the universal gas constant, BTU/lb-mol-°R; and T is the absolute temperature, °Rankine. The “B” term can be developed with experimental data. However, at the early stages of process development, this experimental work may not have been conducted yet. If this is the case, a reasonable “rule of thumb” is that the reaction rate doubles for every 20°F increase in temperature. This would be equivalent to a “B” value of approximately 23,000 BTU/lb-mol. Eq. (4.9) can be differentiated with respect to the absolute temperature, T, to give the rate that heat generated increases with respect to temperature as shown in Eq. (4.10).

$$\frac{dQ_g}{dT} = \frac{K\,B}{R\,T^2}\,e^{-B/RT} \tag{4.10}$$

The rate that heat is removed from the reactor can be represented by a typical heat removal equation, which is shown in Eq. (4.11).

$$Q_r = U\,A\,\Delta T_{\ln} \tag{4.11}$$

where U is the exchanger heat transfer coefficient, BTU/ft²-°F-hr; A is the exchanger surface area, ft²; and ΔT_ln (Ln ∆T) is the log mean temperature difference between the reactant side and the cooling water side, °F. Assuming that as temperature runaway conditions are approached, the cooling water flow is at a maximum, the average water temperature will not decrease. Therefore as a first approximation:

$$\Delta T_{\ln} \approx T - T_w \tag{4.12}$$

$$Q_r = U\,A\,(T - T_w) \tag{4.13}$$

where (T – Tw) is the difference between the average reactor temperature and the average cooling water temperature. In the initial few minutes of a temperature runaway, the average cooling water temperature will remain constant. Thus the differentiation of Eq. (4.13) with respect to reactor temperature, T, gives Eq. (4.14).

$$\frac{dQ_r}{dT} = U\,A \tag{4.14}$$

By substituting the values from Eqs. (4.10) and (4.14) into Eq. (4.8), Eq. (4.15) can be obtained:

$$\frac{K\,B}{R\,T^2}\,e^{-B/RT} > U\,A \tag{4.15}$$

An examination of Eq.
(4.15) indicates that if the term on the left-hand side of the equation (the rate that heat generated increases with temperature) is higher than the term on the right-hand side (the rate that heat removal increases with temperature), and the cooling system has only a limited amount of additional capacity, it is likely that a temperature runaway will occur. These potential temperature runaways can be eliminated or at least mitigated by providing excess heat exchange area in the scaled-up design.

Although this example is for an exothermic reaction, similar derivations for an endothermic reaction would indicate that under conditions wherein the heating capacity was limited and a small increase in reaction rate occurred, the reaction temperature would decrease, followed by a decrease in the reaction rate. The condition of the temperature and reaction rate decreasing would continue. However, in the case of an endothermic reaction, there will eventually be a point wherein the reactor temperature and subsequent reaction rate have decreased so far that an equilibrium will be established, that is, the available heat input capability will equal the heat required to sustain the reaction. Thus the uncontrollable temperature in an endothermic reaction will be self-correcting.

Another potential problem with the thermal scale-up of a reactor is associated with providing facilities to bring the reactor up to reaction temperature quickly. The incentives for getting the reactor to reaction temperature quickly in the commercial world are:
• The only productive time is that in which the reactor is producing product. The time to heat up or cool down the reactor is basically wasted time. For the most part, this incentive does not exist in a pilot plant or bench-scale facility. The smaller equipment can be brought to the desired temperature fast because of the inherent excess heat transfer capability.
Even if this inherent excess capacity does not exist, the debit for loss of productive time is less with the smaller equipment. • There are occasions where the actual temperature profile of the heat-up or cooldown period can be important. One example of this is a reaction where all of the reactants are added prior to heating the reactor to operating temperature. This heat-up period in the small equipment will be very short compared to a comparable time in the larger equipment. If the product morphology is impacted by reactor temperature, the slower heat-up may well cause a difference in morphology. An actual example of this is provided later. Another possibility wherein a slower heat-up may impact the product or product yield is one wherein secondary reactions are important at temperatures intermediate to ambient and reaction temperature. The obvious solution to these concerns is to provide more heat exchange capacity. This can be done by either increased heat transfer area or in the case of heating/cooling a reactor, changes in heating or cooling media temperature. For example, to quickly heat up a reactor that is normally heated with hot water, a steam heater can be provided to increase the temperature of the media and improve the heat transfer coefficient. Temperature distribution can sometimes be a problem in a reactor. This can be considered a scale-up problem, because it often does not occur at small scale, but does occur at the larger scale. Mal temperature distribution covers areas such as: • Poor temperature distribution with time. When considering an exothermic reactor, a short-term temperature spike may occur immediately after the catalyst is injected. This temperature spike may or may not be observed depending on the location and speed of response of the temperature measuring devices. However, it may be important when considering overheating of individual particles. • Poor temperature distribution with position. 
This is often referred to as “reactor hot spots.” The poor temperature distribution can be caused by poor agitation, poor flow distribution of either the reacting mass or the cooling media, fouled heat transfer surface, or distance from the heat transfer surface to name a few possibilities. Again, these “hot spots” may or may not be observed primarily dependent on the location of the temperature measuring devices. In either of these causes for poor temperature distributions, there can be variations in the magnitude of the distribution from day to day. For example, one lot of supported solid catalysts (active ingredient supported on an inactive solid) may have a slightly higher concentration of the active ingredient than another lot. The catalyst with the higher concentration of the active material may result in a higher catalyst activity. But more importantly, it may cause the temperature versus time distribution to peak at a higher temperature level. This higher peak temperature can lead to reactor fouling or poor product performance. This chapter illustrates the complexity of scaling up thermal aspects of a reactor from bench scale or pilot plant to full commercial operation. While most of these aspects are classical chemical engineering, to avoid scale-up problems careful consideration must be given to each one of them. This is an area that not only can cause process design errors in scale-up, but also can cause disastrous events. One of the case studies described later is an example of a potentially disastrous event. The impact of the event was mitigated by the size of the equipment involved. 5 Safety Considerations Abstract The purpose of this chapter is NOT to discuss all the safety implications associated with developing a new process. The assumption is that the basic safety considerations are covered as part of the bench scale development and subsequent engineering design. 
In addition, this chapter does not cover all the aspects of the components necessary to create a fire or explosion, but it is helpful to remember that for a fire/explosion event to occur, three things must be present.

Keywords Arrhenius factor chemicals concentration engineering hazard need for early considerations of chemicals and by-products

The purpose of this chapter is NOT to discuss all the safety implications associated with developing a new process. The assumption is that the basic safety considerations are covered as part of the bench scale development and subsequent engineering design. In addition, this chapter does not cover all the aspects of the components necessary to create a fire or explosion, but it is helpful to remember that for a fire/explosion event to occur, three things must be present:
• An explosive mixture must be present. The concentration of the combustible must be greater than the lower explosive limit (LEL expressed as volume % of the combustible in air) and less than the upper explosive limit (UEL expressed as volume % of the combustible in air).
• Oxygen must be present. This is normally associated with the presence of air. Substitution of pure oxygen for air is sometimes done as the process is scaled up. This increases the safety risk, because as the concentration of oxygen in the gas phase is increased, the explosive range widens.
• There must be an ignition source. Typical sources are electrical motors, static electricity discharges, and open flames.

The primary purpose of this chapter is to discuss what considerations should be given to safety when a process is scaled up from either bench scale or pilot plant scale to commercial operation. It is assumed that the safety of the process has been well demonstrated in the smaller equipment.
It might seem that for a safe and well-documented operation of a bench scale or pilot plant facility, no additional consideration is required except for a layers of protection analysis and/or hazard and operability study following completion of the process design and/or detailed engineering design. Although these studies are very valuable, it should also be recognized that as the size of a facility is increased, the degree of hazard in the operations also increases. This increase in the hazard level is associated with the larger equipment handling the larger volumes of fluid required, the level of complexity of the larger facilities, the use of new chemicals, and the large-scale operating procedures as opposed to the procedures for handling small quantities. These possibilities should be considered during the scale-up. When considering the change in size from bench scale to pilot plant scale or from pilot plant scale to commercial scale, some of the areas that must be considered are as follows.

Use of New Chemicals

Safety considerations associated with new chemicals can be of two different kinds. In the first, a chemical or chemicals being used in a new process may have been used in the bench scale and pilot plant with no safety incident, but the operating plant may not have any experience with these chemicals. Because the chemicals are new to the operating plant, they should receive careful consideration prior to utilization. Handling techniques, storage considerations, and unloading techniques are different between bench scale and commercial operation. Although it is likely that the primary chemicals will not change as the process is being developed, one must ask whether there are any new chemicals that are introduced into the process because of the difference between using reagent grade and commercial grade of one of the primary chemicals.
For example, could the commercial grade of a solvent contain benzene which could build up in a recycle stream to a concentration that would cause the accepted toxic limit of benzene to be exceeded? In addition to this example, there is always the possibility that the commercial availability of the primary chemical/chemicals was not thoroughly researched during the process development phase and that it becomes necessary to make a complete change in the primary chemical/chemicals. This is often done in a rush with the primary emphasis on the process. It is imperative that the focus on safety not be lost during this frantic period. Another possibility is the use of a new catalyst in place of an existing one. The impact on this substitution on shared facilities is described later. In addition, the different Arrhenius constant may impact the cooling system if the reaction is exothermic. A second way that chemicals associated with a new process or modification to an existing process might create safety problems is associated with chemicals that have been used safely in the operating plant for many years. Because of this longevity of use, they are considered to be safe and, during reviews, references are made to this long-term safety experience. However, the new process or modification may utilize these chemicals in a new manner, which leads to an unsafe situation. Sulfuric acid is used in a refining process, referred to as Alkylation. In this process, the acid is used at a concentration greater than 65%. The acid is not highly corrosive at this concentration. However, if the same chemical is used at a lower concentration, say 50%, it becomes very corrosive. 
Change in Delivery Mode for Chemicals

When a process that is under development moves from the laboratory to larger production facilities (pilot plant or commercial plant), the mode of delivering the chemical to the operating plant and/or delivering the chemical to the primary vessel (reactor, extractor, dryer, and others) will likely change. Although this might seem obvious, the recognition and mitigation of any safety risks associated with this change are not always clear and require careful study. Some safety-related considerations are discussed below:
• Solid materials: a solid material is generally much easier to handle at the bench scale level than in commercial size equipment. When considering the safety of handling large volumes of bulk solids, several things such as dust explosions, static electricity created by loading into large volume containers, and residual volatile content of the solids must be investigated. A solid-related explosion must be considered, because most dusts are explosive. Solid materials as diverse as polyolefins and wheat, when existing in a dust-like atmosphere, can form explosive mixtures. These dust explosions ignited by sparks from electric motors or by static electricity are not well known in the process industry. In addition, they are essentially unheard of if the solids are being used at the bench scale level. However, if the material in large quantities is being transferred by a conveyor belt, there are multiple sources of ignition if the solid material does form a combustible dust. Thus in this case, what seemed like movement of harmless solid material can become a hazard as the mode and quantity of solid movement changes. In this example, the upgrading of electrical facilities to avoid sparking motors would be a partial but helpful solution. Another source of ignition of flammable dusts is static electricity.
The random movement of solids going into large bulk shipping containers can create static electricity, which manifests itself as a spark, which can ignite both residual volatiles and flammable dusts if the material is inside the explosive range. The most likely source of the flammable material inside a large volume bulk container is the presence of residual volatiles. These volatiles are often present because of the lack of understanding of the equilibrium between the volatiles in the final stage of solids processing and the concentration of volatiles in the vapor phase of the final stage. It is often assumed erroneously that if the temperature of the solid in the final drying step is above the boiling point of the volatile then the solid will contain none of the volatiles. When solids containing excessive levels of volatiles are loaded into the shipping container, the volatiles evolve from the solid and flow to the vapor phase. Depending on the amount of volatiles and the amount of air in the container, an explosive mixture may be formed. If this happens, it may be ignited by static electricity generated by the movement of the solids in the container. • Liquid materials Shipping liquid materials in tank cars or tank trucks and storing them in large vessels can also create a safety hazard similar to the ignition of combustible dust clouds by static electricity. If one considers filling an unpurged storage vessel, tank car, or tank truck with gasoline, there will be a point in the filling of the vessel wherein the mixture of gasoline vapors and air will be in the explosive range. This is true even though the vapor immediately above the liquid is “too rich” to ignite. If the filling rate is too high, a static spark can be generated and ignite the explosive mixture. 
Another case of the size of the facility possibly dictating what appears to be an insignificant change in the mode of delivery might be associated with a pyrophoric alkyl material (one for which a spill will catch fire without the presence of an ignition source). A material such as triethylaluminum is pyrophoric at a concentration in a solvent over 12–15 wt%. Below this level, it is not pyrophoric. Superficially, it would appear that using the material at a lower concentration might enhance safety. In the bench scale, the material is often used at a concentration of 100% because of the small quantity used and the small size of the equipment. However, in a commercial plant, the diluted form is often used to enhance safety even though the transportation cost and material cost are increased. This “safety improvement” may allow the operators and mechanics to avoid the bulky protective clothing that would be used if the alkyl was not diluted. The assumption that the diluted alkyl is not pyrophoric is not always valid. For example, there have been occasions wherein even at concentrations below the pyrophoric limit, the material has ignited spontaneously. This spontaneous ignition of diluted material might happen if a leak occurs and the dilution solvent vaporizes quickly under the conditions of an unusually hot day with strong winds. Thus, under somewhat convoluted circumstances, what was intended as a change to enhance safety can turn out to be a safety trap, because the operations and mechanical personnel will likely not be wearing protective clothing.

When considering bulk chemicals, the mode of delivery to an operating plant is often by rail car or tank truck rather than small containers used in a bench scale or pilot plant development. This brings up the issues associated with transferring the material from the tank car or tank truck to the storage vessel. Pumps, pressure transfer with nitrogen, or pressure transfer with process gas all have safety issues associated with them.
These are all workable issues unless they are ignored or not considered. Impact of a New Development on Shared Facilities Whether the new development is a new plant or a different chemical being used in an existing plant, there will likely be some impact on the shared facilities. Shared facilities consist of utility systems, effluent treatment systems, and safety release systems. An example of this is the change in catalyst in a process with a single component boiling exothermic reaction. The new catalyst is known to be both more active and have a larger Arrhenius factor. Prior to the initial test run, it was decided to check the adequacy of the safety release system with the new catalyst for contingencies of a power failure and a loss of temperature control. Because the reactor contains a single component and two phases, the degrees of freedom will be limited to one. Thus if the pressure is fixed, the temperature will also be fixed. The design of the safety release system called for the reactor pressure to increase until the set point of the safety release valve was reached. Because the degree of freedom is 1, the temperature is known. The Arrhenius factor can then be used to determine the reaction rate, heat of reaction release, and vaporization rate of the boiling component. This boiling rate will be the rate that must be released through the safety valve. The larger Arrhenius factor will cause the reaction rate to increase faster, the vaporization rate to be higher, and in the case of a release to the flare, this rate will be higher than with the old catalyst. This will require checking out the entire release system for temperature runaway and utility loss contingencies. The system requires checking to confirm that the blowdown drum and seal leg are large enough and that the ground level flare radiation is within acceptable limits. The ground level flare radiation is the radiant heat from the flare when the system release rate is at a maximum. 
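The relief-rate reasoning for the boiling reactor can be illustrated numerically. The sketch below is not the procedure used in the case described; it is a simplified illustration with hypothetical function names and hypothetical input values. It assumes the heat release follows the Arrhenius form of Eq. (4.9) with reactant concentrations unchanged, that all cooling is lost, and that all heat of reaction goes into vaporizing the single boiling component at the relief valve set pressure.

```python
import math

R_GAS = 1.987  # universal gas constant, BTU/(lb-mol·°R)


def rate_ratio(b: float, t_normal_r: float, t_relief_r: float) -> float:
    """Arrhenius ratio of reaction rate at relief temperature to rate at normal temperature.

    b is the temperature-sensitivity constant (BTU/lb-mol); temperatures are in °R.
    """
    return math.exp(-(b / R_GAS) * (1.0 / t_relief_r - 1.0 / t_normal_r))


def relief_vapor_rate(
    q_normal_btu_hr: float,
    b: float,
    t_normal_r: float,
    t_relief_r: float,
    latent_heat_btu_lb: float,
) -> float:
    """Vapor rate (lb/hr) to be released, assuming all heat of reaction boils liquid."""
    q_relief = q_normal_btu_hr * rate_ratio(b, t_normal_r, t_relief_r)
    return q_relief / latent_heat_btu_lb


if __name__ == "__main__":
    # Hypothetical inputs: 5 MM BTU/hr normal heat release, 570 °R normal
    # temperature, 610 °R boiling temperature at the relief set pressure,
    # and a latent heat of 150 BTU/lb.
    for label, b in (("old catalyst", 23_000.0), ("new catalyst", 30_000.0)):
        rate = relief_vapor_rate(5.0e6, b, 570.0, 610.0, 150.0)
        print(f"{label}: B = {b:,.0f} BTU/lb-mol -> relief load {rate:,.0f} lb/hr")
```

With these illustrative numbers, the catalyst with the larger temperature sensitivity gives a noticeably higher relief load at the same set pressure, which is why the blowdown drum, seal leg, and flare all need to be rechecked.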
The calculation of ground level radiation compared to the acceptable criteria to avoid personnel injuries is used to determine the flare location and flare height. Although it could be argued that this detail does not need to be done early in the project development, it should receive consideration as soon as the base data are available. An exceptionally high flare or one requiring a large horizontal separation from the process unit will have a large impact on the CAPEX as discussed later.

Reaction By-Products That Might be Produced

In the bench scale or pilot plant scale, disposal of by-products formed by the reactions is generally not a problem because of the small quantities involved. However, in a commercial plant, these materials must be disposed of in an environmentally acceptable and safe manner. This might involve both safety and environmental considerations in determining the ultimate storage or conversion to a nontoxic material, transportation, and personnel protection. EPA regulations and ethics indicate that the waste generators own the waste from “cradle to grave”. Thus at the planning stage of a new project, disposal of reaction by-products and/or waste must be considered. Depending on the specific process being developed, a solvent might be used to wash the solid product. This washing and settling step will generate a material that is not a reaction by-product but must be disposed of in the same manner as a by-product or waste material.

Storage and Shipping Considerations

As the process is scaled up from bench scale or pilot plant, the amount of product to be shipped, the amount of raw materials consumed, and the delivery frequency of other chemicals increase dramatically. The required facilities to handle these increases must now include items such as tank car loading/unloading, tank truck loading/unloading, bag/box loading, automated drum loading, container unloading, and/or pipeline facilities. Some of these have been discussed earlier.
In addition, storage facilities will be required for the outgoing product or incoming raw materials. The amount of hazardous material to be stored should be minimized from a safety consideration. However, the amount of storage for raw materials can be very large if there is a concern about “running out” of a critical material. The fertilizer plant in West, Texas stored large quantities of ammonium nitrate with no safety-related events until a fire occurred in the area and the subsequent explosion of ammonium nitrate severely damaged the plant and a large part of the town. During Hurricane Harvey, large amounts of peroxides were stored at a specialty chemical company. The material had to be kept at refrigerated temperatures to avoid decomposition with the likely occurrence of explosion and fire. When high water caused failure of the electrical system and the standby generators for the refrigeration system, the only recourse left was to evacuate plant personnel and those living close to the plant. As the amount of hydrocarbon storage increases, the probability of a highly damaging unconfined vapor cloud explosion also increases. In addition, as the size increases, laboratory curiosities such as dust explosions or static-induced explosions become more severe and have in some instances resulted in large-scale destruction, deaths, and injuries.

Step-Out Designs

Careful consideration must be given to “step-out designs”. These are the designs that appear to be very similar to an existing, well-proven process, but they have a “minor change” that allows the efficiency to be improved. Safety risks have a way of sneaking into this type of step-out design. For example, a well-proven process that uses air as the source of oxygen in a hydrocarbon oxidation process was converted to using pure oxygen.
Although the concentration of the hydrocarbon in the gas was believed to be well outside the explosive limits whether the gas was air or oxygen, no consideration was given to the fact that the explosive limits broaden as the percent oxygen in the gas increases. As a result of this oversight, the oxidation reaction was operating in the explosive range and an explosion eventually occurred. A similar event occurred on Apollo 1. On the basis of several successful flights of earlier capsules using an oxygen atmosphere to conserve weight, it was assumed that the safety had been demonstrated. However, during a test prior to the spacecraft launch date, an explosive mixture inside the capsule was ignited by a spark and all three astronauts were killed. The point of this discussion is that what appears to be a technology breakthrough (the use of pure oxygen and the assumption of no sparking device) needs a comprehensive safety review. So, when considering the safety aspects of scale-up, careful consideration must be given to questions such as:

• What new hazards will be encountered as rates and equipment sizes are increased?
• What trivial hazards known to exist in smaller equipment will now become significant hazards as the size of equipment is increased?

6 Recycle Considerations

Abstract

When considering a new process that is at the bench-scale level, the usual thinking is that a two-step scale-up procedure should be used. That is, (1) a scale-up from bench-scale to pilot plant, in which the pilot plant is almost always visualized as a prototype, that is, a complete replica, of the commercial plant, and (2) a scale-up from the pilot plant to the commercial plant. This second scale-up is often assumed to be a replica of the pilot plant but with much larger equipment. This chapter is included to illustrate that the pilot plant does not always have to be a prototype of the commercial plant.
Keywords: pilot plant; prototype; recycle; should recycle be included in pilot plant?; solid beds; solvent

When considering a new process that is at the bench-scale level, the usual thinking is that a two-step scale-up procedure should be used. That is,

• A scale-up from bench-scale to pilot plant. The pilot plant is almost always visualized as a prototype of the commercial plant, that is, a complete replica of the commercial plant.
• The second step is a scale-up from the pilot plant to the commercial plant. This scale-up is often assumed to be a replica of the pilot plant but with much larger equipment.

However, economics and practicality of the pilot plant design may dictate straying from the philosophy of having the equipment in the pilot plant be a prototype of the commercial plant. For example, the pilot plant reactor heat loading may allow a less expensive and easier-to-operate reactor design than a scaled-down version of the commercial plant. In addition, the enhanced A/V ratio in the smaller plant may require a different reactor design to have good temperature control. There may be other areas wherein the prototype philosophy can be replaced by calculations, supplier pilot plant data, or less expensive equipment. This chapter is included to emphasize and illustrate the concept that a pilot plant does not have to be designed to serve as a prototype. Because most process developments involve reactions, the discussions that follow assume that a new process being developed includes a reaction step. The concepts also appear to be applicable to a process wherein an adsorption step is a key component. When this question arises during the design of a pilot plant, the need for recycle equipment is often a key question.

Why Have a Recycle System in a Pilot Plant?
One of the hardest decisions to make when considering a pilot plant is the question whether the recycle of solvent and/or unreacted materials needs to be included. Similar considerations are encountered when it is decided to scale-up directly from bench-scale data without going through the pilot plant step. Some of the key questions that must be considered in making the decision to include recycle in a pilot plant design are: • Will the recycle facilities actually be operated or will they prove to be an operating burden and not be used? The recycle equipment will require additional maintenance and operational attention. Because recycle is a peripheral operation, the presence of these facilities detracts from the primary mission of the pilot plant which is generally associated with developing reaction-related data and possibly developing different products. • Are the chemicals (solvent and unreacted feeds) so valuable that there is a justifiable incentive to build and operate a recycle system? In making this determination, the life of the pilot plant must be considered. The pilot plants will normally have much shorter lives than the commercial plants, because of the changing technologies. If a recycle system is not included, the disposition of the solvent and unreacted materials must be considered. This disposal must be done in an economical and environmentally acceptable manner. This cost must be included in the operating cost of a facility that does not include a recycle system. • Is it necessary to operate a recycle system to simulate the possible buildup of reaction impurities or inerts in the process? These possible impurities must include both known and unknown impurities. Impurity Buildup Considerations Impurities or inerts that enter the process with the feeds (solvent or reactants) or are produced as by-products of the reaction may build up in the recycle system. These impurities/inerts may or may not be harmful to the reaction. 
However, regardless of their impact, they must be purged from the system. The impurities will consist of both known impurities that were analyzed during bench-scale studies or known from feed or solvent specifications and unknown impurities, that is, impurities that were not discovered analytically, but which cause reaction problems and/or build up in the recycle system. Both the known and unknown impurities may build up in the recycle system until their concentration in the liquid purge or vent streams is adequate to remove them. This is shown schematically in Fig. 6.1. As shown in this figure, these impurities will enter the reactor at a concentration that is based on the ratio of fresh feed to recycle feed and the concentration of the impurity in the recycle and fresh feeds. The concentration of the impurity in the recycle stream is a function of the severity of the separation of the impurity in the recycle facilities and the amount of purge. For simplicity, the recycle separation severity is defined as the concentration of the impurity in the recycle divided by the concentration of the impurity in the purge.

Figure 6.1 Build up for nonreactive impurity.

A quantitative analysis of the concentration of the impurity buildup in the reactor feed can be developed as shown in Table 6.1. The analysis is for a very specific example. However, the techniques can be used for any process that involves a recycle of solvent and/or reactants. In this example analysis, it is assumed that none of the impurity is destroyed in the reaction. If some of the impurity is destroyed, an additional factor should be included to represent this.

Table 6.1

Given: A reaction process that converts a single reactant into a solid product that can easily be separated from the reac

| Symbol | Definition | Value |
|---|---|---|
| FF | Rate of fresh feed | 1000 lbs/hr |
| C | Reactor conversion | 70% |
| XFF | Concentration of the impurity in the fresh feed | 0.5 wt% |
| S | Severity of separation of impurity in recycle system, XR/XP. As this ratio is equal to 1.0, it means t | 1.0 |
| PR | Product rate | 900 lbs/hr |

Other variables to be calculated:

| Symbol | Definition | Units |
|---|---|---|
| TF | Total reactor feed | lbs/hr |
| P | Purge rate | lbs/hr |
| XP | Concentration of impurity in purge | % |
| XR | Concentration of impurity in recycle | % |
| R | Recycle rate | lbs/hr |
| XTF | Concentration of impurity in total feed | % |

Objective of calculation: To determine the concentration of the impurity in the total feed (XTF).

Steps 1 to 8: Eqs. (6.1) to (6.8).

For the values given and using the steps above, the concentration of the impurity in the total reactor feed is estimated to be 1.5 wt%. This calculated value can then be used as the concentration in a laboratory batch reactor. The results from the batch reactor will have to be adjusted to estimate the impact of the impurity in the continuous commercial reactor. If the anticipated results are not acceptable, the purge rate and/or the “severity of separation” will have to be adjusted to reduce the concentration of the impurity in the reactor feed. The severity of separation will involve operations such as fractionation and/or adsorption on solid beds such as mol sieves, alumina, or charcoal. Fig. 6.2 shows the steady-state buildup concentration of an impurity that enters with the fresh feed as a function of recycle separation severity (S).

Figure 6.2 Impact of separation severity on impurity concentration in reactor feed.

As indicated earlier, in addition to the known impurities that are present in the recycle stream, there may be unknown impurities present in this stream that impact the reaction and/or the product. Fortunately, impurities that impact the reaction or the product are often the ones that are readily adsorbed on solid beds.
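The balance summarized in Table 6.1 can be sketched in a few lines. The individual steps (Eqs. 6.1 to 6.8) are not legible in this text, so the sequence below is an assumed reconstruction, not necessarily the author's: it takes conversion as product rate divided by total reactor feed, assumes the solid product carries no impurity, and assumes everything that is not product or recycle leaves in the purge. Under those assumptions it reproduces the 1.5 wt% quoted for the example. The function and key names are hypothetical.

```python
def impurity_in_total_feed(ff, conv, x_ff, severity, product_rate):
    """Steady-state impurity level in the total reactor feed.

    ff            fresh feed rate, lbs/hr
    conv          reactor conversion as a fraction (assumed: product / total feed)
    x_ff          impurity weight fraction in the fresh feed
    severity      S = XR / XP, recycle separation severity
    product_rate  solid product rate, lbs/hr (assumed impurity-free)
    """
    tf = product_rate / conv          # total reactor feed, lbs/hr
    r = tf - ff                       # recycle rate, lbs/hr
    p = ff - product_rate             # purge rate from the overall balance, lbs/hr
    x_p = ff * x_ff / p               # all impurity entering must leave in the purge
    x_r = severity * x_p              # recycle concentration from the severity definition
    x_tf = (ff * x_ff + r * x_r) / tf # blend of fresh feed and recycle
    return {"total_feed": tf, "recycle": r, "purge": p,
            "x_p": x_p, "x_r": x_r, "x_tf": x_tf}


result = impurity_in_total_feed(1000.0, 0.70, 0.005, 1.0, 900.0)
print(f"impurity in total feed: {100.0 * result['x_tf']:.2f} wt%")
```

Re-running the function with a lower severity (better rejection of the impurity from the recycle) or a different product rate (which changes the purge) shows how the reactor feed concentration responds, which is the trend that Fig. 6.2 is described as presenting.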
If unknown impurities are a concern, there are three approaches that might be used as follows: • Install a recycle facility in the pilot plant and modify it as necessary to obtain a satisfactory pure recycle stream. • Do not install a recycle system in the pilot plant. Determine the design of the plant recycle system based on batch studies and calculations. • Do not install a recycle system. Install adsorption beds in the commercial plant. The selection and design of these beds will likely be very speculative. If recycle facilities are not provided in the pilot plant, the buildup composition can be estimated using techniques shown in Fig. 6.1 and described in Table 6.1. It is likely that this kind of calculation will be necessary anyway to determine what kind of separation is required prior to design of the pilot plant. Once the composition of the feed to the reactor is estimated, it can be used in an impurity study to determine the specification of impurities in the reactor feed and from that the required level of purification of the recycle stream can be determined. This type of calculation can also be used to estimate what impurities might be present in the recycle solvent or recycle reactant streams. In an actual example, the chlorinated hydrocarbons present in the recycle system as a reaction byproduct were estimated based on vapor–liquid equilibrium (VLE) and a fractionation study. This study was performed by consideration of what byproducts might be produced in the reactor. Some of these by-products could be eliminated, because any reasonable severity purification system would remove them. The remaining by-products were studied in more detail and the ones likely to be present in the recycle systems were compiled. The reactor effluent was then analyzed for these materials. If they were not found, it was concluded that they were not formed. 
If they were found, their impact on the reaction was studied further and the total reactor feed (recycle and fresh) specifications were developed. This approach allowed the development of the required fractionation separation severity. The fractionation requirements (number of trays and reflux ratio) were determined from a computer program using the fractionation severity with carefully developed VLE factors. If it was determined that fractionation by itself would not provide an adequate separation severity, additional facilities such as mol sieves were studied. This specific scale-up is described in Chapter 14. Obviously, this analytic technique does not work for unknown impurities. To determine if there is an unknown impurity present, a “worst case scenario” can be simulated by obtaining a sample of the reactor at the termination of the reaction if the reaction is being conducted in a batch mode or a sample of the reactor outlet if the reaction is being conducted in a continuous mode. This sample with no treatment can then be used as the only feed to the reactor. By measuring the reaction kinetics and comparing them to that obtained with a pure reactant, the absence or presence of a harmful unknown impurity will be confirmed or not confirmed, that is, if the reactor kinetic constant using untreated recycle is equal to that with fresh feed only, it can be assumed that there is no harmful unknown impurity present. If it does appear that there is a harmful unknown impurity present, additional bench-scale impurity studies may be required to determine what separation severity will be required for a pilot plant or commercial plant design. One risk in using a pilot plant to determine the maximum level of an impurity is the need to obtain steady-state operation for an extended period of time. Commercial plants operate at steady-state operations for longer periods of time than the pilot plants. 
Pilot plants change conditions frequently to test new conditions or to make different products. When considering a single well-mixed vessel operating in a continuous mode, three displacements are required for the outlet concentration to reach 95% of the steady-state value. One displacement is the time given by the vessel volume divided by the volumetric outlet flow rate. When considering a pilot plant, there will likely be several vessels in series. This extends the time required to achieve steady-state operation. This concept is shown in Fig. 6.3 for the hypothetical case of three vessels in series, each with an average residence time of 3 h. Although this does not adequately predict the impact that occurs in a pilot plant with vessels with varying residence times, it is shown to illustrate the need for significant periods of operation to get to a steady-state operation, which would enable one to conclude that the pilot plant recycle design would be adequate for a commercial plant. The need for achieving steady-state operations to obtain all data from the pilot plant should be considered.

Figure 6.3 Percentage of final change versus time.

Buildup of impurities in the commercial recycle facilities due to unknown side reactions or contaminants in the feed may also cause safety-related problems (corrosion or internal explosions). This potential may not have been apparent in the bench or pilot plant, because there was no recycle system. An example of this might be a plant that utilizes a vacuum to remove either unreacted chemicals or solvent from a solid product and then processes this recovered material in a recycle system. Regardless of the design, construction, operations, and maintenance, some air will always leak into the process. This air will concentrate in a vent stream. The design and operations using an oxygen analyzer should ensure that this stream is not in the explosive range.
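Returning to the steady-state point made with Fig. 6.3, the three-displacement rule and the three-vessel case can be checked with a short calculation. This is a sketch under stated assumptions: ideal, equal-size, well-mixed vessels in series responding to a step change at the inlet, with no recycle loop closing around them. The function names are hypothetical.

```python
import math


def fraction_of_final_change(t, tau, n):
    """Fraction of a step change seen at the outlet of the last of n vessels.

    t is elapsed time and tau is the residence time of each vessel
    (same units). Assumes n identical, ideally mixed vessels in series.
    """
    x = t / tau
    partial_sum = sum(x ** k / math.factorial(k) for k in range(n))
    return 1.0 - math.exp(-x) * partial_sum


def time_to_fraction(target, tau, n, tol=1e-6):
    """Time needed for the last vessel outlet to reach the target fraction (bisection)."""
    lo, hi = 0.0, tau
    while fraction_of_final_change(hi, tau, n) < target:
        hi *= 2.0  # expand the bracket until it contains the answer
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if fraction_of_final_change(mid, tau, n) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# One vessel after three displacements, then three vessels of 3 h each.
print(f"single vessel at 3 displacements: {fraction_of_final_change(3.0, 1.0, 1):.3f}")
print(f"three 3 h vessels, time to 95%: {time_to_fraction(0.95, 3.0, 3):.1f} h")
```

For a single vessel, three displacements give about 95% of the final change, consistent with the rule quoted in the text. For three vessels of 3 h each, the same 95% takes roughly 19 h under these idealized assumptions, and a real recycle loop would be expected to respond more slowly still.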
The necessary study to ensure that any vent stream is not in the explosive range may not require a pilot plant demonstration. It may be a design study based on well-established criteria. For example, in considering the buildup of oxygen in a vent stream, there are well-defined criteria for the air leakage into a vacuum system. This air must be released in a vent system associated with the recycle system. Knowing the lower explosive limit of the hydrocarbon that would vent with the oxygen enables one to estimate the amount of hydrocarbon that must leave the vent to avoid forming an explosive mixture.

As indicated earlier, scale-up may also involve using a new chemical or catalyst in an existing facility. This rarely involves the need for building a pilot plant to study the recycle system. However, it will involve the need to do careful calculations and consideration of potential problems that might be associated with the recycle system or other parts of the process. Areas such as safety, by-product formation and removal, impurity buildup in the recycle system, as well as a host of other considerations need to be studied. In addition to the projects involving new technology, there are also projects involving converting an existing plant to produce a completely different product. Whether or not this change will require pilot plant work to prove the capability of the recycle system depends on the complexity of the change, whether or not an existing pilot plant is available, and the amount of risk a company is willing to take.

7 Supplier’s Equipment Scale-up

Abstract

The scale-up of mechanical equipment such as agitators, solid blenders, indirect dryers, and other pieces of equipment requiring specialized knowledge almost always requires assistance from the supplier’s engineering staff or others outside the operating or engineering company doing the scale-up.
However, it is very important for the designer to adequately communicate to the outside sources of knowledge what exactly the equipment is to perform. The purpose of this chapter is to provide examples of this type of equipment, point out why outside assistance may be necessary, and show how the engineer from the owner or engineering company can work with the supplier. Two specific examples have been chosen to illustrate these principles. The first example is an agitator/mixer scale-up. The other example is that of an indirect dryer.

Keywords: agitators; gas-to-solid ratio; indirect heated dryer; scale-up; specialty equipment; turbulent flow

The scale-up of mechanical equipment such as agitators, solid blenders, indirect dryers, and other pieces of equipment requiring specialized knowledge almost always requires assistance from the supplier’s engineering staff or others outside the operating or engineering company doing the scale-up. However, it is very important for the designer to adequately communicate to the outside sources of knowledge what exactly the equipment is to perform. The purpose of this chapter is to provide examples of this type of equipment, point out why outside assistance may be necessary, and show how the engineer from the owner or engineering company can work with the supplier. Two specific examples have been chosen to illustrate these principles. The first example is an agitator/mixer scale-up. The other example is that of an indirect dryer.

Agitator and Mixer Scale-up

The scale-up of agitators/mixers can be approached in either a qualitative or quantitative manner. The qualitative manner involves describing what kind of mixing is desired. This approach is often helpful in scaling up from a bench-scale batch process to either a commercial or a pilot plant facility. The quantitative approach involves calculations using both dimensionless numbers and factors that describe the mixing.
The quantitative approach to the scale-up of agitators/mixers can involve many different factors. Examples of these quantitative factors include power-to-volume ratio, tip speed (the linear speed of the tip of the agitator), generated centrifugal force (the force that pushes the heavy solids or more dense phase to the wall of the vessel), Reynolds number, and Froude number. Scale-ups using different factors rarely give the same answer. For example, scaling up using a constant tip speed will cause the larger vessel to have a lower power-to-volume ratio. This assumes dimensional similarity between the smaller and larger reactors and similar mixer styles. In many scale-ups, the first choice is to maintain dimensional similarity. In this approach, the pilot plant agitator is considered a prototype and similar dimensional ratios are maintained in the commercial plant. However, this will result in differences in tip speed and power input as shown in Table 7.1 for a production scale-up equal to 100. The results in this table are based on maintaining constant physical properties, identical impeller dimensional ratios, a constant agitator diameter-to-tank diameter ratio, a constant height-to-diameter ratio, and operation in the turbulent regime in both the pilot plant and commercial plant.

Table 7.1 Volumetric Scale-up Ratio Equals 100

| Variable | Pilot Plant | Commercial Plant |
|---|---|---|
| Vessel diameter, feet | 3 | 14 |
| Agitator diameter, feet | 2 | 9 |
| Agitator speed, rpm | 60 | TBD |
| Agitator tip speed, fps | 6.3 | TBD |
| Scale-up criteria (a) | | |
| Constant tip speed, fps | 6.3 | 6.3 |
| Agitator speed, rpm | 60 | 13 |
| Power/volume | Base | 0.2 × Base |
| Centrifugal force factor | Base | 0.2 × Base |
| Constant power/volume | Base | Base |
| Agitator speed, rpm | 60 | 21 |
| Tip speed, fps | 6.3 | 10 |
| Centrifugal force factor | Base | 0.55 × Base |

(a) The assumption is that the fluid properties, agitator impeller type, and agitator diameter/tank diameter are the same.
The purpose of Table 7.1 is to illustrate the variations that can occur when two different scale-up criteria are used. As shown in this table, the selection of scale-up criteria strongly influences the mixer design to be used in the commercial vessel. Both power input per volume and agitator tip speed have been used successfully as reasonable scale-up criteria. However, as can be seen, they give radically different results. If the tip speed is held constant, the power input per vessel volume in the commercial plant will be only 20% of that in the pilot plant. This reduction in power input raises some questions about the adequacy of mixing or, in the case of solid–liquid mixing, the adequate dispersion and suspension of the solids. On the other hand, if the power input per vessel volume is maintained constant, the tip speed in the commercial plant will be 60% greater than that in the pilot plant and the agitator rotational speed will be about 35% of that in the pilot plant. The higher tip speed has some potential impact on solids that are being mixed. Some solids are so brittle that a high tip speed can destroy their integrity. In addition, Table 7.1 shows a term that is referred to as “centrifugal force factor”. This is simply the term “rω²” where “r” is the radius of the agitator and ω is the rotational speed in revolutions/second. An examination of this term indicates that in both the examples given, there will be significantly more centrifugal force generated in the pilot plant than in the commercial plant. In a well-baffled reactor, this centrifugal force is generally ignored. However, in a solid–liquid mixing system without baffles, this can be a significant factor. If the pilot plant is operated to maintain a power-to-volume ratio comparable to the commercial plant, the increased centrifugal force may cause solids to accumulate on the walls of the vessel and create agglomerates, which plug the outlet of the reactor.
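The comparison in Table 7.1 can be approximated with a short sketch. It relies on the conditions the text already states (geometric similarity, constant fluid properties, turbulent regime) and additionally assumes the usual turbulent-regime proportionality of power to N³D⁵ with vessel volume proportional to D³, so that power per volume scales as N²·N·D² = N³D². That proportionality and the function names are assumptions of this sketch, not taken from the text.

```python
import math


def tip_speed(d_ft, rpm):
    """Agitator tip speed in ft/s for diameter d_ft (ft) and speed rpm."""
    return math.pi * d_ft * rpm / 60.0


def scale_up(d1, n1, d2, criterion):
    """Commercial agitator speed and ratios for a chosen scale-up criterion.

    d1, n1: pilot agitator diameter (ft) and speed (rpm); d2: commercial diameter (ft).
    criterion: "tip_speed" or "power_per_volume".
    Assumes turbulent flow and geometric similarity (P/V proportional to N^3 D^2).
    """
    if criterion == "tip_speed":
        n2 = n1 * d1 / d2                      # N*D held constant
    elif criterion == "power_per_volume":
        n2 = n1 * (d1 / d2) ** (2.0 / 3.0)     # N^3 D^2 held constant
    else:
        raise ValueError("unknown criterion: " + criterion)
    ratio_n = n2 / n1
    ratio_d = d2 / d1
    return {
        "rpm": n2,
        "tip_speed_fps": tip_speed(d2, n2),
        "power_per_volume_ratio": ratio_n ** 3 * ratio_d ** 2,
        "centrifugal_ratio": ratio_d * ratio_n ** 2,  # ratio of r * omega^2
    }


for name in ("tip_speed", "power_per_volume"):
    print(name, scale_up(2.0, 60.0, 9.0, name))
```

With the tabulated 2 ft and 9 ft agitator diameters, the constant tip speed case gives about 13 rpm and ratios near 0.2, in line with the table. The constant power per volume case gives about 22 rpm and a centrifugal factor near 0.6, slightly above the 21 rpm and 0.55 listed; the gap is of the size that rounding of the tabulated diameters could explain.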
The same concerns are valid for a pilot plant agitator operating at the same tip speed as the commercial agitator. When considering these difficulties involved in scale-up, there are two approaches that can be used. Both of these involve assistance from the outside sources. In one approach, the type of mixing that is required can be defined along with the physical properties of the material. This information along with any appropriate data from the smaller size vessel is then transmitted to the equipment supplier. The equipment supplier then designs the mixing system based on his experience and proprietary correlations. Some examples of the type of mixing that might be specified to the supplier are: • Perfectly mixed—This is defined as mixing that provides the exact same composition at every point in the vessel. Although this is easy to specify, it is very difficult if not impossible to achieve. If this is the specification, it is likely that the supplier will only propose an agitator that approaches this requirement. • Solids Suspension—In this case, it is desirable that the solids in a solid–liquid mixture would not settle to the bottom of the vessel or, if they did, they would be quickly resuspended. • No Solids Buildup in Fillets—This is defined as a less intensive mixing than “Solids Suspension”. There may be a limited number of solids laying on the bottom of the vessel, but solids would not continuously build up in the corners or fillets. • Uniformly mixed up to outlet line—This type of mixing provides an outlet concentration such that there is no buildup of one of the components of the mixture in the vessel. For example, in a polymerization reactor that is producing 10,000 lbs/h of polymer slurried in 20,000 lbs/h of a liquid, the outlet concentration and the mixture below the outlet line will always approximate 33% polymer by weight. In this example, if this degree of mixing is not obtained, the slurry concentration will vary with time. 
The concentration may peak out at a concentration that causes plugging or fouling. • Uniform mixing of liquids of different viscosities—It is often desirable to mix two liquids of widely different viscosities. The higher viscosity liquid or paste serves as the continuous phase and the lower viscosity one serves as the dispersed phase. If adequate mixing is not achieved, the dispersed droplets will coalesce and form larger particles, which will settle faster than desired and the mixture will become an obvious two-phase fluid. • Sufficient mixing to achieve adequate heat transfer coefficient—In some cases, heat transfer is achieved by a jacket on a vessel or internal coils. Although heat transfer may be adequate in the smaller vessel with almost any heat transfer coefficient, in the larger vessel, the heat transfer requirements will be more stringent. The amount of heat to be transferred will increase and the A/V ratio will decrease. This may require a larger heat transfer coefficient. These are just a few of the qualitative descriptions that will allow a mixing equipment supplier to utilize the experience and “in-house” correlations to provide a mixing system. In addition to this approach, there are techniques utilizing computational fluid dynamics (CFD) to analyze flow patterns of the dispersed phase (liquid or solid) in a proposed agitation system. This graphical representation of the mixing patterns in the proposed commercial vessel can then be compared to those calculated for the pilot plant using CFD. On the basis of this type of work, a mixing system that comes closest to duplicating the flow pattern in the pilot plant can be selected. This technique can also be used to consider mixing patterns in larger versions of the commercial plant. The CFD approach also provides a graphical representation of flow patterns in the vapor phase of a boiling agitated vessel. 
These vapor flow patterns will be helpful in predicting the particle size that will be entrained from a vessel where vapor disengaging from the liquid occurs. Unfortunately, the CFD software is expensive and requires a learning curve. However, there are engineering companies that specialize in using it and are available on a contract basis. Indirect Heated Dryer Another example of this type of equipment is the indirect dryer. In a typical application of this equipment, a solid-volatile stream is heated to cause vaporization of the volatile as the solids are conveyed through the length of the dryer. The dryer may be heated using steam or other heating medium in a jacket around the dryer. The dryer will be equipped with a conveying type rotor. This rotor is designed to move the solids from the dryer inlet to the dryer outlet. Although conveying is the primary purpose, the rotor may be heated by steam or other means. A sweep gas passing through the dryer in a counter-current direction to the solids flow is generally used. This sweep gas reduces the partial pressure of the volatile in the vapor phase and hence the concentration of the volatile in the vapor phase. The volatile removal will be limited by the residence time and the equilibrium between the volatile in the solid and the volatile in the vapor phase. The constants to be determined from the pilot plant equipment are the heat transfer coefficient, mass transfer coefficient, and the conveying capacity coefficient of the rotor. The concepts described in Chapters 1 and 2 that have been summarized here can be used to scale up from the small pilot plant equipment in the supplier’s laboratory to larger equipment. In this approach, as shown below in Eq. (1.1), the constant determined in the supplier’s pilot plant (C1) is used for the commercial plant. The constant (C2) is related to the physical size of the commercial facilities. 
The driving force (DF) is calculated based on the material balance values for the commercial plant.

$$R = C_1 \times C_2 \times DF \tag{1.1}$$

where

R = The kinetic rate of the process. The process could be heat transfer, volatile stripping, reaction, or any process step where equilibrium is not reached instantaneously. The kinetic rate is usually expressed in terms of the value of interest per unit time. The required rate will be much lower in the laboratory or pilot plant than in the commercial plant. As defined earlier, the ratio of the rate in the commercial plant to that in the smaller plant is the scale-up ratio.

C1 = A constant determined in the laboratory, pilot plant, or smaller commercial plant. As a general rule, this constant will not change as the process is scaled up to the larger rate.

C2 = A constant that is related to equipment physical attributes such as area, volume, L/D ratio, mixer speed, mixer design, exchanger design, or any other physical attribute. This constant will be a strong function of the scale-up ratio. As discussed later, it is not always identical to the scale-up ratio.

DF = The driving force, which relates to a difference between an equilibrium value and an actual value. This might be temperature driving force, reactant concentration, or volatiles concentration driving force. The driving force will always have the form of the actual value minus an equilibrium value.

One of the potential problems in determining C1 (the mass transfer constant or heat transfer coefficient) for the example of the indirect dryer is the question of reconstitution of the solid–volatile mixture. Normally, to simplify the shipping requirements, the solids to be used are shipped in a dry form and liquid is then added at the supplier’s facilities. This operation is referred to as reconstitution, and it raises the question: does the reconstituted feed perform the same way as a fresh feed sample?
(a sample of the solid containing volatiles that might be obtained from the laboratory or pilot plant facilities and used in the supplier's pilot plant). The dried solids used for reconstitution may have a lower porosity than solids in a fresh feed sample. If this happens, the volatile may be primarily on the surface and be much easier to remove. In addition, the pilot plant dryer using reconstituted feed may have a higher heat transfer coefficient than the commercial plant operating on fresh feed. This might occur because the surface of the solid is wet with volatiles when using reconstituted feed. With fresh feed, some of the volatiles are in the pores of the solid and the surface would not be as wet as that with reconstituted feed. The adequacy of reconstitution can be confirmed by use of a thermal gravimetric analyzer (TGA). This laboratory equipment can be used to measure the rate of volatile removal from a solid for reconstituted feed and fresh feed. If they are the same, it can be assumed that results using reconstituted feed will be equivalent to those with fresh feed. Thus the scale-up using the constant determined from the pilot plant dryer will be valid. If these rates from the TGA are not equivalent, some estimates of the mass transfer constant and heat transfer coefficient can be determined by a ratio of the TGA rate with fresh feed and reconstituted feed. Prior to detailed design, it may be necessary to either ship fresh feed to the supplier for use in his equipment or rent/purchase a pilot unit size dryer for use to test on fresh feed. The heat transfer coefficient is relatively easy to determine in the supplier’s pilot plant facility and generally can be directly scaled up to a commercial plant size. If the TGA results for fresh feed and reconstituted feed are different, the coefficient can be adjusted based on the TGA results described earlier. To determine the heat transfer coefficient in the pilot plant, the aforementioned Eq. 
(1.1) can be modified as shown in Eq. (7.1).

$$C_1 = \frac{R}{C_2 \times DF} \tag{7.1}$$

where

R = The rate of heat transfer in the pilot plant, BTU/h.

C1 = The heat transfer coefficient in the pilot plant, BTU/ft²-°F-h.

C2 = The area of the indirect dryer, ft².

DF = The log mean temperature difference between the heating fluid and the material being heated, °F.

The size of the commercial dryer (C2) can be determined by using the design rate of heat transfer, the demonstrated supplier pilot plant heat transfer coefficient (C1 adjusted as necessary based on the TGA results), and the commercial driving force. As indicated, the driving force is usually the log mean temperature difference between the heating fluid and the material being heated. The modification of Eq. (1.1) is shown in Eq. (7.2).

$$C_2 = \frac{R}{C_1 \times DF} \tag{7.2}$$

The residence time requirements can be determined in a similar fashion. Chapter 2 describes the use of the pseudo mass transfer coefficient in determining the residence time required in removing volatiles from a solid. The pseudo mass transfer coefficient is a constant that combines the actual mass transfer coefficient with the specific area of the solid (ft²/ft³). Chapter 2 also shows how the pseudo mass transfer coefficient can be obtained from the supplier’s pilot plant results. As with the heat transfer coefficient, the pseudo mass transfer coefficient determined from the pilot plant studies can be adjusted based on the TGA results comparing fresh feed to reconstituted feed.

The actual driving force for removing a volatile from a solid is more complicated than the driving force for heat transfer. It is described in Chapter 2. As shown in that chapter, it is a function of gas-to-solid ratio, volatile concentration in the incoming gas, and equilibrium between the gas and the solid. One of the key inputs that will be required from the process designer is the equilibrium relationship between the volatile in the solid and the volatile in the vapor. This was also discussed in Chapter 2.
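The heat transfer sizing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it reads Eq. (1.1) as R = C1 × C2 × DF, so that Eq. (7.1) becomes C1 = R/(C2 × DF) and Eq. (7.2) becomes C2 = R/(C1 × DF). The function names, the numerical values, and the way the TGA ratio is applied (a simple multiplier on C1) are hypothetical and are not taken from the text.

```python
import math


def log_mean_temperature_difference(dt_hot_end, dt_cold_end):
    """Log mean of the two terminal temperature differences, in °F."""
    if dt_hot_end <= 0 or dt_cold_end <= 0:
        raise ValueError("terminal temperature differences must be positive")
    if math.isclose(dt_hot_end, dt_cold_end):
        return dt_hot_end  # limiting value when both ends are equal
    return (dt_hot_end - dt_cold_end) / math.log(dt_hot_end / dt_cold_end)


def pilot_coefficient(rate, area, driving_force):
    """Eq. (7.1) as read here: C1 = R / (C2 * DF), in BTU/ft2-°F-h."""
    return rate / (area * driving_force)


def commercial_area(rate, coefficient, driving_force, tga_ratio=1.0):
    """Eq. (7.2) as read here: C2 = R / (C1 * DF), in ft2.

    tga_ratio (assumed form) = TGA rate on fresh feed divided by TGA rate
    on reconstituted feed; 1.0 means the two feeds behaved the same.
    """
    return rate / (coefficient * tga_ratio * driving_force)


# Hypothetical pilot plant run (illustrative numbers only).
pilot_df = log_mean_temperature_difference(150.0, 50.0)  # °F
c1 = pilot_coefficient(rate=30_000.0, area=10.0, driving_force=pilot_df)

# Hypothetical commercial duty, assumed to run at the same driving force.
area_same_feed = commercial_area(3_000_000.0, c1, pilot_df)
area_adjusted = commercial_area(3_000_000.0, c1, pilot_df, tga_ratio=0.8)

print(f"C1 = {c1:.1f} BTU/ft2-°F-h")
print(f"Commercial area, no TGA adjustment: {area_same_feed:.0f} ft2")
print(f"Commercial area, TGA ratio 0.8:     {area_adjusted:.0f} ft2")
```

With C1 and DF held constant, the required area simply scales with the heat duty, so a scale-up ratio of 100 gives 100 times the pilot area. A TGA ratio below 1.0 lowers the effective coefficient and increases the required area accordingly. In practice the supplier's standard dryer sizes and in-house correlations would still govern the final selection.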
The heat transfer area and residence time of the commercial dryer based on the supplier’s pilot plant are usually a joint effort between the supplier’s engineering force and the owner’s process designer. The supplier’s engineer may have correlations that predict that the heat transfer or mass transfer coefficients in a commercial size dryer will be less than that experienced in the pilot plant. In addition, the supplier will have standard sizes of dryers and he will select one of the standard sizes that approximate the required heat transfer area and residence time. When considering the indirect dryer, the conveying capacity can be changed by the speed of the rotor or the installation and/or pitch of paddles on the rotor. Unlike the heat transfer area and residence time, the solids conveying capacity is almost always the responsibility of the supplier. In addition to these specific examples, equipment suppliers are often in the best position to provide the scale-up of other mechanical equipment such as extruders, solids mixers, belt conveyors, and “live bottom bins.” These bins are storage hoppers that are designed so that they flow uniformly, that is, each particle is in the hopper for the average residence time. Summary of Working With Suppliers of Specialty Equipment Although it would be entirely possible to only provide a supplier of specialty equipment a request for quote (RFQ) and allow him to provide a final design, it will be helpful to work with him to understand the basis for his scale-up. This chapter provides directions for a semi-theoretical approach to scale-up of this type of equipment. 8 Sustainability Abstract Sustainability has multiple meanings. 
It is best known as meaning: “What impact does this new development have on the capability of the planet to continue in existence?” A dictionary definition is “The quality of not being harmful to the environment or depleting natural resources and thereby supporting long-term ecological balance.” However, when considering process development, this definition should be expanded to include all areas that might cause the commercial facility to have less than the projected lifetime and return on investment.

Keywords: by-product; corrosion; crystallization; LPG; return on investment; sustainability

As the project is developed, process sustainability must include evaluation of the following as well as environmental considerations:

• Long-term changes in existing technology.
• Long-term availability of the feeds and catalysts.
• Long-term cost and availability of utilities.
• Long-term waste and by-products disposition.
• Long-term operability and maintainability of the process facilities.

Long-Term Changes in Existing Technology

Many process developments are evaluated against either existing technology or an understanding of current technology developments. When evaluating the sustainability of a new technology development, the potential changes to the technology that the process development is being evaluated against must be considered.
Examples of this are present in businesses as diverse as the chemical and oil sands industries. When considering the chemical industry, para-xylene is a valuable raw material in the production of polyesters. Although it is readily available from refining and processing crude oil, it cannot be separated by fractionation because the boiling points of para-xylene and meta-xylene differ only slightly. In the middle of the 20th century, a crystallization process was developed to recover and purify the para-xylene. In the last part of the 20th century, proposals to improve the operation of the existing crystallization process for producing para-xylene in the chemical industry were dwarfed by the new Parex adsorption process developed by Universal Oil Products (UOP). In this case, no development was started to improve the crystallization process because economic information on the new UOP process became available. Because of its cost competitiveness, this process is now utilized to manufacture most of the para-xylene produced in the world.

The Canadian Oil Sands industry provides another example of the need to understand competitive technologies to develop sustainable technology. Oil is produced from the oil sands by a multiple step procedure. The rock containing the oil is first mined and conveyed to the oil recovery operation. In this operation, the oil is released from the rock using a hot water extraction process. The residual sands and water are allowed to settle. Unfortunately, the settling is not complete and an interface layer of sand and water called “tailings” is also formed. These tailings are being accumulated in large ponds. The Canadian government and oil companies operating in the Canadian Oil Sands are working to develop technology to eliminate the large volume of these tailings.
The tailings create potential problems such as leakage of toxic water from the ponds, continued loss of land with the need to build more ponds, and costs associated with maintaining surveillance of the ponds. Before launching a process development operation to mitigate the problems associated with these tailings, an individual company needs to know how their proposed technology compares to competitive technologies that are available and are being developed. In both of these examples, the economics can be evaluated using “study designs” to determine the capital expenditures (CAPEX) and operating costs (OPEX) for the competing technologies. The development of CAPEX and OPEX will require a knowledge of each technology. This can be accomplished by developing a simplified process design. This is described in more detail in Chapter 9. Long-Term Availability and Cost of Feeds and Catalysts Laboratory studies associated with new process developments often use only small quantities of reagent grade chemicals. The commercial availability of these chemicals should be reviewed at an early point in process development. In addition, the long-range availability of the chemicals should be considered. Some reasons that the feeds and catalysts might not be available in the future include: • Safety, health, and environmental (SHE)—This is different than the process scale-up criteria discussed earlier. It is possible that some of the process reagents used in laboratory process development might not be available on a commercial scale, because there is not a manufacturing facility that is willing to take a SHE risk in the large-scale production of these chemicals. • A parallel example of this concern might be a chemical that is currently available on a commercial basis from a sole supplier. However, at some point, the chemical becomes unavailable because the manufacturer goes out of business, has a disaster, or makes a business decision not to produce the chemical. 
• Long-term cost of the chemicals being utilized should also be considered. Changes in utility prices or feedstock prices may impact the supplier(s) of the chemicals being used and the prices of the material.

In addition to these availability/cost considerations, the commercial grade of many chemicals has a different composition than the reagent grade. Reagent grade n-hexane, which is often used as a diluent, is much purer than most grades of commercially available solvent grade hexane. The acceptability of commercially available hexane should be confirmed in bench-scale studies.

Long-Term Availability and Cost of Utilities

The current development of most processes has a component of minimizing utility consumption. This will continue to be a consideration. In addition to cost, the impact of availability of the utilities on the commercial project operations should be considered. For example, the availability of relatively pure water may become limited. This may drive the need to reuse wastewater and hence increase the cost of a currently cheap utility. As the process development progresses, the availability of either steam or electricity may need to be evaluated to aid in the selection of the drivers for pumps and compressors. This driver selection is rarely needed in the early process development stages. It is also possible that the cost of a utility may decrease over time. The impact of the “fracking” process on the availability and cost of liquefied petroleum gas (LPG) and natural gas is an example of this. Thus both upward and downward cost/availability factors should be considered.

Disposition of Waste and By-Product Streams

During the early process development phase, it is often easy to ignore the disposition of waste and/or by-product streams or to assume that they can be disposed of through a contractor. However, as indicated earlier, the generator of the waste is subject to the “cradle to grave” regulations.
These “cradle to grave” regulations make the generator of the waste stream responsible for the technique that is utilized to dispose of the waste. This regulation requires the generator to have in-depth knowledge of how the waste contractor will dispose of the stream. This is true even for disposal by incineration. Although this technique might seem to be an acceptable means of disposal, the implicit assumption is that all of the waste will completely burn and generate only carbon dioxide and water. This assumption needs to be confirmed by understanding the combustion process and, most likely, the actual temperature and residence time in the waste disposer's furnace.

Besides incineration, injection of waste streams into deep well underground deposits is often used. This technique needs careful consideration, as concerns have been expressed about contamination of the potable water supply from disposal wells in the vicinity of potable water wells. Concerns have also been expressed about earthquakes associated with the high pressure used in the deep well injections associated with waste disposal.

Although the disposition of solid waste material in either municipal or commercial landfills has been used, it presents problems. Toxic materials in the waste can migrate to the surface of the material due to a rising water level, density differences, or diffusion. In addition, the presence of toxic materials in a landfill can create serious health problems if development of this area or nearby areas occurs.

By-product disposition is considered to be separate from waste disposal, because it involves commercial use of a stream from the process being developed. In a chemical plant located near a refinery, a by-product stream may be added to a fuel stream (LPG, gasoline, heating oil, or fuel oil). Although this sounds completely harmless, the impurities in the by-product stream may create problems for the downstream consumers of the fuel stream.
For example, if a propane stream that is added to an LPG stream contains a chlorinated hydrocarbon, a small quantity of this hydrocarbon may create corrosion problems in the LPG consumers' facilities.

Operability and Maintainability of Process Facilities

One of the most important aspects of developing the design of a new process is to define the operability and maintainability targets. A process that is to have a high service factor (e.g., a commercial unit) must have high operability and maintainability targets. However, a process that is still in the development stage may well have a lower service factor target and hence lower operability and maintainability targets. Examples of development-stage facilities that do not require a high service factor are pilot plants and/or semi-works. The lower operability and maintainability targets will allow for a reduction in initial investment. For example, pumps might not be provided with a spare. This would reduce investment at a loss in service factor.

A process development that does not consider the operability and maintainability of the facilities will be difficult to bring into commercial reality. A pilot plant or semi-works that does not consider the possibility of a moderate operability/maintainability target may be so expensive that the project is cancelled. On the other hand, a commercial plant without a good operability/maintainability factor will be difficult to operate and will almost certainly result in an economic failure.

A plant with good operability/maintainability is one that operates at a high service factor (annual hours of operation/actual hours per year) with infrequent downtimes. These two criteria are both separate and related. A plant that has a high service factor may have frequent downtimes, each of very short duration. However, each downtime may require an extended period to get back to full rate on-specification production.
The same service factor can also be obtained by infrequent downtimes, each of a long duration. A better measure of operability is probably what is referred to as “mean time between failures (MTBF).” In the two examples, the initial one (frequent downtimes) will have a lower MTBF than the second example (infrequent downtimes) even though the service factors are the same.

Good operability/maintainability of the scaled-up process development can be achieved by:

• Balanced Process Design: The process design should have a balance between the cost and desired operating aspects. This can be achieved by the development of accurate heat and material balances, the consideration of the need for spare equipment, and the consideration of start-up requirements, to name a few areas. The accurate heat and material balances will allow the designer to minimize the safety factors often used in the design work. The consideration of start-up is necessary, because a design that considers only steady-state operation may be difficult to start up, thus increasing the length of time from completion of mechanical work to actual production during both the initial start-up and subsequent start-ups.
• Careful Selection and Review of Process Equipment: When considering process equipment, both the standard type of equipment and unique “one of a kind” equipment will require review. The amount of review of the equipment will depend on the stage of the project. At an early stage, only the unique pieces of equipment will require review. As the project progresses into detailed mechanical design, the equipment review should include all of the equipment.
• In reviewing the unique equipment, the commercial experience with this equipment should be carefully considered. This may require reviews both with suppliers and with the suppliers’ customers.
• Consideration of corrosion: Corrosion can be caused by both primary and trace components involved in the process.
These components can be associated with feed or catalyst streams, the products of any reaction, or by-products formed in the process. Because the presence of materials that cause corrosion might dictate the use of specialized materials of construction with higher cost, this possibility should be considered early in the project. Corrosion can be measured in a pilot plant or a commercial plant by the use of thin metal strips suspended in the process fluids. They can be removed at some frequency and the change in weight measured to allow the determination of corrosion rate. This will provide a reality check on the anticipated corrosion rate.

The assurance that the design will achieve the operability and maintainability targets can be obtained through operability/maintainability reviews as the process development proceeds. As a general rule, these reviews will require more time, more people, and more detail as the project proceeds.

Summary

From the preceding paragraphs, it is easy to conclude that sustainability means more than just an isolated area associated with caring for the environment of the planet. Although this is important, all the areas discussed above also must be considered.

9 Project Evaluation Using CAPEX and OPEX Inputs

Abstract

When a new development appears to be promising after considering chemistry, engineering, and/or product attributes, there is always the financial question of the impact of the implementation of this project on the profits of the company. This is true whether the project is a laboratory development, plant improvement development, or a new/improved product development. Project evaluation is an important part of each of these developments. To evaluate a project, it is mandatory that the estimates of both capital expenditures (CAPEX) and operating expenditures (OPEX) be developed. CAPEX is basically the cost of building the facilities.
In addition to the construction costs, it will also include the engineering cost for the project. OPEX is the operating cost of the facilities. It includes items such as utilities, operating labor, maintenance, overheads (administrative, accounting, among others), taxes, and depreciation.

Keywords: business; determination of business viability; engineering; provide research guidance; study design for development of CAPEX and OPEX; taxes

Introduction

This chapter does not provide a complete treatment of either CAPEX or OPEX estimating procedures, but it provides some concepts that can be used in project evaluation. This evaluation is usually done before any large project expenditure is committed. There will normally be several points in the project where this is done, such as prior to commitment for the following: additional technical resources, funds to build a pilot plant, funds to begin detailed engineering design, or funds to build a commercial plant.
Some companies have developed an elaborate gate system that requires a project to meet certain criteria before passing through a gate and proceeding to the next level of expenditure. This book only deals with the general technical approach to project evaluation and it does not deal with the gate system. The development of a study design as discussed later is the key component for determining CAPEX and OPEX.

In addition to describing how CAPEX and OPEX are developed, this chapter describes the business, research, and engineering points of view that are often involved in answering the question: “Is this development project commercially viable?” The difference in viewpoints almost always manifests itself in conflict and disagreements related to the next step in the process development. The likely viewpoints are summarized below:

• Research viewpoint (including plant improvement projects): The researcher/engineer/inventor of the new development will almost always desire to have additional time to continue studying and progressing the technology of the project. He will not see the need for any sort of evaluation until additional development is completed. He will be concerned that an evaluation of the project too early will result in a negative decision because of the preliminary stage of development. One researcher once remarked in a sarcastic manner: “You engineers think that you can calculate anything.” The meaning of this remark was that engineers calculate without having sufficient data and true scientists develop data.
• Engineering viewpoint: This area covers the process design and the project engineering. The process designer(s) will be responsible for completing the study design. As such, they will utilize data that have been generated by the researcher. However, at the stage of research at which most study designs are initiated, there will not be sufficient data available to complete a final process design.
Thus the process designer(s) will have to utilize their experience and/or first principles to complete this task. First principles are those principles that while not being developed or studied in the current project have been proved historically. Examples of this might include the impact of reaction temperature on reaction rate and the rate of the heat transfer coefficient as a function of velocity. • The project engineer will normally be responsible for both the cost estimating and timing of the project. Although these responsibilities are often handled in two separate departments, they are combined here as a single viewpoint. The combined goal of the project engineer can be expressed in the clichés “On Budget and On Time” or “Under Budget and On Time”. He often has the entire project from laboratory experiments to commercialization on a “critical path diagram”. A critical path diagram is a document that allows visualization of where the key time limiting elements of the project are. He will also be involved in determining the amount of contingency that should be included in the CAPEX at any point in the project. • Business viewpoint—The business manager will often serve as the project leader and as such he will be concerned with all phases of the project. In addition, he will be heavily involved in developing the answer to the question “Is the project economically viable?” Project viability depends on both current situations and a projection of costs and technology into the future. The business viewpoint almost always includes the concepts that the project needs to be brought to commercialization as soon as possible and that to obtain approval on the project, the CAPEX should be as small as possible. As it can be observed from the various viewpoints, there will often be differences of opinion between the researcher, engineers, and business manager. The development of the study design will often help resolve these differences of opinion. 
For example, if the CAPEX appears to be excessive, an examination of the study design and cost estimate will help explain why the cost seems excessive. This will allow the examination of possible routes to reduce CAPEX. This is explained in more detail in Chapters 9–11.

CAPEX and OPEX

It should be obvious that much of the project evaluation phase revolves around the development of CAPEX and OPEX as well as a project schedule. As CAPEX includes an escalation (inflation) factor, determining from the project schedule when the plant construction can begin is important. This project schedule should show the relationships between each organization associated with the project and the critical decision points. Fig. 9.1 shows a simplified critical path diagram for a “fast track” project. As shown in this figure, the development of an economic evaluation for the project is a key piece of data. The CAPEX and OPEX that are part of this evaluation are likely on the critical path.

Figure 9.1 Fast track schedule.

The “fast track” project is defined as one that progresses as fast as possible and still meets the project projections of cost and product quality. It is often what is desired in the real world. However, it is rarely achieved in the real world, where decisions are made slowly, and gate reviews, safety reviews, and a host of other reviews slow down the projects. Although it may be difficult to achieve, a “fast track” time line is shown here to illustrate that the engineering work can and should start before the research efforts are finished.

An examination of Fig. 9.1 will show that there are three obvious paths during project development. There is a path referred to as Product and Market Evaluation. This is especially necessary for a new product. But it may also be necessary for an existing product where a change in the process or raw materials might change the attributes of the product. Then there is a path that is referred to as Research/Development.
Many new product or process innovations begin in this area. At an early stage of the project, there may only be one or two researchers working in a corporate or academic bench-scale laboratory. The third path is an engineering path, which would include both process designers working on the study design and eventually on the detailed process design as well as project engineers developing the cost estimate and the project schedule.

Fig. 9.1 shows the interrelationship between the various paths in a simplified fashion. It also shows the decision points with numbers in the clear diamond shaped markers. These decision points and work completion points are as follows:

1. On the basis of bench-scale experiments and product/market knowledge, an interesting development has been discovered. At this point, the project staffing should be as shown in the table in Fig. 9.1, that is, the process research, product evaluation, and process design efforts will each require 1–3 people. At the completion of the study design, an additional 1–2 people should be added to the project team to develop the project cost estimate (CAPEX) and the project schedule. Although only a limited amount (if any) of product may be available for an evaluation, the knowledge of available products and the market indicates that the material from the bench-scale development will be of interest in the market place.

2. After the CAPEX is developed, an economic project evaluation can be completed. This economic evaluation indicates that the project appears to be economically attractive. Decision point 2 will require input from the study design, product evaluation team, and the process research team. Besides input related to project evaluation, an analysis of the study design will allow determining areas of uncertainty that will require additional research efforts. If the project continues to look favorable, development work associated with the study design, process research, and product evaluation will continue.

3.
In addition, for most large development projects, consideration of the need to provide pilot plant facilities will be important. If the decision is made to consider a pilot plant, additional resources should be added to design a new pilot plant or investigate modifying an existing pilot plant. Resources should be added to the project engineering area to handle cost estimating and scheduling efforts for the pilot plant. At this point, additional information should be available relating to the costs of the pilot plant, of the research aimed at the areas of uncertainty highlighted by the study design, and of the product/market evaluation. Depending on these factors, a pilot plant may or may not be necessary. Fig. 9.1 assumes that a pilot plant is necessary to completely demonstrate the process. This figure also makes the assumption that the primary need for the pilot plant is to firm up only a few areas of the process and to make product for market development. On the basis of that assumption, the figure shows that the preparation of the design basis memorandum and detailed process design of the commercial plant can continue while the pilot plant is being built and operated. The final decision point associated with appropriating funds to build the commercial plant is shown as a cross-hatched diamond. After building and operating the pilot plant, the process design can be finalized. If the project is really on a fast track as shown in Fig. 9.1, it is likely that the commercial plant and pilot plant will both be operating at the same time. This will allow additional process and product innovations to occur quickly. Fig. 9.1 is not meant to show the complete project schedule. It only shows a developmental project on a fast track to the finalization of the process design. As such it does not cover detailed engineering, construction, and start-up. CAPEX and OPEX are developed from a study design. 
The study design is also referred to as a conceptual design, a preliminary design, or a “first pass” design. The deliverables of a study design should include a flow sheet and simplified preliminary equipment specifications for all major process equipment such as fractionation towers, reactors, drums, settling drums, mixers, pumps, and compressors. The flow sheet will not normally include piping sizes, spare pumps, spare compressors, or instrumentation. However, the spares will be indicated in the equipment list and on the flow sheets as “A&B.” Only the main piping will be shown, that is, by-passes, bleeders, vents, and so on will not be shown. The equipment specifications will include vessel sizes, type of internals, pump/compressor sizes, mixer horsepower, and materials of construction. Fig. 9.2 shows a typical study design flow sheet. In addition, Table 9.1 shows the equipment specifications for Fig. 9.2. This figure shows a very simple fractionating tower. The purpose of this figure is to illustrate what kind of information should be shown in the study design flow sheet. The study design must also include a simplified heat and material balance for the envisioned process.

Figure 9.2 Typical flow diagram for study design.

Table 9.1

| Vessel | D × L (ft) | Internals | Design Pressure (psig) | Design Temperature (°F) | Material |
|---|---|---|---|---|---|
| T-1 | 4 × 90 | 50 sieve trays | 200 | 400 | c-steel |
| D-1 | 4 × 6 | None | 200 | 400 | c-steel |

| Heat Exchanger | Area (ft²) | Tube Side Design (psig) | Tube Side Design (°F) | Shell Side Design (psig) | Shell Side Design (°F) | Material |
|---|---|---|---|---|---|---|
| E-1 | 1500 | 150 | 200 | 200 | 400 | c-steel |
| E-2 | 1000 | 200 | 400 | 250 | 400 | c-steel |

| Pump | Flow Rate (gpm) | Delta P (psi) | Design Pressure (psig) | Design Temperature (°F) | Motor (HP) | Material |
|---|---|---|---|---|---|---|
| P-1 A&B | 250 | 75 | 300 | 400 | 20 | c-steel |
| P-2 A&B | 20 | 50 | 300 | 400 | 5 | c-steel |

From flow sheets similar to that shown in Fig. 9.2 and tables similar to Table 9.1, a cost estimate can be made for the process-related cost of the project.
The cost of the process-related facilities is often referred to as “Process-Related On-Site Cost” or in simpler terms—“On-site Cost.” In addition to the process-related “on-site costs”, there are often requirements to install utility and auxiliary facilities. The cost of these is often included in a category referred to as “off-sites”. These costs are almost always site-specific and project-specific, that is, a new site will require complete new “off-site” facilities. If the project is installed at an existing site, there may be some existing spare capacity available. Of course, there are developments that require either no additional or only minimal “off-sites” additions. The CAPEX of these off-site facilities can be estimated in three different ways: • Detailed Estimate—As the project is developed further, the exact details of the “off-sites” will be known permitting a detailed cost estimate. This is normally completed at the end of the process design. This is obviously the most accurate approach, but can only be done once the process design is finished. • Factored Estimate—At the early stage of project development, there is rarely enough information available to develop any type of detailed “off-site” estimate. In this case, the “off-site” investment cost is based on a factor. It is generally assumed that “off-site” cost is in the range of 25%–30% of the process-related on-site cost. The cost of fuel used to generate the utilities must be estimated in addition to the factored investment. • Combined Investment/Operating Cost—For project evaluation, the cost of utilities (both CAPEX and OPEX) can be included in the OPEX by known factors for a specific site. In this approach, there is no attempt made to estimate the investment required for utilities. 
For example, if it is known that each increment of additional steam generated costs a given amount of investment, a return on investment (ROI) can be added to the operating cost of producing the increment of steam to give a total cost of steam to the new facility. As indicated earlier, this is site-specific and as such each site may have a list of utility cost to be used for project evaluation. Although this may provide a basis for project evaluation, the investment for the additional utilities that will be required will have to be provided separately when the project funding is requested. In addition, this approach will not be satisfactory for some of the other off-site items such as loading or unloading facilities. These will have to be estimated using the factored approach or by a detailed cost estimate. When considering these three alternates for early project evaluation, the combination of simplicity and accuracy dictates that the factored estimate approach is likely the best approach. OPEX can be broken down into the components of fixed cost and controllable cost. Fixed cost is the cost that does not change regardless of production rates. It includes items such as operating labor, maintenance labor and material, overhead, depreciation, ad valorem taxes, and ROI. Some possible fixed cost factors that can be used for project evaluation are shown below: • Operating Labor—If detailed estimates are not available, an annual factor of 2% of CAPEX is reasonable. If the operating labor is developed based on “operating posts”, it is generally assumed that there are 4.6 operators per post. This covers the number of shifts and the need for extra people to be available for relief. • Maintenance—Maintenance labor and material can be estimated at an annual cost of 4%–6% of CAPEX. • Plant-Related Overhead—This category can be estimated at an annual cost of 2% of operating labor. 
• Depreciation: The rate of depreciation is often determined by governmental and accounting regulations. A typical value for a chemical facility might be 10%/year. A pharmaceutical facility with an anticipated short “lifetime” might have a higher rate of depreciation.
• Ad Valorem Taxes: These are the taxes paid to the local governments and are usually estimated at 1%/year based on CAPEX.
• ROI: The desired ROI depends on the type of project and on considerations such as technology sustainability, project risk, and alternative uses of funds. A typical project for a chemical plant should have an ROI of 25%–30%. A pharmaceutical facility might require a higher ROI and a refinery a lower one. As a general rule, the projects with a greater risk will require a higher ROI. Although ROI is not truly an operating cost, it is normally included in OPEX to allow estimates of the price that must be charged for a new product to provide the desirable economics. For early project evaluation, it is generally included by the equation shown below: (9.1) where f = the desired ROI.

Controllable costs include feedstock, catalyst, chemicals, and utilities. The cost of the feedstock, catalyst, and chemicals is based on the material balance. The utility costs are based on the heat balance and equipment specifications. The electrical demand is based on agitators, pumps, compressors, and other rotating equipment. A small contingency for lighting, control room, and instrumentation should be included.

The project being considered should be evaluated based on the anticipated ROI, the risk involved, and the sustainability factors discussed in Chapter 8. As indicated earlier, projects that have a higher risk will require a higher ROI. The ROI can be evaluated by one of the following criteria:
• The anticipated price of the product produced by the project can be estimated as the sum of the fixed (including ROI) and controllable costs.
This is the technique described earlier. The price can then be compared to competitive products and a decision made whether to proceed or not. • The ROI can be calculated based on a given price that is based on the prevailing market prices. • The ROI can be determined by first estimating the total cost (including ROI) of producing the targeted product from both existing and possible future competitive technologies. Then knowing the price developed with this approach, the ROI for the new process can be estimated. In addition to comparing the total cost of the new and competitive technologies, the controllable costs of competitive technologies and the technology being developed should be developed. This will allow understanding how the proposed technology will perform economically in poor economic times. This approach has great value even at an early stage of product development. • For a plant improvement project, the ROI can be calculated based on project cost savings. When considering the factors that go into project evaluation (CAPEX and OPEX), CAPEX is generally the one that creates the greatest area of uncertainty and/or error. Although the expert cost estimator will react negatively to the superficial treatment given here to cost estimating, the purpose is not to educate people on cost estimating, but to show the importance of different factors that are important to CAPEX. When considering CAPEX, the errors or uncertainties are associated with the following in order of importance: • Number of pieces of equipment—When the study design flow sheet is completed, how many pieces of equipment are present. • Materials of Construction (if other than carbon steel is used)—The materials of construction can impact both the cost of the material and the cost of installation. • Equipment type—A reactor is generally more expensive than a pump. But even inside of different equipment groups, there can be differences in cost. 
• Equipment Size: Larger equipment of the same complexity is more expensive.
• Equipment Sparing: Installed equipment sparing, while it may be necessary, leads to more complexity in installation in addition to higher equipment costs.

The importance of having a flow sheet from the study design with the correct number of pieces of equipment shown is illustrated by the very rough cost estimating technique where the number of pieces of equipment is multiplied by a factor to obtain the CAPEX. This factor provides a total erected cost (TEC) based on a count of pieces of equipment shown on the flow sheet. Using this technique, there is no differentiation between small pumps and large reactors. This technique emphasizes the need for accurate heat and material balances. These balances will generally uncover the need for additional equipment that might not be included initially.

The second factor, the materials of construction, is only important if the planned material is not carbon steel (c-steel). The material of construction is important because it can impact the entire process. Stainless steels are 2–3 times the cost of c-steel and higher alloys (Inconel and Hastelloy) are 5–10 times the cost of c-steel.

The type of equipment is obviously important. When considering the various types of reactors that are in use, an autorefrigerated reactor with an overhead condenser will be more expensive than a reactor that consists of jacketed pipe. A reactor with a pump-around heat exchanger circuit will probably be more expensive than either one of the two. Inside a specific grouping, the size of equipment is the next CAPEX determining category. For example, a reactor with a small pump-around exchanger is of lower cost than the same reactor with a large pump-around exchanger.

Sparing philosophy, while often assumed to be that every pump should have an installed spare, can be costly enough to justify considering not providing an installed spare.
This is especially true for a facility where it is not important to have a high service factor. Pilot plants and small semi-works often fit the category of not requiring a high service factor. The considerations described here will often help making decisions on the process flow diagram and raw material decisions. If a jacketed pipe reactor will handle the heat load and it is truly cheaper than other reactor designs, it should be included in the flow sheet. In addition, the use of a different catalyst might allow use of c-steel rather than a high alloy material. In estimating the CAPEX for a project in the development stage, there are three factors that must be added to the cost of engineering, overhead, equipment, and installation. These three factors are escalation, project contingency, and process contingency. The escalation factor is added to allow the time lapse between the start of project construction and the current point in time. This factor allows the increasing cost of material, labor, and engineering. Each of these escalation factors will have different numerical values. These numerical values will vary widely as the economic conditions in the country and as the time between the estimate and the project completion change. A second factor is the project contingency. This is a factor to make allowances for the occurrence of events beyond the control of the project team that occur during detailed engineering or construction. This contingency might include new or unknown regulatory requirements, soil conditions, labor strikes, unanticipated changes in economic conditions, changes in currency exchange rates (valid if equipment is being purchased in another country), and a host of other events. As a general rule, this category only includes items associated with detailed engineering, equipment purchasing/transportation, and construction, that is, this category does not allow significant changes in the process design. 
The third factor relates to changes in the process design. At the study design stage of a new development, history has shown that the cost is often underestimated because of the unknown factors. The cost estimator will normally handle this historical fact by adding a “Process Development Contingency” or “Emerging Technology Contingency”. This contingency is different and separate from the project contingency and escalation factors. This process development contingency is included to cover such items as additions to the preliminary process flow sheet, reoptimization of the reactor design based on the final research experiments, additional fractionation stages as determined by the final vapor–liquid equilibrium (VLE) data, and other items not considered fully in the study design. The area of additions to the process flow sheet study design includes components that the additional research uncovered. An example of this might be the need for an additional purification step of a feed component. The next chapter (Chapter 10) covers this in detail. As noted in this chapter, the Emerging Technology Contingency may have a significant impact on the CAPEX Project Timing and Early Design Calculations As shown in Fig. 9.1, for a “fast track” project, the actual study design will almost certainly be started before the research is completely finished. This introduces three questions as follows: • How does one do a study design without all the data? • When should a study design be started? • At what point can the study design be “frozen”? The frozen point is the terminology used in the engineering world to indicate that past this point, no significant changes will be made in the design. Obviously these three questions are strongly related. However, overriding these considerations is the often-overlooked need to develop an accurate simplified heat and material balance along with an acceptable process flow diagram. This should be done prior to any consideration of data availability. 
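The cost factors quoted earlier in this chapter can be strung together into a rough screening calculation. The sketch below is illustrative only. The off-site factor (25%–30% of on-site cost), operating labor (2% of CAPEX), maintenance (4%–6% of CAPEX), overhead (2% of operating labor), depreciation (10%/year), ad valorem taxes (1%/year), and ROI (25%–30%) come from the text. The escalation and contingency percentages, the function names, and the input values are hypothetical placeholders. Two further assumptions are made: the three CAPEX adders are applied additively to the on-site plus off-site cost, and the annual ROI charge is taken as f × CAPEX.

```python
# Screening-level CAPEX and product price build-up.
# Factors marked "text" are quoted in the chapter; all others are
# hypothetical placeholders chosen only to make the example run.

def capex_estimate(on_site, off_site_factor=0.25, escalation=0.10,
                   project_contingency=0.10, process_contingency=0.20):
    """Total CAPEX from on-site cost, factored off-sites, and three adders.

    Assumption: the adders are fractions of (on-site + off-site) cost and
    are summed rather than compounded.
    """
    base = on_site * (1.0 + off_site_factor)          # text: 25%-30% off-sites
    adders = escalation + project_contingency + process_contingency
    return base * (1.0 + adders)


def annual_fixed_cost(capex, roi=0.25, maintenance=0.05):
    """Annual fixed cost, including the ROI charge, from the chapter's factors."""
    operating_labor = 0.02 * capex                    # text: 2% of CAPEX
    maintenance_cost = maintenance * capex            # text: 4%-6% of CAPEX
    overhead = 0.02 * operating_labor                 # text: 2% of operating labor
    depreciation = 0.10 * capex                       # text: 10%/year
    ad_valorem = 0.01 * capex                         # text: 1%/year
    roi_charge = roi * capex                          # assumption: f x CAPEX
    return (operating_labor + maintenance_cost + overhead
            + depreciation + ad_valorem + roi_charge)


def required_price(capex, controllable_per_year, production_per_year, roi=0.25):
    """Price per unit of product = (fixed + controllable cost) / production."""
    fixed = annual_fixed_cost(capex, roi=roi)
    return (fixed + controllable_per_year) / production_per_year


if __name__ == "__main__":
    # Hypothetical project: $40 million on-site cost, 400 million lbs/year.
    capex = capex_estimate(on_site=40e6)
    price = required_price(capex, controllable_per_year=120e6,
                           production_per_year=400e6)
    print(f"CAPEX = ${capex / 1e6:.1f} million, required price = ${price:.3f}/lb")
```

Rerunning the calculation with a larger process contingency shows directly how much the required product price depends on the uncertainty assigned to a new technology.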
When considering the question of data availability, the key operational steps in the process should be considered. These key steps are likely to be the reaction system and any specialized equipment data and/or quotes based on a supplier’s pilot plant. It is likely that reaction/reactor data will be on the critical path and must be approximated in the initial study design. The data for sizing and optimizing reactor systems can usually be obtained with a minimal number of laboratory experiments, followed by data analysis and optimization of reaction conditions. This can be done by using the first-order kinetics with respect to the reactants and typical Arrhenius constants. For reactions involving gases, an optimization to determine the optimum pressure can be performed knowing the effect of pressure and inerts on reactants density. Data for the heat of reaction and equilibrium between reactants and products can be approximated from the literature or from similar processes. Often, VLE data are required. Although these data may need to be confirmed prior to completing the final process design, literature data or calculation techniques are generally adequate for the initial study design. As indicated earlier in Chapter 6, the reaction by-products are often not known and must be determined by laboratory experiments and analytical work. However, an examination of the chemistry and likely VLE data will usually point to the possible by-products that might be key fractionation components. These considerations indicate that a study design can be initiated with a minimal amount of research data using the available literature, experience, and calculations. Thus a study design can be initiated when the project concept along with some early reaction data is available. 
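The idea of extending a single laboratory data point with first-order kinetics and a typical Arrhenius constant can be sketched as follows. This is a minimal illustration, not the author's procedure. The reference rate constant, the activation energy, the target conversion, and the function names are all hypothetical, and the residence-time expression is the standard one for a first-order reaction in a single ideal CSTR.

```python
import math

R_GAS = 1.987  # gas constant, cal/(mol*K)


def fahrenheit_to_kelvin(t_f):
    """Convert a temperature from degrees Fahrenheit to kelvin."""
    return (t_f - 32.0) * 5.0 / 9.0 + 273.15


def rate_constant_at(k_ref, t_ref_f, t_new_f, activation_energy):
    """Scale a first-order rate constant with the Arrhenius relationship.

    k_ref is the rate constant measured at t_ref_f (deg F);
    activation_energy is in cal/mol.
    """
    t_ref = fahrenheit_to_kelvin(t_ref_f)
    t_new = fahrenheit_to_kelvin(t_new_f)
    exponent = -activation_energy / R_GAS * (1.0 / t_new - 1.0 / t_ref)
    return k_ref * math.exp(exponent)


def cstr_residence_time(k, conversion):
    """Residence time for a first-order reaction in one ideal CSTR:
    tau = X / (k * (1 - X))."""
    return conversion / (k * (1.0 - conversion))


if __name__ == "__main__":
    # Hypothetical single laboratory point: k = 1.0 1/h at 150 deg F,
    # with an assumed activation energy of 12,000 cal/mol.
    for temp_f in (140, 150, 160, 170):
        k = rate_constant_at(1.0, 150.0, temp_f, 12000.0)
        tau = cstr_residence_time(k, conversion=0.6)
        print(f"{temp_f} F: k = {k:.2f} 1/h, residence time = {tau:.2f} h")
```

The assumed activation energy dominates the result, which is one reason the estimated temperature dependence needs to be confirmed experimentally before the design is finalized.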
If a small-scale demonstration of specialized equipment is required for the process, the equipment supplier will either need adequate amounts of the material to be used in pilot plant tests or experience with similar material. A study design should be “frozen” in time for the estimate of CAPEX to be finished and the results provided for the decision point 2 shown in Fig. 9.1. When considering reactor design, there are various types of designs. In the design of polypropylene and some polyethylene reactors, a loop reactor has often been the optimum arrangement. A loop reactor consists of a double pipe with process fluid in the inside pipe and cooling water in the annulus area between the two pipe walls. The fluid is pumped around the inner loop with a large circulating pump. The high velocity of the process fluid in the inner pipe promotes an enhanced heat transfer coefficient. The reactor can be either vertical or horizontal. Fig. 9.3 shows a simplified flow sheet of a loop reactor. This style reactor is used in the example problem. Figure 9.3 Vertical loop reactor. The optimization of reactor conditions during a study design can take a wide variety of forms. For the loop reactor that will be considered here, the reactor residence time will increase as the length or number of loops increases. However, the size of the circulating pump will also increase as the length of the flow path increases. The increased length and pump size will increase the CAPEX. As the residence time increases, the catalyst efficiency also increases, which will decrease OPEX. The procedure for the determination of reactor size, which will then lead to the process optimization, is shown in Table 9.2. In this procedure, only a single laboratory experiment is required along with first principles. Table 9.3 shows the results of these calculations. In addition, Fig. 9.4 shows this in a graphical fashion. 
Table 9.2

Given:
• The reactor system being designed is a single CSTR producing a polymer from a monomer in a liquid reactor w
• Polymer density = 56.2 lbs/ft³.
• The slurry concentration in the reactor and reactor outlet is 50 wt.%.
• The inner loop (
• The reactor production rate is 50,000 lbs/h of polymer.
• The heat of reaction is 1000 BTU/lb of polymer produced.
• T

Problem Statement: Determine the length of the reactor and required pump horsepower as a function of temperature. Thi

Table 9.3

| Reactor Temperature (°F) | Loop Length (ft) | Residence Time (h) | Catalyst Eff (lbs/lb) | Pump Horsepower |
|---|---|---|---|---|
| 140 | 4000 | 4.6 | 76,700 | 2,070 |
| 145 | 2700 | 3 | 66,500 | 1,360 |
| 150 | 2000 | 2.2 | 62,800 | 1,000 |
| 155 | 1590 | 1.75 | 61,900 | 800 |
| 160 | 1330 | 1.45 | 62,800 | 650 |
| 165 | 1140 | 1.22 | 64,700 | 550 |
| 170 | 1000 | 1.05 | 67,600 | 475 |

Figure 9.4 Reactor optimization.

As indicated in the problem statement, this table can be used to determine the CAPEX and OPEX for each reactor temperature and an optimization of the two can be performed to select the optimum operating temperature. As these calculations are based on a single laboratory temperature and first principles of reactor design, the estimated values will need to be confirmed during additional development. For example, if the optimum reactor temperature appears to be 170 °F, the pilot plant or bench-scale reactor should be operated at this temperature to both confirm the reaction kinetics and determine if there are any problems associated with operating at this temperature.

10 Emerging Technology Contingency (ETC)

Ross Wunderlich
Wunderlich Consulting Company, Rapid City, SD, United States

Abstract

Contingency is a method for managing risk. When developing cost estimates for projects, there are a lot of uncertainties and risks involved. The Association for the Advancement of Cost Engineering International has defined contingency. Typically contingency is estimated using statistical analysis or judgment based on past asset or project experience.
Keywords considerations for amount of CAPEX contingency estimates equipment incremental statistical analysis technology

Contingency is a method for managing risk. When developing cost estimates for projects, there are a lot of uncertainties and risks involved. The Association for the Advancement of Cost Engineering International (AACE International) defines contingency in its Recommended Practice 10S-90, Cost Engineering Terminology, as:

Contingency
1. An amount added to an estimate to allow for items, conditions, or events for which the state, occurrence, or effect is uncertain and that experience shows will likely result, in aggregate, in additional costs. Typically estimated using statistical analysis or judgment based on past asset or project experience. Contingency usually excludes:
a. Major scope changes such as changes in end product specification, capacities, building sizes, and location of the asset or project;
b. Extraordinary events such as major strikes and natural disasters;
c. Management reserves; and
d. Escalation and currency effects.
Some of the items, conditions, or events for which the state, occurrence, and/or effect is uncertain include, but are not limited to, planning and estimating errors and omissions, minor price fluctuations (other than general escalation), design developments and changes within the scope, and variations in market and environmental conditions. Contingency is generally included in most estimates, and is expected to be expended (see also: management reserve).
2. In earned value management (based upon the ANSI EIA 748 Standard), an amount held outside the performance measurement baseline for owner level cost reserve for the management of project uncertainties is referred to as contingency (October 2013).

For projects, estimates are prepared based on a known scope of work. There are risks associated with executing that project that need to be managed. For most “normal” process industry projects, the process design technology is known to work.
The risks are lower as the process has been proven not only in the pilot plant, but also in the already constructed scaled up manufacturing facilities. When developing new technologies, new processes, or new ways of executing a task, the risks are higher. The new process or technology has not been proven, so there are added risks involved. Consequently, consideration should be made for addressing these added risks when trying to estimate the costs associated with this new innovative technology [1,2]. Developing new technology requires overcoming many design challenges. It takes time to study and to determine solutions to any issues. Businesses will want to know as early as possible whether or not it is economically feasible to pursue the research as well as ultimately build the facility to process feeds into a product. Specialized equipment may be needed, some of which may or may not have ever been used in a specific application or process step. Will it work? Have ALL of the equipment/facilities necessary for the process been identified? No one will know until these studies/experiments are completed. The management needs to make decisions at several points in time during the development of a process/project. So, cost estimates will be done using rudimentary tools early on to more sophisticated methodologies as more and more design information becomes available. As the AACE International definition of contingency implies, a certain amount of contingency is applied to these cost estimates to cover the incremental cost of these uncertain risks for minor changes to the known scope of work. Owners and contractors have developed in-house contingency guidelines for their estimates that vary depending on the definition involved as well as the estimating tools used to do the estimate. 
Statistical analysis is performed using a myriad of project data, and estimate accuracy studies are completed to come up with these contingency guidelines to improve the accuracy of estimates as the projects progress. For those companies that develop innovative technologies in the process industry, a similar exercise should be undertaken to determine an incremental Emerging Technology Contingency (ETC) to cover the added uncertainties and risks. Directionally, Fig. 10.1 shows that more ETC should be applied early in the development (Pre-Class 5 Estimating as per the AACE Estimate Classification System [2]). One may need to double the cost of the process unit, as the process engineers know that they have not identified all of the equipment/facilities so early in the cycle. The work is just being screened initially and very little detailed work has been completed. As time goes on, more and more study is done and thus more is known. Uncertainties get identified and solutions are implemented to mitigate them. Therefore less ETC is necessary later on in the development of the new technology. ETC is decreased over time to much lower levels once the project estimating has progressed to the appropriation funding stage (AACE International Class 3) (Fig. 10.1).

Figure 10.1 Emerging technology contingency versus estimate classification

The questions that the process or technology developer needs to be asking as the project is developing are:
• What impact is every processing step and every piece of equipment going to have?
• How will a piece of equipment be supported and what elevation is required?
• What utilities are required for the piece of equipment?
• How will the process be controlled (instrumentation)?
• How much space will the equipment take up on the plot?
• Will the equipment use more power or need more cooling?
• How will the inflows/outflows of the process impact the supporting infrastructure?
• And many others

Some of these impacts are incremental to the overall cost. Fig. 10.1 provides directional indications of the “extra” contingency (ETC) that is necessary for the particular process or technology being developed. Consideration should also be given to the impact on support facilities that feed or provide utilities to the process unit. Feed storage facilities, utilities supply facilities, and other plot related facilities may be incrementally impacted by major changes/risks associated with the main process development. That impact is very rarely understood or defined in the early stages. In fact, most cost estimating methodologies will simply utilize a factoring approach to determine the cost of the process unit as well as the supporting infrastructure in the very early screening of a project. But, as the impact on the supporting infrastructure is likely incremental rather than directly proportional, a reduced amount of ETC can possibly be used for that portion of the estimate scope.

The Construction Industry Institute (CII) has undertaken numerous studies to look at managing risk on projects. It developed the Project Definition Rating Index (PDRI) for several different types of projects. The first of these was for Industrial Projects; the resulting tool helps identify risks so that decisions can be made on how to manage those risks. Subsequently, CII has developed similar tools for Buildings as well as Infrastructure projects. A PDRI assessment exercise should be undertaken if ETC company guidelines have not already been developed. For a business to continue to fund expensive research and development, more realistic assessments of the costs involved are needed to make wise decisions.
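The directional trend described above, with ETC applied as an increment on top of a normal contingency and shrinking as the estimate matures, can be sketched as follows. The only value taken from the text is the possibility of doubling the process unit cost at the pre-Class 5 stage (an ETC of 100%). Every other percentage, the stage labels used as dictionary keys, and the function name are hypothetical placeholders; real values would come from a company's own statistical analysis of past projects.

```python
# Hypothetical ETC fractions by estimate stage. Only the pre-Class 5 value
# (doubling the cost) reflects the text; the rest are placeholders.
ETC_BY_STAGE = {
    "pre-class 5": 1.00,
    "class 5": 0.60,
    "class 4": 0.30,
    "class 3": 0.10,
}


def total_with_contingency(new_tech_cost, stage, normal_contingency=0.15):
    """Cost of the new-technology scope plus normal contingency plus ETC.

    Both contingencies are taken as fractions of the same new-technology
    cost, so ETC is an increment on top of the normal contingency.
    The 15% normal contingency default is a hypothetical placeholder.
    """
    etc = ETC_BY_STAGE[stage] * new_tech_cost
    normal = normal_contingency * new_tech_cost
    return new_tech_cost + normal + etc


if __name__ == "__main__":
    for stage in ETC_BY_STAGE:
        total = total_with_contingency(50e6, stage)
        print(f"{stage}: ${total / 1e6:.1f} million")
```

Keeping the two contingencies as separate terms makes it easy to see how much of the allowance is tied to the unproven technology rather than to ordinary estimating uncertainty.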
Application A typical cost estimate for a process unit will include the following: Cost Category New Technology Process Equipment A Bulk Materials B Construction Labor and Overheads C Front End Engineering D Detailed Engineering and Procurement E Profit/Fees F Other Miscellaneous Costs G Owner's Costs H Escalation I X Y Emerging Technology Contingency ETC% × X Normal General Contingency NC% × X Total of Above Total ETC costs should be calculated as the sum of the above costs for the new technology portion of the overall estimate × the recommended ETC% developed from statistical analysis of several projects. Note, many cost estimates will include a “normal” general contingency level given: • Known scope • Level or quality of design • Accuracy of the estimating tools used ETC is incremental to that normal contingency and therefore should be applied to an estimate including that “normal” general level of contingency. Some managers will look upon that as being a double dipping of risk management funds, but experience has shown that there is value in breaking down contingency into two categories for emerging technology estimates. Consistency and breaking out the known scope versus the unknown scope are the keys. The funds derived from this calculation provide an allowance in the early stages of an emerging technology development program to cover the unknowns and significant discoveries that will ultimately change the cost of a commercial facility. Contributors/Reviewers • Allen C. Hamilton PMP CCE, Project Management Associates LLC • Keith Whitaker—Assist. Professor/Program Coordinator, Construction Engineering and Management, Department of Civil & Environmental Engineering, South Dakota School of Mines & Technology References [1] AACE International Recommended Practice 10S-90 Cost Engineering Terminology Rev December 28, 2016. 
[2] AACE International Recommended Practice 18R-97 Cost Estimate Classification System – As Applied in Engineering, Procurement, and Construction for the Process Industries Rev March 1, 2016 Reprinted with the permission of AACE International, http://web.aacei.org. 11 Other Uses of Study Designs Abstract The previous chapter described the use of study designs to evaluate new projects. That chapter discussed the development of CAPEX and OPEX. The chapter also made reference to the fact that there are other uses of the study designs. Some of these uses are as follows: Identifying high cost parts of the CAPEX and OPEX. Identifying areas where unusual equipment needs to be used or developed. Identifying areas for future development work including areas where additional basic data is required. Identifying the size-limiting equipment. Identifying areas where assistance of consultants might be required. Keywords CAPEX critical areas of design heat transfer coefficient OPEX parallel pieces of equipment special equipment The previous chapter described the use of study designs to evaluate new projects. That chapter discussed the development of CAPEX and OPEX. The chapter also made reference to the fact that there are other uses of the study designs. Some of these uses are as follows: • Identifying high cost parts of the CAPEX and OPEX. • Identifying areas where unusual equipment needs to be used or developed. • Identifying areas for future development work including areas where additional basic data is required. • Identifying the size-limiting equipment. • Identifying areas where assistance of consultants might be required. These areas are described in the next few paragraphs. Identifying High Cost Parts of CAPEX and OPEX Very often after completion of a study design, the cost estimate will reveal areas of the design that appear to be very expensive. 
This high cost might be related to equipment choice, selected materials of construction, overly conservative design, and/or excessive contingency in the cost estimate. Often during the study design, the designer will select a design that she/he is familiar with to expedite the completion of the design. This selection may not be the optimum for the specific process. An example is the design of a process that includes an exothermic reactor requiring a large amount of heat transfer to a coolant. A designer who has experience with reactors that consist of a tube bundle in a stirred vessel might select this type of reactor for the process without giving consideration to cheaper reactors such as double pipe loop reactors. The logic might be that the heat transfer coefficient for the bundle in a vessel is well known from commercial experience and that coefficients in double pipe loop reactors are not known. However, these coefficients could have been estimated from first principles of heat transfer. This decision will likely have resulted in a high cost reactor system. Another area where the study design might be used to identify high costs is flare releases. The design of flare systems often assumes that all safety valves release at the same time in a contingency such as a power failure. This can cause a large expenditure for the flare system. The size of the flare release might be mitigated by adding low cost facilities, such as an intermediate pressure blowdown drum, or by other means of obtaining a staged flare release. As indicated earlier, the materials of construction can often cause a significant increase in cost. This cost might be reduced by utilizing lower cost materials. The use of these materials might be possible if the catalyst is changed or if a low-cost neutralization step is provided in the process.
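The remark above that loop reactor coefficients could be estimated from first principles can be illustrated with a rough sketch. It assumes the Dittus–Boelter correlation for turbulent flow in a pipe, which is one commonly used textbook correlation and not necessarily the method the author had in mind. All fluid properties and dimensions are hypothetical, and the result is only the inside film coefficient, not the overall coefficient.

```python
def dittus_boelter_film_coefficient(density, velocity, diameter,
                                    viscosity, heat_capacity, conductivity,
                                    fluid_is_cooled=True):
    """Inside film coefficient (W/m2-K) from Nu = 0.023 Re^0.8 Pr^n.

    SI units throughout. n = 0.3 when the fluid is cooled, 0.4 when heated.
    The correlation applies to fully turbulent flow (Re above about 1e4).
    """
    reynolds = density * velocity * diameter / viscosity
    prandtl = heat_capacity * viscosity / conductivity
    if reynolds < 1.0e4:
        raise ValueError("Flow is not fully turbulent; correlation not applicable")
    n = 0.3 if fluid_is_cooled else 0.4
    nusselt = 0.023 * reynolds ** 0.8 * prandtl ** n
    return nusselt * conductivity / diameter, reynolds, prandtl


# Hypothetical loop reactor contents: a light hydrocarbon slurry treated as a liquid.
h_inside, reynolds, prandtl = dittus_boelter_film_coefficient(
    density=600.0,         # kg/m3 (assumed)
    velocity=5.0,          # m/s (assumed)
    diameter=0.5,          # m (assumed)
    viscosity=3.0e-4,      # Pa-s (assumed)
    heat_capacity=2500.0,  # J/kg-K (assumed)
    conductivity=0.1,      # W/m-K (assumed)
)
print(f"Re = {reynolds:.3g}, Pr = {prandtl:.3g}, h = {h_inside:.0f} W/m2-K")
```

An estimate of this kind is enough for a study design to compare reactor types on cost; the coolant-side coefficient, wall resistance, and fouling would still be needed for an overall coefficient.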
There may also be other parts of the process where the operating cost (OPEX) is likely to be high. These areas, as defined during the study design, should be explored and lower cost alternatives considered. Examples include reducing catalyst cost by providing more residence time, by using higher reactor temperatures, or by using higher concentrations of reactants. In some study designs, the utility costs may seem very high. Steam or fuel costs can be reduced by heat recovery. In a thermal process, it is often possible to recover 80% or more of the fuel cost by heat recovery. Another possibility for reducing OPEX is generating steam at a high pressure, expanding it across a turbine, and using the lower pressure steam in the proposed heating application.

Identifying Areas Where Unusual or Special Equipment Is Required

In the development of a study design for a new process, it is possible that during the initial effort the designer can only specify a part of the process with a “black box” duty specification. A duty specification is one that describes what a part of the process should do. Obviously, the cost of such a “black box” cannot be estimated. The process designer will have to research the literature and the internet to locate a supplier of equipment that will adequately satisfy this duty specification. It is likely that there will be multiple sources of this type of equipment and that each supplier will have several models with varying costs. After reviewing the available equipment, the designer may be able to make slight modifications to the heat and material balances that change the model to be chosen and reduce its cost. Examples of this unusual or specialty equipment are the different types of mixing, drying, blending, and solids handling equipment available.
Identifying Areas for Future Development Work

During the study design, areas will appear where additional research and development work is needed. Some of the questions that might arise are:

• Is additional purification of the feed streams necessary? As indicated earlier, the initial development work is often done with reagent grade chemicals or other sources of low volume, high quality chemicals. These reagent grade streams will not likely be available in the commercial operation. This question needs to be highlighted in the study design so that it will be addressed by either pilot plant or bench scale efforts.
• Are sufficient vapor–liquid equilibrium (VLE) data available for fractionation of key components? It should be recognized that these key components may be reactants, by-products, or impurities. It is likely that there are impurities and/or by-products in the feed streams or reactant recycle streams that must be separated from the primary reactants. If there is insufficient VLE information for the separation of these materials from the primary reactants, additional data will be required before the detailed process design is completed. The optimum approach is to use an outside laboratory that has the equipment and the expertise to develop this information.
• What by-products are produced in the reactions? As indicated in Chapter 6, this is an area that will need to receive attention during the study design. It is likely that questions will arise regarding the presence and amount of these by-products.
• How can the by-products be separated from the reactants recycle stream? This will be a strong function of the physical state of the by-products (solid, liquid, or vapor). If the by-products are liquid or vapor, as indicated earlier, it is likely that an outside laboratory may be required to develop VLE data for the separation.
If the by-product is a solid, the separation technique will depend on the amount of by-product produced, the solid particle size, other properties of the solid, and the properties of the liquid.
• How can these by-products and unreacted reactants be removed from the product? This consideration will depend to a very large extent on the particular technology being developed in the study design. If the product, reactants, and by-products are liquid, then separation by distillation will likely be the choice and the question of VLE becomes important. If the product is a solid, then removing the reactants and by-products by a solids drying operation will likely become the key. The vapor–solid equilibrium information given in Chapter 2 will likely have been used in the study design, but more accurate information may be required. In addition, this separation may require the specialty equipment described earlier in this chapter.
• Are the kinetics used in the study design correct? As described earlier, the study design will likely have used a minimum amount of bench scale data to optimize the design of any reactor that is required for the process. The study design will provide the range of temperatures, concentrations, and residence times that should be explored in additional work.

This is not an all-inclusive list. The validity of these questions depends to a large extent on the type of project and the technology. The purpose of the list is to illustrate the type of questions that will occur during the study design. Prior to the initiation of the detailed process design, the future work areas identified in the study design should be completed or be in the final stages of completion. This is shown in Fig. 9.1 in Chapter 9. While the researcher may question the wisdom of starting a study design at an early point, this list helps to illustrate one of the benefits of an early start.
Besides fast tracking the project, there is great value in starting the study design as soon as possible because it allows these developmental needs to be discovered prior to completing all of the research. In a worst-case scenario, when a study design is delayed until the research is finished, the research team will be reassigned after “completion” of all the research. A study design will then begin. During the study design, areas of uncertainty will be identified. However, there will be no available research team to conduct the additional studies required to eliminate this uncertainty.

Identifying the Size-limiting Equipment

A study design can be used to determine if there is a piece of equipment that will limit the size of the plant being designed. If there is such a limit, then parallel equipment will be required to reach the target plant capacity. Identifying the need for parallel equipment will be particularly critical for processes where multiple phases such as solids and liquids are involved. It is relatively easy to split the flow to parallel pieces of equipment handling streams that are either a single component or multiple components that are mutually soluble. However, if the stream being handled contains solids or two phases (two liquid phases or a liquid and a gas), it may be difficult to maintain the same composition in multiple branches of flow. This may require specialized equipment and careful piping design in order to keep the compositions equal in each branch of the flow. The basis of the study design may be to define the largest size plant as limited by any single piece of equipment. This type of requirement is more likely in refineries or commodity chemical plants that have a high-volume demand. The size-limiting equipment can be based on the supplier's capability, shipping limitations, or plot plan limitations.
While the study design phase may be too early to determine these limits accurately, they can be highlighted for future analysis. This preliminary analysis will provide the business managers with any known area of sizing concerns. Identifying Areas Where There Is a Need for Consultants During the study design, it may become apparent that there will be a need for consultation in a very specific field. Consultants specializing in both equipment design and calculation techniques can be valuable during the detailed process design. This need can be highlighted in a report covering the study design. Examples of these experts, who can assist during detailed process design, include consultants with specialized knowledge in mixing equipment and/or those with detailed knowledge of CFD calculations as described in Chapter 1. It may be of value to use these experts even in the study design if there are highly critical areas. An example where CFD might be utilized in a study design is the prediction of the particle size that will be entrained from an agitated boiling reactor when the production rate is increased. Calculations based on superficial velocity do not adequately consider the vapor flow pattern introduced by the agitation. Prior to utilizing any outside source of expertise, the deliverables expected from them should be well documented and a projected cost of these expert services obtained. Summary This chapter briefly identifies other advantages of conducting a study design besides the early stage project evaluation. Since each design is different, all of the identified areas may not be present in each study. In addition, there may be other areas that are not included in this list. As each study design is progressed, the designer should keep in mind this concept and highlight areas for future work. 
12 Scaling Up to Larger Commercial Sizes Abstract Traditional thinking is that scale-up only applies to either the design of a much larger facility (pilot plant or commercial plant) based on bench scale work or design of a commercial plant based on pilot plant work. This concept of scale-up also applies to utilization of a new reactant, catalyst, additive, or other raw material. Scaling up an existing commercial facility to a higher capacity is often not thought of as scale-up because it is only going from a lower capacity to a higher capacity without a basic change in the process or raw materials. However, some of the most creative engineering work is often associated with this type of scale-up. Keywords commercial plant debottlenecking engineering areas equipment increment obtaining economies of larger size by scale-up Traditional thinking is that scale-up only applies to either the design of a much larger facility (pilot plant or commercial plant) based on bench scale work or design of a commercial plant based on pilot plant work. This concept of scale-up also applies to utilization of a new reactant, catalyst, additive, or other raw material. Scaling up an existing commercial facility to a higher capacity is often not thought of as scale-up because it is only going from a lower capacity to a higher capacity without a basic change in the process or raw materials. However, some of the most creative engineering work is often associated with this type of scale-up. There are actually two kinds of scale-up of commercial facilities. The first of these is expanding an existing commercial facility by replacing or modifying critical production-limiting equipment or eliminating this limit by process changes. These are referred to as “Debottlenecking”. The genesis of this name is that plant limitations are often referred to as “bottlenecks” which come from the shape of the neck of many bottles. 
As the neck of the bottle restricts the flow of a liquid, so the “bottleneck” of a plant limits production. Thus “debottlenecking” refers to removing this “bottleneck”. This is generally very economically attractive when it can be done in an effective and inexpensive fashion, because the last increment of production is produced at a low cost (marginal cost) and sold for the same price as the average production. In addition to this “debottlenecking” of an existing plant, scaling up to a larger capacity can also involve a new plant that is designed for a higher capacity. When considering the “debottlenecking” scenario, there are three approaches that can, in theory, be used:
• Just make everything bigger by the scale-up factor.
• Increase the production rate of the plant until a limit is discovered and then use that as the new capacity.
• Make a detailed study of the possible limitations of the key pieces of equipment and develop low cost ways to eliminate these bottlenecks.

The first of these approaches is not practical for an existing plant since it implies that all the pumps, heat exchangers, and piping would be replaced whether or not they were limits. The second of these approaches, increasing the production rate until a limit is discovered, might be all that is possible if the limit is a major piece of equipment such as a large furnace that has no further excess capacity. The third approach is the only means of obtaining a low cost “debottleneck” that might involve equipment replacement. It is really a combination of defining what the “bottlenecks” are and creating ways to eliminate them. For example, if a furnace limit is associated with the amount of excess air available for the combustion process, this might be overcome by the installation of air blowers or larger air blowers to increase the amount of air available.
However, before recommending this, the designer will need to confirm that there are no other limitations of the furnace and no other critical equipment limits in the plant. The approach of considering only the critical pieces of equipment will generally be sufficient because items such as piping and control valves usually have excess capacity or can be easily replaced. However, in plants containing piping that conveys two phases (solid–gas, solid–liquid, or liquid–gas), a careful check of the pressure drop and of the potential for settling or changes in flow regime will be necessary as a part of the “debottlenecking” effort. When considering the critical pieces of equipment, it will likely be necessary to conduct data analysis and test runs. Many of the critical pieces of equipment will be well known to operators and technical personnel associated with the particular process unit. However, those closest to the process might think in terms of system limitations rather than specific limitations of the components of the system. They might also erroneously conclude that a system limit is caused by a specific component. For example, it may seem that there is a limit in a liquid handling system because the control valve is operating 90+% open most of the time. Those closest to the process might conclude that replacement of the control valve will increase the capacity of this liquid handling system. In fact, the limitation may be a pump limit that is causing a low discharge pressure and hence a 90+% opening of the control valve. This is a true system limit, and it indicates that this area needs to receive consideration in order to “debottleneck” the process. However, the observation of a system limit does not determine whether the control valve, the pump, or the piping should be replaced.
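The control valve example can be made concrete with a simple hydraulic balance. The sketch below is only an illustration under stated assumptions: a quadratic pump curve, pipe friction proportional to flow squared, and the standard liquid sizing relation Q = Cv × sqrt(ΔP/SG) with Q in US gpm and ΔP in psi. The function name and every number are hypothetical.

```python
import math


def max_flow_gpm(net_shutoff_dp_psi, pump_droop, pipe_k, valve_cv, sg=1.0):
    """Flow (US gpm) with the control valve wide open.

    Assumed component models, all with Q in gpm and pressure in psi:
      pump:  dP_available = net_shutoff_dp_psi - pump_droop * Q**2
             (differential at zero flow, net of static and destination pressure)
      pipe:  dP_pipe  = pipe_k * Q**2
      valve: dP_valve = sg * (Q / valve_cv)**2   (liquid Cv relation)
    Setting dP_available = dP_pipe + dP_valve and solving for Q gives:
    """
    return math.sqrt(net_shutoff_dp_psi / (pump_droop + pipe_k + sg / valve_cv ** 2))


# Hypothetical existing system
base = max_flow_gpm(net_shutoff_dp_psi=100.0, pump_droop=0.002, pipe_k=0.001, valve_cv=100.0)

# Option 1: replace the control valve with one of twice the Cv
bigger_valve = max_flow_gpm(100.0, 0.002, 0.001, valve_cv=200.0)

# Option 2: keep the valve, install a pump with a higher shutoff differential
bigger_pump = max_flow_gpm(130.0, 0.002, 0.001, valve_cv=100.0)

print(f"existing:     {base:6.1f} gpm")
print(f"bigger valve: {bigger_valve:6.1f} gpm ({bigger_valve / base - 1:+.1%})")
print(f"bigger pump:  {bigger_pump:6.1f} gpm ({bigger_pump / base - 1:+.1%})")
```

With these assumed numbers the wide-open valve takes only a few psi of the available differential, so doubling its Cv adds very little flow while the larger pump adds considerably more. A real evaluation would use the actual pump curve, piping isometrics, and the manufacturer's valve characteristic.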
In this example, the limiting equipment will be determined by calculations using the pump curve, pipe size, flow rate, and control valve characteristics defined by the Cv and type of valve. A control valve's Cv, along with the type of valve, determines fluid flow as a function of pressure drop and valve opening expressed as a percent. In another example, it might appear that a heat exchanger is limiting the process production to slightly below the design rate. In this case, an exothermic reactor is cooled with a pump-around circuit consisting of a circulating pump and a heat exchanger. Both of these pieces of equipment should be considered as potential limits to heat removal capability. Those closest to the process might maintain that the reactor cannot be debottlenecked because there is no room to install a larger exchanger. However, a careful evaluation of the system might reveal that the exchanger is dirty, or that a higher capacity pump or additional cooling water flow would allow more heat removal. This process of checking the critical pieces of equipment should continue until most of the major equipment has been considered. At this point, a table similar to Table 12.1 can be prepared to aid in making a judgment of what capacity should be used for the study design associated with scaling up an existing plant.

Table 12.1 Expansion Increment (cost of expansion, $M, by expansion increment)

| Equipment | 10% | 20% | 30% |
|---|---|---|---|
| Reactor, $M | 1 | 5 | |
| Feed Purification, $M | 0 | 2 | |
| Recycle, $M | 0 | 3 | |
| Solids Purification, $M | 1 | 3 | |
| Solids Packing/Shipping, $M | 2 | 4 | |
| Flare and Offsites, $M | 0 | 3 | |
| Total, $M | 4 | 20 | |
| Cost/Ton, $/T | 300 | 800 | |

In the example shown in Table 12.1, the existing capacity is 120 KT/Year (120,000 Tons/Year). A new plant would cost $120 M. This “bookend” provides a maximum “debottlenecking” cost of about $1000/Ton.
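The comparison in Table 12.1 can be sketched as a short calculation. The figures are taken from the table and the surrounding text; the function and variable names are illustrative only. The unrounded results (about $333 and $833 per ton) suggest that the table's 300 and 800 $/T entries are rounded.

```python
EXISTING_CAPACITY_TPY = 120_000   # tons/year, from the text
NEW_PLANT_COST_USD = 120e6        # $120 M, from the text

# Total expansion cost from Table 12.1 for the two increments with listed values
EXPANSION_COST_USD = {0.10: 4e6, 0.20: 20e6}


def cost_per_incremental_ton(increment, cost_usd, base_capacity=EXISTING_CAPACITY_TPY):
    """Investment per ton/year of added capacity for a given expansion increment."""
    return cost_usd / (increment * base_capacity)


# "Bookend": investment per ton/year of capacity for a completely new plant
bookend = NEW_PLANT_COST_USD / EXISTING_CAPACITY_TPY

results = {inc: cost_per_incremental_ton(inc, cost) for inc, cost in EXPANSION_COST_USD.items()}

print(f"new plant bookend: ${bookend:,.0f}/ton")
for inc, unit_cost in results.items():
    verdict = "below" if unit_cost < bookend else "above"
    print(f"{inc:.0%} expansion: ${unit_cost:,.0f}/ton ({verdict} the bookend)")
```

Any increment whose cost per incremental ton approaches or exceeds the bookend is a candidate for a new plant rather than a debottleneck.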
This maximum “debottlenecking” cost was determined by dividing the cost of a new plant ($120 M) by the production rate from a new plant (120 KT/Year). If the “debottlenecking” cost exceeds $1000/Ton, it is likely more economical to build a new plant rather than to “debottleneck” the existing plant. In the early stages of a study design, this kind of cost information is not available. However, the process designer can generally make very rough estimates which can then be used to determine the likely increment of capacity that can be provided. In the example shown in Table 12.1, a 30% expansion increment is probably greater than what can be economically justified based on the incremental investment cost for the expansion compared to a completely new facility. On the basis of this table, the economic expansion increment is about 20% or less. The market assessment should provide additional details which will help determine the exact increment of production that can be justified. The expansion of 20% will provide an incremental capacity of 24,000 Tons/Year and a total capacity of 144,000 Tons/Year. Using this as a basis, complete material and heat balances should be developed. These material and heat balances will serve as the basis for the process design calculations that will be done as a part of the study design. The other case of scaling up a commercial plant to a larger size is the design of a new plant using the same technology as used in the existing plant, but with a significantly higher capacity. The plant will often be planned for another location, but the design is based on the current process and operating plant. In this case, the capacity of the plant is often determined by market considerations as well as physical limitations (plot plan, transportation, and equipment manufacturing limitations). If the plant size is to be set by physical limitations, the limitation will almost always be a large or specialized piece of equipment. 
The specialized equipment may be limited by the desire to avoid sizes that have not been commercially demonstrated. In addition to this limit, the plant size may be set by shipping or manufacturing limitations of large equipment. Shipping and manufacturing limits rarely occur except for equipment in refineries, oil sands, and/or commodity chemical plants. If the desired plant size is larger than these limits, parallel lines will be required. This is usually not desirable. Once the size of the scaled-up plant is set, the overall material and heat balances can be determined. It is mandatory that these material and heat balances be developed, both for doing the process design and for assistance in preparing the operating manual and operator training. This is especially true if the new plant is at another location. In addition to these items (operating manual and operator training), which are generally handled by the operating company, the preparation of material and heat balances is valuable for the process designer(s). The process design is often done by those who are not intimately familiar with the process. The preparation of the material and heat balances will be a learning experience for them. In addition, the preparation of these balances will provide a basis for sizing equipment instead of the alternative of just increasing the equipment size by a scale-up factor. Two of the three possible approaches discussed earlier are still applicable in doing the process design. Since the new plant has not been built, the approach of increasing the production rate until a limit is discovered is not a feasible approach. The approach of just making everything bigger by a scale-up factor will almost invariably lead to oversizing equipment and piping components.
In addition, the existing commercial plant will often have specialized equipment that is performing either better or worse than it was designed to perform. If this equipment is scaled up without any consideration of actual performance, the new equipment will be either oversized or undersized. Thus, the only approach that seems reasonable uses the following steps:
• Make a detailed study of the key pieces of specialized equipment in the existing plant to determine C1. C1 was described in Chapter 2. Once C1 is determined, it can be utilized in the next steps.
• Prepare detailed material and heat balances for the new plant based on the capacity determined by the market study and/or physical limitations.
• Using the material and heat balances, classical chemical engineering calculations, and C1 for the specialized equipment, size the equipment and piping for the new facilities.

This approach, while it may be more labor intensive than just increasing the size of all the equipment and piping by a scale-up factor, will result in a significantly better process design and hence likely a better operating plant. An example of using C1 would be a critical specialized heat exchanger. In this case, C1 would represent the heat transfer coefficient as determined from the existing plant. As this plant is scaled up to a new plant or a debottlenecked plant, the heat transfer coefficient can be expected to be constant as long as the relative dimensions (H/D, baffle spacing, tube diameter, etc.) are the same or similar. While the scale-up of specialized heat exchangers based on the existing plant might seem to be simply a matter of specifying to the supplier the heat transfer coefficient to be used, other dimensions, as indicated, may be important. For example, a change in the H/D ratio for a vertical condenser with the condensate on the shell side might result in a lower heat transfer coefficient.
This is due to the thicker condensate layer that will accumulate on the outside of the tubes as the condensate runs down the tube. In either of these cases (debottlenecking an existing plant or designing a new plant based on an existing plant), any new equipment will require careful dimensional analysis. Larger reactors, if required, will need dimensions and mixing patterns similar to those of the existing reactor. As indicated in Chapter 7, the design of the agitator will likely require the assistance of the supplier. The need for larger reactors can be analyzed using the same approach based on C1 for various parts of the reactor. If the reactor is jacketed, the heat transfer considerations can be based on the heat transfer coefficient (C1) determined from the existing reactors. In addition, the need for additional residence time can be determined by the kinetic relationship developed for the existing reactors. This relationship for propylene polymerization was developed in Chapter 2 and is repeated below:

$$CE = K \times M \times T^{0.75} \tag{2.3}$$

Where:
CE = The catalyst efficiency, lbs of polymer/lb catalyst.
K = The simplified polymerization rate constant, ft³/lbs-h. This is a form of C1 and is determined from test runs in the existing plant or bench scale results as discussed previously.
M = The propylene concentration, lbs/ft³.
T = The residence time, h.

The 0.75 represents the catalyst decay factor. It can be determined by multiple batch polymerizations at variable residence times. The relationship described by Eq. (2.3) can be used to develop the catalyst cost as a function of residence time. This, along with the investment for larger reactors, can be used to determine the justification for adding increased reactor volume.
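The use of Eq. (2.3) to relate catalyst cost to residence time can be sketched as follows. The sketch assumes Eq. (2.3) has the form CE = K × M × T^0.75, which is consistent with the variable definitions and the 0.75 decay factor given above. The values of K, M, the catalyst price, and the base residence time are hypothetical, and the 20% rate increase is chosen only for illustration.

```python
def catalyst_efficiency(k, m, t, decay_exponent=0.75):
    """Assumed form of Eq. (2.3): CE = K * M * T**0.75, lb polymer per lb catalyst."""
    return k * m * t ** decay_exponent


def catalyst_cost_per_lb_polymer(catalyst_price, k, m, t):
    """Catalyst cost ($/lb polymer) = catalyst price ($/lb catalyst) / CE."""
    return catalyst_price / catalyst_efficiency(k, m, t)


K = 500.0        # hypothetical rate constant, would come from plant test runs (C1)
M = 25.0         # lb/ft3, hypothetical propylene concentration
PRICE = 100.0    # $/lb catalyst, hypothetical
BASE_T = 2.0     # h, hypothetical residence time at the current rate

base_cost = catalyst_cost_per_lb_polymer(PRICE, K, M, BASE_T)

# A 20% rate increase through the same reactor volume shortens residence time
debottlenecked_t = BASE_T / 1.2
debottlenecked_cost = catalyst_cost_per_lb_polymer(PRICE, K, M, debottlenecked_t)

cost_ratio = debottlenecked_cost / base_cost
print(f"catalyst cost rises by {cost_ratio - 1:.1%} per lb of polymer")
```

Under these assumptions the increase in catalyst cost depends only on the rate ratio raised to the 0.75 power. That increase, applied to the total production, can then be weighed against the investment in added reactor volume.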
In the case of propylene polymerization, the effect of increased production on catalyst efficiency can be minimized by increasing the size of the reactors or by operating changes such as increased monomer concentration (M) or increased reactor level if the reactor is not a liquid-filled reactor. In the case of a gas phase reactor, the operating changes available to avoid increasing the size of the reactor are pressure, monomer concentration, and fluid bed level. Summary While most people consider scale-up to mean designing a commercial plant based on laboratory experimental equipment (bench scale or pilot plant scale), there is also a need to scale-up from a smaller commercial plant to a larger one. This scale-up can either be a “debottlenecking” or the design of a new commercial facility based on a smaller existing facility. The same principles apply as are used in laboratory scale-up. In addition, the knowledge base about equipment in the existing plant must be well established. While most scale-ups to larger facilities are successful, some fail. The failure is often due to expediency, lack of knowledge of existing bottlenecks, and/or conclusions reached without calculations. In addition, most often the failed project has not been adequately resourced. This will result in failing to use the scale-up principles discussed in this book. This will result in an inadequate scale-up and an economic failure. 13 Defining and Mitigating Risks Abstract The previous chapters discussed the technical and business aspects of scaling up from bench scale, pilot plant scale, and from commercial scale as well as the early stage economic study development. The next chapter deals with typical case studies. These prior and subsequent chapters to this chapter do not touch on how to define and handle risks. Risks generally fall into two broad categories. There are technical risks associated with the scale-up itself. Then there is what might be called organization/people risk. 
Successfully defining and mitigating both of these types of risk requires good, experienced management and technical personnel, adequate resources, a reasonable schedule, and a cooperative team spirit.

Keywords: bench scale; commercial scale; cooperative team spirit and culture; manpower resources; mitigation of risk; pilot plant scale

The previous chapters (Chapters 1–12) discussed the technical and business aspects of scaling up from bench scale, pilot plant scale, and commercial scale, as well as the early stage economic study development. The next chapter (Chapter 14) deals with typical case studies. Neither those chapters nor Chapter 14 addresses how to define and handle risks. Risks generally fall into two broad categories. There are technical risks associated with the scale-up itself. Then there is what might be called organization/people risk. Successfully defining and mitigating both of these types of risk requires good, experienced management and technical personnel, adequate resources, a reasonable schedule, and a cooperative team spirit. This chapter deals with how risks can be defined and mitigated. As such, it deals with developing techniques to define/mitigate risks, defining required resources, developing reasonable schedules, and developing organization/management concepts to foster a cooperative team spirit and culture. The concepts described here will be of value both for research developments that result in the construction of large commercial plants and for research developments that improve process economics by utilization of new catalysts, chemicals, or raw materials. In any of these developments, regardless of size or type, there will almost always be a single individual (chemist or chemical engineer) who is primarily responsible for getting the project moving. I have used the term “project initiator” to mean the individual serving in this role in an operating company.
This same individual may be responsible for keeping the project moving until completion. However, for large projects that require significant design work and expenditure of funds, the project originator may be replaced by a project engineer at some point in the life of the project. The discipline of the project initiator depends on the type of project involved. For new or modified facilities, a process designer will be the one most knowledgeable and should serve as the project initiator. For a new raw material (catalyst or chemical), the initiator will be the individual most involved with the project. This will normally be the person in the operating company or operating division who has dealt with the bench scale researchers or with the personnel from the company supplying the new catalyst, raw material, or chemical.

Perhaps a story from Texas folklore illustrates the principle of a single individual having responsibility for the project. The Texas Rangers are the oldest law enforcement group with statewide jurisdiction on the North American continent. In the late 1800s, according to Texas folklore, there was a small Texas town where a large riot was imminent. The town had very limited law enforcement resources at the time, so it asked the governor of Texas to send a troop of Texas Rangers to quell the riot. When the train arrived, carrying what the townsfolk thought would be a troop of Rangers, only one person emerged. When the townsfolk expressed shock, the Ranger’s reply was “One Riot, One Ranger.” While this folklore is far removed from the commercialization of research, it illustrates the concept of single-person responsibility.

Techniques for Definition and Mitigation of Risk

Those in industry are familiar with the vast number of techniques that are used to ensure that any project, whether it be a new process or a new raw material, has been adequately reviewed.
These reviews consist of safety reviews (such as hazard and operability studies (HAZOPs) and layers of protection analyses (LOPAs)), design reviews, maintenance reviews, startup reviews, potential problem reviews, and business reviews, to name a few. Many of these reviews were highlighted in Chapter 8 on sustainability. The purpose of this discussion is to give an overall summary of the purpose of the reviews and a general approach for conducting them. This chapter does not provide detailed discussions of how these reviews should be conducted.

Safety reviews such as HAZOP or LOPA are conducted after a careful analysis of each of the detailed process flow sheets, which are often referred to as P&I diagrams. The analysis is made by a team that generally consists of the project initiator, a senior technical person who is familiar with plant operations (but not operations of the plant being considered), an operator familiar with the process being considered or a similar one, a mechanic or mechanical supervisor who is familiar with the type of equipment involved, a process control specialist who can provide the details of the operating control system, and a team leader. The team will also need either an additional person or one of the team members (not the leader) to serve as secretary. The HAZOP or LOPA analysis consists of reviewing questions such as: What happens if an exchanger leaks? What happens if a control valve fails either open or closed? What happens if the temperature of a reaction can no longer be controlled? These and other questions are usually contained in a carefully prepared manual that describes how to conduct such reviews. A typical safety review for a new or modified process will last most of a week, with additional time to prepare a final report.

Design reviews and maintenance reviews are often combined. The review team will normally have a makeup similar to that described for safety reviews.
The team may be somewhat larger, as more of those who have been and will be involved are included. While safety items may be discussed, this team will have the objective of reviewing the P&I drawings with the goal of confirming the design methodology, the process flow, and mechanical reliability. For example, a distillation column with a new kind of tray might be the subject of some discussion. The review team might want to know the answers to such questions as: What tray efficiency was used for the design? What commercial experience is available for these trays? How do they perform if fouling material is present in the feed? The focus will tend to be on major pieces of equipment, sparing of critical equipment, and control strategies.

Startup analysis is often included in this review. A startup analysis will include items such as: How is water to be removed from the process? Is the process so heat integrated that it cannot be started up? Are there any unsafe by-products that might be made under startup conditions? A similar shutdown analysis should also be considered. It might include items such as: How are solids cleared from the equipment? How are dangerous chemicals cleared from vessels, pumps, and compressors before they are opened? Is there adequate off-site storage available to pump out the unit?

A similar analysis should be made for introducing a new catalyst or chemical into a process. This analysis should include considerations for initiating flow of the new material into the process. The change should be made either gradually, which will require multiple displacements of any CSTR before the full effect is seen, or by a complete shutdown and restart with the new material. While emergency situations (power failure, cooling water failure, …) are really safety review items, they may also come up in the design reviews.
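The remark that a gradual changeover to a new catalyst or chemical needs multiple displacements of a CSTR can be made concrete with a short calculation. The sketch below assumes an ideal, perfectly mixed CSTR at constant volume and flow, for which the fraction of new material in the vessel after a step change in feed is 1 − exp(−n), where n is the number of displacements (elapsed time divided by residence time). The function names are illustrative only, and a real reactor may respond differently from this idealized model.

```python
import math


def fraction_new_material(displacements: float) -> float:
    """Fraction of vessel contents that is new feed after a step change.

    Assumes an ideal, perfectly mixed CSTR at constant volume and flow.
    `displacements` is elapsed time divided by the residence time.
    """
    if displacements < 0:
        raise ValueError("displacements must be non-negative")
    return 1.0 - math.exp(-displacements)


def displacements_needed(target_fraction: float) -> float:
    """Displacements required to reach a target fraction of new material."""
    if not 0.0 <= target_fraction < 1.0:
        raise ValueError("target_fraction must be in [0, 1)")
    return -math.log(1.0 - target_fraction)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(f"{n} displacements: {fraction_new_material(n):.1%} new material")
    print(f"95% changeover needs {displacements_needed(0.95):.2f} displacements")
```

Under these assumptions, one displacement replaces only about 63% of the vessel contents, and roughly three displacements are needed to reach 95%. This is why a plant test of a new material in a CSTR has to run for several residence times before its full effect can be judged.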
When reviewing the design for startup, shutdown, and emergencies, due consideration should be given to the impact of procedures on other units. For example, a procedure that calls for pumping out receivers to other units or sending a stream to the flare should be carefully reviewed to confirm that it will not impact other process units that are connected to the one under consideration.

From a technical viewpoint, one of the most valuable reviews is a potential problem analysis. A potential problem analysis calls for reviewing all critical decisions, equipment, or research results using a “what if” scenario. One example might be bench scale work that indicated that the Arrhenius constant was much lower than classical chemical engineering would indicate. If the reaction rate were calculated using a classical Arrhenius constant, it would be anticipated that the reaction rate would double with a 20°F increase in temperature. The Arrhenius constant is determined in the bench scale by several runs at constant residence time and reactant concentration, but with varying reactor temperatures. The amount of conversion is then determined, and the reaction rate constant is calculated for each temperature. If the bench scale Arrhenius constant predicted that the reaction rate would increase with temperature by less than traditional chemical engineering guidelines would predict, this would be highlighted as a potential problem. A typical response for both the classical concept and the actual laboratory data is shown in Fig. 13.1.

Figure 13.1 Reaction rate ratio versus reaction temperature.

The potential problem statement might be “The data developed in the laboratory showed an increase in reaction rate that is less than what would be calculated based on a classical Arrhenius constant.
Since the flare system design was based on laboratory data, this might cause the safety release system to be overloaded if temperature control were lost in the reactor.” Once this potential problem is recognized, the project initiator can then begin to develop a preventative action plan to mitigate this problem. Possible preventative actions might consist of determining if the laboratory results are correct and if they are not correct, redesigning the safety release system. In addition to a complete redesign of the safety release system, it might be possible to include a staging effect to reduce the safety valve release rate or take design action to reduce the release rate going to the flare. When analyzing some potential problems, an action step is also included for the case where the problems do occur in spite of the preventative action step(s). In one of the case studies given in Chapter 14, a partial potential problem analysis has been shown. Business reviews are those considerations that take the best-known data on the process, the economics, and the product market place reception/product pricing to determine if the project will still yield an acceptable return. These reviews are often referred to as “Gates” indicating the presence of a point in time when a gate must be cleared before additional time and funding is provided for the project. Many books and magazine/internet articles have been written showing how various companies implement the Gate system. It is not the purpose of this book to cover this aspect, but to make the reader aware that such a system exists. Chapter 9 describes how the economics of a new project can be determined using only a minimal amount of laboratory data. Developing a variety of visions for projects has become a popular means to determine whether a project will be a good fit for the specific company or not. However, it should be noted that these business reviews cannot be conducted without technical and marketing input. 
Visions with numbers are important. Visions without numbers will likely lead to economic nonsense and economic disasters.

Required Manpower Resources

The importance of qualified personnel has already been mentioned. The next few paragraphs address the question of how many people it takes to produce a successful project. Whenever manning is discussed, the question of the optimum number of people arises. The problem with not having sufficient manpower resources is obvious: regardless of how many hours a person works each week, there is a limit. When this limit is reached, the individual will begin to take calculation shortcuts, fail to communicate adequately, and/or ignore a pressing problem. The result is often an industrial accident or, at best, a loss of production. However, it is equally bad to have too many resources. If the project is “overstaffed,” people will tend to use the free time to complain, scheme, or find other devious and nonproductive ways to “kill time.” The old cliché “An idle mind is the devil’s workshop” is definitely applicable.

Obviously, the required resources are a function of the size and complexity of the project. Fig. 13.2 gives an owner’s manning schedule for a moderate size project ($50–$100 M) with a moderate degree of complexity. The manning schedule begins with laboratory inception and goes through the startup phase. This estimate does not include the Engineering and Construction Company’s manpower for detail engineering, construction, and design assistance during startup.

Figure 13.2 Owner manpower schedule: new facilities.

The utilization of a new raw material or catalyst will obviously require fewer resources than a major project would need. This type of project may not even require any detailed engineering or construction. However, the manpower need, which is not always obvious, should be given due consideration even for a relatively minor change. Fig. 13.3 provides some guidelines for estimating these needs.

Figure 13.3 Owner manpower: existing plant with new raw material.

Obviously, it is difficult to project manpower resources without knowing the details of the project. Even when the details are known, it is often challenging to estimate the manning needs accurately. Factors such as the investment size of the project, the complexity of the project, and the capability of the existing organization will affect the needs.

When considering the investment size of the project, there are two impacts that need to be considered. As a general rule, the greater the investment, the greater the size of the project team and the greater the incentive to get the project done quickly. Some of this incentive is related to the need to begin generating a positive cash flow, and some of it is related to promises from lower management to upper management. Often, the duration of any phase of the project can be reduced by adding personnel. However, this is not always the case, since additional personnel may cause project inefficiencies. The estimates shown in Fig. 13.2 assume that the project is a normal speed project rather than a fast track project where additional personnel would be of value. They also assume that the project is of normal complexity and that availability of high quality personnel is not a limitation.

When considering capital projects that are smaller or larger, more or less complex, or lacking well-qualified people, the manning requirements should be scaled up or down. The question then becomes how this should be done. Certainly, manning requirements are not linearly scalable. That is, a project that costs two times the $50–$100 M will not require doubling the manning shown in Fig. 13.2. A classical methodology of chemical engineering is the approach of scaling using the 0.6 power. Applying that to the manning requirements might be helpful, as shown in Eq. (13.1).
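As a rough numerical illustration of the 0.6-power approach to manning, the sketch below scales a baseline headcount by the ratio of project cost (or complexity) raised to the 0.6 power. The baseline of 10 people and the cost figures are hypothetical placeholders, not values taken from Fig. 13.2, and the function name is illustrative only.

```python
def scaled_manning(base_manning: float, base_cost: float,
                   new_cost: float, exponent: float = 0.6) -> float:
    """Scale a baseline manning estimate by (new_cost / base_cost) ** exponent.

    `base_cost` and `new_cost` may be cost or any consistent complexity
    measure; only their ratio matters. The 0.6 default follows the
    classical chemical engineering scaling rule cited in the text.
    """
    if base_cost <= 0 or new_cost <= 0:
        raise ValueError("costs must be positive")
    return base_manning * (new_cost / base_cost) ** exponent


if __name__ == "__main__":
    # Hypothetical baseline: 10 people on a $75 M project.
    base_people, base_cost_musd = 10.0, 75.0
    for cost_musd in (37.5, 75.0, 150.0, 300.0):
        people = scaled_manning(base_people, base_cost_musd, cost_musd)
        print(f"${cost_musd:>5.1f} M project: about {people:.1f} people")
```

With these placeholder numbers, doubling the project cost raises the estimate by a factor of about 1.52 rather than 2, which is the non-linear behavior the text describes. The result is only a first screening number; a role-by-role examination of what manning is actually required remains the better approach.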
$$M_1 = M_0 \left( \frac{C_1}{C_0} \right)^{0.6} \qquad (13.1)$$

where
$M_1$ = Manning for the project with cost or complexity $C_1$.
$M_0$ = Manning for the baseline project, with cost or complexity $C_0$.
$C_1$ = Cost or complexity of the project.
$C_0$ = Cost or complexity of the baseline project.

This type of approach recognizes that, as the size or complexity of the project changes, certain roles such as leadership do not require more or fewer people. Probably a better approach is to carefully examine exactly what manning is required.

In considering the complexity of a project, compare a large distillation tower with a reaction process where the reactor is operated at a low temperature of −150°F. The distillation process, even with complicated condensers and reboilers, would be considerably less complicated than the reaction process. The reaction process is complicated by the need to operate at this low temperature, since it would likely require both ethylene and propane refrigeration systems. In addition, most polymerization reactors can be very sensitive to impurities and require high technology analyzers as well as monomer purification facilities.

The project location may be at a site that already has well-trained and experienced operators and mechanical personnel. While the technology may be different from what they have experience with, they will be familiar with basic unit operations and maintenance. The technical organization at an existing site, while not necessarily experienced in the technology, will have made the transition from the academic world to the industrial world. At a new grass-roots site, it is likely that the technical staff as well as operators and mechanics will be much less experienced than at an existing site. The argument could be made that personnel (operations, maintenance, and technical) could be transferred from an existing site to the new site. However, there are two limitations to this concept. In the first place, the people may not want to leave their current location.
In the second place, the management at the existing location may not agree to release the well-qualified personnel and will want to substitute a less qualified person. This second limitation may apply even if the new facility is built at an existing site.

In addition to these “in-house” resources, the operating company may need to seek expertise from outside. It may be necessary to obtain both construction supervision and startup assistance from suppliers of very specialized and/or complicated equipment such as centrifuges or equipment for drying and handling solids. The utilization of computers for control and data storage will often make it desirable to have startup assistance and training from these suppliers. This assistance from suppliers is often included in the cost of their equipment. In addition to this equipment related assistance, it is often desirable to have representatives from the Engineering and Construction Company that did the detailed engineering present on-site for startup. Their presence provides a means to review the design calculations or to refer questions back to the Engineering and Construction Company as necessary.

Reasonable Schedules

The project development time line has often been shortened to the detriment of the quality of work. When schedules are being considered, I am reminded of what I was once told by a machinist whom I was pushing to give me a time when the repair he was working on would be finished. His reply was “It will be finished when it is finished.” His words constantly remind me of the need to do a job well within a reasonable time schedule.

It is probably of value to add a word of caution to the reader when discussing schedules. Schedules generally have two components. One of these is the length of time that it takes to get the work done. The second is the length of time that it takes to get approval and/or the release of funds to proceed with the project.
Besides internal approvals, it is often necessary to obtain approval from various government bodies. The schedules shown in this chapter cover only “the length of time that it takes to get the work done.” No allowance is provided for excessively long approvals.

There is often a need to complete a project quickly. However, one must constantly be on guard to avoid the trap of “There is never enough time to do the project correctly, but there is always enough time to redo it.” Figs. 13.4 and 13.5 show typical schedules from project conception to construction completion for a project handled in a typical sequential fashion and for one that is “fast tracked,” respectively. A project that is fast tracked will often have overlapping steps rather than sequential ones. In addition, a fast-tracked project will have a shortened approval schedule rather than a more traditional schedule. These figures show a potential time saving of 10–12 months if the project can be fast tracked.

Figure 13.4 Sequential project timing.

Figure 13.5 Fast track project timing.

Not all projects need to be fast tracked. In addition, not all projects can be fast tracked because of the overall company organization and capital approval schedules. For a project that cannot be fast tracked, bench scale research, study designs, pilot plant design and construction, pilot plant operations, and plant process design are all done sequentially. However, this luxury is rarely available in the current business/industrial environment.

There will likely be projects that need to be fast tracked. One historical example is the synthetic rubber industry that was developed during World War II. The Japanese thrust into Southeast Asia basically eliminated the supply of natural rubber. An entire new industry arose to develop projects for the production of synthetic rubber from petroleum-based materials. For a project that is to be fast tracked, Fig. 13.5 indicates a way to do this with overlapping schedules.
This overlapping schedule for a typical fast track project shows the possibility that preparation of the P&I diagrams will be started prior to completion of all the research work. The fast track approach for a new project is risky, since it may be necessary to redo parts of the process design as pilot plant operation begins. It may also be necessary to redesign and rebuild the pilot plant. This creates a double delay: a redesign of both the pilot plant and the commercial plant, as well as a rebuild of the pilot plant. It is conceivable, but not likely, that the fast track approach may take longer to reach the end target of process design completion than the conventional approach. This might occur if an existing pilot plant has to be dismantled and removed before it can be rebuilt.

While this description of schedules is based on process plant construction, it also applies to a change in catalyst, chemicals, or raw materials for an existing plant. For the case of a new catalyst being used in an existing plant, the sequential approach will include some but not all of the steps discussed earlier. The sequential steps will include bench scale research, pilot plant operations, confirmation that the process chemical or catalyst change is economically desirable, plant test planning, the plant test, and conversion to full-time use in the plant. These steps offer opportunities for fast tracking by doing some of them in parallel rather than doing everything sequentially.

Cooperative Team Spirit and Culture

The actual organization chart is not nearly as important as the culture. The management of the project should work to achieve a culture that has the right people, a cooperative organization spirit, and a reasonable time line. These musts are defined in the next few paragraphs.

The people on the development team must have the capabilities for the job. The team leader must not be there to learn the job.
Unfortunately, when an individual is put in a leadership position to “learn the job,” there is no one to correctly learn the job from. When considering the organization leader of the team, it is likely that this leadership will change as the project progresses. Fig. 13.6 shows how the leadership will likely change as the project progresses from laboratory to construction completion. Figure 13.6 Leadership change: sequential project timing. This change in leadership is consistent with the desire to have well qualified people in the leadership role. In the early stages of the project (initial research and study design), the leader should have experience in either of these areas that are in progress. As the project progresses into the detailed design and construction phases of the pilot plant and process design of the commercial plant, a project engineer with a background in serving as a lead engineer on multiple projects should take on the role of the project leader. It is recognized that he will not be an expert in research, but most of the research will have been completed by this time and there will be a need to focus on contractor selection, project schedule and project execution. While Fig. 13.6 shows leadership transition for a project with sequential timing, it is also valid for a fast track project with the exception that the schedule will obviously be compressed and the project engineer should take leadership at an earlier time in the schedule. This schedule compression requires that more coordination and resource allocation be made at an earlier date which will require someone who has a project orientation and is an experienced project engineer. This individual should have some background or interest in research and development if at all possible. The importance of the culture of the team cannot be overemphasized. The team should have an overall cooperative spirit and culture. 
This means that any successes, failures, or problems are owned by the team, not by an individual. This culture will be one that fosters individual creativity, mentoring, and team effort. Perhaps the best way to determine if this sort of culture exists is to listen for “dangerous phrases” and “dangerous thoughts.” These are phrases and thoughts that often indicate that the team culture is not right. Some of these are:

• “Make sure that I get credit for helping you.”
• “My job is not as interesting as yours.”
• “I was the guy/gal that discovered that.”
• “I had to develop this for myself. I am not going to share it with you.”
• “My boss doesn’t know anything about management. He is a real jerk.”

While most people working in both the industrial and academic worlds are familiar with examples of the failure to establish a cooperative culture, developing a healthy, productive culture is often difficult to do. While this book is not designed to provide answers to the question of how to produce this type of healthy culture, there are actions within a project team that are helpful in achieving this goal. Some of these actions are:

• Celebrate accomplishments as a team and provide a budget for this. These celebrations might consist of get-togethers for families, team sporting events, or team financial rewards.
• Share the blame by judicious use of pronouns. For example, “We made the wrong decision at that point” is a much better team phrase than “They made the wrong decision at that point.”
• Establish offices separate from the main building or in a separate part of the main building. This will help break down organization barriers. In a typical organization, research may be in one building, design engineering in another, and plant engineering in yet a third building. Separate buildings tend to emphasize organization structure and prevent good teamwork. While isolating a project team in a separate building or section of a building may be difficult to do because of the various skill levels and possibly different geographical locations, it should be approached as much as possible. For example, if research is located in a separate location from engineering, this goal can be approached by traveling to have frequent face-to-face meetings.
• Provide amenities for the team. This might consist of something as low cost as free coffee or sodas. It provides a way to remind people on a daily basis that they are a team.
• Look for opportunities to praise teamwork and mentoring. The praise might be as simple as thanking a senior engineer for mentoring a less experienced one. That would be a way to show the mentor that you recognize that one of his roles is to pass on his experience to a junior member of the team. Many senior engineers feel that they get no credit for mentoring and that it just reduces the time that they have to spend on their assigned tasks.
• While this spirit of teamwork and cooperation is vitally important, it should be recognized that most technical step-outs are produced by an individual working long hours in semi-isolation. Leaders and managers need to find ways to make these individuals aware that their efforts are recognized.
• Protect personnel assignments. A development project is not the place to give someone a short-term training assignment. If they are assigned to the team, they are there until the team is disbanded.
• Communicate, communicate, and communicate. Communicate in all directions: up, down, and sideways. Don’t let the organization structure that is on paper limit communications. As a general rule, too much communication is better than too little.

These are just some starting points that will be helpful in developing a high performing team for the development of a project. There are undoubtedly other actions that will be of value.
The goal of this chapter has been to recognize that, regardless of how faultless the calculations, approaches, and concepts associated with bringing a project to commercialization, there are other factors that might cause the project to fail. These factors are both technical and organizational. The technical ones are often items that were considered but were dismissed as unlikely to occur. These items will be uncovered by a potential problem analysis that is conducted with an open mind. One example of a closed-mind potential problem analysis will help to illustrate “how not to do a potential problem analysis.” In one real-life example, an operating company was told by an outside consultant that, with their design, the friable catalyst would break apart when a high tip speed centrifugal pump was used to feed the catalyst to the reactor. Their response was “We considered that and dismissed it as unlikely.” The catalyst did disintegrate, and the pump design had to be redone.

Much of this chapter is devoted to organization items. How do we take a well-trained team and make sure that it is functioning rather than dysfunctional? How do we keep the project originator or others in leadership roles from being frustrated by the host of what they may regard as unnecessary reviews? How do we encourage senior technical personnel to mentor less experienced engineers, operators, and mechanics? Answers to questions such as these will go a long way toward ensuring high quality performance from the project team.

14 Typical Case Studies

Abstract

One way to understand the approach that is proposed in this book is to consider case studies. To illustrate the concepts, six case studies have been selected. These consist of three case studies where the scale-up was considered to be a success and three case studies where the scale-up was less than successful.
In addition to these case studies, a potential problem analysis for one of the case studies has been included. The potential problem analysis was described in Chapter 13.

Keywords: case study; scale-up; business environment; Canadian Oil Sands; inorganic catalyst; gas phase polymerization process

One way to understand the approach that is proposed in this book is to consider case studies. To illustrate the concepts, six case studies have been selected. These consist of three case studies where the scale-up was considered to be a success and three case studies where the scale-up was less than successful. In addition to these case studies, a potential problem analysis for one of the case studies has been included. The potential problem analysis was described in Chapter 13.

Before launching into the details of the case studies, a definition of success is necessary. For the purposes of this chapter, a success is defined as a scale-up that meets one of the criteria listed below:

• The initial bench scale work and subsequent pilot plant operations are scaled up to a commercial operation which produces the anticipated profits for the company without a significant amount of rework. In these cases, the bench scale work appeared to produce an attractive project, and a subsequent study design and economic evaluation based on the bench scale work showed the project to be economically viable. Subsequently a pilot plant may or may not have been built. The technical scale-up from either the bench scale or pilot plant, followed by startup, then resulted in a plant that produced the desired product, and the project produced the anticipated Return on Investment (ROI). Obviously, the product was successful in the market place. As indicated in the Introduction, the product aspect of scale-up is not covered in this book.
• A study design based on the bench scale work is developed for the new process, product, or raw material. However, the study design indicates definitely that the project is not economically attractive. In this case, bench scale research work would be terminated and the research scientists would be assigned to other projects. While this will be an extremely unpopular decision among scientists, it will prevent the company from investing huge amounts of money and time in a project which might be a technical success but an economic failure. This type of decision is made possible by the preliminary bench scale work, an engineering analysis of the data, a study design, and the economics based on this study design and cost estimate.

A project that does not fit either of these definitions is considered to be an unsuccessful project. One of the worst decisions that can be made in a business environment is to continue project work without considering the engineering and economic feasibility of the project.

The case studies presented illustrate scale-up and utilization of the study design approach. They have been modified to ensure that no proprietary knowledge is included. However, they are real-life examples. The case studies are split into two groups, successful and unsuccessful ventures, using the definitions given earlier. While these case studies come primarily from the chemical industry, they are applicable to any project where scale-up is involved. For example, this approach was recently utilized on a study that involved a project in the Canadian Oil Sands. The details of that study are very far removed from some of the examples shown here. However, the proposed process did work for that scale-up study.

In addition to the details of the case studies, at the end of each case study there is a paragraph that describes the lessons learned from the events. These lessons may be either positive or negative.
Wisdom comes from both kinds of experience: those that go right and those that go wrong.

Successful Case Studies

Using the definition of successful case studies, the following successful studies have been selected for a detailed description:

• Production of an inorganic catalyst – This case study involved the development of an inorganic solid catalyst project. Besides scale-up and study design considerations, the project also involved developing an understanding of DOT shipping requirements, and the possible use of a contract manufacturing company both for interim production until a plant could be built and for permanent catalyst supply.

• Development of a new polymerization platform – In this case study, laboratory bench scale kinetic data were available. The study involved developing a reactor design that would be adequate for high pressure operation as well as providing the necessary cooling to remove the heat of reaction. The economics of this platform were then compared to other alternatives. This case study is included to illustrate how the development of a study design can be done with limited data and then used to determine the viability of the project. It also illustrates that, by the definition given earlier, a successful project may be one where the study design indicates that the development work should be discontinued.

• Utilization of a new and improved catalyst in a gas phase polymerization process – This case study involved analysis of bench scale data to understand whether what appeared to be an improved catalyst would be successful in a notoriously sensitive gas phase commercial polymerization process. This case study is included to show what should be done before a plant test of a new catalyst or raw material is conducted in a commercial plant. This case study has also been used to demonstrate the utilization of a potential problem analysis.
Unsuccessful Case Studies

As indicated earlier, unsuccessful scale-ups were designated as those that did not meet the criteria of a successful case study. The cases selected for a detailed description were as follows:

• Reactor scale-up – This case study covers one step of a project that was described earlier as a successful scale-up. The initial reactor scale-up design for that step was not done correctly. The problems caused by this scale-up error were mitigated by operating techniques. This study illustrates the need to incorporate basic chemical engineering in the dialog between the researchers and the plant designers.

• Utilization of a new and improved stabilizer – This case study describes the failure of a bench scale development that involved the utilization of what appeared to be an improved stabilizer in the operation of an elastomer plant. The case study illustrates that the type of equipment used in the bench scale prototype must be similar to commercial equipment or allowances must be made for the differences.

• Selection of a diluent for a polymerization process – The utilization of batch bench scale studies without adequate theoretical analysis resulted in the selection of a “heavy” diluent rather than a “light” diluent for the polymerization process. In addition to the process inefficiencies, this selection resulted in a plant that had operating difficulties throughout the life of the plant.

Production of an Inorganic Catalyst

The development and/or use of a new inorganic catalyst for a polymerization operation had significant operating credits. Besides reduction in catalyst cost, the polymer product quality was also improved. This catalyst was available from the existing catalyst supplier. However, using the commercially available catalyst required payment of a licensing fee in addition to the cost of the catalyst. This licensing fee was associated with the composition of matter patent that the catalyst supplier owned.
The licensing fee over the anticipated life of the use of the catalyst, discounted to present value, amounted to several million dollars. Thus, there was a significant incentive to develop an alternative catalyst. Bench scale experiments indicated that, by using different raw materials and temperatures, a catalyst could be made that was slightly more efficient and, more importantly, of a different composition, and that appeared to give plant operating results comparable to those of the supplier’s catalyst. This development work was conducted using commercially available raw materials and reagent grade hexane as a diluent.

Since the incentive for developing and producing this catalyst instead of purchasing it from the supplier was known, the question then became what it would cost to develop and manufacture the catalyst. The catalyst process as developed in the laboratory consisted of the following steps:

• In reaction 1, two inorganic liquids reacted to form a solid (precatalyst) and some soluble by-products. The reaction was conducted in a hexane diluent with agitation to maintain the solid precatalyst in suspension.

• The slurry was washed with hexane to remove reaction 1 by-products. Because the reaction by-products were undesirable in the next reaction, several wash steps were required.

• Reaction 2 consisted of reacting the solids from reaction 1 to convert them to the desirable catalyst. This reaction was also conducted in a hexane diluent.

• This slurry was washed to remove reaction 2 by-products. This also took several washes since the by-products would act as impurities in the polymerization reaction where the catalyst was to be used.

• The slurry was stripped of most of the hexane so that large amounts of hexane would not be injected with the catalyst into the reactor.

• The catalyst-hexane slurry was then injected into the bench scale polymerization reactor.
The commercialization of this process was highly successful even though there were several hurdles along the path that had to be overcome. The success of the project was indicated by the fact that the catalyst was not dominated by previous patents, and that it was used commercially for over 30 years. The steps and hurdles in the commercialization are as follows:

• Production of sufficient catalyst in contract manufacturing facilities to allow for a plant test of the new catalyst.

• Scale-up of the reactors used to produce the catalyst from bench scale to a 1000-gallon reactor. This scale-up included the capability to cool the reactants to 0°F and maintain the first reaction at that temperature. In the second reaction step, the temperature had to be raised quickly to 190°F and maintained there during the reaction.

• Confirmation that commercially available hexane would produce a catalyst comparable to that produced with reagent grade n-hexane.

• Determination of how to dispose of the by-products and waste hexane wash streams on a commercial basis.

• Determination of the cost of producing this catalyst in a plant with all new facilities.

• Determination of the cost of producing this catalyst in a contract manufacturing facility.

• Comparison of the approach of building a new plant to the approach of using contract manufacturing to produce the catalyst.

• Confirmation that the route of manufacturing this catalyst was more economical than the approach of purchasing the available catalyst from an existing supplier and paying the previously described licensing fees.

Fig. 14.1 shows this development on a timeline. While each project will likely have a different timeline, this figure illustrates the steps that were involved in this development and their sequence.

Figure 14.1 Development timeline.
The initial step of producing sufficient catalyst for a commercial plant test was achieved by utilizing a small contract manufacturer with a 100-gallon reactor, a refrigeration system, and a heating/cooling system that could be used for both reactions. The production of the catalyst was to be done using commercially available reactants and hexane. Since the commercially available hexane had a different isomer distribution than the n-hexane used in the laboratory, this was also a test of the hexane.

One of the scale-up considerations in going from a 10-gallon reactor to a 100-gallon reactor involved the reduction in area to volume (A/V) ratio. The heat of reaction for both reactions was reported as negligible based on laboratory results in a 10-gallon reactor. While the assumption of negligible heat of reaction was in error, the limited scale-up (10:1) and the overdesigned heating and cooling systems allowed the catalyst to be produced without any problems.

An analysis of the catalyst efficiency and the product quality of the polymer produced using the catalyst in bench scale equipment indicated that the catalyst produced a polymer product with the anticipated properties. Thus, a test of the catalyst in the commercial polymerization plant was scheduled.

The plant test was only successful when viewed from the standpoint that it indicated that this catalyst definitely would not work in the plant. The catalyst crystals disintegrated in the polymerization reactor, causing the polymer product to have such a small particle diameter that it could not be handled in subsequent stages of the plant operations. While the catalyst performed well when considering catalyst efficiency and product quality attributes, it could not be considered as a replacement catalyst. This disintegration could not have been easily detected in the bench scale experiments.
The bench scale polymerizations were conducted under conditions that gave a relatively slow polymerization rate and hence made it easier for the catalyst particles to maintain their integrity.

After some additional bench scale experimentation, a catalyst that did not disintegrate in the polymerization plant reactors was developed. It was tested in the polymerization plant. It did not disintegrate, and it appeared that the new catalyst was ready for the next steps in the commercialization process.

The next steps consisted of developing a study design of new facilities to produce the catalyst as well as looking for larger facilities to produce the catalyst on an interim basis. When considering the study design, it became obvious that the commercial plant might not look like the bench scale system. For example:

• The bench scale work utilized reagent grade hexane.

• It was believed that the commercial plant had to recycle the hexane diluent rather than dispose of large quantities of waste hexane.

• The hexane recycle system would need facilities to remove by-products formed in the two reactions used to produce the catalyst. Many of these by-products were unknown. Both their presence and their amounts were unknown.

• The continued utilization of batch reactors appeared to be necessary due to the need for each solid particle of precatalyst and catalyst to have the same residence time in the reactors. At the same time, any facilities for recycling hexane would be continuous. This would mean that there would likely be large amounts of intermediate hexane storage.

• The location of the plant was unknown. It was assumed that it would be located at a facility where people had experience with batch operations. This would mean that shipping the catalyst would be required and specialized shipping containers and requirements would have to be developed.
If the catalyst was produced at a contract manufacturing site, the specialized shipping containers would also be required.

The initial work for the study design consisted of setting the design basis. The amount of catalyst that would be required was estimated to be 1 Mlbs/yr. (1 million lbs/yr.) of the catalyst solid. When the batch steps were considered, it was estimated that ideally 3 batches could be produced in each 24-hour period. The theoretical batch steps and schedule along with the schematic flow sheet are shown in Fig. 14.2. This provided sufficient information to begin the study design. Rather than using the theoretical estimate of the batch production cycle, it was assumed that due to maintenance, scheduling and inefficiencies, only 2 batches would be produced in any 24-hour period.

Figure 14.2 Schematic process flow and batch operations.

The study design team raised several design questions initially. These questions were as follows:

• What was the heat of reaction for each of the 2 reactions? The bench scale experiments were conducted in relatively small vessels. As indicated in Chapter 4, small vessels have a high A/V ratio and have low production rates. This makes it difficult to determine the heat of reaction in bench scale experiments.

• What by-products were produced in the reaction?

• How could these by-products be disposed of? The “cradle to grave” environmental regulation indicated that the manufacturer was responsible for the ultimate disposal. Even in the case of using a contract manufacturer, the company that was developing the technology felt they were responsible for disposing of the by-products in an environmentally acceptable way.

• Would the catalyst quality and yield be the same as demonstrated in the bench scale when commercial hexane was utilized?

• How could the catalyst be shipped and stored?

The design team recognized that the conclusion based on bench scale experiments that there was no significant heat of reaction was not correct.
They decided to assume that the heat of reaction could be removed by the cooling source in the reactor jacket. They felt that the facilities to supply the catalyst on an interim basis would allow determination of whether the heat of reaction was significant.

When the design team considered the question of commercial raw materials compared to reagent grade raw materials, they recognized that the only difference appeared to be in the hexane diluent. The reagent grade hexane was 85% n-hexane, whereas commercially available hexane was 55% n-hexane. The lower concentration n-hexane from a likely supplier was used in the bench scale work with no notable differences in the catalyst quality or yield.

Scaling up the agitator system was accomplished by using a conventional agitator and scaling up to maintain the same agitator tip speed as in the bench scale in both reactors. This method was used in both the 100-gallon and 1000-gallon reactors. This route was chosen to maintain catalyst particle integrity. It was anticipated that the solids produced, either the precatalyst (reaction 1) or the actual catalyst (reaction 2), would be very friable, as experienced in the earlier polymerization plant test. It was recognized that this scale-up choice would mean that the power-to-volume ratio in the larger reactors would be much lower than in the bench reactor.

Early on, the commercial plant design team began to question the possibility that reaction by-products would be present and build up in the recycle hexane. Since the bench scale experiments were all conducted using fresh hexane (no recycle), it was impossible to determine analytically if there were reaction by-products that would build up in the recycle hexane. The design team assumed that if these by-products were present, they would be harmful to the reaction or to the catalyst product quality. An examination of the reaction chemistry indicated that there were a multitude of possible reaction by-products.
One possibility for additional development would be to determine analytically if these possible by-products existed. If they did exist, their impact on the reaction and product quality could be determined. However, the large number of possible by-products seemed to make this approach time and cost prohibitive.

The design team took the approach of estimating which of these by-products would be present in the recycle hexane if there was a reasonable amount of purification of the recycle hexane. The purification system for the study design was assumed to consist of two fractionation columns to remove materials more and less volatile than hexane. This fractionation would be followed by an alumina bed adsorption column since the by-products were anticipated to be polar and trace amounts would be adsorbed on the alumina. Vapor-liquid equilibrium (VLE) data were developed using calculation methods such as the analytical-solution-of-groups (ASOG) model. Based on the VLE data and the fractionation calculations, there were only 2–3 compounds from the list of the possible by-products that appeared likely to build up in the recycle hexane. If these compounds appeared to be present in the effluent from the bench scale reactor, purification facilities beyond those proposed would be required for the recycle hexane. The bench scale researchers then searched for these compounds analytically. Since they were not found down to a very low limit of detectability, it was assumed that for the purposes of the study design, adequate facilities had been provided to purify the recycle hexane. That is, the by-products that might have been difficult to separate and would build up in the recycle hexane were not produced in the reaction.

The more and less volatile compounds that were to be separated from the recycle hexane would still require consideration.
The disposal of these compounds along with the waste hexane was complicated by the likely presence of chlorinated by-products in these streams. Off-site incineration was a possible disposal means. However, it was necessary to confirm that the by-products would be fully converted to CO2, H2O, and HCl. The company doing the incineration would also have to have the capability to remove the HCl from the vent gas by caustic scrubbing or other means.

The catalyst quality and yield using commercially available reagents were demonstrated in the bench scale. The design team considered this to be proof that commercially available hexane was a satisfactory diluent. However, since the catalyst being produced was highly sensitive to the presence of oxygen, facilities were necessary to protect the hexane from contamination with air. There were two approaches considered. The hexane could be transported to the catalyst plant under a nitrogen atmosphere and maintained under a similar atmosphere in storage. A second approach involved the same methods for shipping and storing hexane with the added step of stripping the hexane with nitrogen prior to use. For the purpose of the study design, it was decided that shipping and storing under a nitrogen atmosphere followed by stripping with nitrogen would provide the highest probability of a successful catalyst making operation.

Since the catalyst would be shipped by a common carrier, it was concluded that a solvent other than hexane would allow shipping with a lower DOT classification. A commercially available white oil was selected as the material to be used for slurrying and shipping the catalyst. It was concluded that to meet the desired shipping classification the material should be shipped in a specially designed 55-gallon drum under nitrogen atmosphere, with the liquid contents of the container having a closed cup flash point of greater than 100°F.
The estimated concentration of hexane remaining in the liquid in the container that would result in a closed cup flash point above 100°F was 1.0 weight %. This was estimated knowing that the lower explosive limit (LEL) of hexane in vapor is 1.2 mol%, the vapor pressure of hexane at 100°F is 0.35 atmospheres, and the molecular weight of the white oil is about 350. The actual technique for doing this calculation is shown in Eqs. (14.1)–(14.4).

$$Y = 0.012 \ \text{mol fraction in vapor} \tag{14.1}$$

Note that by definition the LEL is the composition in the vapor phase at which the vapor will burn when exposed to a flame.

$$P = Y\,\pi \tag{14.2}$$

$$P = X \cdot VP \tag{14.3}$$

$$X = \frac{Y\,\pi}{VP} \tag{14.4}$$

Where:
P = partial pressure of hexane in the vapor phase, atm.
VP = vapor pressure of hexane at 100°F, atm.
π = total pressure, atm.
X = mol fraction of hexane in the liquid phase.
Y = mol fraction of hexane in the vapor phase.

Conversion of the mol fraction to weight % gives 0.86% or approximately 1% hexane by weight in the liquid. As indicated earlier, the catalyst was extremely sensitive to oxygen. Thus, the white oil to be used had to be stripped to remove any traces of oxygen.

Based on these calculations and the equipment design, an investment estimate and operating cost estimate were developed. These estimates then provided the basis for determining the cost of producing this catalyst in new facilities. As shown in Fig. 14.1, this basis would then be compared to the cost of producing the catalyst by contract manufacturing. Whether or not contract manufacturing would be chosen as a permanent means to provide catalyst, it would be the route for interim supply of catalyst. When this comparison was done, it was concluded that the lowest cost route to permanent supply of the catalyst was to produce it by contract manufacturing. Of course, the capability of the contract manufacturer had to be demonstrated.
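The flash point estimate described above can be checked numerically. The following is a minimal sketch, not the author's worksheet. It uses the LEL, vapor pressure, and white oil molecular weight quoted in the text. The total pressure of 1 atm and the hexane molecular weight of 86.18 are assumptions that the text does not state.

```python
# Hexane limit in white oil for a closed cup flash point above 100°F.
# Values marked "from the text" are quoted in the chapter; the others are
# assumptions introduced here for illustration.

LEL_MOL_FRACTION = 0.012        # Y, from the text
VAPOR_PRESSURE_ATM = 0.35       # VP of hexane at 100°F, from the text
MW_WHITE_OIL = 350.0            # from the text
TOTAL_PRESSURE_ATM = 1.0        # pi, ASSUMED (not stated in the text)
MW_HEXANE = 86.18               # ASSUMED standard value for C6H14


def hexane_limit(y=LEL_MOL_FRACTION, total_pressure=TOTAL_PRESSURE_ATM,
                 vapor_pressure=VAPOR_PRESSURE_ATM,
                 mw_hexane=MW_HEXANE, mw_oil=MW_WHITE_OIL):
    """Return (liquid mol fraction, liquid weight fraction) of hexane at the LEL."""
    partial_pressure = y * total_pressure          # partial pressure from Y and pi
    x = partial_pressure / vapor_pressure          # ideal-solution (Raoult) liquid mol fraction
    mass_hexane = x * mw_hexane                    # per mol of liquid mixture
    mass_oil = (1.0 - x) * mw_oil
    return x, mass_hexane / (mass_hexane + mass_oil)


if __name__ == "__main__":
    x, w = hexane_limit()
    print(f"X = {x:.4f} mol fraction, {100.0 * w:.2f} wt% hexane")
```

Under these assumptions the result is a little under 0.9 wt%, close to the 0.86% quoted in the text and consistent with the rounded design value of 1 wt%.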
The preliminary conclusion was therefore that contract manufacturing would be used for both interim supply and permanent supply if the capability of the manufacturer could be demonstrated. The study design considerations were transmitted to the selected contract manufacturer. The engineering staff of the contract manufacturer incorporated them into the interim supply plant design.

Since the commercial grade hexane was proven in the bench scale tests, it was decided that commercial quantities of it could be delivered by tank truck or in 55-gallon drums. As indicated earlier, the hexane would be stripped with nitrogen prior to adding the reagents.

The contract manufacturer planned to use only once-through hexane. That is, hexane recycle facilities were not included. Because of the “cradle to grave” environmental regulations and the possible culpability of the company that was developing the technology, this created some strong disagreements between the contract manufacturer and the technology owner. It was finally agreed that the waste hexane streams would be incinerated at high temperature and the off gases would be scrubbed with caustic to remove any traces of HCl, as planned during the study design efforts. This would be done at an off-site waste disposal company.

Laboratory tests and plant tests indicated that the catalyst being produced was essentially identical to that produced in the bench scale and in the smaller commercial facility. Several successful batches were produced until a slight modification in the procedure resulted in a loss of temperature control (temperature runaway) during reaction 2. This was caused by the lack of capability to remove the heat of reaction as described in Chapter 4. This was a surprise since several batches had been produced successfully and the bench scale indication was that the heat of reaction, if any, was very small.
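The loss of heat removal capability on scale-up is consistent with simple geometric scaling. The following is a minimal sketch, assuming geometrically similar vessels (an assumption made here, not a statement from the text). It also shows the power-to-volume trend under the constant tip speed rule used for the agitators, assuming turbulent mixing with a constant power number. The function names are hypothetical.

```python
# Geometric-similarity scaling for the reactor sizes mentioned in the text.
# ASSUMPTIONS (not from the source): vessels are geometrically similar, the
# agitator runs in the turbulent regime with a constant power number, and the
# impeller diameter scales with the vessel diameter.


def relative_area_to_volume(volume_ratio: float) -> float:
    """Return (A/V of large vessel) / (A/V of small vessel).

    For similar shapes, area scales with L**2 and volume with L**3,
    so A/V scales with 1/L, i.e. with volume_ratio**(-1/3).
    """
    return volume_ratio ** (-1.0 / 3.0)


def relative_power_per_volume_at_constant_tip_speed(volume_ratio: float) -> float:
    """Return (P/V large) / (P/V small) when agitator tip speed is held constant.

    Turbulent power draw scales as N**3 * D**5. Constant tip speed means N is
    proportional to 1/D, so P scales as D**2 and P/V as 1/D.
    """
    return volume_ratio ** (-1.0 / 3.0)


if __name__ == "__main__":
    bench_gallons = 10.0
    for gallons in (10.0, 100.0, 1000.0):
        ratio = gallons / bench_gallons
        av = relative_area_to_volume(ratio)
        pv = relative_power_per_volume_at_constant_tip_speed(ratio)
        print(f"{gallons:6.0f} gal: A/V = {av:.2f} x bench, P/V = {pv:.2f} x bench")
```

Under these assumptions the 1000-gallon reactor has roughly one fifth of the jacket area per unit volume of the 10-gallon reactor, while the heat released per batch grows in proportion to the volume.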
Upon examining the procedure that caused the temperature runaway, it was discovered that the rate of addition of the main reagent to the second reaction was slightly faster than on the earlier batches. Most of the conversion of the solids from reaction 1 to the desired catalyst in reaction 2 occurred in the early stages during and immediately after the addition of the main reagent. Attempts to solve this temperature runaway problem by adding this reagent at a slow rate were successful in controlling the temperature, but resulted in a catalyst that was unacceptable. The scale-up error details and the solution to the scale-up problem are described later.

The following lessons can be learned from this scale-up:

• Any reaction will be either exothermic or endothermic. Most reactions are exothermic.

• It is difficult to obtain the heat of reaction in standard bench scale facilities due to the large A/V ratio and the small quantity of heat generated in absolute terms. However, the heat of reaction should be estimated either by calculation techniques (heat of formation related) or by using a specially designed calorimeter to measure it.

• This is a good example of how the steps in a scale-up should proceed as shown in Fig. 14.1.

• While contract manufacturing is often a more economical approach compared to building a new facility, the company that owns the technology must not just “turn things over to the contract manufacturer”. This is particularly true if the company that owns the technology is a large company that could become the target of a lawsuit.

Development of a New Polymerization Platform

While the high-pressure polymerization of ethylene has been in existence for many years, the high-pressure polymerization of other olefins is generally not a chosen route to produce a polymer. A research study of the high-pressure polymerization of propylene indicated that the product being produced from this route had some interesting properties.
However, the cost (CAPEX and OPEX) of producing such a polymer was unknown. Thus, the incentive for continuing to develop this process was highly questionable. In order to establish the cost of producing such a polymer, it was decided to undertake a study design that would allow the determination of both the investment and operating cost of such a plant.

This design was started even though the bench scale researchers had developed only a limited amount of data. These data consisted of batch experiments in a bulk (no diluent except for propylene) 1-gallon reactor with a single temperature (250°F), single pressure (11,000 psig), and a fixed slurry concentration. The residence time was varied to determine the impact on the kinetics. In addition, there was a general feeling that the reaction should be conducted at a constant temperature since it was believed that different temperatures would produce different molecular weights of the polymer. These different molecular weights would cause the molecular weight distribution of the polymer to vary as the temperature distribution in the reactor varied. That is, a wide temperature distribution would result in a molecular weight distribution that was broader than that produced when there was a narrow temperature distribution. This difference in molecular weight distribution from run to run would cause the product characteristics to be different.

In addition to the desire to produce the polymer at a constant temperature, there were several other factors that complicated the study design because they were outside the range of the company’s experience with propylene polymerization. These factors were as follows:

• The operating conditions would put the polymerization in the “supercritical region”. That is, the polymerization temperature of 250°F was above the critical temperature of propylene (197°F).

• At the polymerization conditions, the polymer would be soluble in the supercritical fluid.
This would result in a reacting mix that was at a high viscosity. This would limit the concentration of polymer in the supercritical fluid to about 18% by weight.

• In the downstream flash to remove propylene, based on the heat balance the unvaporized polymer/propylene residue would be below the melting point of the polymer. This is in contrast to the production of low density polyethylene where the polymer in the flash steps is always above the melting point. This difference created a concern that the flashing step would create irregularly shaped polymer particles that would result in plugging in the flash drum. The designers decided to mitigate this problem by first heating the reactor effluent and then doing an initial step to reduce pressure to cause the formation of 2 semi-liquid phases. One of these phases would contain a high concentration of polymer and the other would contain essentially all propylene. With this process sequence, the polymer rich phase would be above the melting point of polypropylene during the propylene stripping phases of the process. A limited amount of phase equilibrium data was obtained in laboratory experiments. These data allowed the development of a preliminary heat and material balance.

These concepts are shown in a block diagram as Fig. 14.3.

Figure 14.3 Polymerization block diagram.

The researchers felt strongly that doing the study design based on the limited amount of bench scale work was a serious mistake. However, the business team members felt they needed some preliminary cost numbers and authorized work to proceed on the study design. Some of the concern of the researchers was that the basic data were unknown. Items such as heat of reaction, Arrhenius constant, and detailed reaction kinetics had not been thoroughly determined. However, some of these items were well known for the moderate pressure polymerization of propylene.
In addition, some data on reaction kinetics at the elevated temperature and pressure were available.

Since the heat of reaction was well known for polymerization of propylene at moderate pressure and 160°F, the heat of reaction for the high-pressure polymerization was adjusted for the higher temperatures and used for the study design. In addition, the Arrhenius constant was assumed to be the same as that of a typical moderate pressure propylene polymerization. The reaction kinetics were analyzed using concepts described in Chapter 2.

In the moderate pressure polymerization of propylene, the catalyst activity decays with time. This is due to the shell of polypropylene that encapsulates the catalyst particle as the reaction proceeds. This impact is represented by a “catalyst decay factor”. The “catalyst decay factor” for the high-pressure polymerization of propylene was determined from multiple experiments at variable reaction times. It was determined to be similar to that for moderate pressure polymerization of propylene. Based on these experiments, which were conducted at constant temperature and variable residence time, the kinetics were determined to follow the simplified relationship shown below and described in Chapter 2.

$$CE = K \, M \, T^{0.75} \tag{2.3}$$

Where:
CE = the catalyst efficiency, lbs. of polymer/lb. catalyst.
K = the simplified polymerization rate constant, ft³/lbs-hr.
M = the propylene concentration, lbs/ft³.
T = the residence time, hours.

The exponent 0.75 represents the catalyst decay factor.

Knowing the heat of reaction and the production rate, the heat duty was estimated after allowing for the sensible heat gain as the cooler propylene enters the reactor. This, along with the kinetics described by Eq. (2.3), can be used to study the different potential reactor designs. There were five reactor styles that were considered. In each of these styles the polymer concentration was limited to 18% from viscosity considerations.
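The simplified kinetics of Eq. (2.3) can be explored with a short script. This is a sketch only, taking the relationship as catalyst efficiency equal to K times M times T raised to the 0.75 power, in line with the symbol definitions above. The numerical values of K and M in the demonstration are hypothetical placeholders, not data from the study, and the function names are illustrative.

```python
# Simplified polymerization kinetics: CE = K * M * T**0.75
# K and M values used in the demo below are HYPOTHETICAL placeholders.

DECAY_EXPONENT = 0.75  # catalyst decay factor quoted in the text


def catalyst_efficiency(k, m, t, decay_exponent=DECAY_EXPONENT):
    """Catalyst efficiency (lb polymer / lb catalyst) at residence time t (hours)."""
    return k * m * t ** decay_exponent


def fit_rate_constant(runs, m, decay_exponent=DECAY_EXPONENT):
    """Least-squares K from (residence_time_h, CE) pairs.

    Assumes all runs share the same temperature and propylene concentration m,
    and fits a straight line through the origin of CE versus m * t**exponent.
    """
    xs = [m * t ** decay_exponent for t, _ in runs]
    ys = [ce for _, ce in runs]
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


if __name__ == "__main__":
    m_hypothetical = 25.0   # lbs/ft3, placeholder
    k_hypothetical = 40.0   # ft3/lbs-hr, placeholder
    runs = [(t, catalyst_efficiency(k_hypothetical, m_hypothetical, t))
            for t in (0.5, 1.0, 2.0)]
    print("fitted K:", fit_rate_constant(runs, m_hypothetical))
    gain = catalyst_efficiency(1.0, 1.0, 2.0) / catalyst_efficiency(1.0, 1.0, 1.0)
    print(f"doubling residence time multiplies CE by {gain:.2f}")
```

Because of the decay exponent, doubling the residence time raises the catalyst efficiency by a factor of 2 to the 0.75 power rather than by a factor of 2, which is why reactor volume is an expensive way to gain catalyst efficiency.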
High viscosity would cause the heat transfer coefficients to be very low and the pressure drop in tubes/loops to be very high. The styles considered were as follows:

• Adiabatic tubular reactor – In this reactor, the reactor temperature would be allowed to rise and it would be controlled by feed injection along the tube to cool the reacting mass. While there would be no external cooling in this style reactor, the polymer concentration would still be limited to 18% due to pressure drop considerations.

• Cooled tubular reactor – This style reactor would be similar to a double pipe heat exchanger. The reacting mass of propylene and polymer would be on the inside of the inner tube. The annulus area would contain cooling water. This style reactor also included the vertical loop reactor as utilized by several polypropylene and polyethylene producers.

• Adiabatic autoclave reactor – This style reactor consists of an agitated CSTR vessel with no external cooling. The heat of reaction is removed by sensible heating of the reactor feed. That is, the feed is cooler than the reactor. The heat of polymerization is removed by allowing the reactor feed temperature to increase to the temperature of the reactor. If the reactor feed is cooled by cooling water, the reactor effluent will be at about 300°F. This is well above the target reaction temperature of 225–250°F. Since the autoclave is basically a CSTR, essentially all of the polymer is produced at this elevated temperature or at a slightly higher temperature. The temperature could be reduced by reducing the polymer concentration from the 18% to a lower value, but this would increase the size of the reactor and recycle system.

• Refrigerated autoclave reactor – In this style CSTR, the reactor feed would be chilled to a low temperature using a propane refrigeration system. The polymer concentration would be reduced to allow maintaining the reactor temperature at 225°F.
In order to obtain sufficient cooling without resorting to using ethylene as a refrigerant, the slurry concentration would be reduced well below 18%. This would require larger reactor volume and a larger recycle system.
• Pumparound Autoclave Reactor – The CSTR would be cooled by pumping the reactor solution through large exchangers. This reactor design would use 18% polymer concentration in the reactor as limited by viscosity. High viscosity would reduce the heat transfer coefficient in the exchangers and increase the pressure drop in the pumparound loop.

The advantages/disadvantages are summarized in Table 14.1.

Table 14.1

| Reactor Style | Advantages |
| --- | --- |
| Adiabatic tubular reactor | Simplest style; Minimal fouling |
| Cooled tubular reactor or vertical loop reactor | Closer to constant temperature; Relatively simple de |
| Adiabatic autoclave reactor with feed cooled by cooling water | Minimal fouling |
| Adiabatic autoclave reactor with feed cooled by propane | Minimal fouling |
| Pumparound Autoclave Reactor | Best temperature control |

The adiabatic tubular reactor with the only cooling provided by the frequent injections of water cooled feed along the length of the tube appeared to be the lowest cost alternative. However, the limited product data indicated that the reaction temperature should be maintained as constant as possible to allow production of a polymer with a narrow molecular weight distribution. It would not be practical to provide enough feed injection points to keep the adiabatic tubular reactor at a constant temperature. When considering the remaining four reactor styles, it appeared that the choice of the pumparound autoclave reactor would be the best selection for several reasons as follows:
• The reaction temperature control would be more robust. This would allow operation at a constant temperature. In addition, the robust control would minimize the chances for loss of temperature control.
• The reactor polymer concentration would not be used as a temperature control mechanism.
In some of the reactor designs, the reactor slurry concentration would be reduced to provide more sensible heat to absorb the heat of reaction. This would result in the need for increased reactor volume to maintain the desired residence time. In addition, the size of the recycle system would be increased in order to adequately handle the increased volume of propylene that would be circulating. • The tubular reactor with cooling would likely have fouling problems because of the temperature on the coolant side. While this could also be a problem with the pumparound cooling system, these exchangers could be cleaned easier than the tubular reactors. • Since the pumparound reactor would basically be a CSTR the propylene concentration would be constant in the reactor. Since the potential disadvantage of the pumparound autoclave reactor was the high-pressure pumps that would be required, several pump suppliers were contacted to ascertain that such pumps were available or could be manufactured at a reasonable price. When this was confirmed the pumparound autoclave was selected as the reactor system. The final step in the polymer processing train would be the removal of the propylene monomer and traces of other volatiles from the polymer. For the purpose of the study design, the supplier of a devolatilizing extruder was contacted. Based on their experience, they provided equipment sizing, cost information and utility consumption for their suggested equipment. While it would have been preferable to actually test their equipment with polymer produced in the laboratory, there was not sufficient material available. So, this alternate was delayed until more material was available from a pilot plant. Based on the owner’s cost estimating techniques including the “Emerging Technology Contingency Factors” described in Chapter 10 a cost estimate was developed. 
This cost estimate along with operating cost estimates allowed this new process to be compared to an alternative means to produce this polymer. After a careful study of the two alternatives, it was concluded that there was not sufficient incentive to continue development of this new technology and that the new product could be provided by an existing means. The project team was reassigned to other projects. When considering that this project was classified as successful, consideration has to be given to the definition of a successful project as defined at the start of this chapter and to the question of “Was it the correct decision to terminate the development?” The project definitely fit the definition of a successful project as defined in the early part of this chapter. The question about the validity of the decision would be judged at some point based on history. However, “hindsight is always 20/20”. When the project economics of a new project appear to be unfavorable, it is often of value to examine the cost components. In examining this decision from a technical cost reduction standpoint, the following aspects of the investment estimate and study design were reviewed: • Reactor selection – While the adiabatic tube reactor would have been lower investment and lower operating costs, it did not meet the desire to produce the polymer at relatively constant temperature. The cooled tubular reactors would be closer to constant temperature, but they would likely be subject to severe fouling. Thus, it was concluded that the choice of pumparound reactors would allow for the best combination of operability, product attributes, and cost. While changing the reactor selection might well reduce costs, it would likely result in one of two failure routes. If a cooled loop reactor was chosen, a pilot plant would likely be built to assess the fouling potential and it would be likely that fouling would be severe. 
If an adiabatic reactor was chosen, the final product might not have been acceptable because of the broad molecular weight distribution produced at the varying temperatures.
• Post reactor – An examination of the post reactor section indicated that if the reactor effluent could be flashed directly, without the phase separation and heating of the polymer rich phase, this might reduce the investment and operating costs significantly. However, the direct flashing would produce randomly shaped particles of various sizes. These would be likely to lead to plugging in the flash drum. In addition, the larger particles would be slow to melt and devolatilize in subsequent operations. Technology associated with the phase separation on another product existed in the company, so it would be easy to adapt for this new propylene polymerization technology. Based on these considerations, it was concluded that unless a pilot plant could be designed, built and operated to demonstrate this approach of direct flashing, it would not be considered.
• Investment cost estimate – All areas of the investment cost estimate were reviewed. The only one that was questionable was the “Emerging Technology Contingency”. While the amount of contingency used met company guidelines, the impact of having less contingency was tested and it was found that the decision was relatively insensitive to the amount of contingency.

Based on these considerations, it was concluded that the decision to terminate the project development was the correct decision and that building a pilot plant would have been an unnecessary and costly expense. When this experience is considered, there are several things that can be learned. It is always of value to develop a study design with the associated CAPEX and OPEX at an early stage. This will provide an economic basis for deciding whether to pursue this development opportunity or not.
In addition to the economics, this study design will help guide future research efforts if the decision is to continue the development. The pressure to make a decision concerning the future of the development will almost always cause consideration of other alternatives. One of these alternatives may well be more economical than the research development being considered. The development of a study design at an early stage can almost always help determine critical equipment items. In this example, the study design was instrumental in determining that high pressure reactor circulation pumps and the volatile removal equipment were critical equipment items. Utilization of a New and Improved Catalyst in a Gas Phase Polymerization Process A supplier of catalyst for the gas phase polymerization of propylene developed a new catalyst with much higher activity than a commercially available existing catalyst. The supplier determined that the company could make an acceptable return on investment if the new catalyst were sold at the same price as the existing catalyst. Because of the increased activity, it appeared to be of interest in a gas phase polymerization process to either increase production or decrease catalyst cost. A typical flow sheet of the gas phase polymerization process is shown in Fig. 14.4. Figure 14.4 Typical gas phase. The key parts of the process are the reaction, the cooling loop, and the volatiles (primarily propylene) stripping facilities. The reactor is a fluidized bed that was modeled as a CSTR. The solid bed consisting of polypropylene is fluidized by a circulating gas stream. This gas passes through the bed to provide both fluidization and mixing and then flows into the top section of the reactor where solids entrained in the lower part of the reactor are disengaged from the gas stream and fall back into the reaction zone. This gas stream then passes through the cycle gas compressor and cycle gas cooler. 
Nitrogen at about 25 mol% is present in the circulating gas and serves as a heat sink. The heat of polymerization is removed by cooling and partially condensing the circulating gas in the cycle gas cooler. This gas and liquid stream then returns to the reactor and is used to fluidize the solids in the reaction bed. The liquid vaporizes and helps to remove the heat of reaction. The approximate dimensions and operating conditions of the gas phase reactor are shown in Table 14.2.

Table 14.2

| Parameter | Value |
| --- | --- |
| Reactor physical dimensions | |
| H/Db | 3 |
| Dt/Db (a) | 2 |
| Reactor operating conditions | |
| Pressure, psig | 400 |
| Temperature, °F | 160 |
| Residence time, hours | 1 |
| Nitrogen, mol% | 25 |
| Bed density, lbs/ft³ | 20 |
| Heat removed by condensation, % | 11 |
| Heat of reaction, BTU/lb | 1000 |

(a) Dt/Db is the ratio of the diameter of the disengaging section (Dt) to the reaction section (Db).

The catalyst supplier did not have a pilot plant and wanted to approach the users of the existing catalyst with a proposal to test the catalyst in either their pilot plants or their commercial plants. The primary customer for the existing catalyst had both a pilot plant and a commercial plant with a single gas phase continuous flow reactor in both plants. The single gas phase reactor would be the most severe test as described later. Since the catalyst supplier did not have a pilot plant and had only batch data for the polymerization in the liquid phase, there were several unanswered questions that were developed as part of the potential problem analysis. Some of these questions were as follows:
• How would batch data in a liquid polymerization translate to a single continuous gas phase reactor?
• Would this higher reactivity of the new catalyst cause the individual particles formed in the early stages of polymerization to melt creating reactor fouling?
• Could the increased activity of the catalyst being developed be used to increase production without a large investment increase?
• What would the resulting particle size distribution (PSD) be in the commercial plant? • Would the polymer particles disintegrate in the commercial reactor? • Would increased solids entrainment in the cycle gas loop, be a problem? • How would the volatiles removal compare with the experimental catalyst compared to the existing catalyst? The potential problem analysis is shown in Table 14.3. As indicated in Chapter 13, a potential problem analysis is a table with three columns as described below: • The first column describes the problem and the impact on the process. • The second column is the preventative action that is planned ahead of the test run or startup. • The third column describes what contingency action will be taken if the problem occurs in spite of the preventative action. Table 14.3 Potential Problem Preventative Action Excessive Fines are entrained into cycle gas loop causing exchanger fouling. Examine anticipated PS Removal of volatiles is not adequate Run comparisons using Reactor fouls due to higher reactivity of new catalyst. Estimate the temperatur Catalyst fractures in reactor creating reactor fouling and entrainment into the gas loop. Determine if there is a re Catalyst efficiency is lower than predicted even when considering CSTR vs batch reactor. Test customer’s propyle PSD, Particle size distribution a TGA is an acronym for Thermal Gravimetric Analyzer. This laboratory instrument can be used to develop a comparative measurement of the porosity of polymers While none of the questions generated from the potential problem analysis could be answered definitively without a test in either a pilot plant or the commercial plant, the catalyst supplier’s commercial development engineer used this analysis and evaluated these questions from an analytical view point to provide some assurance that the test would be successful. 
The determination of anticipated catalyst activity in the gas phase reactor using data from the batch liquid polymerization required adjustment for both the differences in monomer concentration and the continuous reactor vs a batch reactor. The effect of differences in monomer concentration between the liquid phase polymerization and the gas phase polymerization was determined using Eq. (2.3) from Chapter 2. The equation was described earlier in this chapter and is shown below:

$$CE = K \, M \, T^{0.75} \tag{2.3}$$

The new catalyst and existing catalyst were tested in the batch liquid reactor and it was determined that the new catalyst had an activity, as measured by the catalyst efficiency (CE), twice that of the existing catalyst at the same residence time (T) and the same temperature. For this to occur, it had to be due to an increase in the constant “K”. That is, the “K” value for the new catalyst was twice that for the existing catalyst.

The development engineer then predicted the anticipated catalyst efficiency with the new catalyst in the gas phase reactor. He used the existing plant data and calculated a “K” value for the existing catalyst. He then multiplied this value by 2 and used the result as “K” for the new catalyst. He then estimated the catalyst efficiency for the new catalyst knowing the residence time (T) and the gas phase monomer concentration (M), taking into account the nitrogen concentration.

The development engineer then proceeded to estimate how much increase in production might occur if the catalyst efficiency were maintained constant and production were increased. The increase in production would decrease the residence time in the reactor. The increase in inherent catalyst activity would compensate for this decreased residence time. In addition to the balancing between the residence time and catalyst reactivity, the increase in capacity would require more heat removal capability in the cycle gas cooler.
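The procedure the development engineer followed can be sketched in a few lines. The sketch assumes that Eq. (2.3) has the form CE = K·M·T^0.75, which is inferred from the symbol definitions and the stated 0.75 decay factor, and that residence time is inversely proportional to production rate at constant bed inventory. The plant catalyst efficiency and gas phase monomer concentration below are hypothetical placeholders; only the 1 hour residence time comes from Table 14.2.

```python
DECAY_EXPONENT = 0.75  # catalyst decay factor quoted in the text


def catalyst_efficiency(k, monomer_conc, residence_time_hr, n=DECAY_EXPONENT):
    """Assumed form of Eq. (2.3): CE = K * M * T**n."""
    return k * monomer_conc * residence_time_hr ** n


def residence_time_for_efficiency(target_ce, k, monomer_conc, n=DECAY_EXPONENT):
    """Invert the assumed Eq. (2.3) for the residence time giving target_ce."""
    return (target_ce / (k * monomer_conc)) ** (1.0 / n)


# Hypothetical plant data for the existing catalyst (assumptions).
plant_ce = 10_000.0     # lb polymer / lb catalyst
monomer_conc = 2.0      # lb/ft3 of propylene in the gas phase, after nitrogen dilution
plant_time = 1.0        # hours, from Table 14.2

# Step 1: back-calculate K for the existing catalyst from plant data.
k_existing = plant_ce / (monomer_conc * plant_time ** DECAY_EXPONENT)
# Step 2: the batch tests showed the new catalyst has twice the K value.
k_new = 2.0 * k_existing
# Step 3: predicted efficiency at the same residence time.
ce_new = catalyst_efficiency(k_new, monomer_conc, plant_time)
# Step 4: residence time that holds efficiency constant, and the implied rate ratio.
new_time = residence_time_for_efficiency(plant_ce, k_new, monomer_conc)
rate_ratio = plant_time / new_time

print(f"CE with new catalyst at {plant_time} h: {ce_new:,.0f} lb/lb")
print(f"Residence time at constant CE: {new_time:.3f} h (rate ratio {rate_ratio:.2f})")
```

Under these assumptions the kinetics alone would permit a rate increase well above a factor of two, so the achievable capacity gain is set by heat removal rather than by catalyst activity.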
In the gas phase polypropylene polymerization process 70%–80% of the heat is removed by condensation of propylene and subsequent vaporization of the liquid propylene in the reactor. The design amount of condensation was limited to about 17% of the circulating gas by an existing patent. The development engineer developed the relationship shown in Fig. 14.5 to show that the potential increase in capacity associated with the new catalyst was 40%–80%. Figure 14.5 Increase in capacity vs condensation in cycle gas. He had no way to know if the cycle gas compressor could be increased in size or if the cycle gas cooler capacity could be increased without further information. Thus, the catalyst supplier’s customers would likely have both the option of increased capacity or decreased catalyst cost (higher catalyst reactivity). Since the fouling of gas phase polymerization reactors would be a potential concern with any possible change to the process or raw materials, the development engineer wanted to assess this problem. He assessed this problem using three fundamental concepts as follows: • In the polymerization of propylene, the catalyst particle serves as a template for the growing polymer particle. As the polymerization time increases the diameter of the growing particle increases. However, the shape of the particle remains the same and is similar to the shape of the catalyst particle. • The most acceptable theory for fouling not caused by external events was that it was caused by softening or melting of small particles of polymer that were formed in the early stages of polymerization or right after the catalyst was injected into the reactor. • The heat of polymerization might cause the temperature of these small particles to increase until they melted or softened. For modeling this process, it was assumed that this softening point occurred well below the melting point of the polypropylene. 
A model describing the early period of polymerization of the propylene on catalyst that was injected into the reactor was developed. Based on laboratory results and assuming that there was no catalyst decay in the early stages of the polymerization, it was determined that the catalyst efficiency could be estimated as the following: (14.5) Eq. (14.5) was based on a temperature of 160°F and a reaction time of θ minutes with the new catalyst. Since the time of most concern was the early period of catalyst in the reactor, the catalyst decay factor was ignored. The value of “820” represents the new catalyst at the reaction conditions as shown in Table 14.2. For the existing catalyst, the constant in Eq. (14.5) is 410. The reaction rate constant would increase as the temperature increased based on an Arrhenius constant of 9400 BTU/lb-mol. for either catalyst. Thus, the reaction rate constant for the new catalyst at any temperature (T) was calculated as shown in equation (14.6). (14.6) An example of the use of this equation is the estimation of the reaction rate constant at 180°F. (14.7) It was decided that the best way to determine if the thermal characteristics of the two catalysts would result in a difference in fouling was to estimate the amount of melted polymer particles that would be produced based on the catalyst activity and the PSD of the catalyst. Visualizing the polymer particle that is growing around the catalyst particle and considering only the particle in the very early stages of polymerization, the particle temperature will increase until the heat being generated is equal to the heat being removed. Fig. 14.6 shows this model schematically. The model is also described by the following equations and descriptions: Figure 14.6 Schematic representation of particle heat removal. (14.8) But when the equilibrium temperature is reached, accumulation = 0 and then Eq. (14.8) reduces to Heat in = Heat out or as shown in Eq. (14.9). 
$$G_g = G_r \tag{14.9}$$

$$G_g = W_c \, CA \, H_r \tag{14.10}$$

$$G_r = U \, A \, (T_p - T_g) \tag{14.11}$$

Where:
Gg = heat generated by the reaction.
Gr = heat removed from the growing polymer particle.
Wc = weight of the catalyst particle. This will depend on density and particle diameter.
CA = catalyst activity at any point in time. This is basically the catalyst efficiency differentiated with respect to time or the constant shown in Eq. (14.5).
Hr = heat of polymerization.
U = heat transfer coefficient. This was developed using an approach that involves the Reynolds Number, Prandtl Number and Nusselt Number.
A = area of growing polymer particle. This will depend on the catalyst diameter and catalyst efficiency. It was assumed for both catalysts that the actual surface area was 5 times that calculated assuming a perfect sphere. This increase in surface area is associated with the porosity and irregularities of the growing particle.
Tp = temperature of the growing polymer particle.
Tg = temperature of the gas.

For a given catalyst diameter, density, catalyst efficiency, heat of reaction, gas temperature and heat transfer coefficient, there will be a single unique polymer particle temperature that will satisfy Eqs. (14.8) and (14.9). If this single temperature is greater than the critical polymer temperature, then it was concluded that the particle would begin to melt and become soft and sticky. Since the leading theory was that fouling was caused by polymer particles approaching or exceeding the melting point or softening point of the polymer, the amount of polymer particles that developed temperatures exceeding the critical temperature was chosen as the criterion for judging whether the new catalyst would cause an increase or decrease in fouling. The new catalyst would be compared to the existing catalyst to make a qualitative assessment of whether fouling would be less or greater than with the current catalyst.
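The particle heat balance above lends itself to a short numerical sketch. The version below reads the balance as heat generated = Wc·CA·Hr and heat removed = U·A·(Tp − Tg), scales the activity with an Arrhenius term using the 9400 BTU/lb-mol value quoted in the text, and searches for the lowest particle temperature at which the two are equal. Several inputs are assumptions made only for illustration: the catalyst density, the heat transfer coefficient (which the text derives from Reynolds, Prandtl and Nusselt numbers rather than fixing), the reading of the constant 820 as lb polymer per lb catalyst per minute, and the use of the catalyst diameter for the particle area immediately after injection.

```python
import math

GAS_CONSTANT = 1.987        # BTU/(lb-mol * degR)
ACTIVATION_ENERGY = 9400.0  # BTU/lb-mol, "Arrhenius constant" quoted in the text
REFERENCE_TEMP_F = 160.0    # temperature at which the activity constant applies
FT_PER_MICRON = 3.28084e-6


def to_rankine(temp_f):
    return temp_f + 459.67


def activity_at(temp_f, activity_ref):
    """Arrhenius scaling of catalyst activity from the 160 F reference."""
    exponent = -(ACTIVATION_ENERGY / GAS_CONSTANT) * (
        1.0 / to_rankine(temp_f) - 1.0 / to_rankine(REFERENCE_TEMP_F))
    return activity_ref * math.exp(exponent)


def heat_imbalance(particle_temp_f, diameter_um, cat_density, activity_ref,
                   heat_of_rxn, u_coeff, gas_temp_f, area_factor=5.0):
    """Heat generated minus heat removed (BTU/hr) for one particle."""
    d_ft = diameter_um * FT_PER_MICRON
    weight = cat_density * math.pi * d_ft ** 3 / 6.0   # Wc, lb
    area = area_factor * math.pi * d_ft ** 2           # A, ft2 (5x sphere area)
    generated = weight * activity_at(particle_temp_f, activity_ref) * heat_of_rxn
    removed = u_coeff * area * (particle_temp_f - gas_temp_f)
    return generated - removed


def equilibrium_temperature(diameter_um, gas_temp_f=160.0, max_temp_f=400.0, **kw):
    """Lowest temperature where generation equals removal, or None if not found."""
    f = lambda t: heat_imbalance(t, diameter_um, gas_temp_f=gas_temp_f, **kw)
    lo, hi = gas_temp_f, gas_temp_f + 1.0
    while f(hi) > 0.0:                 # scan upward for a sign change
        lo, hi = hi, hi + 1.0
        if hi > max_temp_f:
            return None                # no steady state below max_temp_f
    for _ in range(60):                # refine by bisection
        mid = 0.5 * (lo + hi)
        if f(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


ASSUMED = dict(
    cat_density=125.0,          # lb/ft3, hypothetical
    activity_ref=820.0 * 60.0,  # lb/lb-hr, assumes 820 is per minute
    heat_of_rxn=1000.0,         # BTU/lb, Table 14.2
    u_coeff=450.0,              # BTU/hr-ft2-F, hypothetical
)
temps = {d: equilibrium_temperature(d, **ASSUMED) for d in (10, 20, 30)}
for d, t in temps.items():
    print(d, "um:", "no steady state found" if t is None else f"{t:.1f} F")
```

With a fixed heat transfer coefficient the calculated temperature rises with catalyst diameter, and for sufficiently large particles the generation curve never crosses the removal line, which the sketch reports as no steady state. The absolute temperatures depend entirely on the assumed inputs and should be read only in a relative sense.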
In addition to the gas phase reactor operating conditions shown in Table 14.2, the catalyst PSD and catalyst density were available for both catalysts. A MS Excel spreadsheet was developed using Eqs. (14.8)–(14.11). This spreadsheet was then used to determine the temperature of the polymer particles that were formed from any size catalyst particle and at any point in time after the catalyst was injected into the reactor. From several calculations using the described spreadsheet, two things were concluded. It was concluded that the greatest risk for forming soft melted polymer was in the very early stages of the catalyst time in the reactor. In addition, it was concluded that the larger the diameter of the catalyst the higher would be the calculated particle temperature. And thus, the more likely it would be to form soft sticky polymer particles. Perhaps both of these conclusions are intuitively obvious, but in the engineering world calculations are always preferable to intuition. Fig. 14.7 was developed using this approach for catalyst immediately after being injected into the reactor. This figure shows the relationship between the equilibrium temperature of the particle and the catalyst particle size. As intuition would predict, the more active catalyst (the new catalyst) would have a greater tendency to melt than the existing catalyst. However, the respective particle sizes of the two catalysts were different. This difference in particle size would have to be considered before reaching any conclusion just based on estimated particle temperature. Figure 14.7 Particle temperature vs catalyst particle size. It was desirable to determine what the critical temperature that would cause fouling was. While the melting point of polypropylene is in the range of 300°F, the softening point of the critical grade was in the range of 250°F. So the development engineer set the critical temperature as 220°F. 
Because calculations of this nature are not very precise, it is usually appropriate to include an empirical safety factor. In this case the safety factor was 30°F. Thus, if the calculated particle temperature exceeded 220°F, it was assumed that this particle would be soft and sticky and create fouling. The particle diameter that was associated with this temperature (220°F) was then referred to as the critical particle diameter. Particles that had a diameter greater than this critical diameter were assumed to be soft and sticky. Since the particle of interest would be formed immediately after the catalyst entered the reactor, it was assumed that the polymer particle diameter was equal to the catalyst particle diameter. As shown in Fig. 14.7, the critical particle diameter was 30 μm for the existing catalyst and 20 μm for the new catalyst. If the polymer particle size (equal to the catalyst particle size in the first few seconds of the polymerization) was greater than this diameter, the particle would likely create fouling. Thus, a possible measure of fouling would be the fraction of catalyst particles that had a diameter greater than the critical diameter.

To determine which of the two catalysts would have a greater tendency to create fouling, the PSD of each catalyst was plotted as shown in Fig. 14.8. The critical diameter was then added to this figure to help determine the relative amount of fouling material produced by the two catalysts. This figure indicates that the mean particle size of the new catalyst is smaller (13 μm) than that of the existing catalyst (22 μm). In addition, the figure indicates the amount of catalyst with a diameter greater than the critical diameter, which then allows for an approximation of the amount of fouling material that might be present (using the calculation approach and given criteria) with each catalyst being used in the gas phase reactor. As shown in Fig.
14.8, the existing catalyst would be expected to produce 38% of the particles that would be larger than the critical diameter. These would be the particles that would likely be soft and sticky. Using the same approach, the new catalyst would be expected to have only 28% of the polymer particles that were soft and sticky. The development engineer realized that these calculations provided only a relative direction, but they allowed concluding that the new catalyst would have slightly less of a tendency to promote fouling than the existing catalyst. One might wonder about the validity of the calculation procedures or express themselves as a chemist once did – “You engineers think that you can calculate anything”. However, there is value in making such calculations since it provides a directionally correct conclusion. Figure 14.8 Estimated catalyst particle size distribution and critical catalyst size. Since as can be seen in Fig. 14.8, the 50% point on the PSD indicates a mean catalyst diameter of 13 μm for the new catalyst and 22 μm for the existing catalyst, the development engineer decided that he needed to consider the impact of the difference on the amount of fines in the cycle gas loop. An increase in entrained fines might lead to increased fouling of the cycle gas cooler. The amount of fines carried into the cycle gas loop was determined by the “particle cutpoint” in the dome section of the reactor and the fraction of particles less than this cut point. The entrainment cutpoint is defined as the largest particle size that if present in the dome would be entrained with the gas leaving the domed section of the reactor. This cutpoint is calculated using an iterative classical technique that involves the Reynolds Number and Drag Coefficient. Based on this calculation, the cutpoint was estimated to be 137 μm. That is particles less than 137 μm in diameter if present in the dome would be carried into the cycle gas loop. 
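The iterative cutpoint calculation mentioned above can be sketched as follows. The text does not name the drag correlation that was used, so the Schiller-Naumann sphere correlation is swapped in here as an assumption. The gas velocity in the dome, gas density, gas viscosity and particle density are hypothetical values in SI units, chosen only to show the mechanics; the sketch does not attempt to reproduce the 137 μm result.

```python
import math

G = 9.81  # m/s2


def drag_coefficient(reynolds):
    """Schiller-Naumann sphere drag correlation (assumed, not from the text)."""
    if reynolds < 1000.0:
        return 24.0 / reynolds * (1.0 + 0.15 * reynolds ** 0.687)
    return 0.44


def terminal_velocity(d, rho_p, rho_g, mu, tol=1e-10, max_iter=200):
    """Terminal settling velocity (m/s) of a sphere by fixed-point iteration."""
    v = G * d ** 2 * (rho_p - rho_g) / (18.0 * mu)   # Stokes law first guess
    for _ in range(max_iter):
        reynolds = rho_g * v * d / mu
        cd = drag_coefficient(reynolds)
        v_new = math.sqrt(4.0 * G * d * (rho_p - rho_g) / (3.0 * cd * rho_g))
        if abs(v_new - v) <= tol * v_new:
            return v_new
        v = v_new
    return v


def entrainment_cutpoint(u_gas, rho_p, rho_g, mu, d_lo=1e-6, d_hi=5e-3):
    """Largest diameter (m) whose terminal velocity is below the gas velocity."""
    for _ in range(100):                 # bisection on particle diameter
        mid = 0.5 * (d_lo + d_hi)
        if terminal_velocity(mid, rho_p, rho_g, mu) < u_gas:
            d_lo = mid
        else:
            d_hi = mid
    return 0.5 * (d_lo + d_hi)


# Hypothetical conditions in the disengaging section (assumptions).
U_GAS = 0.2      # m/s superficial gas velocity in the dome
RHO_P = 900.0    # kg/m3 polymer particle density
RHO_G = 38.0     # kg/m3 cycle gas density
MU = 1.2e-5      # Pa*s cycle gas viscosity

d_cut = entrainment_cutpoint(U_GAS, RHO_P, RHO_G, MU)
print(f"Estimated cutpoint: {d_cut * 1e6:.0f} micron")
```

Particles smaller than the returned diameter settle more slowly than the gas rises, so they are carried into the cycle gas loop. Because the dome has a larger diameter than the bed, the gas velocity there is lower and the cutpoint is smaller than it would be at bed velocity.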
The development engineer then estimated the PSD of the polymer that had been in the reactor an average amount of time when using the new catalyst and existing catalyst. In estimating the particle size of the polymer particle, he used the approach of assessing the particle diameter knowing that the polymer particle formed around the catalyst particle and would be spherically shaped like the catalyst particle. Knowing that the catalyst particle served as a template for the polymer particle, and knowing the catalyst particle diameter, the catalyst efficiency and the densities of the catalyst and the polymer, he could calculate the particle size of the polymer particle. The calculation approach is shown in Eqs. (14.12) and (14.13).

$$D_p = D_c \times GF \tag{14.12}$$

$$GF = \left( CE \, \frac{P_c}{P_p} \right)^{1/3} \tag{14.13}$$

Where:
Dp = calculated diameter of the particle.
Dc = diameter of the catalyst.
GF = growth factor. The diameter of the polymer particle compared to the catalyst particle.
CE = catalyst efficiency in grams of polymer/grams of catalyst.
Pc = density of the catalyst in grams/cc.
Pp = density of the polymer in grams/cc.

An explanation of the term “growth factor” may be in order. Since the catalyst efficiency is the weight ratio of polymer produced to catalyst, it can be converted to a volumetric ratio of polymer volume to catalyst volume as shown in Eq. (14.14).

$$VR = CE \, \frac{P_c}{P_p} \tag{14.14}$$

Where:
VR = the volumetric ratio of polymer volume to catalyst volume.

Since the diameter of a sphere varies with the cube root of its volume, this can then be converted to Eq. (14.13). He repeated the same calculations for the existing catalyst. The development engineer then used the estimated catalyst PSD, the growth factor, and the estimated cutpoint of 137 μm to predict the amount of entrainment that would be carried into the cycle gas loop. Table 14.4 shown below convinced him that entrainment would be no worse with the new catalyst than with the existing catalyst.
Table 14.4

| Catalyst Description | % of Polymer Entrained |
| --- | --- |
| Existing catalyst | 2.6 |
| New catalyst | 0.11 |

While ideally it would have been valuable to develop a means to prove that the catalyst particles would not disintegrate in the reactor, it was deemed that it was unlikely to have a proof without a plant test. In addition, the plant test would be the final proof that the calculation techniques adequately predicted plant performance. Based on this study, a plant test was initiated at a customer’s commercial plant and the catalyst appeared to exceed the expectation in the areas of catalyst efficiency. In addition, there was no apparent increase in fouling in either the cycle gas cooler or the reactor.

In a review of the project, it was deemed that the following things were learned:
• Pretest calculations are of great value even if they are only on a relative basis. Several of the calculations described in this case study were relative. For example, the entrainment from the dome would be no worse with the new catalyst than the existing catalyst. While this type of claim is often made in a sales effort, it is seldom backed up by actual calculations.
• If the plant test had not met or exceeded expectations, the calculations would have provided a starting point to help understand the deviations from the expected.
• While the pretest analysis described here indicated that the plant test would be successful, this is not always the case. There are cases where similar pretest calculations indicate that the plant test will not be successful. If the pretest analysis indicates that the plant test will not be successful, these same calculations will often point the way to changes which are necessary to provide a route to success. Doing this analysis will often avoid a highly advertised plant test that does not meet expectations.
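Returning to the entrainment estimate in this case study, the growth factor calculation can be sketched as below. It assumes the growth factor is the cube root of the polymer-to-catalyst volume ratio, which is how the description of Eqs. (14.12) to (14.14) reads. The catalyst efficiency and the two densities are hypothetical; the 137 μm cutpoint and the 13 μm and 22 μm mean catalyst diameters are taken from the text. The helper names are illustrative only.

```python
def growth_factor(ce, rho_cat, rho_poly):
    """Ratio of polymer particle diameter to catalyst particle diameter.

    ce is catalyst efficiency in g polymer / g catalyst; densities in g/cc.
    Assumes GF = (CE * rho_cat / rho_poly) ** (1/3).
    """
    volume_ratio = ce * rho_cat / rho_poly   # polymer volume / catalyst volume
    return volume_ratio ** (1.0 / 3.0)


def polymer_diameter(d_cat_um, ce, rho_cat, rho_poly):
    """Polymer particle diameter (micron) grown from a catalyst particle."""
    return d_cat_um * growth_factor(ce, rho_cat, rho_poly)


def largest_entrained_catalyst_diameter(cutpoint_um, ce, rho_cat, rho_poly):
    """Catalyst diameter whose polymer particle just reaches the cutpoint."""
    return cutpoint_um / growth_factor(ce, rho_cat, rho_poly)


# Hypothetical inputs (assumptions, not plant data).
CE = 10_000.0     # g polymer / g catalyst at average residence time
RHO_CAT = 2.0     # g/cc
RHO_POLY = 0.9    # g/cc
CUTPOINT_UM = 137.0   # from the text

gf = growth_factor(CE, RHO_CAT, RHO_POLY)
for name, d_cat in (("new", 13.0), ("existing", 22.0)):
    d_poly = polymer_diameter(d_cat, CE, RHO_CAT, RHO_POLY)
    print(f"{name} catalyst: mean polymer particle about {d_poly:.0f} micron")
d_limit = largest_entrained_catalyst_diameter(CUTPOINT_UM, CE, RHO_CAT, RHO_POLY)
print(f"Growth factor {gf:.1f}; catalyst below {d_limit:.1f} micron yields entrainable polymer")
```

Combining the limiting catalyst diameter with the catalyst PSD gives the fraction of polymer that could be entrained. A more active catalyst has a larger growth factor at the same residence time, which lowers the limiting catalyst diameter and offsets its smaller starting particle size.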
Inadequate Reactor Scale-up

The first example of a failed scale-up design involved a reactor that was actually part of the successful catalyst development project described earlier. The project of producing an inorganic catalyst was successful only because of quick adaptive action that was taken to overcome the influence of the inadequate reactor scale-up. The overall process description is provided in the section entitled “Production of an Inorganic Catalyst”. The reactor in question was the second reactor, where the solids from reactor 1 were converted to the actual catalyst. The bench scale work indicated that the reactant reacted immediately as it entered the reactor. As indicated earlier, the bench scale researchers indicated that there was no significant heat of reaction associated with the reaction being conducted in the second reactor. This appeared true in the bench scale, in several pilot plant production runs, and in the initial batches produced in the interim production facilities.

The operating procedure called for heating the contents of the reactor to 149°F prior to adding the reactant. The steam used to heat the reactor was to be shut off at that point and the reactant was to be added as fast as possible. Cooling water was to be started as soon as there was an indication of reaction as judged by an increase in temperature. Based on these early successful results, it appeared that the key reactant could be added as fast as it could be pressured into the reactor. However, as the need for the catalyst product increased and the pressure to reduce the batch cycle time increased, the speed of adding the primary reactant to reactor 2 also increased.

At a later production run a loss of temperature control occurred in the second reactor. This loss of temperature control was so severe that the temperature and pressure increased to the point that the safety valve on the reactor released.
This relieved the pressure in the reactor, but the hexane diluent that was released condensed in the atmosphere and created a hexane cloud in the surrounding areas. Fortunately, the cloud did not ignite. The actual operations on this batch were reviewed and compared to the procedures, and no deviations were found. Obviously, there appeared to be a heat of reaction associated with reaction 2.

In order to mitigate the loss of temperature control, it was decided to add the reactant very slowly and then raise the reactor temperature as quickly as possible using the steam-heated jacket as the only source of heat. While this approach was successful in eliminating the loss of temperature control, the reaction to convert the solid formed in reaction 1 to an acceptable catalyst in reaction 2 was not successful.

At this point the project was reviewed in detail and the following items seemed of interest:

• The operating procedure for the reaction in the second reactor called for adding the primary reactant as fast as possible. This was based on the bench scale work where the reactant was added in slightly less than 10 min.
• The reactant was known to react instantaneously when it entered the reactor.
• In the large-scale production facilities, the time to add the reactant varied due to variations in the nitrogen pressure and nitrogen availability. This caused the rate at which the reactant was pressured into the reactor to vary.
• In retrospect, there had to be a significant heat of reaction in order to heat the reactor to the desired temperature under normal conditions.

At this point, it was obvious that adequate attention had not been given to the details of reactor scale-up. Attention was focused on correcting and/or mitigating the problem rather than placing blame for this error.
There were two possible paths to follow:

• Make changes in facilities to either reduce the coolant temperature, which would increase the temperature driving force, or increase the heat transfer area. The heat transfer area could be increased by adding internal heat exchanger coils.
• Make changes in operating procedures which would eliminate the loss of temperature control while maintaining catalyst quality.

The fact that some batches were successfully produced made the approach of modifying procedures seem attractive. This was particularly attractive since the project was under pressure to be successful. There was a need for full production of catalyst batches of adequate quality. Both of these approaches were limited by the fact that, while there was a significant heat of reaction for this second reaction, its magnitude was unknown. The project time constraints made it unlikely that there was enough time to do an adequate calorimeter study in the laboratory.

The catalyst development team used a plant heat balance to determine the heat of reaction from plant data. They used a technique described by Eqs. (14.15)–(14.17). In these equations it is shown that the rate of increase in the temperature in the second reactor is associated with the fact that the heat being input to the reactor is greater than the heat being removed from the reactor. The only heat being input to the reactor once the cooling water started is due to the heat of reaction (Hr). Thus, as shown in Eq. (14.17), the heat of reaction can be estimated.

(14.15)

(14.16)

Solving for Hr then gives

(14.17)

Where:

Tr = the reactor temperature, °F.
θ = the reaction time, minutes after addition of reagent started.
W = weight of reactor and contents, lbs.
Cp = average specific heat of reactor and contents, BTU/lb-°F.
F = reagent flow rate, lbs/hr.
Hr = heat of reaction, BTU/lb of reagent.
Tw = average water temperature, °F.
U = heat transfer coefficient, 75 BTU/ft²-°F-hr. This was based on data obtained when hexane by itself was heated in the reactor.
A = heat transfer area, ft². This was estimated from the vessel drawings.

There are some short cuts and simplifying assumptions included in Eqs. (14.15)–(14.17), but they can be easily justified. The sensible heat associated with the reactant addition can be ignored because the rate of addition, and hence the heat impact, is low compared to the heat sink in the reactor and the heat of reaction. In addition, the cooling water rate was so high that there was very little increase in water temperature. Thus, the use of an arithmetic average rather than a log type average gave satisfactory answers. Using this approach, the heat of reaction based on several successful runs was estimated as 700 BTU/lb.

In order to confirm that the problem was truly a scale-up problem, Eq. (14.15) was used to estimate the minimum time of addition to avoid exceeding 170°F in the various size reactors. As shown in Fig. 14.9, the reactor size did make a significant difference as indicated by these calculations. It became obvious that with the bench scale reactor (10 gallons) and the pilot plant reactor (100 gallons) the reactant could be added in a relatively short period of time. But with the full-size production facilities, the addition rate had to be decreased significantly, with the reactant added over 30–35 min. To further confirm this conclusion, Fig. 14.10 was developed. As shown in this figure, if the rate of addition was such that the reactant was added in 20 min, the temperature in the reactor would become greater than 200°F. This would be well above the target of 170°F.

Figure 14.9 Minimum addition time vs reactor volume.

Figure 14.10 Max temp F vs time to add reagent for 1000 gallon reactor.

At this point, there were still two options to mitigate the problem. New facilities could be provided as indicated earlier or changes could be made in operating procedure.
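A minimal sketch of the plant heat balance described above, assuming it takes the lumped form W·Cp·dTr/dt = F·Hr − U·A·(Tr − Tw) on an hourly basis. This form is an assumption consistent with the symbol definitions and may not match Eqs. (14.15)–(14.17) term for term. The function names and the vessel values (W, Cp, A, Tw, reagent charge) are hypothetical placeholders; only U = 75, Hr = 700, and the 149°F starting temperature come from the text.

```python
def heat_of_reaction(W, Cp, dTr_dtheta, U, A, Tr, Tw, F):
    """Back out Hr (BTU/lb of reagent) from an assumed lumped heat balance.

    Assumed form, on an hourly basis:
        W * Cp * dTr/dt = F * Hr - U * A * (Tr - Tw)
    dTr_dtheta is the observed temperature rise rate in °F per minute.
    """
    accumulation = W * Cp * dTr_dtheta * 60.0  # BTU/hr stored in reactor and contents
    removal = U * A * (Tr - Tw)                # BTU/hr removed by the cooling water
    return (accumulation + removal) / F


def peak_temperature(W, Cp, U, A, Tw, Hr, reagent_lb, add_minutes,
                     T0=149.0, step_min=0.01):
    """Explicit-Euler integration of the same balance over the addition period.

    Assumes a constant addition rate, cooling water on from the start, and a
    constant W (the reagent's own mass and sensible heat are neglected).
    Returns the highest reactor temperature reached, °F.
    """
    F = reagent_lb / (add_minutes / 60.0)  # lb/hr
    T = peak = T0
    for _ in range(int(round(add_minutes / step_min))):
        dT_dt = (F * Hr - U * A * (T - Tw)) / (W * Cp)  # °F/hr
        T += dT_dt * step_min / 60.0
        peak = max(peak, T)
    return peak


if __name__ == "__main__":
    # Hypothetical vessel: only U = 75 and Hr = 700 are taken from the text.
    vessel = dict(W=8000.0, Cp=0.55, U=75.0, A=100.0, Tw=85.0)
    for minutes in (10, 20, 35):
        t_max = peak_temperature(Hr=700.0, reagent_lb=500.0,
                                 add_minutes=minutes, **vessel)
        print(f"{minutes:>3} min addition -> peak {t_max:.0f} °F")
```

Because the heat transfer area grows more slowly than the vessel contents as the reactor gets larger, the same calculation run with a larger W and a proportionally smaller A/W shows why a short addition time that works at bench scale can overshoot the temperature target at production scale.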
Since there were time constraints, it was decided to revise the operating procedures. The revised operating procedures specified adding the reactant at a rate high enough to produce a satisfactory catalyst, but low enough so that the temperature was under control. The first and subsequent production runs using this new operating technique were successful. Thus, while the reactor scale-up was inadequate, the operating procedure changes allowed the problem to be quickly resolved.

In reviewing the project, several lessons were learned:

• As referenced earlier, essentially all reactions have a heat of reaction. 90% of the heats of reaction are exothermic.
• In this case and in similar cases, the heat of reaction can often be determined by utilization of plant data if the equipment is well insulated and adequate instrumentation is available.
• The error in the scale-up of the reactor conducting reaction 2 did not ruin the project because of the rapid response and solution to the loss of temperature control as discussed.
• However, the scale-up error created a significant potential liability for both the contract manufacturer and the technology developer. In a litigious society, the release of the hexane solvent could easily have resulted in a lawsuit. It is likely that in such a lawsuit the technology developer would have been at most risk.

Utilization of a New and Improved Stabilizer

One of the needs with most synthetic polymers is to incorporate a stabilizer to protect the polymer from oxygen degradation which results in breaking the polymer chain and creating two molecules of a greatly reduced molecular weight. This case study involves a plant that produced a synthetic elastomer. It was proposed to introduce a new stabilizer that had properties that appeared to make the product more desirable to major customers.
The researchers who developed the new stabilizer also thought that it would provide better stabilization in the finishing operation where the elastomer was extruded, milled, baled, and boxed to put into a final form for shipping. Utilization of the current stabilizer resulted in a small but significant molecular weight breakdown.

The researchers had run several tests at various temperatures and times to compare the new stabilizer with the existing stabilizer. These tests were run in bench scale ovens under an air atmosphere. The tests indicated that the elastomer stabilized with the new material exhibited significantly less decrease in average molecular weight than the elastomer containing the existing stabilizer. Tests were also conducted to confirm that the new stabilizer could be slurried in water and adequately metered into the large tanks containing the elastomer in a water slurry. This technique for adding the stabilizer to the elastomer was the approach used with the existing stabilizer. It was desirable to utilize this technique so that the new stabilizer could be just “dropped in”.

Based on the laboratory results, it appeared that the new stabilizer could be successfully added to the elastomer and would protect it from oxidative degradation. A plant test of the new stabilizer was scheduled with no further laboratory work done. In addition, it was believed that since it was truly a “drop-in”, no potential problem analysis was necessary.

The actual plant facilities consisted of large extruders where the elastomer was heated and converted into flowable strands. This was followed by steam heated mills and water-cooled mills where the elastomer was formed into sheets. The molecular weight was measured immediately after the elastomer was produced in the reactors using typical viscosity testing equipment. The molecular weight (viscosity) was also measured after the elastomer was formed into sheets in the finishing operation.
This measurement in the finishing operation was the quality control step since that was the product that the customer would receive.

As the new stabilizer was introduced into the process, the molecular weight (viscosity) of the elastomer leaving the reactors was maintained constant. Shortly afterwards, the molecular weight of the elastomer leaving the extrusion and milling area began to decrease. The amount of molecular weight breakdown was much greater than anticipated and also was greater than with the current stabilizer. Since the product specification was based on the finished product, the molecular weight in the reactors was increased to compensate for the greater than expected degradation in the finishing section. With many synthetic polymers the amount of degradation at fixed conditions (temperature, time and air presence) increases as the original molecular weight of the polymer increases. Thus, as the molecular weight in the reactors was increased the amount of breakdown also increased. The plant test was terminated prematurely because of the apparent large amount of molecular weight breakdown.

When the test run post mortem was being conducted, it was recognized that the bench scale ovens were not adequate prototypes for the combinations of extruders and hot mills used in the plant finishing operations. While the stabilizer was tested in bench scale ovens at various times and temperatures, it was unlikely that these conditions adequately simulated the plant operations. In both the bench scale and the plant, oxygen degradation would only occur if oxygen diffused to the elastomer. In the bench scale oven tests, oxygen had to diffuse through the elastomer particle and through the bed of polymer. It is likely that the oxygen content was high (21%) on the elastomer surface and very low at the center of the particle. Thus, the amount of oxidative breakdown varied at different spots in the radius of the particle as well as at the depth of the bed of polymer.
The actual rate of breakdown would depend on the oxygen content at various spots in the particle. Since this particular elastomer had a very low diffusivity, the distribution of the oxygen content in the particle would have been similar to that shown in Fig. 14.11. In the case of the plant facilities, the extruder and hot mills created a continually renewed surface which would give most of the elastomer an exposure to air or 21% oxygen.

Figure 14.11 Oxygen content vs bed depth.

If this unsuccessful scale-up is examined, the key learning that occurred was twofold. In the first place, the chemical/equipment aspects of all scale-ups must be examined. In this case, it should have been recognized that the batch ovens were radically different from the extruders and hot mills used in the plant. In the second place, for any scale-up or plant test it is worth the effort to conduct a potential problem analysis. If a potential problem analysis had been conducted before the test, it would have likely included the following questions and answers:

• What causes oxidative breakdown?
– Oxidative breakdown is a typical first order reaction. Thus, the reaction rate depends on oxygen concentration, temperature and time. Since the temperature and time would be equivalent with the existing and new stabilizer and both of these variables had been tested in the bench scale, the only possible concern would be the oxygen content associated with the diffusion through the particle and bed of the elastomer.
• What might be the difference in oxygen concentration between the bench scale and the plant?
– The oxygen distribution in the elastomer in the plant was uniform and equal to the oxygen concentration in air. In the bench scale, the oxygen concentration varied inversely to the distance from the surface of the elastomer.
• What might be the cause of the enhanced distribution of oxygen in the plant?
– The most likely cause is the fact that the plant facilities (extruder and hot mill) created a surface renewal which allowed the oxygen concentration to be equivalent to the concentration of oxygen in air at every point in the elastomer.
• How could the surface renewal of the extruder and mill be simulated in the laboratory?
– While there are multiple ways to simulate the surface renewal that occurs in the plant, the most obvious way is to use only a very thin layer of the elastomer in the oven tests.

The Less Than Perfect Selection of a Diluent

When olefin polymerization was in its infancy, many of the polymerization processes were conducted in a diluent. The olefin would be soluble in the diluent and the solid polymer product would not be soluble. Thus, the insoluble polymer product could be partially separated from the diluent using some sort of solid–liquid separation device (rotary filter or centrifuge). The diluent could then be treated, recycled, and reused in the process. The polymer leaving the separation device would still contain some of the diluent since the solid–liquid separation device did not produce an absolutely diluent free polymer. The residual diluent was then stripped from this polymer stream. This stripping operation was conducted in indirect dryers, fluid bed dryers, steam stripping, or a combination of these. This process is shown schematically in Fig. 14.12.

Figure 14.12 Heavy diluent polymer process.

Several companies selected the diluent to be used by bench scale batch polymerizations of the desired olefin. This polymerization was conducted at a relatively low pressure (50 psia). The thinking was that the pressure did not matter since this polymerization was only being done to check the quality of the diluents. In this polymerization procedure, the diluent was added to a small laboratory reactor. This addition of diluent was followed by the addition of a measured amount of catalyst and the agitator was turned on.
Then the olefin was added to pressurize the reaction vessel to 50 psia. The temperature of the vessel was raised to 150°F. As the reaction started, the consumption of the olefin caused the pressure to begin dropping. The olefin was added automatically to maintain the pressure at 50 psia. At the end of an hour, the reaction was terminated by injection of methanol. The polymer was recovered and dried and the weight of material was measured. The metric of catalyst efficiency (grams of dried polymer/gram of catalyst) was calculated. This metric was then used to compare diluents.

It was believed that, due to the organizational structure and proximity to a refinery, a hydrocarbon diluent would be the most cost-effective diluent selection. Both “heavy diluents” (refinery streams with a low vapor pressure) and “light diluents” (refinery streams with a moderate vapor pressure) were tested. These batch tests conducted by one company indicated that the catalyst efficiency when using the heavy diluent was 40% higher than the catalyst efficiency when using the light diluent. The bench scale chemists rationalized this difference as being due to impurities in the light diluent that were not present in the heavy diluent. This seemed logical since the catalyst was known to be very sensitive to trace amounts of oxygenated compounds. The catalyst was very expensive, so the heavy diluent appeared to be the logical choice to be used in the commercial plant.

As indicated in Chapter 1, one of the reasons for failure of a scale-up is improper application of theory or failure to apply theory. If the logic associated with the decision to use a heavy diluent is examined closely, an alternative theory to the one proposed is possible. Instead of the theory that the difference in catalyst efficiency was associated with impurities present in the light diluent, the basic equation described in Chapter 2 can provide a different concept. Eq. (2.3) shown below indicates that the concentration of the monomer is important in determining the reaction rate and hence the catalyst efficiency. This seems to be inherently true since the polymerization reaction is first order with respect to catalyst and monomer concentration.

$$CE = K \, M \, T^{0.75} \tag{2.3}$$

Where:

$CE$ = the catalyst efficiency, lbs. of polymer/lb. catalyst.
$K$ = the simplified polymerization rate constant, ft³/lbs-hr.
$M$ = the propylene concentration, lbs/ft³.
$T$ = the residence time, hours.

The 0.75 represents the catalyst decay factor. This factor can be determined by multiple batch polymerizations at variable residence times and a constant reaction temperature.

Rather than assuming that the catalyst efficiency difference was related to impurities, a more theoretically correct approach would have been to compare the “K” value for each diluent, taking into account the difference in monomer concentrations. At a later point in the development, a more theoretically inclined engineer began considering the conclusions. Using simplified total pressure-liquid phase concentration relationships (Raoult’s Law), the concentration of a monomer (propylene for example) in the liquid phase for either light or heavy diluent can be determined. This approach is shown below:

$$P = X_3 \, VP_3 + (1 - X_3) \, VP_d \tag{14.18}$$

Which can be transformed to

$$X_3 = \frac{P - VP_d}{VP_3 - VP_d} \tag{14.19}$$

Where:

$P$ = total pressure in the polymerization reactor = 50 psia.
$VP_3$ = vapor pressure of propylene at 150°F = 410 psia.
$VP_d$ = vapor pressure of diluent at 150°F, psia.
$X_3$ = the concentration of propylene in the liquid phase, mol fraction.

Knowing that the vapor pressure of the light diluent at 150°F was 13.2 psia and that the vapor pressure of the heavy diluent approached zero, the monomer concentration that would be present in the liquid phase for each case (light diluent and heavy diluent) can be calculated using Eq. (14.19). When this was done and the mol fraction was converted to monomer concentration expressed as lbs/ft³, Table 14.5 was developed.
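The Raoult’s Law estimate described above can be sketched as follows. The function names are illustrative; the pressures and vapor pressures are the values quoted in the text, and the heavy diluent’s vapor pressure is set to exactly zero, which is an assumption standing in for “approached zero”. The second function assumes Eq. (2.3) has the form CE = K·M·T^0.75, so that at equal residence time the ratio of K values is the catalyst efficiency ratio divided by the concentration ratio. It is applied to the liquid concentrations reported in Table 14.5 (1.82 and 2.58 lbs/ft³) and the observed 40% efficiency advantage.

```python
def propylene_mol_fraction(total_p, vp_propylene, vp_diluent):
    """Liquid-phase propylene mol fraction from Raoult's Law (binary mixture).

    Solves total_p = x * vp_propylene + (1 - x) * vp_diluent for x.
    All pressures must be in the same units (psia here).
    """
    return (total_p - vp_diluent) / (vp_propylene - vp_diluent)


def rate_constant_ratio(ce_ratio, conc_ratio):
    """K_heavy / K_light implied by CE = K * M * T**0.75 at equal residence time.

    The T**0.75 term cancels, leaving (CE_heavy/CE_light) / (M_heavy/M_light).
    """
    return ce_ratio / conc_ratio


VP_PROPYLENE = 410.0  # psia at 150°F, from the text
VP_LIGHT = 13.2       # psia at 150°F, from the text
VP_HEAVY = 0.0        # assumption: "approached zero" treated as zero

if __name__ == "__main__":
    for pressure in (50.0, 300.0):  # bench scale and commercial design pressures
        x_light = propylene_mol_fraction(pressure, VP_PROPYLENE, VP_LIGHT)
        x_heavy = propylene_mol_fraction(pressure, VP_PROPYLENE, VP_HEAVY)
        print(f"{pressure:.0f} psia: light {x_light:.3f}, heavy {x_heavy:.3f}, "
              f"heavy/light {x_heavy / x_light:.3f}")

    # Concentrations in lbs/ft³ taken from Table 14.5; CE ratio from the batch tests.
    k_ratio = rate_constant_ratio(ce_ratio=1.40, conc_ratio=2.58 / 1.82)
    print(f"K_heavy / K_light = {k_ratio:.3f}")
```

A K ratio close to 1 means the efficiency difference is explained by monomer concentration alone, with no need to invoke impurities. The sketch compares mol fractions only; the percentage differences quoted in the text are on a lbs/ft³ basis, and that conversion needs diluent densities and molecular weights that are not given here.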
Table 14.5

| Diluent | Propylene Concentration, mol fraction | Propylene Concentration, lbs/ft³ |
| --- | --- | --- |
| Light | 0.093 | 1.82 |
| Heavy | 0.122 | 2.58 |

Thus, it appeared that the use of the heavy diluent allowed for a 42% higher concentration of propylene in the liquid phase and, based on Eq. (2.3), this would account for the increased catalyst efficiency observed.

When the decision to use a heavy diluent was reviewed, it was obvious that this decision had not been adequately considered. If it had been, the impact of operating the commercial reactors at the design pressure would have been considered in addition to the impact of the heavy diluent on propylene concentration in the bench scale reactor. The commercial reactors were being designed to operate at 300 psia. At this pressure, the calculated difference in propylene concentration in the liquid phase was only 4% rather than the 40% found when the bench scale reactor was operated at 50 psia. So, the anticipated 40% increase in catalyst efficiency would be only 4% if the reaction was first order with respect to catalyst and propylene concentration and the reactor was operated at 300 psia.

In addition to the failure to adequately account for the impact of propylene concentration in the reactor, no consideration was given to the removal of the residual amount of diluent that was left after the solid–liquid separation device. As the process design of the plant evolved, it was recognized that the heavy diluent would probably require 2 stages of vaporization to remove the residual diluent from the polymer. The initial stage would be a steam stripping operation which would then be followed by an indirect dryer. It was thought that going directly to an indirect dryer or to a fluid bed dryer would result in operating conditions being so severe that melting or softening of the polymer particles would occur.
This concern was related to the fact that the presence of a residual diluent with a solubility parameter close to that of the polymer would cause the melting point and/or softening point of the polymer to be inversely proportional to the concentration of the diluent in the polymer. It was believed that the installation of a steam stripping stage prior to the indirect heated dryer would reduce the diluent content to the point that there would be minimal reduction in the melting or softening point of the polymer. If a light diluent had been used, the need for steam stripping would have been eliminated.

When considering lessons learned from this development, the two most obvious lessons are as follows:

• A second theoretical analysis that used bench scale data and simplified kinetics and was conducted at an early stage would have prevented an error in diluent selection. This selection of the heavy diluent could not possibly provide the claimed 40% increase in catalyst efficiency relative to the light diluent. In addition, the selection of a heavy diluent without careful consideration of the diluent stripping not only resulted in an inefficient diluent recovery step, but also created operating problems through the life of the plant.
• It is likely that this error could have been prevented if the development had been adequately resourced and managed. Chapter 13 provided some guidelines for this aspect of project development.

Epilogue: Final Words and Acknowledgments

In visualizing who might use this book and what final words I could have for them, I had five thoughts that I think are important:

• Don’t forget your technical training and call CH₂O seawater rather than formaldehyde.
• Don’t be afraid to ask questions, whether your assignment is as a bench scale researcher, plant engineer or process designer. Don’t be afraid to challenge the answers if they don’t fit with your training and education.
• Make sure that you consider the economics (CAPEX and OPEX) as soon as possible and certainly before major funds for research are committed. While some will doubt the validity of developing economics at an early stage, decisions based on numbers are better than decisions based on only “gut feel.”
• The engineering group of equipment suppliers can be very helpful for scale-up. However, it is important both to make sure they understand the design basis for the facilities and to make sure that their scale-up techniques are consistent with your training and experience.
• As described in Chapter 13, a Potential Problem Analysis is a good means to eliminate and mitigate scale-up problems. Remember this analysis is only of value if one is creative and is not limited by phrases such as “that has never happened” or “that is unprecedented.”

Ross Wunderlich, who wrote Chapter 10 for the book, has been a friend and coworker for several years. Not only does he understand the intricacies of cost estimating, but he has the skills and capabilities to explain to noncost estimators what the various factors are and, most important for this book, to provide insight into the need for emerging technology contingencies. I appreciate the insight that Ross has provided. I am also indebted to those supervisors, operators, mechanics, and students who often did not know how much they were mentoring me. Lastly, I am most indebted to my wife, who put up with someone writing a book that “no one would want to read” as I have struggled to make a contribution to the chemical engineering profession and to those who are coming after me.
polymer concentration, 177 Reactor process, two-step, 63 Reactor scale-up, 17, 19, 193 computational fluid dynamics, 22 from continuous laboratory plug flow reactor to continuous pilot plant/continuous commercial reactor operating in PFA, 20 example problems, 36–38, 47–51, 55–59 heat transfer, 20, 21 kinetics, 19 from laboratory batch reactor to continuous pilot plant or continuous commercial reactor, 20 pilot plant/commercial batch reactor, 20 from a laboratory batch reactor to pilot plant/commercial continuous stirred tank reactor (CSTR), 20 mixing, 20, 21 nozzle, 21 other scale-ups using same techniques, 52 driving force (DF), 52 reactor mode, 20 residence time distribution, 19 summary of considerations for, 38–39 Reactor selection, 178 Reactor startup, analyzing, 69 Reasonable schedules, 153–155 Recycle facilities, 86 Recycle stage, 61 Recycle system, in a pilot plant, 85 Refrigerated autoclave reactor, 176 advantages/disadvantages, 177 Request for quote (RFQ), 58, 100 Residence time, 19, 183 available, 57 Residence time distribution (RTD), 19, 21, 22, 25, 29, 31, 35, 36 catalyst particle RTD fraction with residence time vs. 
residence time, 27 Return on investment (ROI), 114, 161 equation for early project evaluation, 115 evaluated by criteria, 115–116 Reynolds number, 38, 54, 190 RFQ, See Request for quote (RFQ) RTD, See Residence time distribution (RTD) S Safety, health, and environmental (SHE), 102 improvement, 80 risk, 77 Scale-up design considerations, 21 Scale-up equipment, 17 driving force, 18 exchanger design, 18 kinetic rate, 18 L/D ratio, 18 mechanical equipment, 93, 100 mixer design, 18 mixer speed, 18 mixing and heat transfer, 17 removal of volatile, 17 solid-liquid separation, 17 Scaling up existing commercial facility, 137 “debottlenecking,” 137–139 approaches, 137 for critical specialized heat exchanger, 141 design of agitator, require assistance, 142 example, 139–141 reasonable consisting steps, 141 relationship for propylene polymerization, 142 equipment replacement, 138 increasing production rate, 138 Schedules, reasonable description schedule based on, 155 design basis memorandum, 154 fast-tracked projects, 154 overlapping schedules, 155 Settling factor, 55 Shared facilities, impact of new development on, 81 Arrhenius factor, 81 ground level radiation, 81 impact on the CAPEX, 81 Shipping considerations, and safety, 82–83 Solid blenders, 93 Solid-liquid separation, 17–53, 55, 61 Solid materials, 79 Stabilizer, 197, 198 utilization, 163 Steam-heated jacket, 193 Step-out designs, 83 comprehensive safety review, 83 Stokes law, 53, 54, 56 Storage considerations, and safety, 82–83 Study designs, 131 identifying areas for future development work, 133–134 need for consultants, 135 size-limiting equipment, 134–135 unusual/special equipment required, 132 Sulfuric acid, 78 Supplier’s scale-ups, 39 Sustainability, long-term, 101 availability and cost of feeds and catalysts, 102–103 availability/costs considerations, 103 safety, health, and environmental (SHE), 102 availability and cost of utilities, 103 changes in existing technology crystallization process, 101 UOP process,
102 disposition of waste and by-product streams, 104 operability and maintainability of process facilities, 105–106 balanced process design, 105 careful selection and review of process equipment, 106 consideration of corrosion, 106 mean time between failures, 105 T Temperature, See also Heat of reaction control, loss of, 69, 73, 171 conversion, 30 distribution, 69, 76 driving force, 97 fluctuations, 30 inlet and outlet, 3 profile in adiabatic tubular reactor, 31 reactor optimization, 122 runaway, 10, 12, 21, 74 time profile, 29, 69 TGA, See Thermal gravimetric analyzer (TGA) Thermal characteristics, in reactor scale-up, 69 Thermal gravimetric analyzer (TGA), 41, 98, 99 Total erected cost (TEC), 116 Triethylaluminium, 80 U Upper explosive limit (UEL), 77 V Vapor-liquid equilibrium (VLE), 89, 119, 133, 169 Vapor-liquid separation, 17 Vapor-solid separation, 17 Velocity, 6, 22–24, 33, 35, 43, 54, 55, 57, 119 angular, 22, 54, 57 fluidization, 43, 51 parabolic pattern, 6, 35, 62 rotational, 55, 57, 58 terminal, 186 Volatile removal scale-up, 39–47 definitions used for, 39 key points, summary, 52 single particle diffusion vs. bed diffusion, 41 volatiles content vs. time, 42 Volatiles removal equipment, 52