Science, Technology and Society: An Introduction by Martin Bridgstock, David Burch, John Forge, John Laurent, Ian Lowe

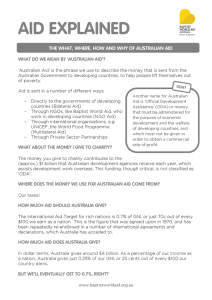
