Sentence Similarity Assessment: Lexical, Syntactic, Semantic Analysis

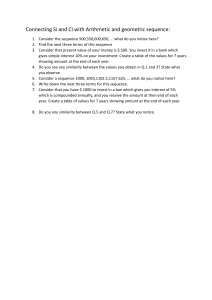

advertisement

+Model

YCSLA 763 1–28

ARTICLE IN PRESS

Available online at www.sciencedirect.com

ScienceDirect

Computer Speech and Language xxx (2016) xxx–xxx

Assessing sentence similarity through lexical, syntactic and semantic analysis☆

Rafael Ferreira a,b,∗, Rafael Dueire Lins a, Steven J. Simske c, Fred Freitas a, Marcelo Riss d

a Informatics Center, Federal University of Pernambuco, Recife, Pernambuco, Brazil

b Department of Statistics and Informatics, Federal Rural University of Pernambuco, Recife, Pernambuco, Brazil

c HP Labs., Fort Collins, CO 80528, USA

d HP Brazil, Porto Alegre, Rio Grande do Sul, Brazil

Received 28 April 2015; received in revised form 19 January 2016; accepted 20 January 2016

Abstract

The degree of similarity between sentences is assessed by sentence similarity methods. Sentence similarity methods play an important role in areas such as summarization, search and categorization of texts, and machine translation. The current methods for assessing sentence similarity are based only on the similarity between the words in the sentences. Such methods either represent sentences as bag-of-words vectors or are restricted to the syntactic information of the sentences. Two important problems in language understanding are not addressed by such strategies: word order and the meaning of the sentence as a whole. The new sentence similarity assessment measure presented here largely improves and refines a recently published method that takes into account the lexical, syntactic and semantic components of sentences. The new method was benchmarked using the Li–McLean dataset, showing that it outperforms the state-of-the-art systems and achieves results comparable to the evaluation made by humans. Besides that, the proposed method was extensively tested using the SemEval 2012 sentence similarity test set and in the evaluation of the degree of similarity between summaries using the CNN-corpus. In both cases, the measure proposed here proved effective and useful.

© 2016 Elsevier Ltd. All rights reserved.

Keywords: Graph-based model; Sentence simplification; Relation extraction; Inductive logic programming

1. Introduction


The degree of similarity between sentences is measured by sentence similarity or short-text similarity methods. Sentence similarity is important in a number of different tasks, such as automatic text summarization (Ferreira et al., 2013), information retrieval (Yu et al., 2009), image retrieval (Coelho et al., 2004), text categorization (Liu and Guo, 2005), and machine translation (Papineni et al., 2002). Sentence similarity methods should also be capable of measuring the degree of similarity between texts that carry partial information, as when one sentence is split into two or more short texts, or when a phrase contains two or more sentences.

☆ This paper has been recommended for acceptance by Srinivas Bangalore.

∗ Corresponding author. Tel.: +55 81999008818.

E-mail addresses: rflm@cin.ufpe.br (R. Ferreira), rdl@cin.ufpe.br (R.D. Lins), steven.simske@hp.com (S.J. Simske), fred@cin.ufpe.br (F. Freitas), marcelo.riss@hp.com (M. Riss).

http://dx.doi.org/10.1016/j.csl.2016.01.003

0885-2308/© 2016 Elsevier Ltd. All rights reserved.

Please cite this article in press as: Ferreira, R., et al., Assessing sentence similarity through lexical, syntactic and semantic

analysis. Comput. Speech Lang. (2016), http://dx.doi.org/10.1016/j.csl.2016.01.003


The technical literature reports several efforts to address this problem by representing sentences using a bag-of-words vector (Mihalcea et al., 2006; Qiu et al., 2006) or a tree of the syntactic information among words (Islam and Inkpen, 2008; Oliva et al., 2011). These representations allow similarity methods to compute different measures of the degree of similarity between words; the overall similarity of the sentences is obtained as a function of those partial measures. Two important problems are not handled by such approaches:

The Meaning Problem (Choudhary and Bhattacharyya, 2002): sentences with the same meaning but built with different words. For example, the sentences “Peter is a handsome boy” and “Peter is a good-looking lad” have similar meanings, provided the context in which they appear does not change much.

The Word Order Problem (Zhou et al., 2010): the order in which words appear in a text influences its meaning. For example, the sentences “A killed B” and “B killed A” use the same words, but the order in which they appear changes their meaning completely.
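The following minimal Python sketch, an illustration rather than part of the proposed method, makes both problems concrete. It assumes the simplest bag-of-words setting: lowercase whitespace tokens, raw counts, and cosine similarity between the count vectors.

```python
from collections import Counter
from math import sqrt


def bag_of_words(sentence: str) -> Counter:
    """Lowercase whitespace tokens with their counts; word order is discarded."""
    return Counter(sentence.lower().split())


def cosine(u: Counter, v: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[w] * v[w] for w in u.keys() & v.keys())
    norm = sqrt(sum(c * c for c in u.values()) * sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


# Word order problem: identical bags, so the score is maximal.
print(cosine(bag_of_words("A killed B"), bag_of_words("B killed A")))  # 1.0
# Meaning problem: near-synonymous sentences share only 3 of 5 tokens.
print(cosine(bag_of_words("Peter is a handsome boy"),
             bag_of_words("Peter is a good-looking lad")))  # 0.6
```

Opposite meanings receive a perfect score, while a paraphrase scores only 0.6, because the representation sees neither token positions nor word meanings.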

A recent paper (Ferreira et al., 2014) addressed these problems by proposing a sentence representation and content similarity measure based on lexical, syntactic and semantic analysis. It has some limitations, however; for example, it does not take the size of the sentences into account. To overcome these limitations, this paper presents:

• A new sentence representation that improves the one proposed in Ref. Ferreira et al. (2014) to deal with the meaning and word order problems;

• A sentence similarity measure based on two similarity matrices and a size penalization coefficient; and

• An algorithm to combine the statistical and semantic word similarity measures.

Besides explaining the measure presented in Ref. Ferreira et al. (2014) in full detail, this paper improves the combination of the word similarity measures, introducing the more general concept of sentence similarity as a numerical matrix. Here, the lexical analysis is performed in the first layer, in which the similarity measure uses bag-of-words vectors, similarly to Refs. Islam and Inkpen (2008), Li et al. (2006), Mihalcea et al. (2006) and Oliva et al. (2011). In addition to lexical analysis, this layer applies two preprocessing services (Hotho et al., 2005): stop word removal and stemming. The syntactic layer uses syntactic relations to address the word order problem. The semantic layer employs Semantic Role Annotation (SRA) (Das et al., 2010) to handle both problems. SRA returns the meaning of the actions, the agent/actor who performs each action, and the object/actor affected by it, among other information. Ref. Ferreira et al. (2014) was possibly the first to use SRA to measure the semantic similarity between sentences, while other methods employ only WordNet (Fellbaum, 1998; Das and Smith, 2009; Oliva et al., 2011) or a corpus-based measure (Mihalcea et al., 2006; Islam and Inkpen, 2008) in the classic bag-of-words approach.

The measure presented here was benchmarked using three datasets. The one proposed by Li et al. (2006) is widely acknowledged as the standard dataset for such problems. The results of the measure in terms of Pearson’s correlation coefficient (r) and Spearman’s rank correlation coefficient (ρ), the traditional measures for assessing sentence similarity, were compared with the best results described in the literature. The new measure proposed in this paper outperforms all the previous ones: a combination of the proposed measures achieves r = 0.92, which means that it matches the accuracy of the best human-assigned similarity values in that dataset. In terms of ρ, the measure proposed here achieved 0.94, a 33% reduction in the error rate relative to the state-of-the-art results reported in Ref. Ferreira et al. (2014).
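Both evaluation coefficients compare a system's similarity scores with human judgments over the same sentence pairs: r measures linear agreement, while ρ is r computed on the ranks and therefore only checks whether the pairs are ordered the same way. A self-contained sketch follows; the scores in the example are invented for illustration, and in practice a library routine such as SciPy's `pearsonr`/`spearmanr` would normally be used.

```python
from math import sqrt
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's correlation coefficient r (undefined for constant inputs)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values: Sequence[float]) -> list[float]:
    """1-based ranks; tied values receive the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson's r computed on the ranks."""
    return pearson(ranks(x), ranks(y))


human = [0.1, 0.4, 0.5, 0.9]   # hypothetical gold scores
system = [0.2, 0.3, 0.6, 0.8]  # hypothetical system scores
print(pearson(human, system), spearman(human, system))
```

In this toy example the system orders the four pairs exactly as the annotators do, so ρ is 1 even though r is below 1.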

The second experiment described here uses the test set of the SemEval 2012 competition (Agirre et al., 2012), which

contains 3108 pairs of sentences. The evaluation was performed in terms of r, which is the official measure used in the

competition. The proposed approach obtained r = 0.6548, only 0.0225 less than the best result reported. However, the approach presented here is unsupervised, whereas the better-ranked systems use supervised algorithms and are therefore corpus dependent.

The benchmarking experiments also used an extension of the extractive summary dataset of the CNN-corpus proposed by Lins et al. (2012). This corpus is based on CNN news articles from all over the world; its current version has 1330 texts in English. A notable feature of the CNN-corpus is that each text comes with a summary written by its original author: the highlights. The assessment of summary similarity checks the degree of similarity of each sentence in the original text with each of the sentences in the highlights. The sentences with the highest similarity scores are taken as an extractive summary of the text. Such a summary and the highlights are


also compared using ROUGE (Lin, 2004), which is widely used to assess the degree of similarity between summaries. In this experiment, the assessment measure proposed here outperformed all the other systems by 19%.
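ROUGE-N is recall-oriented: it counts how many n-grams of the reference summary (here, the highlights) also occur in the candidate summary, with each n-gram's match count clipped to its count in the candidate. The sketch below assumes lowercase whitespace tokenization and a single reference, and omits the stemming and stop word options of the official ROUGE toolkit.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Multiset of the contiguous n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate: str, reference: str, n: int = 1) -> float:
    """Clipped n-gram matches divided by the number of reference n-grams."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    # Counter returns 0 for n-grams absent from the candidate.
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    return overlap / total


print(rouge_n_recall("the cat ran home", "the cat sat", n=1))  # 2 of 3 unigrams
print(rouge_n_recall("the cat ran home", "the cat sat", n=2))  # 0.5
```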

In addition to the experiments described, the proposed measure was applied to eliminate redundancy in multi-document summarization. The results obtained show the effectiveness and usefulness of the proposed measure in a real application.

The rest of this paper is organized as follows. Section 2 presents the most relevant differences between the proposed method and the state-of-the-art related works. The sentence representation method and the similarity measure are described in Section 3. The benchmarking of the proposed method against the best similar methods found in the literature is presented in Section 4. Section 6 details the application of the proposed measure to eliminate redundancy in multi-document summarization. The paper ends by drawing conclusions and discussing lines for further work in Section 7.


2. Related work


This section presents the state-of-the-art solutions for the sentence similarity problem. The proposed methods can be divided into supervised and unsupervised ones, both outlined here. Unsupervised methods rely only on text processing techniques to measure the similarity between sentences; these systems are presented first.

Li et al. (2006) proposed a similarity measure that translates each sentence into a semantic vector, using a lexical database, and into a word order vector. They propose weighting the relative significance of the semantic and syntactic information. A new word vector is created for each sentence using the information from the lexical database, and the significance of each word is weighted using information content obtained from a corpus-based method for measuring the similarity between words (Li et al., 2003). A new semantic vector is built for each of the two sentences by combining the semantic vector with the information content from the corpus-based method. The semantic similarity is measured from these semantic vectors. Finally, the sentence similarity is computed by combining the semantic similarity and the order similarity.
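The order component of Li et al. (2006) is commonly written as S_r = 1 − ‖r1 − r2‖ / ‖r1 + r2‖, where r1 and r2 are order vectors defined over the joint word set of the two sentences. The sketch below simplifies the original under a stated assumption: an order-vector entry is the 1-based position of the identical word in the sentence, or 0 when the word is absent (the published method also falls back to the most similar word above a threshold, which is omitted here).

```python
from math import sqrt


def joint_word_set(s1: list[str], s2: list[str]) -> list[str]:
    """Distinct words of both sentences, in order of first appearance."""
    joint: list[str] = []
    for word in s1 + s2:
        if word not in joint:
            joint.append(word)
    return joint


def order_vector(sentence: list[str], joint: list[str]) -> list[int]:
    """1-based position of each joint word in the sentence; 0 if absent (simplification)."""
    return [sentence.index(w) + 1 if w in sentence else 0 for w in joint]


def order_similarity(s1: list[str], s2: list[str]) -> float:
    """S_r = 1 - ||r1 - r2|| / ||r1 + r2||."""
    joint = joint_word_set(s1, s2)
    r1, r2 = order_vector(s1, joint), order_vector(s2, joint)
    diff = sqrt(sum((a - b) ** 2 for a, b in zip(r1, r2)))
    total = sqrt(sum((a + b) ** 2 for a, b in zip(r1, r2)))
    return 1.0 - diff / total if total else 1.0


print(round(order_similarity("A killed B".split(), "B killed A".split()), 3))  # 0.592
```

Here r1 = (1, 2, 3) and r2 = (3, 2, 1), so swapping the agent and the patient lowers the order similarity to about 0.59, whereas a bag-of-words representation cannot tell the two sentences apart.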

Islam and Inkpen (2008) presented an approach to measure the similarity of two texts that also makes use of semantic and syntactic information. They combine three different similarity measures to perform the paraphrase identification task. First, they take the entire sentence as a string in order to calculate string similarity by applying the longest common subsequence measure (Kondrak, 2005). Then, they use a bag-of-words representation to compute a semantic word similarity, which is measured by a corpus-based measure (Islam and Inkpen, 2006). The last similarity measure uses syntactic information to evaluate word order similarity. The overall degree of similarity is calculated as a weighted combination of the string similarity, the semantic similarity and the common-word order similarity.
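The string component can be sketched with the classic dynamic-programming computation of the longest common subsequence (LCS). The normalization used below, the squared LCS length divided by the product of the two string lengths, is one normalization used in this line of work and is an assumption here, not necessarily the exact formula of Islam and Inkpen (2008).

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence, O(len(a) * len(b)) time."""
    prev = [0] * (len(b) + 1)
    for ca in a:
        curr = [0]
        for j, cb in enumerate(b, start=1):
            # Extend the diagonal on a match, otherwise keep the best neighbour.
            curr.append(prev[j - 1] + 1 if ca == cb else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def normalized_lcs(a: str, b: str) -> float:
    """Squared LCS length over the product of the string lengths (assumed normalization)."""
    if not a or not b:
        return 0.0
    return lcs_length(a, b) ** 2 / (len(a) * len(b))


print(lcs_length("AGGTAB", "GXTXAYB"))  # 4 ("GTAB")
print(normalized_lcs("abcd", "abd"))    # 9 / 12 = 0.75
```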

Mihalcea et al. (2006) represented sentences as bag-of-words vectors and used a similarity measure that works as follows: for each word in the first sentence (the main sentence), it identifies the word in the second sentence that has the highest semantic similarity according to one of the word-to-word similarity measures. The process is then repeated using the second sentence as the main sentence. Finally, the total similarity score is obtained as the arithmetic average of the values found.
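The bidirectional matching just described can be sketched as below, with the word-to-word measure passed in as a function. The sketch follows the plain arithmetic average described above; the published formulation additionally weights each word by its inverse document frequency, which is omitted here.

```python
from typing import Callable, Sequence

WordSim = Callable[[str, str], float]


def directional_score(main: Sequence[str], other: Sequence[str], sim: WordSim) -> float:
    """Average, over the words of `main`, of the best similarity found in `other`."""
    return sum(max(sim(w, o) for o in other) for w in main) / len(main)


def mihalcea_style_similarity(s1: Sequence[str], s2: Sequence[str], sim: WordSim) -> float:
    """Mean of the two directional scores (each sentence taken once as main)."""
    if not s1 or not s2:
        return 0.0
    return (directional_score(s1, s2, sim) + directional_score(s2, s1, sim)) / 2


def exact_match(w1: str, w2: str) -> float:
    """Hypothetical word measure: 1.0 for identical words, else 0.0."""
    return 1.0 if w1 == w2 else 0.0


print(mihalcea_style_similarity(["a", "b"], ["a", "c", "d"], exact_match))  # (1/2 + 1/3) / 2
```

Averaging both directions keeps the score symmetric: a short sentence fully covered by a long one is still penalized by the words of the long sentence that find no match.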

Oliva et al. (2011) proposed the SyMSS method, which assesses the influence of the syntactic structure of two sentences on the calculation of their similarity. Sentences are represented as syntactic dependency trees. The method is based on the idea that the meaning of a sentence is made up of the meanings of its individual words and the syntactic connections among them. Semantic information is obtained from WordNet through a process that finds the main phrases composing the sentence.

The recent work described in Ref. Ferreira et al. (2014) presents a method similar to that of Mihalcea et al. (2006) to compare sentences, but uses lexical, syntactic and semantic analysis. A word matching algorithm using statistical and WordNet measures is proposed to assess the degree of similarity between two sentences. It has two drawbacks. First, it does not take into consideration the similarities of all words; in general, only the words with high similarity values are used. Second, the similarity measure in Ferreira et al. (2014) does not take the size of the sentences into account, which is a problem especially when the two sentences differ greatly in size.

In 2012, with the creation of the Semantic Textual Similarity task at SemEval (Agirre et al., 2012), a large benchmarking dataset for sentence similarity was released, together with example sentence pairs that could be used to train the assessment algorithms. This fostered the development of several supervised systems, the most important of which are listed below.


Bär et al. (2012) represented each sentence as a bag-of-words vector, after a preprocessing phase that includes lemmatization and stop word filtering. They apply several sentence similarity measures to the vectors, and the outputs of these measures are log-transformed and used as features for a linear regression model (Hall et al., 2009). The regression output is the final similarity score of the system. Šarić et al. (2012) also used different similarity measures as features, adding syntactic information of the sentence, named entity features and number overlap features. The training set provided in SemEval (Agirre et al., 2012) was used to build a Support Vector Regression (SVR) model using LIBSVM (Chang and Lin, 2011).

Jimenez et al. (2012) used a recursive model to compare sentences by dividing them into words and, in turn, dividing words into character q-grams. This idea is implemented using soft cardinality (Jimenez et al., 2010) instead of the classical cardinality used in measures such as Jaccard’s coefficient (Jaccard, 1912), or string measures such as the Levenshtein distance (Levenshtein, 1966). The idea behind soft cardinality is to group similar elements in addition to identical ones: it treats the elements x of a set A as sets themselves, and inter-element similarities as the intersections between the elements. Based on this cardinality, they proposed a parameterized similarity model with seven parameters, which can be tuned on a training set, to assess the similarity between sentences. Refs. Jimenez et al. (2013, 2014) use a bag-of-words vector in a regression algorithm, along with a reduced-error pruning tree (REPTree), using a set of 17 features obtained by combining soft cardinality with different similarity functions for comparing pairs of words.
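Soft cardinality is usually given as |A|′ = Σ_i 1 / Σ_j sim(a_i, a_j)^p, so a set of mutually dissimilar elements keeps its classical cardinality n, while a set of near-duplicates counts as roughly one element. The sketch below assumes p = 1 and, as a hypothetical inter-word similarity, the Dice coefficient over padded character bigrams (q = 2); the soft intersection is derived as |A|′ + |B|′ − |A ∪ B|′, taking the union as the concatenation of both word lists (duplicates are absorbed by the soft count itself).

```python
def char_bigrams(word: str) -> set[str]:
    """Character bigrams of the word padded with '#' at both ends."""
    padded = f"#{word}#"
    return {padded[i:i + 2] for i in range(len(padded) - 1)}


def dice(w1: str, w2: str) -> float:
    """Hypothetical q-gram word similarity: Dice coefficient over bigram sets."""
    g1, g2 = char_bigrams(w1), char_bigrams(w2)
    return 2 * len(g1 & g2) / (len(g1) + len(g2))


def soft_cardinality(words: list[str], sim=dice, p: float = 1.0) -> float:
    """|A|' = sum_i 1 / sum_j sim(a_i, a_j)^p."""
    return sum(1.0 / sum(sim(a, b) ** p for b in words) for a in words)


def soft_jaccard(a: list[str], b: list[str], sim=dice, p: float = 1.0) -> float:
    """Jaccard's coefficient with classical cardinalities replaced by soft ones."""
    union = soft_cardinality(list(a) + list(b), sim, p)
    if union == 0:
        return 0.0
    inter = soft_cardinality(a, sim, p) + soft_cardinality(b, sim, p) - union
    return inter / union


print(soft_cardinality(["cat", "dog", "fish"]))  # 3.0: no shared bigrams
print(soft_cardinality(["cat", "cat"]))          # 1.0: duplicates count once
print(soft_cardinality(["night", "nights"]))     # between 1 and 2
```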

The Paraphrase Edit Rate with the Perceptron (PERP) system (Heilman and Madnani, 2012) extends the Translation Error Rate Plus (TERp), a measure used to assess the quality of machine translation, to evaluate the degree of sentence similarity. TERp (Snover et al., 2009) is an edit distance measure that allows the following operations: match (M), insertion (I), deletion (D), substitution (S), stemming (T), synonymy (Y), i.e., substitution of synonyms, shift (Sh), i.e., changes in the position of words or phrases in the input sentence, and phrase substitution (P). TERp has 11 parameters in total: one for each edit, except for phrase substitution, which has four. PERP expands the original features of TERp to better model semantic and textual similarity, adding 25 new features to the TERp model in total. The system then uses these features as input to a perceptron algorithm (Collins, 2002) to assess the similarity between sentences.

Han et al. (2013) proposed combining LSA (Deerwester et al., 1990) and WordNet (Miller, 1995) to assess the semantic similarity between words. Building on this word similarity measure, they proposed two methods to assess sentence similarity: (i) the align-and-penalize approach, which applies the proposed measure and penalizes specific groups of words, such as antonyms; and (ii) the SVM approach, which uses the output of the align-and-penalize approach, n-gram similarities with different parameters (for example, unigram, bigram, trigram and skip-bigram) and other similarity measures as features for a Support Vector Regression (SVR) model using LIBSVM (Chang and Lin, 2011). The output of the SVR is the final similarity.

Wu et al. (2013) adopt named entity measures (Lavie and Denkowski, 2009), random indexing (Kanerva et al., 2000), semantic vectorial measures (Wu et al., 2011) and the features proposed by Bär et al. (2012) and Šarić et al. (2012) as features for a linear regression algorithm provided by the WEKA package (Hall et al., 2009), which combines all of the different similarity measures into a single one. Along the same line of work, NTNU (Marsi et al., 2013) combines shallow textual (Bhagwani et al., 2012), distributional (Karlgren and Sahlgren, 2001) and knowledge-based (Šarić et al., 2012) features using a Support Vector Regression model.

Although supervised similarity measures achieve good results, they are domain dependent: they need a large and diverse corpus of sentences for training before they can perform the assessment.

This paper proposes an unsupervised similarity measure that follows the ideas in Ref. Ferreira et al. (2014) to compare sentences. However, the approach here uses different preprocessing services to perform the lexical, syntactic and semantic analysis, and proposes a completely new sentence similarity algorithm based on a similarity matrix. In addition, the similarity measure introduced in this paper includes a size penalization coefficient to account for sentences of different sizes. Thus, the approach proposed in this paper combines:

• The three-layer sentence representation, which encompasses different levels of sentence information. Previous works that claim to use semantic information do not actually evaluate the semantics of sentences; instead, they use WordNet to evaluate the semantics of words, potentially yielding poor results.


• A similarity measure that uses a matrix to consider the similarities among all words in the sentences, and a size penalization coefficient to deal with sentences of different sizes.

3. The three-layer sentence representation and similarity

This section presents the proposed sentence representation and a measure to assess the degree of sentence similarity encompassing three layers: lexical, syntactic and semantic. It is important to remark that these layers do not exactly mirror standard linguistic analysis: it is assumed that the input text has been preprocessed for stop word removal and stemming.

The new sentence similarity assessment method is detailed here. Two pairs of sentences from the SemEval 2012 dataset (Agirre et al., 2012), described in more detail in Section 4, were selected for illustrative purposes only. The similarity originally annotated in the SemEval dataset is 1.0 for the pair E1.1 and E1.2, and 0.36 for the pair E2.1 and E2.2. The two pairs of sentences are:

E1.1: Ruffner, 45, doesn’t yet have an attorney in the murder charge, authorities said.

E1.2: Ruffner, 45, does not have a lawyer on the murder charge, authorities said.

E2.1: The Commerce Commission would have been a significant hurdle for such a deal.

E2.2: The New Zealand Commerce Commission had given Westpac no indication whether it would have approved its deal.

3.1. The lexical layer

This section details the sentence representation used here and how the lexical similarity measure is calculated.

3.1.1. Lexical representation

The lexical layer takes a sentence as input and yields a list of the sentence tokens representing it as output. The

steps performed in this layer are:

1. Lexical analysis: this step splits the sentence into a list of tokens, including punctuation.

2. Stop word removal: words with little representative value for the document, such as articles and pronouns, and punctuation marks are removed. This work uses the stop word list proposed by Dolamic and Savoy (2010).

3. Lemmatization: this step converts each token of the sentence into its base form. For instance, plural words are made singular, and all verb tenses and persons are replaced by the infinitive form of the verb. Lemmatization in this system is carried out by the Stanford CoreNLP tool.¹
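The three steps above can be sketched in a few lines of Python. This is a toy stand-in, not the system's pipeline: the stop word list and the lemma table below are hypothetical and were chosen only to reproduce the outputs shown in Fig. 1, whereas the actual system uses the Dolamic and Savoy (2010) list and Stanford CoreNLP.

```python
import re

# Hypothetical stop word list (the paper uses Dolamic and Savoy, 2010).
STOP_WORDS = {"a", "an", "the", "in", "on", "for", "such", "does", "yet",
              "would", "no", "whether", "it", "its"}

# Hypothetical lemma table standing in for Stanford CoreNLP lemmatization.
LEMMAS = {"authorities": "authority", "said": "say", "been": "be",
          "had": "have", "given": "give", "approved": "approve"}


def tokenize(sentence: str) -> list[str]:
    """Step 1: lowercase, expand n't to 'not', split into words and punctuation."""
    text = sentence.lower().replace("\u2019", "'")  # curly apostrophe -> straight
    text = re.sub(r"n't\b", " not", text)
    return re.findall(r"[a-z0-9]+|[^\sa-z0-9]", text)


def lexical_layer(sentence: str) -> list[str]:
    """Steps 2 and 3: drop stop words and punctuation, then lemmatize."""
    return [LEMMAS.get(tok, tok) for tok in tokenize(sentence)
            if tok.isalnum() and tok not in STOP_WORDS]


print(lexical_layer("Ruffner, 45, doesn’t yet have an attorney in the murder charge, authorities said."))
# ['ruffner', '45', 'not', 'have', 'attorney', 'murder', 'charge', 'authority', 'say']
```

Note that the numerical token “45” survives this layer; it is separated from the word tokens only later, when the similarity matrices are built.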

Fig. 1 depicts the operations performed in this layer for the example sentences, together with the output of each step. The output of this layer is a text file containing the list of lemmatized tokens.

This layer improves the performance of simple text processing tasks. Although it does not convey much information about the sentence, it is widely employed in traditional text mining tasks such as information retrieval and summarization.

Fig. 1. Lexical layer processing of the example sentences.

E1.1 lexical analysis: ruffner; 45; does; not; yet; have; an; attorney; in; the; murder; charge; authorities; said

E1.1 stop word removal: ruffner; 45; not; have; attorney; murder; charge; authorities; said

E1.1 stemming: ruffner; 45; not; have; attorney; murder; charge; authority; say

E1.2 lexical analysis: ruffner; 45; does; not; have; a; lawyer; on; the; murder; charge; authorities; said

E1.2 stop word removal: ruffner; 45; not; have; lawyer; murder; charge; authorities; said

E1.2 stemming: ruffner; 45; not; have; lawyer; murder; charge; authority; say

E2.1 lexical analysis: the; commerce; commission; would; have; been; a; significant; hurdle; for; such; a; deal

E2.1 stop word removal: commerce; commission; have; been; significant; hurdle; deal

E2.1 stemming: commerce; commission; have; be; significant; hurdle; deal

E2.2 lexical analysis: the; new; zealand; commerce; commission; had; given; westpac; no; indication; whether; it; would; have; approved; its; deal

E2.2 stop word removal: new; zealand; commerce; commission; had; given; westpac; indication; have; approved; deal

E2.2 stemming: new; zealand; commerce; commission; have; give; westpac; indication; have; approve; deal

¹ http://nlp.stanford.edu/software/corenlp.shtml

3.1.2. Lexical similarity

The first part of this section describes six measures to evaluate the similarity between words, and the second presents the details of the proposed assessment measure for the lexical layer.

Six measures are used to calculate the similarity between words. Five of them are the top-performing dictionary-based measures according to the results reported in Refs. Oliva et al. (2011) and Budanitsky and Hirst (2006); these measures use the WordNet ontology (Miller, 1995) to compute the similarity between words. In addition, the Levenshtein distance metric (Miller et al., 2009) is used to provide a string-based (non-dictionary) evaluation because, in general, it is faster to calculate than dictionary-based methods. The similarity measures are:

Path measure scores the similarity of two concepts by the length of the shortest path between them in the WordNet graph: the shorter the path, the higher the similarity.

Resnik measure (Res) attempts to quantify how much information content is common to two concepts. This information content is that of the lowest common subsumer (LCS) of the two concepts.

Lin measure is the ratio between twice the information content of the LCS (as used in the Resnik measure) and the sum of the information contents of the two concepts.

Wu and Palmer measure (WP) compares the depth of the LCS of two concepts with the depths of the concepts themselves in the WordNet taxonomy.

Leacock and Chodorow measure (LC) uses the length of the shortest path between two concepts and the maximum depth of the taxonomy to measure the similarity between them.

Levenshtein similarity (Lev) is based on Levenshtein distance, which counts the minimum number of operations of

insertion, deletion, or substitution of a single character needed to transform one string into the other. The similarity

is calculated as presented in Eq. (1).

$$\mathrm{LevSimilarity}(word_1, word_2) = 1.0 - \frac{\mathrm{LevenshteinDistance}(word_1, word_2)}{\mathrm{maxLength}(word_1, word_2)} \tag{1}$$
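The path, Wu and Palmer, and Leacock and Chodorow measures depend only on the shape of the taxonomy, which makes them easy to illustrate on a toy hierarchy. The sketch below uses a hypothetical five-level tree instead of WordNet and the formulations found in common WordNet toolkits such as NLTK: path = 1/(1 + d), WP = 2·depth(LCS)/(depth(c1) + depth(c2)) and LC = −log((d + 1)/(2D)), where d is the number of edges between the concepts, the root has depth 1 and D is the maximum depth. Resnik and Lin also need corpus-based information content and are omitted.

```python
from math import log

# Hypothetical toy taxonomy (child -> parent); "entity" is the root.
PARENT = {
    "object": "entity", "person": "object", "artifact": "object",
    "professional": "person", "lawyer": "professional",
    "doctor": "professional", "car": "artifact",
}


def ancestors(concept: str) -> list[str]:
    """The concept followed by its hypernyms up to the root."""
    chain = [concept]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def depth(concept: str) -> int:
    return len(ancestors(concept))  # the root has depth 1


def lcs(c1: str, c2: str) -> str:
    """Lowest common subsumer: first ancestor of c1 that also subsumes c2."""
    anc2 = set(ancestors(c2))
    return next(a for a in ancestors(c1) if a in anc2)


def path_length(c1: str, c2: str) -> int:
    """Edges on the path c1 -> LCS -> c2 (the shortest path in a tree)."""
    return depth(c1) + depth(c2) - 2 * depth(lcs(c1, c2))


MAX_DEPTH = max(depth(c) for c in PARENT)


def path_sim(c1: str, c2: str) -> float:
    return 1.0 / (1 + path_length(c1, c2))


def wu_palmer(c1: str, c2: str) -> float:
    return 2 * depth(lcs(c1, c2)) / (depth(c1) + depth(c2))


def leacock_chodorow(c1: str, c2: str) -> float:
    return -log((path_length(c1, c2) + 1) / (2 * MAX_DEPTH))


print(path_sim("lawyer", "doctor"), path_sim("lawyer", "car"))    # 1/3 and 1/6
print(wu_palmer("lawyer", "doctor"), wu_palmer("lawyer", "car"))  # 0.8 and 4/9
```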

Two other word similarity measures were also tested: Greedy String Tiling (Prechelt et al., 2002) and the Hamming distance (Hamming, 1950). They were discarded for the following reasons: (i) Greedy String Tiling matches expressions with more than one word, such as “Hewlett-Packard Company” and “Hewlett-Packard Co.”, but it does not detect the similarity between misspelled words; (ii) the Hamming similarity is, like the Levenshtein distance, based on a character-difference (edit) distance, but it only compares strings of equal length. Levenshtein similarity was chosen because it scores expressions with one or more words and it is the most widely used edit distance method in the literature.
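Eq. (1) can be implemented with the standard two-row dynamic program for the Levenshtein distance. In this sketch, returning 1.0 for two empty strings is an assumption, since Eq. (1) is undefined when both lengths are zero.

```python
def levenshtein_distance(s: str, t: str) -> int:
    """Minimum number of single-character insertions, deletions or substitutions."""
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, start=1):
        curr = [i]
        for j, ct in enumerate(t, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (cs != ct)))  # substitution, free on a match
        prev = curr
    return prev[-1]


def lev_similarity(w1: str, w2: str) -> float:
    """Eq. (1): 1 - distance / length of the longer word."""
    longest = max(len(w1), len(w2))
    if longest == 0:
        return 1.0  # assumption: two empty strings are identical
    return 1.0 - levenshtein_distance(w1, w2) / longest


print(levenshtein_distance("kitten", "sitting"))  # 3
print(lev_similarity("ruffner", "rufner"))        # 1 - 1/7, about 0.86
```

Because it needs no dictionary, the measure still gives a high score to a misspelled proper name such as “rufner”, which WordNet-based measures cannot score at all.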

Dictionary-based measures, such as the five listed above, attempt to convey the degree of semantic similarity between two words, but they do not handle named entities, such as proper names of people and places, which WordNet does not index either. In the lexical measure introduced here, the Levenshtein measure is therefore used to analyze the degree of lexical similarity between the two words in such cases.

The assessment made in Section 4 shows that the path measure was the most adequate for expressing sentence similarity. Algorithm 1 is used to measure the degree of similarity between two words. It takes as input two words (word1 and word2), their path measure, and the Levenshtein similarity between them (Eq. (1)).

Algorithm 1. Similarity between words.

if PathMeasure(word1, word2) < 0.1 then

  similarity = LevSimilarity(word1, word2)

else

  similarity = PathMeasure(word1, word2)

end if

Path measure scores lower than 0.1 are thus discarded, and the Levenshtein similarity is used for those word pairs instead.
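Algorithm 1 reduces to a threshold test. In the sketch below, the WordNet path measure is passed in as a function, and `toy_path` is a hypothetical stand-in that knows a single synonym pair. With NLTK, a real path measure could be built from `wordnet.synsets` and `Synset.path_similarity`, but that wiring, including how the synsets of each word are chosen, is left out as an assumption.

```python
from typing import Callable


def levenshtein_similarity(w1: str, w2: str) -> float:
    """Eq. (1): 1 - edit distance / length of the longer word."""
    if not w1 and not w2:
        return 1.0
    prev = list(range(len(w2) + 1))
    for i, c1 in enumerate(w1, start=1):
        curr = [i]
        for j, c2 in enumerate(w2, start=1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (c1 != c2)))
        prev = curr
    return 1.0 - prev[-1] / max(len(w1), len(w2))


def word_similarity(w1: str, w2: str,
                    path_measure: Callable[[str, str], float],
                    threshold: float = 0.1) -> float:
    """Algorithm 1: keep the path score unless it is below the threshold,
    in which case fall back to the Levenshtein similarity."""
    score = path_measure(w1, w2)
    return levenshtein_similarity(w1, w2) if score < threshold else score


def toy_path(w1: str, w2: str) -> float:
    """Hypothetical path measure: knows identical words and one synonym pair."""
    return 1.0 if w1 == w2 or {w1, w2} == {"attorney", "lawyer"} else 0.0


print(word_similarity("attorney", "lawyer", toy_path))  # 1.0 from the path measure
print(word_similarity("westpac", "westpak", toy_path))  # Levenshtein fallback, about 0.857
```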

The lexical measure accounts for the degree of resemblance between sentences through the analysis of the lexical similarity between the sentence tokens. This paper proposes the creation of two matrices: the first contains the similarities between the words of the two sentences, and the second consists of the similarities between the numerical tokens of the two sentences. Numerical tokens are handled separately because numbers carry specific information, such as a time or an amount of money, that should receive a higher score; the numerical similarity is therefore used as a weight coefficient for sentences of different sizes. A detailed explanation of the calculation of the first matrix follows.

Let $A = \{a_1, a_2, \ldots, a_n\}$ and $B = \{b_1, b_2, \ldots, b_m\}$ be two sentences, such that $a_i$ is a token of sentence $A$, $b_j$ is a token of sentence $B$, $n$ is the number of tokens of sentence $A$ and $m$ is the number of tokens of sentence $B$. The lexical similarity is presented in Algorithm 2.

Algorithm 2. Proposed similarity algorithm.

Require: A and B

1: matrix = new_matrix(size(A) × size(B))

2: total_similarity = 0

3: iterations = 0

4: for a_i ∈ A do

5:   for b_j ∈ B do

6:     matrix(i, j) = similarity(a_i, b_j)

7:   end for

8: end for

9: while has_line(matrix) and has_column(matrix) do

10:   (i*, j*) = position of larger_similarity(matrix); total_similarity = total_similarity + matrix(i*, j*)

11:   remove_line(matrix, i*)

12:   remove_column(matrix, j*)

13:   iterations = iterations + 1

14: end while

15: partial_similarity = total_similarity / iterations

16: return partial_similarity

The algorithm receives the token sets of sentences A and B as input (Require). It then creates a matrix of dimension n × m, the sizes of the two token sets, and initializes the variables total similarity and iteration to 0. The variable total similarity accumulates the similarity values selected at each step, while iteration is used to normalize total similarity to a value between 0 and 1 (lines 1–3). The second step computes the similarity of each pair (ai, bj), where ai and bj are tokens of sentences A and B, respectively, and stores it in the matrix (lines 4–8). The last part of the algorithm repeats three steps while the matrix still has rows and columns. First, it adds the largest value in the matrix to total similarity (line 10). Then, it removes the row and the column that contain that value (lines 11 and 12). Finally, it increments iteration (line 13). The output, partial similarity, is total similarity divided by iteration (line 15).
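The greedy selection of Algorithm 2 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it assumes the similarity matrix of lines 1–8 has already been filled (with Algorithm 1 or any other token similarity) and is passed in as a list of rows, and it breaks ties for the largest value by taking the first one in row-major order, which the pseudocode leaves unspecified. The function name `greedy_partial_similarity` is hypothetical; the sample matrix is Table 1 of the example that follows.

```python
from typing import List, Sequence


def greedy_partial_similarity(matrix: Sequence[Sequence[float]]) -> float:
    """Lines 9-16 of Algorithm 2 over a precomputed similarity matrix.

    Repeatedly picks the largest remaining value, adds it to the total and
    deletes its row and column, until no rows or no columns are left.
    Returns total similarity / iteration (0.0 for an empty matrix).
    """
    rows: List[int] = list(range(len(matrix)))
    cols: List[int] = list(range(len(matrix[0]))) if rows else []
    total_similarity = 0.0
    iteration = 0
    while rows and cols:
        # Position of the largest remaining value; max() keeps the first
        # maximum it meets, so ties go to the earliest row-major position.
        i, j = max(((r, c) for r in rows for c in cols),
                   key=lambda rc: matrix[rc[0]][rc[1]])
        total_similarity += matrix[i][j]
        rows.remove(i)  # remove line
        cols.remove(j)  # remove column
        iteration += 1
    return total_similarity / iteration if iteration else 0.0


# Table 1 (rows: E1.1 words, columns: E1.2 words, number 45 excluded).
TABLE_1 = [
    [1.00, 0.14, 0.14, 0.25, 0.42, 0.14, 0.11, 0.00],
    [0.14, 1.00, 0.00, 0.12, 0.00, 0.00, 0.22, 0.00],
    [0.14, 0.00, 1.00, 0.20, 0.06, 0.16, 0.33, 0.08],
    [0.28, 0.00, 0.20, 1.00, 0.05, 0.12, 0.20, 0.071],
    [0.42, 0.00, 0.062, 0.055, 1.00, 0.09, 0.062, 0.05],
    [0.14, 0.00, 0.16, 0.12, 0.09, 1.00, 0.16, 0.10],
    [0.11, 0.22, 0.33, 0.20, 0.06, 0.16, 1.00, 0.10],
    [0.00, 0.00, 0.08, 0.07, 0.05, 0.10, 0.10, 1.00],
]

print(greedy_partial_similarity(TABLE_1))  # 1.0: eight iterations, each picking 1.0
```

Because each iteration consumes one row and one column, the loop runs min(n, m) times, so extra tokens in the longer sentence are simply left unmatched; the SDPC of Eq. (2) is what penalizes that size difference.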

An example illustrates the process. For the first pair of sentences in Fig. 1, E1.1 = {ruffner, 45, not, have, attorney, murder, charge, authority, say} and E1.2 = {ruffner, 45, not, have, lawyer, murder, charge, authority, say}. The first step creates the matrix of similarities between all words (Table 1). Note that the numerical token is excluded from this matrix.

Please cite this article in press as: Ferreira, R., et al., Assessing sentence similarity through lexical, syntactic and semantic

analysis. Comput. Speech Lang. (2016), http://dx.doi.org/10.1016/j.csl.2016.01.003


Table 1
Step 1: create the similarity matrix (rows: words of E1.1; columns: words of E1.2).

            ruffner   not       have      lawyer    murder    charge    authority say
ruffner     1.00      0.14      0.14      0.25      0.42      0.14      0.11      0.00
not         0.14      1.00      0.00      0.12      0.00      0.00      0.22      0.00
have        0.14      0.00      1.00      0.20      0.06      0.16      0.33      0.08
attorney    0.28      0.00      0.20      1.00      0.05      0.12      0.20      0.071
murder      0.42      0.00      0.062     0.055     1.00      0.09      0.062     0.05
charge      0.14      0.00      0.16      0.12      0.09      1.00      0.16      0.10
authority   0.11      0.22      0.33      0.20      0.06      0.16      1.00      0.10
say         0.00      0.00      0.08      0.07      0.05      0.10      0.10      1.00

Table 2
Step 2: matrix after removing row 1 and column 1.

            not       have      lawyer    murder    charge    authority say
not         1.00      0.00      0.12      0.00      0.00      0.22      0.00
have        0.00      1.00      0.20      0.06      0.16      0.33      0.08
attorney    0.00      0.20      1.00      0.05      0.12      0.20      0.07
murder      0.00      0.06      0.05      1.00      0.09      0.06      0.05
charge      0.00      0.16      0.12      0.09      1.00      0.16      0.10
authority   0.22      0.33      0.20      0.06      0.16      1.00      0.10
say         0.00      0.08      0.07      0.05      0.10      0.10      1.00

Table 3
Step 3: matrix after removing row 1 and column 1 again.

            have      lawyer    murder    charge    authority say
have        1.00      0.20      0.06      0.16      0.33      0.08
attorney    0.20      1.00      0.05      0.12      0.20      0.07
murder      0.06      0.05      1.00      0.09      0.06      0.05
charge      0.16      0.12      0.09      1.00      0.16      0.10
authority   0.33      0.20      0.06      0.16      1.00      0.10
say         0.083     0.07      0.05      0.10      0.10      1.00

Table 4
Numerical similarity matrix.

      45
45    1.0

Tables 2 and 3 show the matrix after the first two iterations of lines 10–13 of Algorithm 2. In each of these iterations the value added to total similarity is 1.0, so at this point total similarity = 2 and iteration = 2.

Further iterations take place until no rows or columns are left. In this case, the total number of iterations is 8 (iteration = 8) and the total similarity is also 8 (total similarity = 8). Thus, the output of Algorithm 2, total similarity divided by iteration (8.0/8.0), is 1.0 (word partial similarity = 1.0).

The second step repeats the same algorithm for the numerical tokens. The matrix of numerical tokens differs from the word matrix only in how the similarity is computed (line 6): instead of following Algorithm 1, the similarity is 1 if the numbers match and 0 otherwise. The rest of the process is exactly the same as for the word matrix. In this case, E1.1 (Ruffner, 45, doesn’t yet have an attorney in the murder charge, authorities said.) and E1.2 (Ruffner, 45, does not have a lawyer on the murder charge, authorities said.) have only one numerical token each, the number 45. The numerical similarity matrix of E1.1 and E1.2 is presented in Table 4. Thus, the output of the numerical similarity is 1.0 (num partial similarity = 1.0).
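For the numerical matrix only line 6 changes. The sketch below is a minimal illustration under the assumption that numerical tokens are plain numerals that Python's `float()` can parse (tokens such as "$5" or "10%" would need normalizing first); the helper names are hypothetical.

```python
from typing import Sequence


def number_similarity(x: str, y: str) -> float:
    """Replacement for line 6: 1 if the two numbers match, 0 otherwise."""
    return 1.0 if float(x) == float(y) else 0.0


def numeric_partial_similarity(nums_a: Sequence[str], nums_b: Sequence[str]) -> float:
    """Algorithm 2 applied to the numerical tokens of two sentences."""
    matrix = [[number_similarity(x, y) for y in nums_b] for x in nums_a]
    rows, cols = list(range(len(nums_a))), list(range(len(nums_b)))
    total_similarity, iteration = 0.0, 0
    while rows and cols:
        # Largest remaining value (first one in row-major order on ties).
        i, j = max(((r, c) for r in rows for c in cols),
                   key=lambda rc: matrix[rc[0]][rc[1]])
        total_similarity += matrix[i][j]
        rows.remove(i)
        cols.remove(j)
        iteration += 1
    return total_similarity / iteration if iteration else 0.0


print(numeric_partial_similarity(["45"], ["45"]))  # 1.0, as in Table 4
print(numeric_partial_similarity(["45", "2016"], ["45", "2015"]))  # 0.5
```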


Table 5
Initial matrix (rows: words of E2.1; columns: words of E2.2).

             new   zealand  commerce  commission  have  give  westpac  indication  have  approve  deal
commerce     0.12  0.08     1.00      0.14        0.09  0.09  0.12     0.16        0.09  0.12     0.33
commission   0.00  0.09     0.14      1.00        0.10  0.11  0.10     0.20        0.10  0.00     0.16
have         0.00  0.12     0.09      0.10        1.00  0.09  0.00     0.12        1.00  0.28     0.11
be           0.33  0.10     0.08      0.09        0.11  0.08  0.14     0.11        0.11  0.14     0.12
significant  0.09  0.18     0.09      0.18        0.09  0.18  0.09     0.27        0.09  0.00     0.09
hurdle       0.00  0.10     0.14      0.14        0.10  0.08  0.00     0.14        0.10  0.28     0.16
deal         0.25  0.10     0.33      0.16        0.11  0.11  0.28     0.16        0.11  0.00     1.00

After calculating the word partial similarity and the num partial similarity, the system computes the size difference penalization coefficient (SDPC) for each one, lowering the weight of the similarity between sentences with a

different number of tokens. The SDPC is proportional to the partial similarity. Eq. (2) shows how SDPC is calculated.

It is important to notice that in case of sentences with the same number of tokens, SDPC is equal to zero.

$$\mathrm{SDPC} = \begin{cases} \dfrac{|n - m| \times PS}{n} & \text{if } n > m \\[4pt] \dfrac{|n - m| \times PS}{m} & \text{otherwise} \end{cases} \tag{2}$$

where n and m are the numbers of tokens in sentence 1 and sentence 2, respectively, and PS is the partial similarity found.

In the example presented, the two sentences have exactly the same number of words and numerical tokens. Thus,

both word SDPC and num SDPC are equal to zero. This pair of sentences does not receive any size difference

penalization.
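Eq. (2) translates directly into a small function. This is a sketch: the name `sdpc` is illustrative, and the early return for equal sizes is an added guard rather than part of the equation (Eq. (2) already gives 0 in that case, except that it would divide by zero for two empty sentences).

```python
def sdpc(n: int, m: int, partial_similarity: float) -> float:
    """Size difference penalization coefficient, Eq. (2).

    n, m: number of tokens in sentence 1 and sentence 2.
    Both branches of Eq. (2) divide |n - m| * PS by the larger of n and m,
    so the result is 0 when the sentences have the same number of tokens.
    """
    if n == m:
        return 0.0  # also avoids 0/0 when both token lists are empty
    if n > m:
        return abs(n - m) * partial_similarity / n
    return abs(n - m) * partial_similarity / m


print(sdpc(8, 8, 1.0))    # 0.0: E1.1 and E1.2 have eight words each
print(sdpc(7, 11, 0.54))  # about 0.196 (|7 - 11| * 0.54 / 11)
```

Since the coefficient is scaled by PS, a pair that matches poorly is penalized less in absolute terms than a pair that matches well, which keeps the corrected score from going negative.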

Then, the system calculates two relative similarities, as presented in Eqs. (3) and (4). In the presented example, as word SDPC and num SDPC are zero, the output of this step is word sim = word partial similarity = 1.0 and number sim = num partial similarity = 1.0.

$$\text{word sim} = \text{word partial similarity} - \text{word SDPC} \tag{3}$$

$$\text{number sim} = \text{num partial similarity} - \text{num SDPC} \tag{4}$$

To conclude, the proposed method combines word sim and number sim as presented in Eq. (5), penalizing sentences that contain different numerical tokens.

$$\text{final similarity} = \begin{cases} \dfrac{(n_{word} \times \text{word sim}) + (n_{number} \times \text{number sim})}{n_{word} + n_{number}} & \text{if number sim} = 1 \\[4pt] \text{word sim} - (1 - \text{number sim}) & \text{otherwise} \end{cases} \tag{5}$$

where n_word and n_number are the total numbers of words and numbers in the two sentences evaluated, respectively.

As number sim is 1.0 in this example, final similarity = ((n_word × word sim) + (n_number × number sim))/(n_word + n_number) = ((8 × 1.0) + (1 × 1.0))/(8 + 1), yielding final similarity = 1.0.
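Eqs. (3)–(5) can be combined into one function. This is a minimal sketch under two assumptions taken from the worked examples rather than stated as rules: the SDPC is subtracted from the partial similarity, and a pair with no numerical tokens keeps word sim as its final lexical score, as happens for E2.1 and E2.2 below. The function and parameter names are hypothetical.

```python
from typing import Optional


def final_lexical_similarity(word_ps: float, word_sdpc: float, n_word: int,
                             number_ps: Optional[float] = None,
                             number_sdpc: float = 0.0,
                             n_number: int = 0) -> float:
    """Combine word and number similarities following Eqs. (3)-(5)."""
    word_sim = word_ps - word_sdpc  # Eq. (3)
    if number_ps is None or n_number == 0:
        # No numerical tokens: the combination step is skipped.
        return word_sim
    number_sim = number_ps - number_sdpc  # Eq. (4)
    if number_sim == 1:
        # Eq. (5), first case: weighted by the numbers of words and numbers.
        return (n_word * word_sim + n_number * number_sim) / (n_word + n_number)
    # Eq. (5), second case: mismatching numbers are penalized directly.
    return word_sim - (1 - number_sim)


print(final_lexical_similarity(1.0, 0.0, 8, 1.0, 0.0, 1))  # 1.0 for E1.1/E1.2
print(final_lexical_similarity(0.9, 0.0, 8, 0.5, 0.0, 2))  # about 0.4 (0.9 - 0.5)
```

The second case makes a numerical mismatch costly: any number sim below 1 is subtracted in full from word sim, regardless of how many words the sentences share.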

To illustrate the entire process, the lexical similarity of the second pair of sentences in Fig. 1 is now calculated, that is, the similarity between E2.1 = {commerce, commission, have, be, significant, hurdle, deal} and E2.2 = {new, zealand, commerce, commission, have, give, westpac, indication, have, approve, deal}. Table 5 presents the initial similarity matrix. The first iteration selects row 1 and column 3 (commerce/commerce), the first of the five entries with similarity equal to 1.0. This row and column are then removed from the matrix, yielding the matrix in Table 6.

This process continues until no row or column is left. Here, since E2.1 (the rows) has fewer tokens than E2.2 (the columns), the loop ends when no rows remain. The output of the similarity algorithm is 0.54 (word partial similarity = 0.54). In this example, neither sentence contains numbers, so the numerical similarity is not computed.


Table 6
Second iteration matrix.

             new   zealand  commission  have  give  westpac  indication  have  approve  deal
commission   0.00  0.09     1.00        0.10  0.11  0.10     0.20        0.10  0.00     0.16
have         0.00  0.12     0.10        1.00  0.09  0.00     0.12        1.00  0.28     0.11
be           0.33  0.10     0.09        0.11  0.08  0.14     0.11        0.11  0.14     0.12
significant  0.09  0.18     0.18        0.09  0.18  0.09     0.27        0.09  0.00     0.09
hurdle       0.00  0.10     0.14        0.10  0.08  0.00     0.14        0.10  0.28     0.16
deal         0.25  0.10     0.16        0.11  0.11  0.28     0.16        0.11  0.00     1.00

The next step is to calculate the size difference penalization coefficient (Eq. (2)). As E2.2 has more tokens than E2.1, SDPC = (|n − m| × PS)/m, where n and m are the numbers of tokens in E2.1 and E2.2, respectively, and PS is the partial similarity obtained from the proposed algorithm (word partial similarity). So, SDPC = (|7 − 11| × 0.54)/11, thus SDPC = 0.19.

To conclude the process, word sim = word partial similarity − SDPC; in this case, word sim = 0.54 − 0.19 = 0.35. Note that these sentences (E2.1 and E2.2) contain no numbers, so the last step of the proposed method, combining the word and number similarities, is not executed. This pair of sentences was annotated with a similarity of 0.36, so the proposed approach yields a close estimate.
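The arithmetic of this second example can be checked in a few lines of Python, starting from the word partial similarity of 0.54 reported above (the matrix computation itself is not repeated here). Carried at full precision, SDPC is about 0.196 and word sim about 0.34; the 0.35 in the text follows from using the two-decimal value SDPC = 0.19.

```python
# Check of the E2.1/E2.2 lexical figures (values taken from the text).
n_e21, n_e22 = 7, 11             # tokens in E2.1 and E2.2
word_partial_similarity = 0.54   # output of Algorithm 2 reported in the text

# Eq. (2): both branches divide by the larger token count.
sdpc = abs(n_e21 - n_e22) * word_partial_similarity / max(n_e21, n_e22)
# Eq. (3): penalize the size difference.
word_sim = word_partial_similarity - sdpc

print(f"SDPC = {sdpc:.4f}, word sim = {word_sim:.4f}")
# SDPC = 0.1964, word sim = 0.3436
```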


3.2. The syntactic layer


The second layer is based on syntactic analysis. This section describes how the syntactic information of the sentences

is represented and how the assessment measure makes use of such information.

3.2.1. Syntactic representation

This layer receives the sequence of tokens generated in the lexical layer and converts them into a graph represented using RDF triples (W3C, 2004). This transformation follows these steps:

1. Syntactic analysis: In this step, relations such as subject, direct object and adverbial modifier, among others, are represented as usual. Relations involving prepositions and conjunctions are also extracted from the dependency tree produced by Stanford CoreNLP (Stanford NLP Group, 2014).

2. Graph creation: A directed graph is used to store the entities and their relations. The vertices are the elements obtained from the lexical layer, while the edges denote the relations extracted in the previous step.
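The graph-creation step can be pictured with a plain Python adjacency structure standing in for the RDF store. This is a sketch only: the paper keeps the triples in RDF (an RDF library could hold them instead), the triples below are the E1.1 dependencies of Table 7 with lemmatized vertices, and the set of relations stored in both directions (`BIDIRECTIONAL`) is a hypothetical choice based on the remark that such edges usually correspond to conjunctions.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (head vertex, relation edge, dependent vertex)

# Hypothetical: relations stored in both directions (conjunctions).
BIDIRECTIONAL = {"conj_and", "conj_or"}

# Dependency triples of E1.1 (Table 7).
E1_1: List[Triple] = [
    ("have", "neg", "not"),
    ("have", "nsubj", "ruffner"),
    ("have", "comp", "say"),
    ("have", "dobj", "attorney"),
    ("say", "nsubj", "authority"),
    ("attorney", "prep_in", "charge"),
    ("charge", "nn", "murder"),
]


def build_graph(triples: List[Triple]) -> Dict[str, List[Tuple[str, str]]]:
    """Directed graph: vertex -> list of (edge label, target vertex)."""
    graph: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for head, relation, dependent in triples:
        graph[head].append((relation, dependent))
        if relation in BIDIRECTIONAL:
            graph[dependent].append((relation, head))
    return dict(graph)


def vertices(triples: List[Triple]) -> Set[str]:
    """All vertices mentioned in the triples."""
    return {v for head, _, dep in triples for v in (head, dep)}


graph = build_graph(E1_1)
print(graph["have"])            # four outgoing edges from "have"
print(sorted(vertices(E1_1)))   # the eight words of E1.1 (number 45 excluded)
```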

Fig. 2 shows the syntactic layer for the example sentences. The edges usually have one direction, following the direction of the syntactic relations. This is not always the case, however: the representation also accommodates bidirectional edges, usually corresponding to conjunction relations. Note that all vertices in the example appear in the output of the previous layer.

The syntactic analysis step is important because it captures an order relation among the tokens of a sentence. It describes the possible or acceptable syntactic structures of the language and decomposes the text into syntactic units in order to “understand” how the syntactic elements are arranged in a sentence.

The RDF format was chosen to store the graph because:

1. It is a standard model for data interchange on the web;
2. It provides a simple and clean format;
3. Inferences are easily drawn from the RDF triples; and
4. There are several freely available tools to handle RDF.


[Figure 2 (image not reproduced): dependency graphs of E1.1, E1.2, E2.1 and E2.2; edge labels include nsubj, dobj, iobj, neg, nn, aux, amod, comp, prep_in and prep_for.]

Fig. 2. Syntactic layer processing of the example sentences. E1.1: Ruffner, 45, doesn’t yet have an attorney in the murder charge, authorities said.

E1.2: Ruffner, 45, does not have a lawyer on the murder charge, authorities said. E2.1: The Commerce Commission would have been a significant

hurdle for such a deal. E2.2: The New Zealand Commerce Commission had given Westpac no indication whether it would have approved its deal.


[Figure 3 (image not reproduced): triples (a1, u, a2) and (b1, v, b2). First step: Sim = 0.3 and 0.2, TotalSimilarity = 0.25. Second step: Sim = 0.4 and 0.3, TotalSimilarity = 0.35.]

Fig. 3. Example of syntactic similarity between triples, where Sim is the similarity between two tokens or two edges, TotalSimilarity is the total

similarity of one triple, u and v are edges and a1 , a2 , b1 and b2 are the tokens associated with the nodes of the graph.


3.2.2. Syntactic similarity

The syntactic similarity between sentences is measured on the syntactic layer by matching the vertices of the RDF triples.

The process is similar to the calculation of the lexical measure (Algorithm 2), except that this measure compares RDF triples instead of words. Two triples are compared in two steps, as presented in Fig. 3. At each step, two pairs of vertices are compared using the similarity between words, and the step value is the arithmetic mean of the two values. The overall syntactic similarity between the triples is the average of the values obtained in the two steps. In the example of Fig. 3, the final syntactic similarity is (0.25 + 0.35)/2 = 0.3.

Formally, let S1 = (V1, E, V2) and S2 = (V1′, E′, V2′) be two syntactic triples, where V1, E and V2 are, respectively, the first vertex, the edge and the second vertex of triple S1, and V1′, E′ and V2′ are the same for triple S2. Eqs. (6)–(8) show how the syntactic similarity is measured.

$$\text{syntactic similarity} = \frac{T_1 + T_2}{2} \tag{6}$$

$$T_1 = \frac{Sim(V_1, V_1') + Sim(V_2, V_2')}{2} \tag{7}$$

$$T_2 = \frac{Sim(V_1, V_2') + Sim(V_1', V_2)}{2} \tag{8}$$
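Eqs. (6)–(8) can be sketched as follows, with the word similarity passed in as a function. Everything concrete here is illustrative: `toy_sim` is a hypothetical lookup table, and assigning the Fig. 3 values 0.3 and 0.2 to the aligned vertex pairs and 0.4 and 0.3 to the crossed pairs is an assumed reading of the figure.

```python
from typing import Callable, Dict, FrozenSet, Tuple

Triple = Tuple[str, str, str]  # (V1, E, V2)


def syntactic_triple_similarity(s1: Triple, s2: Triple,
                                sim: Callable[[str, str], float]) -> float:
    """Eqs. (6)-(8): mean of the aligned and the crossed vertex comparisons.

    Edges are not compared by these equations, so they are ignored here.
    """
    v1, _edge1, v2 = s1
    w1, _edge2, w2 = s2                      # V1', E', V2'
    t1 = (sim(v1, w1) + sim(v2, w2)) / 2     # Eq. (7)
    t2 = (sim(v1, w2) + sim(w1, v2)) / 2     # Eq. (8)
    return (t1 + t2) / 2                     # Eq. (6)


# Hypothetical word similarities reproducing the numbers of Fig. 3.
TOY: Dict[FrozenSet[str], float] = {
    frozenset(("a1", "b1")): 0.3, frozenset(("a2", "b2")): 0.2,
    frozenset(("a1", "b2")): 0.4, frozenset(("a2", "b1")): 0.3,
}


def toy_sim(x: str, y: str) -> float:
    return 1.0 if x == y else TOY.get(frozenset((x, y)), 0.0)


print(syntactic_triple_similarity(("a1", "u", "a2"), ("b1", "v", "b2"), toy_sim))
# about 0.3: (0.25 + 0.35) / 2
```

Averaging the aligned and crossed comparisons makes the score symmetric in its two arguments, and it still gives partial credit when the same words appear with their head and dependent roles swapped.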

This RDF comparison replaces the token similarity in lines 2 and 3 of Algorithm 1 and, consequently, line 6 of Algorithm 2. Table 7 shows the triples of example 1 (E1.1 and E1.2) and example 2 (E2.1 and E2.2), and Tables 8 and 9 present the initial similarity matrices between triples. These matrices go through the same process described for the lexical similarity until a final result is reached. In the proposed examples, the syntactic similarities of example 1 and example 2 are 1.0 and 0.31, respectively.


3.3. The semantic layer


The last layer proposed is based on semantic analysis. This section details the semantic layer.

3.3.1. Semantic representation

This layer enriches the RDF graph with entity roles and sense identification. It takes as input the sequence of groups of tokens extracted in the lexical layer and applies Semantic Role Annotation (SRA) to define the role of each entity and to identify its “meaning” in the sentence.

The semantic layer uses SRA to perform two different operations:


Table 7
Syntactic triples for example 1 (E1.1 and E1.2) and example 2 (E2.1 and E2.2).

ID      Triple                        ID      Triple
E1.1                                  E1.2
T1.1a   have-neg-not                  T1.2a   have-neg-not
T1.1b   have-nsubj-ruffner            T1.2b   have-nsubj-ruffner
T1.1c   have-comp-say                 T1.2c   have-comp-say
T1.1d   have-dobj-attorney            T1.2d   have-dobj-lawyer
T1.1e   say-nsubj-authority           T1.2e   say-nsubj-authority
T1.1f   attorney-prep_in-charge       T1.2f   lawyer-prep_in-charge
T1.1g   charge-nn-murder              T1.2g   charge-nn-murder
E2.1                                  E2.2
T2.1a   commission-nn-commerce        T2.2a   commission-nn-new
T2.1b   be-nsubj-commission           T2.2b   commission-nn-commerce
T2.1c   be-aux-have                   T2.2c   commission-nn-zealand
T2.1d   be-dobj-hurdle                T2.2d   give-nsubj-commission
T2.1e   hurdle-amod-significant       T2.2e   give-iobj-westpac
T2.1f   significant-prep_for-deal     T2.2f   give-aux-have
                                      T2.2g   give-dobj-indication
                                      T2.2h   indication-comp-approve
                                      T2.2i   approve-dobj-deal
                                      T2.2j   approve-aux-have

Table 8
Initial syntactic matrix example 1.

        T1.2a  T1.2b  T1.2c  T1.2d  T1.2e  T1.2f  T1.2g
T1.1a   1.00   0.35   0.33   0.33   0.07   0.10   0.09
T1.1b   0.35   1.00   0.33   0.33   0.07   0.10   0.06
T1.1c   0.33   0.33   1.00   0.33   0.05   0.06   0.02
T1.1d   0.33   0.33   0.33   1.00   0.05   0.06   0.02
T1.1e   0.07   0.07   0.05   0.05   1.00   0.071  0.05
T1.1f   0.10   0.10   0.06   0.06   0.07   1.00   0.07
T1.1g   0.09   0.06   0.02   0.02   0.05   0.07   1.00

1. Sense identification: Sense identification is of paramount importance to this type of representation, since different words, particularly verbs, may denote the same meaning. For instance, “contribute”, “donate”, “endow”, “give” and “pass” are words that can be associated with the sense of “giving”.

2. Role annotation: Unlike the syntactic layer, role annotation identifies the semantic function of each entity. For instance, in example E2.2, the word deal is seen not only syntactically, as the nucleus of the direct object its deal, but also as the action of the frame evoked by approved.

This layer deals with the problem of meaning using the output of the sense identification step, which identifies the general meaning of the main entities of a sentence, not only the written words. The role annotation, in turn, extracts discourse information, since it exposes the order of the actions, the actors, etc., thereby addressing the word order problem. Such information is relevant in extraction and summarization tasks, for instance.

The creation of the semantic layer benefited from using FrameNet (Fillmore et al., 2003) and Semafor (Das et al., 2010) to perform sense identification and role annotation. FrameNet is a database of semantic frames, each describing a type of event, relation or entity and its participants. In other words, it makes explicit the semantic relations required in this layer. The proposed approach applies Semafor to process the sentences and obtain their semantic information from FrameNet.

Fig. 4 presents the semantic layer for the example sentences. Two different types of relations are identified in Fig. 4: sense relations, e.g. the triple authority-sense-leader, and role annotation relations, e.g. say-speaker-authority and say-message-have. The semantic layer uses an RDF graph representation, as does the syntactic layer.


Table 9
Initial syntactic matrix example 2.

        T2.2a  T2.2b  T2.2c  T2.2d  T2.2e  T2.2f  T2.2g  T2.2h  T2.2i  T2.2j
T2.1a   0.04   0.04   0.04   0.04   0.00   0.00   0.02   0.02   0.02   0.02
T2.1b   0.69   1.00   0.66   0.06   0.03   0.11   0.09   0.06   0.03   0.08
T2.1c   0.06   0.07   0.03   0.03   0.03   0.38   0.40   0.06   0.02   0.07
T2.1d   0.07   0.06   0.03   0.03   0.66   0.03   0.06   0.69   0.02   0.06
T2.1e   0.06   0.07   0.03   0.03   0.03   0.05   0.09   0.06   0.02   0.36
T2.1f   0.03   0.11   0.00   0.00   0.03   0.33   0.05   0.14   0.00   0.05

3.3.2. Semantic similarity

The last sentence similarity measure takes semantic information into account. It works analogously to the lexical measure (Algorithm 2) and the syntactic measure, but it uses a different layer representation and a different way of comparing the RDF triples: the semantic measure takes the pairs (vertex, edge) as the basic unit of comparison. Analysis of the graphs generated by the semantic layer showed that the (vertex, edge) pair conveys relevant information about the degree of similarity between sentences. For instance, the sense edges introduced in Section 3.3.1 connect a token of the sentence with its meaning, so it is important to measure whether two sentences contain related tokens and meanings. The calculation of the semantic similarity is illustrated in Fig. 5.

The semantic similarity is measured by a process similar to the syntactic one, in which the (vertex, edge) comparison of Fig. 5 replaces the triple comparison of Fig. 3. In other words, let S1 = (V1, E, V2) and S2 = (V1′, E′, V2′) be two semantic triples, where V1, E and V2 are, respectively, the first vertex, the edge and the second vertex of triple S1, and V1′, E′ and V2′ are the same for triple S2. Eq. (9) shows how the semantic similarity is measured.

$$\text{semantic similarity} = \frac{Sim(V_1, V_1') + Sim(E, E')}{2} \tag{9}$$
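Eq. (9) reduces to a one-line function. This is a sketch: as in the syntactic case, `sim` is a caller-supplied word and edge similarity, and the toy values only mirror the first comparison of Fig. 5 (0.3 and 0.5, giving 0.4), with the assignment of those two values to the vertex and the edge assumed.

```python
from typing import Callable, Tuple

Triple = Tuple[str, str, str]  # (V1, E, V2)


def semantic_pair_similarity(s1: Triple, s2: Triple,
                             sim: Callable[[str, str], float]) -> float:
    """Eq. (9): compare the (vertex, edge) pairs (V1, E) and (V1', E')."""
    v1, e1, _ = s1
    w1, f1, _ = s2
    return (sim(v1, w1) + sim(e1, f1)) / 2


def toy_sim(x: str, y: str) -> float:
    # Hypothetical similarities: 0.3 between the vertices, 0.5 between the edges.
    table = {frozenset(("a1", "b1")): 0.3, frozenset(("u", "v")): 0.5}
    return 1.0 if x == y else table.get(frozenset((x, y)), 0.0)


print(semantic_pair_similarity(("a1", "u", "a2"), ("b1", "v", "b2"), toy_sim))  # about 0.4
print(semantic_pair_similarity(("have", "owner", "ruffner"),
                               ("have", "owner", "ruffner"), toy_sim))  # 1.0
```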

Table 10 shows the semantic triples of example 1 (E1.1 and E1.2) and example 2 (E2.1 and E2.2), and Tables 11 and 12 present the initial similarity matrices between triples. In the proposed examples, the similarities are 1.0 for sentences E1.1 and E1.2, and 0.32 for E2.1 and E2.2.

Note that this paper does not intend to make an intrinsic assessment of the mapping provided by FrameNet; instead, the mapping is evaluated extrinsically through the sentence similarity task. The results shown in Section 4 are promising.


3.4. Combining the measures


Each sentence representation conveys a different view of the sentences analyzed, so each similarity measure yields a value for one of these perspectives. The three similarity measures must be combined to obtain a global view of the degree of similarity between the sentences under analysis. Formula (10) presents the combination of the lexical, syntactic and semantic measures adopted here to provide the overall measure of sentence similarity.

$$\text{similarity}(S_1, S_2) = \frac{(lex_n \times lex_s) + (syn_n \times syn_s) + (sem_n \times sem_s)}{lex_n + syn_n + sem_n} \tag{10}$$

where lex_n is the total number of words in the lexical layer of both sentences, and syn_n and sem_n are the total numbers of triples in the syntactic and semantic RDF graphs of both sentences, respectively; lex_s, syn_s and sem_s are the similarity values obtained with the lexical, syntactic and semantic layers, respectively. Note that the numerical tokens are not taken into account in lex_n.
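Formula (10) is a count-weighted mean of the three layer scores. The sketch below (function name hypothetical) plugs in the counts and scores used in Formulas (11) and (12).

```python
def combined_similarity(lex_n: int, lex_s: float,
                        syn_n: int, syn_s: float,
                        sem_n: int, sem_s: float) -> float:
    """Formula (10): layer similarities weighted by their word/triple counts."""
    return (lex_n * lex_s + syn_n * syn_s + sem_n * sem_s) / (lex_n + syn_n + sem_n)


# Counts: words (numbers excluded), syntactic triples and semantic triples,
# each summed over both sentences of the pair.
e1 = combined_similarity(16, 1.0, 14, 1.0, 18, 1.0)
e2 = combined_similarity(18, 0.36, 16, 0.31, 20, 0.32)
print(round(e1, 2), round(e2, 2))  # 1.0 0.33
```

Because the weights are counts, the layer that produces the most units for a pair (here the semantic one) pulls the combined score toward its own value.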


[Figure 4 (image not reproduced): semantic graphs of E1.1, E1.2, E2.1 and E2.2; edges include sense relations (e.g. authority-sense-leader, murder-sense-killing) and role relations (e.g. say-speaker-authority, have-owner-ruffner, give-donor-commission).]

Fig. 4. Semantic layer processing of the example sentences. E1.1: Ruffner, 45, doesn’t yet have an attorney in the murder charge, authorities said.

E1.2: Ruffner, 45, does not have a lawyer on the murder charge, authorities said. E2.1: The Commerce Commission would have been a significant

hurdle for such a deal. E2.2: The New Zealand Commerce Commission had given Westpac no indication whether it would have approved its deal.


Formula (11) presents the values for the first example, sentences E1.1 and E1.2, whose similarity is 1.0. Formula (12) yields the similarity between E2.1 and E2.2, which is 0.33. Since the similarities annotated in the SemEval dataset are 1.0 for the pair E1.1 and E1.2 and 0.36 for the pair E2.1 and E2.2, the proposed system achieves a good approximation.

$$\text{similarity}(E1.1, E1.2) = \frac{(16 \times 1.0) + (14 \times 1.0) + (18 \times 1.0)}{16 + 14 + 18} = 1.0 \tag{11}$$


[Figure 5 (image not reproduced): triples (a1, u, a2) and (b1, v, b2). First comparison: Sim = 0.3 and 0.5, TotalSimilarity = 0.4. Second comparison: Sim = 0.5 and 0.2, TotalSimilarity = 0.35.]

Fig. 5. Example of similarity between pairs (vertex, edge), where Sim is the similarity between two tokens or two edges, TotalSimilarity is the total

similarity of one triple, u and v are edges and a1 , a2 , b1 and b2 are the tokens associated with the nodes of the graph.

Table 10
Semantic triples for example 1 (E1.1 and E1.2) and example 2 (E2.1 and E2.2).

ID      Triple                            ID      Triple
E1.1                                      E1.2
T1.1a   say-speaker-authority             T1.2a   say-speaker-authority
T1.1b   say-message-have                  T1.2b   say-message-have
T1.1c   authority-sense-leader            T1.2c   authority-sense-leader
T1.1d   have-sense-possession             T1.2d   have-sense-possession
T1.1e   have-owner-ruffner                T1.2e   have-owner-ruffner
T1.1f   have-possession-attorney          T1.2f   have-possession-lawyer
T1.1g   have-possession-murder            T1.2g   have-possession-murder
T1.1h   have-possession-charge            T1.2h   have-possession-charge
T1.1i   murder-sense-killing              T1.2i   murder-sense-killing
E2.1                                      E2.2
T2.1a   commission-sense-hiring           T2.2a   commission-sense-hiring
T2.1b   commission-employee-commerce      T2.2b   commission-employee-commerce
T2.1c   significant-factor-commission     T2.2c   commission-employee-new
T2.1d   hurdle-total-commission           T2.2d   commission-employee-zealand
T2.1e   hurdle-total-significant          T2.2e   give-donor-commission
T2.1f   hurdle-part-deal                  T2.2f   give-recipient-westpac
T2.1g   deal-sense-agreement              T2.2g   give-theme-approve
                                          T2.2h   give-theme-indication
                                          T2.2i   give-theme-deal
                                          T2.2j   approve-action-deal
                                          T2.2l   indication-indicated-deal
                                          T2.2m   indication-indicated-approve
                                          T2.2n   approve-sense-getPermission

$$\text{similarity}(E2.1, E2.2) = \frac{(18 \times 0.36) + (16 \times 0.31) + (20 \times 0.32)}{18 + 16 + 20} = 0.33 \tag{12}$$

The proposed combination outperformed the five different machine learning algorithms tested (details are provided in Section 4.2).

With respect to its relational properties, the proposed measure is:

• Reflexive: every sentence S is maximally similar to itself, that is, similarity(S, S) = 1 for every sentence S.


Table 11
Initial semantic matrix example 1.

        T1.2a  T1.2b  T1.2c  T1.2d  T1.2e  T1.2f  T1.2g  T1.2h  T1.2i
T1.1a   1.00   0.69   0.70   0.37   0.49   0.10   0.21   0.15   0.11
T1.1b   0.69   1.00   0.68   0.37   0.41   0.17   0.17   0.11   0.08
T1.1c   0.70   0.68   1.00   0.37   0.41   0.09   0.22   0.16   0.12
T1.1d   0.37   0.37   0.37   1.00   0.36   0.05   0.14   0.06   0.09
T1.1e   0.49   0.41   0.41   0.36   1.00   0.39   0.48   0.18   0.11
T1.1f   0.10   0.17   0.09   0.05   0.39   1.00   0.37   0.15   0.07
T1.1g   0.21   0.17   0.22   0.14   0.48   0.37   1.00   0.25   0.17
T1.1h   0.15   0.11   0.16   0.06   0.18   0.15   0.25   1.00   0.47
T1.1i   0.11   0.08   0.12   0.09   0.11   0.07   0.17   0.47   1.00

Table 12
Initial semantic matrix example 2.

        T2.2a  T2.2b  T2.2c  T2.2d  T2.2e  T2.2f  T2.2g  T2.2h  T2.2i  T2.2j  T2.2l  T2.2m  T2.2n
T2.1a   0.66   0.66   1.00   0.36   0.06   0.17   0.03   0.15   0.14   0.10   0.18   0.07   0.12
T2.1b   0.36   0.36   0.36   1.00   0.06   0.06   0.33   0.04   0.06   0.06   0.12   0.12   0.12
T2.1c   0.05   0.05   0.10   0.03   0.00   0.05   0.03   0.09   0.38   0.05   0.12   0.06   0.13
T2.1d   0.08   0.08   0.14   0.38   0.05   0.22   0.33   0.20   0.14   0.06   0.28   0.12   0.20
T2.1e   0.09   0.09   0.20   0.09   0.04   0.38   0.04   0.41   0.12   0.07   0.47   0.13   0.19
T2.1f   0.09   0.09   0.09   0.08   0.04   0.04   0.03   0.05   0.06   0.07   0.09   0.09   0.09
T2.1g   0.09   0.09   0.14   0.08   0.04   0.10   0.03   0.11   0.40   0.07   0.15   0.09   0.16

• Symmetric: for all sentences X and Y, similarity(X, Y) = similarity(Y, X).

4. Experimental results


The proposed similarity measure was evaluated in three different contexts. The first evaluation used an adaptation of the benchmark dataset by Li et al. (2006). The second used the dataset provided by the SemEval Semantic Textual Similarity competition organized in 2012 (Agirre et al., 2012). Finally, the proposed measure was evaluated on a summarization dataset, the CNN corpus developed by Lins et al. (2012), to measure the similarity between sentences in extractive summaries. The following sections describe each of these experiments.


4.1. The dataset by Li and collaborators


This experiment compares the performance of the proposed measure with state-of-the-art methods in the area. The dataset originally contained 65 sentence pairs, created by replacing the 65 noun pairs of Rubenstein and Goodenough (1965) with the definitions of the nouns in the Collins Cobuild dictionary. Li et al. (2006) annotated each sentence pair in this dataset with the average of the similarity scores given by 32 human judges. However, only 30 of those 65 pairs were considered relevant for similarity assessment purposes, and this subset is used here for comparison.

Pearson’s correlation coefficient (r) and Spearman’s rank correlation coefficient (ρ) are used to evaluate the proposed similarity measure. r measures the strength and direction of the linear relationship between two variables; here, between the similarity scores given by the human judges and those obtained with the proposed measure. ρ computes the correlation between the ranks of two variables. In this experiment, the sentence pairs are ranked from the highest to the lowest similarity.
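A minimal sketch of both coefficients follows. Spearman’s ρ is computed as Pearson’s r applied to the ranks, with tied scores receiving the average of their ranks; this tie handling is a common convention and an assumption here, since the text does not state how ties were treated. The scores in the usage lines are hypothetical and are not taken from the Li et al. (2006) dataset. In practice, `scipy.stats.pearsonr` and `scipy.stats.spearmanr` compute the same quantities.

```python
import math


def pearson(xs, ys):
    """Pearson's r: covariance of xs and ys divided by the product of their spreads."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def average_ranks(values):
    """Rank values from highest (rank 1) to lowest; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman's rho: Pearson's r computed on the ranks of xs and ys."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Hypothetical scores for five sentence pairs (human judgments vs. a measure).
human = [1.0, 2.0, 3.0, 4.0, 5.0]
system = [0.1, 0.3, 0.2, 0.5, 0.9]
print(round(pearson(human, system), 2), round(spearman(human, system), 2))  # 0.9 0.9
```

Because ρ depends only on the ordering of the scores, a measure can obtain a high ρ even when its scores are not linearly related to the human ones; reporting both coefficients, as done here, captures both aspects.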


Table 13

Pearson’s (r) and Spearman’s (ρ) coefficients of the sentence similarities given by the proposed measures.

| Measure | r | ρ |
|---|---|---|
| Path-Lexical | 0.87 | 0.90 |
| Lin-Lexical | 0.87 | 0.86 |
| LC-Lexical | 0.79 | 0.78 |
| Res-Lexical | 0.85 | 0.87 |
| WP-Lexical | 0.76 | 0.65 |
| Path-Syntactic | 0.66 | 0.62 |
| Lin-Syntactic | 0.75 | 0.68 |
| LC-Syntactic | 0.65 | 0.53 |
| Res-Syntactic | 0.70 | 0.53 |
| WP-Syntactic | 0.52 | 0.34 |
| Path-Semantic | 0.76 | 0.75 |
| Lin-Semantic | 0.76 | 0.72 |
| LC-Semantic | 0.71 | 0.68 |
| Res-Semantic | 0.78 | 0.71 |
| WP-Semantic | 0.62 | 0.58 |

Table 14

Pearson’s (r) and Spearman’s (ρ) coefficients of the combined sentence similarities.

| Measure | r | ρ |
|---|---|---|
| Path-Combination | 0.92 | 0.94 |
| Lin-Combination | 0.88 | 0.89 |
| LC-Combination | 0.86 | 0.89 |
| Res-Combination | 0.86 | 0.89 |
| WP-Combination | 0.82 | 0.86 |

Table 13 presents the results of each proposed measure in terms of Pearson’s and Spearman’s coefficients. Each measure is named after the pair (word similarity measure, sentence representation layer); for example, Path-Lexical combines the Path word similarity with the lexical layer.

As one may observe, the measures based on the lexical layer provided the best results. This indicates that the preprocessing applied to the lexical layer when combining word similarities (see Section 3.1.2) improves the accuracy of the lexical similarity measure. Among the measures in Table 13, the best result is obtained by combining the Path word similarity with the lexical layer (Path-Lexical), which achieves r = 0.87 and ρ = 0.90.

A second conclusion concerns the measures of similarity between words (Section 3.1.2). When the system uses the Path, Lin and Resnik similarities, it achieves better results than with the other word similarity measures (see Table 13). This behavior corroborates the results reported by Ferreira et al. (2014) and Oliva et al. (2011). This happens because