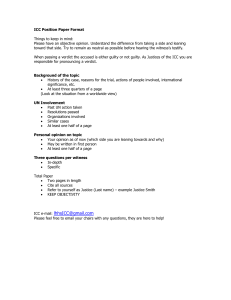

A53 core optimization to achieve performances

SNUG 2015

Calogero TIMINERI
Julien BUVAT
Julien GUILLEMAIN
Sebastien PEURICHARD

STMicroelectronics
12 rue Jules Horowitz, B.P. 217
38019 Cedex Grenoble, France
www.st.com

ABSTRACT
To achieve the best PPA (performance, power and area) on ARM A53 cores, the GPU/CPU team at STMicroelectronics has developed a dedicated physical implementation flow based on IC Compiler (ICC). The purpose of this article is to share various techniques used by designers to meet the performance target all along the ICC flow. We describe these techniques and their impact on the Quality of Results (QoR): timing, leakage, routability and DFM score.

Table of Contents
1. Introduction
2. CPU cores implementation challenges
3. Implementation Flow Presentation
4. Timing calibration throughout implementation flow
4.1. Purpose of calibration
4.1.1. Project Schedule
4.1.2. PPA monitored with a dashboard
4.1.3. Two types of miscorrelation can be addressed by calibration
4.2. DCG vs ICC: setting alignment
4.3. Pre-cts vs post-cts correlation
4.3.1. A budget for each clock and every PVT (Process, Voltage, Temperature)
4.3.2. Clocktree exceptions
4.3.3. Empirical calibration
4.4. Pre-route vs post-route on data path
4.5. ICC vs PrimeTime correlation
4.5.1. Crosstalk effect
4.5.2. Delay calculation in ICC and PrimeTime using Graph Based Analysis and Path Based Analysis
5. DRC and physical convergence
5.1. Dealing with design related congestion
5.2. Resolving DRC problems under power stripes with the PNET feature
5.2.1. Power grid structure and routing resources
5.2.2. Synopsys pnet feature
5.3. Results
5.4. DRC convergence with signoff
6. Conclusions
7. References

Table of Figures
Figure 1: CPU core floorplan
Figure 2: Top-down implementation methodology [2]
Figure 3: Vt usage KPI
Figure 4: Slack distribution histogram. Moving margins have a big impact on violation count and TNS
Figure 5: C sensitive path (yellow), R sensitive path (orange)
Figure 6: Congestion prone hierarchy (red) and DRC (white)
Figure 7: Congestion map without partial density blockage on congestion prone hierarchy
Figure 8: Congestion map with partial density blockage on congestion prone hierarchy
Figure 9: Layer structure from IA/IB tapping down to M1 with via stack
Figure 10: Power grid structure
Figure 11: Routed signals DRC under M3 power stripes
Figure 12: Via enclosure height is 0.095um in ICC
Figure 13: Built in ICV flow [1]
Table of Tables
Table 1: Example of area variations highlighting the need for calibration
Table 2: Example of timing difference between DCG and ICC prectsopt: path delay contributions
Table 3: Timing results throughout the implementation steps

1. Introduction
ARM-based CPU subsystems have become a major industry standard. They fit in a wide variety of consumer products whose requirements follow the consumer market: the right feature with a short time to market. One of our main challenges is to design various differentiated cores within an aggressive schedule. The CPU/GPU team has developed adaptive methodologies that allow fast convergence to signoff.

CPU core specifications and expected performance challenge the technology as well as the entire design flow from RTL to GDS. All design stages, including physical implementation, are stressed to extract the best performance. Not only must CPU cores run at high frequency, they also have to operate over a wide range of conditions. This implies that functionality must be guaranteed with multiple voltage supplies and in various functional modes. Furthermore, area is shrunk and density pushed up to get the most cost-efficient product. Static and dynamic power are implementation constraints too, as they are key parameters when assessing CPU performance.

Advanced technology nodes, like STMicroelectronics 28nm FDSOI technology, are increasingly constraining in terms of design rules. Some rules are mandatory (checked by Design Rule Checks, DRC); others are good practices that help manufacturing and improve yield, sometimes known as lithography rules.
These rules need to be checked during implementation in order to minimise signoff loops and to avoid manual editing of the physical database.

2. CPU cores implementation challenges
Project schedules call for methodologies that shorten the design phase. One way to do so is to improve turnaround time, for instance by reducing signoff loops as much as possible. Minimising the QoR gap between steps helps the implementation tool converge faster and reduces runtime. Typically, we look at reducing timing deviation as well as area or leakage divergence.

Power, performance and area are part of the CPU specification. It is essential to know as early as possible whether these targets can be achieved, in order to commit on feasibility. With frequency targets above one gigahertz, margins are slim: at 1 GHz the clock period is 1000 ps, so a 50 ps miscorrelation costs about 5% of CPU performance. A small timing miscorrelation may also severely impact the usage of low-leakage cells. CPU constraints have to be set accurately to achieve the expected performance within the static power budget.

Density and area have to be tightly controlled, given the usual buffering of the clocktree and hold fixing. Increasing the area may improve routability, but on the other hand it may also increase dynamic power. Timing, area and density budgets have to be set accurately, as they are a trade-off between all the key indicators of a CPU.

Main features of the CPU cores we implement:
- Area < 1.1 mm²
- Frequency > 1.4 GHz
- > 600k instances
- 60k registers
- 29 memory cuts
- 200 scenarios
- Aggressive dynamic and static power targets
- Large voltage range
- 28nm FDSOI, 10 metal layers (10ML)

Figure 1: CPU core floorplan

3. Implementation Flow Presentation
CPU cores are generally not stand-alone IPs. Their implementation has to be set in the more global context of a CPU subsystem. Figure 2 shows the top-down methodology for subsystem design, implementation and signoff.
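Going back briefly to the 50 ps figure quoted in Section 2, the arithmetic can be checked with a few lines. This is a minimal sketch under one stated assumption: CPU performance is taken to scale with the achievable clock frequency, and a miscorrelation is modelled as a fixed lengthening of the critical path. The function names are illustrative only.

```python
# Back-of-the-envelope model (assumption): CPU performance scales with the
# achievable clock frequency, and a miscorrelation simply lengthens the
# critical path by a fixed number of picoseconds.


def achievable_ghz(target_ghz: float, miscorrelation_ps: float) -> float:
    """Frequency reachable if the critical path is miscorrelation_ps longer."""
    period_ps = 1000.0 / target_ghz  # 1 GHz -> 1000 ps
    return 1000.0 / (period_ps + miscorrelation_ps)


def performance_loss(target_ghz: float, miscorrelation_ps: float) -> float:
    """Relative frequency loss, e.g. 0.05 means 5%."""
    return 1.0 - achievable_ghz(target_ghz, miscorrelation_ps) / target_ghz


for target in (1.0, 1.4):
    loss = performance_loss(target, 50.0)
    print(f"{target:.1f} GHz target, 50 ps miscorrelation -> {loss:.1%} loss")
```

The loss equals delta / (period + delta): roughly 4.8% at 1 GHz, and about 6.5% at the 1.4 GHz target of our cores, since the same 50 ps is a larger fraction of a shorter period.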
Cores inherit all their inputs from budgeting and partitioning: timing constraints, UPF and floorplan. Cores go through the full physical implementation and signoff loop until timing is met and physical checks pass. Top and blocks are then put together for a flat signoff and further ECO on the top partition.

Figure 2: Top-down implementation methodology [2] (stages shown: RTL assembly and UPF, flat synthesis, netlist/UPF handoff, design planning with partitioning and budgeting, top-hierarchy and blocks implementation including the CPU, PT-ECO, top-hierarchy and blocks signoff, top flat signoff)

4. Timing calibration throughout implementation flow

4.1. Purpose of calibration
In the early phases of implementation, some margin is kept to account for model inaccuracy. It generally applies to the entire design as extra conservatism, and it is relaxed in later steps as the design matures. Too much conservatism results in over-design, or in a failure to converge as the tools strive to meet all constraints. On the other hand, too little conservatism makes the job easier for the tool, at the risk of not meeting the target performance. Calibration is all about estimating these margins as precisely as possible.

To guarantee a smooth transition between implementation steps, all parameters causing divergence must be identified, whether they are tool settings, constraints, placement, clocktree or routing options. Key parameters are kept under control: the QoR at the end of one step must match the QoR at the start of the next one. Any deviation can reveal a miscorrelation or "mis-calibration" and needs to be understood. For instance, an area increase may cause density issues and, further on, congestion. The main indicators under close monitoring are runtime and PPA: performance (timing), power and area.
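The rule that the QoR at the end of one step must match the QoR at the start of the next lends itself to an automatic check. Below is a minimal sketch, assuming the QoR of each step has already been extracted into a dictionary; the metric names, tolerances and numbers are hypothetical and are not the values used in our flow.

```python
# Hypothetical metric names and tolerances, for illustration only.
TOLERANCES = {"wns_ns": 0.010, "area_um2_pct": 1.0, "leakage_uw_pct": 2.0}


def handoff_deviations(end_qor: dict, start_qor: dict, tol: dict = TOLERANCES) -> dict:
    """Compare QoR at the end of one step with QoR at the start of the next.

    WNS is compared as an absolute difference in ns; area and leakage as a
    percentage change. Only metrics outside tolerance are returned.
    """
    issues = {}
    d_wns = abs(start_qor["wns_ns"] - end_qor["wns_ns"])
    if d_wns > tol["wns_ns"]:
        issues["wns_ns"] = d_wns
    for metric in ("area_um2", "leakage_uw"):
        pct = 100.0 * (start_qor[metric] - end_qor[metric]) / end_qor[metric]
        if abs(pct) > tol[metric + "_pct"]:
            issues[metric] = pct
    return issues


# Example: end of synthesis vs start of placement (made-up numbers).
end_of_synthesis = {"wns_ns": -0.020, "area_um2": 500000.0, "leakage_uw": 800.0}
start_of_place = {"wns_ns": -0.060, "area_um2": 502000.0, "leakage_uw": 840.0}
print(handoff_deviations(end_of_synthesis, start_of_place))
```

Here the 0.4% area change stays within tolerance, while the 40 ps WNS jump and the 5% leakage increase are flagged as handoff deviations to be understood.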
Step                  Combinational area increase at this step
prePlace              0.00%
prectsOpt             14.25%
prectsOptIncr         0.00%
clockTreeSynthesis    0.77%
postctsOpt            5.37%
route                 2.25%
postrouteOpt          0.99%
Table 1: Example of area variations highlighting the need for calibration

Weight on timing path     Incremental synthesis    prectsopt
Slack (ns)                0.084                    0.0202
Clock                     (0.0532), 8%             (0.0132), 2%
CP to Q                   9%                       10%
Setup time                4%                       11%
Data                      67%                      74%
Sum of the above          88%                      97%
Table 2: Example of timing difference between DCG and ICC prectsopt: path delay contributions

4.1.1. Project Schedule
Calibration guarantees that no step significantly degrades the QoR, so most design weaknesses are spotted as early as possible. Considering that a full CPU core physical implementation takes around five days, better predictability allows us to shorten the design phase and to commit on Performance, Power and Area (PPA) very early. A major benefit of calibration is productivity improvement.

4.1.2. PPA monitored with a dashboard
QoR at all steps, from synthesis to post-signoff, is tracked in a dashboard. It allows us to monitor key parameters through each implementation step, to get a timing summary for each scenario, and to quickly compare two implementations on all major indicators. The examples below show Vt cell usage, which gives an indication of leakage (Figure 3), and the timing summary along the implementation steps (Table 3). Other examples illustrate monitoring of the area (Table 1) and of the path delay contributors (Table 2).

Figure 3: Vt usage KPI (usage of VT1 to VT4 cells, with their relative speed and leakage impact)

Step                  TNS         WNS
prePlace              0           0
prectsOpt             -0.656      -0.02
prectsOptIncr         -0.656      -0.02
clockTreeSynthesis    -179.115    -0.141
postctsOpt            -4.036      -0.033
route                 -272.789    -0.166
postrouteOpt          -0.462      -0.015
Table 3: Timing results throughout the implementation steps

4.1.3. Two types of miscorrelation can be addressed by calibration
Since timing models and timing engines differ between DC-SPG, ICC and PrimeTime, the first type of miscorrelation appears whenever the database is handed over from one tool to another, and correlation is needed at each handover. The second type appears within the physical implementation tool itself: timing models are updated and become more and more precise as the design goes through the steps. We shall see how calibration has been done between pre- and post-cts, and between pre- and post-route.

4.2. DCG vs ICC: setting alignment
In the CPU/GPU team's methodology, synthesis is physically aware, using DCG. The database is passed to ICC in ASCII format, as DEF and Verilog. The DDC format contains design and optimisation directives which may not all be under control; hence we have abandoned the use of DDC. To be in full control of the settings, we have chosen to explicitly align all variables in both tools. We particularly cared about settings impacting net delays: scaling factors and via resistance. Other necessary means for a good correlation are the use of the same technokit, libraries, timing constraints, design attributes (among them dont_use and ideal_nets) and tool variables.

4.3. Pre-cts vs post-cts correlation
The timing margin on a path depends on the data delay as well as on the clock delay. In pre-cts steps, clock latencies and their variations must be modelled as accurately as possible, as they impact all paths to sequential elements. The clock is budgeted by means of source or internal latencies and uncertainties. These are global constraints applied to the entire clock fanout. Therefore, a small variation in the clock timing model may affect many timing paths in the design and change the global timing results (Figure 4). Increasing the uncertainty is equivalent to shifting the curve to the left: violation count and TNS (red area) grow significantly with a shift of only a few ps.

Figure 4: Slack distribution histogram. Moving margins have a big impact on violation count and TNS

4.3.1. A budget for each clock and every PVT (Process, Voltage, Temperature)
Pre-cts clock timing models account for the period and the latency, with variations induced by PLL jitter, OCV derating and skew. Each of these components can be optimised individually for each clock. For instance, OCV derating depends on the depth of the most divergent branches, which requires good knowledge of what the clocktree structure and its latency will be once cts is done.

Latencies obviously depend on PVT corners, hence the clock budget must follow the same rules: all scenarios used in pre-cts have to be budgeted independently. A small miscorrelation in a single scenario may cause timing, area or leakage divergence. Ideally, no single mode should be excessively more constraining than the others.

4.3.2. Clocktree exceptions
Most sequential cells of the design are aligned deep in the clocktree. Some elements, such as clock gates, may need a deskew because they are structurally on the path from the port to the leaves. Their budgeted pre-cts latency needs to be set according to their depth. Parameters to consider when assessing the depth are the structural location within the clocktree and the fanout. A clock gate inserted by Power Compiler is generally deep in the clocktree and drives a few registers, whereas a functional clock gate with a fanout of nearly the entire design is likely to be early. They need different, specific deskew budgets. With our methodology, the pre-cts budget for deskewed elements is fully automated and takes into account the structural location of the cell within the clocktree.

4.3.3. Empirical calibration
Calibration is a trade-off between a tight global constraint, which would allow clean timing at signoff at the cost of some over-design, and a rather loose constraint, which may leave a few violations but does not degrade other parameters such as density or leakage.
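The trade-off can be illustrated with the slack-histogram argument of Figure 4. The sketch below uses synthetic endpoint slacks in integer picoseconds (they are not data from our cores) and models an uncertainty increase as the same slack reduction on every endpoint, which is what a global clock constraint does.

```python
# Synthetic endpoint slacks in integer picoseconds (not data from the paper):
# 50 endpoints packed just above zero, as after optimisation, plus a tail.
slacks_ps = list(range(0, 50)) + list(range(50, 550, 10))


def violation_stats(slacks, extra_uncertainty_ps):
    """Violation count and TNS (sum of negative slacks, ps) after tightening
    every endpoint by the same extra clock uncertainty."""
    shifted = [s - extra_uncertainty_ps for s in slacks]
    negative = [s for s in shifted if s < 0]
    return len(negative), sum(negative)


for extra in (0, 5, 10, 20):
    count, tns = violation_stats(slacks_ps, extra)
    print(f"+{extra:2d} ps uncertainty: {count:2d} violations, TNS = {tns} ps")
```

With many endpoints sitting just above zero slack, the violation count grows linearly with the shift while TNS grows roughly quadratically, which is why a few ps of extra margin can change the optimisation effort so much.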
With too much conservatism induced by the clock budget, the placement may not converge, and the pre-cts margins need to be relaxed. Similarly, if the pre-cts clock models are too loose, placement may give good results, but timing violations may appear post-cts. In that case, clock uncertainties are adjusted based on the pre-cts as well as the post-cts optimisation results. The clock budget is empirically fine-tuned. Clock calibration involves clocktree synthesis, but also placement and post-cts steps, and a few implementation loops may be necessary to assess the global results. The overall benefit is better convergence.

4.4. Pre-route vs post-route on data path
Timing paths are made of cell delays and net delays. Depending on the topology, the net length and the process, some timing paths may be more sensitive to net resistance or to net capacitance. In our 28nm FDSOI 10ML technology, upper layers are thicker and wider than lower ones, resulting in different RC parasitics. Figure 9 shows the metal layer sizes. Figure 5 illustrates R and C sensitive paths.

Figure 5: C sensitive path (yellow), R sensitive path (orange)

Layer assignment and routing may have a big impact on timing, so there is a paramount need to control layer assignment all along the implementation. Assumptions made by the tool in pre-route stages have to be persistent and consistent until the design is fully routed.

The Synopsys design flow using DCG and ICC is optimized to guarantee the best correlation on WNS, area (after place_opt in ICC) and congestion. Since the same global route engine is used, routability can be anticipated in DCG; parasitics and net delays are therefore accurately predicted from physical synthesis, which has a positive impact on design convergence.

In synthesis and pre-cts, the tools make assumptions on layer assignment for each net. They may use fat metal layers for long timing-critical data paths.
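Why a long net is resistance-sensitive while a short net is not can be seen with a textbook Elmore delay estimate. This is a minimal sketch: the driver resistance, load and per-micron parasitics of the "thin" and "thick" layers below are hypothetical illustration values, not 28nm FDSOI technology data.

```python
# Hypothetical per-micron parasitics for a thin lower layer and a thick upper
# layer; real values come from the technology kit.
LAYERS = {
    "thin": {"r_ohm_per_um": 2.0, "c_ff_per_um": 0.2},
    "thick": {"r_ohm_per_um": 0.2, "c_ff_per_um": 0.2},
}


def elmore_delay_ps(r_drv_ohm, layer, length_um, c_load_ff):
    """Elmore delay of a driver + uniform RC wire + lumped load.

    delay = Rdrv * (Cw + CL) + Rw * (Cw / 2 + CL); 1 ohm * 1 fF = 1e-3 ps.
    """
    r_w = LAYERS[layer]["r_ohm_per_um"] * length_um
    c_w = LAYERS[layer]["c_ff_per_um"] * length_um
    ohm_ff = r_drv_ohm * (c_w + c_load_ff) + r_w * (c_w / 2 + c_load_ff)
    return ohm_ff / 1000.0


for length in (20, 1000):
    thin = elmore_delay_ps(1000.0, "thin", length, 2.0)
    thick = elmore_delay_ps(1000.0, "thick", length, 2.0)
    print(f"{length:5d} um net: thin {thin:7.2f} ps, thick {thick:7.2f} ps")
```

For the 20 um net the delay is dominated by the driver charging the capacitance (C sensitive), so the layer hardly matters; for the 1000 um net the wire resistance term dominates (R sensitive), and moving to the thick layer nearly halves the delay in this model. This is why the layer assumed pre-route must still be available after routing.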
However, clock nets are given priority over data paths on the less resistive metal layers, because they need to be balanced with the smallest possible latency and skew. If the tracks dedicated to clocks are not anticipated in pre-route steps, the resources actually available for data paths are much lower than assumed, and ICC has to revert to lower, more resistive layers for data nets. A QoR degradation may then be observed after routing.

To avoid this pitfall, we have deliberately chosen to forbid fat metal layer usage in all steps before cts. After clock routing, the remaining resources on the uppermost metal layers can be used again. As a result, delay calculation on long data paths is slightly conservative during placement, which makes the placer work harder. We can afford to leave a few small violations, expecting them to be easily recovered in post-route. In conjunction with relevant implementation corners, the CPU/GPU team achieved good timing convergence.

Layer predictability in pre-cts could be further improved if we could assess the routing resources taken by clocktrees. We would then allow partial use of B1 and B2 from synthesis onwards: since the shared global route engine guarantees that DCG and ICC make exactly the same layer assignment, the timing would be accurately predicted.

4.5. ICC vs PrimeTime correlation

4.5.1. Crosstalk effect
Crosstalk is the undesirable electrical interaction between two or more physically adjacent nets due to capacitive coupling. The two major effects of crosstalk are crosstalk-induced delay and static noise. IC Compiler uses crosstalk prevention techniques during track assignment. During timing- and crosstalk-driven track assignment, the tool minimizes crosstalk effects by assigning long, parallel nets to nonadjacent tracks. It runs a simplified noise analysis to make sure the noise level from aggressor nets is minimized.
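Why assigning long parallel nets to nonadjacent tracks helps can be sketched with the textbook Miller-factor approximation of crosstalk delay. This is an illustration only, not the noise engine used by ICC: the coupling model (capacitance proportional to parallel run length and inversely proportional to spacing) and all electrical values are assumptions.

```python
# First-order Miller-factor model of crosstalk delay (textbook approximation,
# not ICC's noise engine). All electrical values are hypothetical.

K_COUPLING_FF_PER_UM = 0.05  # coupling cap per um of parallel run at 1-track spacing


def coupling_cap_ff(parallel_um, spacing_tracks):
    """Coupling capacitance, assumed to grow with parallel run length and to
    fall roughly inversely with spacing measured in routing tracks."""
    return K_COUPLING_FF_PER_UM * parallel_um / spacing_tracks


def victim_rc_ps(r_drv_ohm, c_ground_ff, c_couple_ff, miller_factor):
    """Lumped RC time constant of the victim, used as a delay proxy.
    miller_factor: 0 = aggressor switches the same way, 1 = quiet,
    2 = aggressor switches the opposite way."""
    return r_drv_ohm * (c_ground_ff + miller_factor * c_couple_ff) / 1000.0


def delta_delay_ps(r_drv_ohm, c_ground_ff, parallel_um, spacing_tracks):
    """Worst-case slow-down relative to a quiet aggressor."""
    cc = coupling_cap_ff(parallel_um, spacing_tracks)
    slow = victim_rc_ps(r_drv_ohm, c_ground_ff, cc, 2.0)
    quiet = victim_rc_ps(r_drv_ohm, c_ground_ff, cc, 1.0)
    return slow - quiet


adjacent = delta_delay_ps(1000.0, 20.0, 400.0, spacing_tracks=1)
nonadjacent = delta_delay_ps(1000.0, 20.0, 400.0, spacing_tracks=2)
print(f"delta delay: adjacent {adjacent:.1f} ps, nonadjacent {nonadjacent:.1f} ps")
```

In this model, leaving one free track between the two 400 um nets halves the coupling capacitance and therefore the worst-case delta delay (20 ps down to 10 ps with these numbers).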
After detail routing, the tool performs crosstalk-induced noise and delay analysis by calculating the coupling capacitance effects to identify any remaining violations, and it fixes these violations during the post-route optimization phases.

ST's physical design flow kit allows the user to control the different thresholds for crosstalk prevention and fixing using the “set_si_options” command:

  # Thresholds come from the ST technology kit (siThreshold array)
  set_si_options \
    -delta_delay true \
    -max_transition_mode normal_slew \
    -min_delta_delay true \
    -route_xtalk_prevention true \
    -route_xtalk_prevention_threshold $::STM::TECH::siThreshold(xtalk_prevention) \
    -static_noise true \
    -static_noise_threshold_above_low $::STM::TECH::siThreshold(static_noise) \
    -static_noise_threshold_below_high $::STM::TECH::siThreshold(static_noise)

The lower the voltage threshold for crosstalk prevention, the harder the router tries to prevent crosstalk. At advanced nodes like 28nm FDSOI, designs are very sensitive to crosstalk; therefore, the use of this feature has become mandatory. We enable timing-driven global routing with “set_route_zrt_global_options -timing_driven true” and timing-driven track assignment with “set_route_zrt_track_options -timing_driven true”; however, we do not allow crosstalk prevention during global routing (“set_route_zrt_global_options -crosstalk_driven false”).

During post-route optimization, the “route_opt” command performs crosstalk optimizations for both setup and hold, based on the signal integrity options set with “set_si_options”, because the “-delta_delay” and “-min_delta_delay” options are set to true. The settings we use today for crosstalk prevention in our ST 28nm FDSOI process node show good convergence: no crosstalk violation is reported in the first signoff.

4.5.2. Delay calculation in ICC and PrimeTime using Graph Based Analysis and Path Based Analysis
“Graph” is the term used for the timing database.
It is first created when the netlist is read in and the design is linked. During a timing update, the timing database is populated with timing values from delay calculation. The graph represents the entire design: all possible timing paths are contained within it. Ports and pins in the design become the nodes of the graph, and timing arcs become the connections between nodes. The graph stores both min and max timing values for all timing arcs in the design, along with other information.

Delay calculation is performed as edges propagate forward across the logic. If we were computing the timing on a chain of buffers, we would simply feed the output slew of each stage into the next stage, performing delay calculation and storing the results on the graph as we propagate. However, when two slews arrive at the same point of the graph, the timing engine must choose one of them to propagate forward so that it can continue delay calculation for the downstream logic. To ensure that the min/max graph values always bound the fastest and slowest possible timing, the worst slew must be chosen and propagated forward: the fastest (numerically smallest) slew for min delays, and the slowest (numerically largest) slew for max delays. This is the default graph-based analysis (GBA) mode.

Path-based analysis (PBA) mode allows any timing path to be pulled off the graph for a more accurate analysis of that specific path, by propagating the exact slew along the path under analysis. A major difference between IC Compiler and PrimeTime is graph-based versus path-based analysis: full path-based optimization is not feasible in a physical implementation tool because of the analysis effort required, which would lead to excessive runtimes.

We have established a statistical estimate of the divergence between signoff PBA and implementation GBA timing.
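The slew-merging behaviour described above, and the kind of statistic that can size a correction, can be reproduced on a toy example. This is a sketch under stated assumptions: the linear cell model and its coefficients are hypothetical, and the pessimism samples stand in for per-endpoint GBA-minus-PBA differences that a signoff run would report.

```python
import statistics

# Toy linear cell model (hypothetical coefficients): delay and output slew
# both grow with input slew. Units are ps.


def stage(in_slew):
    delay = 10.0 + 0.2 * in_slew
    out_slew = 8.0 + 0.5 * in_slew
    return delay, out_slew


def chain_delay(merge_slew, n_stages=3):
    """Max delay through n buffer stages downstream of a merge pin."""
    total, slew = 0.0, merge_slew
    for _ in range(n_stages):
        delay, slew = stage(slew)
        total += delay
    return total


# Two paths converge on the same pin with different slews.
arrival_slews = {"slow_path": 60.0, "fast_path": 12.0}

# GBA (max delays): the worst slew is kept on the graph and reused for
# every path through the merge pin.
gba_slew = max(arrival_slews.values())
gba = {p: chain_delay(gba_slew) for p in arrival_slews}

# PBA: each path is re-timed with its own slew.
pba = {p: chain_delay(s) for p, s in arrival_slews.items()}

pessimism = {p: gba[p] - pba[p] for p in arrival_slews}
print(pessimism)

# Statistical view: hypothetical GBA-minus-PBA differences (ps) over endpoints.
samples_ps = [4.0, 6.0, 5.0, 9.0, 3.0, 7.0, 5.0, 8.0]
print("mean:", statistics.mean(samples_ps), "ps; minimum:", min(samples_ps), "ps")
```

The path that owns the slow slew sees no difference, while the fast path carries 16.8 ps of GBA pessimism in this model. If the correction is applied through a uniform uncertainty relaxation, one cautious choice (our assumption, not necessarily the exact rule used in the flow) is to stay near the low end of the distribution, since relaxing by more than a path's own pessimism would make that path optimistic compared with signoff.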
An adjustment has been made in the implementation constraints through the clock uncertainty. Refined corrections have been applied to all implementation scenarios until timing divergences are reduced to a minimum, while the violation count in PrimeTime still allows timing to be met after ECO. We have seen good setup, hold and leakage QoR out of ICC; hold and leakage could be further optimised after the signoff ECO.

5. DRC and physical convergence
Our team has faced various challenges with physical convergence; three of them are developed in this section. The first is intrinsic congestion in a particular design hierarchy. Second, we show how we overcame a routability issue related to the power grid structure. For the third challenge, related to global DFM convergence with physical signoff, we describe how we improved our implementation flow to clean up physical violations.

5.1. Dealing with design related congestion
Some designs, or parts of them, are prone to congestion due to their high level of interconnection. Aggressive timing constraints sometimes tend to force the placement engine to pack cells closely in order to reduce net delays. As a consequence, smaller cells are used by the placer and the instance count per unit area increases. The design can end up with high net density, and local congestion hotspots can appear (see Figure 7). We faced such a problem in an identified hierarchy of our cores (see Figure 6). The highlighted hierarchy was really sensitive to routing congestion during pre-cts steps, and congestion worsened after successive post-cts optimizations as the design was filled with buffers inserted for long nets or crosstalk.

Figure 6: Congestion prone hierarchy (red) and DRC (white)

ICC has no pin-density-aware placer and cannot prevent this type of issue. However, ICC has a feature to constrain the cell density, which has a beneficial effect on congestion.
It offers the flexibility to set a density threshold on a particular area (given with -bbox) with the command:

  create_placement_blockage -type partial -blocked_percentage 40

With a blocked percentage of 40, at most 60% of the area can be occupied by cells. Used on a specific hierarchy of the A53 CPU core, this spread the cells and decongested the area. In some cases, it also helped the timing optimizer get better results, because the tool could find more room to legalize cells (see Figure 8).

Figure 7: Congestion map without partial density blockage on congestion prone hierarchy
Figure 8: Congestion map with partial density blockage on congestion prone hierarchy

5.2. Resolving DRC problems under power stripes with the PNET feature

5.2.1. Power grid structure and routing resources
The core's power grid consists of two dedicated fat metal layers, IA/IB, and strengthening stripes on M3, before tapping down to the standard cell rails on M2 (see Figure 9: Layer structure from IA/IB tapping down to M1 with via stack, and Figure 10: Power grid structure).

The power grid structure is generally sized to minimize voltage drop without impacting routability too much in any direction, but power stripes and via stacks may sometimes act as routing obstructions. During the implementation of our IPs, we faced DRC errors under these M3 stripes. Many standard cells placed under the M3 grid have to be connected with limited routing resources: M3 blocks all vertical access, and since M1 cannot be used because of the standard cell pins, only one metal layer, M2, is available, hence only a few routing tracks in a single direction.

Figure 9: Layer structure from IA/IB tapping down to M1 with via stack
Figure 10: Power grid structure

Without any specific action on pin access under M3 stripes, a typical design ends up with unfixable DRCs.
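The geometric condition behind these unfixable DRCs, small cells with signal pins sitting under an M3 stripe, can be expressed as a simple check. This is a minimal sketch with hypothetical stripe positions, cell names and pin offsets; it only illustrates the condition that the pnet legalizer options of Section 5.3 aim to avoid, not how the ICC legalizer is implemented. The min_width_um parameter loosely mirrors the role of the minimum stripe width setting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stripe:
    """Vertical power stripe, described by its left edge and width (um)."""
    x_left_um: float
    width_um: float

    def covers(self, x_um: float) -> bool:
        return self.x_left_um <= x_um <= self.x_left_um + self.width_um


@dataclass(frozen=True)
class PlacedCell:
    name: str
    ref: str               # library cell name (hypothetical)
    x_um: float            # x of the cell origin
    pin_offsets_um: tuple  # x offsets of signal pins from the origin


def cells_with_pins_under_stripes(cells, stripes, sensitive_refs, min_width_um=0.5):
    """Names of sensitive cells with at least one signal pin under a stripe
    that is at least min_width_um wide (narrower stripes are ignored)."""
    wide = [s for s in stripes if s.width_um >= min_width_um]
    flagged = []
    for cell in cells:
        if cell.ref not in sensitive_refs:
            continue
        pin_xs = [cell.x_um + off for off in cell.pin_offsets_um]
        if any(s.covers(x) for x in pin_xs for s in wide):
            flagged.append(cell.name)
    return flagged


# Hypothetical floorplan data.
stripes = [Stripe(10.0, 1.0), Stripe(30.0, 0.3)]
cells = [
    PlacedCell("u1", "NAND2_SMALL", 10.2, (0.1, 0.3)),  # pins under the wide stripe
    PlacedCell("u2", "NAND2_SMALL", 30.0, (0.1,)),      # pin under the narrow stripe
    PlacedCell("u3", "BUF_LARGE", 10.0, (0.5,)),        # not a sensitive cell
    PlacedCell("u4", "INV_SMALL", 20.0, (0.1,)),        # clear of any stripe
]
sensitive = {"NAND2_SMALL", "INV_SMALL"}
print(cells_with_pins_under_stripes(cells, stripes, sensitive))
```

Only u1 is flagged with the default width filter; lowering min_width_um to 0.2 would also flag u2, which shows how the width filter trades pin-access safety against placement freedom.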
We have noticed that the issue is more likely to happen when small cells are used, and more specifically when the pin density is higher. ICC placement is not pin-density aware and cannot foresee later routing congestion; therefore, nothing prevents the placer from packing cells with limited pin access.

Figure 11: Routed signals DRC under M3 power stripes

Using placement blockages under the M3 power stripes would clear the issue, but at the cost of placeable area, so we did not choose this solution.

5.2.2. Synopsys pnet feature
Synopsys has implemented a feature to define placement rules under metal stripes; it is invoked during every legalization. We used this feature to restrict the usage of selected cells under M3 when they are small cells or prone to creating DRCs.

5.3. Results
With the following options, the CPU cores got rid of all DRCs related to pin accessibility under M3 stripes:

  # $forbiddenCells: selected small or DRC-prone library cells (defined elsewhere in the flow)
  set_pnet_options -partial M3
  set_app_var legalizer_avoid_pin_under_pnet_lib_cells $forbiddenCells
  set_app_var legalizer_avoid_pin_under_pnet_layers M3
  set legalizer_avoid_pin_under_pnet_min_width 0.500

5.4. DRC convergence with signoff
Given the complexity of 28nm physical rules, running DRC and DFM checks late in signoff may cause a lot of rerouting, with side effects on timing or crosstalk. During implementation, the search-and-repair engine fixes DRC errors based on a set of rules specifically coded for ICC. Several iterations of DRC checks and fixes are executed after every post-route optimization, and the bulk of DRC violations are fixed.

Despite the continuous effort of P&R tools to support rule requirements from different foundries, we see a few cases where ICC Zroute misses some DRC violations reported by the signoff tool. There are many reasons for this miscorrelation. DRCs involving shapes not present in the abstract can be neither spotted nor fixed by ICC.
Also, specific rules with very low occurrence in the design have deliberately not been coded in the ICC runset, because they would severely penalize the runtime. Complex rules can be coded in different ways depending on the DRC engine, so there are divergences between ICC search and repair and the third-party signoff tool. For instance, geometries may be reshaped to reflect process variations: pieces of metal may be shrunk or extended prior to rule checking. In Figure 12, the via enclosure height is 0.095um in ICC; during signoff, this piece of metal is extended to 0.100um, which makes a DRC rule fail.

Figure 12: Via enclosure height is 0.095um in ICC

The solution to improve convergence between ICC and signoff DRC checks is to use IC Validator (ICV). The tool is dynamically invoked during the P&R flow to complement the ICC runset, with a particular focus on a selection of rules identified as weaknesses of the ICC router. It keeps runtime low and may be invoked many times during post-route optimizations. This has become a regular methodology and has been put in our execution flow (Figure 13: Built in ICV flow).

Figure 13: Built in ICV flow [1]

The benefit of this flow is to identify DRC violations based on the foundry signoff DRC runset and to target automatic DRC fixing. The ability to have ICV check only a subset of rules decreases the runtime impact. The ICV feature has been activated post-route and post-signoff. Finally, the number of iterations between place and route and signoff verification has been limited to a single loop.

6. Conclusions
Calibration has been done at all implementation stages, and correlation at every tool handover. We have seen timing continuity, area stability and power predictability.
Physical convergence pitfalls, whether design related or due to rule set definitions, could be avoided thanks to various ICC features. All these techniques can be reused for similar designs if the constraints are modified (target frequency, scenarios) or if the technology node changes (14nm, layers, lithography or DRC rules). With good correlation throughout the flow, ICC optimizations focus on real violations: no overdesign is done and runtime is cut down. DFM indicators get good scores, guaranteeing manufacturability and yield. A design out of ICC has a limited number of remaining violations that can be handled within an acceptable timescale. All these predictable results allowed us to give early commitment on PPA.

7. References
[1] In-Design Automatic DRC Repair Flow Using IC Compiler and IC Validator, Stephane Pautou (STMicroelectronics, Crolles, France), Alain Boyer (Synopsys, Montbonnot, France), SNUG 2014.
[2] Concurrent Top and Blocks Level Implementation of a High Performance Graphics Core Using OnePass Timing Closure in Synopsys IC Compiler, Corine Pulvermuller, Julien Guillemain (STMicroelectronics, Grenoble, France).