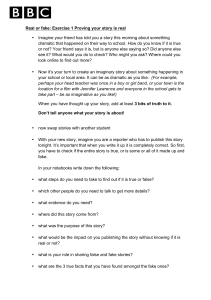

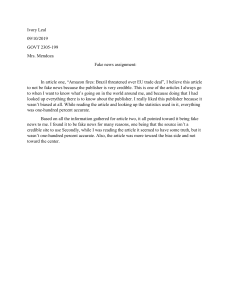

Main Article

Fake news as fake politics: the digital materialities of YouTube misinformation videos about Brazilian oil spill catastrophe

Media, Culture & Society 2021, Vol. 43(5) 886–905
© The Author(s) 2020
Article reuse guidelines: sagepub.com/journals-permissions
DOI: 10.1177/0163443720977301
journals.sagepub.com/home/mcs

André Luiz Martins Lemos
Federal University of Bahia, Brazil; FACOM/UFBA, Brazil

Elias Cunha Bitencourt
State University of Bahia, Brazil; FACOM/UFBA, Brazil

João Guilherme Bastos dos Santos
Brazilian National Institute of Science and Technology for Digital Democracy, Brazil

Abstract

This article investigates misinformation chains – fake news and clickbait – related to the 2019 oil spill along the coast of Northeast Brazil. A link between the intensive use of misinformation on YouTube and the environmental impact of digital media and algorithmic performativity has been found by analyzing videos about the 2019 Brazilian oil spill. A total of 591 YouTube videos were extracted based on a search for the hashtags ‘oleononordeste’, ‘vazamentopetroleo’, and ‘greenpixe’. The data thus obtained suggest that most of the corpus (80.37%) consists of misinformation, of which 65.82% (389 videos) is clickbait and 14.55% (86 videos) fake news. YouTube misinformation videos produced around 1.42 MtCO2e, the equivalent of burning 3.30 barrels of oil. We argue that misinformation chains increase pollution and carbon footprint as a result of at least three factors: (a) the extra energy cost of feeding algorithms; (b) increased algorithmic resistance to the visibility of journalistic information; and (c) undermining public debate about environmental catastrophes in favor of private interests (fake politics).
Corresponding author:
Elias Bitencourt, School of Design, State University of Bahia, Avenida Alphaville, n 866, apt 507, Salvador, BA 41701015, Brazil.
Email: eliasbitencourt@gmail.com

Keywords

Anthropocene, carbon footprint, digital materialities, fake news, fake politics, misinformation, YouTube

Introduction: digital materiality – raw material and energy consumption

Life on Earth in the new geological epoch known as the Anthropocene is under threat (Bonneuil and Fressoz, 2016; Hamilton et al., 2015; Latour, 2017; Taffel, 2016). The relationship between digital media and its environmental impact is currently the object of study by three main groups of researchers. The first has highlighted the need for minerals and energy in the manufacture and disposal of electronic equipment. The second has emphasized aspects of labor exploitation, the growth in inequality and the lack of regulation in the digital industry. The third has raised the issue of the demand for energy resulting from the use of the internet, which requires large amounts of fossil fuels to power data centers and the associated urban infrastructure.

For the first group of authors, digital culture is based on machines that require minerals and fossil fuels and produce heat and waste in the form of e-waste (Gitelman, 2013; Parikka, 2015a; Räsänen and Nyce, 2013). Further waste is produced by rapid obsolescence and the need to upgrade a wide range of electronic devices (Cubitt, 2014; Cubitt et al., 2011; Gabrys, 2013, 2016; Hogan, 2015; Parikka, 2012). Before the oil spill along the coast of Northeast Brazil, the accident at a dam in Brumadinho on January 25th, 2019, resulted in 270 deaths and was considered the second-worst Brazilian industrial disaster in a century (Souza and Fellet, 2019). There is a direct correlation between what is extracted at Brumadinho and digital equipment, which uses much of what is produced by Vale, the mining company that owns the dam.
On Vale’s site one can read:

Take a look at the computer you are using to read this, the chair you are sitting on, the telephone and cellphone that are near you so you can communicate quickly and efficiently whenever you need to. What do these objects have in common? All of them contain iron ore. Iron ore is so common in our daily lives that it is practically impossible to imagine the world we live in without it. (VALE, 2015)

In the second group of authors, the relationship between digital materiality and environmental impact appears associated with the economic policy of cultural production processes and digital memory (Reading, 2014; Reading and Notley, 2018); with the political-economic interests of the digital industry and practices of greenwashing (Maxwell and Miller, 2012; Notley, 2019); and with the entanglement of capital and state power with the natural world through datacenters (Brodie, 2020). Although these approaches share the same criticism of the economic models highlighted by the first group, they do not explore how large-scale datafication practices and algorithmic processing on digital platforms would increase gas emissions into the atmosphere.

The third group of authors has drawn attention to the relationship between internet use and energy consumption in data centers, which are required for internet services to operate. Datafication (Mayer-Schönberger and Cukier, 2013), the process of transforming everyday actions and practices into digital data, allowing phenomena to be monitored, managed and predicted with numerically oriented strategies, and the platformization of society (Van Dijck et al., 2018), which are a feature of current digital culture, are irreversible, growing phenomena that require minerals as raw material and fossil fuels as a source of energy. Data has been likened metaphorically to oil, but in fact data is oil.
The datafication of society is directly linked to a ‘data structure’ that extends from oil wells and coal mines to the cooling systems in data centers. Here, however, we establish a critical linkage between two important aspects of the media studies debate: the environmental consequences of datafication practices and the political role of algorithmic mediation in digital platforms. We consider fake news from the perspective of new materialism (Fox and Alldred, 2017; Gamble et al., 2019), arguing that the algorithmic performativity of the YouTube platform fosters the emergence of misinformation as a socio-technical, political and environmental problem. We have no intention of characterizing fake news from a discursive point of view (Anderson, 2020) or of addressing the political aspects of misinformation from the perspective of communication and the public sphere (Creech, 2020), the relationship with populist strategies and network polarization (Gerbaudo, 2018; Mejias and Vokuev, 2017) or the audience’s perception (van der Linden et al., 2020). Our goal is to expose the digital materialities of fake news production chains on YouTube that directly impact energy expenditure and the emission of pollutants into the atmosphere. Fossil fuels are fundamental for the development of what Cubitt calls semiocapitalism. As he notes, ‘oil is the dominant commodity of the early twenty-first century, without which we could not have the existing information society, cognitive capital, or semiocapitalism’ (Cubitt, 2017: 37). Oil and coal are still the main sources of energy for the data centers needed for social networks to operate. 
As Parikka observes:

Coal is one of the most significant energy sources, powering cloud computing data centers, but also an essential part of computer production itself – as the exhibit points out, ‘81% of the energy used in a computer’s life cycle is expended in the manufacturing process, now taking place in countries with high levels of coal consumption.’ (Parikka, 2015a: 99)

Cubitt (2017) notes that social networks also require large amounts of energy. According to Urs Hölzle, senior vice president of Google, a simple search in Google uses 0.0003 kWh of energy, equivalent to approximately 0.2 g of CO2 emitted into the atmosphere (Google, 2009). Similarly, generating a tweet causes around 0.02 g of CO2 to be emitted (Harvey, 2019). Hence, with more than 500 million tweets a day, a total of 13.39 metric tons of CO2 are emitted every 24 hours (Danielle, 2018). According to the findings of the Shift Project published in the Brazilian newspaper O Estado de São Paulo, ‘watching a half-hour episode of a series results in emissions of 1.6 kg of carbon dioxide equivalent (. . .). This is equivalent to driving 6.28 km’. It is believed that data centers will use 4.1% of global electricity by 2030.¹

According to Thylstrup (2019), digital traces can be considered pollutants linked to an extractive logic rather than neutral, immaterial instruments. As she points out, ‘the logic of datafication is premised on a logic of waste and recycling, with significant implications for how we consider datafication’s politics and ethics’ (Thylstrup, 2019: 1).

The pollution associated with social networks is therefore not limited to the pollution produced when the hardware is manufactured or discarded. The practices associated with the consumption and use of content on YouTube generate carbon footprints.
The more dynamic, polemical and controversial the messages (political polarization and fake news), the greater the engagement (more messages produced, more sharing and more comments posted). This mode of operation increases energy consumption in data centers and CO2e emissions in the atmosphere. According to ISO standard 14067 (ISO TISO, 2012), the carbon dioxide equivalent (CO2e) is a calculated mass for comparing the radiative forcing of a greenhouse gas (GHG) with that of carbon dioxide.

There can be no doubt that the use of gossip and polemical, breaking news also produces engagement with mass media, increasing electricity consumption when radio and TV audiences increase as a result of this strategy. However, this effect is amplified by the logic of platforms, datafication and algorithmic performativity (Lemos, 2020) and surveillance capitalism (Zuboff, 2015, 2020). Moreover, unlike rumors and polemics, fake news is a product of modern digital networks. It emerges as a ramification of the algorithmic mechanisms that make content (in)visible on platforms such as YouTube, Instagram, Facebook, and Twitter. These computational mechanisms can also be characterized as information strategies for generating segmented engagement among ideological groups by the production of convenient ‘truths’ (James, 2000).

In this sense, such news is fake not because it is not true – after all, fake news is an oxymoron – but because it is produced as simulacra (Baudrillard, 1994), actions that seek to simulate the chains of reference of journalism and science to mobilize engagement on networks. Fake in this case does not mean the antithesis of truth (or post-truth), but a biased way of taking advantage of the algorithmic performativity of networks to transmit something incorrect or fantastical in order to harm actors with ideologically opposing views.
Fake news acts as simulacra because it mobilizes engagement among a segmented audience by imitating the devices used to circulate scientific or journalistic truth. It is precisely in this sense of fake that we shall later define the action of fake news as fake politics.

The power of surveillance capitalism lies in the way behaviors are datafied. Datafication generates traces (data) which are particularly valuable and in demand in the current digital economy, leading to great use of environmental resources in their production. Fake news is part of this broad datafication scenario as it allows audiences ideologically aligned with the ‘false’ content that is circulated to be identified, segmented, and profiled. The use of misinformation networks as a tool for mobilizing audiences and breaking up opposing groups reveals even more toxic ramifications of these data. The forecasting of actions based on content consumption profiles on digital platforms is polluting as it demands energy for equipment, networks and data centers to operate properly.

Many authors have spoken of ‘data exhaust, dirty data, bit rot, toxic data, data sweat and data trash’ (Gabrys, 2013; Lepawsky, 2018; Liboiron, 2016; Liboiron et al., 2018; Parikka, 2012), yet there has been little debate about the environmental impacts of misinformation chains on digital platforms. Produced by the algorithmic performativity of platforms, which highlights certain content and hides other content, these misinformation chains allow the relationship between content and carbon footprint for communication practices in these environments to be explored. The term misinformation chains is used here in allusion to the set of actions resulting from associations between multiple actors – such as channels, YouTubers and independent users – organized around the production, circulation and consumption of fake news and clickbait videos on the YouTube platform.
Thinking about media in terms of the very diverse elements that are an integral part of it – such as minerals, chemical compounds and processes, disposal of these minerals and the carbon footprint generated by the circulation of content on social networks – is one way of understanding the materialities of digital media. In this work, we shall consider the case of the circulation of misinformation about the oil spill on beaches in Northeast Brazil at the end of 2019 to examine the environmental impacts of these materialities.

An oil spill in Brazil

Recent cases of deforestation in Amazonia and the oil spill along the coast of Northeast Brazil help to illustrate this relationship between carbon footprint and communication practices on digital platforms. In addition to the volume of carbon generated by the polarizing mechanism of the affective economy of data on digital platforms, the two cases cited revealed the emergence of a crisis management model in which the content released by official sources fed misinformation chains that ignored facts, reinforced the beliefs of groups with similar convictions and attacked the opposition (polarization), all the while in the absence of any of the appropriate management measures expected of the public authorities. Narratives such as ‘the oil was spilled intentionally by Venezuela (Veja, 2019)’, ‘measures are being taken by the appropriate bodies (Ministério do Meio Ambiente, 2019)’ and ‘Greenpeace caused the spill (G1, 2019)’ are examples of misinformation practices that fed environmental crisis management policies in Brazil.

We explore the environmental impacts of the circulation of misinformation videos on YouTube based on the oil spill on Brazilian beaches in 2019 (the country’s worst-ever environmental disaster).
A total of 591 YouTube videos published between September 11, 2019, and January 21, 2020, were extracted based on a search for the hashtags ‘oleononordeste’ (‘oilinthenortheast’), ‘greenpixe’ (‘greenpitch’) and ‘vazamentopetroleo’ (‘oilspill’), which became the most common terms once the news about the oil spill started to circulate. The term ‘greenpixe’ (‘greenpitch’) was coined by the current environment minister, insinuating that a ship belonging to the NGO Greenpeace was among those responsible for the spill. The hashtag became popularly associated with fake news. These hashtags allowed us to identify a variety of content (true content and fake news) about the subject.

The data were obtained with YouTube Data API V3 (Google Developers) using the snippet, contentDetails and statistics properties. Requests were made using Python, R and tools developed by the Digital Methods Initiative (Amsterdam University). Three keys were used for the requests. The first key relates to basic data about the videos in our sample, such as publication date (snippet.publishedAt), channel ID (snippet.channelId), title of the video (snippet.title), description of the video (snippet.description), title of the channel (snippet.channelTitle), and category of the video (snippet.categoryId). The second relates to information that could help when calculating the carbon footprint produced when the videos are uploaded, such as the resolution, dimensions, and length of the video (contentDetails.definition, contentDetails.dimension, and contentDetails.duration). Lastly, the third key concerned information related to statistics about the popularity of the videos, including data that would also affect the calculation of the carbon footprint, such as the number of views (statistics.viewCount), and data about the number of likes, dislikes and comments (statistics.likeCount, statistics.dislikeCount, and statistics.commentCount).
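The three groups of properties listed above map onto a single `videos.list` call of the YouTube Data API v3. The following is a minimal sketch of how such a request could be assembled and how one returned item could be flattened into the fields named in the text. It is not the authors' actual collection script: the helper names `build_videos_request` and `flatten_item`, the placeholder key, and the sample item are all hypothetical, and no network call is made.

```python
from urllib.parse import urlencode

# Public endpoint of the YouTube Data API v3 "videos.list" method.
API_URL = "https://www.googleapis.com/youtube/v3/videos"
# The three resource parts named in the text.
PARTS = ("snippet", "contentDetails", "statistics")


def build_videos_request(video_ids, api_key):
    """Return the request URL for the given video IDs (hypothetical helper)."""
    params = {
        "part": ",".join(PARTS),
        "id": ",".join(video_ids),
        "key": api_key,
    }
    return f"{API_URL}?{urlencode(params)}"


def flatten_item(item):
    """Flatten one API item into the fields discussed in the text.

    Counts arrive as strings in the JSON response, so they are cast to int.
    Missing counters (for example hidden like counts) default to 0.
    """
    snippet = item.get("snippet", {})
    details = item.get("contentDetails", {})
    stats = item.get("statistics", {})
    return {
        "publishedAt": snippet.get("publishedAt"),
        "channelId": snippet.get("channelId"),
        "title": snippet.get("title"),
        "channelTitle": snippet.get("channelTitle"),
        "categoryId": snippet.get("categoryId"),
        "definition": details.get("definition"),
        "duration": details.get("duration"),
        "viewCount": int(stats.get("viewCount", 0)),
        "likeCount": int(stats.get("likeCount", 0)),
        "dislikeCount": int(stats.get("dislikeCount", 0)),
        "commentCount": int(stats.get("commentCount", 0)),
    }


# Illustrative item with made-up values, shaped like an API response entry.
sample_item = {
    "snippet": {"publishedAt": "2019-10-20T12:00:00Z", "channelId": "UC_example",
                "title": "Example video", "channelTitle": "Example channel",
                "categoryId": "25"},
    "contentDetails": {"definition": "hd", "duration": "PT4M13S"},
    "statistics": {"viewCount": "1000", "likeCount": "120", "commentCount": "15"},
}
row = flatten_item(sample_item)
```

Keeping the request builder separate from the parsing step means the flattening logic can be checked against stored responses without an API key.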
Viewing statistics (statistics.viewCount) and the length of the video (contentDetails.duration) were used to estimate the carbon footprint produced when the videos circulated and were viewed. To calculate the carbon footprint, we adopted the approach proposed by Priest et al. (2019), who consider the energy use associated with videos not to be limited to the energy used to process the content on YouTube servers but to include the costs of distributing the videos on mobile and cable access networks and users’ devices – according to life-cycle assessment (LCA) parameters laid down in ISO 14040:2006 (ISO TISO, 2006). The numbers presented by Priest et al. (2019) for total energy use by YouTube in 2016 (19.6 TWh) and viewing time per day (1 billion hours) in the same year were used to calculate total energy use and total emissions in MtCO2e per 1 second of viewing time. We used the total viewing time per day on YouTube and the total energy spent in the same year (converted to kWh) to calculate the number of seconds of video viewed per second of real time (41,666,666.67) and the energy used by the platform per second of real time (621.51 kWh). We then used these reference figures to find the energy cost per second of viewing.

The CO2e values presented in this work do not consider legal discounts such as carbon quotas, compensation protocols and discounts for renewable energy uses. Our focus here was to highlight broadly the environmental costs of YouTube digital materialities as they relate to misinformation chains. We arrived at a reference figure of 1.49 × 10−5 kWh energy use and 1.05 × 10−10 MtCO2e emitted into the atmosphere for every second of video watched on the platform. According to the EPA calculator, the amount of energy spent in 2016 (19.6 TWh), in absolute terms, is equivalent to 138,579.84 MtCO2e. We use this reference figure as a basis for calculating emissions per second of viewing.
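The energy reference figure can be re-derived from the two inputs attributed to Priest et al. (2019). The sketch below assumes that the daily viewing figure is 1 billion hours and that the year has 365 days, since these are the assumptions that reproduce the intermediate values quoted above (41,666,666.67 and 621.51 kWh). The variable names are illustrative, and the emissions conversion is deliberately left out because it depends on the EPA factor, which is not stated in the text.

```python
# Inputs quoted in the text (Priest et al., 2019).
TOTAL_ENERGY_TWH_2016 = 19.6
VIEWING_HOURS_PER_DAY = 1_000_000_000  # assumption: the 1 billion figure is in hours

SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY  # assumption: 365-day year
KWH_PER_TWH = 1_000_000_000

# Energy used by the platform for every second of real time.
kwh_per_real_second = TOTAL_ENERGY_TWH_2016 * KWH_PER_TWH / SECONDS_PER_YEAR

# Seconds of video watched worldwide for every second of real time.
viewing_seconds_per_real_second = VIEWING_HOURS_PER_DAY * 3600 / SECONDS_PER_DAY

# Energy attributed to one second of viewing.
kwh_per_viewing_second = kwh_per_real_second / viewing_seconds_per_real_second

print(f"{kwh_per_real_second:.2f} kWh per second of real time")
print(f"{viewing_seconds_per_real_second:,.2f} viewing seconds per second")
print(f"{kwh_per_viewing_second:.3e} kWh per viewing second")
```

Dividing the two per-second rates cancels the time base, so the result is simply annual energy divided by annual viewing seconds.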
These figures were then converted into barrels of oil using the United States Environmental Protection Agency (EPA) calculator.² It should be stressed that although the carbon-footprint calculation used here takes into account the energy use identified in the LCA and not just the energy required for processing in Google data centers, the numbers we used are estimates based on the 2016 reference values. Furthermore, the available API did not provide access to properties of the video such as the size of the file sent (fileDetails.fileSize) and processing of the video (processingDetails.processingProgress), which prevented the impact of the resolution (HD and SD) being included in the calculation of the carbon footprint. Consequently, the figures calculated here probably still underestimate the environmental impact.

All the videos were examined individually and classified by source and profile: fake news – content, context or connections that are false, manipulated or fabricated to harm a person, social group or organization (Wardle and Derakhshan, 2017); journalistic content, and clickbait – a practice that consists of using content as ‘bait’ to direct users’ clicks and increase visibility or the number of posts for recommendation algorithms –, as shown in Figure 1. The proportion of journalistic content and misinformation (fake news and clickbait) for each source and the total CO2e emissions resulting from the circulation of journalistic content and misinformation in each group were then determined. Finally, although descriptive statistics were used, it should be noted that the analysis is essentially qualitative and that the findings are non-probabilistic.

Figure 1. Description of the categories used to classify the content analyzed in the corpus. Source: Authors.
General description of the corpus

The corpus consisted of 591 YouTube videos extracted with a search for the hashtags ‘oleononordeste’, ‘vazamentopetroleo’, and ‘greenpixe’. Together, the videos were viewed 7.4 million times and attracted 898,517 likes and 53.7 thousand comments. According to our calculations, the total corpus viewing time in seconds (5,892,667,820.00, obtained by multiplying the number of views of each video by its duration) accounted for 6.21 MtCO2e in greenhouse gas (GHG) emissions, the equivalent of 14.45 barrels of oil (Figure 2).

Figure 2. Description of the corpus. Source: Authors.

When the corpus was classified by content, the results indicated that most of the content (80.37%) consisted of misinformation, of which 65.82% (389) was clickbait and 14.55% (86) fake news. There were 116 videos with journalistic content, accounting for only 19.63% of the information in circulation about the environmental catastrophe. In other words, the content circulated on YouTube during the oil spill and the subsequent 4 months consisted primarily of fake news, conspiracy theories and opportunistic actions to attract likes and achieve algorithmic visibility for the source – clickbait (Karaca, 2019; Munger et al., 2018; Pangrazio, 2018).

However, although misinformation videos (clickbait and fake news) were most common, they accounted for only 32.51% of all views. In contrast, legitimate content accounted for 67.49% of views and 77.15% of corpus energy consumption and emissions. In concrete terms, the 116 videos with journalistic content used approximately 67,809.96 kWh and produced around 4.79 MtCO2e, the equivalent of burning 11.15 barrels of oil, while the 475 misinformation videos used 20,086.84 kWh and produced around 1.42 MtCO2e, equivalent to 22.85% of total corpus emissions or 3.30 barrels of oil (Figure 3).
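The corpus totals follow from summing views times duration over all videos and multiplying by the per-second energy reference figure. The sketch below illustrates that chain under stated assumptions: the duration parser handles only the common `PT#H#M#S` form of `contentDetails.duration`, the helper names are hypothetical, and the kWh-per-barrel ratio is inferred from the paper's own totals (87,896.80 kWh and 14.45 barrels) rather than taken from the EPA calculator.

```python
import re

# Per-second reference figure derived from 19.6 TWh per year and
# 1 billion viewing hours per day (rounded to 1.49e-5 in the text).
KWH_PER_VIEWING_SECOND = 19.6e9 / (365 * 1e9 * 3600)

# Assumption: ratio implied by the corpus totals reported in the text.
KWH_PER_BARREL = (67_809.96 + 20_086.84) / 14.45

_DURATION = re.compile(r"^PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?$")


def duration_seconds(iso_duration):
    """Convert a duration such as 'PT4M13S' to seconds."""
    match = _DURATION.match(iso_duration)
    if match is None:
        raise ValueError(f"unsupported duration: {iso_duration!r}")
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


def viewing_seconds(videos):
    """Sum views x duration over (view_count, iso_duration) pairs."""
    return sum(views * duration_seconds(dur) for views, dur in videos)


def energy_kwh(total_viewing_seconds):
    """Energy attributed to a given amount of viewing time."""
    return total_viewing_seconds * KWH_PER_VIEWING_SECOND


def barrels(kwh):
    """Barrels of oil equivalent, using the ratio implied by the text."""
    return kwh / KWH_PER_BARREL


# Reproducing the reported totals from the corpus viewing time.
corpus_kwh = energy_kwh(5_892_667_820)
journalistic_share = 67_809.96 / (67_809.96 + 20_086.84)
```

Under these assumptions the corpus viewing time yields roughly 87,897 kWh, and the journalistic share of that energy comes out at about 77.15%, in line with the figures above.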
Despite the reach of the journalistic content, we shall see later on that the high energy consumption for this category was not only due to the large absolute number of views it attracted. Proportionally, misinformation chains operated with more optimized energy costs as they consumed around 1.6 times less oil to achieve the same number of views as legitimate content, indicating possible interference from the algorithmic performativity of the machine-learning processes that constitute the platform’s recommendation mechanisms.

Figure 3. Energy consumption by content type and channel type. Source: Authors.

Filtering the data by source reveals that independent media channels are responsible for publication of 70.9% of the corpus, followed by YouTubers (12.86%), traditional media channels (7.45%) and independent users (5.41%). Candidates, official bodies, civil society organizations (CSOs) and companies together account for less than 3% of the material analyzed. Looking at the distribution of content by channel type, we find that independent media is also the media that most disseminates misinformation, 63.45% of videos of this type being transmitted by independent media, 10.15% by YouTubers and 3.55% by independent users. In contrast, journalistic content is distributed more widely throughout the corpus. Independent media channels account for 7.45% of the legitimate content, followed by traditional media channels (5.75%) and YouTubers (2.71%). Figure 4 shows energy consumption by channel type and content type.

Figure 4. Distribution of videos, views, emissions and barrels by channel type and content type. Source: Authors.

Toxic data: pollution produced by misinformation chains

Although independent media accounts for the largest absolute number of videos in the corpus, energy consumption for this type of channel was equivalent to only 3.78 barrels of oil (26.19% of total corpus emissions). YouTuber channels, which represented 12.86% of all the videos, consumed around 10.05 barrels and emitted approximately 4.32 MtCO2e (69.60% of total corpus emissions).

If we compare energy consumption for misinformation and for journalistic content, we can see that around 3.30 barrels of oil were used to disseminate 475 fake news and clickbait videos while 11.15 barrels were required to make 116 videos with journalistic content visible. It is as if we had used at the same time almost twice the oil spilt on the coast of Piauí to mislead 2 million users about the environmental catastrophe and most of the oil spilt along the coast of Ceará for 5 million people (approximately) to be able to learn about the catastrophe.³ Hence, for every barrel used for misinformation, approximately 3.37 barrels of oil were burnt for journalistic content.

Consequently, the 19.63% of journalistic content in the corpus cannot be compared in terms of absolute numbers with the 80.37% of fake news and clickbait; nor can the environmental impact of the latter be minimized by comparing it with the absolute volume of emissions produced by viewing journalistic content. Rather, we shall argue that misinformation networks disseminating videos about the oil spill not only interfere with the reach of true content, but also amplify the environmental impact in at least three ways: (a) the energy cost of feeding algorithms as a strategy to boost regimes of visibility in recommendation rankings; (b) increased algorithmic resistance to the visibility of journalistic content as misinformation is considered important content and recommended accordingly; and (c) the directed circulation of political speech, undermining the common good, diluting the State’s responsibility and rendering public environmental policies less effective (fake politics).
We hypothesize that journalistic content is producing a legitimate carbon footprint as this type of content generates information and makes politically responsible action possible. Fake news, in contrast, amplifies the environmental disaster and generates politically biased action by private and non-republican interests. In this sense, we shall argue that fake news works as fake politics.

Feeding algorithms with carbon

As discussed above, although there were fewer videos with journalistic content than with misinformation, the former had more views than the latter, and the number of clickbait videos far exceeded the number of fake news videos. Furthermore, for every 3.3 barrels of oil used to disseminate journalistic content, one barrel is used to make fake content visible, to attract views and to encourage viewers to subscribe to the channel. However, if we only consider the immediate relationship between number of views and carbon footprint, we will exclude from the discussion the regimes of visibility performatized by recommendation algorithms and their active role in the production of content on YouTube and atmospheric pollution. Journalistic content not only required proportionally slightly over three times as much oil as misinformation chains to achieve only twice as many views as the latter, but produced, together with the misinformation videos, the YouTube recommendation algorithm’s regime of visibility for the environmental disaster.

According to Neal Mohan, YouTube’s chief product officer, more than 70% of time spent watching videos on YouTube is the outcome of recommendation services based on artificial intelligence algorithms (Solsman, 2018).
As a result of the classification rules used in these first recommendation models – like the importance of clicks, views, and subscriptions to channels – YouTube produced the phenomenon of videos used as clickbait as an alternative to boost profiles with attributes valued by the algorithms, increasing the visibility and reach of content posted. Although adjustments to the ranking algorithm have already been made (Covington et al., 2016), including a review of the parameters and implementation of sophisticated machine-learning techniques, clickbait remains an opportunistic practice that can be used to take advantage of regimes of visibility on the network.

Whereas the use of clickbait lends a negative connotation to the content circulated under these strategies (Molyneux et al., 2019) and even serves as a criterion to identify fake news (Bourgonje et al., 2017; Chen et al., 2015; Li, 2019; Pangrazio, 2018), clickbait as a practice is directly linked to the materiality of information on the YouTube platform. In other words, while on the one hand the decontextualized use of content as bait to attract engagement (likes, views, comments etc.) contributes to the increase in misinformation chains, on the other, clickbait is also a practice that amplifies the reach of journalistic and other content made available by channels, mobilizing its own affective economy (Gerlitz and Helmond, 2013; Munger and Munger, 2020). Hence, in addition to the environmental influence, we have, as a result of the performance of algorithms, the formation of an audience subjected to the affective construction of an imaginary about the Brazilian oil spills weighted in favor of private interests.

In our case, we estimate, referring only to clickbait emissions, that 1.01 MtCO2e were given off into the atmosphere so that the channels analyzed here could gain sufficient algorithmic importance and increase the visibility of videos transmitted on them.
This algorithmic mechanism exposes the toxic materialities of information in computer content-recommendation models. By making the tangibility of videos dependent upon classification of content consumption experiences, YouTube recommendation systems add a layer of energy consumption to the circulation of information without offering in exchange any guarantee that the environmental resources used to feed the machine-learning networks are ethically managed. In this regime of visibility, what makes journalistic content or fake news important is the extent to which each network can feed the algorithms. In both cases, the cost of making journalistic content invisible or promoting fake news is paid with carbon and fossil fuels, as well as with cognitive biasing or identity reinforcement.

The energy consumed by algorithmic resistance

In the three groups with the greatest presence in our corpus (traditional media, independent media, and YouTubers), the channels with less journalistic content tended to use proportionally more barrels to achieve the same number of views as the misinformation transmitted in the same group (Figure 5). Additionally, we found that the misinformation videos needed only one barrel to get 733,406.36 views, while legitimate content achieved only 450,579.73 views with the same amount of oil equivalent. In terms of energy, the misinformation videos were viewed 120.49 times for every kWh used, while the journalistic information was viewed only 74 times for every kWh (Figure 6).

We estimated the engagement rate by views (ERV) by dividing the number of interactions with a video (likes, comments, dislikes) by its number of views. According to this formula, the average ERV of misinformation chains is 14%, about 1% greater than the average for the corpus (Figure 6).
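The two ratios used in this comparison are simple to state in code. The sketch below is illustrative only: the function names are hypothetical and the sample video counts are invented, while the per-kWh values in the last lines are the ones quoted in the text.

```python
def engagement_rate_by_views(likes, comments, dislikes, views):
    """ERV as defined in the text: interactions divided by views."""
    if views == 0:
        return 0.0  # avoid division by zero for videos with no views
    return (likes + comments + dislikes) / views


def views_per_kwh(views, kwh):
    """Number of views obtained for each kWh of energy used."""
    return views / kwh


# Invented example: 140 interactions on 1,000 views gives an ERV of 14%.
example_erv = engagement_rate_by_views(likes=120, comments=15, dislikes=5, views=1000)

# Ratio of the per-kWh figures quoted in the text (120.49 vs 74 views per kWh).
relative_efficiency = 120.49 / 74
print(f"ERV: {example_erv:.0%}, relative efficiency: {relative_efficiency:.2f}x")
```

The ratio of about 1.6 between the two per-kWh figures matches the statement that misinformation needed around 1.6 times less oil than legitimate content for the same number of views.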
In other words, it is reasonable to suppose that the greater the amount of misinformation about specific topics (‘oleononordeste’, ‘vazamentopetroleo’, and ‘greenpixe’) that is circulating and achieving a good ERV, the more the ranking and recommendation algorithms tend to promote misinformation and require that videos with journalistic content have more likes and views to be visible. We also find that the Spearman’s coefficients for the correlations between energy consumption and the engagement variables ‘like’, ‘comment’, and ‘dislike’ were positive and corresponded to strong correlations (rs > 0.82) in both misinformation and journalistic information chains. In contrast, the coefficient for the correlation between energy consumption and ‘video duration’, while also positive, corresponded to a moderate correlation (rs = 0.69). Although journalistic videos were 3 minutes longer than misinformation ones, the correlation between energy consumption and the variable ‘duration’ in these specific groups was less strong (rs = 0.57) whereas the engagement variables and energy consumption correlations were slightly stronger (rs = 0.86). This would suggest that for the corpus analyzed here, engagement variables have a greater impact on energy consumption than the duration of the videos. Bearing in mind that the content-approval criterion used by recommendation algorithms depends on the engagement history (views, shares, likes, comments) of and level 898 Media, Culture & Society 43(5) Figure 5. Comparison of distribution of content (journalistic content, fake news, and clickbait) by source type in terms of absolute number of videos and total energy consumption in barrels of oil. Source: Authors. of interest (searches, subscriptions to channels etc.) 
in similar content, it is possible that in order to gain visibility, content that circulates less widely on a given network requires proportionally greater initial engagement than content that is already well known and has average engagement. This regime of (in)visibility was discussed by Taina Bucher, who analyzed the Facebook EdgeRank algorithm (Bucher, 2012); by Rieder et al. (2018), who investigated YouTube ranking algorithms; by Bitencourt (2019), who studied Amazon e-book recommendation systems; and by Morozov:

In other words, the PR industry just needs to spend enough money to sustain a video’s popularity for a short period; if they are lucky, YouTube will create the impression that the meme is spreading autonomously by recommending this video to its users. (Morozov, 2014: 157)

The ideas discussed here are in line with these arguments and underline the environmental impacts of these regimes of visibility. The hypothesis is that by teaching recommendation algorithms that false content is important, fake news and clickbait produce algorithmic resistance to legitimate news. By analogy with electrical resistance, this algorithmic resistance increases the energy used by journalistic content as it requires that this content have more likes and comments per video to achieve the same reach as videos that are already recognized by the recommendation algorithms. A significant increase in CO2e emissions would therefore be needed to process videos with journalistic content related to the oil spill as these were less common (19.63%) than misinformation videos (80.37%).

Figure 6. Comparison of proportional energy use (in barrels of oil and kWh) and number of views by content type. Source: Authors.
From this perspective, misinformation chains not only add an energy cost associated with feeding algorithms, but also pollute more by training recommendation systems to promote fake news and clickbait, creating resistance to the circulation of journalistic content and requiring that this type of content generate greater engagement (for which more processing is needed) in order to achieve the same level of visibility. In other words, although at first sight it would seem self-evident that the greatest energy consumption corresponds to the group with the greatest viewing time, on closer inspection we find that the relationship between visibility, reach and energy consumption varies according to the type of content transmitted. This may indicate that the content-recommendation algorithm imposes additional material energy barriers, optimizing the reach of common content and requiring more carbon from less common videos. The energy resistance produced by algorithmic regimes of visibility requires that any discussion on environmental responsibility in the context of the consumption of information on YouTube be extended to address this issue. Watching, liking or commenting on a video may not only restrict circulation of future videos, but also increase the energy cost of their materialization on the network. If we consider that clickbait practices are widespread in our corpus, it is reasonable to argue that misinformation chains could impose this additional cost, namely, the extra energy consumption required to achieve the engagement necessary to overcome the reduced visibility of content that is less widely viewed in certain networks. Liking content or attracting likes to it can be doubly polluting: in addition to the feeding of algorithms already discussed, computational barriers are erected that require more fossil fuel for the increased engagement needed to achieve visibility. 
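The Spearman coefficients reported in this section measure how closely the ranking of videos by one variable follows their ranking by another. The sketch below shows one way to compute such a coefficient in plain Python, by ranking both variables (tied values share their mean rank) and taking the Pearson correlation of the ranks. It is an illustration under stated assumptions, not the authors' procedure: the function names and the five-video sample are hypothetical and do not reproduce the corpus values.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical sample of five videos (illustrative values only).
likes = [10, 250, 40, 900, 75]
energy_kwh = [0.2, 3.1, 0.9, 12.0, 0.8]

rs_likes_energy = spearman(likes, energy_kwh)
print(f"rs(likes, energy) = {rs_likes_energy:.2f}")
```

Because the coefficient depends only on ranks, it is not dominated by a few videos with very large view or like counts, which suits the skewed distributions typical of platform engagement data.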
Fake news as fake politics

The misinformation network produces more systemic pollution in the form of devaluation of the political domain by directing the circulation of political speech in a non-republican way, undermining the common good. It thus adds yet another layer of pollution by reducing critical awareness of the role of the State, manipulating public opinion and diluting networks of accountability in the event of catastrophes. Misinformation chains constitute fake politics not in the sense of false politics, as they seek to act in the field of politics, where their action involves disclosure (veritas), but because this disclosure is based on false chains of reference. The political fake here, just like fake news, refers to a ‘masquerade’, a disguise. Fake news is fake politics because it sets in train the circulation of information without any basis in solid reference chains as a ‘political simulacrum’; a political simulacrum that exploits the algorithmic mediations of the network in order to identify groups more sensitive to specific ideologies, to confine the debate to within these ideological bubbles and to promote conflicting attitudes. Fake news acts as fake politics to the extent that it acts politically not so much by biasing the felicity conditions for the mode of politics (as it puts political speech in circulation), as by breaking the felicity conditions for the mode of disclosure of scientific or journalistic facts (reference chains, the Mode of Reference – REF) (Latour, 2013). The political action of fake news amplifies the reverberation of specific values within ideological bubbles, promoting antagonism of ideas based on simulacra of news and undermining the public sphere by the absence of a solid basis for the information disseminated in this way.
What we call fake politics is precisely this non-republican way of acting through information, through the algorithmically performative action of social network platforms to reach people or groups, polarizing the debate by circulating political speech built on false foundations and undermining the common good. As one of the material consequences of this mechanism, we would highlight the increase in energy consumption in data centers and the carbon footprints thus generated. As misinformation (fake news and clickbait) chains materialize by manipulating the logic of engagement and circulation of digital platforms, they not only affect the way public opinion is manipulated, but also directly affect the environment, with implications in terms of energy, economics and politics. Misinformation chains are thus doubly polluting: they not only generate a carbon footprint, but also undermine the common good by the use of algorithmic systems to datafy action chains geared toward the production and reinforcement of beliefs for political ends. In the case of the oil spill in Brazil, misinformation chains not only increase carbon consumption in data centers, but also seek to shape an irresponsible environmental policy that denies reality and is geared toward the interests of private groups that refuse to accept global warming and disagree with the efforts of conservation institutions. By using false rhetoric to disorient and manipulate public opinion, misinformation chains translate political ethics (circulation of political speech and actions based on false reference chains that affect the common good) into political aesthetics (affecting, producing reactions in groups with similar beliefs, sensitizing to produce actions aligned with the values circulating in bubbles in the network) and increase energy consumption for private purposes. 
Misinformation chains thus help to produce this fake politics effect, with practical consequences in terms of a distancing of the State not only from its political responsibilities in the case in question but also from its responsibilities for the environmental consequences of the widespread use of fake news.

Conclusion

In this article we have analyzed the materiality of misinformation and digital media from the perspective of new materialism. Unlike other authors, we do not focus on digital materialities only in terms of the environmental cost of the production and disposal of hardware (Gabrys, 2013, 2016; Gitelman, 2013; Hogan, 2015; Räsänen and Nyce, 2013) or aspects of labor exploitation, the growth in inequality, the lack of regulation in the digital industry and greenwashing practices (Brodie, 2020; Maxwell and Miller, 2012; Notley, 2019; Reading, 2014; Reading and Notley, 2018). Of the issues discussed in the literature that draws attention to the energy costs associated with the consumption, production and circulation of information on digital platforms (Cubitt, 2017; Parikka, 2012, 2015a, 2015b), we highlight the materialities of digital media by investigating the entangling of algorithmic mediation, communication practices on digital platforms, misinformation and the environment. We have shown how the content about the environmental catastrophe in the Northeast of Brazil in 2019 that circulated on YouTube consists mainly of fake news and opportunistic actions (clickbait) to attract likes and increase the algorithmic visibility of the source. As we saw, journalistic content used 11.15 barrels of oil (77.20% of total corpus emissions) while misinformation chains used 3.30 barrels (22.87% of total corpus emissions). However, this effect can be attributed to the resistance created by algorithmic performativity, which considers misinformation important content and recommends it accordingly.
Misinformation networks thus increased the energy required to feed algorithms as part of a strategy to increase visibility in the recommendation rankings. As mentioned in the article, the energy cost of achieving a given number of views was about 1.6 times lower for misinformation chains than for legitimate content, indicating interference by the platform’s algorithmic performativity. In addition to the increased carbon footprint due to the digital materiality of the platform’s algorithms, misinformation networks produce another type of toxicity: they undermine the common good, directing the circulation of political speech and creating affects that reinforce identity-based logics that are hardly republican. Diverting public debate toward chains that have no factual basis implies denying or misunderstanding the causes of the disaster and the environmental measures that should be implemented through public policies. For any information to be produced, energy must be consumed, and in the case studied here journalistic content generated a larger carbon footprint than misinformation chains. However, information that is of interest to the public and disseminated to promote the common good could be considered to have a plausible justification (although an effective change in the energy mix of the data centers of the companies responsible for the platforms would be required). Misinformation chains, in contrast, because they seek to destroy the common good and produce individual (personal and group) advantages, could be considered entropic.
Our material analysis of the use of YouTube videos suggests that there is a more generic law characterizing platformization, datafication, and algorithmic performativity (Lemos, 2020) in contemporary society: the carbon footprint generated by communicational practices within digital networks (the production, accessing, and sharing of information) is a function not of the content of the information but of the algorithmic performativity of the platform. The processes involved in building beliefs cannot be considered in isolation from the materialities and politics of digital media. Algorithmic performativity generates an additional energy cost because of the engagement practices needed to make up for the limited visibility of content that is less common in certain networks. In misinformation chains, experiences that produce information are both data-collection methods and content-visualization filters that reinforce identities of specific groups by datafication of affects through algorithmic filters. In stating this, we endeavor to show that misinformation not only relates to the public sphere, political polarization and the concept of truth, but is also a political, technological and environmental phenomenon that takes place through algorithmic mediation.

In order to understand the political and environmental problem posed by the contemporary media ecology, further investigations are required. In the introduction we highlighted two issues that are not always taken into account in discussions about misinformation, media, materialism and environmentalism. Firstly, there is a need to move beyond issues related to technological components and e-waste. As we have shown, environmental problems are also caused by the algorithmically mediated production, circulation and consumption of information. Secondly, misinformation and fake news should not be explored using only hermeneutic approaches.
This paper has shown how fake news and disinformation are material-discursive elements entangled with media infrastructure, algorithmic mediations, political regimes, social relations and the environment.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: The authors received financial support from CNPq Research grant (Productivity Fellowship PQ-1A – 307448/2018-5, Brazil) for the research and publication of this article. The authors declare that there is no conflict of interest.

ORCID iD

Elias Cunha Bitencourt https://orcid.org/0000-0001-7366-6469

Accessibility statement

Research data is available for download.

Notes

1. See https://cultura.estadao.com.br/noticias/televisao,assistir-a-netflix-tem-alto-preco-ambiental-dizem-especialistas,70003067942. For further details see https://theshiftproject.org/en/article/unsustainable-use-online-video/
2. See https://www.epa.gov/energy/greenhouse-gas-equivalencies-calculator
3. A total of 1.8 barrels are estimated to have been collected from the beaches of Piauí and 18 along the coast of Ceará. See: https://piaui.folha.uol.com.br/sobre-o-oleo-derramado/

References

Anderson ER (2020) The narrative that wasn’t: What passes for discourse in the age of Trump. Media, Culture and Society 42(2): 225–241. DOI: 10.1177/0163443719867302.
Bitencourt EC (2019) Os Livros Intermitentes: Um ensaio sobre as materialidades da representação algorítmica do livro na plataforma de autopublicação da Amazon. In Porto C and Santos E (Orgs.) O livro na cibercultura. São Paulo: Editora Universitária Leopoldianum, pp. 203–225. Available at: https://www.academia.edu/40929678/OS_LIVROS_INTERMITENTES_Um_ensaio_sobre_as_materialidades_da_representação_algorítmica_do_livro_na_Plataforma_de_Autopublicação_da_Amazon
Baudrillard J (1994) Simulacra and Simulation. Ann Arbor, MI: University of Michigan Press.
Bonneuil C and Fressoz J-B (2016) The Shock of the Anthropocene: The Earth, History and Us. London and New York: Verso Books.
Bourgonje P, Schneider JM and Rehm G (2017) From clickbait to fake news detection: An approach based on detecting the stance of headlines to articles. In: Proceedings of the 2017 EMNLP workshop on natural language processing meets journalism, Copenhagen, 2017, pp. 84–89. Stroudsburg, PA: Association for Computational Linguistics.
Brodie P (2020) Climate extraction and supply chains of data. Media, Culture and Society. Epub ahead of print March 4, 2020. DOI: 10.1177/0163443720904601.
Bucher T (2012) Want to be on the top? Algorithmic power and the threat of invisibility on Facebook. New Media & Society 14: 1164–1180. DOI: 10.1177/1461444812440159.
Chen Y, Conroy NJ and Rubin VL (2015) Misleading online content: Recognizing clickbait as ‘false news’. In: Proceedings of the ACM workshop on multimodal deception detection, Washington D.C., 2015, pp. 15–19. New York, NY: ACM. DOI: 10.1145/2823465.2823467.
Covington P, Adams J and Sargin E (2016) Deep neural networks for YouTube recommendations. In: Proceedings of the 10th ACM conference on recommender systems, 2016. DOI: 10.1145/2959100.2959190.
Creech B (2020) Fake news and the discursive construction of technology companies’ social power. Media, Culture and Society 42(6): 952–968. DOI: 10.1177/0163443719899801.
Cubitt S (2014) Decolonizing ecomedia. Cultural Politics 10(3): 275–286.
Cubitt S (2017) Finite Media: Environmental Implications of Digital Technologies. Durham and London: Duke University Press.
Cubitt S, Hassan R and Volkmer I (2011) Does cloud computing have a silver lining? Media, Culture and Society 33(1): 149–158. DOI: 10.1177/0163443710382974.
Danielle (2018) The carbon footprint of the internet. Available at: https://www.creditangel.co.uk/blog/consumption-and-carbon-footprint-of-the-internet (accessed 12 January 2020).
Fox NJ and Alldred P (2017) Sociology and the New Materialism. Theory, Research, Action. London: SAGE Publications.
G1 (2019) Salles afirma que Greenpeace ‘tem que se explicar’ por ter navio perto do litoral quando manchas de óleo surgiram; ONG diz que ministro ataca com ‘mentira’. G1 Notícias, 24 October. Available at: https://g1.globo.com/se/sergipe/noticia/2019/10/24/salles-afirma-quegreenpeace-tem-que-se-explicar-por-ter-navio-perto-do-litoral-brasileiro-quando-manchasde-oleo-surgiram-no-nordeste-ong-diz-que-ministro-ataca-com-mentira.ghtml.
Gabrys J (2013) Digital Rubbish: A Natural History of Electronics. Ann Arbor, MI: University of Michigan Press.
Gabrys J (2016) Program Earth: Environmental Sensing Technology and the Making of a Computational Planet. Minneapolis, MN: University of Minnesota Press.
Gamble CN, Hanan JS and Nail T (2019) What is new materialism? Angelaki - Journal of the Theoretical Humanities 24(6): 111–134. DOI: 10.1080/0969725X.2019.1684704.
Gerbaudo P (2018) Social media and populism: An elective affinity? Media, Culture and Society 40(5): 745–753. DOI: 10.1177/0163443718772192.
Gerlitz C and Helmond A (2013) The like economy: Social buttons and the data-intensive web. New Media & Society 15: 1–29. DOI: 10.1177/1461444812472322.
Gitelman L (2013) Raw Data Is an Oxymoron. Cambridge, MA: MIT Press.
Google (2009) Powering a Google search. Available at: https://googleblog.blogspot.com/2009/01/powering-google-search.html (accessed 10 January 2020).
Hamilton C, Gemenne F and Bonneuil C (2015) The Anthropocene and the Global Environmental Crisis: Rethinking Modernity in a New Epoch. London and New York: Routledge.
Harvey L (2019) Is the cloud killing the environment? The Face, November. Available at: https://theface.com/life/cloud-technology-data-environment-carbon-footprint.
Hogan M (2015) Data flows and water woes: The Utah Data Center. Big Data & Society 2(2): 1–12. DOI: 10.1177/2053951715592429.
Hogan M (2015) Facebook data storage centers as the archive’s underbelly. Television and New Media 16(1): 3–18. DOI: 10.1177/1527476413509415.
ISO TISO (2006) ISO 14040:2006 environmental management – Life cycle assessment – Principles and framework.
ISO TISO (2012) Carbon footprint of products – Requirements and guidelines for quantification and communication (Draft International Standard ISO/DIS 14067).
James W (2000) From the meaning of truth (1909). In: Pragmatism and Other Writings. London: Penguin Books.
Karaca A (2019) News Readers’ Perception of Clickbait. Istanbul: Kadir Has University.
Latour B (2013) An Inquiry Into Modes of Existence. An Anthropology of the Moderns, 1st ed. London: Harvard University Press.
Latour B (2017) Why Gaia is not a god of totality. Theory, Culture & Society 34(2–3): 61–81.
Lemos ALM (2020) Plataformização, dataficação e performatividade algorítmica (PDPA): Desafios atuais da cibercultura. In Prata N and Pessoa SC (Orgs.) Fluxos Comunicacionais e Crise da Democracia. São Paulo: Intercom, pp. 117–126.
Lepawsky J (2018) Reassembling Rubbish: Worlding Electronic Waste. Cambridge, MA: MIT Press.
Li Q (2019) Clickbait and Emotional Language in Fake News. Preprint. Austin, Texas, pp. 2–11. Available at: https://www.ischool.utexas.edu/~ml/papers/li2019-thesis.pdf.
Liboiron M (2016) Redefining pollution and action: The matter of plastics. Journal of Material Culture 21(1): 87–110.
Liboiron M, Tironi M and Calvillo N (2018) Toxic politics: Acting in a permanently polluted world. Social Studies of Science 48(3): 331–349.
Maxwell R and Miller T (2012) Greening the Media. New York: Oxford University Press. DOI: 10.1017/CBO9781107415324.004.
Mayer-Schönberger V and Cukier K (2013) Big Data: A Revolution That Will Transform How We Live, Work, and Think. Kindle. Boston, MA, New York, NY: Houghton Mifflin Harcourt.
Mejias UA and Vokuev NE (2017) Disinformation and the media: The case of Russia and Ukraine. Media, Culture and Society 39(7): 1027–1042. DOI: 10.1177/0163443716686672.
Ministério do Meio Ambiente (2019) Governo realiza todas as ações para conter óleo na praia.
Molyneux L and Coddington M (2019) Aggregation, clickbait and their effect on perceptions of journalistic credibility and quality. Journalism Practice 14: 429–446. DOI: 10.1080/17512786.2019.1628658.
Morozov E (2014) To Save Everything, Click Here: The Folly of Technological Solutionism. New York, NY: PublicAffairs.
Munger K, Luca M, Nagler J, et al. (2018) The Effect of Clickbait. New York. Available at: https://csdp.princeton.edu/sites/csdp/files/media/munger_clickbait_10182018.pdf.
Munger K (2020) All the news that’s fit to click: The economics of clickbait media. Political Communication 37(3): 376–397. DOI: 10.1080/10584609.2019.1687626.
Notley T (2019) The environmental costs of the global digital economy in Asia and the urgent need for better policy. Media International Australia 173(1): 125–141. DOI: 10.1177/1329878X19844022.
Pangrazio L (2018) What’s new about ‘fake news’?: Critical digital literacies in an era of fake news, post-truth and clickbait. Páginas de educación 11(1): 6–22. DOI: 10.22235/pe.v11i1.1551.
Parikka J (2012) New materialism as media theory: Medianatures and dirty matter. Communication and Critical/Cultural Studies 9(1): 95–100.
Parikka J (2015a) A Geology of Media. Minneapolis, MN: University of Minnesota Press.
Parikka J (2015b) Postscript: Of disappearances and the ontology of media. In Ikoniadou E and Wilson S (eds.) Media After Kittler. London: Rowman & Littlefield, pp. 177–190.
Preist C, Schien D and Shabajee P (2019) Evaluating sustainable interaction design of digital services: The case of YouTube. In: Conference on human factors in computing systems – proceedings. pp. 1–12. DOI: 10.1145/3290605.3300627.
Räsänen M and Nyce JM (2013) The raw is cooked: Data in intelligence practice. Science, Technology, & Human Values 38(5): 655–677.
Reading A (2014) Seeing red: A political economy of digital memory. Media, Culture and Society 36(6): 748–760. DOI: 10.1177/0163443714532980.
Reading A and Notley T (2018) Globital memory capital: Theorizing digital memory economies. In: Hoskins A (ed.) Digital Memory Studies. London: Routledge, pp. 234–249.
Rieder B, Matamoros-Fernández A and Coromina Ò (2018) From ranking algorithms to ‘ranking cultures’: Investigating the modulation of visibility in YouTube search results. Convergence 24(1): 50–68. DOI: 10.1177/1354856517736982.
Solsman JE (2018) YouTube’s AI is the puppet master over most of what you watch.
Souza F and Fellet J (2019) Brumadinho pode ser 2° maior desastre industrial do século e maior acidente de trabalho do Brasil. Revista Época Negócios. Available at: https://epocanegocios.globo.com/Brasil/noticia/2019/01/brumadinho-pode-ser-2-maior-desastre-industrial-doseculo-e-maior-acidente-de-trabalho-do-brasil.html (accessed 5 January 2020).
Taffel S (2016) Technofossils of the Anthropocene: Media, geology, and plastics. Cultural Politics 12(3): 355–375.
Thylstrup NB (2019) Data out of place: Toxic traces and the politics of recycling. Big Data & Society 6(2): 2053951719875479.
VALE (2015) A mineração faz parte do nosso dia a dia.
van der Linden S, Panagopoulos C and Roozenbeek J (2020) You are fake news: Political bias in perceptions of fake news. Media, Culture and Society 42(3): 460–470. DOI: 10.1177/0163443720906992.
Van Dijck J, Poell T and De Waal M (2018) The Platform Society. New York, NY: Oxford University Press.
Veja (2019) Bolsonaro volta a falar em vazamento criminoso no Nordeste e cita Maduro. Veja, 23 October. Available at: https://veja.abril.com.br/brasil/bolsonaro-volta-a-falar-em-vazamento-criminoso-no-nordeste-e-cita-maduro/.
Wardle C and Derakhshan H (2017) Information disorder: Toward an interdisciplinary framework for research and policy making. Report to the Council of Europe: 108. Available at: https://rm.coe.int/information-disorder-toward-an-interdisciplinary-framework-for-researc/168076277c.
Zuboff S (2015) Big other: Surveillance capitalism and the prospects of an information civilization. Journal of Information Technology 30(1): 75–89. DOI: 10.1057/jit.2015.5.
Zuboff S (2020) The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. New York: Public Affairs.