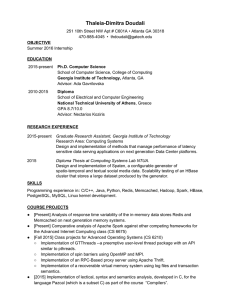

Proceedings of the Third International Conference on Intelligent Sustainable Systems [ICISS 2020]
IEEE Xplore Part Number: CFP20M19-ART; ISBN: 978-1-7281-7089-3
2020 3rd International Conference on Intelligent Sustainable Systems (ICISS) | 978-1-7281-7089-3/20/$31.00 ©2020 IEEE | DOI: 10.1109/ICISS49785.2020.9315863

A Study of Big Data Analytics using Apache Spark with Python and Scala

Yogesh Kumar Gupta (1), Assistant Professor, Department of Computer Science, Banasthali Vidyapith, gyogesh@banasthali.in
Surbhi Kumari (2), M.Tech (CSE) Research Scholar, Banasthali Vidyapith, surbhiroy25july@gmail.com

Abstract: Humans generate data every day through sources such as Instagram, Facebook, Twitter, and Google, at a rate of about 2.5 quintillion bytes per day, with high volume, high velocity, and high variety. Traditional approaches are inefficient and time-consuming when they handle data of this volume and velocity. Apache Spark, an open-source in-memory cluster computing system, is used for fast processing. This paper presents a brief study of Big Data Analytics and Apache Spark. It covers the characteristics (7V's) of big data, the tools and application areas of big data analytics, and the Apache Spark ecosystem, including its components, libraries, and cluster managers, that is, the deployment modes of Apache Spark. It also presents a comparative study of the Python and Scala programming languages across several parameters in the context of Apache Spark. This comparison helps identify which of the two languages is suitable for Apache Spark. The result is that both Python and Scala are suitable for Apache Spark; however, the choice of language depends on the features that best suit the needs of the project, since each language has its own advantages and disadvantages. The main purpose of this paper is to make it easier for programmers to select a programming language for Apache Spark based on their project.

Keywords: Big Data, Apache Spark, Cluster Computing, Python, Scala.

I. INTRODUCTION

Big Data is a large set of data, in structured, semi-structured, or unstructured form, gathered from a variety of sources such as social media, cell phones, healthcare, and e-commerce. John Mashey is often credited with coining the term Big Data in the 1990s, and it came into wide use in the 2000s. Big data analytics relies on tools and techniques such as Apache Hadoop, MapReduce, Apache Spark, NoSQL databases, and Apache Hive to process and manage massive amounts of data. The main purpose of collecting and processing huge amounts of data is to help organizations understand their data better and to find the information that matters most for future business decisions. There are three types of Big Data.

Structured Data:- Data that is already stored in databases in an ordered manner is called structured data. Roughly 20% of data today is structured; it is generated by sensors, weblogs, machines, humans, and so on. Examples of structured data are DBMS tables, MySQL databases, and spreadsheets.

Semi-structured Data:- Data that mixes structured and unstructured elements is called semi-structured data, which makes it difficult for developers to categorize. Semi-structured data can be handled through the Hadoop system. Examples are JSON documents, BibTeX files, CSV files, and XML.

Unstructured Data:- Unstructured data has no fixed format and cannot be stored in row-column form. It is typically handled through systems such as Hadoop. Roughly 80% of the world's data is unstructured. Examples are images, audio, video, text, PDF files, media posts, Word documents, log files, and e-mail data.
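To make the three categories concrete, the following is a minimal sketch in plain Python (standard library only, no Spark). The table, documents, and text are hypothetical sample data invented for illustration; the point is only how much schema each category gives a program to work with.

```python
import json
import sqlite3

def structured_example():
    """Structured: a fixed schema, so the data can be queried by column."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE patients (id INTEGER, name TEXT, age INTEGER)")
    conn.executemany(
        "INSERT INTO patients VALUES (?, ?, ?)",
        [(1, "Asha", 34), (2, "Ravi", 29)],  # hypothetical rows
    )
    rows = conn.execute("SELECT name FROM patients WHERE age > 30").fetchall()
    conn.close()
    return [name for (name,) in rows]

def semi_structured_example():
    """Semi-structured: self-describing documents whose fields may be absent."""
    docs = [
        '{"id": 3, "name": "Meera", "address": {"city": "Jaipur"}}',
        '{"id": 4, "name": "Karan"}',  # no address field in this document
    ]
    parsed = [json.loads(d) for d in docs]
    return [d.get("address", {}).get("city") for d in parsed]

def unstructured_example():
    """Unstructured: no schema, so only generic text operations apply."""
    note = "Patient reports mild headache since Monday; no fever."
    return "fever" in note.lower()

print(structured_example())
print(semi_structured_example())
print(unstructured_example())
```

The structured query relies on column names that every row shares, the semi-structured access has to tolerate a missing field, and the unstructured case falls back to a plain substring search.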
Hadoop is a popular open-source, scalable, fault-tolerant platform for large-scale distributed batch processing on clusters of commodity servers. It was designed to tolerate the failures that commonly occur during execution in a distributed system. However, its performance compares poorly with that of other technologies because data is read from disk for processing. Because Hadoop provides fault tolerance, organizations do not need expensive products to process large data sets. Hadoop has two key building blocks: the Hadoop Distributed File System (HDFS), which can accommodate large datasets, and a MapReduce engine, which computes results in batches.

MapReduce is a distributed programming model for processing massive datasets across a large cluster. It has two functions, Map and Reduce, which help utilize the available resources for parallel processing of large data. It is used for batch processing over persistent storage. However, MapReduce was not developed for real-time processing.

Apache Spark is a powerful, flexible, and user-friendly open-source parallel processing platform that is well suited to big data analytics. It can run on vast cloud clusters, on a small cluster, or even locally on a student's computer with smaller datasets. Providers such as AWS and Google Cloud support it. With RDDs (resilient distributed datasets), it can quickly perform processing tasks on very large data sets held in memory. The Apache Spark framework consists of several major components, which include Spark Core and the upper-level libraries Spark SQL, Spark Streaming, Spark MLlib, GraphX, and SparkR. Together they support a wide range of workloads, including batch processing, machine learning, interactive queries, and stream processing. Apache Spark provides language-integrated APIs in SQL, Scala, Java, Python, and R. The major functions are carried out by Spark Core, and the other components are tightly integrated with it, which provides one unified environment. Spark is especially efficient for iterative and interactive applications, and this is its main advantage over the other technologies.

A. Characteristics of Big Data

Fig. 1. 7Vs of Big Data

Volume:- Big data implies enormous volumes (terabytes, petabytes) of data. Data is created by various sources, including the growth of the internet, social media, healthcare, the Internet of Things, e-commerce, and other systems. Storing it requires large capacity, so the volume of data is a big challenge.

Velocity:- Velocity refers to how fast data is generated by multiple sources, such as social media, healthcare, and retail, and to the low latency needed to handle it. An estimated 1.7 MB of data was generated every second by every person during 2020.

Variety:- Variety refers to the various types of data, which can be structured, unstructured, or semi-structured, and to its different forms, for example text, e-mails, tweets, log files, user reviews, photos, audio, video, and sensor data. Example:- A data set of high variety would be the CCTV audio and video files produced at different places in a city.

Veracity:- Veracity refers to noise and abnormality in big data. Not all data will be 100% correct when dealing with high volume, velocity, and variety, so there can be dirty data. Anyone who wants to extract meaningful insights from data needs to clean it to minimize noise. The benefits of big data applications appear only when the data is meaningful and reliable. Data cleansing is therefore necessary so that inaccurate and unreliable information can be filtered out. Example:- A data set of high veracity would come from a medical procedure or trial.

Validity:- Validity refers to the accuracy and correctness of the data used to obtain results in the form of information. It is very important for making decisions.

Volatility:- Volatility refers to how long stored data remains useful, since data that is valid right now might not be valid a few minutes or a few days later.

Value:- Value is the most important of the 7V's of big data. It is not just the amount of data that individuals store or process. Rather, it is the amount of precious, accurate, and trustworthy information that needs to be stored, processed, and analyzed to find insights.

B. Challenges in Big data analytics

Many computational methods work well with small data but do not work well for data generated at high volume and velocity. Traditional tools are not efficient at processing big data, and the complexity of processing it is very high. The following challenges arise when big data is analyzed.

1) Data Heterogeneity and Incompleteness. A major problem for researchers is how to include all the data from various sources in order to discover patterns and trends. Unstructured and semi-structured data remain difficult to analyze, so data must be carefully structured before analysis. Ontology matching is a common semantics-based approach that detects the similarities among the ontologies of multiple sources. Even after data cleaning and error correction, some incompleteness and errors can persist in datasets. They must be handled during data processing, and doing this in the right way is a challenge.

2) Scalability and Storage. In data analytics, the management and analysis of massive volumes of data is a challenge. Storage systems are not adequately capable of storing rapidly growing data sets. Faster processors can ease such problems, but a processing system that will also meet future needs still has to be developed.

3) Security and Privacy. A serious issue arises from the search for meaningful information in large and rapidly generated datasets. Researchers have many methods and techniques to access data from any data source to discover trends, and in doing so they may stop paying attention to an individual's security and privacy. The issue is most acute when data is shared and aggregated across dynamic or distributed computing systems. Organizations use diverse de-identification approaches to maintain privacy and security.

4) Human Collaboration. Despite the enormous advancements made in computational analysis, there are still many patterns that humans can easily identify but that computer algorithms find difficult to detect. A big data analysis framework must support input from diverse human experts and the sharing of results. These experts may be separated in space and time when it is too costly to gather an entire team in one room. The data system must accept this distributed expert input and support the experts' collaboration.
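As one illustration of the de-identification approaches mentioned under Security and Privacy above, the sketch below replaces direct identifiers with a keyed hash (HMAC-SHA256) from the Python standard library. The source does not say which approach organizations use, so this technique, the field names, the sample record, and the key are all assumptions chosen for illustration.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would be stored apart from the data.
SECRET_KEY = b"example-key-not-for-production"

def pseudonymize(identifier, key=SECRET_KEY):
    """Replace a direct identifier with its keyed hash (HMAC-SHA256)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def de_identify(record, id_fields=("name", "email")):
    """Return a copy of the record with the listed identifier fields hashed."""
    cleaned = dict(record)  # copy, so the caller's record is left unchanged
    for field in id_fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(str(cleaned[field]))
    return cleaned

# Hypothetical record, invented for this example.
record = {"name": "Asha Verma", "email": "asha@example.com", "diagnosis": "flu"}
safe = de_identify(record)
print(safe["diagnosis"])               # analytic fields are kept as they are
print(safe["name"] != record["name"])  # identifier fields are replaced
```

The same input always maps to the same pseudonym, so records can still be joined across datasets. Keyed hashing alone does not guarantee anonymity, because the remaining fields may still identify a person in combination.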
Other challenges include data replication, data locality, combining multiple data sets, data quality, fault tolerance of big data applications, data availability, data processing, and data management.

C. Tools of Big Data Analytics

1) Apache Hadoop:- Apache Hadoop is one of the most prominent big data frameworks and is written in Java. Hadoop was originally designed to gather data continuously from multiple sources, regardless of the type of data, and to store it across a distributed environment. It can only perform batch processing.

2) MapReduce:- MapReduce is a programming model that processes and analyzes huge data sets. Google introduced it in December 2004. It is used for batch processing over persistent storage and was not built for real-time processing.

3) Apache Hive:- Apache Hive is a data warehousing component, originally developed at Facebook, that provides a SQL-like query language for reading, writing, and managing large datasets in a distributed environment.

4) Apache Pig:- Apache Pig is a high-level data flow platform for executing Hadoop MapReduce programs. It was originally developed by Yahoo in 2006. All data manipulation operations in Hadoop can be performed with it.

5) Apache HBase:- Apache HBase is a distributed column-oriented database that runs on top of the HDFS file system. It is a NoSQL data store. It resembles a database management system, but it provides quick random access to huge amounts of structured data.

6) Apache Storm:- Apache Storm is an open-source distributed real-time computation system. It is used wherever large volumes of streaming data are generated. Twitter uses it for real-time data analysis.

7) Apache Cassandra:- Apache Cassandra is a free, open-source NoSQL database that was created by Facebook. It is popular and robust enough to handle huge amounts of data.

8) Apache Spark:- Apache Spark is one of the most prominent and highly valued big data frameworks. It was developed at the University of California, Berkeley, and is written in Scala. Apache Spark is fast because it processes data in memory. It performs real-time as well as batch processing on huge amounts of data. It requires a lot of memory, but it works with disks of ordinary speed and capacity.

D. Application areas of Big Data Analytics

Healthcare:- Big data analytics uses a patient's medical history to determine how likely the patient is to have health problems. It is also used in healthcare to minimize costs, predict epidemics, and prevent avoidable diseases. The Electronic Health Record is one of the most popular applications of big data in the healthcare industry.

Banking:- Banks use big data analytics to identify fraudulent activity in transactions. Because the analytics system can stop fraud before it occurs, the bank improves its profitability.

Media and Entertainment:- The entertainment and media industries use big data analytics to understand what content, products, and services people want.

Telecom:- Telecom companies are among the largest contributors to big data. They use analytics to improve services and to route traffic more efficiently. The analytics system is also used to examine call detail records and recognize fraudulent behavior, which helps them take action immediately.

Government:- The Indian government has used big data analytics to help law enforcement and to estimate trade in the country. With big data analytics, government processes gain efficiency in terms of expenditure, productivity, and innovation.

Education:- The education sector is gradually adopting big data analytics. Big data-powered technologies have improved learning tools. Analytics is also used to enhance and develop existing courses according to industry requirements.

Retail:- Retailers such as Amazon, Flipkart, and Walmart use big data analytics to optimize their business, both in e-commerce and in stores.

E. Overview of Apache Spark Technology

Apache Spark is an open-source, distributed, in-memory cluster computing framework designed to provide faster and easier-to-use analytics than Hadoop MapReduce. The AMPLab at UC Berkeley designed Apache Spark in 2009, first released it as open source in March 2010, and donated it to the Apache Software Foundation in June 2013. This open-source framework stands out for its ability to process large volumes of data. Spark can be up to 100 times faster than Hadoop MapReduce because it avoids the time spent moving data to and from disk: the processing is done in memory. It supports stream processing, also known as real-time processing, which involves continuous input and output of data, and it is suitable both for trivial operations and for massive data processing on large clusters. Organizations in healthcare, banking, telecom, and e-commerce, as well as major technology companies such as Apple, Facebook, IBM, and Microsoft, use Apache Spark to improve their business insights. These companies collect terabytes of data from various sources and process it to enhance their services to consumers. The Apache Spark ecosystem has various components, including Spark Core and upper-level libraries such as Spark SQL, Spark Streaming, Spark MLlib, GraphX, and SparkR, which are built on top of Spark Core.
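The advantage of in-memory processing for iterative work can be sketched without Spark at all. The plain-Python example below is an assumption-laden toy, not a benchmark and not Spark's implementation: the file name, the `DiskSource` class, and the workload are hypothetical. It runs the same multi-pass computation twice, once re-reading the input from disk on every pass (the disk-based pattern) and once reading it a single time and keeping it cached in memory.

```python
import os
import tempfile

def write_dataset(path, n=1000):
    """Write the integers 1..n to a text file, one per line."""
    with open(path, "w") as f:
        for i in range(1, n + 1):
            f.write(f"{i}\n")

class DiskSource:
    """Hypothetical input source that counts how often the file is read."""
    def __init__(self, path):
        self.path = path
        self.reads = 0

    def load(self):
        self.reads += 1
        with open(self.path) as f:
            return [int(line) for line in f]

def iterate_from_disk(source, iterations):
    """Disk-based style: every pass re-reads and re-parses the input."""
    total = 0
    for k in range(1, iterations + 1):
        total += sum(x * k for x in source.load())
    return total

def iterate_in_memory(source, iterations):
    """In-memory style: read once, reuse the cached data on every pass."""
    cached = source.load()
    total = 0
    for k in range(1, iterations + 1):
        total += sum(x * k for x in cached)
    return total

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "numbers.txt")
    write_dataset(path)
    disk, mem = DiskSource(path), DiskSource(path)
    result_disk = iterate_from_disk(disk, iterations=5)
    result_mem = iterate_in_memory(mem, iterations=5)

print(result_disk == result_mem)  # both strategies compute the same answer
print(disk.reads, mem.reads)      # number of times each one read the file
```

Both strategies return the same result, but the number of disk reads grows with the number of iterations only in the first one. Iterative workloads such as machine learning repeat many passes over the same data, which is why caching matters most there.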
Spark Core works with several cluster managers, namely Standalone, Hadoop YARN, Mesos, and Kubernetes.

Fig. 2. Apache Spark Ecosystem

a) Spark Core:- Spark Core is the main component of Apache Spark, and all Spark processes are handled by it. Libraries such as Spark SQL, Spark Streaming, Spark MLlib, and GraphX are built on top of Spark Core. It provides RDDs (resilient distributed datasets), which help execute Spark's libraries in a distributed environment.

b) Spark SQL:- Spark SQL is a data processing framework in Apache Spark that is built on top of Spark Core. Both structured and semi-structured data can be accessed via Spark SQL. It can read data in different formats such as text, CSV, JSON, and Avro. It supports powerful, interactive, analytical applications over both streaming and historical data.

c) Spark Streaming:- The Spark Streaming component is built on top of Spark Core and is used to process real-time, live data. It also allows batch and streaming processing of data to be combined in the same application.

d) Spark MLlib:- MLlib is a package of Apache Spark that provides multiple types of machine learning algorithms (classification, regression, and clustering) on top of Spark. It processes large datasets to recognize patterns and make decisions. Machine learning algorithms typically run for many iterations, adapting until they reach the desired objective.

e) GraphX:- GraphX is the Apache Spark module for distributed computing on graph data structures. A graph represents a network of entities, such as a social network. GraphX provides an API modeled on Pregel, the graph-processing system that Google described in 2010.

f) SparkR:- SparkR is a module of Apache Spark that provides a lightweight front end to Spark from R. It allows structured data processing and ML tasks to run on a cluster. R itself was not designed to manage datasets that are too large to fit on a single machine.

The cluster manager manages a cluster of computers and the CPU, memory, storage, ports, and other resources available on the cluster's nodes. Spark supports several cluster managers, including Standalone, YARN, Mesos, and Kubernetes, each of which provides a script that can be used to deploy a Spark application. The following deployment modes are currently available.

1. Standalone:- Standalone means that no external scheduler is needed. The Spark standalone cluster manager provides everything required to start Apache Spark, and it can be set up quickly with few dependencies or environmental considerations. It is responsible for managing the hardware and memory of the nodes on which Spark applications run. It manages the Spark components and provides limited functionality. This cluster manager can run on Linux, Mac, or Windows.

2. Hadoop YARN:- YARN (Yet Another Resource Negotiator) is a generic open-source cluster manager that enables Spark applications to share cluster resources with Hadoop MapReduce applications. It provides the cluster management, job scheduling, and monitoring capabilities that were associated with the JobTracker component in earlier versions of Hadoop. YARN supports both client and cluster mode deployment of a Spark application. It can run on Linux or Windows.

3. Apache Mesos:- Apache Mesos is also an open-source cluster manager that is highly scalable to
Proceedings of the Third International Conference on Intelligent Sustainable Systems [ICISS 2020] IEEE Xplore Part Number: CFP20M19-ART; ISBN: 978-1-7281-7089-3 4. thousands of nodes. It is a master slave-based system and has fault-tolerant. For a cluster of machines, it can be known as an operating system kernel. It pools computing resources together on a cluster of machines and allows those resources to be spread through different applications. Mesos is developed to support a diversity of distributed computing applications that can be share both static and dynamic cluster resources. Some organizations such as Twitter, Xogito, Media Crossing, etc are used Apache Mesos and can be run it on Linux or Mac operating systems. Kubernetes:- Spark runs on clusters that are organized throKubernetes. Due to the open-source container management platform, it has been ingested to spark. It comes up with Google in 2014. Kubernetes brings with its advantages as feasibility and stability. So can run full spark cluster on it. Kubernetes is a portable and cost-effective platform that comes with self-healing abilities. It is developed for managing the complex distributed system without invalidating of containers empowers . model of big data performs some operation like calculating average speed rate, code necessity, etc, with the spark. T his application was conducted by data processing techniques. T hus, applied it to the healthcare system. [4] [5] Ajaysinh, R. P., & Somani, H. (2016) Salwan, P., & Maan, V. K. (2020) Apache Spark, and Machine Learning Algorithms Apache Spark II. LIT ERAT URE REVIEW Table 1. Literature Survey Sr. No. [1] [2] [3] Author Name Omar, H. K., & Jumaa, A. K(2019) Van-Dai T a et al. (2016) Keerti, Singh, K., & Dhawan, S. 
(2016) Algorithms/ Techniques Apache Spark tool, Mllib library with Python and Scala Apache Spark tool, Steaming API, Machine Learning and data mining techniques Hadoop MapReduce, Apache Spark O bservation T he authors presented a comparison between Java And Scala for evaluating time performance in Apache Spark Mllib .also explain tools, APIs, programming language, and Spark machine learning libraries in Apache Spark. Furthermore discovered the advantages of data loading and accessing from stored sources like Hadoop, HDFS, Cassandra, HBase, etc. T he authors concluded that the performance of Scala is much better than Java performance. In this paper, creat ed a general architecture using the Spark streaming method that can implement in the healthcare system in big data analytics. Also, explain how can be enhanced efficiency through machine learning and data mining techniques. Researchers are focusing on the big data application model that can be used in the real-time system, social network area, and in the healthcare system. T his paper gave an introduction to MapReduce, Hadoop, and Spark. Also, Spark is compared with MapReduce. T he three-layered [6] [7] Bhattacha rya A. & Bhatnagar , S. (2016) Salloum, S., Dautov, R., Chen, X., Peng, P. X., & Huang, J. Z. (2016) 978-1-7281-7089-3/20/$31.00 ©2020 IEEE Hadoop Map Reduce And Spark tools Apache Spark T he authors have implemented the healthcare model using different analysis and prediction techniques with machine learning algorithms for better predictions. T he work in this paper is focusing on the e-governance system that is built using an apache spark for analyzing government -collected data. 
authors gave a brief explanation of the architecture of apachespark, including core layer, ecosystem layer, resource management, and methods that are used in a spark government department is generated data with high volume so cannot be managed by the traditional database management system, thus built a system with more efficiency using big data analytics techniques. .furthermore resolved main issues of traditional database management systems like speed, mixed typed datasets, accuracy, etc. T he authors explained the concept of big data, and Apache Spark, firstly introduces big data and a very important part of big data is V’s. Moreover, big data analytics, security issues of big data analytics, and a variety of tools that are available in the market like Hadoop MapReduce, Apache Spark are also explained. Furthermore, presented a comparison between Hadoop’s MapReduce and Apache Spark on some features such as memory, competitive product. In this paper, the author is focusing on the basic components and unique features of apache spark to big data analytics. With the help of apache-spark, some Ml pipelines API and distinct utilities are produced for designing and implementing. T he authors illustrated how to increase the popularity of apache spark technology to the research field in big data analytics. 475 Authorized licensed use limited to: Carleton University. Downloaded on June 02,2021 at 03:18:18 UTC from IEEE Xplore. Restrictions apply. Proceedings of the Third International Conference on Intelligent Sustainable Systems [ICISS 2020] IEEE Xplore Part Number: CFP20M19-ART; ISBN: 978-1-7281-7089-3 [8] [9] [10] [11] [12] [13] [14] Hongyong Yu & Deshuai Wang (2012) Hussain et al. (2016) Shaikh, E., Mohiuddi n, I., Alufaisan, Y., & Nahvi, I. (2019) M.U. Bokhari et al. MapReduce, Apache Hive Apache Spark and Hadoop MapReduce Apache Spark Apache Spark, HDFS and Machine Learning U. R. 
Pol (2016) Apache Spark, Hadoop MapReduce Sunil Kumar& Maninder Singh (2019] Apache Hadoop Aziz et al. (2018) Apache Spark, MapReduce T he authors have explained how to improve the privacy and security of data in big data analytics. T hey discussed both MapReduce and Apache Hive frameworks with usages, ease of use, capability, and processing. T he authors focused on a learning analytics model that can predict future and trends from educational organizations. Furthermore, explored usages, methodologies (Hadoop MapReduce) of big data and also recognized some issues such as data privacy, capacity, processing, and analyzing of data. T he authors concluded that the prediction model helps education authorities for learning activities and patterns that are in the trends. T he authors discussed how to perform in-memory computing processes in apache spark and spark compared with other tools for fast computing. Furthermore, explained batch processing and stream processing with capabilities. And also discussed the multithreading and concurrency capabilities of Apache Spark. . T he authors implemented a three-layered model of architecture for storage and data analysis. T here are used HDFS for data storage, and machine learning algorithms for data analysis. T he author gave a brief explanation of big data and its analytics using Apache Spark. furthermore, explain how apache spark overcomes Hadoop which is a good framework for data processing and also open-source distributed computing for reliability and scalability in big data analytics T hey have discussed the impact of big data on the healthcare system and how to manage different tools that are available in the Hadoop ecosystem. Moreover, they have also explored the conceptual architecture of big data analytics for healthcare systems. T he authors described how to process real-time data using Apache Spark and Hadoop tools in big data analytics. 
And also compared Apache Spark and Hadoop for fast computing [15] [16] [1723] [24] Amol Bansod (2015) Apache Spark, Hadoop MapReduce Shoro, A. G., & Soomro, T . R. (2015) Apache Spark And T witter Stream API Gupta, Y., et. al. Apache Pig Shrutika Dhoka & R.A. Kudale (2016) Apache Spark T he author described the advantage of the Apache Spark framework for data processing in HDFS and also compared it with Hadoop MapReduce, and with other data processing frameworks In this paper, the author discussed the concept of big data, characteristics of big data (V’s), and big data analytics tools that are Hive, Pig, Zebra, HBase, Chu Kwa, Storm, and Spark. Moreover, given some reasons for apache spark technology when should use it or not. furthermore, they performed data processing with T witter data by Apache Spark and T witter stream API. Authors Analyzed various datasets such as stock exchange Data, Crime rates of India, Population of India, and Healthcare data using Apache Pig. Also elaborated various tools and techniques used to analyses the massive volume of data i.e. big data in the Hadoop distributed file system of the cluster of commodity hardware. T he authors also describe various image processing techniques. T he authors have developed a conceptual architecture on the Apache Spark platform to overcome the problems that get when processing big data in the healthcare system. In this paper, Big data analytics and Apache Spark is explained in various aspects. Authors focused on characteristics (7V’s) of big data, tools and application areas for big data analytics, as well as Apache Spark Ecosystem including components, libraries and cluster managers that is deployment modes of Apache spark. Ultimately, they also present a comparative study of Python and Scala programming languages with various parameters in the aspect of Apache Spark. III. 
RESEARCH GAP

After reviewing these research papers, it is evident that vast amounts of big data can be processed using the Python and Scala programming languages over Apache Spark. The present comparison of the two languages for fast data processing in Apache Spark therefore aims to identify which language is best suited to Apache Spark and can give better results in big data analytics.

IV. COMPARATIVE STUDY OF PYTHON AND SCALA IN SPARK

Python is a high-level, interpreted programming language that supports object-oriented and functional styles. PySpark is the Python API for Apache Spark; it lets Python programs work with RDDs (resilient distributed datasets). Python is a powerful and widely preferred language because of its rich set of libraries.

Scala is an object-oriented and functional programming language that runs on the JVM (Java Virtual Machine) and helps developers to be more inventive. Its static typing helps programmers write valid code with fewer errors, and it is well suited to developing big data applications.

Table 2. Comparison between Python and Scala

| Sr. No | Parameters | Python | Scala |
|---|---|---|---|
| 01 | Performance | Slower | Faster |
| 02 | Learning Curve | Easy | Tough |
| 03 | Machine Learning Libraries | Rich library | Less library |
| 04 | Platform | Interpreter | Compiler |
| 05 | Visualization Libraries | Rich library | Less library |
| 06 | Type Safety | Dynamically typed language | Statically typed language |
| 07 | Testing | Very complex | Less complex |
| 08 | Simplicity | Easy to learn because writing code is simple | May be more difficult to learn than Python |
| 09 | Ease of use | Less verbose | More verbose |
| 10 | Concurrency | No support | Highly supported |
| 11 | IDE | PyCharm, Jupyter | Eclipse, IntelliJ |
| 12 | Spark Shell | >Pyspark | >Scala |
| 13 | Support | Much larger community support | Less community support |

Performance is a very important factor. When Python and Scala are used with Spark, Scala can be up to 10 times faster than Python. Python is a dynamically typed language, which reduces its speed. Scala is statically typed and runs on the Java Virtual Machine at runtime, which contributes to its speed. In general, a compiled language is faster than an interpreted one.

Scala has a steeper learning curve than Python, whose syntax is simple. Scala's syntax can be cryptic, and many operations are often combined into a single statement.

Python offers a much richer set of libraries than Scala, and Scala's libraries are less well suited to the needs of data scientists, so Python is the preferable language in this respect.

In Python, testing and its methodologies are very complex because the language is dynamically typed. Testing in Scala is less complex.

Python is less verbose because it is dynamically typed. Scala is statically typed and can identify errors at compile time, so it is a better alternative for large-scale projects.

Scala supports multithreading, so it can handle parallelism and concurrency, whereas Python offers no comparable multithreading support.

The Python community keeps organizing conferences and meetups and works on code to develop the language. Python has much larger community support than Scala.

V. DISCUSSION

This discussion concerns the programming languages that are appropriate for the big data field. Many programming languages are used to solve big data problems, and for big data professionals choosing a language is one of the most important decisions.
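The type-safety and testing rows of Table 2 can be illustrated with a minimal sketch in plain Python. It is not taken from the paper: the function `total_size` and the sample records are hypothetical names chosen for illustration. It shows that a type mismatch in Python surfaces only when the offending line executes, which is the behaviour that a statically typed language such as Scala would instead reject at compile time.

```python
from typing import Dict, List


def total_size(records: List[Dict[str, int]]) -> int:
    """Sum the "size" field of every record (hypothetical helper)."""
    return sum(record["size"] for record in records)


# Hypothetical sample data: the second list holds a string where an int is expected.
clean_records = [{"size": 10}, {"size": 32}]
dirty_records = [{"size": 10}, {"size": "32"}]

print(total_size(clean_records))  # 42

try:
    total_size(dirty_records)
except TypeError as error:
    # The interpreter ignores the type hints, so the mismatch is reported
    # only when the addition of an int and a str is actually attempted.
    print("runtime failure:", error)
```

The type hints are not enforced by the interpreter; an optional static checker could flag the second call before execution, which partly narrows the gap the table describes. This is one reason the testing effort for dynamically typed code tends to be higher.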
The chosen programming language must be suitable for analyzing and manipulating big data so that the desired output can be achieved. Omar, H. K., & Jumaa, A. K. (2019) [1] found that the Scala programming language performs better than Java in Spark MLlib. The authors have presented a comparative study of the Python and Scala programming languages across several parameters for Apache Spark. Scala is faster but somewhat difficult to learn, whereas Python is slower but very simple to use. Scala does not provide as many big data analytics tools and libraries as Python for machine learning and natural language processing, and there are no good visualization tools for Scala. Python support for Spark Streaming is not as advanced and mature as Scala's; consequently, Scala is the best option for Spark Streaming functionality. The Apache Spark framework is written in Scala, so with Scala big data developers can easily dig into the source code. Scala is more engineering-oriented and Python is more analytics-oriented; both languages are excellent for developing big data applications. To exploit the full potential of Spark, Scala will be more helpful.

After exploring these aspects, the authors concluded that in big data analytics both the Python and Scala programming languages are apt for Apache Spark technology. However, the choice of language for programming in Apache Spark depends on the features that suit the needs of the project and can effectively solve the problem, as each language has its own benefits and drawbacks. If the programmer works on smaller projects and has less experience, then Python is a good choice. If the programmer has large-scale projects that need many tools, techniques, and multiprocessing, then the Scala programming language is the best alternative. The main objective of this paper is to make it easier for programmers to select a programming language for Apache Spark according to their project and to achieve its desired objectives.

VI. CONCLUSION

This paper has explained big data analytics and Apache Spark from various aspects. The authors focused on the characteristics (7V's) of big data, the tools and application areas for big data analytics, and the Apache Spark ecosystem, including its components, libraries, and cluster managers (that is, the deployment modes of Apache Spark). Finally, they presented a comparative study of the Python and Scala programming languages across various parameters in the context of Apache Spark, including a table comparing the two languages. This comparative study concludes that both Python and Scala are suitable for Apache Spark technology. However, the choice of language depends on the features that best suit the needs of the project, as each language has its own advantages and disadvantages. The main purpose of this paper is to make it easier for programmers to select a programming language for Apache Spark based on their project.

REFERENCES

[1] Omar, H. K., & Jumaa, A. K. (2019). Big Data Analysis Using Apache Spark MLlib and Hadoop HDFS with Scala and Java. Kurdistan Journal of Applied Research, 4(1), 7–14. https://doi.org/10.24017/science.2019.1.2
[2] Van-Dai Ta, Chuan-Ming Liu, & Goodwill Wandile Nkabinde (2016). Big Data Stream Computing in Healthcare Real-Time Analytics. IEEE, pp. 37-42. DOI: 10.1109/ICCCBDA.2016.7529531
[3] Keerti, Singh, K., & Dhawan, S. (2016). Future of Big Data Application & Apache Spark vs. Map Reduce. 1(6), 148–151.
[4] Ajaysinh, R. P., & Somani, H. (2016). A Survey on Machine learning assisted Big Data Analysis for Health Care Domain. 4(4), 550–554.
[5] Salwan, P., & Maan, V. K. (2020). Integrating E-Governance with Big Data Analytics using Apache Spark. International Journal of Recent Technology and Engineering, 8(6), 1609–1615. https://doi.org/10.35940/ijrte.f7820.038620
[6] Bhattacharya, A., & Bhatnagar, S. (2016). Big Data and Apache Spark: A Review. International Journal of Engineering Research & Science (IJOER) ISSN, 2(5), 206–210. https://ijoer.com/Paper-May2016/IJOER-MAR-2016-9.pdf
[7] Salloum, S., Dautov, R., Chen, X., Peng, P. X., & Huang, J. Z. (2016). Big data analytics on Apache Spark. International Journal of Data Science and Analytics, 1(3–4), 145–164. https://doi.org/10.1007/s41060-016-0027-9
[8] Hongyong Yu, & Deshuai Wang (2012). Research and Implementation of Massive Health Care Data Management and Analysis Based on Hadoop. IEEE, pp. 514-517. DOI: 10.1109/ICCIS.2012.225
[9] Zaharia, M., Xin, R. S., Wendell, P., Das, T., Armbrust, M., Dave, A., Meng, X., Rosen, J., Venkataraman, S., Franklin, M. J., Ghodsi, A., Gonzalez, J., Shenker, S., & Stoica, I. (2016). Apache Spark: A unified engine for big data processing. Communications of the ACM, 59(11), 56–65. https://doi.org/10.1145/2934664
[10] Shaikh, E., Mohiuddin, I., Alufaisan, Y., & Nahvi, I. (2019). Apache Spark: A Big Data Processing Engine. 2019 2nd IEEE Middle East and North Africa Communications Conference, MENACOMM 2019. https://doi.org/10.1109/MENACOMM46666.2019.8988541
[11] M. U. Bokhari, M. Zeyauddin, & M. A. Siddiqui (2016). An effective model for big data analytics. 3rd International Conference on Computing for Sustainable Global Development, pp. 3980-3982.
[12] Pol, U. R. (2016). Big Data Analysis: Comparison of Hadoop MapReduce and Apache Spark. International Journal of Engineering Science and Computing, 6(6), 6389–6391. https://doi.org/10.4010/2016.1535
[13] Kumar, S., & Singh, M. (2018). Big data analytics for the healthcare industry: impact, applications, and tools. Big Data Mining and Analytics, 2(1), 48–57. https://doi.org/10.26599/bdma.2018.9020031
[14] Aziz, K., Zaidouni, D., & Bellafkih, M. (2018). Real-time data analysis using Spark and Hadoop. 2018 4th International Conference on Optimization and Applications (ICOA). DOI: 10.1109/icoa.2018.8370593
[15] Amol Bansod. (2015). Efficient Big Data Analysis with Apache Spark in HDFS. International Journal of Engineering and Advanced Technology, 6, 2249–8958.
[16] Shoro, A. G., & Soomro, T. R. (2015). Big data analysis: Apache Spark perspective. Global Journal of Computer Science and Technology.
[17] Gupta, Y. K. & Sharma, S. (2019). Impact of Big Data to Analyze Stock Exchange Data Using Apache PIG. International Journal of Innovative Technology and Exploring Engineering. ISSN: 2278-3075, 8(7), pp. 1428-1433.
[18] Gupta, Y. K. & Sharma, S. (2019). Empirical Aspect to Analyze Stock Exchange Banking Data Using Apache PIG in HDFS Environment. Proceedings of the Third International Conference on Intelligent Computing and Control Systems (ICICCS 2019).
[19] Gupta, Y. K. & Gunjan B. (2019). Analysis of Crime Rates of Different States in India Using Apache Pig in HDFS Environment. Recent Patents on Engineering. Print ISSN: 1872-2121, Online ISSN: 2212-4047, 13:1. https://doi.org/10.2174/1872212113666190227162314. http://www.eurekaselect.com/node/170260/article
[20] Gupta, Y. K.* & Choudhary, S. (2020). A Study of Big Data Analytics with Two Fatal Diseases Using Apache Spark Framework. International Journal of Advanced Science and Technology (IJAST), Vol. 29, No. 5, pp. 2840-2851.
[21] Gupta, Y. K.*, Kamboj, S. & Kumar, A. (2020). Proportional Exploration of Stock Exchange Data Corresponding to Various Sectors Using Apache Pig. International Journal of Advanced Science and Technology (IJAST), Vol. 29, No. 5, pp. 2858-2867.
[22] Gupta, Y. K.* & Mittal, T. (2020). Empirical Aspects to Analyze Population of India using Apache Pig in Evolutionary of Big Data Environment. International Journal of Scientific & Technology Research (IJSTR). ISSN 2277-8616, 9(1), pp. 238-242.
[23] Gupta, Y. K. & Jha, C. K. (2016). A Review on the Study of Big Data with Comparison of Various Storage and Computing Tools and their Relative Capabilities. International Journal of Invocation in engineering & technology (IJIET). ISSN: 2319-1058, 7(1), pp. 470-477.
[24] Dhoka, Shrutika; Kudale, R. A. (2016). Use of Big Data in Healthcare with Spark. Proceedings - International Symposium on Parallel Architectures, Algorithms and Programming, PAAP, 2016 Janua(11), 172–176. https://doi.org/10.1109/PAAP.2015.4