0 - Textbook - Managerial Economics: A Problem Solving Approach

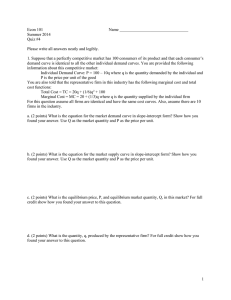
