ARIMA Model

November 24, 2020

[2]: import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

%matplotlib inline

import statsmodels.api as sm

from statsmodels.graphics.tsaplots import plot_acf,plot_pacf

from statsmodels.tsa.arima_model import ARIMA  # deprecated; removed in statsmodels 0.13, where statsmodels.tsa.arima.model.ARIMA replaces it

from statsmodels.tsa.stattools import adfuller

from pandas.tseries.offsets import DateOffset

[3]: df=pd.read_csv(r'C:\Users\Asus\Desktop\OPM_Assignment Data.csv')

df = df.rename(columns={ "Date": "date", ' Sales ': "sales"})

[4]: df['sales'] = df['sales'].astype(int)

df['date']=pd.to_datetime(df['date'])

[5]: df.set_index('date',inplace=True)

[6]: df.plot()


Testing For Stationarity

[7]: test_result = adfuller(df['sales'])

[8]: # H0: the series has a unit root (it is non-stationary)
# H1: the series is stationary
def adfuller_test(sales):
    result = adfuller(sales)
    labels = ['ADF Test Statistic', 'p-value', '#Lags Used', 'Number of Observations Used']
    for value, label in zip(result, labels):
        print(label + ' : ' + str(value))
    if result[1] <= 0.05:
        print("strong evidence against the null hypothesis(Ho), reject the null hypothesis. Data has no unit root and is stationary")
    else:
        print("weak evidence against null hypothesis, time series has a unit root, indicating it is non-stationary ")

[9]: adfuller_test(df['sales'])

ADF Test Statistic : -3.5804829030383365
p-value : 0.0061453039375673
#Lags Used : 5
Number of Observations Used : 27
strong evidence against the null hypothesis(Ho), reject the null hypothesis. Data has no unit root and is stationary

Identification of an AR model is often best done with the PACF. For an AR model, the theoretical PACF “shuts off” past the order of the model: in theory, the partial autocorrelations are equal to 0 beyond that point. Put another way, the number of non-zero partial autocorrelations gives the order of the AR model, where by the “order of the model” we mean the largest lag of x that is used as a predictor.

Identification of an MA model is often best done with the ACF. For an MA model, the theoretical PACF does not shut off but instead tapers toward 0 in some manner. A clearer pattern for an MA model is in the ACF: the ACF has non-zero autocorrelations only at the lags involved in the model.

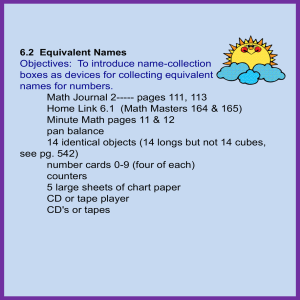

ARIMA(p, d, q): p = number of AR lags, d = order of differencing, q = number of MA lags
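For reference, a standard textbook form of the ARIMA(p, d, q) model in lag-operator notation, where L y_t = y_{t-1} and ε_t is white noise. statsmodels parameterises the constant slightly differently (as a mean of the differenced series), so read c loosely:

```latex
\left(1 - \sum_{i=1}^{p} \phi_i L^i\right) (1 - L)^d \, y_t
  = c + \left(1 + \sum_{j=1}^{q} \theta_j L^j\right) \varepsilon_t
```

For the ARIMA(1, 1, 0) fitted below this reduces to Δy_t = c + φ_1 Δy_{t-1} + ε_t, with Δy_t = y_t − y_{t−1}.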

[10]: fig = plt.figure(figsize=(12,8))
ax1 = fig.add_subplot(211)
fig = sm.graphics.tsa.plot_acf(df['sales'], ax=ax1)  #.iloc[13:],lags=40
ax2 = fig.add_subplot(212)
fig = sm.graphics.tsa.plot_pacf(df['sales'], ax=ax2)  #.iloc[13:],lags=40


[11]: # For non-seasonal data
# p=1, d=1, q=0 or 1

ARIMA Model

[12]: model=ARIMA(df['sales'],order=(1,1,0))

model_fit=model.fit()

C:\Users\Asus\anaconda3\lib\site-packages\statsmodels\tsa\base\tsa_model.py:159: ValueWarning: No frequency information was provided, so inferred frequency MS will be used.
  warnings.warn('No frequency information was'

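The ValueWarning appears because the DatetimeIndex carries no explicit frequency. A minimal sketch, assuming the sales really are month-start observations (the MS that statsmodels inferred), is to attach the frequency with pandas' `asfreq` before fitting. Note that `asfreq` inserts NaN rows for any missing month rather than filling them. The three rows below are taken from this notebook's data.

```python
import pandas as pd

# Three rows from this notebook's data, indexed like df (no freq set)
df = pd.DataFrame(
    {"sales": [3240325, 2741349, 2987427]},
    index=pd.to_datetime(["2011-01-01", "2011-02-01", "2011-03-01"]),
)
print(df.index.freq)      # None: statsmodels has to infer it and warns

df = df.asfreq("MS")      # declare month-start frequency explicitly
print(df.index.freqstr)   # MS
```

In the notebook itself, this would be `df = df.asfreq('MS')` right after `df.set_index('date', inplace=True)`.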

[13]: model_fit.summary()

[13]: <class 'statsmodels.iolib.summary.Summary'>
"""
                             ARIMA Model Results
==============================================================================
Dep. Variable:                D.sales   No. Observations:                   32
Model:                 ARIMA(1, 1, 0)   Log Likelihood                -453.176
Method:                       css-mle   S.D. of innovations         342079.278
Date:                Tue, 24 Nov 2020   AIC                            912.352
Time:                        22:38:31   BIC                            916.749
Sample:                    02-01-2011   HQIC                           913.810
                         - 09-01-2013
=================================================================================
                    coef    std err          z      P>|z|      [0.025      0.975]
---------------------------------------------------------------------------------
const          2.544e+04   5.91e+04      0.430      0.667   -9.04e+04    1.41e+05
ar.L1.D.sales    -0.0289      0.187     -0.154      0.878      -0.396       0.339
                                    Roots
=============================================================================
                  Real          Imaginary           Modulus         Frequency
-----------------------------------------------------------------------------
AR.1          -34.6596           +0.0000j           34.6596            0.5000
-----------------------------------------------------------------------------
"""


[14]: df['forecast']=model_fit.predict(start=24,end=33,dynamic=True)

df[['sales','forecast']].plot(figsize=(12,8))


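ARIMA yields unsatisfactory results: neither the constant (p = 0.667) nor the AR(1) coefficient (p = 0.878) is significant, and a non-seasonal model has no term for a 12-month pattern. So we try SARIMA.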
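"Unsatisfactory" is judged from the plot here. A sketch for putting a number on it, not part of the original notebook, is to compute the mean absolute percentage error (MAPE) and root mean squared error (RMSE) over the months where both actual sales and a forecast exist. The helper names `mape` and `rmse` are our own.

```python
import numpy as np
import pandas as pd

def _aligned(actual, forecast):
    """Keep only dates where both actual and forecast are present (as floats)."""
    both = pd.concat([actual, forecast], axis=1, keys=["actual", "forecast"]).astype(float)
    return both.dropna()

def mape(actual, forecast):
    """Mean absolute percentage error, in percent."""
    both = _aligned(actual, forecast)
    return float(100 * ((both["actual"] - both["forecast"]).abs() / both["actual"].abs()).mean())

def rmse(actual, forecast):
    """Root mean squared error, in the units of the series."""
    both = _aligned(actual, forecast)
    return float(np.sqrt(((both["actual"] - both["forecast"]) ** 2).mean()))

# Toy check: the third month has no forecast and is ignored
idx = pd.date_range("2013-01-01", periods=3, freq="MS")
actual = pd.Series([100, 200, 400], index=idx)
forecast = pd.Series([110, 180, np.nan], index=idx)
print(mape(actual, forecast), rmse(actual, forecast))
```

On the notebook's data, `mape(df['sales'], df['forecast'])` evaluated after the ARIMA cell and again after the SARIMA cell would give a like-for-like comparison over the same months.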

SARIMA Model

[15]: model = sm.tsa.statespace.SARIMAX(df['sales'], order=(1, 1, 1), seasonal_order=(1, 1, 1, 12))
results = model.fit()

C:\Users\Asus\anaconda3\lib\site-packages\statsmodels\tsa\base\tsa_model.py:159: ValueWarning: No frequency information was provided, so inferred frequency MS will be used.
  warnings.warn('No frequency information was'


C:\Users\Asus\anaconda3\lib\site-packages\statsmodels\tsa\statespace\sarimax.py:866: UserWarning: Too few observations to estimate starting parameters for seasonal ARMA. All parameters except for variances will be set to zeros.
  warn('Too few observations to estimate starting parameters%s.'
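`seasonal_order=(1, 1, 1, 12)` asks for one seasonal difference at lag 12 on top of the ordinary difference from `order=(1, 1, 1)`. A small pandas sketch on a hypothetical 33-month series (the same length as the data here, built as a linear trend plus an exact 12-month cycle) shows what the two differences do and how few points remain, which is consistent with the "Too few observations" warning above.

```python
import numpy as np
import pandas as pd

# Hypothetical series: trend + exact 12-month cycle, 33 months like the sales data
idx = pd.date_range("2011-01-01", periods=33, freq="MS")
t = np.arange(33)
y = pd.Series(100 + 2.0 * t + 10 * np.sin(2 * np.pi * t / 12), index=idx)

seasonal_diff = y.diff(12).dropna()      # D = 1, s = 12: y_t - y_{t-12}
both_diffs = seasonal_diff.diff().dropna()  # then d = 1

print(len(y), len(seasonal_diff), len(both_diffs))  # observations left after each step
print(seasonal_diff.round(6).unique())  # cycle removed; only the trend step 2 * 12 remains
print(both_diffs.round(6).unique())     # ordinary difference removes that constant
```

Only 20 values remain to estimate a seasonal AR and MA term at lag 12, so the seasonal parameters rest on very little data.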

[35]: df['forecast']=results.predict(start=24,end=33,dynamic=True)

df[['sales','forecast']].plot(figsize=(12,8))


[17]: future_dates = [df.index[-1] + DateOffset(months=x) for x in range(0, 24)]

[18]: future_dates_df = pd.DataFrame(index=future_dates[1:], columns=df.columns)

[19]: future_df = pd.concat([df, future_dates_df])
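The list comprehension above produces 24 month-start dates beginning at the last observed month, and `[1:]` drops that first one. A hedged alternative, assuming the index is month-start (as the inferred MS frequency indicates), builds the same 23 future dates directly with `pd.date_range`. The check uses 2013-09-01, the last observed month in the table below.

```python
import pandas as pd
from pandas.tseries.offsets import DateOffset

last = pd.Timestamp("2013-09-01")  # last observed month in this notebook's data

# Approach used in the notebook
offsets_way = [last + DateOffset(months=x) for x in range(0, 24)][1:]

# Equivalent: start one month later, 23 month-start dates
range_way = pd.date_range(start=last + DateOffset(months=1), periods=23, freq="MS")

print(list(range_way) == offsets_way)
print(range_way[0].date(), range_way[-1].date())
```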

[30]: future_df['forecast'] = results.predict(start = 24, end = 38, dynamic= True)

future_df[['sales', 'forecast']].plot(figsize=(12, 8))

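`start=24` and `end=38` are integer positions in the index, not dates. This pandas sketch, assuming 33 monthly observations from 2011-01-01 plus the 23 future months built above, shows which months those positions refer to. Position 24 is 2013-01-01 (first forecast), 32 is 2013-09-01 (last observed month), and 38 is 2014-03-01, which is why `future_df[:-17]` (the first 39 rows of 56) ends at the last forecast month. statsmodels' `predict` should also accept date labels for `start` and `end` when the model has a date index, which may be easier to read.

```python
import pandas as pd

# Assumed from the table: 33 monthly observations, then 23 future months
observed = pd.date_range("2011-01-01", periods=33, freq="MS")
future = pd.date_range("2013-10-01", periods=23, freq="MS")
full_index = observed.append(future)

for pos in (24, 32, 38):
    print(pos, full_index[pos].date())
```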


[31]: future_df[:-17]

[31]:
              sales      forecast
2011-01-01  3240325           NaN
2011-02-01  2741349           NaN
2011-03-01  2987427           NaN
2011-04-01  3456892           NaN
2011-05-01  3740738           NaN
2011-06-01  3979178           NaN
2011-07-01  4160454           NaN
2011-08-01  4162013           NaN
2011-09-01  3809132           NaN
2011-10-01  3794419           NaN
2011-11-01  3719219           NaN
2011-12-01  3812981           NaN
2012-01-01  3480451           NaN
2012-02-01  3183133           NaN
2012-03-01  3764529           NaN
2012-04-01  3500189           NaN
2012-05-01  3389811           NaN
2012-06-01  4348789           NaN
2012-07-01  4442455           NaN
2012-08-01  4593383           NaN
2012-09-01  4029783           NaN
2012-10-01  4211211           NaN
2012-11-01  3854682           NaN
2012-12-01  3554831           NaN
2013-01-01  3488309  3.703144e+06
2013-02-01  3270444  3.455306e+06
2013-03-01  3709943  3.884572e+06
2013-04-01  3655530  3.753771e+06
2013-05-01  4097990  3.708422e+06
2013-06-01  4472583  4.393622e+06
2013-07-01  4531711  4.477484e+06
2013-08-01  4504466  4.581709e+06
2013-09-01  4054338  4.157130e+06
2013-10-01      NaN  4.282700e+06
2013-11-01      NaN  4.036895e+06
2013-12-01      NaN  3.864442e+06
2014-01-01      NaN  4.218167e+06
2014-02-01      NaN  3.985965e+06
2014-03-01      NaN  4.339405e+06

[32]: df.to_excel("Sarima_output.xlsx")

[33]: future_df[:-17].to_excel("future_forecast.xlsx")
