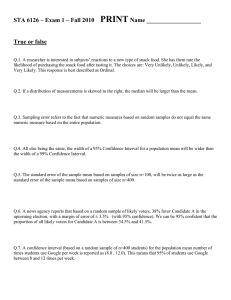

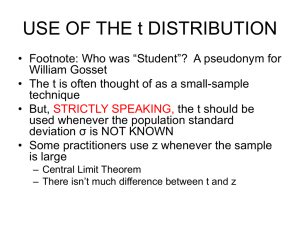

Introductory Biostatistics Equation Sheet

Sample Mean

$$\bar{x} = \frac{\sum x}{n}$$

Population Mean

$$\mu = \frac{\sum x}{N}$$

Range

$$\text{Range} = x_L - x_S$$

where $x_L$ and $x_S$ are the largest and smallest scores in the data set, respectively.

Variance Parameter

$$\sigma^2 = \frac{\sum (x - \mu)^2}{N}$$

Variance Statistic

$$s^2 = \frac{\sum (x - \bar{x})^2}{n - 1}$$

Computational Form

$$s^2 = \frac{\sum x^2 - \frac{(\sum x)^2}{n}}{n - 1}$$

Standard Deviation

$$s = \sqrt{\frac{\sum (x - \bar{x})^2}{n - 1}} \qquad s = \sqrt{\frac{\sum x^2 - \frac{(\sum x)^2}{n}}{n - 1}}$$

Convert x values to Z scores

$$Z = \frac{x - \mu}{\sigma}$$

Standard Error for sample mean

$$\sigma_{\bar{x}} = \frac{\sigma}{\sqrt{n}}$$

where $\sigma$ is the population standard deviation and $n$ is the sample size.

Sampling Distribution Z Score

$$Z = \frac{\bar{x} - \mu}{\sigma / \sqrt{n}}$$

where $\bar{x}$ is the sample mean, $\mu$ is the population mean, $\sigma$ is the population standard deviation, and $n$ is the sample size.

Binomial Probability

$$P(y \text{ successes}) = \frac{n!}{y!\,(n - y)!}\, \pi^{y} (1 - \pi)^{n - y}$$

where $n$ is the number of times the process is replicated, $\pi$ is the probability of success, $y$ is the number of successes of interest, and "!" denotes factorial.

Normal Curve Approximation for Binomial Distribution

$$Z = \frac{\hat{p} - \pi}{\sqrt{\frac{\pi (1 - \pi)}{n}}}$$

where $\hat{p}$ is the sample proportion of successes, $\pi$ is the population proportion, and $n$ is the sample size.

One Sample Z test

$$Z = \frac{\bar{x} - \mu_0}{\sigma / \sqrt{n}}$$

where $\bar{x}$ is the sample mean, $\mu_0$ is the hypothesized mean of the population, $\sigma$ is the population standard deviation, and $n$ is the sample size.

One Sample t test

$$t = \frac{\bar{x} - \mu_0}{s / \sqrt{n}} \qquad df = n - 1$$

where $\bar{x}$ is the sample mean, $\mu_0$ is the hypothesized mean of the population, $s$ is the sample standard deviation, and $n$ is the sample size.

One Sample Z test for Proportions (Approximate Test)

$$Z = \frac{\hat{p} - \pi_0}{\sqrt{\frac{\pi_0 (1 - \pi_0)}{n}}}$$

where $\hat{p}$ is the sample proportion of successes, $\pi_0$ is the hypothesized population proportion, and $n$ is the sample size.

Two-sided Confidence Interval for a mean when sigma is known

$$\bar{x} \pm Z \frac{\sigma}{\sqrt{n}}$$

where $\bar{x}$ is the sample mean, $Z$ is the appropriate Z value for the specified interval, $\sigma$ is the population standard deviation, and $n$ is the sample size.

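As a quick check on the formulas above, the following minimal Python sketch computes the sample variance in both its definitional and computational forms and builds a two-sided interval for the mean when sigma is known. The data, the value sigma = 2.0, and the function names are made up for illustration; Z = 1.96 corresponds to a 95% interval.

```python
import math


def sample_variance(xs):
    """Definitional form: sum of squared deviations divided by n - 1."""
    n = len(xs)
    mean = sum(xs) / n
    return sum((x - mean) ** 2 for x in xs) / (n - 1)


def sample_variance_computational(xs):
    """Computational form: (sum of x^2 - (sum of x)^2 / n) divided by n - 1."""
    n = len(xs)
    return (sum(x * x for x in xs) - sum(xs) ** 2 / n) / (n - 1)


def z_interval(xs, sigma, z=1.96):
    """Two-sided interval for the mean when sigma is known."""
    n = len(xs)
    mean = sum(xs) / n
    half_width = z * sigma / math.sqrt(n)
    return mean - half_width, mean + half_width


scores = [4, 8, 6, 5, 7]  # made-up data for illustration
s2 = sample_variance(scores)
s2_alt = sample_variance_computational(scores)
low, high = z_interval(scores, sigma=2.0)  # sigma = 2.0 is an assumed value
print(s2, s2_alt, low, high)
```

Both variance functions should agree up to floating-point rounding, which is a convenient way to catch arithmetic slips when working the computational form by hand.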
Two-sided Confidence Interval for a mean when sigma is not known

$$\bar{x} \pm t \frac{s}{\sqrt{n}}$$

where $\bar{x}$ is the sample mean, $t$ is the appropriate value from the t table with $n - 1$ degrees of freedom, $s$ is the sample standard deviation, and $n$ is the sample size.

Two-sided Confidence Interval for a proportion (Approximate Method)

$$\hat{p} \pm Z \sqrt{\frac{\hat{p}\,\hat{q}}{n}}$$

where $\hat{p}$ is the proportion of successes in the sample, $\hat{q}$ is the proportion of failures in the sample, $n$ is the sample size, and $Z$ is the appropriate Z value for the specified interval.

Paired t-Test

$$t = \frac{\bar{d} - \mu_{d0}}{s_d / \sqrt{n}} \qquad df = n - 1$$

where $\bar{d}$ is the sample mean of the difference scores, $\mu_{d0}$ is the hypothesized value of $\mu_d$ (usually but not always zero), $s_d$ is the sample standard deviation of the difference scores, and $n$ is the number of paired observations (or difference scores).

Confidence Interval for Paired Mean Difference

$$\bar{d} \pm t \frac{s_d}{\sqrt{n}}$$

where $\bar{d}$ is the sample mean of the difference scores, $s_d$ is the sample standard deviation of the difference scores, and $t$ is the appropriate value from the t table with $n - 1$ degrees of freedom.

McNemar's Test (Approximate Method)

$$Z = \frac{\hat{p} - 0.5}{0.5 / \sqrt{n}}$$

where $\hat{p}$ is the proportion of pairs in which the designated treatment has the advantage and $n$ is the number of pairs utilized in the analysis.

Independent Samples t-Test

$$t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{s_p^2 \left( \frac{1}{n_1} + \frac{1}{n_2} \right)}} \qquad df = n_1 + n_2 - 2$$

where $\bar{x}_1$ and $\bar{x}_2$ represent the means of samples one and two, $n_1$ and $n_2$ represent the number of observations in each of the two samples, and $s_p^2$ is an estimate of the population variance based on an averaging or pooling of the information in the two samples.

Calculation of $s_p^2$

$$s_p^2 = \frac{(n_1 - 1) s_1^2 + (n_2 - 1) s_2^2}{n_1 + n_2 - 2}$$

where $s_1^2$ and $s_2^2$ represent the variances of the first and second samples, respectively, and $n_1$ and $n_2$ represent the number of observations in each of the two samples.

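The pooled variance and the independent samples t statistic can be sketched in a few lines of Python. This is an illustrative sketch only: the two samples and the function names are made up, and `statistics.variance` from the standard library is used because it computes the sample variance with an n − 1 denominator.

```python
import math
from statistics import mean, variance


def pooled_variance(a, b):
    """Pooled variance: each sample variance weighted by its n - 1."""
    n1, n2 = len(a), len(b)
    return ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)


def independent_t(a, b):
    """Return the t statistic and its degrees of freedom (n1 + n2 - 2)."""
    n1, n2 = len(a), len(b)
    sp2 = pooled_variance(a, b)
    t = (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


group_1 = [5, 7, 9]      # made-up data for illustration
group_2 = [2, 4, 6, 8]   # made-up data for illustration
sp2 = pooled_variance(group_1, group_2)
t_stat, df = independent_t(group_1, group_2)
print(sp2, t_stat, df)
```

The resulting t statistic would then be compared with the critical value from the t table at the returned degrees of freedom.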
Alternate Calculation of $s_p^2$

$$s_p^2 = \frac{\left( \sum x_1^2 - \frac{(\sum x_1)^2}{n_1} \right) + \left( \sum x_2^2 - \frac{(\sum x_2)^2}{n_2} \right)}{n_1 + n_2 - 2}$$

The Confidence Interval for the difference between means

$$(\bar{x}_1 - \bar{x}_2) \pm t \sqrt{s_p^2 \left( \frac{1}{n_1} + \frac{1}{n_2} \right)}$$

where $t$ is the appropriate t value with $n_1 + n_2 - 2$ degrees of freedom, $\bar{x}_1$ and $\bar{x}_2$ represent the means of samples one and two, $n_1$ and $n_2$ represent the number of observations in each of the two samples, and $s_p^2$ is an estimate of the population variance based on an averaging or pooling of the information in the two samples.

Independent Samples Z Test for Proportions (Approximate Test)

$$Z = \frac{\hat{p}_1 - \hat{p}_2}{\sqrt{\hat{p} (1 - \hat{p}) \left( \frac{1}{n_1} + \frac{1}{n_2} \right)}}$$

where $\hat{p}_1$ and $\hat{p}_2$ represent the proportion of successes in the first and second samples, respectively, $\hat{p}$ represents the overall proportion of successes, and $n_1$ and $n_2$ represent the two sample sizes.

Confidence Interval for the difference between proportions (Approximate Method)

$$(\hat{p}_1 - \hat{p}_2) \pm Z \sqrt{\frac{\hat{p}_1 \hat{q}_1}{n_1} + \frac{\hat{p}_2 \hat{q}_2}{n_2}}$$

where $\hat{p}_1$ and $\hat{p}_2$ are the proportions of successes in the two samples, $\hat{q}_1$ and $\hat{q}_2$ are the proportions of failures in the two samples (i.e., $\hat{q}_1 = 1 - \hat{p}_1$, $\hat{q}_2 = 1 - \hat{p}_2$), $n_1$ and $n_2$ are the respective sample sizes, and $Z$ is the appropriate Z value for the specified interval.

Chi Square Test

$$\chi^2 = \sum \frac{(O - E)^2}{E} \qquad df = (j - 1)(k - 1)$$

where $O$ and $E$ are the observed and expected frequencies, respectively, and $j$ and $k$ are the numbers of rows and columns in the table.

Expected Frequency

$$E = \frac{(N_R)(N_C)}{N}$$

where $N_R$ is the row total for the cell whose expected frequency is being calculated and $N_C$ is the column total for the same cell.

Chi Square Goodness of Fit Test

$$\chi^2 = \sum \frac{(O - E)^2}{E} \qquad df = k - 1$$

where $O$ and $E$ are the observed and expected frequencies, respectively, and $k$ is the number of categories.

Expected Frequency

$$E = N \pi_{k0}$$

where $N$ is the total number in the sample and $\pi_{k0}$ is the hypothesized proportion for each category.

Analysis of Variance (ANOVA) F statistic

$$F = \frac{MSB}{MSW}$$

Mean Square Within (MSW) or Mean Square Error (MSE)

$$MSW = \frac{SSW}{N - k}$$

where $SSW$ is the sum of squares within groups, $N$ is the total number of observations, and $k$ is the number of groups.
The quantity $N - k$ is termed the denominator degrees of freedom.

Sums of Squares Within (SSW) or Sums of Squares Error (SSE)

$$SSW = SS_1 + SS_2 + \cdots + SS_k$$

where $k$ is the number of groups. The sum of squares for a given group can be calculated by

$$SS_k = \sum (x - \bar{x}_k)^2$$

or equivalently

$$SS_k = \sum x^2 - \frac{(\sum x)^2}{n_k}$$

where the sums run over the observations in group $k$ and $n_k$ is the number of observations in that group.

Mean Square Between (MSB)

$$MSB = \frac{SSB}{k - 1}$$

where $SSB$ is the sum of squares between and $k$ is the number of groups. The quantity $k - 1$ is termed the numerator degrees of freedom.

The Sum of Squares Between (SSB)

$$SSB = \sum n_k (\bar{x}_k - \bar{x})^2$$

or equivalently (shown here for three groups)

$$SSB = \frac{\left( \sum_{i=1}^{n_1} x_{i1} \right)^2}{n_1} + \frac{\left( \sum_{i=1}^{n_2} x_{i2} \right)^2}{n_2} + \frac{\left( \sum_{i=1}^{n_3} x_{i3} \right)^2}{n_3} - \frac{(\sum x)^2}{N}$$

If you are only given group means, you can find the overall mean to use in the SSB calculation using the following equation:

$$\bar{x} = \frac{\bar{x}_1 n_1 + \bar{x}_2 n_2 + \cdots + \bar{x}_k n_k}{N}$$

Pearson Correlation Coefficient

The conceptual equation

$$r = \frac{\sum (x - \bar{x})(y - \bar{y})}{\sqrt{\left[ \sum (x - \bar{x})^2 \right] \left[ \sum (y - \bar{y})^2 \right]}}$$

where $r$ is the sample correlation coefficient, $x$ and $y$ are the two variables to be correlated, and $n$ is the number of paired observations.

The computational equation

$$r = \frac{\sum xy - \frac{(\sum x)(\sum y)}{n}}{\sqrt{\left[ \sum x^2 - \frac{(\sum x)^2}{n} \right] \left[ \sum y^2 - \frac{(\sum y)^2}{n} \right]}}$$

Hypothesis Test of $H_0: \rho = 0$

$$t = \frac{r}{\sqrt{\frac{1 - r^2}{n - 2}}}$$

where $r$ is the Pearson correlation coefficient and $n$ is the number of pairs of observations. The degrees of freedom for the test critical value is $n - 2$.

Linear Regression

Simple Regression Prediction Equation

$$\hat{Y} = a + bX$$

where $\hat{Y}$ is the predicted value of $Y$, $a$ is the intercept, $b$ is the slope, and $X$ is the independent variable.

Calculating Residual

$$\text{Residual} = Y - \hat{Y}$$

where $Y$ is the observed value of $Y$ and $\hat{Y}$ is the predicted value of $Y$.

Multiple Regression Prediction Equation

$$\hat{Y} = a + b_1 X_1 + b_2 X_2 + \cdots + b_k X_k$$

where $\hat{Y}$ is the predicted value of $Y$, $a$ is the intercept, $b_k$ is the slope for the kth variable, and $X_k$ is the kth independent variable.
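To see how the ANOVA quantities on this sheet (SSW, SSB, MSW, MSB, and F) fit together, here is a minimal Python sketch of a one-way ANOVA. The three groups and the function name are made up for illustration; the code follows the definitional forms of SSW and SSB rather than the computational shortcuts.

```python
def one_way_anova(groups):
    """Return (SSW, SSB, F) for a list of groups of observations."""
    k = len(groups)                          # number of groups
    big_n = sum(len(g) for g in groups)      # total number of observations
    grand_mean = sum(sum(g) for g in groups) / big_n

    ssw = 0.0
    ssb = 0.0
    for g in groups:
        group_mean = sum(g) / len(g)
        # Within: squared deviations of each score from its group mean
        ssw += sum((x - group_mean) ** 2 for x in g)
        # Between: group size times squared deviation of group mean from overall mean
        ssb += len(g) * (group_mean - grand_mean) ** 2

    msw = ssw / (big_n - k)   # denominator degrees of freedom: N - k
    msb = ssb / (k - 1)       # numerator degrees of freedom: k - 1
    return ssw, ssb, msb / msw


data = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]  # made-up data for illustration
ssw, ssb, f_stat = one_way_anova(data)
print(ssw, ssb, f_stat)
```

The F value would then be compared with the critical value from an F table using k − 1 numerator and N − k denominator degrees of freedom.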