APPLICATIONS OF GAUSSIAN

ELIMINATION METHOD AND

INVOLVEMENT OF C PROGRAMMING

IN IT

Abdullah

SRI VENKATESWARA ARTS COLLEGE

Tirupati

Table of Contents

History

Introduction

Applications of Gaussian Elimination

1.1. Determinants Computing

1.2 Finding the inverse of a matrix

1.2.1 General Structure to find inverse

1.3 Finding Kernel of a Matrix

1.3.1 Computations by Gaussian Elimination

1.4 Rank of matrix

1.4.1 General process

1.4.2 Computed by Gaussian elimination

1.4.3 C Program for finding rank of a ‘Matrix’

1.5 Number of Solutions of the System of Equations

1.5.1 Hierarchy

1.5.2 Procedure to Find Rank Method

1.6 Finding Solutions to the System of Equations

1.6.1 Introduction

1.6.2 General Form

1.6.3 Procedure to Solve

1.6.4 C Algorithm

1.6.5 C Program for Solving System of Equations

History

The method of Gaussian elimination appears - albeit without proof - in the Chinese

mathematical text Chapter Eight: Rectangular Arrays of The Nine Chapters on the Mathematical Art. Its

use is illustrated in eighteen problems, with two to five equations. The first reference to the book by this

title is dated to 179 CE, but parts of it were written as early as approximately 150 BCE. It was commented

on by Liu Hui in the 3rd century.

The method in Europe stems from the notes of Isaac Newton. In 1670, he wrote that all the algebra books

known to him lacked a lesson for solving simultaneous equations, which Newton then supplied.

Cambridge University eventually published the notes as Arithmetica Universalis in 1707, long after

Newton had left academic life. The notes were widely imitated, which made (what is now called) Gaussian

elimination a standard lesson in algebra textbooks by the end of the 18th century. Carl Friedrich Gauss in

1810 devised a notation for symmetric elimination that was adopted in the 19th century by

professional hand computers to solve the normal equations of least-squares problems. The algorithm

that is taught in high school was named for Gauss only in the 1950s as a result of confusion over the

history of the subject.

Some authors use the term Gaussian elimination to refer only to the procedure until the matrix is in

echelon form, and use the term Gauss–Jordan elimination to refer to the procedure which ends in

reduced echelon form. The name is used because it is a variation of Gaussian elimination as described

by Wilhelm Jordan in 1888. However, the method also appears in an article by Clasen published in the

same year. Jordan and Clasen probably discovered Gauss–Jordan elimination independently.


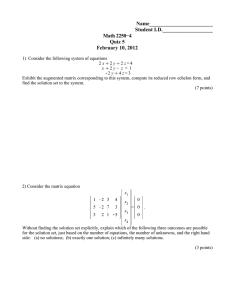

Introduction

Gaussian elimination, also known as row reduction, is an algorithm in linear

algebra for solving a system of linear equations. It is usually understood as a sequence of operations

performed on the corresponding matrix of coefficients. This method can also be used to find the rank of

a matrix, to calculate the determinant of a matrix, and to calculate the inverse of an invertible square

matrix. The method is named after Carl Friedrich Gauss (1777–1855). Some special cases of the method

- albeit presented without proof - were known to Chinese mathematicians as early as circa 179 AD.

To perform row reduction on a matrix, one uses a sequence of elementary row operations to modify the

matrix until the lower left-hand corner of the matrix is filled with zeros, as much as possible. There are

three types of elementary row operations:

Swapping two rows,

Multiplying a row by a nonzero number,

Adding a multiple of one row to another row.
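The three elementary row operations listed above can be sketched in C as follows. This is only an illustrative sketch: the function names `row_swap`, `row_scale` and `row_add_multiple`, and the choice of storing the matrix row by row in a flat array, are assumptions made here and do not come from the programs later in this document.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helpers: the matrix is stored row by row in the flat
   array m, with cols entries per row. */

/* Type 1: swap rows r1 and r2. */
void row_swap(double *m, size_t cols, size_t r1, size_t r2)
{
    for (size_t j = 0; j < cols; j++) {
        double t = m[r1 * cols + j];
        m[r1 * cols + j] = m[r2 * cols + j];
        m[r2 * cols + j] = t;
    }
}

/* Type 2: multiply row r by a nonzero number k. */
void row_scale(double *m, size_t cols, size_t r, double k)
{
    assert(k != 0.0);   /* scaling by zero is not an elementary operation */
    for (size_t j = 0; j < cols; j++)
        m[r * cols + j] *= k;
}

/* Type 3: add k times row src to row dst. */
void row_add_multiple(double *m, size_t cols, size_t dst, size_t src, double k)
{
    assert(dst != src); /* the two rows must be different */
    for (size_t j = 0; j < cols; j++)
        m[dst * cols + j] += k * m[src * cols + j];
}
```

Every step of Gaussian elimination is a call to one of these three operations, so a complete row reduction can be built from them.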

Using these operations, a matrix can always be transformed into an upper triangular matrix, and in fact

one that is in row echelon form. Once all of the leading coefficients (the leftmost nonzero entry in each

row) are 1, and every column containing a leading coefficient has zeros elsewhere, the matrix is said to

be in reduced row echelon form. This final form is unique; in other words, it is independent of the

sequence of row operations used. For example, in the following sequence of row operations (where

multiple elementary operations might be done at each step), the third and fourth matrices are the ones

in row echelon form, and the final matrix is the unique reduced row echelon form.
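The sequence of matrices referred to here is not reproduced in this text. As an illustration only, one sequence that fits the description (the third and fourth matrices are in row echelon form, and the fourth is the reduced row echelon form) is the following; the particular matrix is an assumed example, not necessarily the one in the original figure.

```latex
\begin{bmatrix} 1 & 3 & 1 & 9 \\ 1 & 1 & -1 & 1 \\ 3 & 11 & 5 & 35 \end{bmatrix}
\rightarrow
\begin{bmatrix} 1 & 3 & 1 & 9 \\ 0 & -2 & -2 & -8 \\ 0 & 2 & 2 & 8 \end{bmatrix}
\rightarrow
\begin{bmatrix} 1 & 3 & 1 & 9 \\ 0 & -2 & -2 & -8 \\ 0 & 0 & 0 & 0 \end{bmatrix}
\rightarrow
\begin{bmatrix} 1 & 0 & -2 & -3 \\ 0 & 1 & 1 & 4 \\ 0 & 0 & 0 & 0 \end{bmatrix}
```

The first arrow applies R2 → R2 − R1 and R3 → R3 − 3R1, the second applies R3 → R3 + R2, and the third applies R2 → R2/(−2) followed by R1 → R1 − 3R2.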

Using row operations to convert a matrix into reduced row echelon form is sometimes called Gauss–

Jordan elimination. Some authors use the term Gaussian elimination to refer to the process until it has

reached its upper triangular, or (unreduced) row echelon form. For computational reasons, when solving

systems of linear equations, it is sometimes preferable to stop row operations before the matrix is

completely reduced.


Applications of Gaussian Elimination

Historically, the first application of the row reduction method is for solving systems of linear equations.

Here are some other important applications of the algorithm.

Finding determinants

Finding inverse of matrix

Finding kernel of matrix

Finding rank of matrix

Determining the number of solutions for the given system of equations

Finding the solutions to the system of equations

1.1. Determinants Computing

To explain how Gaussian elimination allows the computation of the determinant of a square

matrix, we have to recall how the elementary row operations change the determinant:

Swapping two rows multiplies the determinant by −1

Multiplying a row by a nonzero scalar multiplies the determinant by the same scalar

Adding to one row a scalar multiple of another does not change the determinant.

If Gaussian elimination applied to a square matrix A produces a row echelon matrix B, let d be the product

of the scalars by which the determinant has been multiplied, using the above rules. Then the determinant

of A is the quotient by d of the product of the elements of the diagonal of B:

$$\det(A) = \frac{\prod \operatorname{diag}(B)}{d}$$

Computationally, for an n × n matrix, this method needs only O(n^3) arithmetic operations, while computing the determinant by elementary methods requires O(2^n) or O(n!) operations. Even on the fastest computers, the elementary methods are impractical for n above 20.

Example:

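$$A = \begin{bmatrix} 2 & 1 & 1 \\ 3 & 2 & 3 \\ 1 & 4 & 9 \end{bmatrix}$$

In each step below, the matrix shown is the current row-reduced matrix and d is the accumulated product of the scalars by which the determinant has been multiplied.

R3 × 2:

$$\begin{bmatrix} 2 & 1 & 1 \\ 3 & 2 & 3 \\ 2 & 8 & 18 \end{bmatrix}, \quad d = 2$$

R3 → R3 − R1:

$$\begin{bmatrix} 2 & 1 & 1 \\ 3 & 2 & 3 \\ 0 & 7 & 17 \end{bmatrix}, \quad d = 2$$

R1 × 3 and R2 × 2:

$$\begin{bmatrix} 6 & 3 & 3 \\ 6 & 4 & 6 \\ 0 & 7 & 17 \end{bmatrix}, \quad d = 2 \times 2 \times 3$$

R2 → R2 − R1:

$$\begin{bmatrix} 6 & 3 & 3 \\ 0 & 1 & 3 \\ 0 & 7 & 17 \end{bmatrix}, \quad d = 2 \times 2 \times 3$$

R2 × 7:

$$\begin{bmatrix} 6 & 3 & 3 \\ 0 & 7 & 21 \\ 0 & 7 & 17 \end{bmatrix}, \quad d = 2 \times 2 \times 3 \times 7$$

R3 → R3 − R2:

$$\begin{bmatrix} 6 & 3 & 3 \\ 0 & 7 & 21 \\ 0 & 0 & -4 \end{bmatrix}, \quad d = 2 \times 2 \times 3 \times 7$$

Now

$$\det A = \frac{6 \times 7 \times (-4)}{2 \times 2 \times 3 \times 7} = \frac{-168}{84} = -2$$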
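The determinant rules above can be sketched as a short C function. This is a minimal sketch under stated assumptions: the name `det_gauss`, the fixed bound `MAXN` and the use of partial pivoting with `double` entries are choices made here for illustration. Unlike the hand computation, it never scales a row, so the only correction to the diagonal product is a sign change for each row swap.

```c
#include <assert.h>
#include <math.h>

#define MAXN 10   /* assumed upper bound on the matrix size */

/* Determinant of the n x n matrix a (n <= MAXN) by Gaussian elimination
   with partial pivoting. The matrix is modified in place. */
double det_gauss(double a[MAXN][MAXN], int n)
{
    assert(n >= 1 && n <= MAXN);
    double det = 1.0;
    for (int k = 0; k < n; k++) {
        /* choose the row with the largest entry in column k as pivot row */
        int p = k;
        for (int r = k + 1; r < n; r++)
            if (fabs(a[r][k]) > fabs(a[p][k]))
                p = r;
        if (a[p][k] == 0.0)
            return 0.0;            /* the whole column is zero: singular */
        if (p != k) {
            for (int j = 0; j < n; j++) {
                double t = a[k][j];
                a[k][j] = a[p][j];
                a[p][j] = t;
            }
            det = -det;            /* a row swap multiplies det by -1 */
        }
        /* adding a multiple of row k to row r does not change det */
        for (int r = k + 1; r < n; r++) {
            double f = a[r][k] / a[k][k];
            for (int j = k; j < n; j++)
                a[r][j] -= f * a[k][j];
        }
        det *= a[k][k];            /* accumulate the product of the diagonal */
    }
    return det;
}
```

Applied to the 3 × 3 matrix of the example, the function should return a value equal to −2 up to rounding error.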


1.2 Finding the inverse of a matrix

Definition –

In linear algebra, an n-by-n square matrix A is called invertible (also nonsingular or

nondegenerate) if there exists an n-by-n square matrix B such that

$AB = BA = I_n$

where $I_n$ denotes the n-by-n identity matrix and the multiplication used is ordinary matrix multiplication. If this is the case, then the matrix B is uniquely determined by A and is called the inverse of A, denoted by $A^{-1}$.

A square matrix that is not invertible is called singular or degenerate. A square matrix is singular if and

only if its determinant is zero. Singular matrices are rare in the sense that the probability that a square

matrix whose real or complex entries are randomly selected from any finite region in the number line or

complex plane is singular is 0, that is, it will "almost never" be singular.

Non-square matrices (m-by-n matrices for which m ≠ n) do not have an inverse. However, in some cases

such a matrix may have a left inverse or right inverse. If A is m-by-n and the rank of A is equal

to n (n ≤ m), then A has a left inverse, an n-by-m matrix B such that BA = In. If A has rank m (m ≤ n),

then it has a right inverse, an n-by-m matrix B such that AB = Im.

Matrix inversion is the process of finding the matrix B that satisfies the prior equation for a given invertible matrix A.

1.2.1 General Structure to find inverse

A variant of Gaussian elimination called Gauss–Jordan elimination can be used for finding the inverse of

a matrix, if it exists. If A is an n × n square matrix, then one can use row reduction to compute its inverse

matrix, if it exists. First, the n × n identity matrix is augmented to the right of A, forming an n × 2n block

matrix [A | I]. Now through application of elementary row operations, find the reduced echelon form of

this n × 2n matrix. The matrix A is invertible if and only if the left block can be reduced to the identity

matrix I; in this case the right block of the final matrix is A−1. If the algorithm is unable to reduce the left

block to I, then A is not invertible.

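$$[A \mid I] = \left[\begin{array}{ccc|ccc} a_{11} & a_{12} & a_{13} & 1 & 0 & 0 \\ a_{21} & a_{22} & a_{23} & 0 & 1 & 0 \\ a_{31} & a_{32} & a_{33} & 0 & 0 & 1 \end{array}\right]$$

Now we reduce the left block to reduced echelon form by using row operations. Then

$$[I \mid B] = \left[\begin{array}{ccc|ccc} 1 & 0 & 0 & b_{11} & b_{12} & b_{13} \\ 0 & 1 & 0 & b_{21} & b_{22} & b_{23} \\ 0 & 0 & 1 & b_{31} & b_{32} & b_{33} \end{array}\right]$$

Now

$$B = \begin{bmatrix} b_{11} & b_{12} & b_{13} \\ b_{21} & b_{22} & b_{23} \\ b_{31} & b_{32} & b_{33} \end{bmatrix}$$

becomes the inverse matrix of A, i.e., $A^{-1} = B$.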

Example:

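$$A = \begin{bmatrix} 2 & -1 & 0 \\ -1 & 2 & -1 \\ 0 & -1 & 2 \end{bmatrix}$$

To find the inverse of this matrix, one takes the following matrix augmented by the identity and row-reduces it as a 3 × 6 matrix.

$$[A \mid I] = \left[\begin{array}{ccc|ccc} 2 & -1 & 0 & 1 & 0 & 0 \\ -1 & 2 & -1 & 0 & 1 & 0 \\ 0 & -1 & 2 & 0 & 0 & 1 \end{array}\right]$$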

By performing row operations, one can check that the reduced row echelon form of this augmented

matrix is

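$$[I \mid B] = \left[\begin{array}{ccc|ccc} 1 & 0 & 0 & \frac{3}{4} & \frac{1}{2} & \frac{1}{4} \\ 0 & 1 & 0 & \frac{1}{2} & 1 & \frac{1}{2} \\ 0 & 0 & 1 & \frac{1}{4} & \frac{1}{2} & \frac{3}{4} \end{array}\right]$$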

One can think of each row operation as the left product by an elementary matrix. Denoting by B the product of these elementary matrices, we showed, on the left, that BA = I, and therefore, $B = A^{-1}$. On the right, we kept a record of BI = B, which we know is the inverse desired. This procedure for finding the inverse works for square matrices of any size.

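$$B = A^{-1} = \begin{bmatrix} \frac{3}{4} & \frac{1}{2} & \frac{1}{4} \\ \frac{1}{2} & 1 & \frac{1}{2} \\ \frac{1}{4} & \frac{1}{2} & \frac{3}{4} \end{bmatrix}$$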
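The [A | I] procedure can be sketched in C as follows. This is a hedged sketch rather than a definitive implementation: the function name `invert`, the fixed size `N = 3`, the tolerance `1e-12` for deciding that a pivot is zero, and the use of partial pivoting are all assumptions introduced here for illustration.

```c
#include <assert.h>
#include <math.h>

#define N 3   /* matrix size assumed for this sketch */

/* Invert the N x N matrix a by Gauss-Jordan elimination on [A | I].
   Writes the inverse to inv and returns 1, or returns 0 when a is
   singular (to within the assumed tolerance 1e-12). */
int invert(double a[N][N], double inv[N][N])
{
    assert(a && inv);              /* both arrays must be provided */
    double aug[N][2 * N];

    /* build the augmented matrix [A | I] */
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++) {
            aug[i][j] = a[i][j];
            aug[i][N + j] = (i == j) ? 1.0 : 0.0;
        }

    for (int k = 0; k < N; k++) {
        /* partial pivoting: bring the largest entry of column k to row k */
        int p = k;
        for (int r = k + 1; r < N; r++)
            if (fabs(aug[r][k]) > fabs(aug[p][k]))
                p = r;
        if (fabs(aug[p][k]) < 1e-12)
            return 0;              /* left block cannot be reduced to I */
        for (int j = 0; j < 2 * N; j++) {
            double t = aug[k][j];
            aug[k][j] = aug[p][j];
            aug[p][j] = t;
        }
        /* scale the pivot row so that the pivot becomes 1 */
        double piv = aug[k][k];
        for (int j = 0; j < 2 * N; j++)
            aug[k][j] /= piv;
        /* clear column k in every other row */
        for (int r = 0; r < N; r++) {
            if (r == k)
                continue;
            double f = aug[r][k];
            for (int j = 0; j < 2 * N; j++)
                aug[r][j] -= f * aug[k][j];
        }
    }

    /* the right block now holds the inverse */
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++)
            inv[i][j] = aug[i][N + j];
    return 1;
}
```

For the matrix A of the example, the right block should come out as the fractions 3/4, 1/2, 1/4 and so on, up to rounding error.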

1.3 Finding Kernel of a Matrix

Definition

In mathematics, more specifically in linear algebra and functional analysis, the kernel of

a linear mapping, also known as the null space or nullspace, is the set of vectors in the domain of the

mapping which are mapped to the zero vector.[1][2] That is, given a linear map L : V → W between

two vector spaces V and W, the kernel of L is the set of all elements v of V for which L(v) = 0,

where 0 denotes the zero vector in W,[3] or more symbolically:

$$\ker(L) = \{\, v \in V : L(v) = 0 \,\}$$

1.3.1 Computations by gaussian Elimination

A basis of the kernel of a matrix may be computed by Gaussian elimination. For this purpose, given an m × n matrix A, we first construct the row augmented matrix $\begin{bmatrix} A \\ I \end{bmatrix}$, where I is the n × n identity matrix.

Computing its column echelon form by Gaussian elimination (or any other suitable method), we get a matrix $\begin{bmatrix} B \\ C \end{bmatrix}$. A basis of the kernel of A consists of the non-zero columns of C such that the corresponding column of B is a zero column. In fact, the computation may be stopped as soon as the upper matrix is in column echelon form: the remainder of the computation consists in changing the basis of the vector space generated by the columns whose upper part is zero.

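For example, suppose that

$$A = \begin{bmatrix} 1 & 0 & -3 & 0 & 2 & -8 \\ 0 & 1 & 5 & 0 & -1 & 4 \\ 0 & 0 & 0 & 1 & 7 & -9 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{bmatrix}$$

Then,

$$\begin{bmatrix} A \\ I \end{bmatrix} = \begin{bmatrix} 1 & 0 & -3 & 0 & 2 & -8 \\ 0 & 1 & 5 & 0 & -1 & 4 \\ 0 & 0 & 0 & 1 & 7 & -9 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \end{bmatrix}$$

Putting the upper part in column echelon form by column operations on the whole matrix gives

$$\begin{bmatrix} B \\ C \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 1 & 0 & 0 & 3 & -2 & 8 \\ 0 & 1 & 0 & -5 & 1 & -4 \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 & -7 & 9 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \end{bmatrix}$$

The last three columns of B are zero columns. Therefore, the three last vectors of C,

$$\begin{bmatrix} 3 \\ -5 \\ 1 \\ 0 \\ 0 \\ 0 \end{bmatrix}, \quad \begin{bmatrix} -2 \\ 1 \\ 0 \\ -7 \\ 1 \\ 0 \end{bmatrix}, \quad \begin{bmatrix} 8 \\ -4 \\ 0 \\ 9 \\ 0 \\ 1 \end{bmatrix}$$

are a basis of the kernel of A.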

Proof that the method computes the kernel: since column operations correspond to post-multiplication by invertible matrices, the fact that $\begin{bmatrix} A \\ I \end{bmatrix}$ reduces to $\begin{bmatrix} B \\ C \end{bmatrix}$ means that there exists an invertible matrix P such that

$$\begin{bmatrix} A \\ I \end{bmatrix} P = \begin{bmatrix} B \\ C \end{bmatrix},$$

with B in column echelon form. Thus AP = B, IP = C, and AC = B. A column vector v belongs to the kernel of A (that is, Av = 0) if and only if Bw = 0, where $w = P^{-1}v = C^{-1}v$. As B is in column echelon form, Bw = 0 if and only if the nonzero entries of w correspond to the zero columns of B. By multiplying by C, one may deduce that this is the case if and only if v = Cw is a linear combination of the corresponding columns of C.
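The column-operation method described in this section can be sketched in C as follows. This is an illustrative sketch only: the function name `kernel_basis`, the bounds `MAXM` and `MAXN`, the tolerance `EPS` and the choice of the largest entry in each row as pivot are assumptions made here. The sketch stops as soon as the upper block is in column echelon form, which the text notes is sufficient.

```c
#include <assert.h>
#include <math.h>

#define MAXM 10     /* assumed maximum number of rows of A    */
#define MAXN 10     /* assumed maximum number of columns of A */
#define EPS  1e-9   /* entries below this are treated as zero */

/* Compute a basis of the kernel of the m x n matrix a by column
   operations on the stacked matrix [A; I]. basis[k] receives the k-th
   basis vector (n entries). Returns the dimension of the kernel. */
int kernel_basis(double a[MAXM][MAXN], int m, int n,
                 double basis[MAXN][MAXN])
{
    assert(m >= 1 && m <= MAXM && n >= 1 && n <= MAXN);
    double s[MAXM + MAXN][MAXN];

    /* upper block: A, lower block: the n x n identity */
    for (int i = 0; i < m; i++)
        for (int j = 0; j < n; j++)
            s[i][j] = a[i][j];
    for (int i = 0; i < n; i++)
        for (int j = 0; j < n; j++)
            s[m + i][j] = (i == j) ? 1.0 : 0.0;

    int lead = 0;   /* next column that should receive a pivot */
    for (int r = 0; r < m && lead < n; r++) {
        /* pick the largest entry of row r among columns lead..n-1 */
        int p = lead;
        for (int c = lead + 1; c < n; c++)
            if (fabs(s[r][c]) > fabs(s[r][p]))
                p = c;
        if (fabs(s[r][p]) < EPS)
            continue;               /* row r has no pivot */
        /* swap columns p and lead over the whole stacked matrix */
        for (int i = 0; i < m + n; i++) {
            double t = s[i][lead];
            s[i][lead] = s[i][p];
            s[i][p] = t;
        }
        /* clear row r to the right of the pivot by column operations */
        for (int c = lead + 1; c < n; c++) {
            double f = s[r][c] / s[r][lead];
            for (int i = 0; i < m + n; i++)
                s[i][c] -= f * s[i][lead];
        }
        lead++;
    }

    /* columns lead..n-1 have a zero upper part; their lower parts
       form a basis of the kernel */
    for (int c = lead; c < n; c++)
        for (int i = 0; i < n; i++)
            basis[c - lead][i] = s[m + i][c];
    return n - lead;
}
```

Because the pivots are chosen by size, the basis vectors returned may differ from the ones obtained by hand, but they span the same kernel.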


1.4 Rank of matrix

Definition

In linear algebra, the rank of a matrix A is the dimension of the vector space generated (or spanned) by its columns. This corresponds to the maximal number of linearly independent columns of A. This, in turn, is identical to the dimension of the vector space spanned by its rows. Rank is thus a measure of the "nondegenerateness" of the system of linear equations and linear transformation encoded by A. There are multiple equivalent definitions of rank. A matrix's rank is one of its most fundamental characteristics.

The rank is commonly denoted by rank(A) or rk(A); sometimes the parentheses are not written, as in

rank A.

1.4.1 General process:

matrix = 'the matrix whose rank you want to find'

m2 = ref(matrix)

rank = number_of_non_zero_rows(m2)

where ref(matrix) is a function that performs ordinary Gaussian elimination, and number_of_non_zero_rows(m2) is a function that counts the rows with non-zero entries. All cases of linear combinations resulting in dependencies are taken care of by the ref (Gaussian elimination) step. Incidentally, this works no matter what the dimensions of the matrix are.

1.4.2 Computed by Gaussian elimination

The Gaussian elimination algorithm can be applied to any m × n matrix A. In this way, for example, some 6 × 9 matrices can be transformed to a matrix that has a row echelon form like

$$T = \begin{bmatrix} a & * & * & * & * & * & * & * & * \\ 0 & 0 & b & * & * & * & * & * & * \\ 0 & 0 & 0 & c & * & * & * & * & * \\ 0 & 0 & 0 & 0 & 0 & 0 & d & * & * \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & e \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \end{bmatrix}$$

where the stars are arbitrary entries, and a, b, c, d, e are nonzero entries. This echelon matrix T contains a wealth of information about A: the rank of A is 5, since there are 5 nonzero rows in T; the vector space spanned by the columns of A has a basis consisting of its columns 1, 3, 4, 7 and 9 (the columns with a, b, c, d, e in T), and the stars show how the other columns of A can be written as linear combinations of the basis columns. This is a consequence of the distributivity of the dot product in the expression of a linear map as a matrix.

All of this applies also to the reduced row echelon form, which is a particular row echelon form.

Example:

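$$A = \begin{bmatrix} 3 & -4 & 21 \\ 0 & 42 & -21 \\ 1 & 12 & 0 \\ 2 & 54 & -15 \end{bmatrix}$$

R2 → R2/21:

$$\begin{bmatrix} 3 & -4 & 21 \\ 0 & 2 & -1 \\ 1 & 12 & 0 \\ 2 & 54 & -15 \end{bmatrix}$$

R3 → 3R3 − R1 and R4 → 3R4 − 2R1:

$$\begin{bmatrix} 3 & -4 & 21 \\ 0 & 2 & -1 \\ 0 & 40 & -21 \\ 0 & 170 & -87 \end{bmatrix}$$

C2 → C2/2:

$$\begin{bmatrix} 3 & -2 & 21 \\ 0 & 1 & -1 \\ 0 & 20 & -21 \\ 0 & 85 & -87 \end{bmatrix}$$

R3 → R3 − 20R2 and R4 → R4 − 85R2:

$$\begin{bmatrix} 3 & -2 & 21 \\ 0 & 1 & -1 \\ 0 & 0 & -1 \\ 0 & 0 & -2 \end{bmatrix}$$

R4 → R4 − 2R3:

$$\begin{bmatrix} 3 & -2 & 21 \\ 0 & 1 & -1 \\ 0 & 0 & -1 \\ 0 & 0 & 0 \end{bmatrix}$$

which is in row echelon form.

Rank(A) = number of non-zero rows = 3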


1.4.3 C Program for finding rank of a ‘Matrix’

    #include <stdio.h>
    #include <math.h>

    #define MAX 10
    #define EPS 1e-9   /* entries smaller than this are treated as zero */

    int R, C;
    double mat[MAX][MAX];   /* double, so that the ratios are not truncated */

    void display(int, int);
    void input(int, int);
    int Rank_Mat(int, int);
    void swap(int, int, int);

    /* This function exchanges two rows of the matrix */
    void swap(int row1, int row2, int col)
    {
        int i;
        for (i = 0; i < col; i++)
        {
            double temp = mat[row1][i];
            mat[row1][i] = mat[row2][i];
            mat[row2][i] = temp;
        }
    }

    /* This function reduces the matrix to row echelon form
       and returns its rank (the number of pivots found) */
    int Rank_Mat(int row1, int col1)
    {
        int r, c, i;
        int rank = 0;

        for (c = 0; c < col1 && rank < row1; c++)
        {
            /* Find the largest entry in column c at or below row 'rank' */
            int pivot = rank;
            for (r = rank + 1; r < row1; r++)
                if (fabs(mat[r][c]) > fabs(mat[pivot][c]))
                    pivot = r;

            /* The column has no pivot: move on to the next column */
            if (fabs(mat[pivot][c]) < EPS)
                continue;

            swap(rank, pivot, col1);

            /* Make all the elements below the pivot zero */
            for (r = rank + 1; r < row1; r++)
            {
                double ratio = mat[r][c] / mat[rank][c];
                for (i = c; i < col1; i++)
                    mat[r][i] -= ratio * mat[rank][i];
            }
            rank++;
        }
        return rank;
    }

    /* Output function */
    void display(int row, int col)
    {
        int i, j;
        for (i = 0; i < row; i++)
        {
            for (j = 0; j < col; j++)
                printf(" %g", mat[i][j]);
            printf("\n");
        }
    }

    /* Input function */
    void input(int row, int col)
    {
        int i, j;
        for (i = 0; i < row; i++)
        {
            for (j = 0; j < col; j++)
            {
                printf("Input Value for: %d: %d: ", i + 1, j + 1);
                if (scanf("%lf", &mat[i][j]) != 1)
                    mat[i][j] = 0.0;   /* unreadable input is stored as zero */
            }
        }
    }

    /* main function */
    int main(void)
    {
        int rank;
        printf("\n Input number of rows:");
        scanf("%d", &R);
        printf("\n Input number of cols:");
        scanf("%d", &C);
        if (R < 1 || R > MAX || C < 1 || C > MAX)
        {
            printf("\n Rows and columns must be between 1 and %d\n", MAX);
            return 1;
        }
        input(R, C);
        printf("\n Entered Two Dimensional array is as follows:\n");
        display(R, C);
        printf("\n Row is : %d", R);
        printf("\n Column is : %d\n", C);
        rank = Rank_Mat(R, C);
        printf("\n Rank of above matrix is : %d\n", rank);
        return 0;
    }

Output:


1.5 Number of Solutions of the System of Equations

A system of linear equations usually has a single solution, but sometimes it can have no solution (parallel lines) or infinitely many solutions (the same line).

1.5.1 Hierarchy

Solutions

Consistent: one solution, or infinite solutions

Inconsistent: no solutions

One solution. A system of linear equations has one solution when the graphs intersect at a point.

Example:

We are asked to find the number of solutions to this system of equations.

y=-6x+8

3x+y=-4

Let’s put them in slope-intercept form:

y = -6x+8

y = -3x-4

The slopes (-6 and -3) are different, so the lines intersect at exactly one point. Solving the equations gives the unique values x = 4 and y = -16.


No solutions. A system of linear equations has no solutions when the graphs are parallel.

Example:

We are asked to find the number of solutions to this system of equations.

y=-3x+9

y=-3x-7

Without graphing these equations, we can observe that both have a slope of -3. This means that the lines must be parallel. And since the y-intercepts are different, we know that the lines are not on top of each other.

There are no solutions to this system of equations.

Infinite solutions. A system of linear equations has infinitely many solutions when the graphs are the exact same line.

Example:

We are asked to find the solutions to this system of equations:

-6x + 4y = 2

3x - 2y = -1

Interestingly, if we multiply the second equation by -2, we get the first equation.

3x-2y=-1

-2(3x - 2y) = -2(-1)

-6x + 4y = 2


1.5.2 Procedure to Find Rank Method

i. First, write the given equations in the form AX = B.

ii. Then write the augmented matrix [A, B].

iii. Then find the ranks of the matrices A and [A, B] by applying elementary row operations.

iv. If rank(A) = rank([A, B]) = number of unknowns, then the system is consistent and it has a unique solution.

v. If rank(A) = rank([A, B]) < number of unknowns, then the system is consistent and it has infinitely many solutions.

vi. If rank(A) ≠ rank([A, B]), then the system is inconsistent and it has no solution.

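Example

Let the given system of equations be

x + 3y - 2z = 5

3x + 5y + 6z = 7

2x + 4y + 3z = 8

Row-reducing the augmented matrix gives

$$[A, B] = \left[\begin{array}{ccc|c} 1 & 3 & -2 & 5 \\ 3 & 5 & 6 & 7 \\ 2 & 4 & 3 & 8 \end{array}\right] \sim \left[\begin{array}{ccc|c} 1 & 3 & -2 & 5 \\ 0 & 1 & -3 & 2 \\ 0 & 0 & 1 & 2 \end{array}\right]$$

Therefore, rank of A = rank of [A, B] = number of unknowns = 3, and the given system of equations has the unique solution z = 2, y = 8, and x = -15.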
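The rank test of this procedure can be sketched in C as follows. The names `rank_of` and `count_solutions`, the array bounds, the tolerance `EPS` and the return codes 0, 1 and 2 are assumptions chosen here for illustration; the sketch simply compares rank(A) with rank([A, B]) as in steps iv to vi.

```c
#include <assert.h>
#include <math.h>

#define MAXR 10     /* assumed maximum number of equations           */
#define MAXC 11     /* assumed maximum number of columns of [A, B]   */
#define EPS  1e-9   /* entries below this are treated as zero        */

/* Rank of the first cols columns of m (rows x cols), computed on a
   copy so that m itself is left unchanged. */
static int rank_of(double m[MAXR][MAXC], int rows, int cols)
{
    double a[MAXR][MAXC];
    for (int i = 0; i < rows; i++)
        for (int j = 0; j < cols; j++)
            a[i][j] = m[i][j];

    int rank = 0;
    for (int c = 0; c < cols && rank < rows; c++) {
        int p = rank;
        for (int r = rank + 1; r < rows; r++)
            if (fabs(a[r][c]) > fabs(a[p][c]))
                p = r;
        if (fabs(a[p][c]) < EPS)
            continue;               /* no pivot in this column */
        for (int j = 0; j < cols; j++) {
            double t = a[rank][j];
            a[rank][j] = a[p][j];
            a[p][j] = t;
        }
        for (int r = rank + 1; r < rows; r++) {
            double f = a[r][c] / a[rank][c];
            for (int j = c; j < cols; j++)
                a[r][j] -= f * a[rank][j];
        }
        rank++;
    }
    return rank;
}

/* aug holds [A, B] for a system with n unknowns, so it has n + 1 columns.
   Returns 0 for no solution, 1 for a unique solution and
   2 for infinitely many solutions. */
int count_solutions(double aug[MAXR][MAXC], int rows, int n)
{
    assert(rows >= 1 && rows <= MAXR && n >= 1 && n + 1 <= MAXC);
    int rank_a  = rank_of(aug, rows, n);       /* rank of A      */
    int rank_ab = rank_of(aug, rows, n + 1);   /* rank of [A, B] */
    if (rank_a != rank_ab)
        return 0;                   /* inconsistent */
    return (rank_a == n) ? 1 : 2;
}
```

For the parallel lines 3x + y = 9 and 3x + y = -7 of Section 1.5.1, rank(A) is 1 and rank([A, B]) is 2, so the function would report no solution.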


1.6 Finding Solutions to the System of Equations

1.6.1 Introduction

In mathematics, a system of linear equations (or linear system) is a collection of one or

more linear equations involving the same set of variables. For example,

3x + 2y - z = 1

2x - 2y + 4z = -2

-x + (1/2)y - z = 0

is a system of three equations in the three variables x, y, z. A solution to a linear system is an

assignment of values to the variables such that all the equations are simultaneously satisfied.

A solution to the system above is given by

x=1

y = -2

z = -2

since it makes all three equations valid. The word "system" indicates that the equations are to be

considered collectively, rather than individually.

In mathematics, the theory of linear systems is the basis and a fundamental part of linear algebra, a

subject which is used in most parts of modern mathematics. Computational algorithms for finding the

solutions are an important part of numerical linear algebra, and play a prominent role

in engineering, physics, chemistry, computer science, and economics. A system of non-linear

equations can often be approximated by a linear system (see linearization), a helpful technique when

making a mathematical model or computer simulation of a relatively complex system.

Very often, the coefficients of the equations are real or complex numbers and the solutions are searched

in the same set of numbers, but the theory and the algorithms apply for coefficients and solutions in

any field. For solutions in an integral domain like the ring of the integers, or in other algebraic structures,

other theories have been developed, see Linear equation over a ring. Integer linear programming is a

collection of methods for finding the "best" integer solution (when there are many). Gröbner basis theory

provides algorithms when coefficients and unknowns are polynomials. Also tropical geometry is an

example of linear algebra in a more exotic structure.

1.6.2 General Form

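A general system of m linear equations with n unknowns can be written as

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\ &\;\;\vdots \\ a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= b_m \end{aligned}$$

where $x_1, x_2, \ldots, x_n$ are the unknowns, $a_{11}, a_{12}, \ldots, a_{mn}$ are the coefficients of the system, and $b_1, b_2, \ldots, b_m$ are the constant terms.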

Often the coefficients and unknowns are real or complex numbers, but integers and rational

numbers are also seen, as are polynomials and elements of an abstract algebraic structure.

1.6.3 Procedure to Solve

Describing the solution

When the solution set is finite, it is reduced to a single element. In this case, the unique

solution is described by a sequence of equations whose left-hand sides are the names of the

unknowns and right-hand sides are the corresponding values, for example (x = 3, y = -2, z =6). When

an order on the unknowns has been fixed, for example the alphabetical order, the solution may be

described as a vector of values, like (3, -2, 6) for the previous example.

To describe a set with an infinite number of solutions, typically some of the variables are designated

as free (or independent, or as parameters), meaning that they are allowed to take any value, while the

remaining variables are dependent on the values of the free variables.

For example, consider the following system:

x + 3y - 2z = 5

3x + 5y + 6z = 7

The solution set to this system can be described by the following equations:

x = -7z - 1 and y = 3z + 2

Here z is the free variable, while x and y are dependent on z. Any point in the solution set can be obtained by first choosing a value for z, and then computing the corresponding values for x and y.

Each free variable gives the solution space one degree of freedom, the number of which is equal to the dimension of the solution set. For example, the solution set for the above system is a line, since a point in the solution set can be chosen by specifying the value of the parameter z. An infinite solution set of higher dimension may describe a plane, or a higher-dimensional set.

Different choices for the free variables may lead to different descriptions of the same solution set. For example, the solution to the above system can alternatively be described as follows:

$$y = -\frac{3}{7}x + \frac{11}{7} \quad \text{and} \quad z = -\frac{1}{7}x - \frac{1}{7}$$

Here x is the free variable, and y and z are dependent.

Elimination of Variables

The simplest method for solving a system of linear equations is to repeatedly eliminate variables.

This method can be described as follows:

1. In the first equation, solve for one of the variables in terms of the others.

2. Substitute this expression into the remaining equations. This yields a system of equations with one

fewer equation and one fewer unknown.

3. Repeat until the system is reduced to a single linear equation.

4. Solve this equation, and then back-substitute until the entire solution is found.

For example, consider the following system:

x+3y-2z=5

3x+5y+6z=7

2x+4y+3z=8

Solving the first equation for x gives x = 5 + 2z − 3y, and plugging this into the second and third

equation yields

-4y+12z=-8

-2y+7z=-2

Solving the first of these equations for y yields y = 2 + 3z, and plugging this into the second equation

yields z = 2. We now have:

x = 5 + 2z - 3y

y = 2 + 3z

z = 2

Substituting z = 2 into the second equation gives y = 8, and substituting z = 2 and y = 8 into the first

equation yields x = −15. Therefore, the solution set is the single point (x, y, z) = (−15, 8, 2).

Row reduction

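In row reduction (also known as Gaussian elimination), the linear system is represented as an augmented matrix. For the system above, this is:

$$\left[\begin{array}{ccc|c} 1 & 3 & -2 & 5 \\ 3 & 5 & 6 & 7 \\ 2 & 4 & 3 & 8 \end{array}\right]$$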

This matrix is then modified using elementary row operations until it reaches reduced row echelon form.

There are three types of elementary row operations:

Type 1: Swap the positions of two rows.

Type 2: Multiply a row by a nonzero scalar.

Type 3: Add to one row a scalar multiple of another.

Because these operations are reversible, the augmented matrix produced always represents a linear

system that is equivalent to the original.

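There are several specific algorithms to row-reduce an augmented matrix, the simplest of which are Gaussian elimination and Gauss–Jordan elimination. The following computation shows row reduction applied to the augmented matrix of the system above, stopping at row echelon form:

$$\left[\begin{array}{ccc|c} 1 & 3 & -2 & 5 \\ 3 & 5 & 6 & 7 \\ 2 & 4 & 3 & 8 \end{array}\right] \sim \left[\begin{array}{ccc|c} 1 & 3 & -2 & 5 \\ 0 & -4 & 12 & -8 \\ 0 & -2 & 7 & -2 \end{array}\right] \sim \left[\begin{array}{ccc|c} 1 & 3 & -2 & 5 \\ 0 & 1 & -3 & 2 \\ 0 & -2 & 7 & -2 \end{array}\right] \sim \left[\begin{array}{ccc|c} 1 & 3 & -2 & 5 \\ 0 & 1 & -3 & 2 \\ 0 & 0 & 1 & 2 \end{array}\right]$$

From the resultant matrix, we redefine the system of equations as

x + 3y - 2z = 5;

y - 3z = 2;

z = 2.

Back substitution gives y = 2 + 3(2) = 8 and x = 5 - 3(8) + 2(2) = -15. Therefore x = -15; y = 8; z = 2.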
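The row reduction and back substitution above can be sketched in C for a general n × n system. This is a minimal sketch under stated assumptions: the function name `solve`, the bound `MAXN`, the tolerance `1e-12` and the use of partial pivoting are illustrative choices made here, not part of the method as described in the text.

```c
#include <assert.h>
#include <math.h>

#define MAXN 10   /* assumed upper bound on the number of unknowns */

/* Solve the n x n linear system stored as the augmented matrix aug
   (n rows, n + 1 columns). On success the solution is stored in x and
   1 is returned; 0 is returned when a pivot is numerically zero, that
   is, when there is no unique solution. aug is modified in place. */
int solve(double aug[MAXN][MAXN + 1], int n, double x[MAXN])
{
    assert(n >= 1 && n <= MAXN);

    /* forward elimination to row echelon form */
    for (int k = 0; k < n; k++) {
        int p = k;
        for (int r = k + 1; r < n; r++)
            if (fabs(aug[r][k]) > fabs(aug[p][k]))
                p = r;
        if (fabs(aug[p][k]) < 1e-12)
            return 0;
        for (int j = 0; j <= n; j++) {       /* swap rows k and p */
            double t = aug[k][j];
            aug[k][j] = aug[p][j];
            aug[p][j] = t;
        }
        for (int r = k + 1; r < n; r++) {    /* zero the entries below the pivot */
            double f = aug[r][k] / aug[k][k];
            for (int j = k; j <= n; j++)
                aug[r][j] -= f * aug[k][j];
        }
    }

    /* back substitution, starting from the last equation */
    for (int i = n - 1; i >= 0; i--) {
        double sum = aug[i][n];
        for (int j = i + 1; j < n; j++)
            sum -= aug[i][j] * x[j];
        x[i] = sum / aug[i][i];
    }
    return 1;
}
```

Stopping at row echelon form and back-substituting, rather than continuing to reduced row echelon form, matches the remark in the Introduction that it is sometimes preferable to stop row operations before the matrix is completely reduced.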

1.6.4 C Algorithm

Step 1: Start.

Step 2: Read the given matrix.

Step 3: Display the matrix.

Step 4: Make the first elements of the 2nd and 3rd rows zero, using the 1st element of the 1st row. If the 1st element of the 1st row is 0, then use the row swap function.

Step 5: Make the 2nd element of the 3rd row zero by using the 2nd element of the 2nd row; use the row swap function if needed.

Step 6: Print the resultant row echelon form matrix.

Step 7: Redefine the system of equations by using the resultant row echelon form of the matrix.

Step 8: Find the solutions by solving these equations.

Step 9: Stop.

1.6.5 C Program for Solving System of Equations

    #include <stdio.h>

    /* function declarations */
    void displaymatrix(int a[3][4]);
    void swapmat(int a[3][4], int row1, int row2);
    int eliminate(int a[3][4]);

    int main(void)
    {
        int mat[3][4], i, j;
        float x, y, z;

        /* Reading the augmented matrix */
        printf("Enter matrix (3X4)\n");
        for (i = 0; i < 3; i++) {
            for (j = 0; j < 4; j++) {
                if (scanf("%d", &mat[i][j]) != 1) {
                    printf("invalid input\n");
                    return 1;
                }
            }
        }
        displaymatrix(mat);

        /* Reduce to row echelon form; stop if a pivot cannot be found */
        if (!eliminate(mat)) {
            printf("invalid matrix: the system has no unique solution\n");
            return 1;
        }

        printf("\nResults : \n");
        displaymatrix(mat);

        /* Back substitution; the cast avoids integer division */
        z = (float)mat[2][3] / mat[2][2];
        y = (mat[1][3] - z * mat[1][2]) / mat[1][1];
        x = (mat[0][3] - y * mat[0][1] - z * mat[0][2]) / mat[0][0];

        printf("\nx = %.2f", x);
        printf("\ny = %.2f", y);
        printf("\nz = %.2f\n", z);
        return 0;
    }

    /* Zero the entries below each pivot by cross-multiplication, which
       keeps every entry an integer. Returns 0 if some column has no
       nonzero pivot, and 1 otherwise. */
    int eliminate(int a[3][4])
    {
        int p, r, s, j;
        for (p = 0; p < 3; p++) {
            /* find the first row at or below p with a nonzero entry in column p */
            for (s = p; s < 3 && a[s][p] == 0; s++)
                ;
            if (s == 3)
                return 0;
            if (s != p)
                swapmat(a, p, s);
            for (r = p + 1; r < 3; r++) {
                int pivot = a[p][p];
                int b = a[r][p];
                /* new row r = pivot * row r - b * row p, so a[r][p] becomes 0 */
                for (j = 0; j < 4; j++)
                    a[r][j] = pivot * a[r][j] - b * a[p][j];
            }
        }
        return 1;
    }

    void displaymatrix(int a[3][4])
    {
        int i, j;
        printf("\nMatrix Elements are \n");
        for (i = 0; i < 3; i++) {
            for (j = 0; j < 4; j++) {
                printf(" %d ", a[i][j]);
            }
            printf("\n");
        }
    }

    /* Exchange rows row1 and row2 of the matrix */
    void swapmat(int a[3][4], int row1, int row2)
    {
        int j, temp;
        printf("\nSwapping rows %d and %d\n", row1 + 1, row2 + 1);
        for (j = 0; j < 4; j++) {
            temp = a[row1][j];
            a[row1][j] = a[row2][j];
            a[row2][j] = temp;
        }
        printf("\nMatrix after swapping\n");
        displaymatrix(a);
    }

Output:
