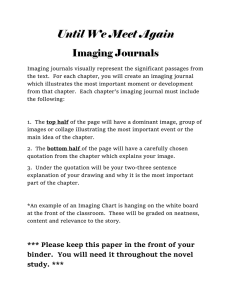

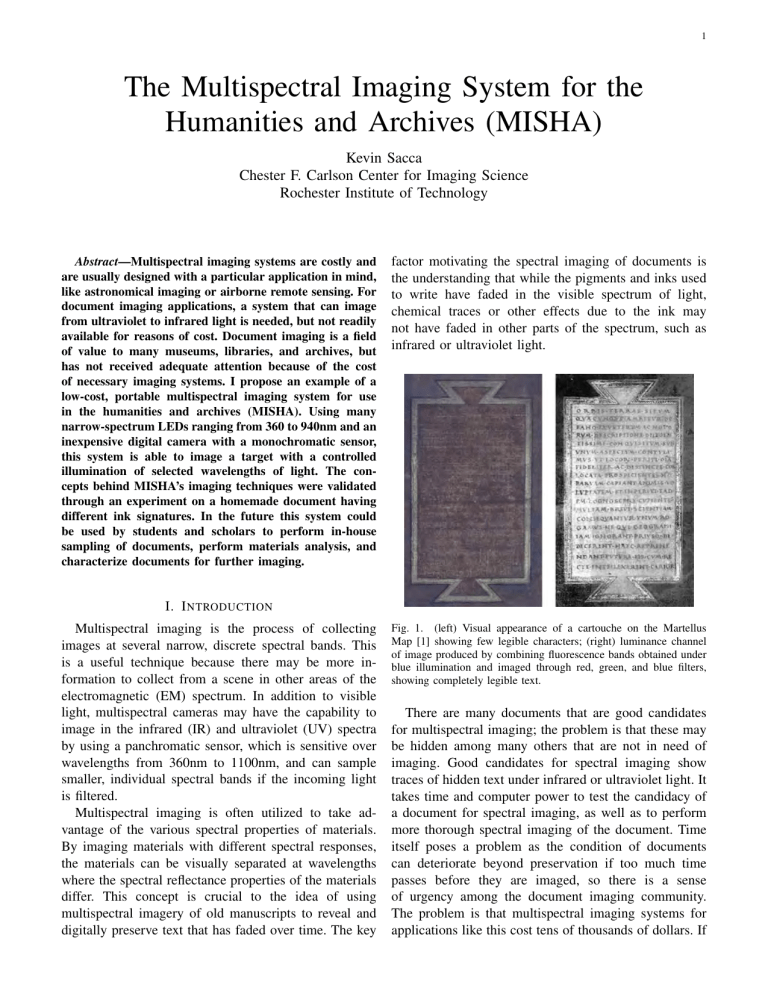

The Multispectral Imaging System for the Humanities and Archives (MISHA)
Kevin Sacca
Chester F. Carlson Center for Imaging Science, Rochester Institute of Technology

Abstract—Multispectral imaging systems are costly and are usually designed for a particular application, such as astronomical imaging or airborne remote sensing. Document imaging requires a system that can image from ultraviolet to infrared light, but such systems are not readily available because of their cost. Document imaging is valuable to many museums, libraries, and archives, yet it has not received adequate attention because of the cost of the necessary imaging systems. I propose an example of a low-cost, portable multispectral imaging system for use in the humanities and archives (MISHA). Using many narrow-spectrum LEDs ranging from 360 to 940nm and an inexpensive digital camera with a monochromatic sensor, this system images a target under controlled illumination at selected wavelengths. The concepts behind MISHA’s imaging techniques were validated through an experiment on a homemade document bearing different ink signatures. In the future, this system could be used by students and scholars to perform in-house sampling of documents, perform materials analysis, and characterize documents for further imaging.

I. INTRODUCTION

Multispectral imaging is the process of collecting images in several narrow, discrete spectral bands. This technique is useful because a scene may hold more information in other regions of the electromagnetic (EM) spectrum. In addition to visible light, multispectral cameras may image in the infrared (IR) and ultraviolet (UV) by using a panchromatic sensor, which is sensitive over wavelengths from 360nm to 1100nm, and they can sample narrower individual spectral bands if the incoming light is filtered. Multispectral imaging is often used to exploit the differing spectral properties of materials: materials with different spectral responses can be visually separated at wavelengths where their spectral reflectances differ. This concept is central to using multispectral imagery of old manuscripts to reveal and digitally preserve text that has faded over time. The key factor motivating the spectral imaging of documents is the understanding that while the pigments and inks used in writing have faded in the visible spectrum, chemical traces or other effects of the ink may not have faded in other parts of the spectrum, such as infrared or ultraviolet light.

Fig. 1. (left) Visual appearance of a cartouche on the Martellus Map [1] showing few legible characters; (right) luminance channel of an image produced by combining fluorescence bands obtained under blue illumination and imaged through red, green, and blue filters, showing completely legible text.

Many documents are good candidates for multispectral imaging; the problem is that they may be hidden among many others that do not need imaging. Good candidates for spectral imaging show traces of hidden text under infrared or ultraviolet light. Testing a document’s candidacy for spectral imaging takes time and computing power, as does more thorough spectral imaging of the document. Time itself is a problem, because documents can deteriorate beyond preservation if too much time passes before they are imaged, so there is a sense of urgency in the document imaging community. The problem is that multispectral imaging systems for applications like this cost tens of thousands of dollars.
If a cheaper, more accessible multispectral imaging system were readily available to scholars, the number of documents waiting in the queue for imaging, processing, and digital preservation would decrease.

II. BACKGROUND

There is a present and growing need for spectral imaging of historic manuscripts, paintings, and palimpsests. Over time, such delicate artifacts may fade, decay, or even accumulate mold if kept in less than optimal conditions. Many other documents were damaged intentionally during wars and are now unreadable. Additionally, there are palimpsests: manuscripts that were erased and written over, often by members of religious orders who could not acquire new substrate on which to copy religious texts. Despite these types of damage, spectral imaging and processing can reveal and preserve the information that was originally there.

The Early Manuscripts Electronic Library (EMEL) is an organization that strives to provide libraries and archives with processed digital copies of the originals they possess [3]. This group not only uses high-quality imaging systems to capture superb images, but also images documents extensively with a multispectral system if a document is a good candidate. Dr. Roger Easton is a colleague of EMEL and has worked with EMEL on various projects, such as the Mount Sinai Project, the Archimedes Palimpsest, the St. Catherine’s Palimpsests, and David Livingstone’s diary pages [2]. These documents are very important to scholars, and he has spent considerable time developing imaging techniques to reveal, enhance, and preserve texts that were thought to be lost. There is still much work to be done: it is estimated that over 60,000 documents are waiting to be imaged.
The Palimpsests Project is an international coalition of scholars, graduate researchers, and undergraduate research students working to make the imaging technologies, processing, and analysis of multispectral images of palimpsests more widely available [2]. Numerous libraries have a definite need for affordable, accessible, and open-source technologies to help them preserve their catalogs of manuscripts. If a low-cost, portable, and effective multispectral imaging system were widely available, smaller groups around the world could take up the catalog of palimpsests and other manuscripts that need to be imaged.

III. REQUIREMENTS

The key components of this system are the camera (mainly the sensor), the illumination source(s), and the lens. The system must image the field under multiple narrow-band illuminations. Researchers have found that combining white light sources with different narrow-bandpass filters over the sensor is not the best way to collect multispectral imagery. The broad-spectrum white light source has been found to damage the document because of its high-energy output of light that is not imaged. A solution to this problem is to use narrow-spectrum LEDs that illuminate the document with only the energy to be imaged. This method can also be supplemented with color filters, which can yield spectral bands with bandpasses of around 10-30nm.

Fig. 2. Example leaf from the Archimedes Palimpsest [2]: (a) visual appearance; (b) processed pseudocolor. Note the deterioration present in the visual appearance as well as the abundant damage shown in the processed image. Degradation of documents is very common in the IR domain, where humans cannot perceive light. This means that even if traces of ink reflect light under IR illumination, mold and fading may prevent any attempt at finding, let alone reading, the text.
The total light energy delivered to the document is therefore small, an aspect of LED illumination that scholars and curators welcome. To sample the scene with sufficient spectral resolution, the number of bands (that is, the number of different-wavelength LEDs used) is a factor to be determined through this research. Based on past research and the multispectral images it produced, the illumination sources will be chosen for their effectiveness and usefulness.

The sensor is important for many reasons, as it determines the spatial, spectral, radiometric, and temporal resolution of the imaging system. It will be crucial to choose a camera whose sensor balances each type of resolution well. The pixel size and number of pixels determine the spatial resolution, and higher resolution tends to be better. However, in a low-cost system capable of multispectral capture, spatial resolution will almost certainly be sacrificed to some degree so that the chosen sensor can meet the other requirements, such as the spectral response and the radiometric properties of the electronics. The sensor must also be sensitive to a wider portion of the electromagnetic spectrum than a typical digital camera, which is often effective only from 400 to 700nm. Most digital cameras have infrared- and ultraviolet-blocking filters installed, which limit the imaged spectrum to visible light. Since the spectral information is provided by the lights, a monochromatic sensor may be used. The most desirable sensor in terms of spectral capability is one that is monochromatic (i.e., has no color filter array), contains no extra filters, is sensitive from 300-1000nm, and has low noise under low-light conditions. To simplify the design, the sensor and camera will be considered a single component when researching options.
TABLE I
ESTIMATED SYSTEM BUDGET

| Component | Estimated Total Price |
|---|---|
| Camera | $1500 |
| Illumination Sources | $1000 |
| Lens | $600 |
| Structure | $300 |
| Other Electronic Components | $100 |
| Total | $3500 |

IV. DESIGN

The camera chosen for MISHA was the Point Grey Blackfly [4]. It offers a 5.0MP monochromatic sensor with USB3.0 connectivity. According to the documentation, its spectral response at ultraviolet and near-infrared wavelengths was adequate. It was fairly expensive for its specifications, but it was an easy off-the-shelf solution to begin testing with. The Blackfly uses a C-mount, and a lens recommended for the camera was a Fujinon 12.5mm lens made specifically for 5.0MP cameras, meaning that the optics would not be the leading contributor to low spatial resolution in the system.

The other key component of this system is the lens. When an optical system has to image such a wide range of wavelengths, its design must be more complex than a single, static lens. Chromatic aberration arises from the variation of refractive index with wavelength and will play a large role in the resolution of this system. No matter how good the sensor and illumination are, a low-quality lens will reduce the overall quality of the images. Therefore, lenses designed for broad-spectrum applications will be thoroughly researched.

Besides these components, a rigid structure that holds each component in place is needed. For any imaging system that captures long exposures of a static target, the structure needs to be very stable yet adjustable, strong yet light, and easy to maintain, assemble, and disassemble. If the system is to be highly portable, a more expensive, lightweight material with a collapsible design may be chosen.

Fig. 3. The MISHA system. (1) Raspberry Pi, (2) Point Grey Blackfly, (3) 3D-printed LED lamps.

Fig. 4. (a) PG Blackfly Camera: Point Grey Blackfly 5.0MP USB3.0 with a monochromatic Sharp RJ32S4AA0DT sensor [4]. (b) LED Lamp: the interior of one of MISHA’s LED lamps with the diffuser removed. The colors represent the colors of light each LED emits, with the black circles representing UV or IR.

The illumination source for MISHA was decided after purchasing some LEDs for testing and realizing how simple it is to use cheap LEDs. The 16 LED types purchased comprise 15 narrowband LEDs with central wavelengths of 361nm, 400nm, 420nm, 440nm, 470nm, 505nm, 525nm, 575nm, 590nm, 610nm, 630nm, 660nm, 780nm, 850nm, and 940nm, plus one broad-spectrum white LED. The LEDs vary in angular spread, intensity, and bandwidth, but they were extremely inexpensive and these properties were acceptable for this application. The varying angular spread of the different LEDs was effectively normalized with soft light diffusers, which create approximately flat-field illumination of the target and minimize specular effects from reflections off the target. To simplify the design of the system, a convenient method for organizing the lights was needed. The solution chosen was to design and 3D print lamp casings for the 16 LED types. Two lamps were made, to be placed 45 degrees from nadir to maximize the light reflected vertically toward the camera [2]. The diffusers fit between two plates built into the LED lamps, making for a simple packaged light source.

Fig. 5. Spectral quantum efficiency of the Point Grey Blackfly integrated in MISHA [4].

Fig. 6. Approximate spectral output of each of MISHA’s 16 unique LEDs. Each curve is a normalized Gaussian with a fixed FWHM of 50nm, the widest bandwidth among the LEDs purchased.

The camera and the LEDs are controlled by an onboard Raspberry Pi microcomputer. The Pi is a very capable device and well suited to systems with modest computing demands.
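The quality of each band depends on how the LED spectrum overlaps the sensor’s quantum efficiency. The sketch below is illustrative only, assuming the Gaussian LED model of Fig. 6 (50nm FWHM) and a purely hypothetical, coarse QE table standing in for the Blackfly curve of Fig. 5; those QE numbers are placeholders, not datasheet values. The relative signal of a band is approximated by summing LED output times QE over wavelength. The helper names (`led_spectrum`, `band_response`) are hypothetical.

```python
import math
from typing import Sequence

# A Gaussian's FWHM equals 2*sqrt(2*ln 2) (about 2.3548) times its standard deviation.
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))

# HYPOTHETICAL placeholder QE curve (wavelength in nm, QE as a fraction).
# Illustrative values only, NOT the Blackfly datasheet curve.
QE_WAVELENGTHS = [350.0, 400.0, 500.0, 600.0, 700.0, 800.0, 900.0, 1000.0, 1100.0]
QE_VALUES = [0.05, 0.35, 0.60, 0.55, 0.40, 0.25, 0.12, 0.04, 0.0]


def led_spectrum(wavelength_nm: float, center_nm: float, fwhm_nm: float = 50.0) -> float:
    """Peak-normalized Gaussian model of an LED's output (1.0 at the center wavelength)."""
    sigma = fwhm_nm * FWHM_TO_SIGMA
    return math.exp(-0.5 * ((wavelength_nm - center_nm) / sigma) ** 2)


def interpolate(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Piecewise-linear interpolation of a tabulated curve; zero outside its range."""
    if x < xs[0] or x > xs[-1]:
        return 0.0
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            t = (x - xs[i]) / (xs[i + 1] - xs[i])
            return ys[i] + t * (ys[i + 1] - ys[i])
    return ys[-1]  # only reached when the table has a single point


def band_response(center_nm: float, fwhm_nm: float = 50.0, step_nm: float = 1.0) -> float:
    """Riemann-sum approximation of the integral of LED output times sensor QE."""
    lo, hi = QE_WAVELENGTHS[0], QE_WAVELENGTHS[-1]
    n_steps = int(round((hi - lo) / step_nm)) + 1
    total = 0.0
    for k in range(n_steps):
        wl = lo + k * step_nm
        qe = interpolate(wl, QE_WAVELENGTHS, QE_VALUES)
        total += led_spectrum(wl, center_nm, fwhm_nm) * qe * step_nm
    return total


if __name__ == "__main__":
    for center in (361, 470, 525, 660, 940):
        print(f"{center} nm: relative signal {band_response(center):.1f}")
```

With these placeholder numbers the 361nm band comes out far weaker than the green bands, which is qualitatively in line with the weak UV band described in the results; measured QE and LED spectra would be needed for quantitative conclusions.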
In MISHA’s case, the Pi is responsible only for turning on one specified LED at a time, capturing an image, turning off the LED, and repeating. This workflow could also be implemented with other hardware, but the Pi has extensive community support and multiple software platforms to work with. The Pi can be controlled remotely over WiFi, which means that MISHA can be operated from any other computer. The software to control MISHA was written entirely with open-source tools, namely Python and C.

Another challenge for MISHA was developing a strong but portable and adjustable structure. The proposed design is very simple, but it allows for easy adjustment and even folds flat to fit inside a laptop bag. 80/20 aluminum was used for the structure because it is easy to cut, assemble, and add or remove components. The entire system was painted black to reduce reflections during image capture, which would otherwise produce imagery with undesirable specular effects. Fig. 3 shows the final system ready for imaging.

The anticipated budget for MISHA was $3500, but only about $1700 in total was spent building the system. Many of the prices shown in Table II are generous estimates, and they include the cost of everything purchased for testing. Therefore, building a system from known components and streamlining the construction would result in a much cheaper system. Also, the camera and lens are clearly the largest purchases, and a different choice of camera could drastically reduce the price of MISHA without sacrificing quality.
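The capture workflow described for the Pi (turn on one LED, capture an image, turn the LED off, repeat) can be sketched as a simple loop. This is not MISHA’s actual control code: the LED and camera operations are passed in as hypothetical callables, because the real GPIO and Point Grey SDK calls are not given here. The 3-second default exposure follows the maximum exposure reported for this system.

```python
import time
from typing import Callable, Dict, Iterable


def capture_cube(
    bands: Iterable[str],
    led_on: Callable[[str], None],
    led_off: Callable[[str], None],
    capture: Callable[[float], object],
    exposure_s: float = 3.0,
    settle_s: float = 0.0,
) -> Dict[str, object]:
    """Capture one frame per band: switch that band's LEDs on, expose, switch them off."""
    cube: Dict[str, object] = {}
    for band in bands:
        led_on(band)
        try:
            if settle_s > 0:
                time.sleep(settle_s)  # optional wait for the LED output to stabilize
            cube[band] = capture(exposure_s)
        finally:
            led_off(band)  # always turn the LEDs off, even if the capture fails
    return cube


if __name__ == "__main__":
    # Stand-in callables that only print; real ones would drive GPIO pins and the camera.
    frames = capture_cube(
        ["470nm", "525nm", "630nm"],
        led_on=lambda b: print(f"LED {b} on"),
        led_off=lambda b: print(f"LED {b} off"),
        capture=lambda t: f"<frame exposed {t} s>",
    )
    print(frames)
```

Passing the hardware operations in as functions keeps the sequencing logic testable without a camera attached, and the `finally` clause guarantees that no LED is left on if a capture raises an error.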
TABLE II
TOTAL COST OF MISHA

| Component | Price |
|---|---|
| Point Grey Blackfly Camera 5.0 MP Mono | $1,000 |
| Fujinon 12.5mm C-mount Lens | $300 |
| Bulk LEDs, Resistors, and Cables | $250 |
| Raspberry Pi 2 Starter Kit | $90 |
| 74” of 1”x1” 80/20 Aluminum | $30 |
| 3D Printed Lamps and Light Diffusers | $30 |
| Total Cost | $1,700 |

Even at a price point of $1700, MISHA is very affordable and could help many researchers and scholars determine whether a document is a good candidate for high-resolution multispectral imaging.

V. RESULTS

MISHA’s performance should be evaluated on three main aspects of cost effectiveness: spatial resolution, spectral resolution/sensitivity, and accessibility. MISHA is first and foremost an affordable alternative to an expensive high-resolution multispectral imaging system, so its performance should be rated by its potential to serve as a preliminary step in document imaging applications. If MISHA can capture multispectral image cubes that show the spectral properties of a target, then the concept will have been proven.

Fig. 7. Example of a 16-band image cube as captured by MISHA. The front band shown is the image exposed under the broad-spectrum white LED. Note the varying intensity of each band along the spectral axis; this is due to dim LEDs and poor QE at the extreme wavelengths.

In terms of spatial resolution, MISHA needs to distinguish fine details in small documents and text, and an acceptable resolution is about 300 pixels per inch (ppi). At this resolution, a multispectral imaging system should be able to distinguish between manuscript writing and stains or mold. MISHA achieves 200ppi at the expense of field of view (FOV). With the camera positioned 14 inches above the target, the field of view of the camera and lens is approximately 6 inches by 7 inches, yielding the 200ppi resolution captured in 1.25MP images.
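The sampling density quoted here is the pixel count divided by the field of view along the same axis, and the same relation gives the largest FOV that still meets the 300ppi target. A small sketch with hypothetical helper names follows. It assumes the longer binned image axis (1224 pixels) spans the longer (~7 inch) side of the FOV; under that assumption the stated figures give roughly 175 x 171 ppi, so the 200ppi figure is best read as approximate.

```python
def pixels_per_inch(pixels: int, fov_inches: float) -> float:
    """Sampling density along one image axis: pixel count divided by field of view."""
    if fov_inches <= 0:
        raise ValueError("field of view must be positive")
    return pixels / fov_inches


def max_fov_inches(pixels: int, target_ppi: float) -> float:
    """Largest field of view (inches) along one axis that still meets target_ppi."""
    if target_ppi <= 0:
        raise ValueError("target ppi must be positive")
    return pixels / target_ppi


if __name__ == "__main__":
    # Assumes the 1224-pixel axis spans the ~7 inch side and the 1024-pixel axis the ~6 inch side.
    print(round(pixels_per_inch(1224, 7.0)), round(pixels_per_inch(1024, 6.0)))
    # Field of view along the 1224-pixel axis that would reach the 300 ppi target.
    print(round(max_fov_inches(1224, 300.0), 2))
```

Because ppi is linear in pixel count at a fixed FOV, doubling the pixels along each axis (for example, by reading out the full sensor instead of a binned frame) doubles the ppi.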
The spatial and radiometric resolution of MISHA was limited mostly by the Raspberry Pi’s data transfer capabilities. The Raspberry Pi 2 has only USB2.0 ports, while the Point Grey Blackfly camera is a USB3.0 device. Image data sent from the Blackfly is too large for the Pi to process and store when images exceed 1.25MP at 8 bits. The 1.25MP (1224x1024) images were obtained using 2x2 binning on the 5MP (2448x2048) sensor. If the Raspberry Pi could handle the full resolution of the camera, the resolution of the imagery would double to 400ppi at the same capture height. Binning preserves the full FOV. The Blackfly is specified to handle 10-bit and 12-bit imagery, which allows more ADU steps to saturation; however, the Raspberry Pi cannot handle the increased data size of each image, so 8-bit is the only option for this system. Higher bit depth is actually very important for document image processing, but for the purpose MISHA serves, 8-bit imagery still works.

As for the spectral resolution and spectral range detectable by MISHA, performance can be assessed by the quality of each individual band, which is determined by the combination of the sensor’s spectral quantum efficiency (SQE) and the spectral output of each LED. Ideally, a multispectral imaging system has high QE at all sampled wavelengths and many narrow-bandpass LEDs. MISHA has 16 bands, each exposed by two identical LEDs with average bandwidths of 40-50nm at FWHM [6]. To bring the sensor as close to saturation as possible, the longest exposure the camera hardware allows is used for each band. Increasing the exposure time also reduces the relative effect of random photon noise, which is usually a problem in low-light conditions. Fortunately, in document imaging the scene is static, so motion blur is not a concern.
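Two quantities drive the resolution and bit-depth trade-off described above: 2x2 binning halves each image dimension, and the uncompressed frame size grows with pixel count and bit depth. A minimal pure-Python sketch with hypothetical helper names follows. The averaging binning mode and tightly packed pixel storage are assumptions; cameras can also sum binned pixels, and 10- or 12-bit data may be padded to 16 bits per pixel in transfer. Under these assumptions an 8-bit binned frame is about 1.25 MB, versus about 5.01 MB at full resolution and about 7.52 MB at full resolution with 12 bits.

```python
from typing import List, Sequence


def bin2x2(image: Sequence[Sequence[float]]) -> List[List[float]]:
    """Average each non-overlapping 2x2 block; a trailing odd row or column is dropped."""
    rows, cols = len(image) // 2, len(image[0]) // 2
    return [
        [
            (image[2 * r][2 * c] + image[2 * r][2 * c + 1]
             + image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Uncompressed size of one monochrome frame, assuming tightly packed pixels."""
    return width * height * bits_per_pixel // 8


if __name__ == "__main__":
    full_w, full_h = 2448, 2048               # 5MP sensor
    bin_w, bin_h = full_w // 2, full_h // 2   # 1224 x 1024 after 2x2 binning
    cases = [
        ("binned 8-bit", bin_w, bin_h, 8),
        ("full 8-bit", full_w, full_h, 8),
        ("full 12-bit", full_w, full_h, 12),
    ]
    for label, w, h, bits in cases:
        print(f"{label}: {w}x{h}, {frame_bytes(w, h, bits) / 1e6:.2f} MB")
```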
Three seconds was the maximum exposure time that could be programmed into the hardware with the Raspberry Pi as the controlling computer; another system might allow longer exposures. The SQE of the Blackfly is decent at wavelengths longer than 360nm, but for the UV LED centered at 361nm, the QE and signal-to-noise ratio are poor, and the resulting image appears to be random noise. Using an 1100nm LED for a 17th band would give an image of similar quality.

Fig. 8. All 16 monochromatic bands from a MISHA capture of a handmade document: (a) 360nm (UV), (b) 400nm (UV), (c) 420nm, (d) 440nm, (e) 470nm, (f) 505nm, (g) 525nm, (h) 575nm, (i) 590nm, (j) 610nm, (k) 630nm, (l) 660nm, (m) 780nm (NIR), (n) 850nm (NIR), (o) 940nm (NIR), (p) broad-spectrum white. The document was a simple sticky note written with various inks and writing tools, to show the varying spectral properties regularly encountered in document imaging. Note that some inks are completely transmissive in IR light, revealing pencil text underneath.

Fig. 9. Truecolor image obtained by loading Fig. 8k into the red channel, Fig. 8g into the green channel, and Fig. 8e into the blue channel of an RGB render.

As Fig. 8 shows, the individual bands reveal the unique spectral reflective and transmissive properties of the different inks. This is the working concept behind multispectral imaging systems and shows how the spectral properties of materials can be sampled. In document imaging, multispectral sampling serves to separate inks into different spectral layers so that one type of ink can be isolated and read at a time. Fig. 8b shows the fluorescent nature of some inks, which absorb high-frequency UV light and emit light at lower energies; this is why the ‘Hello World!’ text appears so bright.
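The truecolor render of Fig. 9 amounts to placing three monochrome bands (630nm, 525nm, and 470nm) into the red, green, and blue channels. A minimal pure-Python sketch follows, where each band is a list of pixel rows and the helper names are hypothetical. The per-band min-max stretch is an assumption, since the text does not say whether the bands were rescaled; it is included because band intensities vary widely along the spectral axis.

```python
from typing import List, Sequence, Tuple


def stretch_to_8bit(band: Sequence[Sequence[float]]) -> List[List[int]]:
    """Linearly rescale one band so its minimum maps to 0 and its maximum to 255."""
    flat = [v for row in band for v in row]
    lo, hi = min(flat), max(flat)
    scale = 255.0 / (hi - lo) if hi > lo else 0.0  # a flat band maps to all zeros
    return [[round((v - lo) * scale) for v in row] for row in band]


def rgb_composite(red_band, green_band, blue_band, stretch: bool = True) -> List[List[Tuple]]:
    """Stack three monochrome bands into an RGB image stored as rows of (r, g, b) tuples."""
    bands = [red_band, green_band, blue_band]
    if stretch:
        bands = [stretch_to_8bit(b) for b in bands]
    r, g, b = bands
    return [list(zip(r_row, g_row, b_row)) for r_row, g_row, b_row in zip(r, g, b)]


if __name__ == "__main__":
    # Tiny stand-ins for the 630nm, 525nm, and 470nm bands (real bands are 1224 x 1024).
    band_630 = [[10, 200], [40, 120]]
    band_525 = [[15, 180], [60, 90]]
    band_470 = [[20, 160], [80, 70]]
    for row in rgb_composite(band_630, band_525, band_470):
        print(row)
```

Writing the result to an image file would need an imaging library, which is outside this sketch.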
Fig. 8n and the other NIR bands show the transmissive nature of some inks: the light passes right through the ink, and the light detected by the image sensor is light reflected from the material underneath, revealing the ‘IMAGINE RIT’ text.

VI. CONCLUSION

The results shown in Fig. 8 demonstrate that inexpensive components can be used to build a multispectral imaging system for document imaging applications. The spectral nature of different inks is shown in 16 bands, which exhibit different effects such as absorption, reflection, transmission, and fluorescence. From this result, it is reasonable to conclude that this system and others like it could be used by scholars to image their own documents and test whether they are good candidates for high-resolution multispectral imaging. Radiometric calibration, spectral characterization of the LEDs, and MTF calculation would have been useful additions to the system characterization results, but none of these items is strictly necessary for multispectral imaging of documents; time permitting, they would have been additional steps within the scope of the project.

The stretch goals for MISHA were to make it portable, easy to use, and cheaper than $2500. All of these were accomplished through careful design and simple components. Access to cheaper equipment is important to those involved in document imaging research, so lowering the total cost of the system will be the focus of future work on this project. A significant portion of the total cost could easily be cut by using a much cheaper camera and lens combination. Simplifying the electronics in the LED lamps would also reduce the material needed to construct them, further lowering the cost. Once the system costs around $500 in total, many scholars and researchers around the world could afford to own a MISHA rather than rent one or another very expensive system.
Hopefully this project leads to advances in document imaging technologies and brings attention to the global movement to image, process, and preserve old documents of historical importance. Only with widely available technology, motivated individuals, and quick action will we make strides in the effort to preserve the humanities from centuries ago.

ACKNOWLEDGEMENTS

I’d like to thank R. Easton for advising me and giving me the opportunity to work on such amazing projects. I’d also like to thank R. Kremens for his insight into system design and electronics. Thanks to J. Pow and the Chester F. Carlson Center for Imaging Science for funding the first MISHA system. A special thank you to D. Kelbe, K. Boydston, M. Phelps, and C. van Duzer for inspiring me to build this system for my senior project. Lastly, thank you to S. Chan, M. Helguera, J. van Aardt, A. Vodacek, J. Qiao, E. Lockwood, L. Cohen, Z. Mulhollan, H. Grant, and all the other students, faculty, and staff who are part of the Imaging Science community and support students and their great projects.

REFERENCES

[1] R. E. et al., “Rediscovering text in the Yale Martellus map: Spectral imaging and the new cartography,” in WIFS Rome, August 2015.
[2] R. Easton. Imaging of historical manuscripts. [Online]. Available: http://www.cis.rit.edu/people/faculty/easton/manuscriptsshort.html
[3] M. P. et al. EMEL: Early Manuscripts Electronic Library. [Online]. Available: http://www.emel-library.org
[4] Point Grey. Blackfly 5.0 MP Mono USB3 Vision (Sharp RJ32S4AA0DT). [Online]. Available: http://www.pointgrey.com
[5] NEDCC. Protection from light damage. [Online]. Available: https://www.nedcc.org/free-resources/preservation-leaflets/2.-the-environment/2.4-protection-from-light-damage
[6] LedSupplyCo. LED specifications. [Online]. Available: http://www.ledsupply.com