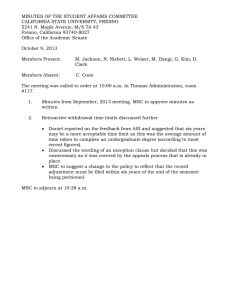

Water Resour Manage (2011) 25:2525–2541
DOI 10.1007/s11269-011-9824-z

Improved Water Level Forecasting Performance by Using Optimal Steepness Coefficients in an Artificial Neural Network

Muhammad Sulaiman · Ahmed El-Shafie · Othman Karim · Hassan Basri

Received: 20 August 2010 / Accepted: 4 April 2011 / Published online: 24 May 2011
© Springer Science+Business Media B.V. 2011

Abstract Developing water level forecasting models is essential in water resources management and flood prediction. Accurate water level forecasting helps achieve efficient and optimum use of water resources and minimize flooding damages. The artificial neural network (ANN) is a computing model that has been successfully tested in many forecasting studies, including river flow. Improving the ANN computational approach could help produce accurate forecasting results.
Most studies conducted to date have used a sigmoid function in a multi-layer perceptron neural network as the basis of the ANN; however, they have not considered the effect of sigmoid steepness on the forecasting results. In this study, the effectiveness of the steepness coefficient (SC) in the sigmoid function of an ANN model designed to test the accuracy of 1-day water level forecasts was investigated. The performance of data training and data validation was evaluated using the statistical index efficiency coefficient and root mean square error. The weight initialization was fixed at 0.5 in the ANN so that fair comparisons could be made between models. Three hundred rounds of data training were conducted using five ANN architectures, six datasets and 10 steepness coefficients. The results showed that the optimal SC improved the forecasting accuracy of the ANN data training and data validation when compared with the standard SC. Importantly, the performance of ANN data training improved significantly with utilization of the optimal SC.

Keywords Artificial neural networks · Sigmoid function · Steepness coefficient · Water level forecasting

M. Sulaiman (B)
Faculty of Civil Engineering and Natural Resources, University Malaysia Pahang, Pahang, Malaysia
e-mail: muhammad@ump.edu.my

A. El-Shafie · O. Karim · H. Basri
Department of Civil Engineering, Faculty of Engineering, National University of Malaysia, Bangi, Malaysia

1 Introduction

River flow forecasting is essential in water resources management because it can facilitate the management of water resources, thereby optimizing the use of water. The ability to forecast river flow also helps predict the occurrence of future flooding, enabling better preparation to avoid the loss of lives and minimize property damage. Forecasting studies normally require a series of historical datasets so that future events can be predicted based on past events.
It is vital for local agencies such as the water authority to maintain good quality river flow data to facilitate reliable river flow forecasting. The artificial neural network (ANN) is a computing model that can solve nonlinear problems from a series of sample datasets. ANN computing is based on the way the human brain processes information. The ANN can define hidden patterns within sample datasets and forecast based on new dataset inputs. Thus, in many areas of study such as forecasting river flow, the ANN does not require defined physical conditions of the subject, which in this case is the river. Other problems such as regression, classification, prediction, system identification, feature extraction and data clustering can also be solved through ANN computing. ANNs have been widely applied in many areas including the financial, mathematical, computer, medicinal, weather forecasting and engineering fields. In water resources studies, ANNs are employed to forecast daily river flow (Atiya et al. 1999; Coulibaly et al. 2000; Ahmed and Sarma 2007; El-Shafie et al. 2008; Wu et al. 2009), water levels (Bustami et al. 2007; Leahy et al. 2008), flood events (Tareghian and Kashefipour 2007; Kerh and Lee 2006), rainfall runoff patterns (Chiang et al. 2004; Agarwal and Singh 2004; Rahnama and Barani 2005), reservoir optimization (Cancelliere et al. 2002; Chandramouli and Deka 2005) and sedimentation (Cigizoglu and Kisi 2006; Rai and Mathur 2008). For a general review of the application of ANN in hydrology, refer to ASCE (2000a, b). The objective in studies of ANN forecasting is to identify the best forecasting model that can provide the most accurate forecasting results possible. This is achieved by modifying key components in the ANN during data training. These essential parameters are the number and type of data inputs, number of hidden layers and neurons, activation of transfer function, and optimization method to identify the weight in neurons. 
In river flow forecasting, data inputs can be generated from historical river flow data, rainfall, precipitation and sedimentation. El-Shafie et al. (2007, 2008, 2009) conducted river flow forecasting of the Nile River using river flow data obtained from a single station. Turan and Yurdusev (2009) used multiple upstream river flow stations to forecast river flow. Chiang et al. (2004) and Rahnama and Barani (2005) included rainfall and runoff data when conducting river flow forecasting, and Zealand et al. (1999) included precipitation, rainfall and flow data in their forecasting study. Fernando et al. (2005) suggested several methods to identify the proper inputs to a neural network. Other studies (Alvisi et al. 2006; Toth and Brath 2007) have investigated the effects of the number and type of inputs on the ANN performance.

Once data inputs have been determined, selection of the number of hidden layers and neurons plays a vital role in achieving the best forecasting performance. Determination of the number of layers and neurons used in forecasting studies has generally been based on a trial-and-error approach (Coulibaly et al. 2000; Joorabchi et al. 2007; Solaimani and Darvari 2008; Turan and Yurdusev 2009). Many studies have shown that one hidden layer is sufficient. Indeed, Hornik et al. (1989) found that multilayer feed-forward networks with one hidden layer were capable of approximating a function to any desired degree of accuracy, provided sufficient hidden units were available. Zhang et al. (1998) reviewed published studies of ANN and found that a single hidden layer is the most popular and widely used choice. Two hidden layers are also able to produce the best forecasting performance in certain problems (Barron 1994). However, selection of the number of ANN neurons is still based on trial and error (Chauhan and Shrivastava 2008).
The activation transfer function (ATF) is the main computing element of the ANN and plays an important role in achieving the best forecasting performance. The most common type of activation transfer function is the sigmoid function (Zhang et al. 1998). However, several studies have used different types of ATFs within the ANN to improve the forecasting performance. Shamseldin et al. (2002) used logistic, bipolar, hyperbolic tangent, arc-tan and scaled arc-tan to explore the potential improvement of ANN forecasting. Joorabchi et al. (2007) applied log-sigmoid and hyperbolic tangent sigmoid transfer functions to produce their output. Han et al. (1996) introduced optimization of the variant sigmoid function using a genetic algorithm to optimize the ANN convergence speed and generalization capability.

Many researchers have studied the exterior architecture of the ANN using a trial and error approach. Others have investigated the interior architecture of the ANN, but have been limited to testing different types of ATF in the ANN architecture. The sigmoid function, which is the most commonly used computing function for ATF, has been widely used in the ANN because of its ability to influence the performance of the ANN. This study was conducted to evaluate the effectiveness of the steepness coefficient in a sigmoid function for improving ANN data training and forecasting in a river flow study. Additionally, the effectiveness of the optimal steepness coefficient approach for the exterior architecture in river flow forecasting was compared with that of the traditional approach based on trial and error. The improved performance of the ANN water level forecasting could assist water authorities in managing water resources.

2 Methodology

In this study, sigmoid functions with different steepness coefficients were evaluated as activation transfer functions in an ANN to improve water level forecasting at Rantau Panjang Station, Johor Baru, Malaysia.
The performance of the ANN data training and data validation was measured using the statistical index Nash-Sutcliffe efficiency coefficient (NS). The Root Mean Square Error (RMSE) was also used to measure the accuracy of the data forecasting performance.

2.1 Artificial Neural Network

The ANN is a non-linear mathematical computing model that can solve arbitrarily complex non-linear problems such as time series data forecasting. The ANN can identify hidden patterns in sample datasets and forecast based on these patterns. ANN computing is based on the way human brains process information. Indeed, the ANN is a non-linear solver that needs to compute constant terms or unknown parameters before the x's or data input can be computed. The general ANN nonlinear equation can be defined as follows:

$$y_1, \ldots, y_k = f(x_1, \ldots, x_m, c_1, \ldots, c_n) \tag{1}$$

where y is the forecasted output, k is the number of forecasted outputs, x is the input, m is the number of data inputs, c is a constant term and n is the number of constant terms. The constant term c is referred to as the weight in ANN modeling. y can be computed if m, the c values and n are known. The x values are data input. To determine m, the c values and n, it is necessary to understand the ANN architecture.

The architecture of ANN is dependent on the type of ANN that is used. In this study, a multi-layer perceptron back-propagation (MLP-BP) neural network, which is the most common type of ANN used in forecasting studies, was employed. The reason for the popularity of the MLP-BP is its simplicity, easy implementation and demonstrated success in forecasting studies. Figure 1 provides an example of the MLP-BP architecture. There are three layers in MLP-BP: an input layer, a hidden layer and an output layer. There can be only one input layer and one output layer; however, there can be more than one hidden layer.
Each layer contains stored neurons, with the total number of neurons being determined by the user. The number of neurons in the input layer is equivalent to the number of data inputs selected in a study. The number of output neurons in the output layer is generally one, resulting in one forecasted output. The number of neurons in the hidden layer is subject to user selection. Most often, the number of input neurons and hidden neurons are determined based on trial and error. An additional dummy neuron known as a bias neuron that holds a value of 1 is added to the input layer and each hidden layer. The bias neuron acts as a threshold value so that the value of the forecasted output falls between 0 and 1.

The neurons are interconnected between layers as shown in the figure. The direction of the link is from the input layer to the output layer, and these links represent the computational process within the ANN architecture. The actual computational process occurs in the neuron, in which the activation transfer function assigned to the neuron is used to compute the incoming value and produce an output. In the input neurons, the activation transfer function is a linear transfer function that relays the incoming data input as output. In the hidden and output neurons, the activation transfer function computes the incoming value and produces an output; the function commonly used there is a sigmoid function. Figure 2 shows a neuron with a sigmoid function that computes incoming data and produces an output. The data received by the neuron is the summation of the output of the previous neuron based on its weight. Finally, the weight c values can be determined by data training.

Fig. 1 Multi-layer perceptron back-propagation neural network in the study

Fig. 2 An active neuron with sigmoid function and threshold input
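The computation inside a neuron described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the example input values and the 3-3-1 layout with all weights set to 0.5 are assumptions chosen for the example, and the steepness coefficient `k` is the parameter of the sigmoid studied in this paper.

```python
import math

def sigmoid(x, k=1.0):
    """Sigmoid with steepness coefficient k (k = 1 is the standard form)."""
    return 1.0 / (1.0 + math.exp(-k * x))

def layer_forward(inputs, weights, k=1.0):
    """Compute the outputs of one layer of sigmoid neurons.

    inputs  : values from the previous layer (bias neuron not included)
    weights : one list per neuron; the last entry is the bias weight,
              which multiplies the bias neuron's fixed value of 1
    """
    extended = list(inputs) + [1.0]  # append the bias neuron
    return [sigmoid(sum(w * v for w, v in zip(row, extended)), k)
            for row in weights]

def forward(inputs, hidden_weights, output_weights, k=1.0):
    """Feed-forward pass: linear input neurons, sigmoid hidden and output neurons."""
    hidden = layer_forward(inputs, hidden_weights, k)
    return layer_forward(hidden, output_weights, k)

# Hypothetical 3-3-1 network with every weight set to 0.5
n = 3
hidden_w = [[0.5] * (n + 1) for _ in range(n)]
output_w = [[0.5] * (n + 1)]
y = forward([0.2, 0.4, 0.6], hidden_w, output_w, k=1.0)
print(y)
```

Each neuron's row of weights carries one extra entry for the bias, so the weight count follows directly from the layer sizes.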
Data training can be accomplished when the number of neurons in each layer is defined, an activation transfer function is assigned to the neurons in each layer, and training data are available. The data training process involves two parts of computation: feed-forward and back-propagation. In the feed-forward computation, computing starts from the input layer and proceeds to the output layer based on the links and ATF in the ANN architecture. The output of the feed-forward computation is the forecasted value. In the data training process, performance is evaluated based on the model output value with respect to the observed value. If the measured performance does not achieve the target performance, a back-propagation computation takes place. The back-propagation computation is a process of adjusting the weights in the architecture based on the gradient descent method. The process of the weight adjustment starts from the output layer and proceeds backward toward the input layer. The process of feed-forward and back-propagation continues until the performance target is achieved. Once the data training process is completed, the weight c values are determined. Then, the forecasting process proceeds based on a single feed-forward computation utilizing the input data as shown in the model architecture. The results are then evaluated by applying performance measures to the observed data versus the model output values.

2.2 Sigmoid Function

The activation transfer function (ATF) forces incoming values into a range between 0 and 1 or between −1 and 1, depending on the type of function used. The most commonly used ATF in the MLP-BP is the sigmoid function. The sigmoid function is differentiable, so the gradient method can be applied to the ANN to adjust the ANN weights so that the model output and observed data reach a target performance value during data training. The sigmoid function is defined as follows:

$$y = \frac{1}{1 + e^{-kx}} \tag{2}$$
where y is the sigmoid value, k is the sigmoid steepness coefficient and x is the data or incoming values. As in the case of the neural network, the incoming values are the summation of the input and weight values. Additional input with a value of 1 and its weights are added as a threshold value so that the computed sigmoid function will result in an activation value between 0 and 1.

2.3 Case Study Area and Dataset

The case study area in the present study was Rantau Panjang station, which is located along the bank of the Johor River (Fig. 3). More than 12 flood events have occurred in Rantau Panjang since 1963. All occurrences of flooding were caused by river flow from the upper stream and heavy rainfall during the Northeast monsoon, which is between December and January (except in 1964). The Johor River, which is located at the central part of southern Johor, originates from Mount Gemuruh (109 m) and Mount Belumut (1,010 m). The river length is about 122.7 km with a drainage area of 2,636 km². The river flows through the southern part of Johor and discharges into the Straits of Johor (0 m). The main tributaries of the river are the Linggiu and Sayong rivers.

Fig. 3 The Johor River basin

Data for this study were provided by the Department of Irrigation and Drainage, Ampang, Selangor. The data consist of 45 years of historical hourly water level data obtained from 1963 to 2007. The collected data were divided into two categories: training data and validation data. Due to missing daily data in the historical records, the training and validation data were divided into smaller groups of continuous datasets. Six water level datasets were extracted from 1963 to 1986 for data training and a single dataset with 1,144 daily water level data points extracted from 1994 to 2007 for data validation. The description of the training and validation datasets is shown in Table 1.
The datasets in this study were normalized based on the following equation:

$$N = \frac{O_i - O_{\min}}{O_{\max} - O_{\min}} \tag{3}$$

where N is the normalized value, $O_i$ is the observed value, $O_{\max}$ is the maximum observed value and $O_{\min}$ is the minimum observed value.

2.4 Implementation

A total of 10 sigmoid functions based on 10 different steepness coefficients (Fig. 4; Table 2) were used as the activation transfer functions in the hidden and output neurons. The steepness coefficients ranged from 0.025 to 1. The steepness coefficient with a value of one, which is commonly used as the default steepness coefficient in sigmoid functions, was used as the Standard Steepness Coefficient (SSC). The other nine steepness coefficients ranging from 0.025 to 0.7 were referred to as Milder Steepness Coefficients (MSC) and numbered from 1 to 9. As an example, MSC_1 refers to a steepness coefficient of 0.7. A preliminary analysis of steepness coefficients greater than one showed poor performance in data training and validation of the water level; thus, those results are not described in this paper.

Previous studies (Atiya et al. 1999; Solaimani and Darvari 2008) have revealed that a single hidden layer is sufficient for forecasting river flow. Therefore, in this study, an MLP-BP with one hidden layer was selected. In the ANN model tested in this study, 3, 4, 5, 6, and 7 input data values were investigated. The number of input data refers to the number of multi-lead days ahead of the forecasting day. The number of input neurons was the same as the number of data inputs, while the number of output neurons was one, referring to 1 day ahead of water level forecasting. The number of neurons in the hidden layer was equal to the number of input neurons. On this basis, five MLP-BP architectures ranging from Net1 to Net5 were developed (Table 3). The MLP-BP architecture used in this study is shown in Fig. 1.
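The normalization of Eq. 3 and the construction of input samples can be sketched as follows. This is an illustrative sketch only: the water level values are made up, the function names are hypothetical, and it assumes that the inputs are the consecutive daily values immediately preceding the forecast day, which is one reading of the description above.

```python
def normalize(values):
    """Min-max normalization following Eq. 3: N = (O_i - O_min) / (O_max - O_min)."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def make_samples(series, n_inputs):
    """Pair each run of n_inputs consecutive daily values with the next day's value."""
    return [(series[i:i + n_inputs], series[i + n_inputs])
            for i in range(len(series) - n_inputs)]

# Hypothetical daily water levels in millimeters (not taken from the study)
levels = [4200.0, 4350.0, 4500.0, 4800.0, 5100.0, 4900.0, 4600.0]
scaled = normalize(levels)
samples = make_samples(scaled, n_inputs=3)  # Net1 uses 3 inputs

for inputs, target in samples:
    print(inputs, "->", target)
```

With seven values and three inputs per sample, four input/target pairs are produced; larger architectures such as Net5 would simply use `n_inputs=7`.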
The goal of ANN data training is to determine the weights in the ANN architectures by processing sets of data input from historical data through iteration. Generally, the weights in the network model are initialized with random values between 0 and 1. This method helps achieve better forecasting performance when compared with conditions in which the initial weights are initialized with fixed values. However, to enable equal data forecasting comparisons between different network models, the initial weights in all network models in this study were initialized with a fixed value of 0.5. By doing so, each data training process started with the same position of weight values, thereby enabling even comparisons of the forecasting performance of different network models. Another parameter that needs to be fixed is the number of epochs, so that the forecasting performance of the different network models can be compared on equal terms. Thus, an early stopping procedure (ASCE 2000a), which is the best approach to stopping data training, was not implemented in this study.

Table 1 Training and forecasting datasets used in the study

| Dataset | Date from | Date to | Water level (days) | Purpose |
|---|---|---|---|---|
| DS_1 | 05-08-1963 | 15-07-1970 | 2,264 | Data training |
| DS_2 | 16-12-1971 | 01-03-1976 | 1,539 | Data training |
| DS_3 | 12-05-1976 | 08-12-1977 | 576 | Data training |
| DS_4 | 15-02-1979 | 13-11-1979 | 272 | Data training |
| DS_5 | 27-11-1979 | 09-03-1981 | 470 | Data training |
| DS_6 | 20-03-1981 | 24-11-1986 | 1,276 | Data training |
| DS_7 | 16-02-2004 | 02-05-2007 | 1,144 | Data forecasting |

Fig. 4 Sigmoid function with ten different steepness coefficients. MSC: milder steepness coefficient

In summary, 300 data training models based on six training datasets, 10 steepness coefficients and five ANN architectures were tested. The ANN weights were initialized with a fixed value of 0.5, and 2000 epochs were used for the data training.
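The experimental grid summarized above can be enumerated with a short sketch. The dictionary layout and variable names are assumptions made for illustration; the dataset labels, steepness coefficients, input counts, initial weight and epoch count are those stated in the paper.

```python
from itertools import product

datasets = [f"DS_{i}" for i in range(1, 7)]  # six training datasets

# Steepness coefficients as listed in Table 2
steepness = {
    "SSC": 1.00, "MSC_1": 0.70, "MSC_2": 0.50, "MSC_3": 0.35, "MSC_4": 0.25,
    "MSC_5": 0.17, "MSC_6": 0.100, "MSC_7": 0.075, "MSC_8": 0.050, "MSC_9": 0.025,
}

# Architecture name -> number of input (and hidden) neurons
architectures = {"Net1": 3, "Net2": 4, "Net3": 5, "Net4": 6, "Net5": 7}

INITIAL_WEIGHT = 0.5  # fixed initial value for every weight
EPOCHS = 2000         # fixed number of training epochs

runs = [
    {"dataset": ds, "steepness": name, "k": k, "network": net,
     "n_inputs": n, "initial_weight": INITIAL_WEIGHT, "epochs": EPOCHS}
    for ds, (name, k), (net, n) in product(
        datasets, steepness.items(), architectures.items())
]

print(len(runs))  # number of data training models
```

Holding the initial weight and epoch count constant across every entry is what makes the runs comparable; only the dataset, steepness coefficient and architecture vary.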
Model performance was then tested on a single validation dataset, using the models with the best training performance for the SSC and for the optimal MSC from each of the six training datasets. Comparisons of the observed and forecasted water levels were charted to show the results of the data validation.

Table 2 The ten steepness coefficients in the sigmoid function

| Steepness coefficient | Value | Sigmoid function |
|---|---|---|
| SSC | 1.00 | $1/(1 + e^{-x})$ |
| MSC_1 | 0.70 | $1/(1 + e^{-0.7x})$ |
| MSC_2 | 0.50 | $1/(1 + e^{-0.5x})$ |
| MSC_3 | 0.35 | $1/(1 + e^{-0.35x})$ |
| MSC_4 | 0.25 | $1/(1 + e^{-0.25x})$ |
| MSC_5 | 0.17 | $1/(1 + e^{-0.17x})$ |
| MSC_6 | 0.100 | $1/(1 + e^{-0.1x})$ |
| MSC_7 | 0.075 | $1/(1 + e^{-0.075x})$ |
| MSC_8 | 0.050 | $1/(1 + e^{-0.05x})$ |
| MSC_9 | 0.025 | $1/(1 + e^{-0.025x})$ |

Table 3 The five ANN architectures

| ANN architecture | Input neurons | Hidden neurons | Output neurons | Weights |
|---|---|---|---|---|
| Net1 | 3 | 3 | 1 | 16 |
| Net2 | 4 | 4 | 1 | 25 |
| Net3 | 5 | 5 | 1 | 36 |
| Net4 | 6 | 6 | 1 | 49 |
| Net5 | 7 | 7 | 1 | 64 |

2.5 Performance Evaluation

Data training performance in this study was evaluated using the Nash-Sutcliffe coefficient of efficiency (NS). The NS is a statistical index widely used to describe the forecasting accuracy of hydrological models. An NS with a value of one indicates a perfect fit between the modeled and observed values. In addition, an NS with a value of greater than 0.9 indicates satisfactory performance results, while a value greater than 0.95 indicates good performance results between the two data series. The NS is defined as:

$$NS = 1 - \frac{\sum_{i=1}^{N} (O_i - F_i)^2}{\sum_{i=1}^{N} (O_i - \bar{O})^2} \tag{4}$$

where O is the observed value, $\bar{O}$ is the mean of the observed values, F is the forecasted value and N is the number of data being evaluated. The model performance was measured using the Nash-Sutcliffe coefficient of efficiency and the root mean square error (RMSE). The RMSE describes the average magnitude of error of the observed and forecasted values and is defined as:

$$RMSE = \sqrt{\frac{\sum_{i=1}^{N} (O_i - F_i)^2}{N}} \tag{5}$$

where the terms above are the same as in Eq. 4. The units for RMSE in this study are in millimeters.
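The two performance measures in Eqs. 4 and 5 can be written directly as short functions. The sketch below is illustrative: the function names and the sample values are assumptions, and the `weight_count` helper reflects one way to reproduce the weight totals in Table 3 by counting one bias weight per hidden and output neuron.

```python
import math

def nash_sutcliffe(observed, forecast):
    """Nash-Sutcliffe efficiency (Eq. 4): 1 - sum((O - F)^2) / sum((O - mean(O))^2)."""
    mean_obs = sum(observed) / len(observed)
    num = sum((o - f) ** 2 for o, f in zip(observed, forecast))
    den = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - num / den

def rmse(observed, forecast):
    """Root mean square error (Eq. 5), in the same units as the data."""
    squared = sum((o - f) ** 2 for o, f in zip(observed, forecast))
    return math.sqrt(squared / len(observed))

def weight_count(n_inputs, n_hidden, n_outputs=1):
    """Number of weights in a one-hidden-layer MLP with bias neurons."""
    return (n_inputs + 1) * n_hidden + (n_hidden + 1) * n_outputs

# Hypothetical values, used only to exercise the functions
observed = [1.0, 2.0, 3.0, 4.0]
forecast = [1.1, 1.9, 3.2, 3.8]
print(nash_sutcliffe(observed, forecast), rmse(observed, forecast))
print([weight_count(n, n) for n in (3, 4, 5, 6, 7)])
```

A perfect forecast gives an NS of one and an RMSE of zero, which is a quick sanity check for any implementation.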
3 Results and Discussion

The data training and data validation results for the five ANN architectures trained with six datasets, 10 different steepness coefficients in the sigmoid function and 2000 epochs are presented herein. Figure 5 shows the data training performance for the five ANN architectures in which each of the six datasets was trained with 10 steepness coefficients. All datasets trained with MSC in the ANN architectures generally had better performance than datasets that were trained with the SSC. Milder MSC values such as MSC_3 to MSC_9 tended to produce better performance than SSC, MSC_1 and MSC_2. However, this does not necessarily indicate that milder steepness coefficients produce better training performance.

Fig. 5 Data training performances of the six datasets with five different network models and ten steepness coefficients: (a) Net1, (b) Net2, (c) Net3, (d) Net4, (e) Net5

Figure 6 shows an enlargement of Fig. 5e for data training performance greater than 0.9. The figure shows a variation in dataset performance above 0.9 for Net5, which is also indicated for Net1 to Net4. Dataset DS_1 shows decreasing performance starting from MSC_3, which demonstrates that a milder steepness coefficient is not necessarily better. Datasets DS_2, DS_4 and DS_6 indicate that the best performance occurred when a steepness coefficient of MSC_9, which was the mildest MSC tested, was used. In fact, the optimal MSC could occur anywhere from MSC_3 to MSC_9. As shown in Fig. 6, datasets DS_1, DS_3 and DS_6 showed strong data training performance, with NS values for the SSC training close to or greater than 0.9, while datasets DS_2, DS_4 and DS_5 had poor data training performance, with NS values below 0.85 and occasionally lower than 0.7. However, for the datasets with poor data training performance, the NS values improved to greater than 0.85, and most to greater than 0.9, when a steepness coefficient in the range of MSC_4 to MSC_9 was used.

Fig. 6 Enlargement of data training performance for Net5. DS: dataset. MSC: milder steepness coefficient

The results shown in Figs. 5 and 6 indicate that an optimal steepness coefficient existed in all ANN architectures and datasets tested, which gave optimal data training performance and better data training results when compared with the standard steepness coefficient. Indeed, even bad training datasets improved significantly to an NS value above 0.9, which can be considered satisfactory.

Figure 7 shows a summary of data training performances from Fig. 5 of five sets of ANN architectures that were trained with SSC and another five sets of ANN architectures that were trained with the optimal MSC for each of the datasets. The optimal MSC refers to the best data training performance among the nine MSCs for the specific dataset and ANN architecture. For data training with the SSC, the performance of the six datasets varied depending on the ability to achieve high NS values using the same ANN architecture. For example, for dataset DS_1, the range of NS varied from 0.893 to 0.922. In the trial and error method, if the ANN architecture is to be selected based on dataset DS_1 for data validation, then the ANN architecture Net3 will be used. This differs from data training using the optimal MSC, in which the data training performances for the different ANN architectures are all close to 0.940. Thus, any ANN architecture trained with the optimal MSC can be used for data validation. Another example is dataset DS_2, for which the data training performance varies from 0.100 to 0.790.

Fig. 7 Comparison of data training performances based on the standard steepness coefficient (SSC) and optimal milder steepness coefficients (MSC)
ANN architecture Net5 will be selected for validation under the trial and error method, but for data training with the optimal MSC, any ANN architecture can be used in data validation since the results for the different ANN architectures are all close to 0.908. These findings indicate that different ANN architectures do not affect the optimal data training performance. Instead, the optimal steepness coefficient that seems to exist in all ANN architectures strongly influences the data training performance results. The same conclusion holds for datasets DS_3 to DS_6. The figures and tables show that the optimal MSC is a better approach than the trial and error method and that it produces a better result than SSC. These findings may help guide future ANN studies, in which the optimal steepness coefficient method could be a better approach than trial and error for identifying the optimal data training performance.

Table 4 shows the data training performance for the six datasets with the best SSC and optimal MSC based on data training using five ANN architectures and 10 steepness coefficients. Figure 7 shows that the MSC improved the performance for all datasets relative to the SSC. In more detail, Table 4 shows that for the datasets with relatively good performance using the SSC (DS_1, DS_3 and DS_6), the NS improved by between 0.9% and 2%. On the other hand, for the datasets with relatively poor performance using the SSC, a significant NS improvement of between 12% and 14% was reached. Such improvements in model performance are significant, since an NS of more than 0.9 is considered satisfactory performance. Table 5 shows the model performance based on the best SSC and optimal MSC of the data training models using the validation dataset (DS_7).
For validation dataset DS_7, the NS values in column 2 of Table 5 were generally consistent with the SSC training values for DS_1 to DS_6 in column 2 of Table 4. For datasets DS_2 and DS_5, however, the SSC-based validation performance was better than the training performance. Model performance based on the SSC varied widely from 0.615 to 0.930; however, the forecasting performance of the relatively good performance datasets (DS_1, DS_3 and DS_6) based on the optimal MSC had similar values of 0.938. Conversely, the relatively poor performance datasets (DS_2, DS_4 and DS_5) showed consistent performance values of 0.921 to 0.928. These findings indicate that the optimal forecasting performance was achieved by different good performance datasets (DS_1, DS_3 and DS_6) using the optimal MSC approach.

Table 4 Data training performances (NS) of the six training datasets

| Dataset | Best SSC | Best optimal MSC | Improvement (%) |
|---|---|---|---|
| DS_1 | 0.922 (Net1) | 0.942 (Net5:MSC_3) | 2.0 |
| DS_2 | 0.790 (Net5) | 0.915 (Net5:MSC_9) | 12.5 |
| DS_3 | 0.938 (Net1) | 0.952 (Net5:MSC_4) | 1.4 |
| DS_4 | 0.794 (Net1) | 0.921 (Net5:MSC_9) | 12.7 |
| DS_5 | 0.805 (Net1) | 0.940 (Net5:MSC_7) | 13.5 |
| DS_6 | 0.938 (Net5) | 0.947 (Net5:MSC_9) | 0.9 |

Table 5 Forecasting performances (NS) of dataset DS_7

| Dataset | Best SSC | Best optimal MSC | RMSE (mm) | Improvement (%) |
|---|---|---|---|---|
| DS_1 | 0.913 (Net1) | 0.938 (Net5:MSC_3) | 344 | 2.5 |
| DS_2 | 0.873 (Net5) | 0.928 (Net5:MSC_9) | 370 | 5.5 |
| DS_3 | 0.923 (Net1) | 0.938 (Net5:MSC_4) | 344 | 1.5 |
| DS_4 | 0.615 (Net1) | 0.924 (Net5:MSC_9) | 381 | 30.9 |
| DS_5 | 0.867 (Net1) | 0.924 (Net5:MSC_7) | 382 | 5.7 |
| DS_6 | 0.930 (Net5) | 0.938 (Net5:MSC_9) | 344 | 0.8 |

Figure 8 shows the model performance of DS_7 based on data training using dataset DS_4 and SSC, while Fig. 9 shows the model performance of DS_7 based on data training using dataset DS_4 and the optimal MSC (MSC_9).
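The improvement figures reported in Tables 4 and 5 appear to equal the difference between the optimal-MSC and SSC values of NS, multiplied by 100 (that is, percentage points rather than relative change). The sketch below checks this reading against the tabulated values; the dictionary layout and function name are assumptions made for the example.

```python
# (best SSC NS, best optimal MSC NS) per training dataset
table4 = {
    "DS_1": (0.922, 0.942), "DS_2": (0.790, 0.915), "DS_3": (0.938, 0.952),
    "DS_4": (0.794, 0.921), "DS_5": (0.805, 0.940), "DS_6": (0.938, 0.947),
}
table5 = {
    "DS_1": (0.913, 0.938), "DS_2": (0.873, 0.928), "DS_3": (0.923, 0.938),
    "DS_4": (0.615, 0.924), "DS_5": (0.867, 0.924), "DS_6": (0.930, 0.938),
}

def improvement(ns_ssc, ns_msc):
    """NS gain of the optimal MSC over the SSC, in percentage points."""
    return round((ns_msc - ns_ssc) * 100, 1)

training_gain = [improvement(*pair) for pair in table4.values()]
forecast_gain = [improvement(*pair) for pair in table5.values()]
print(training_gain)
print(forecast_gain)
```

Under this reading, the large gain for DS_4 in validation reflects the low SSC baseline of 0.615 rather than an unusually high MSC result.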
DS_4 was chosen as the training dataset here because it gave the worst data forecasting performance with the SSC but improved drastically with the optimal MSC. The figures show a comparison of the observed and forecasted water level and a scatter plot of the performance of the data forecasting. The scatter plot presented in Fig. 8 shows that most data move away from the equal or middle line, while the scatter plot in Fig. 9 shows that most data are centered around the middle line. The data forecasting based on the SSC has an NS of 0.615, while the data forecasting based on the optimal MSC has an NS of 0.924. These results demonstrate that the optimal MSC greatly improved the poor forecasting dataset.

Fig. 8 Model performance of ANN based model using DS_4, Net1 and standard steepness coefficient

Fig. 9 Model performance of ANN based model using DS_4, Net5 and milder steepness coefficient MSC_9

Figure 10 shows the forecasting performance of DS_7 based on data training using dataset DS_6 and SSC. Figure 11 shows the forecasting performance of DS_7 based on data training using dataset DS_6 and the optimal MSC, which is MSC_9. The use of DS_6 as a training dataset produced the best data forecasting performance based on the SSC and optimal MSC. The scatter plots of the forecasting performances of the two models are similar, except that the scatter plot in Fig. 11 shows more data centered around the middle line than the plot in Fig. 10, especially for observed data below 6,000 mm. This accuracy is reflected by the performance of the SSC and optimal MSC, which had NS values of 0.93 and 0.938, respectively. The results shown in both scatter plots were slightly better than those shown in the scatter plot in Fig. 9, which had an NS value of 0.924. These findings confirm the existence of an optimal MSC in the sigmoid function that produced the optimal forecasting performance.
Based on these findings, the MSCs are able to provide good forecasting results; however, it is not obvious how an MSC produces sigmoid values between 0 and 1 at the output neuron, since it appears to have a limited range of sigmoid values (Fig. 4). For example, MSC_9 appears to produce sigmoid values limited to around 0.5. This is because the sigmoid functions in Fig. 4 are plotted only for x-values between −4 and 4, a range suited to viewing sigmoid values between 0 and 1 for the SSC. If the plot is expanded to x-values between −100 and 100, sigmoid values between 0 and 1 become visible for all MSCs. For MSC_9, the sigmoid value is 0.1 if the x-value is −88 and 0.9 if the x-value is 88. For the SSC, producing sigmoid values of 0.1 and 0.9 requires x-values of −2.2 and 2.2, respectively. Thus, all MSCs can produce sigmoid values between 0 and 1 if appropriate x-values are passed to the sigmoid function. It is also not obvious how the summation of the w and x values passed to the hidden and output neurons can reach such high values. Of the two parameters in the summation, w and x, only large weights can increase the summation, because the x values always fall between 0 and 1. Figure 12 shows an example of the bias weights of the SSC and MSC_9 that are passed to the output neuron during data training. As shown in the figure, the weight for MSC_9 increased dramatically compared with that for the SSC during the data training process. After 2000 epochs, the weight generated by the SSC was only 2.48424, whereas that of MSC_9 was 86.12270. It should be noted that both bias weights were initialized with a value of 0.5 at the start of the data training process.

Fig. 10 Model performance of ANN-based model using DS_6, Net5 and standard steepness coefficient

Fig. 11 Model performance of ANN-based model using DS_6, Net5 and milder steepness coefficient MSC_9
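The x-values quoted above for the SSC and MSC_9 can be checked by inverting the sigmoid function. The sketch below assumes the common form f(x) = 1 / (1 + exp(−s·x)), where s is the steepness coefficient, and assumes s = 0.025 for MSC_9; that value is inferred here from the quoted x-values of ±88 and is not stated in this passage. The function names are hypothetical.

```python
import math


def sigmoid(x, steepness=1.0):
    """Logistic sigmoid with a steepness coefficient (assumed form)."""
    return 1.0 / (1.0 + math.exp(-steepness * x))


def required_input(target, steepness=1.0):
    """Invert the sigmoid: the x-value whose sigmoid output equals target."""
    return math.log(target / (1.0 - target)) / steepness


SSC = 1.0      # standard steepness coefficient
MSC_9 = 0.025  # assumed value, inferred from the x = -88 and 88 figures

for name, s in (("SSC", SSC), ("MSC_9", MSC_9)):
    low = required_input(0.1, s)
    high = required_input(0.9, s)
    print(f"{name}: sigmoid = 0.1 at x = {low:.1f}, 0.9 at x = {high:.1f}")
```

Under these assumptions the required x-values scale with 1/s, which is about ±2.2 for the SSC and about ±88 for MSC_9. Because the inputs to a neuron lie between 0 and 1, a summation of that size can only come from large weights, which agrees with the growth of the MSC_9 bias weight reported for Fig. 12.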
Thus, these findings confirm that the weights in the ANN model influence the output of the MSC between 0 and 1. A linear transfer function has been successfully applied to the output layer to improve the forecasting performance of ANN models (Toth and Brath 2007; Hornik et al. 1989). However, in our case study, based on the bias weight explanation and its effect on the summation value passed to the output neurons, it is not possible to combine a linear transfer function in the output layer with an MSC in the hidden layer. This is because the back propagation algorithm is too rigid to re-adjust the weights of the hidden layer and output layer when the weight values differ considerably. The use of an MSC in the hidden neurons produces a wide difference between the weight values of the hidden and output layers, which makes a linear transfer function in the output neuron infeasible. However, optimization algorithms other than back propagation, such as genetic algorithms and particle swarm optimization, could help overcome this drawback.

Fig. 12 Weight of bias connected to output neuron (a) using standard steepness coefficient, (b) using milder steepness coefficient MSC_9

4 Conclusions

The results of this study demonstrated that the optimal steepness coefficient of the sigmoid function effectively improved the ANN data training performance when compared with an ANN trained using the standard steepness coefficient. Based on this study, models with a steepness coefficient between 0.17 and 0.025 performed better than those with a steepness coefficient between 1 and 0.35. These results could also be applicable to other studies; therefore, it is suggested that additional studies evaluate ANN data training and forecasting performance using steepness coefficients between 0.17 and 0.025.
The results of the present study also show that the optimal steepness coefficient method is more efficient than trial and error for producing the best training performance. Importantly, the optimal steepness coefficient significantly improved the data training of poor performance training datasets. The improved data training can help improve the accuracy of data forecasting at Rantau Panjang station and thus assist in monitoring for possible future floods. For future research on applying a linear transfer function at the output neurons and an MSC in the hidden neurons, it is highly recommended to find a better and more flexible optimization technique that can handle the large differences between the weight adjustments in the hidden and output neurons, which the back propagation algorithm handles poorly.

Acknowledgements The authors thank the Environmental Research Group at the Department of Civil Engineering, Faculty of Engineering, Universiti Kebangsaan Malaysia for a research grant (UKM-GUP-PLW-08-13-308) provided to the second and third authors. In addition, the authors appreciate the Department of Irrigation of Selangor and Johor for providing data and assisting with the background study of the Johor River.

References

Agarwal A, Singh RD (2004) Runoff modelling through back propagation artificial neural network with variable rainfall-runoff data. Water Resour Manage 18:285–300
Ahmed JA, Sarma AK (2007) Artificial neural network model for synthetic stream flow generation. Water Resour Manage 21(6):1015–1029
Alvisi S, Mascellani G, Franchini M, Bardossy A (2006) Water level forecasting through fuzzy logic and neural network approaches. Hydrol Earth Syst Sci 10(1):1–17
ASCE Task Committee on the application of ANN in Hydrology (2000a) Artificial neural networks in hydrology. I: preliminary concepts. J Hydrol Eng 5(2):115–123
ASCE Task Committee on the application of ANN in Hydrology (2000b) Artificial neural networks in hydrology. II: hydrological applications.
J Hydrol Eng 5(2):124–137
Atiya AF, El-Shoura SM, Shaheen SI, El-Sherif MS (1999) A comparison between neural network technique - case study: river flow forecasting. IEEE Trans Neural Netw 10(2):402–409
Barron AR (1994) Approximation and estimation bounds for artificial neural networks. Mach Learn 14:115–133
Bustami RA, Bessaih N, Bong C, Suhaili S (2007) Artificial neural network for precipitation and water level predictions of Bedup River. IAENG Int J Comput Sci 34(2):228–233
Cancelliere A, Giuliano G, Ancarani A, Rossi G (2002) A neural networks approach for deriving irrigation reservoir operating rules. Water Resour Manage 16:71–88
Chandramouli V, Deka P (2005) Neural network based decision support model for optimal reservoir operation. Water Resour Manage 19:447–464
Chauhan S, Shrivastava RK (2008) Performance evaluation of reference evapotranspiration estimation using climate based methods and artificial neural networks. Water Resour Manage 23:825–837
Chiang YM, Chang LC, Chang FJ (2004) Comparison of static-feedforward and dynamic-feedback neural networks for rainfall–runoff modeling. J Hydrol 290:297–311
Cigizoglu HK, Kisi O (2006) Methods to improve the neural network performance in suspended sediment estimation. J Hydrol 317:221–238
Coulibaly P, Anctil F, Bobee B (2000) Daily reservoir inflow forecasting using artificial neural networks with stopped training approach. J Hydrol 230:244–257
El-Shafie A, Reda Taha M, Noureldin A (2007) A neuro-fuzzy model for inflow forecasting of the Nile River at Aswan High Dam. Water Resour Manage 21(3):533–556
El-Shafie A, Noureldin AE, Taha MR, Basri H (2008) Neural network model for Nile river inflow forecasting based on correlation analysis of historical inflow data.
J Appl Sci 8(24):4487–4499
El-Shafie A, Abdin AE, Noureldin A, Taha MR (2009) Enhancing inflow forecasting model at Aswan high dam utilizing radial basis neural network and upstream monitoring stations measurements. Water Resour Manage 23(11):2289–2315
Fernando TMKG, Maier HR, Dandy GC, May RJ (2005) Efficient selection of inputs for artificial neural network models. In: Zerger A, Argent RM (eds) Proc. of MODSIM 2005 International Congress on Modelling and Simulation, Modelling and Simulation Society of Australia and New Zealand, December 2005, pp 1806–1812
Han J, Moraga C, Sinne S (1996) Optimization of feedforward neural networks. Eng Appl Artif Intell 9(2):109–119
Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2:359–366
Joorabchi A, Zhang H, Blumenstein M (2007) Application of artificial neural networks in flow discharge prediction for Fitzroy River, Australia. J Coast Res SI 50:287–291
Kerh T, Lee CS (2006) Neural networks forecasting of flood discharge at an unmeasured station using river upstream information. Adv Eng Softw 37:533–543
Leahy P, Kiely G, Gearóid C (2008) Structural optimisation and input selection of an artificial neural network for river level prediction. J Hydrol 355:192–201
Rahnama MB, Barani GA (2005) Application of rainfall-runoff models to Zard River catchment’s. Am J Environ Sci 1(1):86–89
Rai RK, Mathur BS (2008) Event-based sediment yield modeling using artificial neural network. Water Resour Manage 22(4):423–441
Shamseldin AY, Nasr AE, O’Connor KM (2002) Comparison of different forms of the multi-layer feed-forward neural network method used for river flow forecasting. Hydrol Earth Syst Sci 6(4):671–684
Solaimani K, Darvari Z (2008) Suitability of artificial neural network in daily flow forecasting. J Appl Sci 8(17):2949–2957
Tareghian R, Kashefipour SM (2007) Application of fuzzy systems and artificial neural networks for flood forecasting.
J Appl Sci 7(22):3451–3459
Toth E, Brath A (2007) Multistep ahead streamflow forecasting: role of calibration data in conceptual and neural network modeling. Water Resour Res 43:W11405. doi:10.1029/2006WR005383
Turan ME, Yurdusev MA (2009) River flow estimation from upstream flow records by artificial intelligence methods. J Hydrol 369:71–77
Wu CL, Chau KW, Li YS (2009) Methods to improve neural network performance in daily flows prediction. J Hydrol 372:80–93
Zealand CM, Burn DH, Simonovic SP (1999) Short term stream flow forecasting using artificial neural networks. J Hydrol 214:32–48
Zhang G, Patuwo BE, Hu MY (1998) Forecasting with artificial neural networks: the state of the art. Int J Forecast 14:35–62