CS 234 Spring 2017

Assignment 1 Solutions

1

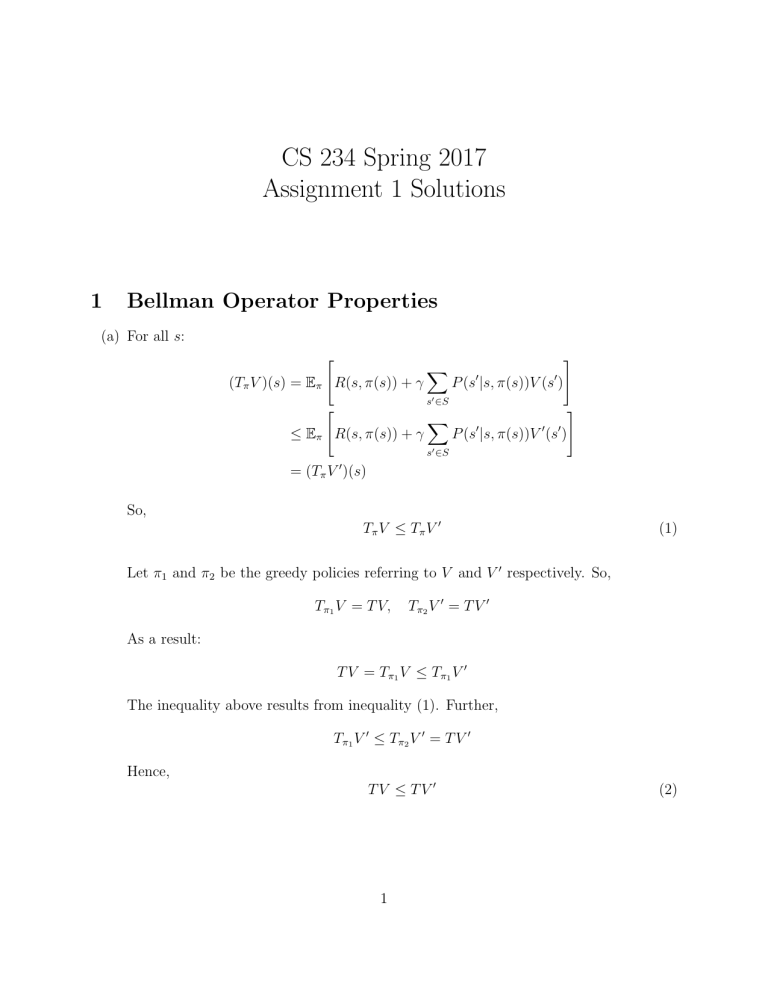

Bellman Operator Properties

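(a) For all $s$:

$$
\begin{aligned}
(T_\pi V)(s) &= \mathbb{E}_\pi\Big[ R(s, \pi(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi(s))\, V(s') \Big] \\
&\le \mathbb{E}_\pi\Big[ R(s, \pi(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi(s))\, V'(s') \Big] \\
&= (T_\pi V')(s)
\end{aligned}
$$

So,

$$
T_\pi V \le T_\pi V' \tag{1}
$$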

Let $\pi_1$ and $\pi_2$ be the greedy policies with respect to $V$ and $V'$ respectively. So,

$$
T_{\pi_1} V = TV, \qquad T_{\pi_2} V' = TV'
$$

As a result:

$$
TV = T_{\pi_1} V \le T_{\pi_1} V'
$$

The inequality above results from inequality (1). Further,

$$
T_{\pi_1} V' \le T_{\pi_2} V' = TV'
$$

Hence,

$$
TV \le TV' \tag{2}
$$
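The monotonicity in (1) and (2) can be sanity-checked numerically. The following is a minimal sketch on a randomly generated MDP. The helper names `bellman_policy` and `bellman_optimal`, the array layout (`R[s, a]`, `P[s, a, s']`) and the random instance are assumptions made for illustration; they are not part of the assignment.

```python
import numpy as np

def bellman_policy(V, R, P, pi, gamma):
    """(T_pi V)(s) = R(s, pi(s)) + gamma * sum_s' P(s'|s, pi(s)) V(s')."""
    idx = np.arange(len(V))
    return R[idx, pi] + gamma * P[idx, pi] @ V

def bellman_optimal(V, R, P, gamma):
    """(T V)(s) = max_a [R(s, a) + gamma * sum_s' P(s'|s, a) V(s')]."""
    return (R + gamma * P @ V).max(axis=1)

# Hypothetical random MDP: S states, A actions (illustration only).
rng = np.random.default_rng(0)
S, A, gamma = 6, 3, 0.9
R = rng.normal(size=(S, A))
P = rng.random((S, A, S))
P /= P.sum(axis=2, keepdims=True)   # each P[s, a, :] is a distribution
pi = rng.integers(A, size=S)        # an arbitrary deterministic policy

V = rng.normal(size=S)
V_prime = V + rng.random(S)         # guarantees V <= V' componentwise

tol = 1e-12                         # slack for floating-point rounding
mono_pi = bool(np.all(
    bellman_policy(V, R, P, pi, gamma)
    <= bellman_policy(V_prime, R, P, pi, gamma) + tol))
mono_opt = bool(np.all(
    bellman_optimal(V, R, P, gamma)
    <= bellman_optimal(V_prime, R, P, gamma) + tol))
print(mono_pi, mono_opt)
```

Both flags should come out true for any valid transition matrix, since the argument above only uses $P \ge 0$ and $\gamma \ge 0$.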

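(b) For the $T_\pi$ operator,

$$
\begin{aligned}
(T_\pi (V + c\vec{1}))(s) &= \mathbb{E}_\pi\Big[ R(s, \pi(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi(s))\,(V(s') + c) \Big] \\
&= \mathbb{E}_\pi\Big[ R(s, \pi(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi(s))\, V(s') + \gamma c \sum_{s' \in S} P(s' \mid s, \pi(s)) \Big] \\
&= \mathbb{E}_\pi\Big[ R(s, \pi(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi(s))\, V(s') + \gamma c \Big] \\
&= (T_\pi V)(s) + \gamma c
\end{aligned}
$$

For the $T$ operator, the solution is similar, since the constant $\gamma c$ is the same for every action and therefore does not affect the max operation.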
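For completeness, the $T$ case can be written out in the same notation. This is a short worked derivation, not part of the original solution; it only uses the fact that $\gamma c$ does not depend on $a$ and can be pulled out of the max:

$$
\begin{aligned}
(T (V + c\vec{1}))(s) &= \max_a \Big[ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\,(V(s') + c) \Big] \\
&= \max_a \Big[ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, V(s') + \gamma c \Big] \\
&= \max_a \Big[ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, V(s') \Big] + \gamma c \\
&= (T V)(s) + \gamma c
\end{aligned}
$$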

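(c) Let $\pi_1$ be the greedy policy with respect to $\alpha V + \beta V'$. Hence, for all $s$:

$$
\begin{aligned}
T(\alpha V + \beta V')(s) &= T_{\pi_1}(\alpha V + \beta V')(s) \\
&= \mathbb{E}_{\pi_1}\Big[ R(s, \pi_1(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi_1(s))\,(\alpha V(s') + \beta V'(s')) \Big] \\
&= \mathbb{E}_{\pi_1}\Big[ \alpha R(s, \pi_1(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi_1(s))\,(\alpha V(s')) \Big] \\
&\quad + \mathbb{E}_{\pi_1}\Big[ \beta R(s, \pi_1(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi_1(s))\,(\beta V'(s')) \Big] \\
&= (\alpha T_{\pi_1} V)(s) + (\beta T_{\pi_1} V')(s) \\
&\le (\alpha T V)(s) + (\beta T V')(s)
\end{aligned}
$$

Splitting the reward as $R = \alpha R + \beta R$ uses $\alpha + \beta = 1$. The final inequality uses $T_{\pi_1} V \le TV$ and $T_{\pi_1} V' \le TV'$ together with $\alpha, \beta \ge 0$.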
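The inequality can also be checked numerically. The sketch below uses a hypothetical random MDP and an arbitrary convex weight `alpha = 0.3`; the helper name `bellman_optimal` and the array layout (`R[s, a]`, `P[s, a, s']`) are assumptions made for illustration.

```python
import numpy as np

def bellman_optimal(V, R, P, gamma):
    """(T V)(s) = max_a [R(s, a) + gamma * sum_s' P(s'|s, a) V(s')]."""
    return (R + gamma * P @ V).max(axis=1)

# Hypothetical random MDP (illustration only).
rng = np.random.default_rng(1)
S, A, gamma = 6, 3, 0.9
R = rng.normal(size=(S, A))
P = rng.random((S, A, S))
P /= P.sum(axis=2, keepdims=True)

V = rng.normal(size=S)
V_prime = rng.normal(size=S)

alpha = 0.3            # arbitrary weight in [0, 1]
beta = 1.0 - alpha     # convex combination: alpha + beta = 1

lhs = bellman_optimal(alpha * V + beta * V_prime, R, P, gamma)
rhs = (alpha * bellman_optimal(V, R, P, gamma)
       + beta * bellman_optimal(V_prime, R, P, gamma))

# T(alpha V + beta V') <= alpha T V + beta T V', up to rounding error.
convex_ok = bool(np.all(lhs <= rhs + 1e-12))
print(convex_ok)
```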

(d) The statement is wrong. Consider the counter-example below:

$$
V = \vec{0}, \qquad R(s, a) > 0 \ \text{for all } s, a
$$

This will result in $TV > V$, since $(TV)(s) = \max_a R(s, a) > 0 = V(s)$ for every $s$.


2 Value Iteration

(a) Yes, it is possible that the policy changes again with further iterations of value iteration. Consider the following example. There are three states $\{0, 1, 2\}$. In each state, the available actions are indicated by outgoing edges, and each action is denoted by the next state it leads to. The corresponding rewards are indicated on the edges. The state transition is deterministic, that is, taking an action always leads to the corresponding state in the next time step. Assume $\gamma = 1$. Note that $\epsilon \in (0, 1)$ is some small constant.

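Over the iterations of value iteration, the value function can be computed as follows:

| Iteration | $V(0)$ | $V(1)$ | $V(2)$ |
|---|---|---|---|
| 0 | $0$ | $0$ | $0$ |
| 1 | $1 + \epsilon$ | $1$ | $\infty$ |
| 2 | $2(1 + \epsilon)$ | $\infty$ | $\infty$ |
| 3 | $\infty$ | $\infty$ | $\infty$ |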

Over the iterations of value iteration, the current best policy can be computed as follows:

| Iteration | $\pi(0)$ | $\pi(1)$ | $\pi(2)$ |
|---|---|---|---|
| 0 | Arb. | Arb. | Arb. |
| 1 | 0 | 2 | 2 |
| 2 | 0 | 2 | 2 |
| 3 | 1 | 2 | 2 |
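The example can be replayed in a few lines of code. The graph itself is not reproduced in this text, so the edge set below is an assumed reconstruction chosen to be consistent with the two tables above: a self-loop at state 0 with reward $1 + \epsilon$, an edge $0 \to 1$ with reward 1, an edge $1 \to 2$ with reward 1, and a self-loop at state 2 with infinite reward. The names `edges` and `value_iteration_step` and the value `EPS = 0.1` are likewise hypothetical.

```python
import math

EPS = 0.1  # stands in for the small constant epsilon in (0, 1)

# ASSUMED reconstruction of the missing figure: edges[s] maps each action
# (named by the next state it leads to) to the reward on that edge.
edges = {
    0: {0: 1 + EPS, 1: 1.0},
    1: {2: 1.0},
    2: {2: math.inf},
}

def value_iteration_step(V):
    """One synchronous backup with gamma = 1; returns (new V, greedy policy)."""
    new_V, policy = {}, {}
    for s, actions in edges.items():
        # Backed-up value of each action: edge reward plus old value of next state.
        q = {a: r + V[a] for a, r in actions.items()}
        policy[s] = max(q, key=q.get)
        new_V[s] = q[policy[s]]
    return new_V, policy

V = {0: 0.0, 1: 0.0, 2: 0.0}
history = []
for k in range(1, 4):
    V, policy = value_iteration_step(V)
    history.append((k, dict(V), dict(policy)))
    print(k, V, policy)
```

Under these assumed edges, the greedy action in state 0 is 0 at iterations 1 and 2 and switches to 1 at iteration 3, once the infinite value of state 2 has propagated back through state 1.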

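(b) Note the optimal value function $V^*$ is such that

$$
V^*(s) = \max_a \Big\{ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, V^*(s') \Big\}, \quad \forall s \in S.
$$

The best policy for $\tilde{V}$ is deterministic and is defined by

$$
\pi_{\tilde{V}}(s) = \arg\max_a \Big\{ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, \tilde{V}(s') \Big\}, \quad \forall s \in S,
$$

and the corresponding value function is such that

$$
V_{\pi_{\tilde{V}}}(s) = R(s, \pi_{\tilde{V}}(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi_{\tilde{V}}(s))\, V_{\pi_{\tilde{V}}}(s'), \quad \forall s \in S.
$$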

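We first find relations on $\{L_{\tilde{V}}(s)\}_{s \in S}$. For any $s \in S$,

$$
\begin{aligned}
V_{\pi_{\tilde{V}}}(s) &= R(s, \pi_{\tilde{V}}(s)) + \gamma \sum_{s' \in S} P(s' \mid s, \pi_{\tilde{V}}(s))\, V_{\pi_{\tilde{V}}}(s') \\
&= \max_a \Big\{ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, \tilde{V}(s') \Big\} \\
&\quad + \gamma \sum_{s' \in S} P(s' \mid s, \pi_{\tilde{V}}(s)) \big( V_{\pi_{\tilde{V}}}(s') - \tilde{V}(s') \big), \quad \text{by definition of } \pi_{\tilde{V}} \\
&\ge \max_a \Big\{ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a) \big( V^*(s') - \epsilon \big) \Big\} \\
&\quad + \gamma \sum_{s' \in S} P(s' \mid s, \pi_{\tilde{V}}(s)) \big( V_{\pi_{\tilde{V}}}(s') - (V^*(s') + \epsilon) \big), \quad \text{since } |V^*(s) - \tilde{V}(s)| \le \epsilon,\ \forall s \\
&= \max_a \Big\{ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, V^*(s') \Big\} \\
&\quad + \gamma \sum_{s' \in S} P(s' \mid s, \pi_{\tilde{V}}(s)) \big( V_{\pi_{\tilde{V}}}(s') - V^*(s') \big) - 2\gamma\epsilon, \quad \text{since } \sum_{s' \in S} P(s' \mid s, a) = 1,\ \forall s, a \\
&= V^*(s) + \gamma \sum_{s' \in S} P(s' \mid s, \pi_{\tilde{V}}(s)) \big( V_{\pi_{\tilde{V}}}(s') - V^*(s') \big) - 2\gamma\epsilon, \quad \text{by definition of } V^*.
\end{aligned}
$$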

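Then, we can rewrite

$$
\gamma \sum_{s' \in S} P(s' \mid s, \pi_{\tilde{V}}(s)) \big( V^*(s') - V_{\pi_{\tilde{V}}}(s') \big) + 2\gamma\epsilon \ge V^*(s) - V_{\pi_{\tilde{V}}}(s).
$$

Equivalently, writing $L_{\tilde{V}}(s) = V^*(s) - V_{\pi_{\tilde{V}}}(s)$, for any $s \in S$,

$$
\gamma \sum_{s' \in S} P(s' \mid s, \pi_{\tilde{V}}(s))\, L_{\tilde{V}}(s') + 2\gamma\epsilon \ge L_{\tilde{V}}(s). \tag{3}
$$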

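We now show $L_{\tilde{V}}(s) \le \frac{2\gamma\epsilon}{1-\gamma}$ for all $s \in S$. Let $M = \max_s L_{\tilde{V}}(s)$ and the max be achieved at state $s^*$, i.e., $L_{\tilde{V}}(s^*) = M$. From (3),

$$
\begin{aligned}
L_{\tilde{V}}(s^*) &\le \gamma \sum_{s' \in S} P(s' \mid s^*, \pi_{\tilde{V}}(s^*))\, L_{\tilde{V}}(s') + 2\gamma\epsilon \\
&\le \gamma \sum_{s' \in S} P(s' \mid s^*, \pi_{\tilde{V}}(s^*))\, M + 2\gamma\epsilon \\
&= \gamma M + 2\gamma\epsilon.
\end{aligned}
$$

Then, $M \le \gamma M + 2\gamma\epsilon$, or $M \le \frac{2\gamma\epsilon}{1-\gamma}$. It follows that $L_{\tilde{V}}(s) \le \frac{2\gamma\epsilon}{1-\gamma}$ for all $s \in S$.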
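The bound can be checked empirically. The sketch below builds a hypothetical random MDP, approximates $V^*$ by running value iteration for many sweeps, perturbs it by at most $\epsilon$ per state to obtain $\tilde{V}$, and compares the loss of the greedy policy with $\frac{2\gamma\epsilon}{1-\gamma}$. The MDP, the constants, the variable names and the array layout (`R[s, a]`, `P[s, a, s']`) are all assumptions made for illustration.

```python
import numpy as np

# Hypothetical random MDP (illustration only).
rng = np.random.default_rng(2)
S, A, gamma, eps = 8, 3, 0.9, 0.05
R = rng.normal(size=(S, A))
P = rng.random((S, A, S))
P /= P.sum(axis=2, keepdims=True)

# Approximate V* by value iteration; after 2000 sweeps the remaining
# error (of order gamma**2000) is far below floating-point precision.
V_star = np.zeros(S)
for _ in range(2000):
    V_star = (R + gamma * P @ V_star).max(axis=1)

# Perturbed estimate with |V*(s) - V_tilde(s)| <= eps in every state.
V_tilde = V_star + rng.uniform(-eps, eps, size=S)

# Greedy policy with respect to V_tilde.
pi = (R + gamma * P @ V_tilde).argmax(axis=1)

# Exact policy evaluation: solve (I - gamma * P_pi) V_pi = R_pi.
idx = np.arange(S)
V_pi = np.linalg.solve(np.eye(S) - gamma * P[idx, pi], R[idx, pi])

loss = V_star - V_pi                    # L(s) = V*(s) - V_pi(s)
bound = 2 * gamma * eps / (1 - gamma)   # 2 * gamma * eps / (1 - gamma)
print(loss.max(), bound)
```

The bound is a worst case, so on a random instance the observed loss is typically much smaller than it.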

3 Grid Policies

(a) Let all rewards be −1.

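(b)

$$
\begin{array}{|c|c|c|c|c|}
\hline
-4 & -3 & -2 & -1 & 0 \\ \hline
-5 & 4 & 3 & 2 & 1 \\ \hline
4 & 5 & 4 & 3 & 2 \\ \hline
-3 & -2 & -1 & 0 & 1 \\ \hline
-4 & -3 & -2 & -1 & 0 \\ \hline
\end{array}
$$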

(c) No. Changing γ changes the value function but not the relative order.

(d) The value function changes (all states increase by 15), but the policy does not.


4 Frozen Lake MDP

(c) Stochasticity generally increases the number of iterations required to converge. In the stochastic frozen lake environment, the number of iterations for value iteration increases. For policy iteration, depending on the implementation, the number of iterations could remain unchanged, or policy iteration might not converge at all. Stochasticity can also change the optimal policy: in this environment, the optimal policy of the stochastic frozen lake differs from that of the deterministic frozen lake.

5 Frozen Lake Reinforcement Learning

(d) Q-learning is less computationally intensive, since it only needs to update a single Q-value per update. Model-based learning, on the other hand, must keep track of all the counts and estimate the model parameters. However, model-based learning uses the collected data more efficiently, so it may require less experience to learn a good policy (provided the model space is not prohibitively large).
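The difference in per-step work can be seen in a minimal sketch of the two update rules. This is an illustration under stated assumptions, not the assignment's reference implementation: the function names `q_learning_update` and `model_update`, the step size `alpha`, the discount `gamma`, and the tabular array layout are all hypothetical.

```python
import numpy as np

def q_learning_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.9):
    """Model-free: one observed transition changes exactly one entry of Q."""
    td_target = r + gamma * Q[s_next].max()
    Q[s, a] += alpha * (td_target - Q[s, a])

def model_update(counts, reward_sums, s, a, r, s_next):
    """Model-based: accumulate statistics, then re-estimate P and R from them."""
    counts[s, a, s_next] += 1
    reward_sums[s, a] += r
    n_sa = counts.sum(axis=2)        # number of visits to each (s, a)
    visited = n_sa > 0
    # Maximum-likelihood estimates; unvisited (s, a) pairs are left at zero.
    P_hat = np.zeros_like(counts, dtype=float)
    P_hat[visited] = counts[visited] / n_sa[visited][:, None]
    R_hat = np.zeros_like(reward_sums)
    R_hat[visited] = reward_sums[visited] / n_sa[visited]
    return P_hat, R_hat

S, A = 4, 2
Q = np.zeros((S, A))
counts = np.zeros((S, A, S), dtype=int)
reward_sums = np.zeros((S, A))

# The same transition (s=0, a=1, r=1.0, s'=2) fed to both learners.
q_learning_update(Q, s=0, a=1, r=1.0, s_next=2)
P_hat, R_hat = model_update(counts, reward_sums, s=0, a=1, r=1.0, s_next=2)
print(Q[0, 1], P_hat[0, 1, 2], R_hat[0, 1])
```

In this sketch the Q-learning step is a constant-time write, while the model-based step stores an $|S| \times |A| \times |S|$ count table and would still need a planning pass (for example value iteration on `P_hat` and `R_hat`) to produce a policy.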
