IRJET-Expert Independent Bayesian Data Fusion and Decision Making Model for Multi-Sensor Systems Smart Control

advertisement

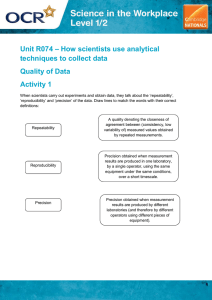

International Research Journal of Engineering and Technology (IRJET)

Volume: 06 Issue: 03 | Mar 2019

e-ISSN: 2395-0056

www.irjet.net

p-ISSN: 2395-0072

Expert Independent Bayesian Data Fusion and Decision Making Model

for Multi-Sensor Systems Smart Control

Mumbere Muyisa Forrest1, Masivi Osée Muhindo2, Kasereka Masivi Samuel3

1School

of Software, Central South University, Changsha 410000, China.

d’Informatique de Gestion, ISP/Muhangi, Butembo, DRC.

3Departement of Computer Science, Invertis University, India.

----------------------------------------------------------------------***--------------------------------------------------------------------2Département

Abstract- Internet of Things (IoT) and its applications

have increased the number of multi-sensors computer

applications. Then, the necessity of multi-sensor data

merging and expert independent decision algorithms is

real. This paper proposes a novel multi-sensor system

smart control model based on Bayesian. Proposed “study

on intelligent” algorithms are expect-dependent trainable

predicting the system only from anterior and actual data.

Simulations test on a three sensors system (sol

temperature, air temperature, and moisture) an overall

prediction precision of more than 96%. However, a real

life customizable implementation of the proposed

algorithm is needed.

system was able to stand between the source data and

the human and helped him to make decisions based on

the fused output. The big challenge with these methods

is the amount of data and processing need for training

the decision making algorithm.

Therefore, Bayesian approach, including Bayesian

analysis, Statistic, and recursive operators stood as one

of reliable alternatives. Used already for data fusion [7]

Bayesian approach is being accepted to be one of the

most classical fusion approach. Furthermore, the authors

in [8] demonstrated that the data fusion based on Bayes

estimation can weaken the possible sensor errors,

resulting from the sensor faults and noise inference. The

most appealing advantage of Bayes parameters

estimation algorithms is the small amount of training

needed for classification [9] [10] and its impendence

toward system experts.

Keywords: Naïve Bayes, Data fusion, Multi-sensor,

System smart control, Expert independent training.

1. INTRODUCTION

This paper introduces the Naïve Bayes theorem in the

decision algorithm from merged data collected from

sensors with different characteristics. The algorithm

should to make decision in control systems under multisensor context. The proposed method includes system

parameters learning and time-based system state

prediction and is expected [11] (a) to easy design

process with less free parameters to set, (b) to easy

result application to a large variety of tasks, (c) to use a

small amount of data for learning process (d) to be

computationally fast when making decisions.

The meteoric rise of Internet of Things (IoT) has

increased the number of sensors in almost all computer

applications and hence increases the necessity of multisensor systems smart control algorithms. These

algorithms are not only required to merge sensed data

from a good number of sensors into a common

representational format, but also to make relevant

decisions. Despite the fact that suggested principles,

procedures, theories and tools are approximately the

same [1], decision algorithms depend on the number of

sensors [2] [3] in the application context [4].

Furthermore,

sensors

characteristics

increase

exponentially the complexity of the system decision

algorithm.

3. PROPOSED MATHEMATICAL METHOD

In this paper, Bayesian inference is used to draw

conclusions about features (parameters) in system

control based on a sample from the same system. Both

parameters and sample data are treated as random

quantities [12]. The proposed algorithm computes the

distribution for the system parameters from the

likelihood function which defines the random process

that generates the data, and a prior probability

distribution for the parameters.

2. RELATED WORKS AND OBJECTIVES

Data fusion and decision making and related challenges

[4] have been addressed by researchers for decades.

Since then, two major approaches have emerged:

artificial intelligence methods and probabilistic based

methods. Artificial approaches, with the main focus on

machine learning, fuzzy logic, have been reputed to yield

higher accuracy compared to other techniques [2][5]. In

this line, [6] proposed a generic data fusion system

which established a relationship between the source of

data and the type of processing in order to extract

maximum possible information from data collected. The

© 2019, IRJET

|

Impact Factor value: 7.211

By assuming that all the variables are observed with no

missing data and that all classes have equal prior

probabilities [13], the proposed method estimates the

probability of a system feature by the frequency of

|

ISO 9001:2008 Certified Journal

|

Page 1799

International Research Journal of Engineering and Technology (IRJET)

Volume: 06 Issue: 03 | Mar 2019

occurrence of the same feature

database

{

}.

www.irjet.net

in the feature

| )

(

p-ISSN: 2395-0072

(

): Posterior probability of class

membership.

(

| ) : Likelihood which is the

probability of the predicator given class.

: class prior probability

Using (9), any new case X can be labeled by a class level

with the highest posterior probability. Then after

normalization, the decision distribution is :

(

)

(10)

(

)

-

The conditional likelihood of this feature is computed by

(1) and the density probability of the set of data D is

computed by (2). Since

occurs whenever

and

, (3) is deducted from (2), then the

logarithmic notation of (3) gives (4).

(

e-ISSN: 2395-0056

| )

√

Where :

∏

(1)

∏

4. PROPOSED COMPUTATIONAL MODEL

∏

(2)

∏∏

∏∏

The proposed computational protocol sketched in Figure

1, uses the following steps: (a) getting data from sensors

(b) split training set from test data (c) learning/training

the model (d) predict class using Naive Bayes model.

(3)

∏∏∏

Naive

Bayes

Model

(4)

∑∑∑

Since [6] demonstrates that (4) formula can be

simplified to (5), the Maximum Likelihood (ML)

approach used in this paper is expressed by (6).

̂

̂(

{

∑

|

)

̂

}

∑

Sensors

data

capturing

g

(5)

Figure 1: Naïve Bayes protocol for state estimation

A.

∏ (

(

| )

Table 1. Adequate range values for the system C.

(7)

Sensor S1

Values

(8)

| )

B.

( | )

( )∏ (

| )

(9)

|

Impact Factor value: 7.211

S2

S3

Learning & training the model

The proposed model is “study on intelligent” say it was

trained from data collected from sensors [15]. Hence, the

state of the system depends on values provided by

Where:

© 2019, IRJET

Collecting data from sensors

During this step, the system collects data from a given

number of sensors. Each sensor can be in one of the

three states: low, adequate or high. Each sensor is

assumed to be independent in terms of data types and

collecting rate [14] [15]. The example provided in Table

1 gives the ranges for the training system of three

sensors.

This classifier was improved by Naive Bayes to handle an

arbitrary number of independent variables by

constructing the posterior probability for the feature

{

} among a set

given a set of variables,

{

} by (7). Assuming

of possible outcomes

that the variables are statistically independent, the

likelihood is decomposed to a product of terms in (8).

Then from (8) the estimation computed by (9).

)

Posterior

probability

Predicted

State

(6)

With

being the number of features in the database

whose variable

is in the state

and parents are in the

configuration .

(

Training

data

Test data

|

ISO 9001:2008 Certified Journal

|

Page 1800

International Research Journal of Engineering and Technology (IRJET)

Volume: 06 Issue: 03 | Mar 2019

www.irjet.net

p-ISSN: 2395-0072

D. Decision

different sensors: at time t, Ct depends on the state of S1t,

S2t, S3t, Snt. From example (Table 1), the System State

had 27 different probable classes. Each class is

composed by 3 different states provide by the 3 sensors.

The class C1can be represented by: S1low, S2adequate,

S3high, meaning that at a given time t, sensor 1 sent a

value lower than 16, sensor 2 sent a value between 20

and 25 and sensor 3 sent a value higher than 120. The

learning process used it this paper can be summarized

by algorithm 1 as follow:

The proposed Bayesian classifier (Algorithm 2) is a

probabilistic model based on the Bayes rule [17] in

which each element

) is associated to a

class with a maximum a posteriori.

( |

)

( )

( | )

Algorithm 1: Training process

Input: K (Training set)

For each class c in K do

Compute mean(k) and std(k)

End for

Output: mean, std

( | )

(15)

( | )

Algorithm 2: Testing

Input: k(Learned features)

For each class li do

Calculate P(li|k)

End for

{

Output:

class)

During the training stage, the mean and standard

deviation values of the features for each class were

computed.

C.

e-ISSN: 2395-0056

}(estimated

The probabilities for the objective existing in each class

are computed and the highest is chosen as the estimated

class.

Classification

5. SIMULATION RESULTS

Assuming that system parameters are distributed

according to the Gaussian density, two parameters were

computed. First the parent node without parents . It

probability

is the frequency of the

class in the

training database. Since all the classes have the same

probability at this stage:

The proposed computational model was evaluated and

its goodness was tested using the following evaluation

parameters: accuracy, precision, recall, f1 score drawn

from a confusion matrix. Furthermore, imbalanced data

set results were compared to balanced data set results

( )

A.

Confusion matrix

( )

∑

(11)

( )

∑ ( )

Where:

: The size of the database

( ): The number of observation belonging to the class

Second, the probability of the children nodes were

computed by using the Normal law in conditional

probability of node

considering the parent

(12)

then combine the different values computed in the

previous step (13).

in (13) being constant

hence easy to compute, (13) was rewritten as (14).

Figure 2. Confusion matrix for imbalanced data set

Figure 2, shows a confusion matrix, with an accuracy

rate of 87% for imbalanced data. In Figure 3, the false

negative and the false positive have been minimized by

balancing the data in the training set; the result shows

that the accuracy of the model has improved from

87.33% to 96.33%.

(12)

( |

( |

( )

(

)

)

( | )

© 2019, IRJET

|

| )

( | )

( | )

(13)

(14)

Impact Factor value: 7.211

|

ISO 9001:2008 Certified Journal

|

Page 1801

International Research Journal of Engineering and Technology (IRJET)

Volume: 06 Issue: 03 | Mar 2019

e-ISSN: 2395-0056

www.irjet.net

p-ISSN: 2395-0072

96.33%. Accuracy is misled by the class with high

support, thus reducing the overall accuracy as shown in

(a).

Precision, recall, f1-score

Precision

120

precision

100

80

60

Precision

40

20

Cl

as

s

Cl 1

as

s

Cl 2

as

s

Cl 3

as

s

Cl 4

as

s

Cl 5

as

s

Cl 6

as

s

Cl 7

as

s

Cl 8

as

Cl s 9

as

s

Cl 10

as

s

Cl 11

as

s

Cl 12

as

s

Cl 13

as

s

Cl 14

as

s

15

0

Figure 3. Confusion matrix with balanced data sent.

classes

Comparative Accuracy

(a)

After varying the percentage of the learning set and the

test set, we create 9 different set of data. Figure 3 show

the relationship between the size of the learning set and

the test set.

Precision

105

100

95

Precision

90

Accuracy

85

87.5

Cl

a

ss

Cl 1

as

s

Cl 2

as

s

Cl 3

as

s

Cl 4

as

s

Cl 5

as

s

Cl 6

as

s

Cl 7

as

s

Cl 8

as

Cl s 9

as

s

Cl 10

as

s

Cl 11

as

s

Cl 12

as

s

Cl 13

as

s

Cl 14

as

s

15

80

Accuracy %

87

(b)

86.5

Accuracy

Figure 5: Precision (a) Precision for imbalance data set

(b) Precision for balanced data set.

86

85.5

Figure 5 shows that the balanced data set achieves high

precision than the imbalance data set, reaching 6 times a

precision of 100%, on the other hand, the highest

precision for classes in the imbalance data set reached

hardly reached 100%. Figure 5 represents the sensitivity

of balanced and imbalance data set.

85

10%

20%

30%

40%

50%

60%

70%

80%

90%

Training set

(a)

Accuracy

100

Accuracy %

95

In term of sensitivity, the balanced data set, produce a

higher rate of sensitivity than the imbalance data set.

F1-score conveys the balance between the precision and

the recall, when dealing with imbalance classes,

classification alone cannot be trusted to select a well

performing model. Figure 8, shows the relationship

between precision, recall and f1-score.

90

85

Accuracy

80

75

70

10%

20%

30%

40%

50%

60%

70%

80%

90%

Training set

(b)

Figure 4: Relation between the size of the

learning set and accuracy. (a) Imbalance data

set (b) Balanced data set.

Better result are obtained when the size of the training

set ranges between 40 to 65 % as shown in (a) and (b) in

Figure 4. On the other hand taking the same number of

data for each class in the training set (balanced data set)

can improve the accuracy of the system from 87.3% to

© 2019, IRJET

|

Impact Factor value: 7.211

|

ISO 9001:2008 Certified Journal

|

Page 1802

International Research Journal of Engineering and Technology (IRJET)

Volume: 06 Issue: 03 | Mar 2019

www.irjet.net

p-ISSN: 2395-0072

Precision,Recall,f1-score

Recall

120

120

100

Score in %

100

80

Score

e-ISSN: 2395-0056

60

Recall

80

Precision

60

Recall

f1-score

40

40

20

20

0

Cl

a

ss

Cl 1

as

s

Cl 2

as

s

Cl 3

as

s

Cl 4

as

s

Cl 5

as

s

Cl 6

as

s

Cl 7

as

s

Cl 8

as

Cl s 9

as

s

Cl 10

as

s

Cl 11

as

s

Cl 12

as

s

Cl 13

as

s

Cl 14

as

s

15

C

la

ss

C 1

la

ss

C 2

la

ss

C 3

la

ss

C 4

la

ss

C 5

la

ss

C 6

la

ss

C 7

la

ss

C 8

la

s

C s9

la

ss

C 10

la

ss

C 11

la

ss

C 12

la

ss

C 13

la

ss

C 14

la

ss

15

0

Classes

classe

(a)

(a)

Precision, recall, f1-score

Recall

105

102

100

Classes

100

Score

98

Precision

95

Recall

90

96

f1-score

Recall

94

85

92

80

Cl

a

ss

Cl 1

as

s

Cl 2

as

s

Cl 3

as

s

Cl 4

as

s

Cl 5

as

s

Cl 6

as

s

Cl 7

as

s

Cl 8

as

Cl s 9

as

s

Cl 10

as

s

Cl 11

as

s

Cl 12

as

s

Cl 13

as

s

Cl 14

as

s

15

90

88

Cl

a

ss

Cl 1

as

s

Cl 2

as

s

Cl 3

as

s

Cl 4

as

s

Cl 5

as

s

Cl 6

as

s

Cl 7

as

s

Cl 8

as

Cl s 9

as

s

Cl 10

as

s

Cl 11

as

s

Cl 12

as

s

Cl 13

as

s

Cl 14

as

s

15

score %

(b)

Classe

Figure 8: Precision (a) Precision for imbalance data

set (b) Precision for balanced data set.

(b)

Figure 6: Recall (a) Precision for imbalance data set (b)

Precision for balanced data set.

For imbalance class in the training set, the support of a

class has a positive impact on the precision of the class;

however, classes with a very high accuracy can create an

accuracy paradox problem by predicting the value of the

majority class for all predictions and achieve high

classification accuracy.

The support of the class in the training set has a positive

impact on the precision of the class, especially when we

have imbalance class in the training set. Figure 7 shows

the relationship between the support and the precision.

C

al

ss

lC

a

ss

2 lC

a

ss

3 lC

a

ss

4 lC

a

ss

5 lC

a

ss

6 lC

a

ss

7 lC

a

ss

8 lC

a

ss C

9 al

ss

01 alC

ss

11 alC

ss

21 alC

ss

31 alC

ss

1

4 alC

ss

51

Classes

1

f1-score

0

100

80

70

60

200

50

40

30

20

erocS

Score

100

90

f1-score

Precision

300

Support

400

500

600

10

0

Cl

a

ss

Cl 1

as

s

Cl 2

as

s

Cl 3

as

s

Cl 4

as

s

Cl 5

as

s

Cl 6

as

s

Cl 7

as

s

Cl 8

as

Cl s 9

as

s

Cl 10

as

s

Cl 11

as

s

Cl 12

as

s

Cl 13

as

s

Cl 14

as

s

15

Support & Precision

(a)

Classe

Support & Precision

(a)

30

f1-score

25

102

20

Score

100

score

98

96

support

10

94

f1-score

5

92

0

Cl

as

s

Cl 1

as

s

Cl 2

as

s

Cl 3

as

s

Cl 4

as

s

Cl 5

as

s

Cl 6

as

s

Cl 7

as

s

Cl 8

as

Cl s 9

as

s

Cl 10

as

s

Cl 11

as

s

Cl 12

as

s

Cl 13

as

s

Cl 14

as

s

15

90

88

86

Cl 1

as

s

Cl 2

as

s

Cl 3

as

s

Cl 4

as

s

Cl 5

as

s

Cl 6

as

s

Cl 7

as

s

Cl 8

as

Cl s 9

as

s

Cl 10

as

s

Cl 11

as

s

Cl 12

as

s

Cl 13

as

s

Cl 14

as

s

15

Classes

ss

Cl

a

Precision

15

(b)

classe

Figure 9: Relationship between the support and the precision.

(a) Relationship between the support and precision for

imbalance data set (b) Relationship between the support and

precision for balanced data set.

(b)

Figure 7: f1 score (a) Precision for imbalance data set (b)

Precision for balanced data set.

© 2019, IRJET

|

Impact Factor value: 7.211

|

ISO 9001:2008 Certified Journal

|

Page 1803

International Research Journal of Engineering and Technology (IRJET)

Volume: 06 Issue: 03 | Mar 2019

www.irjet.net

6. DISCUSSIONS AND CONCLUSION

Since the trained and proposed model is “study on

intelligent”, the algorithm reduces the reliance on

experts. It is a “previous system data” dependent model.

This means that any non-expert can train and use the

system. A few expertise is only required during the

training step of the system. However, a real life

customizable implementation of the proposed algorithm

is needed.

7. REFERENCES

[1] H. Mitchell, Multi-Sensor Data Fusion: An

Introduction, Berlin: Springer.

[2] D. Hall and J. Llinas, Handbook of Multisensor Data

Fusion, New York, 2001.

[3] M. Wang and all, "City Data Fusion: Sensor Data

Fusion in the Internet of Things," International

Journal of Distributed Systems and Technologies,

June2015.

[4] F. Aiam and All, "Data Fusion and IoT for Smart

Ubiquitous Environments: A Survey. ,," IEEE.

[5] B. Yang, X. Liu and X. Z. Y. Li, "Stochastic

Blocmodeling and variational Bayes Learning for

Signed Network Analysis," in IEEE Transactions on

Knowledge and Data Engineering, May 2017.

[6] D. Hall and A. Garga, "Pitfalls in data fusion(and how

to avoid them)," Proceedings of the Second

International Conference on Information Fusion

(Fusion ’99), vol. 1, pp. 429-439, 1999.

[7] H. Holzapfel, K. Nickel and R. Stiefelhagen,

"Implementation and evaluation of a constraintbased multimodal fusion system for speech and 3D

pointing gestures," Proc. 6th Int. Conference

Multimodal interfaces- ICMI ’04, p. 175, 2004.

[8] F. Shen and R. Yan, "A Thermostatic Control

Strategy Based on Multi-sensor Data Fusion and

Fuzzy PID Method," in Tenth Internation

Conference on Sensing Technology, 2016.

[9] J. He, S. Bai and X. Wang, "An Unobtrusive Fall

Detection and Alerting System Based on Kalman

Filter and Bayes Network Classifier," Sensors 2017,

vol. 14, p. 1393, 2017.

[10] R. Mahler, Unified Bayes Multi target Fusion of

|

Impact Factor value: 7.211

p-ISSN: 2395-0072

Ambigous Data Sources, Boston, USA: Kimasi, 2003.

[11] A. Ashari, I. Paryudi and A. Tjoa, "Performance

Comparison between Naive Bayes, Decision tree

and K-Nearest Neighbor in Searching Alternative

Design in an Energy Simulation Too," IJACSA,

International Journal of Advanced Computer

Science and Applications, vol. IV, no. 11, 2013.

[12] X. Meng, G. Bai, X. Shu and D. Bao, "A study of

intelligent fault diagnosis in engine room by Bayes

method;, 2002.," IEEE; Intelligent Control and

Automation. Proceedings of the 4th World

Congress, 2002.

[13] G. Myburgh and A. V. Niekerk, "Impact of training

set size on object-based land cover classification: A

Comparison of three classifiers," International

Journal of Applied Geospacial Research, vol. V, no. 3,

pp. 49-67, July 2014.

[14] D. J. Hand and K. Yu, "Idiot’s bayes : Not so stupid

after all?," International statistical Review, 2001.

[15] M. Godec, C. Leistner, A. Saffari and H. Bischof, "OnLine Random Naïve Bayes for Tracking," IEEE,

Pattern

Recognition

(ICPR),

2010

20th

International Conference, 2010.

A novel multi-sensor system smart control model based

on Bayesian data was proposed. The proposed algorithm

is able to predict the state of a system taking into

account the actual and the anterior data collected from

different types of sensors. For test seek, a three sensors

system (sol temperature, air temperature, and moisture)

was set and the algorithm performance was measured.

Simulation tests have proved that the proposed

algorithm can predict at an overall rate of more than

96% the actual state of the system.

© 2019, IRJET

e-ISSN: 2395-0056

BIOGRAPHIES

Mumbere Muyisa Forrest is a MSc

holder in Information Technology

and a Lecturer of IT courses at

Université Adventiste de Lukanga

and Institut de Commerce de Beni in

RDC. He is currently preparing his

PhD in Software Engineering in

School of Software at Central South

University, Changsha 410000, China.

Dr MASIVI is a Computer Science

PhD holder from University of the

Philippines Los Banos. He is

currently

Associate

Professor

lecturing IT and Computer Science

courses at Rusangu University

(Zambia) and ISP/Muhangi (RDC).

Kasereka Masivi Samuel is a MSc

holder in Information Technology

and a Lecturer of courses at

Université Adventiste de Lukanga

and Université de Bunia in DRC. He

is currently preparing his PhD in

Computer Science at Invertis

University, India.

|

ISO 9001:2008 Certified Journal

|

Page 1804