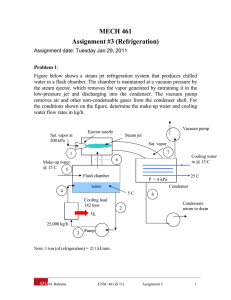

i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 available at www.sciencedirect.com w w w . i i fi i r . o r g journal homepage: www.elsevier.com/locate/ijrefrig Important sensors for chiller fault detection and diagnosis (FDD) from the perspective of feature selection and machine learning H. Han a,*, B. Gu a, T. Wang a, Z.R. Li b a Institute of Refrigeration and Cryogenics, Shanghai Jiao Tong University, 800 Dongchuan Road, Minhang District, Shanghai 200240, PR China b Institute of HVAC & G, School of Mechanical Engineering, Tongji University, 1239 Siping Road, Shanghai 200092, PR China article info abstract Article history: The benefits of applying automated fault detection and diagnosis (AFDD) to chillers include Received 7 June 2010 less expensive repairs, timely maintenance, and shorter downtimes. This study employs Received in revised form feature selection (FS) techniques, such as mutual-information-based filter and genetic- 23 July 2010 algorithm-based wrapper, to help search for the important sensors in data driven chiller Accepted 17 August 2010 FDD applications, so as to improve FDD performance while saving initial sensor cost. The Available online 21 August 2010 ‘one-against-one’ multi-class support vector machine (SVM) is adopted as a FDD tool. The results show that the eight features/sensors, centered around the core refrigeration cycle Keywords: and selected by the GA-SVM wrapper from the original 64 features, outperform the other Sensor three feature subsets by the GA-LDA (linear discriminant analysis) wrapper, with an overall Chiller classification correct rate (CR) as high as 99.53% for the 4000 test samples randomly Compression system covering the normal and seven typical faulty modes. The CRs for the four cases with FS are Detection all higher than that without FS (97.45%) and the test time is much less, about 28e36%. The Genetic FDD performance for normal or each of the faulty modes is also evaluated in details in Fault terms of hit rate (HR) and false alarm rate (FAR). ª 2010 Elsevier Ltd and IIR. All rights reserved. Capteurs importants pour la détection et le diagnostic d’anomalies des refroidisseurs du point de vue des choix des caractéristiques et des connaissances du système Mots clés : Capteur ; Refroidisseur ; Système à compression ; Détection ; Génétique ; Anomalie 1. Introduction Automated fault detection and diagnosis (AFDD) along with prognostics is the cornerstone for automated condition-based maintenance, whose wide-spread adoption will help cut down much of the waste caused by poorly maintained, degraded and/or improperly controlled equipment. Although there is a wealth of literature related to AFDD for critical processes, * Corresponding author. Tel./fax: þ86 21 3420 6260. E-mail address: happier_han@126.com (H. Han). 0140-7007/$ e see front matter ª 2010 Elsevier Ltd and IIR. All rights reserved. doi:10.1016/j.ijrefrig.2010.08.011 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 Nomenclature b bias or threshold for the discriminant function c target class vector C penalty constant (also called slack penalty) ConfMat confusion matrix CR correct rate FAR false alarm rate FN number of false negative samples FP number of false positive samples FWC flow rate of condenser water HR hit rate mean of the jth variable/feature Mj MCR misclassification rate p matrix of the posterior probabilities for the classifier PO_feed pressure of oil feed training set of two class Sa possible values’ set for X Sx possible values’ set for Y Sy such as nuclear, aircraft engines or production-related processes, such as those that exist within chemical process plants, relatively little exists for application to chillers or other vapor compression equipment, especially those from the viewpoint of important sensors. Commonly recognized categorization of FDD methodology is that based on model, quantitatively or qualitatively, and that based on process history (or data driven), as Katipamula and Brambley (2005a,b) stated. Bendapudi and Braun (2002) provide a detailed list of available quantitative models, especially dynamic models for vapor compression equipment. Qualitative physics-based and rule-based systems belong to the qualitative model-based category, such as expert systems (Kaler, 1990; Grimmelius et al., 1995; Kaldorf and Gruber, 2002), rules derived from first principles (Gordon et al., 1995; Brambley et al., 1998), bond graphs (Ghiaus, 1999) and casebased reasoning (Dexter and Pakanen, 2001), etc. The data driven methodology (Tassou and Grace, 2005; Liang and Du, 2007; Han et al., 2010) is based solely/mainly on process history and contains models of black box or gray box, where pattern recognition techniques are often employed to develop the relationship between inputs and outputs, and into which the machine learning methodology to be presented in this study falls, thanks to the data-rich nature of chillers and the dedicated experiments by Comstock and Braun (1999a,b). TCO TEO TN TP TRC TR_dis TWCO TWI VE w X b X Y Z 587 temperature of condense water out by RTD temperature of evaporator water out number of true negative samples number of true positive samples saturated refrigerant temperature in condenser refrigerant discharge temperature temperature of condense water out by thermistor temperature of city water in position of the electronic valve installed in the evaporator water loop weight vector for SVM discrete random variable normalized sample matrix discrete random variable observed sample matrix Greek symbols Lagrange multiplier ai g width of Gaussian kernel function standard deviation of the jth variable/feature sj Feature selection (FS) is frequently used as a preprocessing step to machine learning. It is a process of choosing a subset of original features so that the feature space is optimally reduced according to a certain evaluation criterion. FS has been a fertile field of research and development since 1970’s and proven to be effective in removing irrelevant and redundant features, increasing efficiency in learning tasks, improving learning performance like predictive accuracy, and enhancing comprehensibility of learned results (Blum and Langley, 1997; Dash and Liu, 1997; Kohavi and John, 1997). Making use of FS technique, together with machine learning knowledge, this study aims to search preliminarily for relatively important sensors/features for chiller AFDD. As a novel machine learning method, support vector machine (SVM) (Cristianini and Taylor, 2000) is a powerful tool for solving the practical problems always characterized by nonlinearity, high dimension, local minima and/or small sample. It was first suggested by Vapnik in the 1960s and has recently become an area of intense interests and research. Besides the advantages just mentioned, the main reasons for considering SVM as an AFDD tool, are: 1) the purpose of this study is concentrated on the important sensors or features in chiller AFDD, not AFDD itself; 2) SVM proved to be the most suitable technique for chiller application in Choi et al. (2005) and also in our study (Han et al., Fig. 1 e Illustration of Feature Selection (FS). 588 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 Table 1 e ConfMat for the two-class case. Diagnosed Fault (Predicted Class) Happening Fault (True Class) Yes No Yes No TP FP FN TN sensors and their influence on the AFDD performance; the conclusions are drawn in Section 6. Appendix A and B includes further information about the experiments and the features/sensors. 2. Fig. 2 e Feature selection (FS) by Genetic Algorithm (GA). 2010) for a vapor-compression refrigeration system with 4.0 kW cooling capacity. Experimental data for normal and seven faulty modes (each with four severity levels) from ASHRAE project 1043-RP (Comstock and Braun, 1999b) were utilized for the implementation and validation of FS and AFDD strategy for chiller applications. The remainder of this paper is organized as follows: Section 2 explains the FS methods used, mutual information (MI) based filter and genetic algorithm (GA) based wrapper; Section 3 briefly introduces the SVM theory, depicts the structure of the multi-class SVM with FS and elaborates the evaluation guidelines for the SVM classifiers; Section 4 describes the experimental data, the data preprocessing and the performing scheme of the AFDD strategy on the data; followed by the results of FS, SVM training and test in Section 5, along with the detailed discussions about the important Methods of feature selection (FS) adopted There are two critical parts in the process of FS (Fig. 1): searching methods by which new feature subsets are generated, and evaluation methods by which feature subsets are evaluated for decision making. Based on whether the classifier to be used is employed as the evaluation method, FS algorithms fall into two broad categories, the filter model or the wrapper model (Das, 2001; Kohavi and John, 1997). MI-based filter where MI performs evaluation with sequential forward searching and GA-based wrapper where GA acts as a searching method, were adopted in this study. 2.1. MI-based filter For a quick idea about the number of features selected and the trend of the performance in classification, MI-based filter type FS is first employed for the chiller data concerned. A brief introduction is given below. The MI (Zhu, 2000; Peng et al., 2005) of two random variables is a quantity that measures the mutual dependence of the two variables by measuring the information they share. For two discrete random variables X and Y with possible values’ set Sx and Sy respectively, MI is defined as: IðX; YÞ ¼ X X x˛Sx y˛Sy pðx; yÞlogb pðx; yÞ pðxÞpðyÞ Fig. 3 e Structure of FS D SVM model. Note: Data preprocessing will be introduced in Section 4.2. (1) 589 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 Table 2 e Definition and Calculation of HR, FAR, CR and MCR. Evaluation Guidelines Definition Calculation Individual HR Performance FAR For a given class, it is defined as the fraction of the ‘predicted and happened’ (TP) samples among all the actually happened samples. For a given class, it is defined as the fraction of the ‘predicted but not happened’ (FP) samples among all the actually not-happened samples. HR ¼ True Positive Rate ¼ Recall ¼ TP/(TP þ FN) ¼ TP/row1 FAR ¼ False Positive Rate ¼ FP/(FP þ TN) ¼ FP/row2 Overall CR Performance MCR the fraction of the correctly classified samples among the total, for all classes under investigation not-correctly-classified-sample fraction for all classes CR ¼(TP þ TN)/Total ¼ Main Diagonal/Total MCR ¼ 1CR where p(x) is the probability of x, p(x,y) is the joint probability of x and y, b is the base of the logarithm with the most common values, 2, employed. It measures how much knowing one of the two variables reduces our uncertainty about the other. For example, if X and Y are independent, then knowing X does not give any information about Y and vice versa, so their mutual information is zero. Otherwise, their mutual information would be a positive number. The larger the mutual information, the greater the dependency on each other is. If one of the variables is the target class and the other is a feature, then the mutual information may give us an idea about how much important the feature is in classifying the samples, or how much relevant the feature is to the target class. That is the basic principle of MI-based FS. For experimental data matrix such as those in our study, rounding should first be done to extract possible values before any calculation of the probability and MI begins. MI-based minimal-redundancyemaximal-relevance (mR MR) FS (Peng et al., 2005) is implemented in this study, which aims to search for those features that are both minimally redundant among themselves and maximally relevant to the target classes. Incremental search methods can be used in practice to find the near-optimal features. Suppose Sm-1 is the feature set with m-1 features. The task is to select the mth feature from the remaining set. The respective incremental algorithm optimizes the following condition: 6 max 4I xj ; c xj ˛XSm1 , 3 ! X 1 7 I xj ; xi þ 0:01 5 m 1 x ˛S i GA-based wrapper GA is a time-consuming methodology itself. The original purpose of wrapping it with a simple classifier, linear discriminant analysis (LDA) (Krzanowski, 1988) by the ‘classify’ function of Matlab, is to save computational time while keeping classification information as much as possible. The selected feature subset may not be the optimal one for the machine learning method to be adopted in AFDD, but we have the opportunity to practice try-and-error process and find the near optimal subset accordingly, thanks to the shortened time period for each selection. It is a reasonable attempt in a sense. In fact, the advantage of SVM in chiller AFDD application is further confirmed by comparing the classification performance of different AFDD strategies, LDA or SVM. GA (Goldberg, 1989) is a way of solving problems by mimicking the same processes Mother Nature uses. They use the same combination of selection, recombination (or crossover) and mutation to evolve a solution to a problem. In searching for an optimal or near optimal feature subset for chiller AFDD application, the population is a collection of possible feature subsets and the fitness is measured by a function of classifier performance. Fig. 2 depicts the FS process by GA. For GA-LDA wrapper, fitness function employed in this study is a linear combination of the misclassification rate (MCR) and the posteriori probability of the classifier (Eq. (3)). For GASVM wrapper, more direct expression, 100 MCR þ 1, is used. (2) Steam m1 where c is the target class vector that stores the class labels for the samples concerned; 0.01 is added just for the non-zero requirement of the denominator. City Water Table 3 e ConfMat for the three-class case. Diagnosed Fault (Predicted Class) class 1 Happening Fault (True Class) class 1 class 2 class 3 a11 (TN) a21 (FN) a31 (TN) a class 2 class 3 a12 (FP) a22 (TP) a32 (FP) a13 (TN) a23 (FN) a33 (TN) Bold has been used for emphasis a Those in () are for class 2, as an example. Steam HX Condenser Water to City Water HX Evaporator Water Loop Chilled Water to Hot Water HX bypass Hot Water Loop 2 2.2. Condenser Water to Evaporator Water HX Chiller Evaporator Condenser Water Loop Condenser Fig. 4 e Simplified experimental layout to emphasize water flow circuits. 590 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 Table 4 e Seven faulty modes under investigation. 1 2 3 4 5 6 7 8 Normal or faulty modes Abbreviations Normal Refrigerant leak/undercharge Condenser fouling Reduced condenser water flow Non-condensables in refrigerant Reduced evaporator water flow Refrigerant overcharge Excess oil Normal RefLeak ConFoul ReduCF NonCon ReduEF RefOver ExcsOil Fitness ¼ 100 MCR þ 1 meanðmaxðp;½ ; 2ÞÞ two classes may not be desirable, slack variables (also called a margin error) are employed to allow for training errors. Let Sa ¼ fðx1 ; y1 Þ; ðx2 ; y2 Þ; /; ðxN ; yN Þg be a training set for two classes, where xi ˛Rn denotes the input vectors, yi ˛f1; 1g stands for their class label, and N is the sample number. The discriminant function with kernel K(x,xi) is: ) ( N X ai yi Kðx; xi Þ þ b (4) f ðxÞ ¼ sgn i¼1 where, sgn(u) is the sign function, if u > 0 then sgn(u) ¼ 1, if u<¼0 then sgn(u) ¼ 1; x is the sample to be recognized; b is called the bias or threshold; the Lagrange multiplier ai is the solution of the following quadratic programming (QP) problem: (3) where p is a matrix of the posterior probabilities that the jth training group was the source of the ith sample observation, i.e., Pr( group jjobs i). maximize a Subject to : N X ai i¼1 N X N X N 1X yi yj ai aj xTi xj 2 i¼1 j¼1 yi ai ¼ 0; 0 ai C; (5) ci i¼1 3. Machine learning d support vector machine (SVM) This section includes a brief introduction to basic two-class SVM, multi-class SVM, SVM with FS and the evaluation guidelines for the SVM classifiers. 3.1. SVM The basic SVM classifier deals with linearly separable twoclass cases d in which the data are separated by an optimal separating surface that is defined by the support vectors. In order to solve nonlinear problems, kernel functions such as polynomial, sigmoid, Gaussian radial basis function (RBF), etc., that map input space implicitly into high-dimensional feature space where the problems may be solved linearly, are introduced. For noisy data, when complete separation of the where, C > 0 is a penalty constant (also called slack penalty) for those samples misclassified by the optimal separating plane, which sets the relative importance of maximizing the margin and minimizing the amount of slack, and will be determined via grid search and k-fold cross validation (presented in detail in our study (Han et al., 2010), including the detailed SVM training process). Only a small portion of ai (for i ¼ 1,2,.,N) are not zero, and the corresponding samples are those on the margin, i.e. the SVs, which further confirms that only the SVs play a part in defining the optimal separating hyperplane. The bias b can be obtained from: b ¼ yi wT xi (6) where xi is any support vector (although in practice it is safer to average over all support vectors (Burges, 1998)), and w is the weight vector as follows: Table 5 e List of features/variables obtained from experiments. 1 TEI 9 TSI 2 TWEI 10 TSO 3 TEO 11 TBI 4 TWEO 12 TBO 5 TCI 13 Cond Tons 6 TWCI 14 Cooling Tons 17 Evap Tons 18 Shared Evap Tons 26 TEA 34 Tsh_suc 19 Building Tons 27 TCA 35 TR_dis 20 Evap Energy Balance 28 TRE 36 Tsh_dis 21 kW 42 Tolerance% 43 Unit Status 51 VSS 59 TWO 25 FEW 33 T_suc 41 Heat Balance (%) 49 TWCD 57 VW 50 TWED 58 TWI 22 COP 7 TCO 15 Shared Cond Tons 23 kW/Ton 8 TWCO 16 Cond Energy Balance 24 FWC 29 PRE 37 P_lift 30 TRC 38 Amps 31 PRC 39 RLA% 44 Active Fault 45 TO_sump 46 TO_feed 47 PO_feed 32 TRC_sub 40 Heat Balance (kW) 48 PO_net 52 VSL 60 THI 53 VH 61 THO 54 VM 62 FWW 55 VC 63 FWH 56 VE 64 FWB Note: further information of the features is available in Appendix A. i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 591 open literature (Hsu and Lin, 2002; Platt et al., 2000; Rifkin and Klautau, 2004; Liu et al., 2006). Among them, the ‘one against one’ algorithm (Chang and Lin, 2001; Hsu and Lin, 2002), which constructs one two-class SVM between each pair of classes, is the most easy to be understood and has a slightly better performance than ‘one against others’ algorithm for the chiller data to be used in this study, though the latter proved to be better in Han et al. (2010), probably because of the different distribution of the samples. Therefore, the ‘one against one’ multi-class algorithm is chosen for this study. The detailed structure of SVM classifier with FS is demonstrated in Fig. 3. 3.2. Evaluation of SVM classifier Table 1 is a confusion matrix (ConfMat) for the two-class case, where TP (true positive) denotes the number of samples happened and diagnosed, TN (true negative) that of samples not happened and not diagnosed, FP (false positive) that of samples not happened but diagnosed, and FN (false negative) that of samples happened but not diagnosed. TP and TN are correct classifications, and FP and FN are incorrect ones. Hence, good results correspond to large numbers down the main diagonal and small, ideally zero, off-diagonal elements. There are literatures (Fawcett, 2004; Yélamos et al., 2007) that analyze ConfMat from different viewpoints. To make it simple and easy to understand for FDD use, correct rate (CR) or misclassification rate (MCR) is adopted for the overall performance of the classifier, and hit rate (HR) and false alarm rate (FAR) are used to evaluate the performance of the classifier for individual class. Their definition and calculations are given in Table 2. The wording of accuracy is avoided so as to minimize misunderstanding. For multi-class problems to which FDD belongs, ConfMat for the three-class case, labeled as 1, 2 and 3, respectively, is shown in Table 3, where CR, HR and FAR for class 2 are easy to be obtained and so do those for class 1 and 3. Fig. 5 e Performing scheme of AFDD with feature selection (FS). 4. Experimental data and AFDD framework 4.1. Experimental data The experimental data used come from ASHRAE project 1043 (Comstock and Braun, 1999a,b). The full name of the research w¼ N X ai yi xi (7) i¼1 Gaussian RBF is selected as kernel function in our model for its excellent performance (Lin and Lin, 2003): Kðx; xi Þ ¼ exp gx xi 2 (8) where, g > 0 is a parameter that controls the width of Gaussian. The greater the width, the more flexible the classifier is. It is determined together with slack penalty C in practice. Two-class classification is useful but not suitable for fault diagnosis (FD) in its original form for FD is always a problem of multi-class classification or pattern recognition. A bunch of algorithms for multi-class applications have been proposed in Fig. 6 e Cross validation results for MI-based feature selection (FS). 592 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 Table 6 e Features selected by each selection scheme. Num. of Features Feature No. Selected 1e9 Case1 Case2 Case3 Case4 Case5 All features GA-LDA GA-LDA GA-LDA GA-SVM 64 13 10 8 8 10e19 e 6, 6, 4, 3, 9 8 8 7 20e29 30e39 40e49 50e59 60w e e 17, 19 18 e e 23, 24, 29 e 24, 27, 28 24 e 31, 34, 38 30 e 30, 35 e 45, 47 45, 47 47 47 e 55, 56 56 56 56, 58 e 60 60, 63 e e Note: Features No. 3 (TEO) and 4 (TWEO) are the same features obtained by different sensors, RTD and thermistor, respectively. So do No. 7 and 8. project is “Fault Detection and Diagnostic (FDD) Requirements and Evaluation Tools for Chillers” referred to as ASHRAE 1043RP in this study, where a 90-ton centrifugal chiller was installed indoor with a nearly constant ambient temperature of 72 F (22.2 C). Both the evaporator and condenser are flooded-type, 2-pass shell-and-tube heat exchangers, with water flowing in the tubes as the secondary-coolant. Fig. 4 depicts the important equipment contained within the chiller test facility and emphasizes the five water flow circuits d evaporator water circuit, condenser water circuit, hot water circuit, city water supply and steam supply. The abbreviation ‘HX’ in the figure stands for ‘heat exchanger’. Seven faults (Table 4), chosen based on the results of chiller fault survey, were artificially introduced and investigated in laboratory with each fault four levels of severity. They were sequenced in descending order according to the normalized happening frequency (Comstock and Braun, 1999a). Sixty-four features (Table 5) were obtained at 10-s intervals, with 16 of them calculated in real time by VisSim program (see Appendix A). To investigate all seven faults, four sets of normal data (Normal, Normal R1, Normal CF and Normal NC) were chosen according to the recommendation of Comstock and Braun (1999b). 4.2. Data preprocessing 4.2.1. Steady state detection Before the FDD process begins, steady state detection is needed to filter out data indicative of transient operation, such as those during chiller start-up and shut down time periods or when the driving conditions change abruptly. There are many classic steady state filters measuring the change rate of a variable with respect to time. Among them, the method of computing geometrically weighted averages and variances (Glass et al., 1995) is implemented because it has the advantage that the computations are recursive, requiring a minimum of memory, and it is sensitive in reacting promptly whenever the current data depart from their steady-state values. Three variables, i.e., temperatures of evaporator water in (TWEI), evaporator water out (TWEO) and condenser water in (TWCI), were selected as the state characteristics for they are deterministic for the performance of a chiller with constant water flow rates. Only if the weighted deviation of each of these three variables (or features) falls below a pre-determined threshold, could the chiller be considered as under steady state. In this study, the threshold is set at 0.2 C, the time increment between measurements is 10 s and the time window is 80 s. 4.2.2. Data normalization For a data set of N observations and n process variables, the observed sample matrix Z ðZ˛RNn Þ is constructed and b. normalized by Eq. (9) to obtain data matrix X 8 > > > > > > > > > < > > > > > > > > > : Mj ¼ N1 N P zi; j i¼1 sffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffiffi N 2 P 1 sj ¼ N1 zi; j Mj (9) i¼1 bi; j ¼ x zi; j Mj sj where Mj and sj are the mean and the standard deviation, respectively, of the jth variable; zi,j is an element of matrix Z, b bi;j is an element of matrix X. and x 4.3. Performing scheme of chiller AFDD strategy In practice, the chiller AFDD strategy combined with FS previously presented is performed according to the scheme Table 7 e SVM model and AFDD performance. Correct Rate (CR) % Select Case1 Case2 Case3 Case4 Case5 e 88.02 85.60 87.15 e Train 99.34 98.94 99.25 98.15 99.48 Note: ‘Select’ means feature selection. Bold has been used for emphasis Test 97.45 99.20 99.18 98.02 99.53 CPU Time Consumed (s) Select e 65.99 72.72 86.83 Train 11,559 2104 1460 1200 about 8 days 100.00% 18.20% 12.63% 10.38% SVM Parameters Test 3.1512 1.1388 1.0764 1.0608 0.9048 100.00% 36.14% 34.16% 33.66% 28.71% C 4 2 24 23 23 23 g 24 21 22 22 22 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 593 Table 8 e ConfMat for Case 1 and Case 5. Case1: All Features Normal RefLeak ConFoul ReduCF NonCon ReduEF RefOver ExcsOil 0 B B B B B B B B B B @ Results and discussion Data for each test run passed through the steady state detector (SSD, Section 4.2.1), first. 12 000 samples were then randomly selected from the historical data pool where the steady state data for the normal and seven faulty modes (each with four severity levels) were stored. The selected data set was split randomly, afterwards, into 8000 and 4000 samples for training and testing, respectively. Before any further application began, the training set and test set were normalized independently by Eq. (9). After that, FS was conducted on the training data and the selected features were transferred to the test data to kick out those not selected; SVM was then trained and tested for chiller AFDD and evaluation was made accordingly, as Fig. 5 shows. Both training and test sets are composed of a data matrix with rows for samples and columns for features, and a target class vector where the class labels for the samples are stored. Hence, before any FS, the training set contains an 8000 64 data matrix and an 8000 1target class vector. The results are given below. So does the corresponding discussion. All the programs were run on a notebook with a CPU of Intel Core2 P7370 (2.00 GHz), a memory of 2.00 GB and an operating system of Windows Vista Home Basic. Libsvm 2.89 (Chang and Lin, 2001) was installed as a toolbox for Matlab 7.8 with Genetic Algorithm and Direct Search Toolbox. 5.1. 0 1 479 4 0 0 0 0 0 0 62 424 0 0 0 0 6 1 C C 2 0 492 0 0 0 0 0 C C 1 0 0 532 0 0 0 0 C C 3 0 0 0 514 0 0 0 C C 0 0 0 0 0 475 0 0 C C 8 1 0 0 0 0 487 11 A 2 1 0 0 0 0 0 495 shown in Fig. 5. Detailed explanation is given in the first paragraph of Section 5. 5. Case5: GA-SVM Wrapper 8 features B B B B B B B B B B @ 5.2. 1 483 0 0 0 0 0 0 0 0 490 1 0 0 0 1 1 C C 2 0 491 1 0 0 0 0 C C 0 0 0 533 0 0 0 0 C C 0 0 0 1 516 0 0 0 C C 0 0 0 1 0 474 0 0 C C 0 4 0 0 0 0 503 0 A 4 1 1 1 0 0 0 491 GA-based wrapper In practice, GA-LDA wrapper was first employed to select 13, 10 and 8 features, respectively. The selected feature subsets were then used to train SVM model. After that, validation was performed on the trained model using the test set. The results are given below in Tables 6e8 and Figs. 7e9. Also in the tables and figures are those of GA-SVM wrapper for 8 features and no FS (64 features). 5.2.1. Features/sensors selected for each case The FS results are shown in Table 6. Features No. 47 (PO_feed) and 56 (VE) distinct themselves from other features for they appeared in each scheme. PO_feed is the pressure of oil feed and VE is the position of the electronic valve installed in the evaporator water loop. The next important features are No. 7 (TCO) or 8 (TWCO) and 24 (FWC) for they were selected by three schemes. TCO and TWCO are both the temperature of condense water out, by different types of sensors (RTD and thermistor, respectively). FWC is the flow rate of condenser water. All these four features are there in Case 5 where GASVM wrapper is adopted for selection of 8 features. That is a possible sign for Case 5 to perform the best. Further study on the characteristics of PO_feed, VE (Fig. 7) and FWC indicates that each of them almost distinctively pointed to one of the fault: PO_feed / ConFoul, VE / ReduEF MI-based mRMR feature selection (FS) Based on the mechanism introduced in Section 2.1, MI-based mRMR filter was implemented on the training data. In order to have a quick idea about the trend the AFDD performance varies with the number of features, 10-fold cross validation was implemented with the MATLAB built-in ‘crossval’ function. The results were shown in Fig. 6. It can be seen from the figure that when the number of features increases beyond certain value (say, 21), the performance will no further be improved, and there are several turning points (6, 8, 13, 17, etc.) where the trend has been shifted or changed sharply. We chose and focused on 13, 8 and 10 in-between them for further investigation. Fig. 7 e Characteristics of features No. 47 and 56. 594 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 Fig. 8 e Hit rate (HR) for each class. and FWC / ReduCF. As an example, Fig. 7 depicts the entire sample points in the training set (after normalization), twodimensionally, with PO_feed as X axis and VE as Y axis. The light blue samples represent the fault of reduced evaporator flow (ReduEF) and their Y values (VE) are obviously independent from those of the other samples, so do the X values (PO_feed) of the yellow points (ConFoul), which means that VE and PO_feed are good indicators for ReduEF and ConFoul, respectively. This is reasonable based on our knowledge about refrigeration and the system. TCO or TWCO is not that distinctive, but when it is combined with other features, such as those left in Case 5, a comparatively satisfying AFDD performance may be obtained. Also in Case 5 are features numbered 3 (TEO, temperature of evaporator water out), 30 (TRC, saturated refrigerant temperature in condenser), 35 (TR_dis, refrigerant discharge temperature) and 58 (TWI, temperature of city water in). The positions of the five sensors other than those in the refrigerant cycle (TRC, TR_dis and PO_feed) are shown in Appendix B, among which two were found in the evaporator water loop (TEO and VE) and the other three were directly related to the condenser water loop (TCO, FWC and TWI). 5.2.2. Overall performance of the AFDD strategy Table 7 shows the main AFDD results by SVM model, including the correct rate (CR) of FS (LDA classifier with 10-fold cross validation), SVM training (10-fold cross validation) and testing, the corresponding CPU time consumption and the optimal (or near optimal) parameters for SVM. The CRs of LDA classifiers (‘Select’) are all about or more than ten percent less than those of SVM, which provides another proof for the better performance of SVM model in chiller AFDD. The training and test CRs are always comparable except for Case 1 where the test CR falls somewhat drastically, nearly two percent less (97.45 vs. 99.34), probably because of the noise or redundancy existed in the full feature set. Case 5 performs the best with the test CR as high as 99.53%, Case 2 comes the second with 99.20% and Case 3 the third with 99.18%. The pairwise probability (Chang and Lin, 2001) prediction for the ‘one-againstone’ multi-class SVM model was opened during training and Fig. 9 e False Alarm Rate (FAR) for each class. i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 testing for each case. That helps improve the performance slightly and reduce the test time consumption sharply. For example, without the pairwise probability prediction, the test CR for Case 5 was 99.475% and the test time for 4000 test samples was 2.0904 s, compared to 99.53% and 0.9048 s, respectively. This reduction in time, together with the high CR, makes its future promising for online AFDD application. The CPU time consumed by GA-LDA wrapper for FS is about 1.0e1.5 min (65e87 s). Even plus SVM training time, it is still much less than that of GA-SVM wrapper, about 1.86& of the latter. The much longer time for Case 5could be attributed to the combination of GA and SVM, where each of the eight individuals for each generation needs undergo the time-consuming process of grid search and 10-fold cross validation for SVM training. That is, in a sense, the price to pay for performance bettering. In fact, we found another subset of features accidentally, during an interrupted GA-SVM selection after running two days (8 generations), that behaves just like Case 2 (13 features) with a test CR of 99.2% (8 features). The features are No. 1 (TEI), 6 (TWCI), 21 (kW), 26 (TEA), 32 (TEC_sub), 45 (TO_sump), 48 (PO_net) and 58 (TWI), with the SVM parameters C ¼ 8 and g ¼ 2. The interesting thing is that this subset is mainly about the inlet sensors for water, refrigerant, power or even oil. This does not necessarily mean that the sensors at inlet are not that important compared with those at outlet, in consideration of the random process in GA algorithm and the interrupted running, but it does includes some information about the combination of sensors in chiller AFDD, which accordingly leave some space and certain direction for future investigation. It can also be noticed from the table that the less the features in the subset, the less the time consumed by training and testing (except for GA-SVM wrapper training). For Case 4 and 5, where the number of features is the same, the testing time for Case 5 is less while the CR is higher, maybe because subset 4 includes more noise or redundancy. Also in Table 7 are the near optimal parameters obtained for SVM model via grid search and 10-fold cross validation. Hsu et al. (2004) found that trying exponentially growing sequences of C and g is a practical method to identify good parameters, for example, with 2 as base. In our study, the search ranges were considered from log2C ¼ 1 w 4 and log2g ¼ 4 w 2, both with grid space 1. Searches may also be conducted on the neighborhood of the parameters listed in the table. The training time will increase, accordingly. 5.2.3. Individual performance for each class The performance for individual class is shown in Figs. 8 and 9. To make it clear, part of the figure was magnified. There is a sharp drop of HR for RefLeak Fault in Case 1, which indicts that many RefLeak samples were reported as others. This is confirmed from Table 8 for 62 out of 493 RefLeak samples were alarmed in Normal, which constitutes the major reason why the FAR for Normal in Case 1 is surprisingly high (Fig. 9). With all features (64), it is exceptionally difficult for RefLeak to be discerned from Normal. Generally speaking, Case 5 behaves the best for each class with higher (if not the highest, say, for ExcsOil) HR and lower (if not the lowest, say, for Normal and ReduCF) FAR. The middle parts of all lines in both figures are near optimal, which means that the four faults, ConFoul, ReduCF, NonCon and ReduEF, are easy to be detected and 595 diagnosed, even with the slightest severity level (level 1). CR ¼ 100% reveals that all the samples of the class are correctly predicted by the AFDD model, that is, as long as it happens, it could be exactly identified, e.g. ReduEF for Case 1, ReduCF and ReduEF for Case 2, and Normal and ReduCF for Case 5. FAR ¼ 0% suggests that all the samples reported in the class truly happened, that is, as long as it is reported, it happens, e.g. NonCon and ReduEF for all cases. For all the SVM AFDD strategies presented in this study, if a sample is identified as NonCon or ReduRF, it is definitely right. The two faults, RefLeak and RefOver, are the most difficult to be hit. The three faults, Normal, RefLeak and ExcsOil, are the easiest to be falsely alarmed. Though most of the FARs for Case 4 are higher than those for Case 5 (both with 8 features), the former performs better than the latter for ReduCF probably due to the inclusion of features No. 18 (Shared Evap Tons), 27 (TCA) and 28 (TRE) (the other five features are the same for the two cases as in Table 6). Table 8 lists the confusion matrices of Case 1 and Case 5 for comparison. As previously stated in Section 3.3, good results correspond to large numbers down the main diagonal and small, ideally zero, off-diagonal elements. It can be known at a glance that the performance of Case 5 is better than that of Case 1. The most distinct difference focuses on the first and last columns, where there are many non-zero off-diagonal elements for Case 1, especially for RefLeak and RefOver faults. If all features are used, level 1 RefLeak is easy to be misdiagnosed as Normal and level 1 RefOver is easy to be confused with ExcsOil. All faults have sample(s) reported as Normal, except for ReduEF whose HR is 100% (no non-zeros off-diagonal elements in the row) and FAR is 0% (no non-zeros off-diagonal elements in the column). When features are reduced to eight as those in Case 5, the diagnose performance for RefLeak and RefOver will be greatly improved with the HR enhanced from 86% to 99.39% and from 96.0% to 99.21%, respectively, and the FAR lowered from 0.17% to 0.14% and from 0.17% to 0.03%, respectively. Naturally, there is the price to pay d a slight drop for ReduEF’s HR to 99.79%. 6. Conclusions This study researches on the important sensors for chiller AFDD application, based on FS techniques and machine learning methodology. MI-based filter has been adopted for a preliminary idea about how the number of features affects the AFDD performance. GA-based wrapper has then been investigated in a detailed manner with GA-LDA wrapper for 13, 10 and 8 features and GA-SVM wrapper for 8 features. ‘One-against-one’ multi-class SVM has been employed as an AFDD strategy after or within FS. The results showed that the features/sensors could be successfully reduced to one-third of the original number, from 64 to 8, while achieving a much better AFDD performance d higher CR, HR, lower FAR and much less computational time. Details are as follows: 1) For the four cases with FS, the test CRs are all higher than that for no FS, and the test time is cut down about 63e72%. 2) Features No. 47 (PO_feed) and 56 (VE) distinct themselves from other features for they appeared in each selection 596 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 scheme. Detailed study on their characteristics shows that they are fairly indicative to certain fault: PO_feed / ConFoul, VE / ReduEF. FWC is also an indicator that directly points to ReduCF. See Appendix A for detailed information about the features. 3) Among all five cases, GA-SVM wrapper (Case 5) performs the best with eight features/sensors including five temperatures (TEO, TCO, TRC, TR_dis, and TWI), one pressure (PO_feed), one flow rate (FWC) and one valve position (VE). It can be said that, except the PO_feed, five of them (TCO, FWC, TRC, TR_dis, TWI) are about the condenser side, and the other two at the evaporator side (TEO, VE). See Appendix B for their locations in the system. 4) The four faults, ConFoul, ReduCF, NonCon and ReduEF, are easy to be detected and identified, even with the slightest severity level (level 1). The two faults, RefLeak and RefOver, are the most difficult to be hit, especially when all features are included. The three faults, Normal, RefLeak and ExcsOil, are the easiest to be falsely alarmed. Generally speaking, the feature selection schemes and the machine learning method employed in this study proved to be effective in improving FDD performance, reducing computational time and saving initial cost on sensors in terms of both type and quantity. It represents a useful effort, in a sense, not only for the researchers but for the chiller manufacturers. Future study includes further test with more samples, other FS methods, SVM parameter tuning, improved multi-class SVM algorithm, and other FDD technology such as combining different techniques for fault detection and fault diagnosis, etc. The practical implementation will be the final testing ground. Acknowledgement This project was supported by the National Natural Science Foundation of China (NSFC) under Grant No. 50876059. The authors would also like to thank Prof. James Braun with Purdue University and Dr. Donna Daniel with ASHRAE for their kind help in providing the detailed experimental data, Dr. Peng Hanchuan with University of California at Berkeley, for his informative discussions about mutual information and Harri M.T. Saarikoski, PhD candidate with Helsinki University, Finland, for his valuable advices on feature selection. Appendix A. Further information about features. Both Appendix A and B are from Comstock and Braun (1999a), for information only. Table A1 e Exported data from experimental test runs. Designation Source Description Units 1 2 3 4 5 6 7 8 9 Time TWE_set TEI TWEI TEO TWEO TCI TWCI TCO TWCO TSI VisSim Micro Tech JCI AHU (RTD) Micro Tech (Thermistor) JCI AHU (RTD) Micro Tech (Thermistor) JCI AHU (RTD) Micro Tech (Thermistor) JCI AHU (RTD) Micro Tech (Thermistor) JCI AHU (RTD) Second F F F F F F F F F F 10 TSO JCI AHU (RTD) 11 TBI JCI AHU (RTD) 12 TBO JCI AHU (RTD) 13 14 15 Cond Tons Cooling Tons Shared Cond Tons VisSim VisSim VisSim 16 Cond Energy Balance VisSim 17 18 Evap Tons Shared Evap Tons VisSim VisSim 19 Building Tons VisSim Real time counter Chilled water setpointdcontrol variable Temperature of Evaporator Water In Temperature of Evaporator Water In Temperature of Evaporator Water Out Temperature of Evaporator Water Out Temperature of Condenser Water In Temperature of Condenser Water In Temperature of Condenser Water Out Temperature of Condenser Water Out Temperature of Shared HX Water In (in Condenser Water Loop) Temperature of Shared HX Water Out (in Condenser Water Loop) Temperature of Building Water In (in Evaporator Water Loop) Temperature of Building Water Out (in Evaporator Water Loop) Calculated Condenser Heat Rejection Rate Calculated City Water Cooling Rate Calculated Shared HX Heat Transfer (only valid with no water bypass) Calculated 1st Law Energy Balance for Condenser Water Loop (only valid with no water bypass) Calculated Evaporator Cooling Rate Calculated Shared HX Heat Transfer (should equal Shared Cond Tons with no water bypass) Calculated Steam Heating Load F F F Tons Tons Tons Tons Tons Tons Tons 597 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 Table A1 (continued). Designation Source 20 Evap Energy Balance VisSim 21 kW JCI AHU 22 23 24 25 26 COP kW/ton FWC FEW TEA VisSim VisSim JCI AHU JCI AHU Micro Tech 27 TCA Micro Tech 28 TRE Micro Tech 29 30 PRE TRC Micro Tech Micro Tech 31 32 PRC TRC_sub Micro Tech Micro Tech 33 34 T_suc Tsh_suc Micro Tech Micro Tech 35 36 TR_dis Tsh_dis Micro Tech Micro Tech 37 38 P_lift Amps Micro Tech Micro Tech 39 40 RLA% Heat Balance (kW) Micro Tech VisSim 41 Heat Balance% VisSim 42 Tolerance% VisSim 43 44 45 46 47 48 49 Unit Status Active Fault TO_sump TO_feed PO_feed PO_net TWCD Micro Tech Micro Tech Micro Tech Micro Tech Micro Tech Micro Tech Micro Tech 50 TWED Micro Tech 51 52 53 54 55 56 57 58 59 60 61 62 63 64 VSS VSL VH VM VC VE VW TWI TWO THI THO FWW FWH FWB JCI AHU JCI AHU JCI AHU JCI AHU JCI AHU JCI AHU JCI AHU JCI AHU JCI AHU JCI AHU JCI AHU VisSim VisSim VisSim (RTD) (RTD) (RTD) (RTD) *Please consult the corresponding tables in Comstock and Braun (1999a). Description Calculated 1st Law Energy Balance for Evaporator Water Loop Watt Transducer Measuring Instantaneous Compressor Power Calculated Coefficient of Performance Calculated Compressor Efficiency Flow Rate of Condenser Water Flow Rate of Evaporator Water Evaporator Approach Temperature (TWEO-TRE) Condenser Approach Temperature (TRCTWCO) Saturated Refrigerant Temperature in Evaporator Pressure of Refrigerant in Evaporator Saturated Refrigerant Temperature in Condenser Pressure of Refrigerant in Condenser Liquid-line Refrigerant Subcooling from Condenser Refrigerant Suction Temperature Refrigerant Suction Superheat Temperature Refrigerant Discharge temperature Refrigerant Discharge Superheat temperature Pressure Lift Across Compressor Current Draw Across One Leg of Motor Input Percent of Maximum Rated Load Amps Calculated 1st Law Energy Balance for Chiller Calculated 1st Law Energy Balance for Chiller Calculated Heat Balance Tolerance (ARI 550 defined as allowable test tolerance on heat balance) Consult Table B.4 in Appendix* Consult Table B.3 in Appendix* Temperature of Oil in Sump Temperature of Oil Feed Pressure of Oil Feed Oil Feed minus Oil Vent Pressure Condenser Water Temperature Difference (TWCO-TWCI) Evaporator Water Temperature Difference (TWEI-TWEO) Small Steam Valve Position Large Steam Valve Position Hot Water Valve Position 3-way Mixing Valve Position Condenser Valve Position Evaporator Valve Position City Water Valve Position Temperature of City Water In Temperature of City Water Out Temperature of Hot Water In Temperature of Hot Water Out Calculated City Water Flow Rate Calculated Hot Water Flow Rate Calculated Condenser Water Bypass Flow Rate Units Tons kW kW/ton GPM GPM F F F PSIG F PSIG F F F F F PSIG Amps % kW % % 0e27 0e44 F F PSIG PSI F F %Open %Open %Open %Open %Open %Open %Open F F F F GMP GMP GMP 598 i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 Appendix B. Position of sensors Fig. B1 e Sensors mounted in condenser water circuit and city water supply. Fig. B2 e Sensors mounted on evaporator water circuit and steam supply. i n t e r n a t i o n a l j o u r n a l o f r e f r i g e r a t i o n 3 4 ( 2 0 1 1 ) 5 8 6 e5 9 9 references Bendapudi, S., Braun, J.E., 2002. A Review of Literature on Dynamic Models of Vapor Compression Equipment. HL 2002-9, Report #4036-5. Ray Herrick Laboratories, Purdue University. Blum, A., Langley, P., 1997. Selection of relevant features and examples in machine learning. Artif. Intell. 97, 245e271. Brambley, M.R., Pratt, R.G., Chassin, D.P., Katipamula, S., 1998. Automated diagnostics for outdoor air ventilation and economizers. ASHRAE J. 40 (10), 49e55. Burges, C.J.C., 1998. A tutorial on support vector machines for pattern recognition. Data Min. Knowl. Discov. 2 (2), 1e47. Chang, C.C., Lin, C.J., 2001. LIBSVM: a library for support vector machines. Software available at. http://www.csie.ntu.edu.tw/ wcjlin/libsvm/. Choi, K., Namburu, S.M., Azam, M., Luo, J., Pattipati, K., PattersonHine, A., 2005. Fault diagnosis in HVAC chillers: adaptability of a data-driven fault detection and isolation approach. IEEE Instrum. Meas. Mag. 8 (3), 24e32. Comstock, M.C., Braun, J.E., 1999a. Development of Analysis Tools for the Evaluation of Fault Detection and Diagnostics for Chillers. HL 99e20, Report # 4036-3. ASHRAE. Research Project 1043. Comstock, M.C., Braun, J.E., 1999b. Experimental Data from Fault Detection and Diagnostic Studies on a Centrifugal Chiller. ASHRAE. HL 99e18, Report # 4036-1Research Project 1043. Cristianini, N., Taylor, J.S., 2000. An Introduction to Support Vector Machines. Cambridge University Press. Das, S., 2001. Filters, Wrappers and a Boosting-Based Hybrid for Feature Selection, Proc. 8th Int. Conf. Mach. Learn. pp. 74e81. Dash, M., Liu, H., 1997. Feature selection for classifications. Intell. Data Anal. Int. J. 1, 131e156. Dexter, A., Pakanen, J., 2001. International Energy Agency Building Demonstrating Automated Fault Detection and Diagnosis Methods in Real Buildings. Technical Research Centre of Finland, Laboratory of Heating and Ventilation, Espoo, Finland. Fawcett, T., 2004. ROC Graphs: Notes and Practical Considerations for Researchers. Kluwer Academic Publishers (Printed in the Netherlands). Ghiaus, C., 1999. Fault diagnosis of air-conditioning systems based on qualitative bond graph. Energy and Buildings 30, 221e232. Glass, A.S., Gruber, P., Roos, M., Todtli, J., 1995. Qualitative model based fault detection in air-handling units. IEEE Control Syst. Mag. 15 (4), 11e22. Goldberg, D.E., 1989. Genetic Algorithms in Search, Optimization & Machine Learning. Addison-Wesley. Gordon, J.M., Ng, K.C., Chuan, H.T., 1995. Centrifugal chillers: thermodynamic modeling and a diagnostic case study. Int. J. Refrigeration 18 (4), 253e257. Grimmelius, H.T., Woud, J.K., Been, G., 1995. On-line failure diagnosis for compression refrigeration plants. Int. J. Refrigeration 18 (1), 31e41. 599 Han, H., Cao, Z.K., Gu, B., Ren, N., 2010. PCA-SVM-Based automated fault detection and diagnosis (afdd) for vaporcompression refrigeration systems. HVAC&R Research 16 (3), 295e313. Hsu, C.W., Lin, C.J., 2002. A comparison of methods for multiclass support vector machines. IEEE Trans. Neural Netw. 13, 415e425. Hsu, C.W., Chang, C.C., Lin, C.J., 2004. A Practical Guide to Support Vector Classification. Technical Reports. Department of Computer Science and Information Engineering, National Taiwan University. Available at. http://www.csie.ntu.edu.tw/ wcjlin/papers/guide/guide.pdf. Kaldorf, S., Gruber, P., 2002. Practical experiences from developing and implementing an expert system diagnostic tool. ASHRAE Trans. 108 (1), 826e840. Kaler, G.M., 1990. Embedded expert system development for monitoring packaged HVAC equipment. ASHRAE Trans. 96 (2), 733. Katipamula, S., Brambley, M.R., 2005a. Methods for fault detection, diagnostics, and prognostics for building systemsda review, part I. HVAC&R Research 11 (1), 3e24. Katipamula, S., Brambley, M.R., 2005b. Methods for fault detection, diagnostics, and prognostics for building systemsda review, part II. HVAC&R Research 11 (2), 169e187. Kohavi, R., John, G., 1997. Wrappers for feature subset selection. Artif. Intell. 97, 273e324. Krzanowski, W.J., 1988. Principles of Multivariate Analysis: a User’s Perspective. Oxford University Press, New York. Liang, J., Du, R., 2007. Model-based fault detection and diagnosis of HVAC systems using support vector machine method. Int. J. Refrigeration 30, 1104e1114. Lin, H.T., Lin, C.J., 2003. A Study on Sigmoid Kernels for SVM and the Training of Non-PSD Kernels by SMO-type Methods. Technical Report. Department of Computer Science, National Taiwan University. http://www.csie.ntu.edu.tw/wcjlin/ papers/tanh.pdf. Liu, Y.G., You, Z.S., Cao, L.P., 2006. A novel and quick SVM-based multi-class classifier. Pattern Recognit. 39, 2258e2264. Peng, H.C., Long, F.H., Ding, C., 2005. Feature selection based on mutual information: criteria of max-dependency, maxrelevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 27 (8), 1226e1238. Platt, J.C., Cristianini, N., Taylor, J.S., 2000. Large Margin DAGs for Multiclass Classification. MIT Press. http://research.microsoft. com/pubs/68541/dagsvm.pdf. Rifkin, R., Klautau, A., 2004. In defense of one-vs.-all classification. J. Mach. Learn. Res. 5, 101e141. Tassou, S.A., Grace, I.N., 2005. Fault diagnosis and refrigerant leak detection in vapour compression refrigeration systems. Int. J. Refrigeration 28, 680e688. Yélamos, Ignacio, Graells, Moisès, Puigjaner, Luis, 2007. Simultaneous fault diagnosis in chemical plants using a multilabel approach. AIChE. J. 53 (11), 2871e2884. Zhu, X.L., 2000. Fundamentals of Applied Information Theory. Tsinghua University Press, Beijing, China (in Chinese).