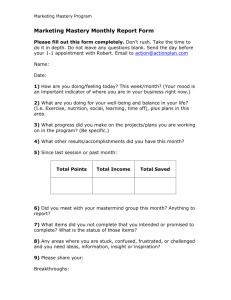

Five years of Mastery Learning – What did we learn?

Claudia Ott, Dept. Computer Science, University of Otago, Dunedin, New Zealand, claudia.ott@otago.ac.nz
Brendan McCane, Dept. Computer Science, University of Otago, Dunedin, New Zealand, brendan.mccane@otago.ac.nz
Nick Meek, Dept. Computer Science, University of Otago, Dunedin, New Zealand, nick.meek@otago.ac.nz

ABSTRACT

Over the last five years we have developed our first year, first semester programming course into a mastery learning oriented course – a delivery format allowing students to work at their own pace towards the achievement level they aim for. In this paper, we report on a series of changes to the course and how these impacted students’ engagement, their learning progress and their final grades. We aim to initiate a discussion on fostering self-regulated learning early on in a course.

CCS CONCEPTS

• Social and professional topics → Computing Education

KEYWORDS

Mastery Learning, Student Engagement, Self-Regulated Learning, Introductory programming, CS1

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored. For all other uses, contact the Owner/Author.
Koli Calling '18, November 22–25, 2018, Koli, Finland
© 2018 Copyright is held by the owner/author(s).
ACM ISBN 978-1-4503-6535-2/18/11.
https://doi.org/10.1145/3279720.3279752

1 Introduction

Ideally, self-regulated learners work in cycles of setting their goals, selecting suitable strategies to reach those goals, self-monitoring their progress, adjusting the strategies if necessary, and critically reflecting at the end of the learning cycle, which leads to improvements for the next cycle [8].

The importance of self-monitoring is highlighted in a model of self-regulated learning that emphasizes students’ ability to generate internal feedback during the learning process [1]. Further work on “sustainable” feedback followed with a focus on strategies and practices to support students’ development into self-regulated learners (e.g. [4], [2]). Sustainable feedback practices are described as “dialogic processes and activities which can support and inform the student on the current task, whilst also developing the ability to self-regulate performance on future tasks” ([2], p. 397). Those practices include multi-stage assessments, dialogical feedback and the promotion of students’ self-evaluation.

Mastery Learning (e.g. [3]), where students are required to master one level before they can move on to the next level, can be seen as a multi-stage assessment supporting self-monitoring of progress. If one-to-one tutor support is readily available during practical exercises, dialogical feedback that advises students on shortcomings, improvements and ways to practice can be provided to promote students’ self-evaluation. However, the self-paced nature of a mastery inspired learning model requires a certain level of self-regulation to stay focused and engaged throughout the course. How students reacted to the challenges of self-regulated learning in a mastery oriented course is discussed in this paper.

2 Motivation and Phases of Change

An elective first year Python course was introduced in 2009 as a gentle introduction to computer programming in a traditional format of two weekly lectures and two accompanying laboratory sessions [6]. The course is offered in first semester with approx. 250 students enrolled. Based on an inquiry into students’ performance in second semester [7] we suspected that students acquired programming skills at a superficial level only.
By the end of 2013 we decided to change the format to a mastery learning model where credits are gained through mastery tests, called progression levels (PLs), and scheduled practical tests. Successive changes were made and can be divided into three distinct phases.

2.1 Phase 1 (2014 & 2015): Mastery Learning Model introduced and adjusted

In 2014 we introduced 7 PLs contributing 35% towards the final mark. Other credits were gained by a practical test (15%) and a written final exam of multiple choice questions (50%). To pass the course students needed to gain 50% of all credits. Students were scheduled for two weekly lab sessions (2 hours each) with tutors available to assist students with their lab tasks. During their lab time students could take a test (20 to 30 minutes) to complete a PL (no textbook or online resources allowed). Students passing the test could attempt the next PL, whereas students failing the test were advised to review the lab work and ask tutors for help if needed. Those students could repeat the test during one of their next lab sessions with no penalty until they passed the PL. To discourage rote learning, one of several PL versions was randomly assigned. As the statically paced lectures became irrelevant for many students working at their own pace, the lecture content was delivered by podcasts from 2015 onwards. One lecture per week was scheduled for questions and to discuss concepts.

2.2 Phase 2 (2016 & 2017): Final Exam replaced

In 2016 we felt confident that the mastery tests were a fair assessment of students’ programming ability, which made a written final exam unnecessary. By administering mastery tests (60% towards the final grade) and two scheduled practical tests (40% of the final grade) we adopted the idea of “contract grading” (e.g. [5]) where summative assessments are obsolete and students can decide on their level of achievement and work towards that level without the uncertainty of a final exam. We assumed that such a change would promote students’ self-regulated learning in terms of setting their own goals and self-monitoring their performance. Based on student feedback, which indicated that the weekly Q&A lecture was perceived as unorganized and not well prepared, we went back to scheduled topics covered in a weekly lecture. In 2017 we made lab attendance compulsory, as we had observed in 2016 that students attended labs less frequently and completed fewer progression levels than in 2014 & 2015.

2.3 Phase 3 (2018): Remedies introduced

By the end of 2017 we realized that there was an urgent need to motivate students to continuously engage in the course rather than to take a last-minute approach to completing the progression levels. In 2018 we required students to make at least one attempt per week on a PL, and late penalties were applied after certain cut-off dates to encourage students to attempt progression levels earlier. The progression levels after PL6 were split into four different pathways students could choose from: Object Oriented, Complex (Algorithms & Math), Graphical User Interfaces and Data Structures. We hoped that students would “buy in” to higher progression levels by having a choice of topics to study.

3 Results

The most successful year in terms of students’ engagement was the first year of mastery learning. Students were working continuously and completed 87% of the PLs available. The low submission rate during the last two weeks of the semester (6% of all submissions) indicated that students were on track with their work. 19.0% of students failed the course, which was the lowest failure rate of all five years.
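To make the engagement figures used in this section concrete (completion rate of PLs, share of attempts in the last two weeks, weeks without an attempt), the following is a minimal sketch of how such measures could be derived from a log of PL attempts. It is not the authors' analysis code: the `Attempt` record, the function name `engagement_metrics`, the 13-week semester and the definition of an inactive week (a week without any attempt between a student's first and last active week) are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class Attempt(NamedTuple):
    """One attempt on a progression level (hypothetical log format)."""
    student: str   # hypothetical student identifier
    week: int      # teaching week of the attempt, 1-based
    level: int     # progression level (PL) attempted
    passed: bool   # whether the attempt passed the PL


def engagement_metrics(attempts, n_students, n_levels, n_weeks):
    """Return (completion_rate, late_share, mean_inactive_weeks)."""
    # Completion rate: distinct (student, PL) pairs passed over all possible pairs.
    passed_levels = {(a.student, a.level) for a in attempts if a.passed}
    completion_rate = len(passed_levels) / (n_students * n_levels)

    # Late share: fraction of all attempts made in the final two weeks.
    late = sum(1 for a in attempts if a.week > n_weeks - 2)
    late_share = late / len(attempts) if attempts else 0.0

    # Assumed definition of inactivity: weeks without any attempt between
    # a student's first and last active week, averaged over active students.
    weeks_by_student = defaultdict(set)
    for a in attempts:
        weeks_by_student[a.student].add(a.week)
    gaps = [(max(w) - min(w) + 1) - len(w) for w in weeks_by_student.values()]
    mean_inactive = sum(gaps) / len(gaps) if gaps else 0.0

    return completion_rate, late_share, mean_inactive


# Made-up sample data: two students, two PLs, an assumed 13-week semester.
sample = [
    Attempt("s1", 1, 1, True),
    Attempt("s1", 2, 2, False),
    Attempt("s1", 5, 2, True),
    Attempt("s2", 12, 1, True),
    Attempt("s2", 13, 2, True),
]
print(engagement_metrics(sample, n_students=2, n_levels=2, n_weeks=13))
# → (1.0, 0.4, 1.0)
```

In the sample, both students complete both PLs, two of the five attempts fall into the last two weeks, and student `s1` skips weeks 3 and 4 while `s2` has no gap. Other definitions of inactivity (for example, counting weeks before a student's first attempt) would give different numbers.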
When lectures were replaced by podcasts in 2015 to allow students a more self-paced approach, we observed that students became increasingly inactive, with 2.5 weeks of no attempts on PLs on average (up from 0.9 weeks of inactivity in 2014). This temporary disengagement resulted in a rush towards the end of the semester, with 15.5% (up from 6%) of all PL attempts made during the last two weeks. As a side effect the overall completion rate of PLs dropped to 82%. 24.3% of students failed the course after sitting the final exam.

In phase 2, when the final exam was replaced by a practical test, students’ engagement with the course became even more concerning. An average inactivity of 3.2 and 3.0 weeks in 2016 and 2017 respectively led to a further drop in completion of PLs to 69.9% and 75.6%, despite high activity within the last two weeks (23% of all attempts). Most noticeable during this phase was an increase in the group of students just passing the course with a mark of 50% to 60% (25% of students in 2016, up from 12% in 2015 and 6% in 2014) despite an unchanged failure rate and a large group of students (about 20%) achieving 90% and more.

Phase 3 introduced remedies to increase engagement with the course. These did not prove to be particularly successful, as only 70.5% of all PLs were completed. The changes seemed to motivate the top students but did not help the lower achieving students to stay focused and pass the course at a higher rate. In fact, the bimodality of the final grades was more pronounced than ever, with 29.7% finishing with 90% and more and 26.7% failing the course. Note: There were no late penalties applied for this study.

4 Conclusion

Based on the observation that the first year of the mastery learning offering showed high engagement with a high completion rate of PLs, we conclude that the quality of teaching and feedback in the computer labs is at a level where the majority of students can complete all PLs in a timely manner.
If students take the opportunity to attempt PLs on a regular basis (e.g. once a week), self-monitoring of progress is basically a side effect. The one-to-one discussion with a tutor regarding a failed attempt is a great opportunity for the student to get feedback on how to improve. The cycle of self-monitoring and feedback is broken when students disengage from the course. One way to get students back on board would be to roll back the changes made. However, we do believe that intrinsic motivation and a genuine interest to proceed are more valuable for students’ learning experience than a set pace and the “threat” of a final exam. Allowing students to work at their own pace and to set their own goals creates a responsibility we want the students to experience in order to foster self-regulated learning. Our experiences highlight that this is not an easy undertaking, especially in a first year, first semester course which is, for most students, their first encounter with studying at university level. Unfortunately, the question still remains of how to help first year students, who are accustomed to a schooling system that provides a high degree of structure, rigid schedules and continuous encouragement, to develop into pro-active learners in charge of their own learning.

REFERENCES

[1] D. L. Butler and P. H. Winne. 1995. Feedback and Self-Regulated Learning: A Theoretical Synthesis. Review of Educational Research, 65(3), 245–281.
[2] D. Carless, D. Salter, M. Yang and J. Lam. 2011. Developing sustainable feedback practices. Studies in Higher Education, 36(4), 395–407.
[3] E. J. Fox. 2004. The Personalized System of Instruction: A Flexible and Effective Approach to Mastery Learning. In D. J. Moran and R. W. Malott (Eds.), Evidence-based educational methods. San Diego: Elsevier Academic Press.
[4] D. Hounsell. 2007. Towards more sustainable feedback to students. In D. Boud and N. Falchikov (Eds.), Rethinking Assessment in Higher Education: learning for the longer term. London: Routledge.
[5] N. LeJeune. 2010. Contract grading with mastery learning in CS 1. Journal of Computing Sciences in Colleges, 26(2), 149–156.
[6] B. McCane. 2009. Introductory Programming with Python Curriculum. The Python Papers Monograph, 1, 1–11.
[7] B. McCane, C. Ott, N. Meek and A. Robins. 2017. Mastery Learning in Introductory Programming. In Proceedings of the Nineteenth Australasian Computing Education Conference on - ACE ’17. New York, USA: ACM Press.
[8] B. J. Zimmerman. 1998. Developing self-fulfilling cycles of academic regulation: An analysis of exemplary instructional models. In D. H. Schunk and B. J. Zimmerman (Eds.), Self-regulated learning: From teaching to self-reflective practice. NY: Guilford Press.