IAML W02


Advanced Topics in Image Analysis and

Machine Learning

Image Degradation and

Restoration

Week 02

Faculty of Information Science and Engineering

Ritsumeikan University

Today’s class outline

– Overview of Soft Computing

Definition

Applications

Techniques

– Genetic Algorithms

Introduction to Genetic Algorithms

– Image Restoration Project

Introduction

Soft Computing:

A Brief Introduction

Soft vs. Hard Computing

Hard Computing, the conventional programming that we normally do, requires precise algorithms, known in advance, that generate the exact solution

Soft Computing differs from conventional hard computing in that it is tolerant of imprecision, uncertainty, partial truth, and approximation

Soft Computing aims to find approximate solutions to both imprecisely and precisely formulated problems

Soft Computing: The Idea

Exploit the tolerance for imprecision,

uncertainty and partial truth to achieve

tractability, robustness, and low solution cost

There are many intractable problems in the world for which no polynomial-time algorithm is known (e.g. VLSI layout design, cargo placement / packing optimisation, etc.)

Soft Computing Applications

Handwriting and speech recognition

Image processing and data compression

Automotive system control and

manufacturing

Decision-support systems (planning and

scheduling, etc.)

Artificial vision systems

Consumer electronics control

…

Techniques of Soft Computing

Soft Computing includes three major

complementary groups of techniques:

- Evolutionary Computation: Genetic

Algorithm (GA) and Genetic

Programming

- Neurocomputing: Artificial Neural

Networks (ANN) and Machine Learning

- Fuzzy Sets and Fuzzy Logic, Rough

Sets, and Probabilistic Reasoning

Overview of the Techniques

- Evolutionary Computation: the algorithms for search and optimization

- Neurocomputing: the algorithms for learning and curve fitting

- Fuzzy Logic and Probabilistic Reasoning: the algorithms for dealing with decision-making imprecision and uncertainty

- Rough Sets: the approach to handle uncertainty arising from the granularity in the domain of discourse

Evolutionary Computation: Genetic Algorithm

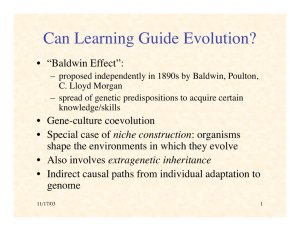

The idea (by John Holland, in the 1970s):

- Evolution works in biology, so perhaps it will also work in simulated environments

- Each possible solution (whether good or bad) is

represented by a chromosome – a pattern of bits

which works as DNA. Then:

Determine the better solutions

Mate these solutions to produce new solutions which

are (hopefully) occasionally better than the parents

Repeat this for many generations

Artificial Neural Network (ANN)

ANN Training

ANNs are trained in a variety of ways. During the training process, the weights of the links between the neurons are modified until the desired output is generated for each input case used in the training process

[Figure: example network from INPUT to OUTPUT with numeric weights on the links]

Fuzzy Sets and Fuzzy Logic

Sets with fuzzy boundaries

- Consider a set of tall people

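[Figure: membership function plotted against height for two sets. Crisp set A: membership jumps to 1.0 at 172 cm. Fuzzy set B: membership rises gradually towards 1.0 across heights from 172 to 186 cm]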
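The contrast between the two sets can be sketched in Python. The 172 cm threshold and the 172 to 186 cm range come from the slide; the linear ramp between them and the function names are assumptions made only for illustration.

```python
def crisp_tall(height_cm, threshold=172.0):
    """Crisp set A: a person is either tall (1.0) or not tall (0.0)."""
    return 1.0 if height_cm >= threshold else 0.0


def fuzzy_tall(height_cm, low=172.0, high=186.0):
    """Fuzzy set B: membership grows gradually from 0.0 to 1.0.

    The linear ramp between low and high is an assumed shape; other
    membership functions (e.g. sigmoid) are equally valid choices.
    """
    if height_cm <= low:
        return 0.0
    if height_cm >= high:
        return 1.0
    return (height_cm - low) / (high - low)


for h in (165, 172, 179, 186):
    print(h, crisp_tall(h), fuzzy_tall(h))
```

A person of 179 cm is fully "tall" in the crisp set but only half a member of the fuzzy set under this assumed ramp.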

Rough Sets

A rough set is a set with vague boundaries.

By comparison, a regular set has sharp

boundaries, that is, elements on the

boundary either belong to the set or do not

belong to it.

Rough Sets

Set membership of elements on the boundary of

a rough set is undetermined, i.e., it is not known

whether they belong to the set or not.

A rough set is characterised by its lower

approximation, that is, a collection of elements

which belong to the set for sure, and its upper

approximation, that is a collection of elements

which may belong to a set.

A boundary of a rough set is defined as a

difference between the upper approximation

and the lower approximation.
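A minimal sketch of these three definitions, assuming the usual formulation in which the domain is partitioned into blocks of elements that cannot be told apart (the "granularity" mentioned earlier). The function name and the sample data are hypothetical.

```python
def approximations(blocks, target):
    """Return (lower, upper, boundary) approximations of target.

    blocks: iterable of sets of mutually indistinguishable elements.
    target: the set we are trying to describe.
    """
    lower, upper = set(), set()
    for block in blocks:
        if block <= target:   # every element is in the target: belongs for sure
            lower |= block
        if block & target:    # some element is in the target: may belong
            upper |= block
    return lower, upper, upper - lower


# Hypothetical data: "c" and "d" cannot be distinguished, but only "c" is tall.
blocks = [{"a", "b"}, {"c", "d"}, {"e"}]
tall = {"a", "b", "c"}
lower, upper, boundary = approximations(blocks, tall)
print(sorted(lower), sorted(upper), sorted(boundary))
```

Here the boundary is the block containing "c" and "d": the available information cannot decide whether its members belong to the set.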

Simulated Annealing: The Problem

Optimisation problem: search for the global maximum

[Figure: two search landscapes, a simple, smooth 2D problem with its global maximum marked, and a real-world problem]

Simulated Annealing: The Idea

(Physical) annealing is the process of

slowly cooling down a substance (like a

heated liquid metal)

Simulated annealing is a stochastic

optimisation method implemented by the

Metropolis Algorithm (Monte Carlo):

- Current thermodynamic state = solution (hopefully, the global maximum)

- Energy equation = objective function

- “Ground” (stable) state = global optimum
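The analogy can be sketched as a short Metropolis-style loop for a one-dimensional maximisation problem. The geometric cooling schedule, the step size, and all parameter names are assumptions chosen for illustration, not values given in the lecture.

```python
import math
import random


def simulated_annealing(objective, x0, step=0.5, t_start=1.0, t_end=1e-3,
                        cooling=0.95, seed=0):
    """Maximise objective(x) over a real-valued x with a Metropolis rule."""
    rng = random.Random(seed)
    x, fx = x0, objective(x0)
    best_x, best_fx = x, fx
    t = t_start
    while t > t_end:
        candidate = x + rng.uniform(-step, step)
        fc = objective(candidate)
        # Always accept improvements; accept worse moves with probability
        # exp(delta / t), which shrinks as the temperature t falls.
        delta = fc - fx
        if delta >= 0 or rng.random() < math.exp(delta / t):
            x, fx = candidate, fc
            if fx > best_fx:
                best_x, best_fx = x, fx
        t *= cooling  # slow cooling (assumed geometric schedule)
    return best_x, best_fx


print(simulated_annealing(lambda x: -(x - 2) ** 2, x0=0.0))
```

Accepting some downhill moves while the temperature is high is what lets the search escape local maxima; as the system cools it settles into one peak.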

Genetic Algorithm (GA) OVERVIEW

A class of probabilistic optimisation

algorithms

Inspired by the biological evolution process

Uses concepts of “Natural Selection” and

“Genetic Inheritance” (Darwin 1859)

Originally developed by John Holland (1975)

Special Features:

– Traditionally emphasizes combining

information from good parents (crossover)

– There are many GA variants, e.g.,

reproduction models, operators

GA overview (cont)

Particularly well suited for hard problems where

little is known about the underlying search space

Widely used in business, science and engineering

Holland’s original GA is now known as the simple

genetic algorithm (SGA). Other GAs use different:

– Representations

– Mutations

– Crossovers

– Selection mechanisms

Function Optimisation

GAs are useful for solving multidimensional problems containing many local maxima (or minima) in the solution space

[Figure: a simple optimisation problem (no need to use a GA to solve this!) next to a real-world problem with global and local maxima marked]

A standard method of finding maxima or minima is the gradient ascent (or gradient descent) method

[Figure: a climber on a local peak says "I found the top!" while the global peak lies elsewhere]

Problem: this method may find only a local maximum!
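The problem can be reproduced with a few lines of Python. The two-peaked objective below is a hypothetical example, and the learning rate and step count are arbitrary choices.

```python
def gradient_ascent(grad, x0, rate=0.01, steps=2000):
    """Follow the gradient uphill from x0 for a fixed number of steps."""
    x = x0
    for _ in range(steps):
        x += rate * grad(x)
    return x


# Hypothetical objective with two peaks of different heights.
def f(x):
    return -x**4 + 4 * x**2 + x


def df(x):
    return -4 * x**3 + 8 * x + 1


left = gradient_ascent(df, x0=-2.0)   # ends on the lower (local) peak
right = gradient_ascent(df, x0=2.0)   # ends on the higher (global) peak
print(left, f(left))
print(right, f(right))
```

Both runs stop where the gradient is zero, but only the run that happened to start on the right-hand slope reaches the global maximum.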

Genetic Algorithm: the Idea

[Figure: four climbers at different points of the landscape report their heights: 13.2 m, 10.5 m, 7.5 m and 3.6 m]

The Genetic Algorithm uses

multiple climbers in parallel to

find the global optimum

Genetic algorithm – some iterations

later

A climber has approached the

“global maximum”

I found

the top!

GA Stochastic operators

Selection replicates the most successful

solutions found in a population at a rate

proportional to their relative quality

Crossover takes two distinct solutions and

then randomly mixes their parts to form novel

solutions

Mutation randomly perturbs a candidate

solution

The Evolutionary Cycle

[Diagram: the population is initiated and evaluated; selection picks the fittest parents; modification produces modified offspring; evaluation yields evaluated "strong" offspring that join the population; deleted members are discarded]

GA Example:

the MAXONE problem

Suppose we want to maximise the number of

ones in a string of 10 binary digits

A gene can be encoded as a string of 10

binary digits, e.g., 0010110101

The fitness f of a candidate solution to the MAXONE problem is the number of ones in its genetic code, e.g. f(0010110101) = 5

We start with a population of n random

strings. Suppose that n = 6

Example (initialisation)

Our initial population of parent genes is made using

random binary data:

s1 = 1111010101   f(s1) = 7

s2 = 0111000101   f(s2) = 5

s3 = 1110110101   f(s3) = 7

s4 = 0100010011   f(s4) = 4

s5 = 1110111101   f(s5) = 8

s6 = 0100110000   f(s6) = 3

The fitness f of a parent gene is simply the sum of the bits.
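As a quick check of the values above, here is a minimal sketch of the MAXONE fitness function in Python. Representing each gene as a string of '0' and '1' characters is an assumption made for readability.

```python
def fitness(gene):
    """MAXONE fitness: the number of '1' bits in the string."""
    return gene.count("1")


population = ["1111010101", "0111000101", "1110110101",
              "0100010011", "1110111101", "0100110000"]
scores = [fitness(g) for g in population]
print(scores, sum(scores))  # [7, 5, 7, 4, 8, 3] 34
```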

Selection

Selection is an operation that is used to

choose the best parent genes from the

current population for breeding a new

child population

Purpose: to focus the search in promising

regions of the solution space

Example (Selection)

Next we apply fitness proportionate selection with the

roulette wheel method:

Individual i will be chosen with probability $p_i = \frac{f(i)}{\sum_j f(j)}$

We repeat the extraction as many times as the number of individuals we need to keep the parent population size the same (6 in our case)

[Figure: roulette wheel with sectors 1, 2, 3, 4, …, n; the area of each sector is proportional to its fitness value]
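Roulette wheel selection can be sketched as follows. The function name and the use of a seeded `random.Random` instance are assumptions for illustration; the population is the initial MAXONE population from the earlier slide.

```python
import random


def roulette_select(population, fitnesses, n, rng):
    """Draw n individuals with probability proportional to fitness."""
    total = sum(fitnesses)
    chosen = []
    for _ in range(n):
        spin = rng.uniform(0, total)   # where the wheel stops
        running = 0.0
        for individual, fit in zip(population, fitnesses):
            running += fit
            if spin <= running:
                chosen.append(individual)
                break
        else:
            # Guard against floating-point round-off at the end of the wheel.
            chosen.append(population[-1])
    return chosen


population = ["1111010101", "0111000101", "1110110101",
              "0100010011", "1110111101", "0100110000"]
fitnesses = [g.count("1") for g in population]
selected = roulette_select(population, fitnesses, 6, random.Random(1))
print(selected)
```

Selection is with replacement, so a fit individual can appear more than once in the result and a weak one may not appear at all.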

Example (selection continued)

Suppose that, after performing selection, we get

the following population:

| Selected parents (s`) | Original parents (s) |
|---|---|
| s1` = 1111010101 | (s1) |
| s2` = 1110110101 | (s3) |
| s3` = 1110111101 | (s5) |
| s4` = 0111000101 | (s2) |
| s5` = 0100010011 | (s4) |
| s6` = 1110111101 | (s5) |

Example (crossover)

• Next we mate parent strings using crossover.

• For each pair of parents we decide according

to a crossover probability (for instance 0.6)

whether to actually perform crossover or not.

• Suppose that we decide to actually perform

crossover only for pairs (s1`, s2`) and (s5`, s6`).

• For each pair, we randomly choose a crossover point, for instance bit 2 for the first pair and bit 5 for the second pair

Example (crossover cont.)

Before crossover:

s1` = 1111010101

s2` = 1110110101

s5` = 0100010011

s6` = 1110111101

After crossover:

s1`` = 1110110101

s2`` = 1111010101

s5`` = 0100011101

s6`` = 1110110011

Note: sometimes crossover

results in no changes to the pair!
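The crossover step above can be reproduced with a small Python sketch. The function name is hypothetical; `point` counts how many leading bits each child keeps from its own parent.

```python
def one_point_crossover(parent_a, parent_b, point):
    """Swap the tails of two equal-length bit strings after `point` bits."""
    child_a = parent_a[:point] + parent_b[point:]
    child_b = parent_b[:point] + parent_a[point:]
    return child_a, child_b


first = one_point_crossover("1111010101", "1110110101", 2)
second = one_point_crossover("0100010011", "1110111101", 5)
print(first)   # ('1110110101', '1111010101')
print(second)  # ('0100011101', '1110110011')
```

In a full GA this call would be wrapped in a test against the crossover probability (0.6 in the example), so that some pairs pass through unchanged.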

Example (mutation)

The final step is to apply random mutation: for

each bit in the current gene population we allow

a small probability of mutation (for instance 0.05)

| Before applying mutation | After applying mutation | Fitness |
|---|---|---|
| s1`` = 1110110101 | s1``` = 1110100101 | f(s1```) = 6 |
| s2`` = 1111010101 | s2``` = 1111110100 | f(s2```) = 7 |
| s3`` = 1110111101 | s3``` = 1110101111 | f(s3```) = 8 |
| s4`` = 0111000101 | s4``` = 0111000101 | f(s4```) = 5 |
| s5`` = 0100011101 | s5``` = 0100011101 | f(s5```) = 5 |
| s6`` = 1110110011 | s6``` = 1110110001 | f(s6```) = 6 |

Purpose: mutation adds new information that may be

missing from the current population
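Bit-wise mutation can be sketched in a few lines of Python. The function name and the string representation of a gene are assumptions; the rate of 0.05 is the example value from the slide.

```python
import random


def mutate(gene, rate, rng):
    """Flip each bit independently with probability `rate`."""
    flipped = {"0": "1", "1": "0"}
    return "".join(flipped[b] if rng.random() < rate else b for b in gene)


rng = random.Random(0)
print(mutate("1110110101", 0.05, rng))
```

With a rate of 0.05 and 10 bits per gene, a gene changes by half a bit per generation on average, so many genes pass through unchanged.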

Example: Results

• In one generation, the total population

fitness changed from 34 to 37, thus

improved by ~9%

• At this point, we go through the same

process all over again (repetition), until a

stopping criterion is met
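Putting the three operators together gives the following minimal sketch of the whole cycle for MAXONE. The stopping criterion (an all-ones string or a generation limit), the neighbour pairing of parents, and the parameter names are assumptions; `n` is assumed to be even.

```python
import random


def fitness(gene):
    """MAXONE fitness: the number of '1' bits."""
    return gene.count("1")


def run_maxone(n=6, length=10, crossover_rate=0.6, mutation_rate=0.05,
               generations=50, seed=0):
    """Simple generational GA for MAXONE; returns the best string seen."""
    rng = random.Random(seed)
    pop = ["".join(rng.choice("01") for _ in range(length)) for _ in range(n)]
    best = max(pop, key=fitness)
    for _ in range(generations):
        if fitness(best) == length:   # stopping criterion: all ones
            break
        # Selection: fitness-proportionate sampling with replacement.
        weights = [fitness(g) + 1e-9 for g in pop]  # avoid an all-zero wheel
        parents = rng.choices(pop, weights=weights, k=n)
        # Crossover: pair up neighbours and swap tails with some probability.
        children = []
        for a, b in zip(parents[0::2], parents[1::2]):
            if rng.random() < crossover_rate:
                point = rng.randint(1, length - 1)
                a, b = a[:point] + b[point:], b[:point] + a[point:]
            children += [a, b]
        # Mutation: flip each bit with a small probability.
        pop = ["".join(("1" if bit == "0" else "0")
                       if rng.random() < mutation_rate else bit
                       for bit in child) for child in children]
        best = max(pop + [best], key=fitness)
    return best


best = run_maxone()
print(best, fitness(best))
```

Keeping track of `best` outside the population is a design choice in this sketch: the simple GA replaces the whole population each generation, so the best string could otherwise be lost.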

Another example – Maximise x²

Simple problem: maximise y = x² over the integer values x ∈ {0, 1, …, 31}

GA approach:

• Representation: binary code, e.g. 01101 ↔ 13 (base 10)

• Population size: 4 genes (parents)

• Random initialisation

• Roulette wheel selection

• 1-point crossover, bit-wise mutation

We will show one generational cycle as an example

x² example: selection

• Make sure you understand this slide! You will implement

something similar during your image restoration coding project!

Prob_i calculation for gene S1:

Prob(169) = 169/1170 = 0.144

Expected count(S1) = Prob_i × n = 0.144 × 4 ≈ 0.58
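The same calculation for a whole population can be sketched as below. Only x = 13 (fitness 169), the best fitness of 576 and the total of 1170 are stated in the text; the remaining two x values are assumptions chosen so that the total matches.

```python
# Assumed population: 13 and 24 follow from the text (169 and 576);
# 8 and 19 are hypothetical values that make the fitness sum equal 1170.
xs = [13, 24, 8, 19]
fitnesses = [x * x for x in xs]
total = sum(fitnesses)
probs = [f / total for f in fitnesses]
expected = [p * len(xs) for p in probs]

for x, f, p, e in zip(xs, fitnesses, probs, expected):
    print(f"x={x:2d} gene={x:05b} fitness={f:3d} prob={p:.3f} expected={e:.2f}")
print("total:", total, "average:", total / len(xs))
```

An expected count below 1 (as for S1) means the gene is more likely than not to be under-represented after selection, while the fittest gene is expected to be copied about twice.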

x² example: crossover

• Each pair of genes may undergo crossover.

• The crossover points are randomly selected.

• Notice that, after crossover, the average population fitness increased from 293 to 439, and the best gene's fitness increased from 576 to 729!

x² example: mutation

• All gene bits may undergo mutation (based on the mutation rate).

• Notice that, after mutation, the average population fitness increased from 439 to 588 (the best gene's fitness did not change though)!

GA Group Projects

• Today we will form teams of several students;

• Each team will implement a GA in Matlab (or C/Java/VB?) to

restore a corrupted image:

• Each team should have one good programmer, and access to a

notebook computer (preferably with Matlab)!

• You will submit a written report and give a short presentation in

week 15

GA Group Project: details

The form of the corruption source is additive noise:

N(row,col) = NoiseAmp × sin([2π × NoiseFreqRow × row] + [2π × NoiseFreqCol × col])

Teams must code a simple GA that optimises the three

unknown constants NoiseAmp, NoiseFreqRow, and

NoiseFreqCol such that the restoration error (the difference

between the original and GA-optimised restored image) is

minimised.

To make things easy, we will measure the average per-pixel

restoration error, thus:

Restoration error = (Ioriginal + NoiseGA) - Icorrupted

where Ioriginal is the original uncorrupted Lena image, Icorrupted is the corrupted image (which I will give you), and NoiseGA is the corruption noise modelled by the GA using the noise equation above.
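A minimal sketch of the noise model and the error measure in plain Python follows. Taking the absolute difference before averaging is an assumption (so that positive and negative differences do not cancel), the function names are hypothetical, and the tiny constant image stands in for the real images.

```python
import math


def noise(row, col, amp, freq_row, freq_col):
    """Sinusoidal noise N(row, col) from the project's noise equation."""
    return amp * math.sin(2 * math.pi * freq_row * row
                          + 2 * math.pi * freq_col * col)


def restoration_error(original, corrupted, amp, freq_row, freq_col):
    """Average per-pixel |(I_original + Noise_GA) - I_corrupted|.

    The absolute value is an assumption made for this sketch.
    """
    rows, cols = len(original), len(original[0])
    total = 0.0
    for r in range(rows):
        for c in range(cols):
            modelled = original[r][c] + noise(r, c, amp, freq_row, freq_col)
            total += abs(modelled - corrupted[r][c])
    return total / (rows * cols)


# Tiny synthetic stand-in for the real images (hypothetical data).
original = [[100.0] * 8 for _ in range(8)]
true_params = (20.0, 0.005, 0.002)
corrupted = [[original[r][c] + noise(r, c, *true_params) for c in range(8)]
             for r in range(8)]
exact = restoration_error(original, corrupted, *true_params)
wrong = restoration_error(original, corrupted, 10.0, 0.005, 0.002)
print(exact, wrong)
```

The error is zero only when the candidate parameters reproduce the true noise, which is what makes it usable as an (inverse) fitness for the GA.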

GA Group Project: details

Each iteration of your GA will, for each gene in the population:

– Generate new values for NoiseAmp, NoiseFreqRow, and

NoiseFreqCol.

– Corrupt the original image using the equation

N(row,col) = NoiseAmp × sin([2π × NoiseFreqRow × row] + [2π × NoiseFreqCol × col])

– Measure the restoration error (subtract the corrupted image you were given from the image corrupted with this gene's noise). The inverse of this error becomes this gene’s fitness

– Make new child genes using selection, crossover, and mutation functions.

The search ranges for the three variables are:

– NoiseAmp: 0 to 30.0

– NoiseFreqRow: 0 to 0.01

– NoiseFreqCol: 0 to 0.01

Each gene encodes all three variables. If you use 1 byte per variable, each gene will be 24 bits long; if you use 2 bytes per variable, 48 bits:

10110111 01010001 11001010 (24-bits per gene)

NoiseAmp NoiseFreqRow NoiseFreqCol

You need to map the (binary) integer values of each gene to floating-point values for the variables. For example, for NoiseAmp, 00000000 = 0.0 and 11111111 = 30.0
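The mapping can be sketched as a linear scaling of the unsigned integer onto the search range. The function names are hypothetical, and the gene is assumed to be stored as a string of bits in the order NoiseAmp, NoiseFreqRow, NoiseFreqCol.

```python
def decode(bits, low, high):
    """Map an unsigned binary string linearly onto [low, high]."""
    max_int = (1 << len(bits)) - 1   # 255 for 8 bits, 65535 for 16 bits
    return low + (high - low) * int(bits, 2) / max_int


def decode_gene(gene, bits_per_var=8):
    """Split a gene into (NoiseAmp, NoiseFreqRow, NoiseFreqCol)."""
    ranges = [(0.0, 30.0), (0.0, 0.01), (0.0, 0.01)]
    parts = [gene[i * bits_per_var:(i + 1) * bits_per_var] for i in range(3)]
    return tuple(decode(p, lo, hi) for p, (lo, hi) in zip(parts, ranges))


print(decode("00000000", 0.0, 30.0))   # 0.0
print(decode("11111111", 0.0, 30.0))   # 30.0
print(decode_gene("101101110101000111001010"))
```

With 1 byte per variable, NoiseAmp can only change in steps of 30/255 (about 0.12); using 2 bytes per variable gives a much finer grid at the cost of a longer gene.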

Next Lecture

We will learn more about Genetic

Algorithms (GAs)

We will discuss the image

restoration project.

Read: Gonzalez and Woods

Access to the course website:

http://www.ritsumei.ac.jp/~gulliver/iaml

Homework: Project

Preparation

Start coding your GA. User inputs are population size

(integer, e.g., 50), crossover rate (%, integer, e.g.

60), mutation rate (%, integer, e.g. 5), and total

iterations (integer, e.g. 100).

Make arrays to hold the gene binary values

Fill the arrays with random binary data

Map the gene’s binary values to the three noise

parameters’ values (floating point)

Using the equation

N(row,col) = NoiseAmp*sin([2π*NoiseFreqRow*row] + [2π*NoiseFreqCol*col])

calculate the corruption noise for each pixel of the

image. Remember, the noise values can be

negative, so use signed data types.