On the frame-error rate of concatenated turbo codes

IEEE TRANSACTIONS ON COMMUNICATIONS, VOL. 49, NO. 4, APRIL 2001 602

Transactions Papers

On the Frame-Error Rate of Concatenated Turbo Codes

Oscar Y. Takeshita, Member, IEEE, Oliver M. Collins, Peter C. Massey, and Daniel J. Costello, Jr., Fellow, IEEE

Abstract—Turbo codes with long frame lengths are usually constructed using a randomly chosen interleaver. Statistically, this guarantees excellent bit-error rate (BER) performance but also generates a certain number of low weight codewords, resulting in the appearance of an error floor in the BER curve. Several methods, including using an outer code, have been proposed to improve the error floor region of the BER curve. In this paper we study the effect of an outer BCH code on the frame-error rate

(FER) of turbo codes. We show that additional coding gain is possible not only in the error floor region but also in the waterfall region. Also, the outer code improves the iterative APP decoder by providing a stopping criterion and alleviating convergence problems. With this method, we obtain codes whose performance is within 0.6 dB of the sphere packing bound at an FER of $10^{-6}$.

Index Terms—BCH codes, convolutional codes, frame-error rate, sphere packing bound, turbo codes.

I. INTRODUCTION

In the pioneering paper by Berrou et al. [1], turbo codes with long frame lengths were shown to achieve bit-error rate (BER) performance as low as $10^{-5}$ with a signal-to-noise ratio (SNR) less than 1 dB from capacity in the so-called waterfall region of the BER curve. However, in the so-called error floor region of the BER curve [2], [3], the slope becomes very shallow, making it difficult to achieve very small BERs without significantly increasing the SNR. The error floor is due to the presence of low weight codewords with small multiplicities, and thus for high SNRs a frame with decoded errors will typically have only a small number of errors, which we call residual errors.

Several techniques have been proposed to lower the error floor [4]–[8]. One of the methods uses a high-rate algebraic outer code to correct the remaining errors after turbo decoding

Paper approved by J. Huber, the Editor for Coding and Coded Modulation of the IEEE Communications Society. Manuscript received January 8, 1999; revised May 29, 2000 and September 18, 2000. This work was supported by the National Science Foundation under Grant NCR95-22939 and Grant NCR96-96065 and by NASA under Grant NAG5-557 and Grant NAG5-8355. This paper was presented in part at the 1998 Allerton Conference on Communication, Control and Computing, Monticello, IL, 1998.

O. Y. Takeshita is with the Department of Electrical Engineering, Ohio State University, Columbus, OH 43210 USA.

O. M. Collins, P. C. Massey, and D. J. Costello, Jr. are with the Department of Electrical Engineering, University of Notre Dame, Notre Dame, IN 46656 USA (e-mail: costello2@nd.edu).

Publisher Item Identifier S 0090-6778(01)03150-6.

[4], [5]. In this paper, we investigate several additional beneficial effects of using such an outer code. We show that it not only lowers the error floor in the BER curve, but the frame-error rate (FER) is also greatly improved in both the error floor and waterfall regions. With this approach, we obtain codes that are closer to the sphere packing bound [9] than any previously reported for an FER as low as $10^{-6}$. We also show that the outer code fixes some deficiencies of the iterative APP decoder related to instability and convergence noted by us and other researchers.

The results presented in this paper emphasize turbo codes with long frame lengths, where the “interleaver gain” effect [10] is fully realized and the transition between the error floor and waterfall regions is very sharp. In the examples presented in this paper, we use three variations of inner turbo codes all with an information blocklength of 16 384, 16-state rate 1/2 convolutional component codes, and an overall rate of 1/3. Two systematic recursive convolutional codes are used:

(Berrou component code [1]) and

(Primitive feedback polynomial component code). The corresponding turbo codes are obtained by the parallel concatenation of two and two convolutional codes, respectively. An asymmetric Primitive-Berrou code [11], which has good performance in both the waterfall and error floor regions, is obtained by concatenating and .

1 Also, since we are looking for practical code designs that are easy to implement, we use an algebraically constructed deterministic interleaver with parameter , shown in [12] to perform at least as well as the average of randomly chosen interleavers.

In Section II, we discuss the residual errors and several alternatives to improve BER and FER performance. We also note some deficiencies of the iterative APP decoder. The general behavior of the iterative APP decoder, which includes stability and convergence problems, is explained in Section III. In Section IV we present several positive aspects of using an algebraic outer code with a turbo code, which include improving the minimum distance of the overall concatenated code and stabilizing the iterative decoding process. Finally, in Section V we present some concluding remarks.

1

Usually only one of the component codes is terminated. We terminate

G (D) in the asymmetric code.

0090–6778/01$10.00 © 2001 IEEE

TAKESHITA et al.: ON THE FER OF CONCATENATED TURBO CODES 603

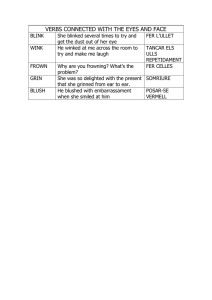

Fig. 1. A frame with an oscillating number of errors ($E_b/N_0 = 0.4$ dB).

II. RESIDUAL ERRORS AND SOME DEFICIENCIES OF THE APP DECODER

In this section, we discuss the two major points that are addressed in this paper: the residual errors that cause the error floor and some deficiencies of the iterative APP decoder.

A. The Residual Errors

In a turbo code that uses either a randomly chosen interleaver or a deterministic interleaver with random-like properties, it is known that low weight codewords are often caused by weight-2 input sequences whose ones are separated by small multiples of the cycle length of the feedback polynomial. Several methods to lower the error floor, by about a factor of 10 in BER performance have been identified by noting the negative consequences of a few codewords of low weight [4]–[8].

Since in the error floor region of the BER curve, the number of bit errors in an incorrect frame is relatively small, one of the first proposed methods to lower the error floor was concatenation with a high-rate algebraic outer code [4], [5]. It was also observed that the locations in the information block that were affected by an incorrectly decoded frame in the error floor region were not random, but comprised a small subset of the possible error positions [6], [7]. This suggested a few additional ways to lower the error floor, such as modifying the interleaver [6], expurgating the weak positions [7] in the information sequence, or using an outer code only to protect the weak positions [8]. However, for those schemes that try to deal with only the weak positions, all possible weak combinations of information positions must be examined, which can be a tedious process, especially if weak positions due to information weights greater than 2 must also be examined. Furthermore, each specific interleaver results in different weak positions, meaning that for each interleaver we must examine its own weak positions (these also depend on the component code used) for these selective methods to be successful. Thus, we prefer methods that fix the error floor without the need to examine detailed properties of the interleaver and component code.

Summarizing, lowering the error floor by about a factor of 10 was a matter of dealing with a (relatively) small number of codewords with small weight. We will refer to errors caused by small weight codewords as residual errors. Thus we say that the error floor in the BER curve is caused by residual errors.

We have further observed that for some turbo codes residual errors are also present in large proportion in the waterfall region of the BER curve. Although the contribution of the residual errors is not significant for the BER curve in the waterfall region, it can significantly affect the FER curve. Therefore, a scheme that removes the residual errors can greatly improve the FER performance of turbo codes operating at low to moderate SNRs, i.e., in the waterfall region.
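As a rough illustration of why a few residual errors matter far more for the FER than for the BER, the following sketch compares the two contributions. The frame fraction and the error count are hypothetical values chosen for illustration, not results from the simulations reported here; only the information block length of 16 384 comes from the text.

```python
def residual_error_contribution(frame_fraction, errors_per_frame, block_length):
    """Return (BER contribution, FER contribution) of frames with residual errors.

    frame_fraction   -- fraction of decoded frames left with residual errors
    errors_per_frame -- number of wrong information bits in each such frame
    block_length     -- number of information bits per frame
    """
    ber = frame_fraction * errors_per_frame / block_length
    fer = frame_fraction  # every such frame counts as one full frame error
    return ber, fer


# Hypothetical numbers: 1 frame in 1000 keeps 4 wrong bits out of 16 384.
ber, fer = residual_error_contribution(1e-3, 4, 16384)
print(f"BER contribution: {ber:.2e}, FER contribution: {fer:.2e}")
```

With these assumed numbers the BER contribution is several thousand times smaller than the FER contribution, which is the asymmetry the paragraph above describes.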

In this paper we investigate the effect of removing the residual errors in a turbo code that uses a randomly chosen or random-like interleaver by using a binary BCH outer code over an entire frame of information bits.

B. Deficiencies of the APP Decoder

In addition to improving the BER and FER performance of turbo codes, there are several other advantages of using a BCH code over an entire frame. One is that it provides a reliable stopping criterion for the iterative APP decoder. Indeed, simulation results indicate that some turbo codes must operate with a reliable stopping criterion for the iterations in order to achieve good BER performance due to instability in the convergence process. (Some theoretical work that helps to explain the instability of the iterative APP decoder has recently been published [13], [14]. However, it appears that little is known about specific methods of combating the instability.)

After extensive simulations of the primitive code, we have noted the presence of some frames that, after converging to a small number of errors, begin to oscillate with large deviations in the number of errors. In Fig. 1, we show an example of an oscillating pattern for the primitive code. If a fixed number of iterations is used, then stopping near the maximum of an oscillating pattern will significantly affect the BER, especially in the error floor region, where a frame in error typically has only a small number of bit errors. An important point to be noted, however, is that the minimum of an oscillating pattern always has only a small number of bit errors.

Fig. 2. FER as a function of the maximum number of iterations.

Finally, although previously proposed stopping criteria [6], [15], [16] are sufficient to provide good BER performance for turbo codes, they are not necessarily well suited for FER performance, which is the primary focus of this paper. The reason is that these stopping criteria can be satisfied when the number of bit errors in a frame is small (which ensures a low BER) but not necessarily zero, thus possibly causing a frame error.

B. Convergence Types

With respect to the number of bit errors in an information block, we have noted the following convergence patterns.

1) A frame converges either to zero errors or to a small number of errors (residual errors), possibly exhibiting a small jitter in the number of errors, after a moderate number of iterations. (This occurs in the large majority of cases.)

2) A frame maintains a large number of errors for many iterations (possibly many times larger than the average) before converging to zero errors.

3) A frame has an oscillating number of errors, but with a minimum number of errors that is very small (on the order of the number of residual errors). The oscillations may stop after a while or continue indefinitely.

2

4) A frame converges to a large number of errors (about 10% of the information bits).

5) A frame maintains a large number of errors (about 10% of the information bits) with some jitter indefinitely without converging.

An interesting fact that was observed is that no frames converged to a moderate number of errors. In other words, one of the following three possibilities always occurred: 1) a small number of errors; 2) an oscillating number of errors with a small minimum; or 3) a large number of errors. This fact can be used to select an effective concatenation scheme. In a certain sense, the iterative APP decoder exhibits binary behavior, resulting either in a large number of errors or (mostly) a small number of errors (including zero errors). This is similar to decoding of long convolutional codes with sequential decoding, where either a frame is decoded with very high confidence or an erasure is declared.
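The three observed outcomes can be expressed as a simple classifier over the per-iteration bit-error counts of one simulated frame. This is only a sketch: the function name and both thresholds (`small` and `large_fraction`) are hypothetical choices made for illustration, and the error counts are assumed to be known, as they are in a simulation.

```python
def classify_convergence(error_counts, small=20, large_fraction=0.05,
                         block_length=16384):
    """Classify a per-iteration bit-error trajectory into a coarse outcome.

    error_counts   -- bit errors in the information block after each iteration
    small          -- hypothetical threshold for "a small number of errors"
    large_fraction -- hypothetical fraction of the block treated as "large"
    """
    final = error_counts[-1]
    minimum = min(error_counts)
    large = large_fraction * block_length
    if final <= small:
        return "small"        # zero errors or residual errors at the end
    if minimum <= small:
        return "oscillating"  # dipped to a small count but did not stay there
    if final >= large:
        return "large"
    return "moderate"         # the case the text reports was never observed


print(classify_convergence([3000, 900, 40, 6, 6]))
print(classify_convergence([3000, 5, 400, 4, 700]))
print(classify_convergence([3000, 2500, 1800, 1700, 1650]))
```

The "oscillating" case is the one an outer code can rescue, since its minimum falls within a small decoding radius.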

III. THE BEHAVIOR OF ITERATIVE APP DECODING

In this section, we describe the general behavior of iterative APP decoding for parallel concatenated turbo codes with large frame lengths, based on conclusions drawn from extensive simulations.

C. Convergence Decisions

Although iterative APP decoding is not maximum-likelihood (ML) for the overall turbo code [13], [17], most of the frames that converge to a small number of errors result in the ML solution. It has been reported in [4], however, that in some cases, although the number of errors is small, the decision made by the iterative APP decoder is not the ML solution. We have further observed that each of the two APP decoders, based on their respective component codes, may converge to a different solution, and that sometimes these different solutions are exchanged between the outputs of each of the APP decoders with alternating iterations.

A. Required Time for Convergence

The FER as a function of the maximum number of allowed iterations is shown in Fig. 2 for the Berrou code and for the primitive code at several different SNRs. Each curve can be modeled by three straight asymptotes: one which shows no improvement in FER for the first few iterations, one that shows a linear improvement on a log-log scale, and finally a floor, which essentially shows a saturation in the improvement of the FER with an increasing number of iterations. The floor is mostly caused by residual errors. We will see that an outer code lowers the floor, making the descent part of the curve longer. Because of the shape of these curves, if we were able to stop the iterations as soon as a frame is correctly decoded, then a rule of thumb for the average number of iterations is roughly the point in the curve where 50% of the frames have been correctly decoded, which is about where the linear descent portion of the curve begins.
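The three-asymptote description can be sketched as a piecewise function. This is a minimal illustration, not a fit to Fig. 2: the function name and the parameters `knee`, `slope`, and `floor` are hypothetical, and the example values are invented.

```python
def fer_model(max_iterations, knee, slope, floor):
    """Hypothetical three-asymptote model of FER versus the iteration limit.

    knee  -- iteration count up to which the FER shows no improvement
    slope -- steepness of the straight-line descent on a log-log scale
    floor -- saturation level, attributed in the text to residual errors
    """
    if max_iterations <= knee:
        return 1.0                                # flat initial segment
    descent = (max_iterations / knee) ** (-slope)  # linear on log-log axes
    return max(descent, floor)                     # saturates at the floor


for limit in (5, 16, 1000):
    print(limit, fer_model(limit, knee=8, slope=3.0, floor=1e-4))
```

In this picture, an outer code that removes residual errors corresponds to a smaller `floor`, so the descent segment extends over more iterations before saturating.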

IV. CONCATENATION OF A TURBO-CODE WITH A BCH OUTER CODE

The concatenation of an entire turbo code frame with an outer algebraic code has been previously proposed in [4], [5], and in [8] the outer code was applied only to the weak bits in the information sequence. These schemes were intended to improve the BER performance in the error floor region. In this paper we emphasize the effectiveness of an outer code for improving the FER performance, both in the error floor and waterfall regions.

2 For some frames we observed oscillations in a periodic pattern for as long as 500 iterations.

frames with an oscillating number of errors also improves the BER performance.)

Fig. 3. Pareto-like distribution of FER as a function of the maximum number of iterations for the concatenated asymmetric Primitive-Berrou code.

For a BCH code with a design error correction capability of $t$, an algebraic decoder based on the Berlekamp–Massey algorithm declares a decoding failure if the vector to be decoded falls outside the spheres of radius $t$ centered on codewords. Let us define a BCH code with parameters $(n, k, t, t')$, where $n$ is the block length, $k$ is the information length, and $t'$ is the number of errors to be corrected by the algebraic decoder. In particular, when $t' < t$, we decrease the error correction capability of the BCH code but also decrease the probability of undetected error.

A. Required Time for Convergence of the Concatenated Scheme

We define a successful decoding as the event when the outer BCH code corrects a number of bit errors equal to or smaller than the number of errors the algebraic decoder is set to correct. Extensive simulations suggest that the probability that a frame is not successfully decoded by the iterative APP decoder concatenated with an outer BCH code, as a function of the maximum number of iterations, follows a Pareto distribution [18, p. 366], thus exhibiting a similarity to sequential decoding of long convolutional codes. This is illustrated in Fig. 3, where a Pareto distribution can be clearly identified for the asymmetric Primitive-Berrou code concatenated with a (16 380, 16 240, 10, 8) BCH outer code. (Fig. 3 shows the FER, which, as discussed in more detail below, is approximately equal to the probability of unsuccessful decoding.)
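A Pareto-like tail appears as a straight line on log-log axes, so its exponent can be estimated by a least-squares line through the logarithms of the measured points. The sketch below assumes a tail of the form FER ≈ A · N^(−ρ), where N is the iteration limit; the function name and the synthetic data are hypothetical and are not taken from Fig. 3.

```python
import math


def fit_pareto_exponent(iterations, fer):
    """Estimate rho in FER ~ A * N**(-rho) by least squares in log-log space."""
    xs = [math.log(n) for n in iterations]
    ys = [math.log(p) for p in fer]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return -sxy / sxx  # slope is negative for a decaying tail


# Synthetic check: points generated from an exact power law with rho = 3.
limits = [10, 20, 40, 80]
synthetic_fer = [2.0 * n ** -3.0 for n in limits]
print(fit_pareto_exponent(limits, synthetic_fer))
```

Only points on the descent part of the curve should be passed in; including the flat initial segment or the floor would bias the estimated exponent.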

C. Undetected Error Probability Analysis

The outer BCH code checks the hard-decision output of the inner decoder after each iteration and stops decoding whenever it detects an error pattern within its error correcting radius. Thus, after each iteration of the inner decoder, one of three events can occur: 1) the output of the inner decoder lies within the error correcting radius of the correct codeword, and thus the correct codeword is decoded with no errors; 2) the output of the inner decoder lies within the error correcting radius of a valid but incorrect codeword, resulting in an undetected error; or 3) the output of the inner decoder is not within the error correcting radius of any valid codeword, in which case a decoding failure is declared and decoding continues with another iteration of the inner decoder.
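The per-iteration check described above can be sketched as a loop. This is an illustration under stated assumptions, not the authors' implementation: `inner_iteration` and `outer_decode` are hypothetical callables standing in for one turbo iteration and the algebraic BCH decoder, and the default limit of 50 iterations is the one quoted in the simulation results.

```python
def decode_with_outer_stop(inner_iteration, outer_decode, max_iterations=50):
    """Iterate the inner decoder until the outer decoder succeeds.

    inner_iteration -- hypothetical callable: runs one turbo iteration and
                       returns the current hard-decision output
    outer_decode    -- hypothetical callable: returns the corrected word, or
                       None when the outer decoder declares a decoding failure
    """
    for iteration in range(1, max_iterations + 1):
        hard_decision = inner_iteration()
        corrected = outer_decode(hard_decision)
        if corrected is not None:
            # Correct decoding or an undetected error: the decoder cannot
            # tell these apart, so it stops in both cases.
            return corrected, iteration
    return None, max_iterations  # iteration budget spent without success


# Toy stand-ins: the "hard decision" is just an error count, and the outer
# decoder succeeds once that count is within a radius of 8.
trajectory = iter([900, 120, 30, 6, 400])
result, used = decode_with_outer_stop(
    lambda: next(trajectory),
    lambda errors: 0 if errors <= 8 else None,
)
print(result, used)
```

In the toy run the loop stops at the fourth iteration, before the error count rises again, which is how the stopping rule handles frames with an oscillating number of errors.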

The probability of undetected error after a given iteration can be expressed as since and . (Note that will be close to 1 with high probability in the early iterations of decoding.) We now develop an approximate expression for . First consider the complete space of all binary

-tuples. For a given correct codeword , the probability that a randomly chosen -tuple (error pattern) with more than errors lies within the error correcting radius of a valid but incorrect codeword [i.e., ] is the ratio of the number of -tuples within all the incorrect spheres of radius to the total number of -tuples outside the correct sphere of radius

, i.e.,

(1)

B. The Importance of a Stopping Criterion

From the previous section, it is clear that to achieve a very small FER, we need to allow at least the number of iterations inferred from the Pareto distribution. The BCH code is used to effectively stop the iterative inner decoder by checking after each iteration whether outer decoding is successful or a decoding failure is declared. When outer decoding is successful, the iterative inner decoder is stopped. This enables the decoder to stop decoding those frames with an oscillating number of errors, as long as the minimum number of errors falls within the decoding radius of the outer BCH code. (Note that increasing the maximum allowable number of iterations and correctly processing

An error pattern that results from the hard-decision output of the inner decoder will typically have a smaller number of errors than a randomly chosen error pattern. Thus, the hard-decision output will typically lie in a local subspace of the complete -tuple space centered at the correct codeword. It is reasonable to assume, however, that the density of BCH codewords within the local subspace is approximately the same as the density of codewords in the complete -tuple space. Hence, within the local subspace, the probability that a randomly chosen error pattern with more than errors lies within a radius of an incorrect codeword is approximated by (1).
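The ratio described in words in this section can be written out as follows. This is a reconstruction from the verbal description, not a copy of the typeset equation: the symbols $n$ (block length), $k$ (information length), $t'$ (radius actually used by the algebraic decoder), and $V(n, t')$ (number of $n$-tuples in a sphere of radius $t'$) are notation assumed here, and the spheres are taken to be disjoint because $t'$ does not exceed the design error correction capability.

```latex
V(n, t') = \sum_{i=0}^{t'} \binom{n}{i},
\qquad
\frac{(2^{k} - 1)\, V(n, t')}{2^{n} - V(n, t')}
\;\approx\; 2^{-(n-k)} \sum_{i=0}^{t'} \binom{n}{i}
\quad \text{when } V(n, t') \ll 2^{n}.
```

The numerator counts the $n$-tuples inside the $2^{k} - 1$ incorrect spheres, and the denominator counts the $n$-tuples outside the correct sphere. The right-hand form makes the trade-off explicit: each additional parity bit halves the ratio, while each increase in $t'$ enlarges the sphere volume.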

Finally, we note that the hard-decision output error pattern of the inner decoder is not completely random. However,


TABLE I
UNDETECTED ERROR PROBABILITY OF CONCATENATED CODES (INFORMATION BLOCK LENGTH = 1024)

is averaged over all possible equally likely outer BCH codewords, i.e.,

The distribution of the outer BCH codewords is independent of the distribution of inner turbo codewords. Therefore, we can appproximate by , where the error pattern is completely random. Thus (1) serves as a good approximation for and the undetected error probability, i.e., the probability that an BCH code falsely stops after a given iteration of the iterative APP decoder is approximated by

(2)

Fig. 4. FER of concatenated and unconcatenated codes along with the sphere packing bound.

needed to achieve lower FERs. We now present simulations demonstrating the effectiveness of concatenating a parallel concatenated turbo code with an outer BCH code, itself a form of serial concatenation. In particular, at an FER of $10^{-6}$, we obtain new results that are within 0.6 dB of the sphere packing bound.

The expression (2) multiplied by the number of iterations represents an estimate of the undetected error probability of the complete concatenated system.

3 We have verified by simulation of a complete system that the actual undetected error probability is very close to that predicted by (2) for the case of a rate 1/3, information block length 1024 (including four tail bits), primitive inner turbo code concatenated with a (1020, 950, or 7) BCH outer code (see Table I).


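Since an undetected error causes a frame error, the undetected error probability must be made sufficiently small so that it does not affect the FER. By making the number of errors corrected by the algebraic decoder smaller than the design error correction capability, it is easy to obtain a negligible undetected error probability for the large code lengths simulated in Section IV-E.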

D. Sphere Packing Bound

Turbo codes were first introduced in [1] as codes that achieve BERs on the order of $10^{-5}$ at SNRs within 1 dB of the Shannon capacity. However, the Shannon capacity assumes error-free transmission and does not impose a constraint on the length of the code, and thus it is not an accurate measure of the theoretically best achievable performance for a specific code length and required FER. The sphere packing bound developed by Shannon [9] is a better performance measure because, for a given rate, the minimum required SNR is a function of the blocklength and the FER. It was shown in [19] that turbo codes are within 0.7 dB of the sphere packing bound for several frame lengths and code rates at an FER of 10 . It is also mentioned in [19] that due to the error floor of parallel concatenated turbo codes, serially concatenated turbo codes [20] might be

3 Since we do not expect a small number of bit errors after inner decoding until the descent part of the Pareto curve begins, the probability of undetected error can be reduced by starting BCH outer decoding only after a given number of iterations.

E. Simulation Results

It has been shown in [11] that asymmetric turbo codes have certain advantages such as good BER and FER performance in both the error floor and waterfall regions. We have since observed that this result extends also to the concatenation scheme considered in this paper. The example we present is a (49 152, 16 240) code consisting of a Primitive-Berrou asymmetric turbo inner code concatenated with a (16 380, 16 240, 10, 8) shortened primitive narrow-sense binary BCH outer code. Since we use a 16-state component code, four tail bits are added to the BCH codeword prior to turbo inner encoding. A maximum of 50 iterations is allowed per frame but the average number of iterations at the SNRs of interest is on the order of 10.
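The block lengths quoted above fit together as the following arithmetic shows. The variable names are illustrative; the numbers are the ones given in the text. The closing comment about the parity bits relies on the standard bound of at most m·t parity bits for a narrow-sense primitive binary BCH code of length 2^m − 1, which is an observation added here rather than a statement from the paper.

```python
bch_n, bch_k = 16380, 16240   # outer BCH block and information lengths
tail_bits = 4                 # tail bits for the 16-state component code
inner_rate_inverse = 3        # inner turbo code has overall rate 1/3

turbo_input = bch_n + tail_bits                  # bits entering the turbo encoder
coded_length = inner_rate_inverse * turbo_input  # transmitted bits per frame
overall_rate = bch_k / coded_length              # rate of the concatenated code
parity_bits = bch_n - bch_k                      # outer-code redundancy

print(turbo_input, coded_length, f"{overall_rate:.4f}", parity_bits)
# parity_bits equals 14 * 10, consistent with a design capability of 10 for a
# code shortened from length 2**14 - 1 = 16383.
```

The outer code therefore lowers the rate only from 1/3 to about 0.330, which is the rate used for the sphere packing bound comparison.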

The undetected error probability for each turbo-iteration is estimated from (2) as . Thus, even allowing a fixed number of 100 iterations per frame gives an undetected frame-error probability of about 10 , and we expect an order of magnitude less for an average of 10 iterations per frame, which is typical for the SNRs of interest. This undetected error probability is much smaller than our target FER of 10 . Out of approximately 10 simulated frames, no frame with undetected errors was observed.
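The order of magnitude of this estimate can be checked numerically. The sketch below evaluates the sphere-counting ratio described in Section IV-C for the (16 380, 16 240, 10, 8) outer code. It assumes that this ratio is the quantity approximated by (2); the function names are hypothetical, and the result is an independent calculation, not a figure quoted from the paper.

```python
from fractions import Fraction
from math import comb, log10


def sphere_volume(n, radius):
    """Number of binary n-tuples within Hamming distance `radius` of a point."""
    return sum(comb(n, i) for i in range(radius + 1))


def false_stop_probability(n, k, radius):
    """Fraction of n-tuples outside the correct sphere that fall inside an
    incorrect sphere, assuming disjoint spheres of the given radius."""
    volume = sphere_volume(n, radius)
    return Fraction((2**k - 1) * volume, 2**n - volume)


per_iteration = float(false_stop_probability(16380, 16240, 8))
print(f"per iteration:  10^{log10(per_iteration):.1f}")
print(f"100 iterations: 10^{log10(100 * per_iteration):.1f}")
```

Under these assumptions the per-iteration value comes out on the order of $10^{-13}$, so multiplying by a fixed budget of 100 iterations still leaves the estimate many orders of magnitude below the FERs of interest.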

In Fig. 4, we show the FER performance of the concatenated Berrou code and the concatenated Primitive-Berrou code, both with rate 0.330, along with the FER performance of the corresponding inner turbo codes alone (these were simulated for a fixed number of 18 iterations). The improvement in performance is dramatic both in the waterfall and error floor regions.

The sphere packing bound is also plotted for a rate 0.330 code with information blocklength 16 240. The concatenated Berrou code is the best choice if we are satisfied with an FER of 10 or higher. However, for uniformly good performance as low as an FER of 10 , the concatenated asymmetric Primitive-Berrou code is the best. It is mentioned in [19] that although turbo codes are within 0.7 dB of the sphere packing bound for several code rates and information blocklengths at an FER of 10 , lower FERs are more difficult to obtain because of the error floor. The concatenation of a parallel concatenated turbo code with an outer BCH code thus seems to be a viable approach for achieving lower FERs with only negligible penalties in rate and complexity. In particular, the asymmetric Primitive-Berrou code is within 0.6 dB of the sphere packing bound at an FER of $10^{-6}$. Finally, we note that the computational complexity of an algebraic BCH decoder is small compared to an iterative APP decoder, and thus the outer decoder does not significantly increase the overall decoding complexity.

V. CONCLUSIONS

Randomly chosen interleavers or deterministic random-like interleavers are the primary reason for the excellent BER performance of turbo codes, especially with long frame lengths, but they introduce certain deficiencies into the code, which manifest themselves as an error floor or residual errors. For turbo codes with large frame lengths, an algebraic high-rate outer code is not only extremely effective in lowering the error floor by correcting the residual errors, but it can also be used to alleviate other undesired effects of the iterative APP decoder, such as frames with an oscillating number of bit errors or frames that take a long time to converge. Another benefit of an outer code is that it can be used as a stopping criterion, resulting in an overall reduction in decoding complexity.

The effectiveness of an outer BCH code in improving the performance of a turbo code was verified by simulation for both the error floor and waterfall regions of the FER curve. We have obtained one code whose performance is within 0.6 dB of the sphere packing bound at an FER of $10^{-6}$. This scheme has advantages for practical system implementation, because with a simple addition to standard turbo coding, the overall system gives significant improvement in FER performance with only a small increase in decoding complexity.

4

A more complex extension of the above scheme would be to consider a soft-in, soft-out outer decoder, which would feed soft values back to the inner decoder after each iteration. Such a hybrid serial/parallel concatenation scheme would lose the advantage of the simple stopping rule provided by the algebraic BCH decoder, though. In addition, the very high rate of the outer code implies that the extrinsic probabilities passed back to the inner decoder would contain essentially no new information, thus offering little potential for improved performance.

REFERENCES

[1] C. Berrou, A. Glavieux, and P. Thitimajshima, “Near Shannon limit error-correcting coding and decoding: turbo codes,” in Proc. IEEE Int. Conf. Communications, May 1993, pp. 1064–1070.

[2] J. D. Andersen, “The TURBO coding scheme,” Tech. Univ. of Denmark, Lyngby, Rep. IT-146, June 1994.

[3] J. Hagenauer and L. Papke, “Decoding ‘Turbo’-codes with the soft output Viterbi algorithm,” in Proc. 1994 IEEE Int. Symp. Information Theory, June 1994, p. 164.

[4] J. D. Andersen, “Turbo codes extended with outer BCH code,” Electron. Lett., vol. 32, no. 22, pp. 2059–2060, Oct. 1996.

[5] D. J. Costello Jr. and G. Meyerhans, “Concatenated turbo codes,” in Proc. 1996 IEEE Int. Symp. Information Theory and Its Applications (ISITA ’96), Victoria, BC, Canada, Sept. 1996, pp. 571–574.

[6] P. Robertson, “Illuminating the structure of code and decoder of parallel concatenated recursive systematic (turbo) codes,” in Proc. IEEE GLOBECOM Conf., Dec. 1994, pp. 1298–1303.

[7] M. Öberg and P. H. Siegel, “Application of distance spectrum analysis to turbo code performance improvement,” in Proc. 35th Annu. Allerton Conf. Communication, Control, and Computing, Sept. 1997, pp. 701–710.

[8] K. R. Narayanan and G. L. Stüber, “Selective serial concatenation of turbo codes,” IEEE Commun. Lett., vol. 1, pp. 136–138, Sept. 1997.

[9] C. E. Shannon, “Probability of error for optimal codes in a Gaussian channel,” Bell Syst. Tech. J., vol. 38, pp. 611–656, 1959.

[10] S. Benedetto and G. Montorsi, “Unveiling turbo codes: some results on parallel concatenated coding schemes,” IEEE Trans. Inform. Theory, vol. 42, pp. 409–428, Mar. 1996.

[11] O. Y. Takeshita, O. M. Collins, P. C. Massey, and D. J. Costello Jr., “A note on asymmetric turbo codes,” IEEE Commun. Lett., vol. 3, pp. 69–71, Mar. 1999.

[12] O. Y. Takeshita and D. J. Costello Jr., “New deterministic interleaver designs for turbo codes,” IEEE Trans. Inform. Theory, vol. 46, pp. 1988–2006, Sept. 2000.

[13] L. Duan and B. Rimoldi, “The turbo decoding algorithm has fixed points,” in Proc. 1998 IEEE Int. Symp. Information Theory, Cambridge, MA, Aug. 1998, p. 277.

[14] T. Richardson, “The geometry of turbo-decoding dynamics,” IEEE Trans. Inform. Theory, vol. 46, pp. 9–23, Jan. 2000.

[15] J. Hagenauer, E. Offer, and L. Papke, “Iterative decoding of binary block and convolutional codes,” IEEE Trans. Inform. Theory, vol. 42, pp. 429–445, Mar. 1996.

[16] R. Y. Shao, S. Lin, and M. P. C. Fossorier, “Two simple stopping criteria for turbo decoding,” IEEE Trans. Commun., vol. 47, pp. 1117–1120, Aug. 1999.

[17] R. J. McEliece, D. J. C. MacKay, and J.-F. Cheng, “Turbo decoding as an instance of Pearl’s ‘belief propagation’ algorithm,” IEEE J. Select. Areas Commun., vol. 16, pp. 140–152, Feb. 1998.

[18] S. Lin and D. J. Costello Jr., Error Control Coding: Fundamentals and Applications. Englewood Cliffs, NJ: Prentice-Hall, 1983.

[19] S. Dolinar, D. Divsalar, and F. Pollara, “Code performance as a function of block size,” JPL, TMO Prog. Rep. 42-133, May 1998.

[20] S. Benedetto, D. Divsalar, G. Montorsi, and F. Pollara, “Serial concatenation of interleaved codes: Performance analysis, design, and iterative decoding,” IEEE Trans. Inform. Theory, vol. 44, pp. 909–926, May 1998.

4 In fact, the overall number of computations is reduced due to the stopping rule function of the BCH decoder.

Oscar Y. Takeshita (M’92) was born in Sao Paulo, SP, Brazil, on October 28, 1966. He received the Diploma in electrical engineering with emphasis in telecommunications from Escola Politecnica da Universidade de Sao Paulo, Brazil, in 1991, the M.E. degree in electrical engineering from the Yokohama National University, Japan, in 1994, and the Ph.D. degree in electrical engineering from the University of Tokyo, Tokyo, Japan, in 1997.

In 1997, he became a Postdoctoral Research Associate at the University of Notre Dame, Notre Dame, IN. In 1999, he joined the faculty of the Ohio State University, Columbus, as an Assistant Professor of Electrical Engineering. His research interests include the area of communications, particularly error control coding.


Oliver M. Collins was born in Washington, DC. He received the B.S. degree in engineering and applied science, and the M.S. and Ph.D. degrees in electrical engineering, all from the California Institute of Technology, Pasadena, in 1986, 1987, and 1989, respectively.

From 1989 to 1995, he was an Assistant Professor, and later an Associate Professor, in the Department of Electrical and Computer Engineering at the Johns Hopkins University, Baltimore, MD. In September 1995, he accepted appointment as Associate Professor in the Department of Electrical Engineering of the University of Notre Dame, Notre Dame, IN, where he teaches courses in communications, information theory, coding, and complexity theory.

Dr. Collins received the 1994 Thompson Prize Paper Award from the IEEE, the 1994 Marconi Young Scientist Award from the Marconi Foundation, and the 1998 Judith Resnik Award from the IEEE.

Peter C. Massey received the B.S.E.E. degree from Purdue University, West Lafayette, IN, in 1985, the M.S.E.E. degree from the University of Michigan in 1989, and the Ph.D. degree from the University of Colorado in 1996.

He has previously worked at Delco Electronics, Motorola Government Electronics Group, and Unisys. He was a Visiting Assistant Professor at the University of Notre Dame, Notre Dame, IN, for two years. Currently, he is a Research Assistant Professor at the University of Notre Dame.

Daniel J. Costello, Jr. (S’62–M’69–SM’78–F’85) was born in Seattle, WA, on August 9, 1942. He received the B.S.E.E. degree from Seattle University, Seattle, WA, in 1964, and the M.S. and Ph.D. degrees in electrical engineering from the University of Notre Dame, Notre Dame, IN, in 1966 and 1969, respectively.

In 1969, he joined the faculty of the Illinois Institute of Technology, Chicago, as an Assistant Professor of Electrical Engineering. He was promoted to Associate Professor in 1973, and to Full Professor in 1980. In 1985 he became Professor of Electrical Engineering at the University of Notre Dame, Notre Dame, IN, and from 1989 to 1998 served as Chairman of the Department of Electrical Engineering.

Dr. Costello is a member of the Information Theory Society Board of Governors (BOG), and in 1986 served as President of the BOG. He has also served as Associate Editor for Communication Theory for the IEEE TRANSACTIONS ON COMMUNICATIONS, as Associate Editor for Coding Techniques for the IEEE TRANSACTIONS ON INFORMATION THEORY, and as Co-Chair of the 1988 IEEE International Symposium on Information Theory in Kobe, Japan, and the 1997 IEEE International Symposium on Information Theory in Ulm, Germany. In 1991, he was selected as one of 100 Seattle University Alumni to receive the Centennial Alumni Award in recognition of alumni who have displayed outstanding service to others, exceptional leadership, or uncommon achievement. In 1999, he received a Humboldt Research Prize from the Alexander von Humboldt Foundation in Germany. In 2000, he was named the Leonard Bettex Professor of Electrical Engineering at Notre Dame. He is also the recipient of an IEEE Third Millennium Medal (2000).