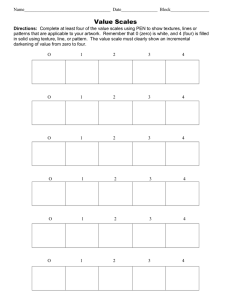

Concepts of Scale
