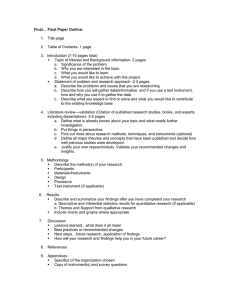

Measuring Students' Content Knowledge, Reasoning Skills, and
